Artificial Intelligence Attacks You

At the end of last year, “artificial intelligence” came up again and again in the IT industry's year-end reviews and forecasts. Our company, which works in information security, has also been getting more and more questions about the prospects of AI from various publications. But security experts are reluctant to comment on this topic; perhaps they are put off by the “yellow press” effect. It is easy to see how such questions arise: after yet another headline like “Artificial intelligence learned to paint like Van Gogh”, journalists seize on the hot technology and go around asking everyone in turn: and what can AI do in animal husbandry? And in education? Somewhere on this list security automatically turns up, without much understanding of its specifics.

In addition, journalism, generously fed by the IT industry, loves to describe the industry's achievements with admiration and in an advertising tone. That is why the media talked our ears off about the victory of machine intelligence in the game of Go (although it has no practical benefit in real life), but paid little attention to the fact that last year at least two people who had entrusted their lives to the autopilot of a Tesla car died.

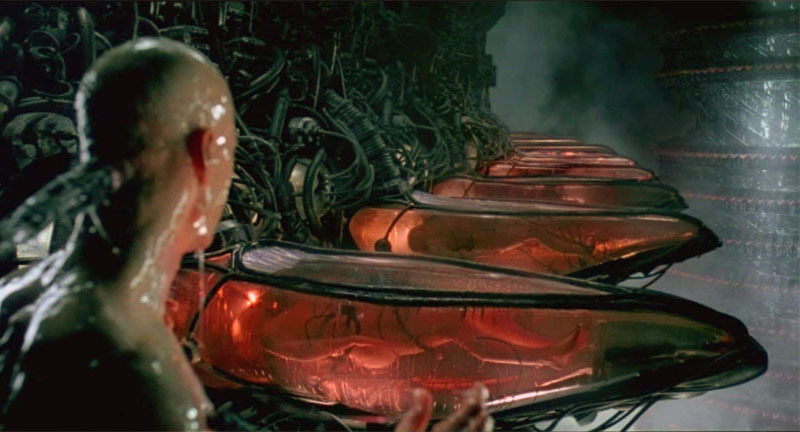


In this article, I have collected some observations about artificial intelligence from an evolutionary point of view. It is an unusual approach, but it seems to me the best way to evaluate both the role of AI agents in security and the security of AI in other areas.

Environment and adaptation

Virtually every news story about artificial intelligence reports growing machine autonomy: “a computer beat...”, “a neural network learned...”. This is reminiscent of descriptions of laboratory experiments with animals. However, scientists who study animal behavior long ago recognized the need for research in the natural environment, since it is the environment and adaptation to it that shape many properties of organisms that are not obvious, and not needed, in the laboratory.

In the same way, the prospects of AI become clearer if, instead of the idealized “spherical horse in a vacuum”, you look more closely at the environments where AI lives, and notice how much its success varies from one environment to another. Attempts to build machine translators have gone on for more than half a century and billions of dollars have been spent on them, yet you would still rather hire a human translator for Chinese than trust a program. On the other hand, high-frequency trading bots, which appeared around 2006, began playing a major role in economic crises within just a couple of years. These robots simply occupied a digital environment in which they feel like fish in water, that is, much better than humans.

An equally convenient digital environment arose for automating hacker attacks: thanks to the Internet, a multitude of devices with a variety of standard vulnerabilities suddenly merged into one shared ocean. As last year showed, a program needs only minimal intelligence to capture a million security cameras and build a botnet out of them. But only... as long as it is alone. The next version of the Mirai botnet, which seized the resources of the previous one, turned out to be more complex. So another engine of evolution switches on: competition.

Arms race and “mixed technique”

Surprisingly, most academic AI research has ignored the fact that intelligence in general is the product of a fierce struggle for survival. For decades, theoretical “cognitive science” tried, without much success, to model what was actively growing right next door, in the confrontation between malware and security systems.

If you read the Wikipedia definition of the Artificial Life discipline, you will not find a word about computer viruses there, even though they demonstrate everything this discipline has been trying to simulate since the seventies. Viruses exhibit adaptive behavior (different actions in different conditions, the ability to fool sandboxes and disable antiviruses), self-reproduction with mutations (so that the parents' signatures are useless for catching the offspring), mimicry (disguise as legitimate programs), and much more. If there is anywhere to look for artificial life, it is here.

Does this drive the counter-development of intelligence in defensive systems? Of course. Especially when you consider that these systems are attacked not only by viruses but also by fellow security professionals. For example, Google has been regularly breaking antiviruses (other vendors', of course) for the past two years. Vulnerabilities in protection systems were found before, but thanks to Google, antivirus products are now beginning to be perceived as a threat in themselves. One might suppose that the global search giant is clearing the ground to roll out its own security products. And perhaps it already has, just not for everyone.

This is the moment to say the magic words “machine learning” and “behavioral analysis”, because Google is precisely the company that controls huge data streams of this kind (site indexing, mail, the browser, and so on). However, in security these technologies are developing somewhat differently than in academic AI toys.

Let's look at the origins of cognitive science, which I blamed for holding things back. For more than half a century, the study of animal and human behavior was marked by a dispute between “behaviorists” and “ethologists”. The former, mostly American scientists, argued that behavior is merely a set of reflexes, reactions to the environment; the most radical of them applied the tabula rasa principle to upbringing, claiming they could turn any newborn into a member of any given profession, since a child's mind is a blank sheet on which one only needs to write the correct instructions. The second school, ethology, arose among European zoologists, who insisted that many complex behavioral patterns are innate and that understanding them requires studying the structure and evolution of the organism, the path followed first by comparative anatomy and later by neurobiology and genetics.

It was these two camps that set the academic directions of artificial intelligence research. Supporters of top-down AI tried to describe the whole world “from the top down”, as symbolic rules for responding to external requests; this is how expert systems, conversational bots, and search engines appeared. Supporters of the bottom-up approach built low-level “anatomical” models: perceptrons, neural networks, cellular automata. Which approach could have won in the United States in an era when behaviorism flourished and consciousness was seen as a “blank slate”? Top-down, of course. Only toward the end of the 20th century were the bottom-up proponents rehabilitated.

However, for decades AI theory barely developed the idea that the best model of intelligence should be “mixed”, able to apply different methods. Such models began to appear only at the end of the 20th century, and again not independently but following the achievements of the natural sciences. Convolutional neural networks emerged after it became clear that cells in the cat's visual cortex process images in two stages: first, simple cells detect simple elements of the image (lines at different angles), and then other cells recognize the image as a combination of these simple elements. Without the cat, of course, nobody could have guessed.
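The two-stage idea can be illustrated with a toy sketch in Python. This is not a real convolutional network: the filters below are hand-made assumptions, whereas a CNN learns its kernels from data. Stage 1 slides oriented line detectors over the image, like simple cells; stage 2 pools each response map and recognizes a “+” as the combination of a vertical and a horizontal line. The names `VERTICAL`, `HORIZONTAL`, `detect_cross`, and the threshold value are all hypothetical.

```python
# Hand-made 3x3 "simple cell" filters (assumption: a real CNN learns these).
VERTICAL = [[-1, 2, -1],
            [-1, 2, -1],
            [-1, 2, -1]]
HORIZONTAL = [[-1, -1, -1],
              [2, 2, 2],
              [-1, -1, -1]]


def simple_cells(image, kernel):
    """Stage 1: slide the kernel over the image (valid cross-correlation,
    as in most CNN libraries) and keep only positive responses (ReLU)."""
    kh, kw = len(kernel), len(kernel[0])
    rows = len(image) - kh + 1
    cols = len(image[0]) - kw + 1
    return [[max(0, sum(kernel[a][b] * image[i + a][j + b]
                        for a in range(kh) for b in range(kw)))
             for j in range(cols)]
            for i in range(rows)]


def complex_cell(feature_map):
    """Stage 2a: max pooling over all positions ("is this feature anywhere?")."""
    return max(max(row) for row in feature_map)


def detect_cross(image, threshold=3):
    """Stage 2b: a '+' is recognized as a vertical line AND a horizontal line."""
    v = complex_cell(simple_cells(image, VERTICAL))
    h = complex_cell(simple_cells(image, HORIZONTAL))
    return v >= threshold and h >= threshold


PLUS = [[0, 0, 1, 0, 0],
        [0, 0, 1, 0, 0],
        [1, 1, 1, 1, 1],
        [0, 0, 1, 0, 0],
        [0, 0, 1, 0, 0]]
VLINE = [[0, 0, 1, 0, 0] for _ in range(5)]

print(detect_cross(PLUS))   # True
print(detect_cross(VLINE))  # False: only the vertical detector fires
```

The second stage never looks at pixels directly, only at the outputs of the first, which is the point of the biological analogy.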

Another vivid example is the wavering of Marvin Minsky, who in the 1950s became one of the pioneers of neural network theory, in the 1970s grew disillusioned with neural networks and leaned toward the top-down approach, and in 2006 repented and published the book The Emotion Machine, in which he knocked rational logic off its pedestal. Did people really not realize before then that emotions are another, no less important kind of intelligence? Of course they did. But to turn this into respectable science, they had to wait for MRI scanners with beautiful pictures. No pictures, no science.

But evolution through the arms race in the wilds of the Internet moves much faster. Web application firewalls (WAF) appeared quite recently: Gartner's Magic Quadrant for WAF has been published only since 2014. Yet already at the time of these first comparative studies, it turned out that some WAFs use several “different intelligences” at once. These are attack signatures (top-down symbolic intelligence); behavioral analysis based on machine learning (a statistical model of the system's normal operation is built, and anomalies are then detected against it); vulnerability scanners for proactive patching (in people this kind of intelligence is called “soul-searching”); and event correlation that identifies attack chains over time (has anyone in academic AI built an intelligence that can work with the concept of time?). All that remains is to add a “female intellect” on top that reports attacks in a pleasant voice, and you have a quite realistic model of the human mind, at least in terms of the variety of ways of thinking.
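To make the “several intelligences in one product” idea concrete, here is a minimal Python sketch of three of the four layers named above: a signature check, a statistical anomaly model of normal traffic, and time-window event correlation (the vulnerability scanner is omitted). The rule list, the single feature (parameter length), the z-score threshold, and the window settings are illustrative assumptions, and all class and function names are hypothetical; this is not how any particular WAF product works.

```python
import re
import statistics
from collections import defaultdict, deque

# Hypothetical signature list; real WAFs ship far larger, curated rule sets.
SIGNATURES = [
    re.compile(r"(?i)\bunion\s+select\b"),  # SQL injection
    re.compile(r"(?i)<script\b"),           # cross-site scripting
    re.compile(r"\.\./"),                   # path traversal
]


def signature_hit(payload):
    """Top-down, symbolic layer: does the payload match a known attack pattern?"""
    return any(sig.search(payload) for sig in SIGNATURES)


class LengthAnomalyModel:
    """Statistical layer: learn 'normal' parameter lengths, flag outliers by z-score."""

    def __init__(self, threshold=3.0):
        self.threshold = threshold
        self.mean = 0.0
        self.stdev = 1.0

    def fit(self, normal_payloads):
        lengths = [len(p) for p in normal_payloads]
        self.mean = statistics.mean(lengths)
        self.stdev = statistics.pstdev(lengths) or 1.0  # avoid division by zero

    def is_anomalous(self, payload):
        return abs(len(payload) - self.mean) / self.stdev > self.threshold


class Correlator:
    """Temporal layer: raise an incident when one source produces
    `min_events` alerts within `window_seconds`."""

    def __init__(self, window_seconds=60, min_events=3):
        self.window = window_seconds
        self.min_events = min_events
        self.events = defaultdict(deque)

    def add(self, source, timestamp):
        q = self.events[source]
        q.append(timestamp)
        while q and timestamp - q[0] > self.window:
            q.popleft()  # forget alerts that fell out of the time window
        return len(q) >= self.min_events


def inspect(payload, source, timestamp, model, correlator):
    """Combine the layers: per-request verdicts plus a cross-request incident flag."""
    reasons = []
    if signature_hit(payload):
        reasons.append("signature")
    if model.is_anomalous(payload):
        reasons.append("anomaly")
    incident = bool(reasons) and correlator.add(source, timestamp)
    return reasons, incident


model = LengthAnomalyModel()
model.fit(["id=1", "id=42", "id=7", "id=100", "id=3"])
corr = Correlator(window_seconds=60, min_events=3)
print(inspect("id=5", "10.0.0.1", 0, model, corr))  # ([], False)
```

The layers complement each other: signatures catch known patterns but not novel ones, the anomaly model catches unusual requests without knowing why they are dangerous, and the correlator turns isolated alerts into an attack chain.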

Imitation and co-evolution

As noted above, AI theorists depended heavily on trends in cognitive science. Well, they were not interested in computer viruses, but perhaps they at least learned to imitate a human well, as the great Turing bequeathed?

Hardly. For many years, various visionaries have promised that advertising systems, having collected our personal data, tastes, and interests, will start predicting our behavior fairly accurately and reap all sorts of benefits from such perspicacity. But in practice, contextual advertising spends a month showing us the thing we bought long ago, while Facebook hides messages that are actually important.

Does this mean that a human is very hard to imitate? Yet on the other hand, one of the main trends of the year was a series of serious attacks built on banal phishing. Multimillion-dollar losses at banks, leaked correspondence of major politicians, blocked San Francisco metro computers: it all starts with one person opening one email. No voice imitation, no logical dialogue, no neural network. Just one letter with the right subject line.

So perhaps, for a more realistic picture of the world, it is worth rewriting Turing's definition from a security point of view? Let's consider a machine to be “thinking” if it can rob a person. Yes, yes, this violates beautiful philosophical ideas about intelligence. But it makes clear that imitating a person on the basis of their past experience (search queries, correspondence history) overlooks many other options. A person may respond to things that touch the most basic universal values (erotica), or, conversely, to curiosity about rare events never encountered in their past experience (winning the lottery).

By the way, many science fiction writers sensed that of all the variants of the “imitation game”, the one related to security deserves the most attention. It does not much matter that Philip K. Dick's androids were exposed by the wrong emotional reaction (which can be faked), and Mary Shelley's by an inability to write a haiku (which most people cannot do either). What matters is that it was a game of survival, not mere imitation. Compare this with the vague goals of contextual advertising, including such a lofty goal as “spend the given budget, while making it clear that it is not enough.” And you will understand why the artificial intelligence of advertising systems is in no hurry to evolve, unlike that of security systems.

One more conclusion can be drawn from the success of phishing attacks: the co-evolution of machines and people affects both sides of this symbiosis. Bots still barely speak like humans, but we already have hundreds of thousands of people who speak and think in machine languages all their lives; these poor fellows used to be honestly called “programmers”, but now they are politically correctly disguised as “developers”. And the remaining millions of citizens, hooked on machine formats of thinking (likes, emoji, tags), are becoming more mechanistic and predictable, which simplifies techniques of influence.

Moreover, replacing a diverse sensory experience with a single templated communication channel (a smartphone window with a Facebook feed) means that it is easier to impose someone else's choice on a person than to work out their own tastes. At the beginning of the last century, Lenin had to spend hours speaking from an armored car to persuade the masses, who were considered “illiterate”, but today banal spam bots on social networks have the same effect on literate people. They not only swindle citizens out of millions of dollars but also take part in coups d'état, so the US Defense Advanced Research Projects Agency (DARPA) already regards them as a weapon and has held a competition to counter them.

What's funny is that in the DARPA competition, the defense methods assume an arms race within the same electronic environment of social networks, as if that environment were a fixed given. Yet this is exactly what distinguishes us from animals: people themselves created the digital environment in which even simple bots easily fool them. So, for the sake of security, we can pull the reverse trick. Drain the swamp, and the Anopheles mosquitoes will die out.

This approach may seem archaic to some: the author, they will say, proposes abandoning progress. So here is a more practical example. Last year several studies appeared whose authors use machine learning to eavesdrop on passwords through “side” electronic channels: for example, through fluctuations of the Wi-Fi signal as keys are pressed, or through a Skype call running alongside, which records the sound of the keystrokes. So what, shall we invent an air-noise system in response? Hardly. In many cases it will be more effective to cut off the extra “air” at the moment of authentication, and to replace password-based authentication (a simple machine language!) with something less primitive.

Parasites of complexity and AI psychiatrists

Since in the previous lines I reduced AI to simple social bots, let's now talk about the inverse problem: complexity. Any news about clever neural networks can be continued with the joke that their achievements do not carry over to people. For example, we read the headline “A neural network has learned to recognize criminals” and continue: “...but its creators have not learned how it does it.” Indeed, how can you interpret knowledge, or transfer it anywhere, when it consists of abstract weights spread over thousands of nodes and connections of a neural network? Any system based on this kind of machine learning is a “black box”.

Another paradox of complex systems is that they break in simple ways. In nature, various parasites demonstrate this well: even a single-celled organism like Toxoplasma manages to control the behavior of mammals, which are vastly more complex.

Together, these two properties of intelligent systems make them an excellent target for hackers: they are easy to hack, but it is hard to tell a hacked system from a normal one.

Search engines were the first to encounter this: a parasitic search engine optimization (SEO) business flourished on them. It was enough to feed the search engine a certain “bait”, and the chosen site landed in the top results for a given query. And since search algorithms are incomprehensible to the average user, he is often unaware of such manipulation.
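How cheap such “bait” can be is easy to show on a deliberately naive ranker. The Python sketch below is an illustration only: it assumes a hypothetical scoring rule (raw count of query words on the page) that no modern search engine uses, and the page texts are invented. Against such a rule, simply repeating the keyword (keyword stuffing) lifts a junk page above an informative one.

```python
import re
from collections import Counter


def tokenize(text):
    """Lower-case the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def naive_score(query, document):
    """Hypothetical toy ranker: relevance = raw count of query words in the page."""
    counts = Counter(tokenize(document))
    return sum(counts[word] for word in tokenize(query))


def rank(query, pages):
    """Return page names ordered from most to least 'relevant'."""
    return sorted(pages, key=lambda name: naive_score(query, pages[name]), reverse=True)


pages = {
    "encyclopedia": "Flu is a viral infection. Treatment of flu includes rest and antiviral drugs.",
    "spam": "flu flu flu flu flu treatment cheap pills buy now",
}

print(rank("flu treatment", pages))  # ['spam', 'encyclopedia']
```

Real engines dampen repeated terms and weigh many other signals for exactly this reason, but every new signal becomes a new target for optimizers, and from the outside the user still sees only the final ranking.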

Of course, the flaws of a “black box” can be detected through external assessment by human experts. Google Flu Trends stopped publishing its predictions after a study showed that the service had greatly overestimated flu epidemics from 2011 to 2014, which could have been caused by banal artificial inflation of search queries. But such a quiet shutdown is the exception: services with similar glitches usually live on; after all, money was spent on them! Right now, as I write these lines, Yandex.Translate tells me that “bulk” translates into Russian as “Навальный” (Navalny, a politician's surname that also means “loaded in bulk”). But this buggy intellect has a touching “Report an error” button, and perhaps, if another five hundred sane people use it, this Navalny will disappear too.

Today search engines feed on constant human corrections, although they don't like to admit it, and they even gave this profession the most mysterious name (“assessor”). In reality, thousands of testers are chained to the oars of the machine-learning galleys of Google and Yandex, spending whole days on the dull work of evaluating search results so that the algorithms can be further tuned. President Obama did not know about the hard lot of these barge haulers when, in his farewell speech, he promised that artificial intelligence would free people from low-skilled work.

But will such support help when complexity rises to the level of personal artificial intelligence, with which every unhappy user will be unhappy in their own way? Suppose your personalized Siri or Alexa gave you a weird answer. What is it: a flaw in a third-party search engine? A glitch in self-learning from your past shopping history? Or did the developers simply add a bit of spontaneity and humor to the speech synthesizer so that it sounds more natural? Or has your AI agent been hacked? It will be very hard to find out.

Add to this that the influence of intelligent agents is no longer limited to “answering questions”. A hacked bot in an augmented reality game such as Pokémon Go can endanger a whole crowd of people by luring them to places more convenient for robbery. And if the already mentioned Tesla on autopilot crashes into a stationary truck, as happened more than once last year, you simply won't have time to call support.

Maybe smarter security systems will save us? The trouble is that as they grow more complex and arm themselves with machine learning, they too turn into “black boxes”. Security event monitoring systems (SIEM) already collect so much data that analyzing it resembles either a shaman reading animal entrails or a trainer communicating with a lion. In fact, this is a new market for the professions of the future, among which “AI psychiatrist” is not even the strangest.

Moral Bender

You have probably noticed that the relationships between people and machines described in this article (competition, symbiosis, parasitism) do not include the more familiar picture in which smart machines are obedient servants of people and care only about human welfare. Yes, we have all read Asimov and his Laws of Robotics. But in reality, nothing of the kind exists yet in artificial intelligence systems. And no wonder: people themselves do not yet understand how to live without harming anyone else. A more popular morality is to care for the good of a certain group of people, even if other groups get hurt. All are equal, but some are more equal than others.

In September 2016, the British Standards Institution released its “Guide to the Ethical Design of Robots”. And in October, researchers from Google presented their “discrimination test” for AI algorithms. The examples in these documents are very similar. The British worry that the police will be taken over by racist robots: given data on higher crime rates among Black people, the system starts to treat Black people as more suspicious. The Americans, in turn, worry about banking AI being too picky: if men are twice as likely as women to fail to repay a loan, a machine learning algorithm may offer the bank a winning strategy of lending mainly to women. That would be “unacceptable discrimination”.
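The loan example can be turned into numbers with a short Python sketch. As far as I understand it, the Google work formalizes fairness as “equality of opportunity”: among applicants who would actually repay, every group should be approved at the same rate. The sketch computes that gap alongside the plainer approval-rate gap. The applicant records, group labels, and function names are invented for illustration; this is not the researchers' code.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    group: str      # protected attribute, e.g. "men" / "women"
    repaid: bool    # ground truth: did the applicant actually repay?
    approved: bool  # the model's decision


def approval_rate(applicants, group):
    """Share of the group that the model approved (demographic parity view)."""
    members = [a for a in applicants if a.group == group]
    return sum(a.approved for a in members) / len(members)


def true_positive_rate(applicants, group):
    """Share of the group's reliable borrowers that the model approved."""
    qualified = [a for a in applicants if a.group == group and a.repaid]
    return sum(a.approved for a in qualified) / len(qualified)


def equal_opportunity_gap(applicants, group_a, group_b):
    """0.0 means reliable borrowers get the same chance in both groups."""
    return abs(true_positive_rate(applicants, group_a)
               - true_positive_rate(applicants, group_b))


# Invented data: a model that "wins" by lending mostly to women.
applicants = [
    Applicant("men", True, False), Applicant("men", True, False),
    Applicant("men", True, True), Applicant("men", False, False),
    Applicant("women", True, True), Applicant("women", True, True),
    Applicant("women", True, True), Applicant("women", False, False),
]

print(approval_rate(applicants, "men"), approval_rate(applicants, "women"))  # 0.25 0.75
print(equal_opportunity_gap(applicants, "men", "women"))
```

Here two of three reliable male borrowers are refused while every reliable female borrower is approved, so the gap is about 0.67. Closing it means approving more men, which in this data also means accepting more loan risk, which is exactly the trade-off raised next.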

It all seems logical, but by enforcing equality in these cases you can easily increase the risks for other people. And how is a machine supposed to apply abstract ethics instead of bare statistics?

This is probably the main current trend in the “AI security” topic: attempts to constrain artificial intelligence with principles that are themselves questionable. Last October, the US presidential administration released a document titled “Preparing for the Future of Artificial Intelligence”. The document mentions many of the risks I described above. However, the answer to all the problems is vague recommendations along the lines of “it should be tested, we must make sure it is safe”. Of the substantive laws on AI, it names only the new US Department of Transportation rules on the commercial use of drones; for example, they are not allowed to fly over people. Meanwhile, on lethal autonomous weapons systems (LAWS), the document modestly states that “the future of LAWS is difficult to predict”, although such systems have been in use for a long time.

The European Parliament took up the same theme and in early 2017 published its own set of vague recommendations on how to build ethics into robots. It even attempted to define their legal status, not so far from that of humans: “electronic persons with specific rights and obligations”. A little more, and we will not be allowed to delete buggy programs, so as not to violate their rights. But Russians have their own pride: State Duma members compare artificial intelligence to a dog that needs to be muzzled. Here is a great topic for protest speeches: freedom for robots in one single country.

Jokes aside, it is possible that these vague principles hold the best solution to the AI security problem. It seems to us that the evolutionary arms race could develop artificial intelligence to a dangerous superiority over Homo sapiens. But when smart machines have to face human bureaucracy, ethics, and political correctness, this may significantly dull their intellect.

===============================

Author: Alexey Andreev, Positive Technologies

Source: https://habr.com/ru/post/321586/
