Wit and courage: how we were wrong many times when creating iFunny

This is not an article, this is a facebook. What you read under the cut is squeezing our ridiculous techno-misses for all 5 years of work on the flagship product - iFunny. Perhaps our faylovaya history will help you avoid mistakes, and perhaps cause laughter. Which is good too. To make people laugh - FunCorp has been calling for 13 years.

Some introduction to the team and product

We formed the backbone of the team as students back in 2004 in Penza. For many years they have done something mobile and, as a rule, funny. In 2010, in a series of many, we are releasing the IDAPricol, an iOS application for viewing funny pictures. Without much investment, it reaches the audience in Russia and the CIS.

“Why not?” - we think and already in 2011 we are making a version for the North American market. In the first 12 months, iFunny from the App Store and Google Play will download more than 3 million people, and in 5 years the product will turn into a full-fledged social media entertainment very popular in the USA ( DAU = 3,5M).

The main part of the audience are users of iOS and Android applications. All functionality is available there. But the Web version is gradually catching up the apps both in terms of audience and content. Every day, users post about 400K memes in iFunny, and browse more than 600M.

One often hears: “What is so complicated here, just a list of pictures. Is it really impossible to do this after work? ”However, in addition to implementing cunning business logic (in our case, entertainment logic), it is also a serious technical challenge under the hood. Here, for example, with what it is necessary to cope:

- 15,000 RPS in peak come backend servers that form the answer within 60 milliseconds;

- 3.5 billion events per day are written and analyzed in Data Warehouse;

- 5.5 petabytes of content per month is distributed using a CDN . At the peak it is 28,000 requests per second, or 44 Gbit per second.

In the very first version, this article was on 22 pages: they gathered into it everything that they only remembered. Then they decided that it would be another file, only now on Habré, and chose the most characteristic: about the confusion with the repositories, errors in DAU calculations, BDSM games with Mongo, the specifics of the audience. And a little intrigue: we brought Trump and Putin together when it was not yet mainstream.

Highload project

Before iFunny, we had many projects, but none with the highload prefix. The most impressive was the daily attendance of 100,000 unique visitors. It all happened too abruptly. We could not cope with the load and data volumes, so we regularly changed the hosts, then the technologies.

It looked like this:

Each move was caused by its own reasons: somewhere it was expensive, somewhere there was not enough functionality, and somewhere, as in the case of Hetzner, the hosting itself forced to run. We were a rather dysfunctional neighbor, who is regularly ddosyat and fill with reasonable complaints about users booted boobs / pussy and other dismemberment.

As you have noticed, especially we managed to run from iron to the clouds and back. About the case of "back" just tell.

Obstacle Escape

At the beginning of 2012, the application and database were on AWS , and we will soon begin to distribute content via the CDN, going thorny from one 10Gbps server to several with rsync.

But by May, our vertically-scalable MySQL on AWS rests against the ceiling and begins to fail under load. And since this is RDS , we almost can not twist the pens. Realizing that the clouds are still about horizontal scaling, and we broke, we decide to move to OVH, which justified itself even when distributing content.

Any normal developer would start thinking about changing the architecture, sharding, but once again we took the path of least resistance, that is, vertical scaling. First of all, MySQL was taken to OVH. Productivity has increased, because it's still hardware, not virtualization, which has a small overhead and a lot of nuances with disk I / O. In this case, the iron took a powerful - 8-core Xeon, 24 GB of RAM and 300 GB SSD, and the money came out cheaper AWS.

An interesting fact came to light later. When digging into the logs, noticed Russian IP, who stole content, sending 50 requests per second. This created a heavy load on API backends, especially on MySQL. These requests were one of the reasons for the rapid flight to OVH. After we filtered the whole thing, the database became even easier, only three times already.

They were not very upset then, because 50 requests per second could even run in a couple of weeks with new users. But they decided to take revenge, because the pumping was really very tough methods and large pages. Instead, they sent a 700-MB Ubunt distribution kit disguised as jpeg instead of images. We are not sorry - they were very angry. It is now at peak 15000 RPS you can only laugh at the “problem” 50, but then it was significant.

As a result, the OVH capacities were not happy for long. A year after the move, they had problems with the operational provision of new servers. The delay reached a month and this is just at the behest of their founder . We continued to grow and were seriously affected by such a policy. We returned with the whole lineup to AWS, where there are already proven solutions, SaaS products, and most importantly, flexibility. Suppose there is a severe enterprise that does capacity planning, and we will go write code. And now we increasingly understand that if it were not for the clouds and SSD at first, we would not exist at all. Like this article.

Now we are trying to use AWS managed services to the maximum: S3, Redshift, ELB, Route 53, CloudFront, ElasticMapReduce, Elastic Beanstalk. For a small team, this is the way out, it works more efficiently. But this does not mean that it is possible to solve a problem with the help of magical technology. The same Redshift we have already dug deep: we had to thoroughly study the giblets. Therefore, all this, like NoSQL, is definitely not a silver bullet. Need to understand the details.

Fast growth

LDAU

All the same 2012 year. In the period of turmoil and wobbling in technology, we are doing well with growth: TOP-3 on the App Store and the rise in daily traffic.

But as you know, any distemper gives rise to a couple of Lzhedmitriev. We are faced with two cases of LDADA.

First jump

Suddenly, in 2 days, we get suspicious +300 thousand: 2200K turned into 2500K.

The reason was found out quickly. iFunny can be used anonymously without registering. Such users are also counted. Someone who picked up our API took advantage of this. I just sent requests, substituting random identifiers, which we considered.

Second jump.

As soon as we moved the base to OVH and it stopped braking, DAU jumped. We are happy to think that the UX - this is not a joke. The application began to work faster and everyone started using it right away. Therefore, we began to prepare for the April Fool corporate event with even more enthusiasm - a record. But alas, it was not a record, but a period of the application that was lasting for a couple of weeks from AWS to OVH. There it was supposed to meet with the database and heal with it happily. This discrepancy revealed an incorrect DAU count in MySQL. Smart guys would count by access-logs, but for some reason we made non-atomic queries like “if the user has not logged in that day, then do +1 to the counter” for each user action. As a result, the longer these queries to the database lasted, the more DAU became due to parallel queries. : facepalm:

Transferred registration and DAU counting in Redis to the usual Sorted Set - this solved the problem. We liked this pattern, but in three years we turned the code into noodles from counting metrics to Redis. In 2016, we implemented server and client events collection via fluentd + S3 + Redshift. This saved us from the spaghetti in the code. About how this happened, you need to write a separate post. And not even one.

Redis-Mister

In due course began to bring Redis with which earlier problems were not. The peak of problems fell at night. He simply became inaccessible from PHP, and everything immediately went down, since we had to go to him for almost every HTTP request.

We start to understand: we look at the Redis-process, we uncover strace, and there “Too many open files”. It turns out that we have a limit of 1024 connections, which is not affected by the maxclients parameter. Run with ulimit -n 60000 - the maximum number of connections rises only to 10240 and no more. An amazing thing was dug up here: in Redis 2.4 and older there was a hard limit for 10240 connections. A couple of days later, Redis version 2.6 RC5 was installed, the persistent connections to it were turned on, and we breathed a sigh of relief, knowing that now we definitely have enough handles.

Mongo-BDSM

Team growth will begin only in 2013-14. In addition to QA, a DevOps team will be formed, a backend and a frontend will be completed. In the mobile development of three developers on iOS and Android. But in the very early stages, one and a half people remained. Hence the lack of resources, lack of sleep due to time differences and the banal inattention. And had to do a lot. For example, several times to redo the API or completely rewrite the code on the backend to work with MongoDB. Although many of its normal database and do not consider.

Who where draws inspiration, and we at YouPorn. On the well-known in narrow circles, Highscalability read that they use Redis as the main data repository. Of course, it’s scary to keep everything in RAM, it will take difficult architectural solutions. But if YouPorn is already sitting on Redis, can't we cope with MongoDB?

Yes, sometimes it was pleasant to her, but more often she tied the atat and inserted a gag. This novel continues to this day, but then we, in the status of the first guy, experienced many tantrums and shoals on ourselves.

The excuse for all was sharding out of the box and, of course, HYIP. In our case it was convenient, because we are lazy and did not want to bother with writing additional code and deal with issues of balancing data. In MongoDB we were attracted by in-place updates, schema-less & embedded documents. It made us think about the approach to designing a data structure in high-load services. So began the rewriting and migration from MySQL. This is about the middle of 2012 and we started using MongoDB 2.0.

The bad sign, which anticipated all our future ordeals, was already at the very beginning. Error made in the process of rewriting. The fact is that any meme that is poured into iFunny can be banned: this requires a certain number of complaints. We set the threshold values in MongoDB, and when we put it into operation, they continued to be read from MySQL. There they were almost zero and literally one or two complaints were enough to ban the work. And with our trolls ... 4,000 selected memes are missing from Featured. Everything was done in a day, but the situation was dramatic due to our banal inattention.

Migration

After 5 months of rewriting the code, in the time free from extinguishing fires, the day of great migration came, which immediately failed: MongoDB could not stand the load. Because they have forgotten a lot of indexes to throw. Because a bunch of bugs created. The situation was aggravated by the raw MongoDB-PHP driver with memory leaks and problems with forked scripts. Who then said about testing? There was no testing at that time, we have no time - we saw new features and immediately in production.

On that day, our users hoped to laugh at least somewhere overflowed on the resources of 9GAG and safely laid it. At least one joy.

The time difference also threw up problems: when there is the most active activity in the USA - it is night here. And this is how we looked the next morning when we had to go to bed:

Two weeks of hard work and we decided on a second attempt. Everything went smoothly, almost perfect. There was an understanding that without replicas and shard we would not go far, but with MongoDB it was easy to crank.

Everything was fine, until we reached the peak load. The base could not withstand the influx of users at night, and we again adjoined. At the same time, the reasons for the brakes were not clear - CPU or IO, the devices did not really talk about anything. After another sleepless nights, with the help of a good Samaritan , there is a source of problems: the standard memory allocator in MongoDB does not work well under our load. As a result, they changed the allocator to tcmalloc and waited for the night. Voila, the load dropped 3 times and we sometimes began to sleep at night. Then they understood something else important: people write databases too. In the next version, the MongoDB 2.6 developers began to include tcmalloc in the default distribution.

All this time, our support raked a bunch of letters complaining about the work of the application:

Apparently, then we had a unique situation. Once again it was confirmed that very few people face such a high load. Or to paraphrase differently: we are the only dolt who uses such technologies on such a load and in principle admits that she can go to the same node.

If you go back in time and decide whether to migrate to MongoDB, then the answer would be unequivocal: no need as primary storage. Now the work on the errors would look like this: first get rid of the joins, do sharding with virtual shards, carry the counters somewhere and count them asynchronously, and for schema-less use something like jsonb from PostgreSQL. Who knows, maybe we are migrating again. We do not promise.

Lecture hall

The audience of the humorous application has one huge plus - you can laugh at everything. For example, over how our servers fall:

Also, no one bothers to arrange a vote for the title of “Best on toilet app”:

Often letters of gratitude for the fact that the application helps to cope with depression fall to us. Humor treats:

All this directly affects us.

The first time after the launch of iFunny technical marriage was so much that users called the development team monkeys. We do not deny. Take a look at at least the FunCorp logo.

But there is another side.

Firstly, there are a lot of users. The slightest mistake - and support is overloaded.

Secondly, they want to assert themselves. In the order of things to arrange a raid on the content and minus everything.

Your API is not working here.

Our API methods have stopped working. Complaints showered. There were many hypotheses, they even thought about blocking by cellular networks. But when there were enough user complaints, we were able to find a pattern. These were iPhones with jailbreak. Then it was fashionable to remove the protection and implement their own tweaks with their own fonts and other thingies. For many, especially teenagers, it was fun and helped to stand out. We had an allergy to one of these tweaks: it intercepted outgoing HTTP requests and deleted some of the headers before sending it to the server. We began to warn users about problems with specific tweaks, and this was the case.

We did not even think about such nuances, but a large audience does its job. Now we don’t remember how many of these were, 10 or 50 thousand. Regarding the entire application - this is a miser, but for support ... you have already seen how it looks.

Conclusion: reputation suffers, even if Krivoruk is not you. For all the long experience of development, we ran across all the bugs and crashes, starting with Flurry and AWS, ending with Apple and Google. This beat both in our reputation to users, and on DAU.

Inattention

Finally, inattention. Because of the human factor, a lot of mistakes happen. And we are no exception.

146% can organize not only Churov

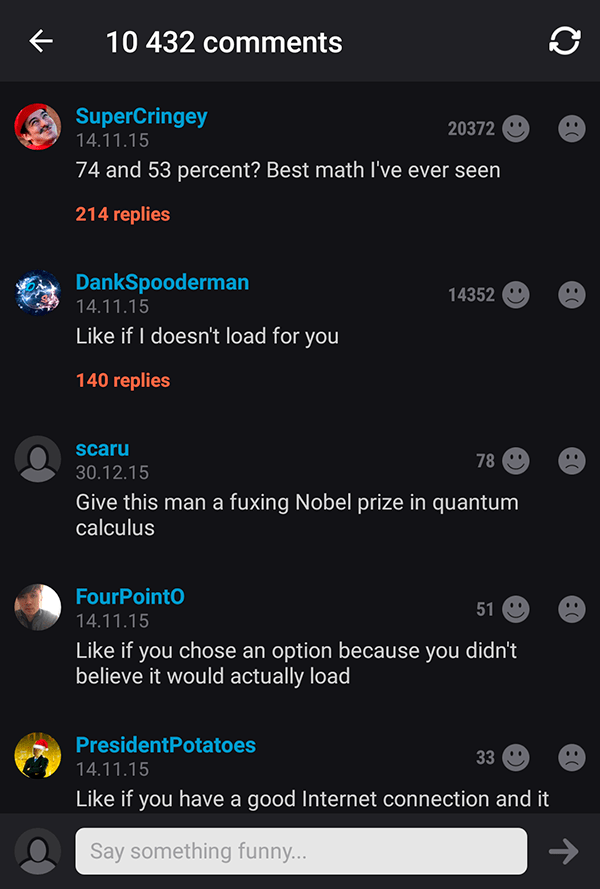

In iFunny web app appeared for voting. So the stars agreed that, due to the lack of time, the backenders and the desire of the front-vendors to write the backend code, the voting turned out to be non-atomic. The update of the number of votes went through the usual increment of the field in the code, and for the base it is a typical read-before-write without locks. There was a discrepancy in the best traditions of the Russian elections. Users, of course, pushed us down. Perhaps they even thought that it was done on purpose. But only on Habré we will tell the whole truth.

The testimony of shame did not survive, but eloquent comments remained:

In the next vote, everything was already correct, but if we once again count in our style, then we can conclude that Trump, who received 48% of the vote, would have lost Putin almost 2.5 times.

Banal sloppiness

You collect, you collect users, you involve them, you merrily mercilessly, and then you forget about some trifle, that's all. No them. We made our own shooting to the audience 2 years ago.

Made badge support for an Android application. In 2015, they appeared only in Samsung. Notification was cool and not annoying. After some time, the badges broke and nobody noticed. After a few more months, one of the products noticed this. We have all repaired and added to the DAU 120K.

It turns out that you need to be more careful with everything: both QA, and products, and developers. And you. Yes, yes - you. Now you are reading this longrid, and in the meantime, somewhere, something is breaking. Don't be like us, go check.

And we resume the blog on Habré, where we will share new-old stories.

In 2013, we only had enough for one post about the optimization of animation To Gif or not to Gif . Over the past years, a lot of interesting things have accumulated, which I would like to tell about: AWS, NoSQL, iOS, Android, math in tapes, Big Data, content pre-moderation, spam. Sometimes it is not even Feil, and successful solutions and for each there is a whole mountain of memes. Therefore, more humor in life, because Fun Happens :)

')

Source: https://habr.com/ru/post/321508/

All Articles