NetApp ONTAP & VMware vVOL

In this article, I would like to review the internal structure and architecture of the vVol technology implemented in NetApp storage systems with ONTAP firmware.


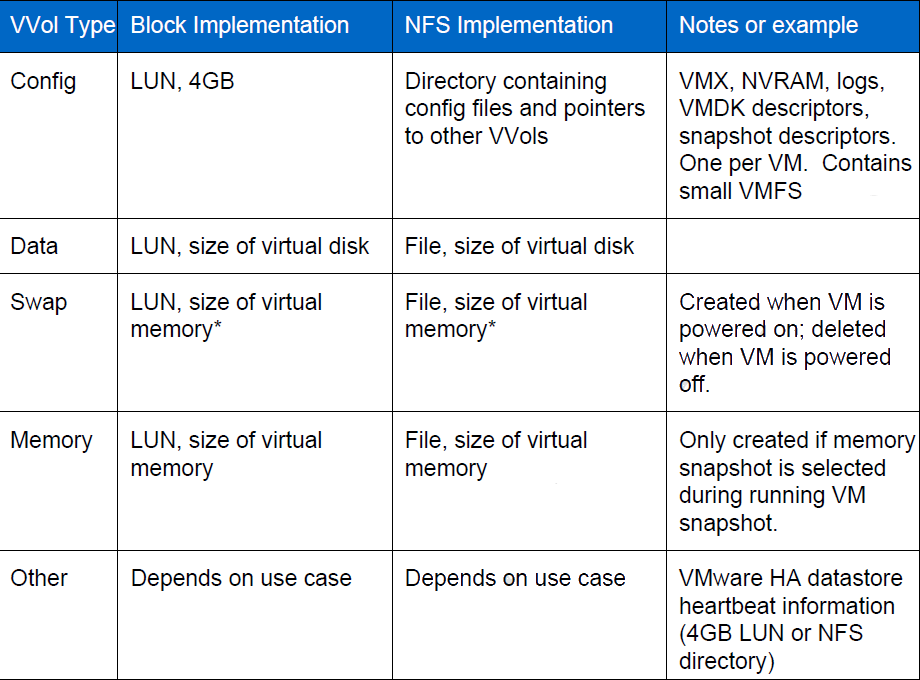

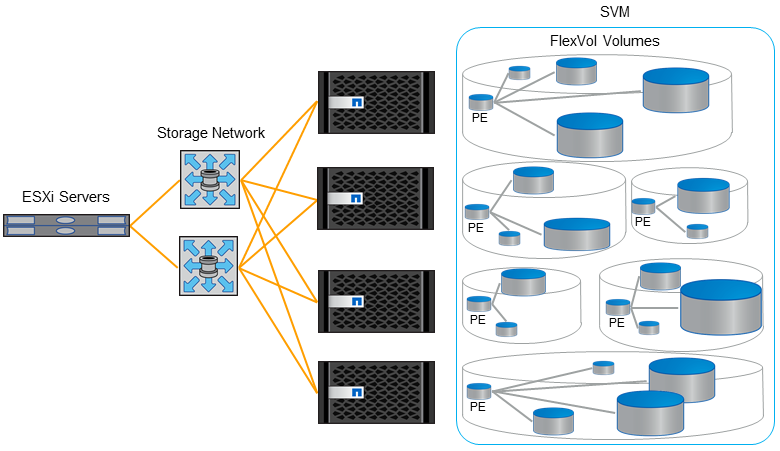

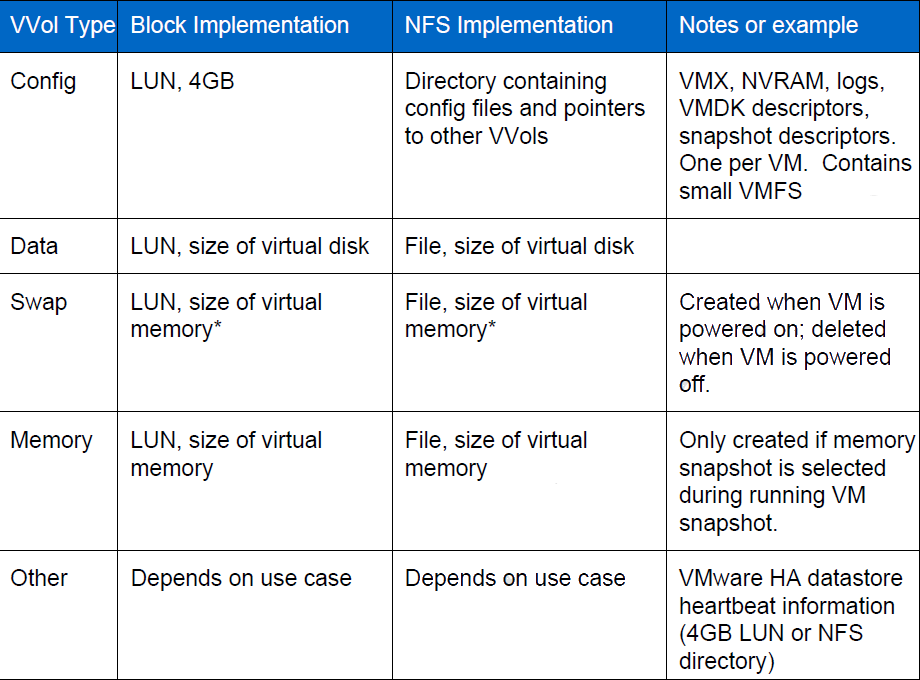

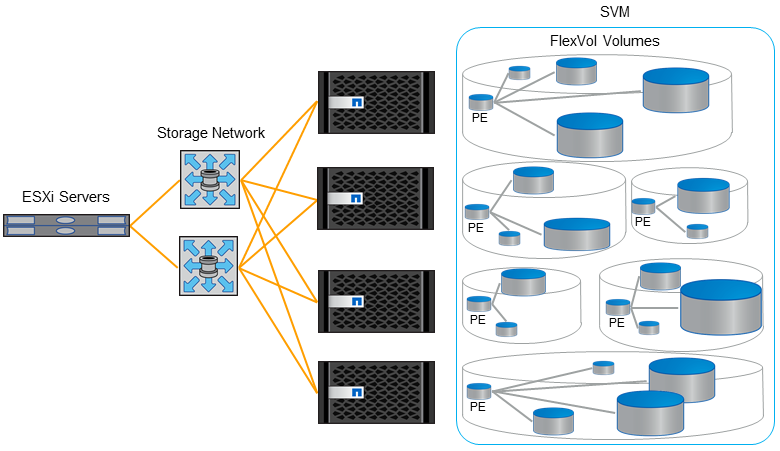


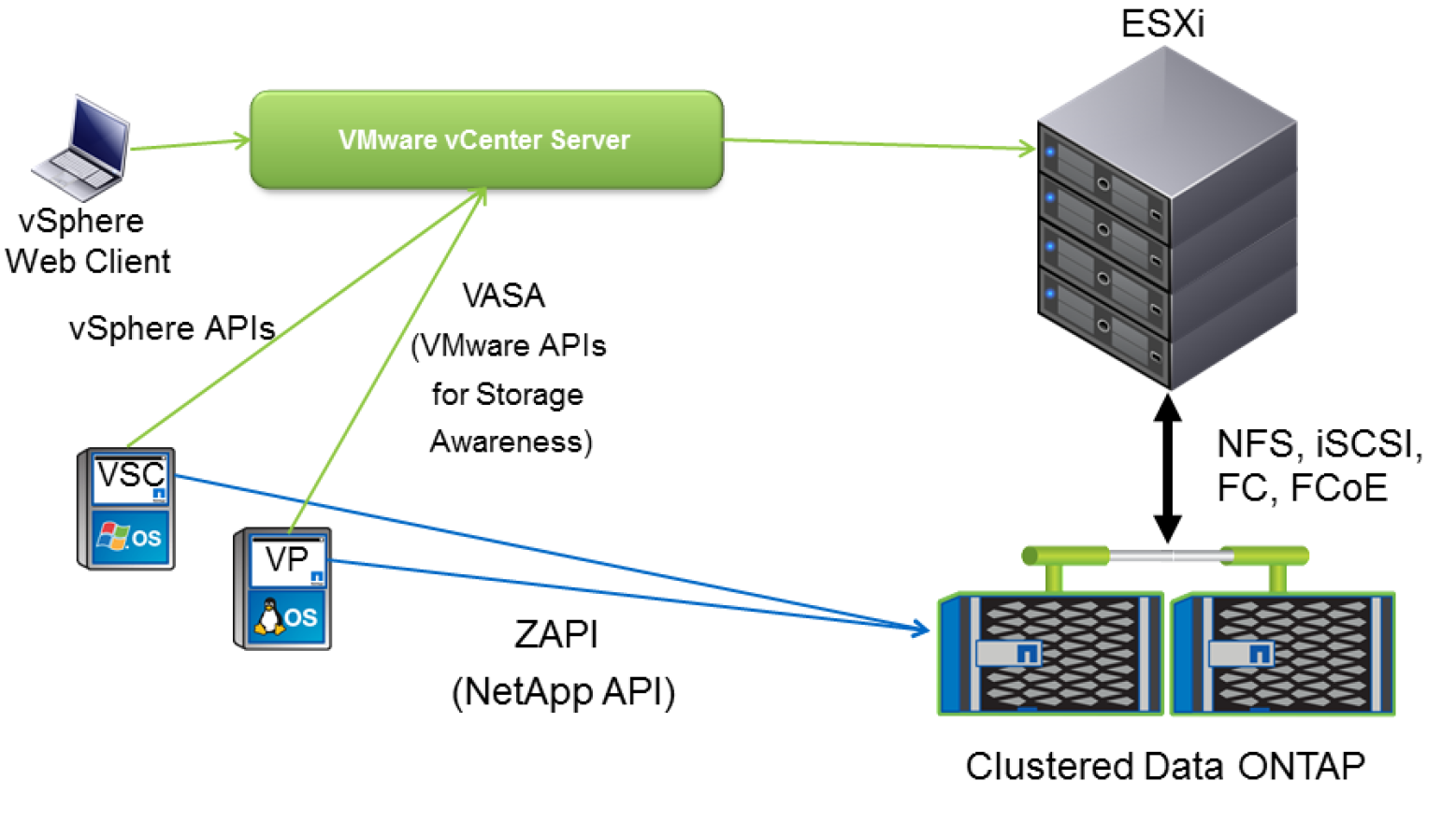


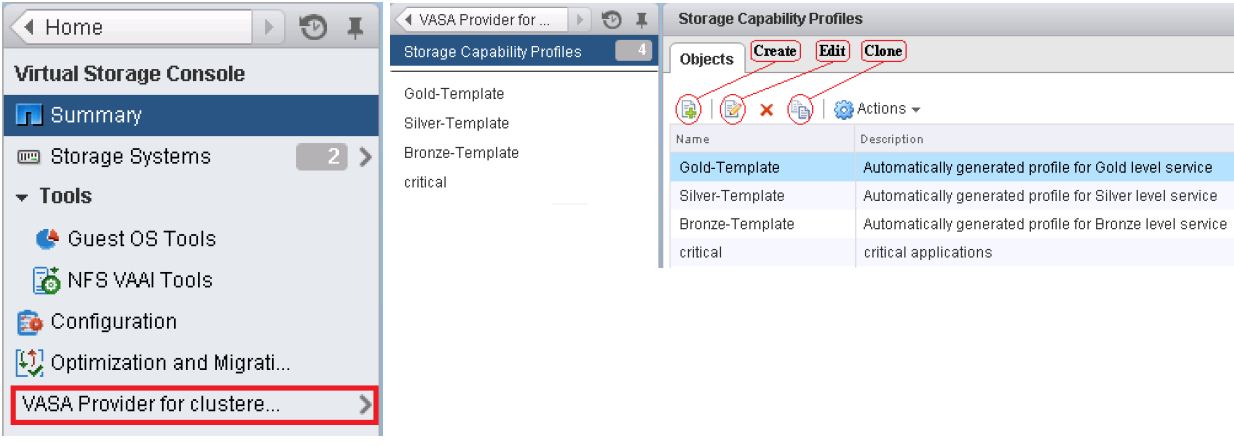


Why did this technology appear, why is it needed, and why will it be in demand in modern data centers? I will try to answer these and other questions in this article.

vVol introduced storage policy profiles: when a VM is created, its virtual disks are provisioned with the characteristics the profile specifies. In such an environment, the virtualization administrator can quickly check in the vCenter interface which virtual machines live on which media and see their storage-side characteristics: the media type, whether caching is enabled, whether the data is replicated for DR, and whether compression, deduplication, encryption and so on are in use. With vVol, the storage system becomes simply a set of resources for vCenter, and the two are integrated much more deeply than before.


Snapshots

The cost of consolidating VMware snapshots and the extra load they place on the disk subsystem may go unnoticed in small virtual infrastructures, but even those can run into the negative effects: a virtual machine that slows down, or snapshots that cannot be consolidated (removed). vVol supports hardware QoS for individual virtual disks, as well as NetApp hardware-assisted replication and snapshots, which are designed and work fundamentally differently and, unlike VMware COW snapshots, do not affect performance. It might seem that few people use these snapshots, so why does it matter? The point is that practically every backup scheme in a virtualized environment has to rely on VMware COW snapshots to obtain consistent data.

vVol

What is vVol? vVol is a layer on top of the datastore, that is, a datastore virtualization technology. Previously, a datastore lived on a LUN (SAN) or a file share (NAS), and as a rule each datastore hosted several virtual machines. vVol made the datastore more granular by creating a separate datastore for each virtual machine disk. On the one hand, vVol unified work with NAS and SAN protocols in the virtualization environment: the administrator no longer cares whether the objects are LUNs or files. On the other hand, each virtual disk of a VM can live in a different volume, LUN or disk pool (aggregate), with different cache and QoS settings, on different controllers, and can have its own policy that follows the disk. With a traditional SAN, all the storage system could “see”, and therefore manage, was the datastore as a whole, not each virtual disk separately. With vVol, each disk of a virtual machine can be created according to its own policy. Each vVol policy, in turn, selects free space from the available storage resource pool that meets the conditions specified in the policy.
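The policy-driven placement described above can be illustrated with a minimal Python sketch. It is only a toy model: the `Pool` and `Policy` classes, the capability names and the first-fit rule are assumptions made for illustration, not the way VASA Provider or ONTAP actually chooses a location.

```python
from dataclasses import dataclass, field


@dataclass
class Pool:
    """A hypothetical storage resource pool (for example, a FlexVol on some aggregate)."""
    name: str
    free_gb: int
    capabilities: set = field(default_factory=set)


@dataclass
class Policy:
    """A hypothetical per-disk policy: the capabilities a disk must get."""
    name: str
    required: set


def place_disk(policy, size_gb, pools):
    """Return the first pool that satisfies the policy and has room, else None."""
    for pool in pools:
        if policy.required <= pool.capabilities and pool.free_gb >= size_gb:
            pool.free_gb -= size_gb  # reserve the space for the new virtual disk
            return pool
    return None


pools = [
    Pool("sata_pool", free_gb=5000, capabilities={"dedup", "compression"}),
    Pool("ssd_pool", free_gb=800, capabilities={"dedup", "qos", "replication"}),
]
gold = Policy("gold", required={"qos", "replication"})
bulk = Policy("bulk", required={"compression"})

# Two disks of the same VM land in different pools, each following its own policy.
os_disk = place_disk(gold, 100, pools)
data_disk = place_disk(bulk, 2000, pools)
print(os_disk.name, data_disk.name)
```

The point of the sketch is that the policy, not the datastore, is the unit of decision: two disks of one VM can end up on different media with different features.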

This allows the resources and capabilities of the storage system to be used more efficiently and conveniently. Each virtual machine disk sits on its own dedicated virtual datastore, similar to an RDM LUN. At the same time, sharing one common space is no longer a problem with vVol, because hardware snapshots and clones are taken not at the level of the whole datastore but at the level of each individual virtual disk, without using VMware snapshots. The storage system can now “see” each virtual disk separately, which makes it possible to expose storage capabilities (for example, snapshots) to vSphere and gives deeper integration and more transparent disk resources for virtualization.

VMware began developing vVol in 2012 and demonstrated the technology in a preview two years later; at the same time NetApp announced vVol support in its ONTAP-based storage systems over all protocols supported by VMware: NAS (NFS) and SAN (iSCSI, FCP).

Protocol endpoints

Let's move from the abstract description to specifics. vVols are located on FlexVol volumes: as files for NAS and as LUNs for SAN. Each VMDK disk lives on its own dedicated vVol. When a vVol is moved to another storage node, it is transparently re-mapped to a PE on the new node. The hypervisor can take snapshots separately for each disk of a virtual machine. A snapshot of a virtual disk is a full copy of it, that is, one more vVol. Even a new virtual machine without snapshots consists of several vVols, which come in different types depending on their purpose.

Now about the PE itself. A Protocol Endpoint (PE) is the entry point to vVols, a kind of proxy. Both PEs and vVols reside on the storage system. For NAS, every Data LIF with its own IP address on a storage port is such an entry point. For SAN, it is a special 4 MB LUN.

A PE is created on demand by the VASA Provider (VP). A PE maps all of its vVols through a Binding request sent from the VP to the storage system; the most common trigger for such a request is a VM start.

ESXi always connects to a PE, never directly to a vVol. For SAN, this avoids the limit of 255 LUNs per ESXi host and the limit on the number of paths to a LUN. All vVols of one virtual machine must use a single protocol: NFS, iSCSI or FCP. All PEs have a LUN ID of 300 or higher.

Despite minor differences, NAS and SAN are organized very similarly in terms of how vVol works in a virtualization environment.
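To make the proxy role of the PE more tangible, here is a small Python sketch. It is a toy model under stated assumptions: the `ProtocolEndpoint` class, the sequential secondary IDs and the VM naming are invented for illustration and do not reproduce the real binding protocol.

```python
class ProtocolEndpoint:
    """Toy model of a SAN protocol endpoint (PE): one host-visible LUN
    that fronts many vVols. Names and ID format are illustrative only."""

    def __init__(self, lun_id):
        self.lun_id = lun_id      # the only LUN ID the ESXi host addresses
        self.bindings = {}        # vVol name -> secondary ID behind the PE
        self._next_secondary = 1

    def bind(self, vvol_name):
        """Bind a vVol to this PE (idempotent) and return its secondary ID."""
        if vvol_name not in self.bindings:
            self.bindings[vvol_name] = self._next_secondary
            self._next_secondary += 1
        return self.bindings[vvol_name]

    def unbind(self, vvol_name):
        """Drop the binding, for example when the VM is powered off."""
        self.bindings.pop(vvol_name, None)


pe = ProtocolEndpoint(lun_id=300)
for vm in range(200):
    for disk in ("config", "data", "swap"):
        pe.bind(f"vm{vm:03d}-{disk}")

host_visible_luns = 1  # the host is mapped to the PE only
print(len(pe.bindings), "vVols behind", host_visible_luns, "host-visible LUN")
```

In this model 600 vVols are reachable while the host addresses a single LUN, which is why the per-host LUN limit stops being a constraint.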

    cDOT::*> lun bind show -instance
    Vserver: netapp-vVol-test-svm
    PE MSID: 2147484885
    PE Vdisk ID: 800004d5000000000000000000000063036ed591
    vVol MSID: 2147484951
    vVol Vdisk ID: 800005170000000000000000000000601849f224
    Protocol Endpoint: /vol/ds01/vVolPE-1410312812730
    PE UUID: d75eb255-2d20-4026-81e8-39e4ace3cbdb
    PE Node: esxi-01
    vVol: /vol/vVol31/naa.600a098044314f6c332443726e6e4534.vmdk
    vVol Node: esxi-01
    vVol UUID: 22a5d22a-a2bd-4239-a447-cb506936ccd0
    Secondary LUN: d2378d000000
    Optimal binding: true
    Reference Count: 2

iGroup & Export Policy

The iGroup and the export policy are the mechanisms that hide information on the storage system from hosts, that is, they show each host only what it is supposed to see. For both NAS and SAN, LUN mapping and file share export are performed automatically by the VP. The iGroup is mapped not to every vVol but only to the PE, because ESXi uses the PE as a proxy. For NFS, the export policy is applied to the file share automatically. The iGroup and the export policy are created and populated automatically on request from vCenter.

SAN & IP SAN

For iSCSI, each storage node must have at least one Data LIF connected to the same network as the corresponding VMkernel port on the ESXi host. iSCSI and NFS LIFs must be separate, but they can coexist in the same IP network and the same VLAN. For FCP, each storage node must have at least one Data LIF per fabric; in other words, there are usually two Data LIFs on each storage node, each on its own target port. Use soft zoning on the switches for FCP.

NAS (NFS)

For NFS, each storage node must have at least one Data LIF with an IP address in the same subnet as the corresponding VMkernel port on the ESXi host. The same IP address cannot be used for iSCSI and NFS at the same time, but both can coexist in the same VLAN, the same subnet and on the same physical Ethernet port.
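The addressing rules for iSCSI and NFS LIFs can be checked with a few lines of Python using the standard `ipaddress` module. This is a sketch only: the addresses are made up, and the helper names `same_subnet` and `shared_addresses` are hypothetical, not part of any NetApp or VMware tool.

```python
import ipaddress


def same_subnet(lif_cidr, vmk_cidr):
    """True if the Data LIF and the VMkernel port sit in the same IP subnet."""
    lif = ipaddress.ip_interface(lif_cidr)
    vmk = ipaddress.ip_interface(vmk_cidr)
    return lif.network == vmk.network


def shared_addresses(iscsi_lifs, nfs_lifs):
    """Return IP addresses that are (wrongly) used for both iSCSI and NFS."""
    iscsi = {ipaddress.ip_interface(c).ip for c in iscsi_lifs}
    nfs = {ipaddress.ip_interface(c).ip for c in nfs_lifs}
    return iscsi & nfs


# Hypothetical addressing plan: one subnet, separate LIFs per protocol.
nfs_lifs = ["192.168.10.11/24", "192.168.10.12/24"]
iscsi_lifs = ["192.168.10.21/24", "192.168.10.22/24"]
vmkernel = "192.168.10.101/24"

print(all(same_subnet(lif, vmkernel) for lif in nfs_lifs + iscsi_lifs))
print(shared_addresses(iscsi_lifs, nfs_lifs))
```

A plan passes when every LIF shares a subnet with the VMkernel port and the set of shared addresses is empty.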

VASA Provider

The VP is an intermediary between vCenter and the storage system: it tells the storage system what vCenter wants from it and, in the other direction, tells vCenter about important alerts and about the storage resources that are actually, physically available. That is, vCenter can now know how much free space there really is, which is especially convenient when the storage system presents thin LUNs to the hypervisor.

The VP is not a single point of failure in the sense that, if it fails, running virtual machines keep working. However, you cannot create or edit policies and virtual machines on vVol, or start or stop VMs on vVol. That is, after a complete restart of the whole infrastructure the virtual machines will not be able to start, because the VP's Binding request that maps the PE to its vVols will not be issued. It is therefore definitely desirable to make the VP redundant. For the same reason, the VP virtual machine must not be placed on a vVol that it manages. Why only “desirable”? Because, starting with NetApp VP 6.2, the VP can restore the vVol metadata simply by reading it from the storage system itself. For details on setup and integration, see the documentation for VASA Provider and VSC.

Disaster Recovery

The VP supports disaster recovery: if the VP is deleted or damaged, the database of the vVol environment can be restored. Metadata about the vVol environment is stored in two places: in the VP database and alongside the vVol objects themselves on ONTAP. To restore the VP, unregister the old VP, deploy a new VP, register ONTAP with it, run the vp dr_recoverdb command on it, connect it to vCenter, and then run “Rescan Storage Provider” in vCenter. See the KB article for details.

Once vVol supports storage replication (VASA 3.0), the DR functionality of the VP will make it possible to replicate vVols with SnapMirror hardware replication and to recover at the DR site.

Virtual Storage Console

VSC is a storage management plug-in for the vCenter GUI that is also used to work with VP policies. VSC is a required component for vVol.

Snapshot & FlexClone

Let's draw a line between snapshots from the hypervisor's point of view and snapshots from the storage system's point of view. This is very important for understanding how vVol on NetApp works with snapshots internally. As many already know, snapshots in ONTAP are always taken at the volume (and aggregate) level, not at the file or LUN level. vVols, on the other hand, are files or LUNs, so storage snapshots cannot be taken for each individual VMDK file. This is where FlexClone comes to the rescue: it can clone not only whole volumes but also individual files and LUNs. When the hypervisor takes a snapshot of a virtual machine living on vVol, the following happens under the hood: vCenter contacts VSC and VP, which find exactly where the required VMDK files live and instruct ONTAP to take a clone of them. So what looks like a snapshot from the hypervisor side is a clone on the storage system. In other words, hardware-assisted snapshots on vVol require a FlexClone license. For this and similar purposes ONTAP has a new feature called FlexClone Autodelete, which lets you set policies for deleting old clones.

Because snapshots are taken at the storage level, the problem of snapshot consolidation in ESXi and the negative impact of snapshots on disk subsystem performance are completely eliminated, thanks to the way the ONTAP cloning and snapshot mechanism works internally.
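Why a clone can stand in for a snapshot without a consolidation step is easier to see in a toy model. The Python sketch below assumes a simple reference-counted block store in which new writes always go to fresh blocks; the `BlockStore` and `File` classes are illustrative and are not a description of ONTAP internals.

```python
class BlockStore:
    """Toy block store with reference counting (illustrative, not ONTAP internals)."""

    def __init__(self):
        self.blocks = {}    # block id -> data
        self.refs = {}      # block id -> number of files pointing at it
        self._next = 0

    def alloc(self, data):
        bid = self._next
        self._next += 1
        self.blocks[bid] = data
        self.refs[bid] = 1
        return bid


class File:
    """A file or LUN: an ordered list of pointers into the block store."""

    def __init__(self, store, data_blocks=()):
        self.store = store
        self.ptrs = [store.alloc(d) for d in data_blocks]

    def clone(self):
        """Create a clone by copying pointers only; no data is copied."""
        twin = File(self.store)
        twin.ptrs = list(self.ptrs)
        for bid in twin.ptrs:
            self.store.refs[bid] += 1
        return twin

    def write(self, index, data):
        """Write new data to a fresh block; the old block stays for other owners."""
        old = self.ptrs[index]
        self.store.refs[old] -= 1
        if self.store.refs[old] == 0:
            del self.store.blocks[old], self.store.refs[old]
        self.ptrs[index] = self.store.alloc(data)

    def read(self, index):
        return self.store.blocks[self.ptrs[index]]


store = BlockStore()
vmdk = File(store, ["boot", "os", "app"])
snap = vmdk.clone()            # what the hypervisor sees as a snapshot
vmdk.write(2, "app-v2")        # the live disk keeps changing

print(snap.read(2), vmdk.read(2), len(store.blocks))
```

In this model the live disk never accumulates a delta file that has to be merged back later: deleting the clone only drops references.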

Consistency

Because the hypervisor itself takes snapshots by means of the storage system, they are consistent right away. This fits very well with the NetApp ONTAP backup paradigm. vSphere supports snapshots of VM memory on a dedicated vVol.

Clone and VDI

Cloning is often in great demand in VDI environments, where many virtual machines of the same type need to be deployed quickly. Hardware-assisted cloning requires a FlexClone license. It is important to note that FlexClone not only dramatically speeds up the deployment of a large number of virtual machine copies and drastically reduces disk space consumption, but also indirectly speeds up the virtual machines themselves. Cloning essentially works like deduplication, that is, it reduces the amount of space occupied. NetApp FAS always places data in the system cache, and the system and SSD caches are in turn dedup-aware: they do not pull in duplicate blocks from other virtual machines that are already cached, so logically they hold much more than their physical size. This dramatically improves performance during normal operation, and especially during boot storms, because more reads are served from cache.
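The effect of a dedup-aware cache during a boot storm can be approximated with a short Python sketch. It assumes a plain LRU cache keyed by physical block number and 50 clones that share the same 100 boot blocks; the class name, sizes and eviction rule are illustrative assumptions, not measurements of a real system.

```python
from collections import OrderedDict


class BlockCache:
    """Tiny LRU cache keyed by physical block id (illustrative only)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, physical_block):
        if physical_block in self.entries:
            self.entries.move_to_end(physical_block)
            self.hits += 1
            return
        self.misses += 1                      # would go to disk here
        self.entries[physical_block] = True
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used


# 50 cloned desktops share the same 100 physical boot blocks.
boot_blocks = list(range(100))
cache = BlockCache(capacity=128)
for vm in range(50):
    for block in boot_blocks:
        cache.read(block)

print(cache.misses, cache.hits)
```

Only the first desktop pays for disk reads; the other 49 boot entirely from cache because their logical blocks resolve to the same physical blocks.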

UNMAP

Starting with VMware vSphere 6.0, VM hardware version 11 and Windows 2012 with NTFS, vVol supports automatically releasing space inside the thin LUN on which the virtual machine resides. This significantly improves the utilization of usable space in a SAN infrastructure that uses Thin Provisioning and hardware-assisted snapshots. Starting with VMware vSphere 6.5 and Linux guests with SPC-4 support, space can likewise be released from inside the virtual machine back to the storage system, which saves a significant amount of expensive storage space. Read more about UNMAP.
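What UNMAP changes for a thin LUN can be shown with a minimal Python model. The `ThinLun` class and the block counts are assumptions chosen for illustration; a real array tracks allocation very differently.

```python
class ThinLun:
    """Toy thin-provisioned LUN: space is consumed only by written blocks."""

    def __init__(self, size_blocks):
        self.size_blocks = size_blocks
        self.allocated = set()   # block numbers that consume real space

    def write(self, block):
        if not 0 <= block < self.size_blocks:
            raise IndexError("block outside the LUN")
        self.allocated.add(block)

    def unmap(self, first, count):
        """Release a range of blocks the guest file system no longer uses."""
        self.allocated -= set(range(first, first + count))

    @property
    def used(self):
        return len(self.allocated)


lun = ThinLun(size_blocks=1000)
for b in range(400):          # the guest writes 400 blocks
    lun.write(b)
used_before = lun.used

# The guest deletes files; without UNMAP the array would still count 400.
lun.unmap(first=100, count=250)
print(used_before, lun.used)
```

Without the `unmap` call the storage system has no way to learn that the guest freed those blocks, so a thin LUN only ever grows.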

Minimum system requirements

- NetApp VASA Provider 5.0 or later

- VSC 5.0 or later

- Clustered Data ONTAP 8.2.1 or later

- VMware vSphere 6.0 or later

- VSC and VP must be of the same version, for example both 5.x or both 6.x

For details, check with your authorized NetApp partner or the compatibility matrix.
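The requirements above lend themselves to a simple pre-flight check. The Python sketch below is only an illustration: the component keys and the way installed versions are passed in are assumptions, and the compatibility matrix remains the authoritative source.

```python
def parse_version(text):
    """Turn '8.2.1' into (8, 2, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


# Minimums taken from the list above.
MINIMUMS = {
    "vasa_provider": "5.0",
    "vsc": "5.0",
    "ontap": "8.2.1",
    "vsphere": "6.0",
}


def check_requirements(installed):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    for component, minimum in MINIMUMS.items():
        if parse_version(installed[component]) < parse_version(minimum):
            problems.append(f"{component} {installed[component]} is below {minimum}")
    vp_major = parse_version(installed["vasa_provider"])[0]
    vsc_major = parse_version(installed["vsc"])[0]
    if vp_major != vsc_major:
        problems.append("VSC and VASA Provider major versions differ")
    return problems


print(check_requirements(
    {"vasa_provider": "6.2", "vsc": "6.2", "ontap": "8.3.2", "vsphere": "6.0"}
))
print(check_requirements(
    {"vasa_provider": "6.0", "vsc": "5.0", "ontap": "8.2", "vsphere": "6.0"}
))
```

The first call reports no problems; the second flags both the ONTAP version and the VSC/VP mismatch.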

SRM and hardware replication

Unfortunately, Site Recovery Manager does not yet support vVol, because the current implementation of the VASA protocol does not support replication and SRM. NetApp FAS systems integrate with SRM and work with it without vVol. vVol is also supported with vMSC (MetroCluster) for building geographically distributed, highly available storage.

Backup

vVol will drastically change the approach to backups, reducing backup time and using storage space more efficiently. One reason is a fundamentally different approach to snapshots and backup: vVol uses hardware snapshots, which eliminates the problem of consolidating and removing VMware snapshots. With those problems gone, taking application-aware (hardware) snapshots and backups stops being a headache for administrators. This allows more frequent backups and snapshots. Because hardware snapshots do not affect performance, many of them can be kept right in the production environment, while hardware replication allows data to be replicated more efficiently for archiving or to a DR site. Using snapshots instead of full backups uses storage space more economically.

VASA API 3.0

Do not confuse the storage vendor's plug-in (for example, NetApp VASA Provider 6.0) with the VMware VASA API (for example, VASA API 3.0). The most important and anticipated innovation in the upcoming VASA API is support for hardware replication. It is precisely the lack of hardware replication support that keeps vVol from being widely adopted. The new version will support hardware replication of vVol disks by the storage system to provide DR. Replication by the storage system was possible before, but the hypervisor could not work with the replicated data because vSphere lacked this functionality in VASA API 2.x and earlier. PowerShell cmdlets for managing DR on vVol will also appear, along with, importantly, the ability to start replicated machines for testing. For a planned migration from the DR site, replication of a virtual machine can be requested. All this will make it possible to use SRM together with vVol.

Oracle RAC will be validated to run on vVol, which is good news.

Licensing

The following components are required for vVol: VP, VSC and a storage system with firmware that supports vVol. These components themselves do not require any licenses to run vVol. On the NetApp ONTAP side, a FlexClone license is required for vVol to work; the same license is needed for hardware snapshots and clones (taking and restoring them). Data replication, if needed, requires a NetApp SnapMirror / SnapVault license on both storage systems, at the primary and the backup site. On the vSphere side, Standard or Enterprise Plus licenses are needed.

Conclusions

vVol was designed to make the ESXi hypervisor work even more closely with storage and to simplify management. This allows storage capabilities and resources to be used more rationally, for example Thin Provisioning, where UNMAP lets space be released from thin LUNs. At the same time, the hypervisor is informed of the real state of storage space and resources. All this makes it possible to use Thin Provisioning not only with NAS but also in production with SAN, to abstract away from the access protocol and to keep the infrastructure transparent. And policies for working with storage resources, thin cloning and snapshots that do not affect performance make deploying virtual machines in a virtualized infrastructure even faster and more convenient.

Source: https://habr.com/ru/post/321366/
