How to estimate large tasks

There are many ways to estimate user stories. We use our own method to estimate and design tasks before writing any code. Read on to learn how we arrived at it and why we think it works better than Planning Poker.

Something about Planning Poker

For three years we used Planning Poker. With this approach, each programmer estimates a story in a closed vote, in conventional units called story points: 0.5, 1, 2, 3, 5, 8, 13, 20 or 40. Then the people who gave the highest and lowest estimates explain why the task seems so hard to them, or conversely so easy. The discussion continues until everyone agrees on a single estimate.

After the sprint ends, the Scrum master adds up the story points of the completed stories. Based on these statistics, they determine how many stories will fit into the next sprint.
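The sprint bookkeeping described above can be sketched in a few lines. This is a minimal illustration under our own assumptions, not the team's actual tooling:

- velocity is the sum of story points of stories actually completed in the last sprint;
- the next sprint is filled with stories in priority order until that capacity is used up.

The `Story` type and the sample data are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Story:
    name: str
    points: float       # Planning Poker estimate in story points
    done: bool = False  # whether the story was completed in the sprint

def velocity(last_sprint: list[Story]) -> float:
    """Sum of story points in completed stories only."""
    return sum(s.points for s in last_sprint if s.done)

def plan_next_sprint(backlog: list[Story], capacity: float) -> list[Story]:
    """Take stories in priority order while they still fit into capacity."""
    planned, used = [], 0.0
    for story in backlog:  # backlog is assumed to be sorted by priority
        if used + story.points > capacity:
            break          # keep strict priority: stop at the first misfit
        planned.append(story)
        used += story.points
    return planned

last = [Story("login", 5, True), Story("search", 8, True), Story("export", 13)]
backlog = [Story("export", 13), Story("filters", 3), Story("avatars", 2)]
cap = velocity(last)  # the unfinished "export" story does not count
print(cap, [s.name for s in plan_next_sprint(backlog, cap)])  # 13 ['export']
```

Stopping at the first story that does not fit, instead of skipping ahead to smaller ones, is a design choice. It keeps priorities intact at the cost of some unused capacity.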


What's the problem

Understanding the story on the fly

To estimate a user story, developers need to understand how to implement it, at least in general terms. To understand how to implement it, they need to understand what the client wants. All of this is clarified and discussed during estimation, which takes the team 5-30 minutes per story. Only the 2-3 people who know the subject best take an active part in the discussion; for everyone else, that time is wasted.

Because estimation time is limited, developers often miss important details. As a result, a story may be badly underestimated, or it may have to be redone from scratch.

Sometimes it turns out that the manager did not find out important details from the client, and the estimate has to be postponed.

The illusion of accurately estimating large stories

Suppose we estimate one story at 20 and ten other stories at 2 each. The ten small stories add up to the same total as the one big story. Yet the big story almost always takes longer than several small ones with the same total estimate.

In other words, with this estimation method, in practice 20 > 2 × 10.

Why does this happen? The larger the story, the more opportunities there are to overlook something when estimating it. Even if everything is taken into account, one story is more likely to be underestimated by 50% than ten stories are to be underestimated by the same amount in total.
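The second half of this argument is statistical, and a small Monte Carlo sketch can illustrate it. It rests on assumptions that are ours, not the article's:

- each story's ratio of actual to estimated effort is an independent log-normal variable with median 1;
- big and small stories have the same spread (sigma = 0.4 here, an arbitrary choice).

The sketch deliberately ignores the other effect, that big stories leave more room for forgotten work. That effect would likely widen the gap further.

```python
import random

def overrun_probability(num_stories: int, sigma: float = 0.4,
                        threshold: float = 1.5, trials: int = 20_000,
                        seed: int = 1) -> float:
    """Share of simulated trials in which total actual effort is at least
    `threshold` times the total estimate.

    Assumption: each story's actual/estimated ratio is an independent
    log-normal variable with median 1. All stories share the same estimate,
    so the total ratio is the mean of the individual ratios.
    """
    rng = random.Random(seed)  # seeded for reproducible results
    overruns = 0
    for _ in range(trials):
        ratios = [rng.lognormvariate(0.0, sigma) for _ in range(num_stories)]
        if sum(ratios) / num_stories >= threshold:
            overruns += 1
    return overruns / trials

p_one_big = overrun_probability(1)     # one story estimated at 20
p_ten_small = overrun_probability(10)  # ten stories estimated at 2 each
print(f"one story of 20:  P(overrun >= 50%) = {p_one_big:.3f}")
print(f"ten stories of 2: P(overrun >= 50%) = {p_ten_small:.3f}")
```

Under these assumptions, the single story lands near the analytic value of about 0.155. The batch of ten comes out an order of magnitude or more lower, because independent errors partly cancel out when added together.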

What percentage of the job is done?

Halfway through a task estimated at 20, the manager asks the developer when it will be finished. The developer either gives a vague answer, gives an overly optimistic estimate, or takes that optimistic estimate and multiplies it by 2. The manager is unlikely to get an accurate answer.

This is because, to answer, the developer splits the task into the part that is done and the part that remains, and then quickly estimates the remaining part. He can make mistakes both in listing everything that still has to be done and in judging how big that part is. Moreover, he makes this estimate alone and very quickly, so as not to keep the manager waiting.

40 = unknown

When the team gives an estimate of 40, it means the programmers really do not know how long the task will take. They do not fully understand how they will do it.

The same problem, to a lesser extent, applies to estimates of 20, 13 and 8. If a story described in a couple of sentences is estimated at 13 or 20, this also means the developers do not fully understand how to implement it. Having the whole team work out a detailed solution during estimation is an inefficient use of time; one person can do it. To address this, we assigned a person responsible for each task, who described the solution before the team estimate. However, there were no clear criteria for how detailed the solution should be. Sometimes a couple of sentences is enough, and sometimes it takes several paragraphs to make the solution understandable.

Divide into parts

To deal with these problems, we came up with the following idea: a story should be split into subtasks until no one has any questions about how long each of them will take. Subtasks must be of equal size, so that we can get an estimate simply by adding them up.

As a result, our design-and-estimation process looks like this:

- The input is a user story: the minimal functionality that will benefit the customer. The user story must have acceptance criteria that the manager or client can understand, so that it can be accepted.

- If the story looks too big, an MVP is extracted from it: everything the client can do without at first is thrown out. Once this story is finished, the next one is taken up, which extends the functionality already added.

- A responsible person is assigned to the story. If necessary, they split the story into separate tasks so that each task can be done in parallel with the others. Many user stories do not need to be split into tasks: either the work cannot be parallelized, or the story is too small. Where possible, tasks should be defined so that they can be tested independently. Then testing can start as soon as the first task is complete, instead of waiting for the whole story.

- Then the responsible person splits each task into subtasks. Each subtask is an item described in anything from one sentence to a paragraph, and it may make no sense to non-programmers. Separate subtasks like “write a class” and “write tests for this class” are perfectly normal. When splitting into subtasks, the responsible person follows these rules:

  - all subtasks should be of about the same minimal size (1 story point);

  - it should be clear what needs to be done in each subtask;

  - each subtask has acceptance criteria the programmer understands (the class is written, the tests pass).

- The team estimates the task. During the team estimate, it reviews how well the responsible person worked out the solution and split the task, and whether any subtasks are too large or too small. In this review:

  - if a particular subtask is unclear to someone, it is described in more detail;

  - if the team decides a subtask is too large, it is split into smaller pieces;

  - if reading the subtask descriptions raises many questions that the responsible person cannot answer, the task is sent back for more detailed design;

  - if the team does not like the solution, it is changed on the spot or sent back for redesign.

Once all the tasks have been estimated, we have an estimate for the user story: the number of subtasks in the story is its estimate in story points.
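The rules above map naturally onto a tiny data model. The sketch below is a hypothetical illustration; the class names, the `review` helper and the sample story are ours:

- a story holds tasks, and a task holds subtasks;
- every subtask needs a description and an acceptance criterion the programmer understands;
- the story's estimate is simply the number of subtasks, since each one is worth 1 story point.

```python
from dataclasses import dataclass, field

@dataclass
class Subtask:
    description: str
    acceptance: str  # e.g. "the class is written, the tests pass"

@dataclass
class Task:
    name: str
    subtasks: list[Subtask] = field(default_factory=list)

@dataclass
class Story:
    name: str
    tasks: list[Task] = field(default_factory=list)

    def estimate(self) -> int:
        """Every subtask is worth 1 story point, so the estimate is their count."""
        return sum(len(task.subtasks) for task in self.tasks)

def review(story: Story) -> list[str]:
    """Return formal problems that would send the story back for more design."""
    problems = []
    for task in story.tasks:
        if not task.subtasks:
            problems.append(f"{task.name}: not split into subtasks yet")
        for sub in task.subtasks:
            if not sub.description.strip():
                problems.append(f"{task.name}: subtask without a description")
            if not sub.acceptance.strip():
                problems.append(f"{task.name}: '{sub.description}' has no acceptance criteria")
    return problems

story = Story("Report export", [
    Task("Backend", [Subtask("Write the CsvExporter class", "class is written"),
                     Subtask("Write tests for CsvExporter", "tests pass")]),
    Task("UI", [Subtask("Add an Export button", "")]),
])
print(story.estimate())  # 3
print(review(story))     # ["UI: 'Add an Export button' has no acceptance criteria"]
```

Only the formal rules can be checked mechanically. Whether subtasks really are about the same size, and whether the solution is sound, is what the team review is for.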

Arguments for

Fast team estimation

Since there are very few possible outcomes, the team estimate goes faster. You can speed it up further by not voting on each subtask and instead asking open-ended questions:

- Which subtasks are unclear?

- Which subtasks should be split?

- Which subtasks should be merged?

Formalization of design quality

Clear criteria for splitting tasks into subtasks, combined with a review of the design by the whole team, reduce the risk of forgetting something important and discourage sloppy design. When the person responsible for a task splits it into fairly small parts, questions naturally arise, both for the customer of the feature and about the existing code, which may need refactoring. It is better for these questions to come up now than in the middle of working on the task.

Moreover, questions that did not occur to the designer will be raised during the team estimate. When a task is designed only while someone is working on the ticket, a single person is responsible for it and may fail to ask an important question, so the task may have to be redone.

Quite often, a story is designed by one person and implemented by another. But even if you want to assign the task to a developer before estimation, it is still useful to keep design and team estimation as separate steps. At the estimation stage, someone on the team may suggest how to simplify the task. Or it may turn out that the task does not need to be done at all, because the necessary functionality already exists somewhere. That said, assigning tasks to developers in advance does make the process less flexible. I recommend organizing the process so that any developer on the team can implement a designed task.

A clear understanding of how much of the task is done

Since each subtask has acceptance criteria the programmer understands, he always knows how many subtasks are done and how many are left. So he can easily answer when the task will be ready for acceptance.
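Because progress is counted in subtasks of equal size, answering "when will it be done?" becomes simple arithmetic. This is a minimal sketch, assuming the developer's own pace in subtasks per working day is known from history. The function name and the numbers are illustrative.

```python
import math

def days_to_acceptance(done: int, total: int, subtasks_per_day: float) -> int:
    """Whole working days until the remaining subtasks are finished."""
    if not 0 <= done <= total:
        raise ValueError("done must be between 0 and total")
    if subtasks_per_day <= 0:
        raise ValueError("pace must be positive")
    remaining = total - done
    return math.ceil(remaining / subtasks_per_day)  # round up to whole days

# 3 of 8 subtasks are done; the developer usually closes about 1.5 a day
print(days_to_acceptance(done=3, total=8, subtasks_per_day=1.5))  # 4
```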

Arguments against

Preparing a task for team estimation takes time

Preparing one task usually takes about 5-30 minutes, but for some stories that consist of several tasks it can take a couple of days in difficult cases.

Is it worth spending so much time just to get a more accurate estimate? Of course not. But in fact, almost all of this time goes not into splitting the task but into working out and designing its solution. To describe the solution for some stories in detail, you may need to discuss it with several people and look through the existing code, which takes a lot of time. The splitting itself takes half an hour at most.

A solution designed in advance, which the team can review before work begins, is worth the time spent on it.

But this isn't agile!

In Agile approaches, design is usually done alongside development. From this point of view, our approach is less agile. However, the main thing in Agile is to deliver value to the customer as quickly as possible. If you have extracted an MVP, the order in which you develop it matters little: you will get feedback from the client only after the MVP is finished anyway.

An anchoring effect is possible

Since the team reviews the designer's split openly, an anchoring effect may occur: team members tend to agree with the split already on the table, especially if a more senior developer made it. In our practice this problem rarely shows up; people usually do not hesitate to say that a subtask is too big, too small or unclear. But if your team has this problem, you can put each subtask to a closed vote with these options:

- too big, should be split;

- too small, should be merged with another;

- just right.

Before the vote, anyone who does not understand a subtask can ask questions.
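If you do switch to closed voting, tallying the results is trivial. The sketch below shows one possible way, with assumptions of our own:

- the three options are encoded as the hypothetical labels `split`, `merge` and `ok`;
- the most common answer wins;
- a tie sends the subtask back for discussion and a revote.

```python
from collections import Counter

OPTIONS = ("split", "merge", "ok")  # too big, too small, just right

def tally(votes: list[str]) -> str:
    """Return the winning option, or 'discuss' if there is no clear winner."""
    unknown = set(votes) - set(OPTIONS)
    if unknown:
        raise ValueError(f"unknown options: {sorted(unknown)}")
    if not votes:
        return "discuss"
    ranked = Counter(votes).most_common()  # sorted by vote count, descending
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return "discuss"  # tie at the top: talk it through and revote
    return ranked[0][0]

print(tally(["ok", "ok", "split", "ok"]))     # ok
print(tally(["split", "ok", "split", "ok"]))  # discuss
```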

A developer cannot grow by implementing tasks that someone else has already fully thought through

When implementing a task that is designed in detail, the programmer has much less room for creativity. If a developer only writes code for stories someone else designed, he will not grow and may become badly demotivated. Therefore, it is important that every developer on the team gets to do design: everyone should take turns splitting stories into tasks and subtasks. It is fine if more experienced developers design user stories more often. What matters is that even juniors get some design work.

You cannot quickly estimate a large story

Estimating tasks by splitting them is quite laborious, so it cannot be used for quick estimates. For a rough preliminary estimate of a story, you can use an expert estimate from two or three people. Such an estimate will be inaccurate, but it is enough for prioritization. It is better not to express the preliminary estimate in story points, so that it does not create an illusion of accuracy.

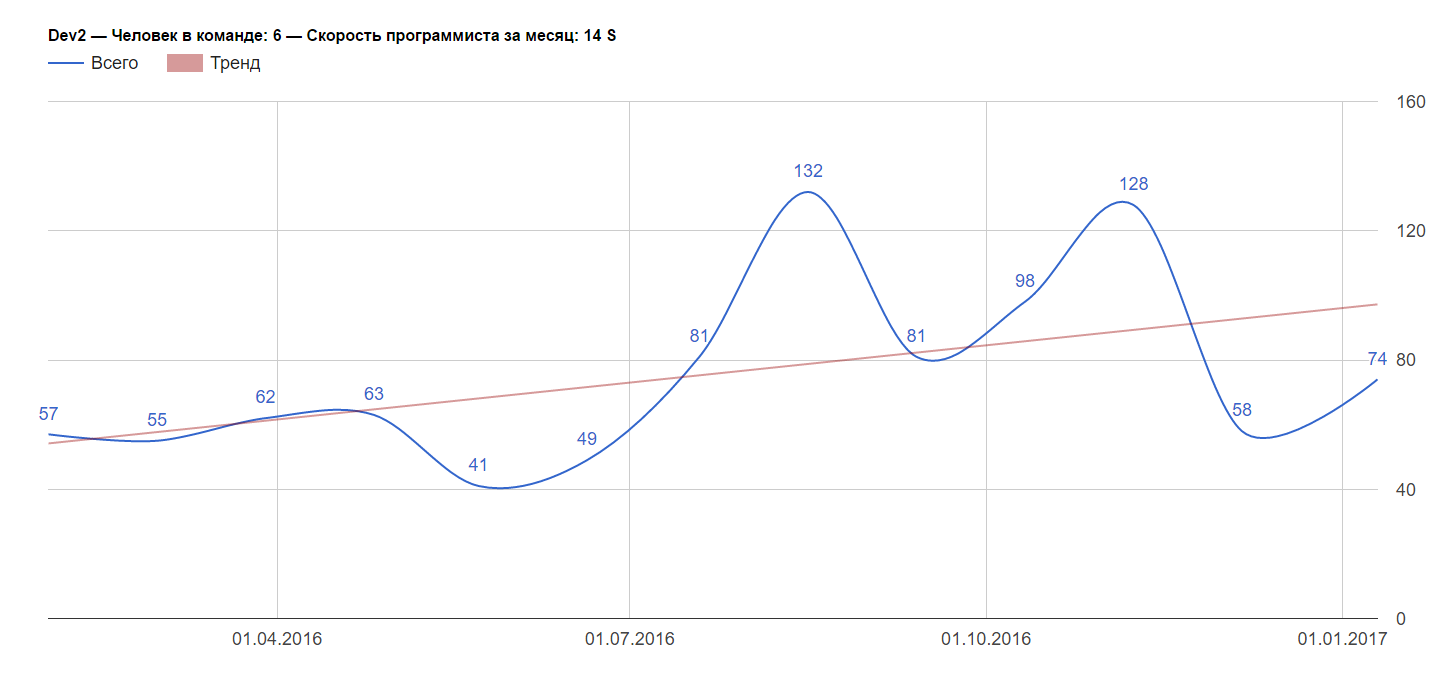

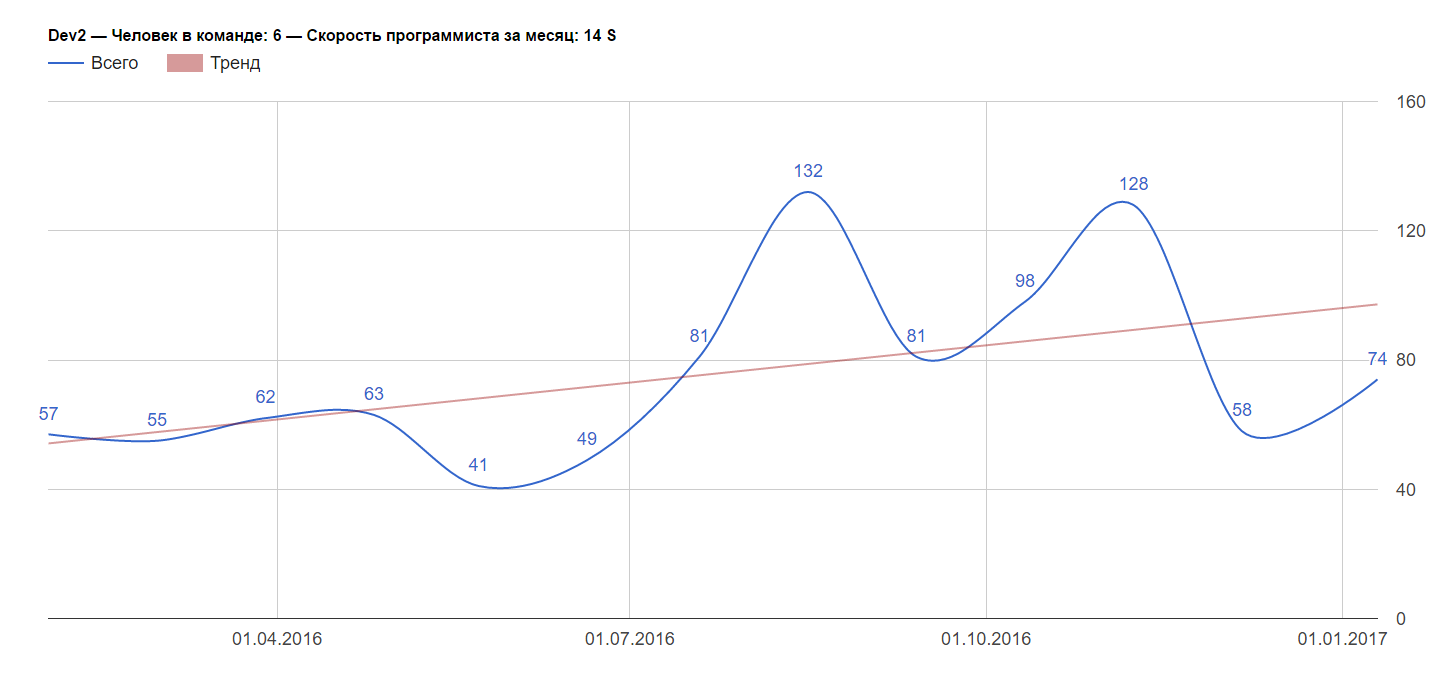

I recommend using good old person-weeks for preliminary estimates: half a week, one week, two weeks, a month. After the team has estimated the story, the people who gave the expert estimate can quickly see how far off they were. They just convert their person-weeks into story points, using the average velocity of one developer that we know from our statistics.
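Converting an expert's person-week estimate into story points takes one multiplication by the average per-developer velocity. The sketch below uses a made-up velocity of 4 story points per developer per week; substitute your own statistics. The function names are hypothetical.

```python
# Hypothetical figure: average story points one developer completes per week
SP_PER_DEV_WEEK = 4.0

def person_weeks_to_points(person_weeks: float,
                           sp_per_week: float = SP_PER_DEV_WEEK) -> float:
    return person_weeks * sp_per_week

def expert_error(person_weeks: float, team_points: int,
                 sp_per_week: float = SP_PER_DEV_WEEK) -> float:
    """Team estimate divided by the expert's guess (> 1 means the expert underestimated)."""
    return team_points / person_weeks_to_points(person_weeks, sp_per_week)

# The experts said "two weeks"; after splitting, the team got 12 subtasks (12 SP)
print(person_weeks_to_points(2))  # 8.0
print(expert_error(2, 12))        # 1.5
```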

If possible, try not to estimate tasks before they are prioritized. If a story is estimated and then postponed for a long time:

- you have spent time that could have gone into something useful to the client right now;

- by the time you take up the story, its estimate may be out of date because of refactoring or new features.

Therefore, only tasks that actually need to be done should go to estimation. If you need to know how long a story will take in order to prioritize it, a preliminary estimate should be enough.

If you are happy with Planning Poker estimates but have problems with design quality, you can split the story into subtasks after estimation and prioritization. Then the development process looks like this:

- estimate stories with Planning Poker;

- prioritize them;

- the developer writes up the solution as subtasks;

- the team reviews this solution;

- the developer writes the code.

With this approach, you still get a review of the design before the developer starts writing code, although the process as a whole will likely take more time.

Example

For example, take the following feature: we want to implement browser push notifications, with our own js-plugin for subscribing and with all the bells and whistles. Ideally, we want:

- the js-plugin to work in all browsers that support push notifications;

- the js-plugin to work on both HTTPS and HTTP sites;

- notifications to be sent both manually, as a mass mailing, and automatically to individual users when an event occurs;

- deliveries of and clicks on notifications to be tracked.

Let's extract an MVP from this feature, removing everything we can while still getting a feature useful to at least one client:

- drop Safari support, since Safari has its own implementation of push notifications;

- drop support for HTTP sites, since supporting them requires a complex hack;

- keep only mass mailing, since most clients with HTTPS sites need mass mailing first and foremost;

- do without statistics at first, postponing delivery and click tracking until later.

The MVP can be split into the following tasks, each of which can be done independently:

- a js library responsible for subscribing to and displaying notifications;

- a microservice that sends push notifications;

- a UI for sending mass notifications.

After briefly studying Google's documentation on web push notifications, we split the first task into subtasks:

- Implement the following methods in the js library:

  - check that the browser supports push notifications;

  - check whether the site user is subscribed to notifications;

  - subscribe to notifications.

- Write tests that verify these methods work correctly in browsers that support push notifications. We use BrowserStack for this. Alternatively, this step could be a subtask to test the js methods manually in different browsers.

- Learn to work with serviceWorkers. A serviceWorker handles push notifications. We have not used them before, so we allocate 1S (one story point) to studying the new technology.

- Write a serviceWorker that handles notifications, and add registration of this serviceWorker to the subscription method.

- Write tests for the serviceWorker.

As a result, we estimated the first task at 5S (provided, of course, that the team agrees with this split). The remaining tasks are split in the same way.

About statistics

The team's estimate tells you when the story will be done, based on the number of story points completed in previous weeks.

A story counts as complete only once it has passed acceptance, or is even already in use. What really matters is how fast we deliver value to customers, not how fast tasks reach acceptance.

For this kind of forecast to work, you need to agree on maximum times for review and acceptance. For example, in our team the person responsible for reviewing a task must do the first review within 2 days of the task being submitted. Work on the fixes from the review must start within a day.
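Agreed review deadlines are easy to monitor automatically. Below is a minimal sketch of such a check, using the limits quoted above: the first review within 2 days of submission, and work on fixes started within 1 day of the review. It assumes calendar days rather than working days, and that the first review requested changes. The function and parameter names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Optional

FIRST_REVIEW_LIMIT = timedelta(days=2)  # submission -> first review
FIX_START_LIMIT = timedelta(days=1)     # first review -> work on fixes starts

def sla_violations(submitted: datetime,
                   reviewed: Optional[datetime],
                   fixes_started: Optional[datetime],
                   now: datetime) -> list[str]:
    """List broken review agreements for one task (calendar days)."""
    problems = []
    review_due = submitted + FIRST_REVIEW_LIMIT
    if reviewed is None:
        if now > review_due:
            problems.append("first review overdue")
        return problems  # nothing to fix until the review happens
    if reviewed > review_due:
        problems.append("first review was late")
    fix_due = reviewed + FIX_START_LIMIT
    if fixes_started is None:
        if now > fix_due:
            problems.append("fixes not started in time")
    elif fixes_started > fix_due:
        problems.append("fixes started late")
    return problems

print(sla_violations(
    submitted=datetime(2024, 3, 4, 10, 0),
    reviewed=datetime(2024, 3, 7, 9, 0),
    fixes_started=None,
    now=datetime(2024, 3, 8, 12, 0),
))  # ['first review was late', 'fixes not started in time']
```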

If you cannot extract a small MVP and some features stretch over two sprints, the per-sprint statistics will fluctuate. Even so, you can calculate the team's velocity by averaging the results of the last few sprints.
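The averaging and the resulting forecast can be sketched as follows. This is an illustration under our own assumptions:

- "the last few sprints" is taken as a window of 3;
- history holds the story points of accepted stories per sprint;
- the sample numbers are invented.

```python
import math

def average_velocity(completed_points: list[float], window: int = 3) -> float:
    """Mean story points of accepted stories over the last `window` sprints."""
    if not completed_points:
        raise ValueError("no sprint history")
    recent = completed_points[-window:]
    return sum(recent) / len(recent)

def sprints_until_done(remaining_points: float, history: list[float],
                       window: int = 3) -> int:
    """Whole sprints needed to finish the remaining story points."""
    return math.ceil(remaining_points / average_velocity(history, window))

# Jumpy history: a feature spilled over a sprint boundary
history = [42, 18, 39, 27]
print(average_velocity(history))        # 28.0
print(sprints_until_done(70, history))  # 3
```

A longer window smooths out more of the jumps, but reacts more slowly when the team's real pace changes.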

Re-estimating tasks

No matter how well you work out a task, there is always a chance that something will be missed. If new, unaccounted-for subtasks appear while working on a feature, they should be estimated and added to the estimate. Then you will always know how much time remains until the task is complete. You can also collect statistics on how many story points were added by re-estimation in each sprint; this helps you understand your estimation error.

Story points added by re-estimation must not be counted when calculating team velocity. Suppose that in the last sprint the team completed stories worth 50 story points and, during the sprint, re-estimated them upward by another 10. This does not mean you can take 60 story points into the next sprint. Take 50, since this time too you may have underestimated something.
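The rule can be encoded directly in whatever tracks your sprint statistics. In the hypothetical sketch below, each sprint records two numbers separately:

- the originally estimated points of completed stories;
- the points added by re-estimation.

Velocity uses only the first. The ratio of the two gives the estimation-error statistic mentioned in the previous paragraph. The class and field names are ours.

```python
from dataclasses import dataclass

@dataclass
class SprintResult:
    estimated_done: int  # points of completed stories, as originally estimated
    added_during: int    # points for subtasks discovered and estimated mid-sprint

    def velocity(self) -> int:
        # Re-estimated points are deliberately excluded from velocity
        return self.estimated_done

    def estimation_error(self) -> float:
        # Share of work the original estimate missed
        if self.estimated_done == 0:
            return 0.0
        return self.added_during / self.estimated_done

last = SprintResult(estimated_done=50, added_during=10)
print(last.velocity())          # 50, not 60
print(last.estimation_error())  # 0.2
```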

Conclusion

Since we started splitting stories into subtasks in advance, we have had fewer problems with underestimating large tasks. We no longer have situations where a task has to be redone from scratch because of a flawed design.

I recommend trying this approach if you spend too much time on Planning Poker, or if you have problems estimating large tasks or with design quality.

Source: https://habr.com/ru/post/321270/
