sudo rm -rf, or a chronicle of the GitLab.com database incident of 2017/01/31

He was getting drunk slowly, but get drunk he did, somehow all at once, abruptly; and when, in a moment of clarity, he saw before him a hacked-up oak table in a completely unfamiliar room, a drawn sword in his hand, and penniless dons applauding all around, he thought it was time to go home. But it was too late.

Arkady and Boris Strugatsky

On January 31, 2017, a significant event in the open-source world took place: one of the GitLab.com administrators, while trying to repair replication, mixed up his consoles and deleted the primary PostgreSQL database. As a result, a large amount of user data was lost and the service itself went offline. On top of that, all 5 backup / replication methods turned out not to be working. The data was restored from an LVM snapshot that happened to have been taken 6 hours before the database was deleted. These things, as they say, happen. But the project team deserves credit: they found the strength to treat it all with humor, kept their heads, and showed remarkable openness, writing about everything on Twitter and publicly sharing what was essentially an internal document in which the team described the unfolding events in real time.

Reading it, you literally feel yourself in the place of poor YP, who at 11 p.m., after a hard day's work and a fruitless struggle with Postgres, squinting wearily, types the fatal sudo rm -rf into the console of the production server and presses Enter. A second later he realizes what he has done and cancels the deletion, but it is too late: the database is gone...

Because this case is so important and in many ways instructive, we decided to translate into Russian, in full, the incident log that the GitLab.com staff kept while working on the incident. You will find the result below the cut.

So let's find out in detail how it all happened.

GitLab.com database incident of 2017/01/31

Note: this incident affected the database (including issues and merge requests); Git repositories and wiki pages were not affected.

Live broadcast on YouTube - watch how we discuss and solve the problem!

- Losses incurred

- Timeline (time indicated in UTC)

- Recovery - 2017/01/31 23:00 (backup from approximately 17:20 UTC)

- Problems encountered

- Help from

- HugOps (add here posts from twitter and from somewhere else, in which people kindly responded to what happened)

- Stephen Frost

- Sam McLeod

Losses incurred

- About 6 hours of data was lost.

- 4,613 regular projects, 74 forks, and 350 imports were lost (roughly); 5,037 in total. Since the Git repositories are NOT lost, we will be able to recreate those projects whose users / groups existed before the data loss, but we will not be able to recover those projects' issues.

- About 4,979 (roughly 5,000) comments may have been lost.

- 707 users are potentially lost (it is hard to tell more precisely from the Kibana logs).

- Web hooks created before 17:20 on January 31 were restored; those created later were lost.

Timeline (time indicated in UTC)

- 2017/01/31 16:00/17:00 - 21:00

- YP is working on setting up pgpool and replication in staging, and creates an LVM snapshot to load production data into staging, also hoping to use this data to speed up loading the database onto other replicas. This happens about 6 hours before the data loss.

- Setting up replication proves problematic and very slow (an estimated ~20 hours for the initial pg_basebackup synchronization alone). YP was unable to use the LVM snapshot. Work was interrupted at this stage (because YP needed help from a colleague who was not working that day, and also because of the spam / high load on GitLab.com).

- 2017/01/31 21:00 - Spike in site load caused by spammers - Twitter | Slack

- Blocking users by IP address.

- Removing a user who was using a repository as a CDN, which resulted in 47,000 IP addresses logging in under the same account (causing high load on the database). The information was passed to the support and infrastructure teams.

- Removing users for spam (using snippets) - Slack

- Database load returned to normal; a vacuum of several PostgreSQL tables was started manually to clean up the large number of empty rows left behind.

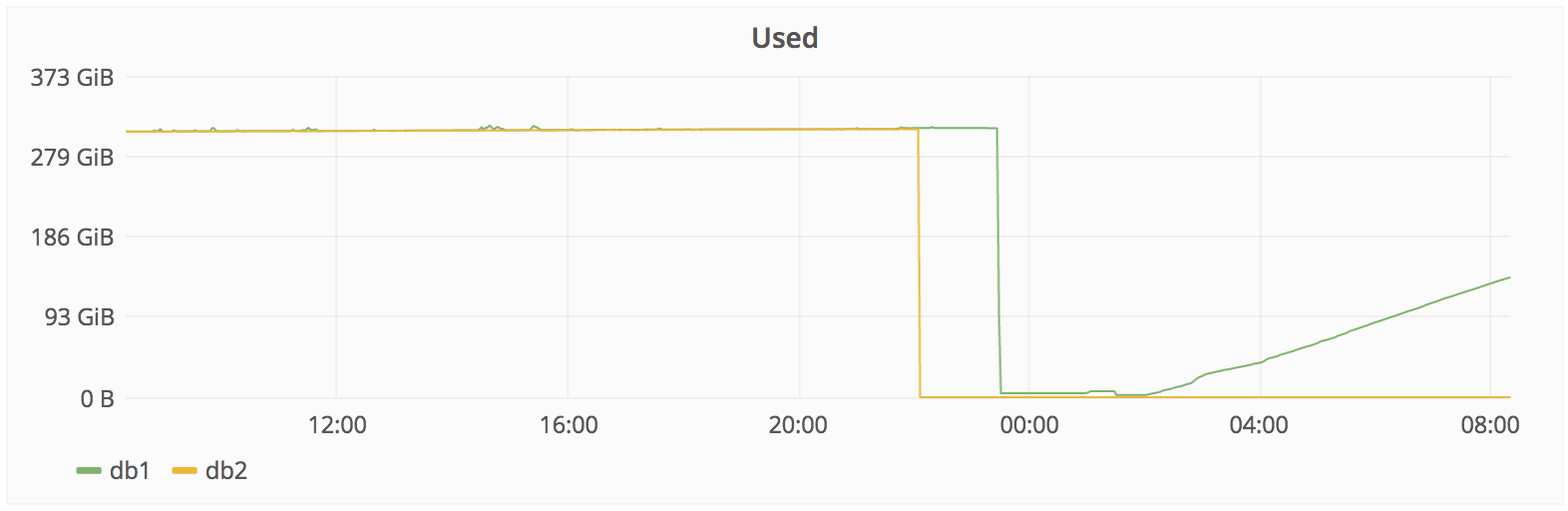

- 2017/01/31 22:00 - Replication lag warning received - Slack

- Attempts to fix db2; the lag at this point is 4 GB.

- db2.cluster refuses to replicate; the /var/opt/gitlab/postgresql/data directory is wiped to ensure a clean replication.

- db2.cluster refuses to connect to db1, complaining that max_wal_senders is too low. This setting limits the number of WAL (replication) clients.

- YP raises max_wal_senders to 32 on db1 and restarts PostgreSQL.

- PostgreSQL complains that too many semaphores are open and does not start.

- YP lowers max_connections from 8000 to 2000 and PostgreSQL starts (even though it had worked fine with 8000 for almost a year).

- db2.cluster still refuses to replicate; it no longer complains about connections, but instead just hangs and does nothing.

- At this point YP begins to feel hopeless. Earlier that day he had said he was going to sign off, as it was getting late (around 23:00 local time), but he stayed on because of the unexpected replication problems.

- 2017/01/31 around 23:00

- YP thinks that pg_basebackup may be overly pedantic about the data directory being clean, and decides to delete the directory. A couple of seconds later he notices that he ran the command on db1.cluster.gitlab.com instead of db2.cluster.gitlab.com.

- 2017/01/31 23:27: YP cancels the deletion, but it is too late. Of roughly 310 GB, only about 4.5 GB remains - Slack
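The wrong-host mistake described above is the kind of thing a pre-flight check can catch. Below is a minimal Python sketch, not anything GitLab is known to have used: the function name confirm_target_host and its parameters are hypothetical. It simply compares the host the operator intends to touch with the name the machine itself reports, before any destructive step runs.

```python
import socket


def confirm_target_host(expected_host, actual_host=None):
    """Raise RuntimeError unless this machine's name matches the intended target."""
    # actual_host can be injected for testing; by default ask the OS for the FQDN.
    if actual_host is None:
        actual_host = socket.getfqdn()
    if actual_host.strip().lower() != expected_host.strip().lower():
        raise RuntimeError(
            f"refusing to continue: intended {expected_host!r}, "
            f"but this shell is on {actual_host!r}"
        )
    return True
```

A cleanup script could call confirm_target_host("db2.cluster.gitlab.com") as its first statement, so that launching it from a db1 console aborts before anything is deleted.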

Recovery - 2017/01/31 23:00 (backup from ~17:20 UTC)

- Suggested recovery methods:

- Migrate db1.staging.gitlab.com to GitLab.com (about 6 hours behind).

- CW: Problem with web hooks, which were deleted during synchronization.

- Restore the LVM snapshot (6 hours behind).

- Sid: try to undelete the files?

- CW: Impossible! rm -Rvf

- Sid: OK.

- JEJ: It is probably too late, but could it help to quickly put the disk into read-only mode? Also, is it not possible to get a file descriptor if the file is in use by a running process (according to http://unix.stackexchange.com/a/101247/213510 )?

- YP: PostgreSQL does not keep all of its files open all the time, so that will not work. It also looks like Azure deletes data very quickly, while sending it to other replicas is not as fast. In other words, the data cannot be recovered from the disk itself.

- SH: It appears that on the db1 staging server a separate PostgreSQL process streams production data from db2 into the gitlab_replicator directory. Judging by the replication lag, db2 was killed at 2017-01-31 05:53, which caused gitlab_replicator to stop. The good news is that the data up to that point looks intact, so we may be able to restore the web hooks.

- Migrate db1.staging.gitlab.com to GitLab.com (about 6 hours behind).
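JEJ's question in the list above rests on a POSIX behavior: a deleted file's contents stay reachable for as long as some process holds an open descriptor to it. The Python sketch below demonstrates this on a throwaway temporary file; the function name read_after_unlink is illustrative, and the demo assumes a POSIX system. As YP points out, this could not help here, because PostgreSQL does not keep all of its files open.

```python
import os
import tempfile


def read_after_unlink(payload: bytes) -> bytes:
    """Write payload to a temp file, unlink it, then read it back via the open descriptor."""
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, payload)
        os.unlink(path)                # the directory entry is gone...
        if os.path.exists(path):
            raise RuntimeError("unlink did not remove the path")
        os.lseek(fd, 0, os.SEEK_SET)   # ...but the data lives on while fd stays open
        return os.read(fd, len(payload))
    finally:
        os.close(fd)                   # only now can the filesystem reclaim the blocks
```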

- Actions taken:

- 2017/02/01 23:00 - 00:00: It was decided to restore the data from db1.staging.gitlab.com to db1.cluster.gitlab.com (production). Although this data is 6 hours old and does not contain the web hooks, it is the only available snapshot. YP says it is best for him not to run any more commands starting with sudo today, and hands control over to JN.

- 2017/02/01 00:36 - JN: I am backing up the db1.staging.gitlab.com data.

- 2017/02/01 00:55 - JN: I mount db1.staging.gitlab.com on db1.cluster.gitlab.com.

- Copying data from staging /var/opt/gitlab/postgresql/data/ to production /var/opt/gitlab/postgresql/data/.

- 2017/02/01 01:05 - JN: The nfs-share01 server is allocated as temporary storage under /var/opt/gitlab/db-meltdown.

- 2017/02/01 01:18 - JN: I copy the remaining production data, including the compressed pg_xlog: '20170131-db-meltodwn-backup.tar.gz'.

- 2017/02/01 01:58 - JN: I start the sync from staging to production.

- 2017/02/01 02:00 - CW: The deploy page has been updated to explain the situation. Link.

- 2017/02/01 03:00 - AR: rsync is about 50% done (by number of files).

- 2017/02/01 04:00 - JN: rsync is about 56.4% done (by number of files). The transfer is slow for two reasons: the network bandwidth between us-east and us-east-2, and the disk performance limit on the staging server (60 Mb/s).

- 2017/02/01 07:00 - JN: Found a copy of the intact data on db1 staging in /var/opt/gitlab_replicator/postgresql. I started the db-crutch VM in us-east to back this data up to another machine. Unfortunately, it is limited to 120 GB of RAM and cannot sustain the production load. This copy will be used to check the state of the database and to export the web hook data.

- 2017/02/01 08:07 - JN: The transfer is slow: 42% of the data has been transferred.

- 2017/02/02 16:28 - JN: The data transfer is complete.

- 2017/02/02 16:45 - Below is the recovery procedure.

- Recovery procedure

- [x] - Take a snapshot of the DB1 server, or of 2 or 3 - done at 16:36 UTC.

- [x] - Upgrade db1.cluster.gitlab.com to PostgreSQL 9.6.1; it is still on 9.6.0, while staging uses 9.6.1 (otherwise PostgreSQL might not start).

- Install 8.16.3-EE.1.

- Move chef-noop to chef-client (it was manually disabled).

- Run the chef-client on the host (done at 16:45).

- [x] - Start the DB - 16:53 UTC

- Monitor the launch and make sure everything went fine.

- Make a backup.

- [x] - Update the Sentry DSN so that errors do not go to staging.

- [x] - Increase the IDs in all tables by 10k to avoid problems when creating new projects / comments. Done using https://gist.github.com/anonymous/23e3c0d41e2beac018c4099d45ec88f5 , which reads a text file listing all the sequences (one per line).

- [x] - Clear the Rails / Redis cache.

- [x] - Try to recover the web hooks, if possible.

- [x] Run staging using a snapshot taken before removing the web hooks.

- [x] Make sure the web hooks are in place.

- [x] Create a SQL dump (data only) of the “web_hooks” table (if there is data there).

- [x] Copy SQL-dump to production-server.

- [x] Import SQL dump into the working database.

- [x] - Check via the Rails console whether the workers can connect.

- [x] - Start the workers gradually.

- [x] - Disable the deploy page.

- [x] - Tweet from @gitlabstatus.

- [x] - Create incident-related issues describing future plans / actions:

- https://gitlab.com/gitlab-com/infrastructure/issues/1094

- https://gitlab.com/gitlab-com/infrastructure/issues/1095

- https://gitlab.com/gitlab-com/infrastructure/issues/1096

- https://gitlab.com/gitlab-com/infrastructure/issues/1097

- https://gitlab.com/gitlab-com/infrastructure/issues/1098

- https://gitlab.com/gitlab-com/infrastructure/issues/1099

- https://gitlab.com/gitlab-com/infrastructure/issues/1100

- https://gitlab.com/gitlab-com/infrastructure/issues/1101

- https://gitlab.com/gitlab-com/infrastructure/issues/1102

- https://gitlab.com/gitlab-com/infrastructure/issues/1103

- https://gitlab.com/gitlab-com/infrastructure/issues/1104

- https://gitlab.com/gitlab-com/infrastructure/issues/1105

- [] - Create the missing Project entries for Git repositories that have none, in cases where the namespace matches an existing user / group. - PC - I am compiling a list of these repositories so that we can check in the database whether they exist.

- [] - Delete repositories with unknown (lost) namespaces. - AR - working on a script based on the data from the previous item.

- [x] - Remove the spam users again (so that they do not cause problems again).

- [x] CDN user with 47,000 IP addresses.
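The ID bump from the checklist above can be sketched as follows. This is not the content of the linked gist, which is not reproduced here; it is a hypothetical Python helper (bump_statements, with an assumed default offset of 10,000) that turns a list of sequence names, one per line, into PostgreSQL setval() statements that an operator could review before running them.

```python
import re

# Accept only plain identifiers so a stray line cannot inject SQL.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def bump_statements(sequence_lines, offset=10_000):
    """Build one setval() statement per sequence name, skipping blank lines."""
    statements = []
    for raw in sequence_lines:
        name = raw.strip()
        if not name:
            continue
        if not _IDENT.match(name):
            raise ValueError(f"unexpected sequence name: {name!r}")
        # nextval() itself advances the sequence by one, which is harmless here.
        statements.append(
            f"SELECT setval('{name}', nextval('{name}') + {offset});"
        )
    return statements
```

Feeding it the lines of the sequence file yields one statement per sequence; the gap of unused IDs keeps rows created after the restore from colliding with IDs that were handed out during the lost 6 hours.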

- To do after data recovery:

- Create an issue to change the PS1 format / colors in terminals so that it is immediately clear which environment is in use: production or staging (production in red, staging in yellow). For all users, the bash prompt should by default show the full hostname (for example, “db1.staging.gitlab.com” instead of “db1”): https://gitlab.com/gitlab-com/infrastructure/issues/1094

- Is there a way to prohibit rm -rf for the PostgreSQL data directory? Not sure whether this is feasible or necessary (given proper backups).

- Add alerts for backups: check the S3 storage, etc. Add a graph showing how backup sizes change, and raise a warning when the size drops by more than 10%: https://gitlab.com/gitlab-com/infrastructure/issues/1095 .

- Consider adding the time of the last successful backup to the database so that admins can easily see this information (suggested by a customer at https://gitlab.zendesk.com/agent/tickets/58274 ).

- Find out why PostgreSQL suddenly had problems with max_connections set to 8000, even though it had worked since 2016-05-13. The unexpected appearance of this problem is largely to blame for the despair and hopelessness that set in: https://gitlab.com/gitlab-com/infrastructure/issues/1096 .

- Look into increasing the replication thresholds via WAL archiving / PITR; this will also be useful after failed upgrades: https://gitlab.com/gitlab-com/infrastructure/issues/1097 .

- Create a troubleshooting guide for problems that may arise after the service is started.

- Experiment with moving data from one data center to another using AzCopy: Microsoft says it should be faster than rsync:

- This appears to be a Windows-specific thing, and we have no Windows experts (or anyone even remotely familiar enough with it to test it properly).
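The prompt change proposed in the first item of this list could look roughly like the following. The sketch is Python that builds a bash PS1 string; the function name bash_prompt and the rule that a hostname label equal to staging means staging are assumptions made for illustration, while \H (full hostname), \u, \w, \$ and the \[ \] wrappers are standard bash prompt escapes.

```python
def bash_prompt(fqdn: str) -> str:
    """Return a bash PS1 value that colors the full hostname by environment."""
    labels = fqdn.lower().split(".")
    if "staging" in labels:
        color = "33"  # ANSI yellow for staging
    else:
        color = "31"  # ANSI red: anything not known to be staging counts as production
    # \[ and \] tell bash that the color codes take up no width on screen.
    return rf"\[\e[{color}m\]\u@\H\[\e[0m\]:\w\$ "
```

Defaulting to red is deliberate: a host that the rule fails to recognize is shown as production, so a misclassification errs on the side of caution.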

Problems encountered

- LVM snapshots are taken by default only once every 24 hours. By luck, YP took one manually 6 hours before the failure.

- Regular backups also appear to be taken only once a day, although YP has not yet figured out where they are stored. According to JN, they are not working: the files produced are only a few bytes in size.

- SH: It looks like pg_dump is failing because the PostgreSQL 9.2 binaries are being run instead of 9.6. This happens because omnibus only uses Pg 9.6 if data/PG_VERSION is set to 9.6, but on the workers this file does not exist. As a result it defaults to 9.2 and fails silently, so no SQL dumps are produced. The Fog gem may have cleaned out the older backups.

- Disk snapshots in Azure are enabled for the NFS server, but not for the database servers.

- The synchronization process removes the web hooks once it has synchronized data to staging. Unless we can pull them from a regular backup made within the last 24 hours, they will be lost.

- The replication procedure proved to be very fragile, error-prone, reliant on random shell scripts, and poorly documented.

- SH: We later learned that the staging database refresh works by taking a snapshot of the gitlab_replicator directory, removing the replication configuration, and starting up a separate PostgreSQL server.

- Our S3 backups do not work either: the folder is empty.

- We have no reliable alerting for failed backup attempts; we are now seeing the same problems on the dev host as well.

In other words, none of the 5 backup / replication methods in use works. => We are now restoring a working backup made 6 hours ago.

http://monitor.gitlab.net/dashboard/db/postgres-stats?panelId=10&fullscreen&from=now-24h&to=now
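Two of the failures listed above, dump files only a few bytes long and the absence of alerting, could be caught by a very small check. The following Python sketch is an illustration under stated assumptions: the function backup_alerts, the 1 KiB floor, and the idea that the caller supplies the two sizes are all hypothetical; the 10% shrink threshold is the one proposed in the to-do list.

```python
def backup_alerts(previous_bytes, current_bytes, max_drop=0.10, min_bytes=1024):
    """Return a list of human-readable problems with the newest backup."""
    alerts = []
    # A dump of a few bytes is almost certainly an empty or failed run.
    if current_bytes < min_bytes:
        alerts.append(f"backup is only {current_bytes} bytes")
    # Compare against the previous backup if there is one to compare with.
    if previous_bytes > 0:
        drop = (previous_bytes - current_bytes) / previous_bytes
        if drop > max_drop:
            alerts.append(f"backup shrank by {drop:.0%}")
    return alerts
```

An empty list means the backup passed both checks; a scheduled job could page someone whenever the list is non-empty, or when no new backup file appears at all.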

Help from

HugOps (add here posts from Twitter or elsewhere in which people responded kindly to what happened)

- A Twitter Moment for all the kind tweets: https://twitter.com/i/moments/826818668948549632

- Jan Lehnardt https://twitter.com/janl/status/826717066984116229

- Buddy CI https://twitter.com/BuddyGit/status/826704250499633152

- Kent https://twitter.com/kentchenery/status/826594322870996992

- Lead off https://twitter.com/LeadOffTeam/status/826599794659450881

- Mozair https://news.ycombinator.com/item?id=13539779

- Applicant https://news.ycombinator.com/item?id=13539729

- Scott Hanselman https://twitter.com/shanselman/status/826753118868275200

- Dave Long https://twitter.com/davejlong/status/826817435470815233

- Simon Slater https://twitter.com/skslater/status/826812158184980482

- Zaim M Ramlan https://twitter.com/zaimramlan/status/826803347764043777

- Aaron Suggs https://twitter.com/ktheory/status/826802484467396610

- danedevalcourt https://twitter.com/danedevalcourt/status/826791663943241728

- Karl https://twitter.com/irutsun/status/826786850186608640

- Zac Clay https://twitter.com/mebezac/status/826781796318707712

- Tim Roberts https://twitter.com/cirsca/status/826781142581927936

- Frans Bouma https://twitter.com/FransBouma/status/826766417332727809

- Roshan Chhetri https://twitter.com/sai_roshan/status/826764344637616128

- Samuel Boswell https://twitter.com/sboswell/status/826760159758262273

- Matt Brunt https://twitter.com/Brunty/status/826755797933756416

- Isham Mohamed https://twitter.com/Isham_M_Iqbal/status/826755614013485056

- Adrià Galin https://twitter.com/adriagalin/status/826754540955377665

- Jonathan Burke https://twitter.com/imprecision/status/826749556566134784

- Christo https://twitter.com/xho/status/826748578240544768

- Linux Australia https://twitter.com/linuxaustralia/status/826741475731976192

- Emma Jane https://twitter.com/emmajanehw/status/826737286725455872

- Rafael Dohms https://twitter.com/rdohms/status/826719718539194368

- Mike San Román https://twitter.com/msanromanv/status/826710492169269248

- Jono Walker https://twitter.com/WalkerJono/status/826705353265983488

- Tom Penrose https://twitter.com/TomPenrose/status/826704616402333697

- Jon Wincus https://twitter.com/jonwincus/status/826683164676521985

- Bill Weiss https://twitter.com/BillWeiss/status/826673719460274176

- Alberto Grespan https://twitter.com/albertogg/status/826662465400340481

- Wicket https://twitter.com/matthewtrask/status/826650119042957312

- Jesse Dearing https://twitter.com/JesseDearing/status/826647439587188736

- Franco Gilio https://twitter.com/fgili0/status/826642668994326528

- Adam DeConinck https://twitter.com/ajdecon/status/826633522735505408

- Luciano Facchinelli https://twitter.com/sys0wned/status/826628970150035456

- Miguel Di Ciurcio F. https://twitter.com/mciurcio/status/826628765820321792

- ceej https://twitter.com/ceejbot/status/826617667884769280

Stephen Frost

- https://twitter.com/net_snow/status/826622954964393984 @gitlabstatus Hi, I'm a PG developer, and I like what you do. Let me know if I can help with anything, I would be glad to be helpful.

Sam McLeod

- Hi Sid, I am very sorry to hear about your database / LVM problems, this is pretty darn unpleasant. We run several PostgreSQL clusters (master / slave), and I noticed a few things in your report:

- You use Slony, and that is a piece of you-know-what, which is no exaggeration at all; it is even made fun of at http://howfuckedismydatabase.com . Meanwhile, PostgreSQL's built-in streaming replication is very reliable and fast, and I suggest switching to it.

- There is no mention of connection pooling, yet the report talks about thousands of connections in postgresql.conf. That is very bad and inefficient in terms of performance. I suggest using pg_bouncer and not setting max_connections in PostgreSQL above 512-1024; in practice, if you have more than 256 active connections, you need to scale out, not up.

- The report shows how unreliable your failover and backup processes are. We wrote and documented a simple PostgreSQL failover script; I will send it to you if you like. As for backups, we use pgbarman for incremental backups during the day and also take full backups twice a day with barman and pg_dump. For both performance and reliability it is important to keep your backups and the PostgreSQL data directory on different disks.

- Are you still on Azure?!?! I would suggest getting off it as soon as possible: there are many strange problems with internal DNS, NTP, routing, and storage, and I have also heard some frightening stories about how things are set up inside.
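Sam's rule of thumb about max_connections is easy to turn into a configuration lint. A minimal Python sketch follows; the function name max_connections_warning and the default ceiling of 1024 (the upper end of the range Sam suggests) are illustrative choices, and the parser only handles plain, unquoted integer settings.

```python
import re

# Matches an uncommented max_connections line; the "=" is optional in postgresql.conf.
_SETTING = re.compile(
    r"^[ \t]*max_connections(?:[ \t]*=[ \t]*|[ \t]+)(\d+)", re.MULTILINE
)


def max_connections_warning(conf_text, ceiling=1024):
    """Return a warning string if max_connections exceeds ceiling, else None."""
    values = _SETTING.findall(conf_text)
    if not values:
        return None  # setting absent: PostgreSQL's built-in default applies
    value = int(values[-1])  # when a setting is repeated, the last one takes effect
    if value > ceiling:
        return (
            f"max_connections = {value} exceeds {ceiling}; "
            "consider a connection pooler such as pgbouncer"
        )
    return None
```

Run against a configuration with max_connections = 8000, as in the incident, it returns a warning; with a value at or below the ceiling, or with the line commented out, it returns None.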

Sid and Sam's long correspondence, focused on PostgreSQL setup

- Let me know if you need help with PostgreSQL setup; I have decent experience in this area.

- Capt. McLeod: one more question: how much disk space does your database (or databases) take up? Are we talking terabytes, or still gigabytes?

- Capt. McLeod: I have posted my failover / replication script:

- I also see that you are looking at pgpool - I would suggest pgbouncer instead.

- Capt. McLeod: pgpool has plenty of problems; we tested it thoroughly and threw it out.

- Capt. McLeod: Also let me know if I can say something publicly via Twitter or elsewhere in support of GitLab and your transparency in working on this issue. I know how hard it is; when I started out, we had a split-brain at the SAN level at infoxchange, and I literally threw up, I was so nervous!

- Sid Sijbrandij: Hi Sam, thanks for the help. Do you mind if I copy this into a public document so that the rest of the team can see it?

- Capt. McLeod: The failover script?

- Sid Sijbrandij: Everything you wrote.

- Of course. In any case it is a public repository. It is not perfect, very far from it, but it does its job well; I switch between hosts all the time without any consequences, but things could turn out differently for you.

- Yes, of course, you can publish my recommendations as well.

VM, PostgreSQL PostgreSQL.conf, .

Sid: Slony 9.2 9.6, .

: , , : PostgreSQL .- Rails (25 ). 20 20 - 10 000 , 400 ( Unicorn — ).

: PostgreSQL- , ; pg_bouncer — . , , pgpool . , , . Pgpool ORM/db-.

: https://wiki.postgresql.org/wiki/Number_Of_Database_Connections

- , , . . pgpool + ( ). Pgbouncer, , ( , ). https://github.com/awslabs/pgbouncer-rr-patch .

: active/active PostgreSQL-, , ?

: ? ?

* , , GitLab.com . , .

Conclusion

, GitLab , , , . , Twitter Google Docs, , , , .

, : "Database (removal) specialist" ( [] ), - 1 http://checkyourbackups.work/ , :

?

- .

- ( , Azure).

- LVM — , , GitLab.com, .

- dev/stage/prod- .

- dev/stage/prod- /.

- — , .

- , .

Related Links:

- : GitLab.com Database Incident — 2017/01/31: https://docs.google.com/document/d/1GCK53YDcBWQveod9kfzW-VCxIABGiryG7_z_6jHdVik/pub

- GitLab.com: https://about.gitlab.com/2017/02/01/gitlab-dot-com-database-incident/

- : https://habrahabr.ru/post/320988/


Source: https://habr.com/ru/post/321074/
