How to test RoR containers with GitLab CI in a container

The good thing about GitLab is that, despite being the size of an elephant in a china shop, it installs cleanly and almost always works out of the box. What it cannot do is recover and look after itself when clumsy hands like mine break its usual environment. I will not go into how I managed to kill it so thoroughly that even removing it and reinstalling from scratch did not help, but to avoid another endless saga of debugging and reinstalling the server, I moved the whole thing into a Docker container. It is convenient: there are no millions of dependencies on the working machine, I mounted directories for the repositories, logs and databases, and everything works. Recovery means recreating the container and feeding it the backups (by the way, do not forget to check your backups; as GitLab's own experience shows, this is not superfluous).

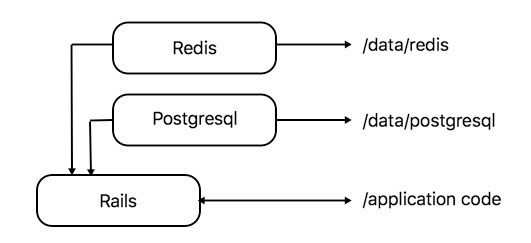

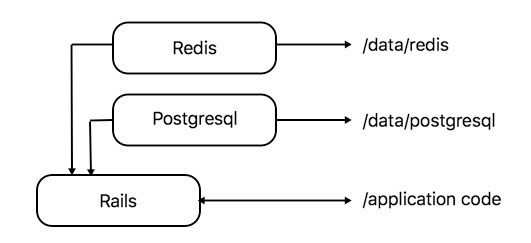

On the other side, there is a Rails application under development that keeps only its code on the real machine; Rails, the gems and everything else live in a Docker container. It uses Redis and Postgres, each in its own container. A host directory is mounted into each container so that data important to the application does not stay inside it.

The challenge is to get GitLab CI working properly. It sounds simple, but GitLab itself lives in a container.

By the way, the docker-compose.yml for the Rails application

```yaml
services:
  postgres:
    image: postgres:9.4.5
    volumes:
      - /var/db/test/postres:/var/lib/postgresql/data
    env_file: .env
    ports:
      - "54321:5432"
  redis:
    image: redis:latest
    volumes:
      - /var/db/test/redis:/data
  app:
    build: .
    env_file: .env
    volumes:
      - /var/www/test:/var/www/test
    ports:
      - "3000"
    links:
      - postgres
      - redis
```

The .env file holds, for example, the database password, POSTGRES_PASSWORD. The documentation for the postgres image lists the variables the container uses. You can use expose instead of ports, but publishing the port makes it easier to inspect the database from the host.

Redis needs no comment at all: you link it, it exposes port 6379 by itself, and it works. If only everything were like that.

The first thought is to install Docker inside Docker. I believe in humanity, and I believe that somewhere there is a person who, in a reasonable amount of time, built real nesting with a working Docker installed inside a container, but I personally did not succeed. So we need the second option, testing from the outside: all the test containers should sit next to the GitLab container on the host, created and destroyed automatically by the CI system from inside the GitLab container.

The test containers do not need external directories mounted, since they are disposable anyway. After the tests, all of them stop automatically.

Sharing Docker

Giving untrusted programs in containers access to the host's Docker is not recommended. With that access, a program can easily create another container on the host that mounts any directory or even a whole volume (or devices such as a webcam or printer), install whatever code the attacker needs, and quietly siphon off all your bitcoins, all without leaving its cozy sandbox. But since I am testing my own code, I can skip that caution.

To let the container manage its parent, you need to pass the host's Docker socket into it. In an ideal world, this is done like this:

```shell
docker run .. -v /var/run/docker.sock:/var/run/docker.sock
```

but for those who are not looking for easy ways in life, it does not work. First you need to add one more mount:

```shell
-v /usr/bin/docker:/usr/bin/docker
```

and then discover that this only works if the docker binary on the host is statically linked (do not ask); otherwise you have to take care of each shared library it depends on separately. If your host's docker binary is not static (don't ask!), as in my case, read on.
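A quick way to tell whether the mounts are enough is to call docker inside the running container. The sketch below is only an illustration: the function name is made up, and the container name `gitlab` in the usage comment is an assumption (take the real one from `docker ps`).

```shell
#!/bin/sh
# Sketch: check that the docker client inside a container can reach the
# host daemon through the mounted socket.
# `docker version` fails if the binary cannot load or the daemon is unreachable.
check_docker_in_container() {
    container="$1"
    if docker exec "$container" docker version >/dev/null 2>&1; then
        echo "ok: docker works inside $container"
    else
        echo "fail: docker does not work inside $container"
        return 1
    fi
}

# Usage (hypothetical container name):
#   check_docker_in_container gitlab
```

A failure here does not say why; running `docker exec` by hand without the redirection shows the actual error.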

Look at the list of libraries that /usr/bin/docker depends on:

```
ldd /usr/bin/docker
    linux-vdso.so.1 (0x00007fffb9ff4000)
    libpthread.so.0 => /lib/x86_64-linux-gnu/libpthread.so.0 (0x00007f711fe27000)
    libltdl.so.7 => /usr/lib/x86_64-linux-gnu/libltdl.so.7 (0x00007f711fc1d000)
    libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f711f871000)
    /lib64/ld-linux-x86-64.so.2 (0x000055a205b12000)
    libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007f711f66d000)
```

For the lazy

I only needed the libltdl.so.7 library; everything else was already in the container, since the GitLab image is not Alpine Linux, after all. You can check this from a shell inside the container: run docker there and it will tell you which library is missing. Mount that one and try again. If that does not sound like the easier path to you, mount them all; it will not mind.
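Instead of reading the loader errors one at a time, the missing libraries can be listed in one go by running ldd inside the container. This is a minimal sketch, assuming a glibc-based image whose ldd prints `=> not found` for unresolved libraries; the function name and the container name `gitlab` are hypothetical.

```shell
#!/bin/sh
# Sketch: print the names of shared libraries that ldd reports as missing.
# Reads ldd output on stdin; glibc's ldd marks such lines "=> not found".
missing_libs() {
    awk '$2 == "=>" && $3 == "not" && $4 == "found" { print $1 }'
}

# Usage (container name "gitlab" is an assumption):
#   docker exec gitlab ldd /usr/bin/docker | missing_libs
```

Each name it prints is a library whose host copy still has to be mounted into the container.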

I wrote the paths that seemed intuitive into GitLab's docker-compose.yml (GitLab's, not the project's; do not confuse them) and tried to start the GitLab container:

```yaml
volumes:
  ...
  - /var/run/docker.sock:/var/run/docker.sock
  - /usr/bin/docker:/usr/bin/docker
  - /usr/bin/docker/libltdl.so.7:/usr/bin/docker/libltdl.so.7
```

On startup Docker burst into terrible swearing, so rude that almost every word was escaped seven times over!

```
ERROR: for gitlab Cannot start service gitlab: invalid header field value "oci runtime error: container_linux.go:247: starting container process caused \"process_linux.go:359: container init caused \\\"rootfs_linux.go:53: mounting \\\\\\\"/usr/bin/docker/libltdl.so.7\\\\\\\" to rootfs \\\\\\\"/var/lib/docker/devicemapper/mnt/2df228e042aed186eebbb484989e44cee3126c0a3bfb42d25c8998ada5afb9bd/rootfs\\\\\\\" at \\\\\\\"/usr/bin/docker/libltdl.so.7\\\\\\\" caused \\\\\\\"stat /usr/bin/docker/libltdl.so.7: not a directory\\\\\\\"\\\"\"\n"
```

The real complaint is at the very end: /usr/bin/docker is a file, not a directory, so nothing can be mounted beneath it. The head-on solution:

```yaml
volumes:
  ...
  - /var/run/docker.sock:/var/run/docker.sock
  - /usr/bin/docker:/usr/bin/docker
  - /usr/lib/x86_64-linux-gnu/libltdl.so.7:/usr/lib/x86_64-linux-gnu/libltdl.so.7
```

That is, we need the file paths from the right-hand side of the ldd output.
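For the "mount everything" route, the volume entries can be generated from the ldd output rather than typed by hand. The following is a rough sketch with a made-up function name; it assumes the `name => /path (address)` line format shown earlier and mounts each library at the same path inside the container.

```shell
#!/bin/sh
# Sketch: turn ldd output (stdin) into docker-compose volume entries,
# mounting each resolved library at the same path inside the container.
# Lines without a resolved path (linux-vdso, the ld-linux loader) are skipped.
ldd_to_volumes() {
    awk '$2 == "=>" && $3 ~ /^\// { printf "- %s:%s\n", $3, $3 }'
}

# Usage (run on the host):
#   ldd /usr/bin/docker | ldd_to_volumes
```

The output is meant to be reviewed before pasting it under `volumes:`. Mounting host copies of core libraries such as libc over the container's own may cause trouble if the versions differ, so it is probably safer to keep only the entries the container actually lacks.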

GitLab's docker-compose.yml

```yaml
gitlab:
  image: 'gitlab/gitlab-ce:8.14.5-ce.0'
  restart: unless-stopped
  hostname: 'git.habrahabr.ru'
  environment:
    GITLAB_OMNIBUS_CONFIG: "external_url 'http://git.habrahabr.ru'"
  ports:
    - "3456:80"
    - "52022:22"
  volumes:
    - /docker/gitlab/data/conf:/etc/gitlab
    - /docker/gitlab/data/logs:/var/log/gitlab
    - /docker/gitlab/data/data:/var/opt/gitlab
    - /var/run/docker.sock:/var/run/docker.sock
    - /usr/bin/docker:/usr/bin/docker
    - /usr/lib/x86_64-linux-gnu/libltdl.so.7:/usr/lib/x86_64-linux-gnu/libltdl.so.7
```

Create a .gitlab-ci.yml

```yaml
image: "ruby:2.3"

services:
  - redis:latest
  - postgres:9.4.5

cache:
  paths:
    - vendor/ruby

before_script:
  - apt-get update -q && apt-get install nodejs -yqq
  - gem install bundler --no-ri --no-rdoc
  - bundle install -j $(nproc) --path vendor

rspec:
  stage: test
  script:
    - bundle exec rake db:create
    - bundle exec rake db:migrate
    - bundle exec rake db:seed
    - rspec spec
```

To write this file correctly, it is best to start from the application's docker-compose.yml. Take the postgres container: we do not need volumes, we do not need an env file with the database password (we do not care what the password is for a database that lives for 10 seconds), and the ports are dropped. All that remains is the image name. Redis: no volumes are needed, so again only the image name. The main image is ruby; GitLab mounts the code into it and runs before_script there.

Instead of an epilogue

You can bolt anything you like onto a GitLab configured this way, from an image registry to Docker-in-Docker solutions. For example, instead of having GitLab deploy the code into a ruby container, build a solution on gitlab/dind and run docker build in it. But that is a completely different article.

Source: https://habr.com/ru/post/320982/