How to write curve queries with a non-optimal plan and make the DBMS think

It's simple. Here you can find the "Basics of Query Analysis for Dummies" in the case of PostgreSQL and some wonderful non-fictional examples from production about how not to write queries on PostgreSQL and MySQL and what happens if you write them all the same.

It's simple. Here you can find the "Basics of Query Analysis for Dummies" in the case of PostgreSQL and some wonderful non-fictional examples from production about how not to write queries on PostgreSQL and MySQL and what happens if you write them all the same.Introduction

I will tell you about a few obvious things that are quite well described in mans and documentation, which is usually read after a dozen rakes, or after a certain number of limbs have been shot or after other self-harming.

')

There will be several parts, logically they are loosely connected with each other, but they all met in the course of solving various business needs and somehow satisfied its needs.

Nuances of work with

The very thing WITH, which is very similar to syntactic sugar without a large semantic load, is associated (with the uninitiated) with splitting a large footcloth into separate methods in the spirit of Martin and Fowler. Here the main feature is that this is not an analogue of the method / function, especially when it comes to query optimization.

Immediately I apologize to the reader, but later in the text there will be only pieces of requests that are of fundamental importance; requests will not be published in full. First, in order not to bore with the features of the data structure, and second, so that I do not accidentally post something private corporate. If the pieces are absolutely not readable, please do not hit hard, but suggest how to refine them. Thank.

How to do not.

The original sql piece from the main request body

LEFT JOIN specifications_history AS specification_history ON specification_history.id = specification_detail.entity_history_id AND specification_history.specification_id = ANY(specification_parts.ids) LEFT JOIN specification_revision_details AS specification_section_detail ON specification_section_detail.specification_revision_id = specification_revision.id AND specification_section_detail.entity_type = 1002 LEFT JOIN specification_sections_history AS specification_section_history ON specification_section_history.id = specification_section_detail.entity_history_id LEFT JOIN specification_revision_details AS section_item_detail ON section_item_detail.specification_revision_id = specification_revision.id AND section_item_detail.entity_type = 1003 LEFT JOIN section_items_history AS section_item_history ON section_item_history.id = section_item_detail.entity_history_id 'Refined' request piece

WITH revision_products AS ( SELECT DISTINCT specification_revision.id AS revision_id, specification_history.specification_id AS specification_id, section_item_history.product_id AS product_id FROM specification_revisions AS specification_revision INNER JOIN specification_revision_details AS specification_detail ON specification_detail.specification_revision_id = specification_revision.id AND specification_detail.entity_type = 1001 INNER JOIN specifications_history AS specification_histor ON specification_history.id = specification_detail.entity_history_id INNER JOIN specification_revision_details AS specification_section_detail ON specification_section_detail.specification_revision_id = specification_revision.id AND specification_section_detail.entity_type = 1002 INNER JOIN specification_sections_history AS specification_section_history ON specification_section_history.id = specification_section_detail.entity_history_id INNER JOIN specification_revision_details AS section_item_detail ON section_item_detail.specification_revision_id = specification_revision.id AND section_item_detail.entity_type = 1003 INNER JOIN section_items_history AS section_item_history ON section_item_history.id = section_item_detail.entity_history_id WHERE section_item_history.product_id IS NOT NULL ) Here the following happened: from the main request body, in which there were quite a few LEFT JOINs they were taken to WITH and turned into INNER JOIN. The piece was given an euphonious name, so as to increase the readability of the main body, and all the details of the implementation dragged away. Practice clean code at its best. With readability, really, it became better. In the main body of the request, there are 5 joins left instead of 10. Here, only the speed of the query execution immediately fell from 75 ms to 95 seconds. Interesting things appeared in Explain:

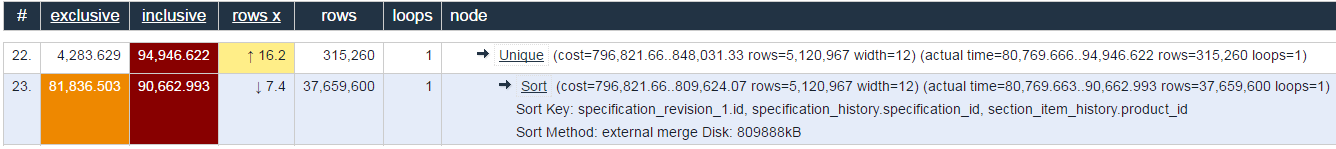

-> Unique (cost=796821.66..848031.33 rows=5120967 width=12) (actual time=80769.666..94946.622 rows=315260 loops=1) -> Sort (cost=796821.66..809624.07 rows=5120967 width=12) (actual time=80769.663..90662.993 rows=37659600 loops=1) Sort Key: specification_revision_1.id, specification_history.specification_id, section_item_history.product_id Sort Method: external merge Disk: 809888kB That is, someone took 37 lyam lines and began to sort them cheerfully in 1 gig of memory. Then questions arose:

- “But where do we have 37kk lines, when is the 1.5kk in the largest table?”

- “We didn’t change the algorithm, we just made the code readable, why did everything hang?”

- “He is declarative, we said that we want, and how didn’t they say why it broke?”

Answer : transferring joins from the main body to WITH did exactly what is described in the documentation :

WITH Queries (Common Table Expressions)

If you are looking for a query, even if you have been a query or sibling. It is an expensive way to get redundant work. It is possible to prevent unwanted multiple evaluations with side-effects. However, it’s not a problem. It would be possible to appreciate that it would be possible to discard afterwards. (If you can use only the limited number of rows.)

Briefly and roughly: requests from WITH are executed once and, more often, not optimized, that is, the place of their use does not affect the execution plan.

That is, we leveled a piece of the request to the level of an independent part, forgetting to add to it the important conditions from WHERE, which cut back the selection for most tomatoes. As a result, the entire base was crossed, and then they gave this monster to the main body, where they took a dozen lines from it.

In the specific case described above, WHERE had a condition of the form “product_id = 1234”, which set the main constraint on the data. If this condition were dragged into WITH, then everything would continue to work at about the same speed. However, this can only be done in the case of a static value for the right side of the condition. If aidishs are obtained, for example, during a recursive query, then in WITH such a condition cannot be dragged off and the idea of splitting the query into pieces will shamelessly slow down.

Conclusion :

- need to read the documentation;

- Not all development practices are equally useful in different areas of this development.

Visualize explain

I think everyone is aware of explain.depesz.com . They beautifully show what is wrong with the request.

In fact, this is just a coloring for the default output of the explain command, but this is very clear and especially helps at the beginning, until you know what to look at ... although it’s nice and convenient to lie and not at the beginning.

Here I would like to say a few words for each of the columns and explain how they affect the outcome of the implementation. Yes, it is written there in the help , but rarely does anyone read the help until it is secure.

- # - just the sequence number of the operation during the execution of the request

- Exclusive - time to perform a specific operation (in milliseconds)

- Inclusive - time to execute the entire command pipeline (for example, in the picture above, to perform uniq, you need to sort at least)

- Rows X - how long

Akellamissed the planner when he vangal the number of rows that the operation should return (yes, this is important for later decisions about how to proceed with the query)

Tips for novice optimizers

If everything slows down and do not know where to start, then here are a couple of tips. You need to take a colored explain (preferably along with analyze) from the previous paragraph and look at it. Most often, the problem (read, 80% + runtime) is concentrated in one of the operations described in the execution plan. That is, by Exclusive / Inclusive to find the darkest and dumb place. Again, in the example above, it is clear that the uniq operation lasts 94 seconds out of a total of 95 seconds for which the request is executed. In the same place we see that in uniq almost all the time is occupied by sort, which lasts 90 seconds. Here you can see the problem in the form of the number of rows, the sorting algorithm and the memory used. It remains nothing: to understand "who is to blame and what to do." Here, only knowledge of the target database's data structure and the requirements for the query results will help. It may be enough to rearrange a couple of lines or add an additional condition, or it may be necessary to completely rewrite the request, since in its original form the only thing it can do well is to slow down.

It is also worth paying attention to the big “Rows X”. This indicates a miss of the predicted and actual results of the sample and, most often, due to the lack of statistics on the tables. This can lead to a non-optimal query execution plan. For example: we want to select one row from a table with 1 million items; if the planner decides that the result of the sample will not be 1 line, but ~ 200,000, then it will not search by index, but go full scan, since this is the optimal strategy for such a ratio of the resulting lines and table size. Conclusions about the speed do yourself.

Standard rake

Here's what happened most often in practice and what caused the indecent behavior of requests:

- misunderstanding of the data structure and data joining through unknown detours and detours, or, better yet, joining unnecessary data; the extreme case was in MySQL; Here is a slightly lightweight example that conveys the essence of the problem:

SELECT ordered_products.* FROM products, products AS ordered_products GROUP BY ordered_products.id

On the one hand, they simply indicated an extra table inside the FROM and did not use it at all. On the other hand, we got an implicit connection of two tables through a CROSS JOIN and resulting rows (relevant for at least MySQL 5.5). In my case, the products table was 40k rows, but I did not wait for the end of the query. As far as I know, Oracle can do join elimination , but, in any case, it's better not to rely on DBMS features, but to think with your head.Bonus: how to do it in ActiveRecord and hang everythingProduct.joins(", (#{Product.table_name}) AS ordered_products"). select('ordered_products.*'). group("ordered_products.#{Product.primary_key}") - Love for outer joins. They generate, at a minimum, the geometric growth of rows in intermediate results and it may easily turn out that on some input data the query will slow down and the DBMS will drown on the amount of data. An extreme example was on the query mentioned above on the test (which is with WITH). It worked fine with a strict restriction on product_id. The same query worked well with an array of 5-15 ID and the query execution time grew linearly, but then, each following ID in the array increased the query execution time by 2-3 times. The problem was just in the OUTER JOIN set, which multiplicatively increased the number of rows processed and, from some point onwards, their number became outrageously large, and the execution plan could not be shown to underage developers.

- In the continuation of the previous paragraph: some people like to put FULL OUTER JOIN instead of LEFT / RIGHT, which is enough in the overwhelming majority of cases (tested on the inhabitants of the habr, with whom they discussed the request from the previous article about interviews ). The problem is the same: generating extra data and increasing resource consumption. From a personal one: FULL OUTER JOIN recently really needed production for the first time in 2 years ... was happy like a child.

- Wonderful magic with functions, for example, from PostgreSQL, when instead of a pair of joins in declarative style, they try to do the same, but in the imperative style through arrays and other data structures, together with the functions that transform them. Example, unfortunately, I will not find, so I have to take my word for it. I only remember that such things occasionally flash on stackoverflow . The only good news is that they almost never get the leaders on likes.

the end

Thanks to all. If you have your own wonderful examples of how not to do it, then please don't be silent, there are not many “bad advice” and “living examples”. And the article is, like the exam ticket, just a reason to talk.

Source: https://habr.com/ru/post/320916/

All Articles