Protecting information at Google: some technical details

The other day, Google published an interesting document called Infrastructure Security Design Overview. In it, the company describes the main approaches it uses to ensure both the physical and the “virtual” security of its data centers and of the information stored on their servers. We at King Servers try to follow this kind of technical information closely, so we studied the document.

There is a lot of interesting material in it, even though the document clearly serves to attract customers to the “digital fortress” that is the corporation’s data centers. It contains plenty of technical details, and not all of them were known before.

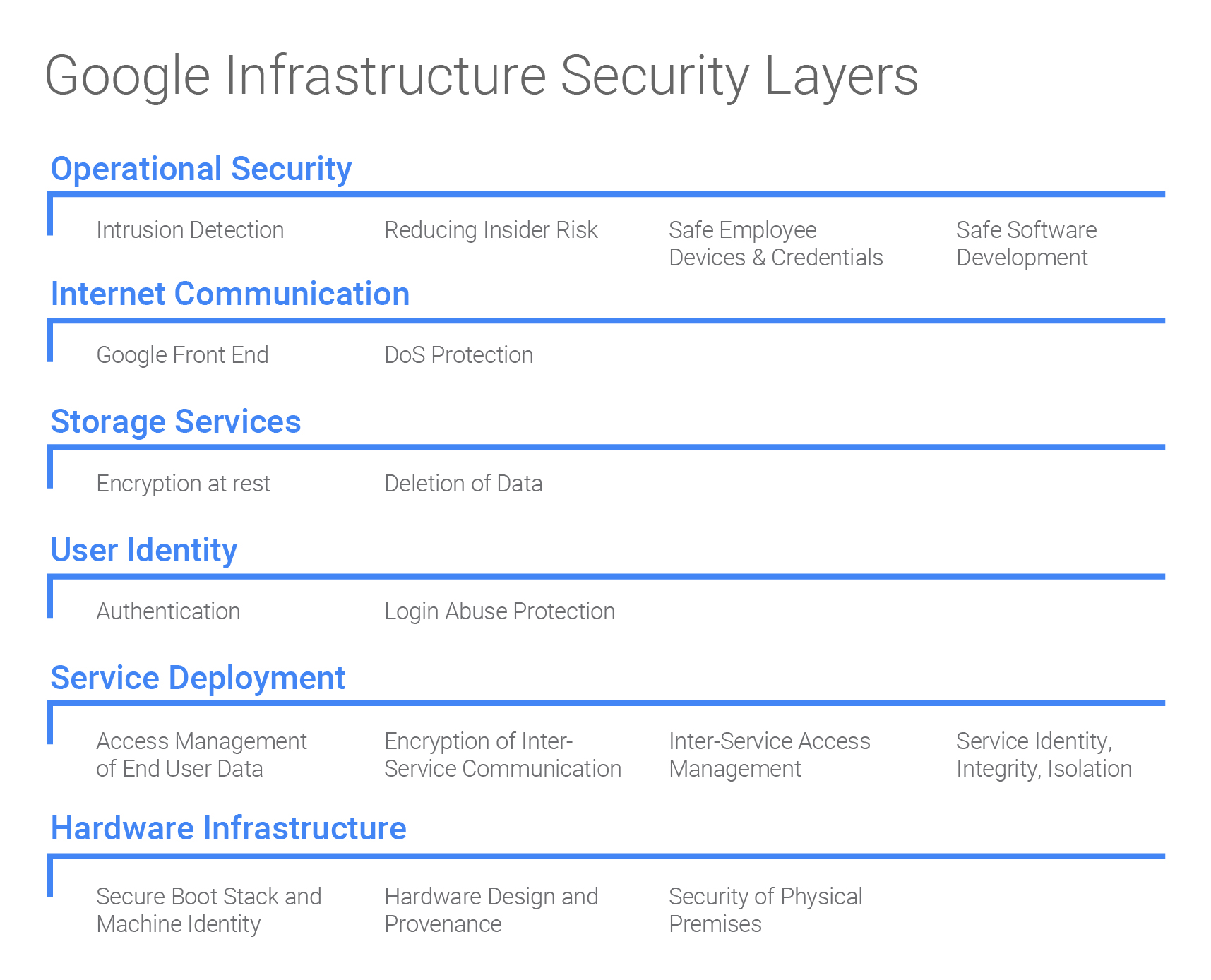

Google's security infrastructure is conventionally divided into six levels, covering both hardware and software protection.


The first level is physical security. We have written about some of these systems before. In addition to them, Google uses "biometric identification systems, metal detectors, cameras, barriers that block the passage of vehicles and laser-based intrusion detection systems."

The second level is hardware, and the corporation refuses to work with physically worn-out or obsolete equipment. Many systems are custom-built by third-party manufacturers specifically for Google and are repeatedly tested by the company's engineers. To strengthen security, the company has also developed its own chips that protect both its own servers and its partners' servers. Google considers this proprietary approach reliable because these chips identify and authenticate Google devices at the hardware level. In third-party server farms, the corporation uses other protection methods as well. In particular, all employee devices are scrutinized: Google checks that the operating system and the installed software are up to date.
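The document does not say how the chips authenticate a machine, so the following is only a minimal sketch of the general idea of hardware-rooted identity: a verifier sends a fresh random challenge, and only a device holding the right secret can answer it. The device ID and key registry are hypothetical, and HMAC with a shared secret is swapped in for the asymmetric keys a real security chip would more likely use.

```python
import hashlib
import hmac
import secrets

# Hypothetical registry: device ID -> secret key provisioned into its security chip.
# A real hardware root of trust would typically use asymmetric keys; HMAC keeps
# this sketch within the Python standard library.
DEVICE_KEYS = {"server-0042": secrets.token_bytes(32)}


def chip_respond(device_key: bytes, challenge: bytes) -> bytes:
    """What the (simulated) security chip computes: an HMAC over the challenge."""
    return hmac.new(device_key, challenge, hashlib.sha256).digest()


def verify_device(device_id: str, respond) -> bool:
    """Issue a fresh random challenge and check the device's response."""
    key = DEVICE_KEYS.get(device_id)
    if key is None:
        return False  # unknown device: reject
    challenge = secrets.token_bytes(16)  # a fresh nonce prevents replaying old responses
    expected = hmac.new(key, challenge, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(expected, respond(challenge))
```

A genuine device answers with `lambda c: chip_respond(DEVICE_KEYS["server-0042"], c)`; a device with any other key fails the check, because it cannot predict the HMAC of a challenge it has never seen.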

The third level consists of cryptographic signatures, authentication and authorization systems, which are used when organizing the interaction of low-level components: the BIOS, the operating system bootloader, the kernel and the base OS image. These signatures can be verified on every boot or update. All of these components are “created or completely under the control of Google”. According to the company's experts, this ensures that the correct software stack is loaded onto its servers.
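To illustrate what verifying signatures on every boot means in practice, here is a minimal sketch of a verified boot chain: each stage (BIOS, bootloader, kernel, base image) is checked in order, and booting stops at the first component whose signature does not match. The signing key and stage names are hypothetical, and an HMAC over a SHA-256 digest stands in for the public-key signatures (RSA, ECDSA and the like) a real boot chain would use.

```python
import hashlib
import hmac

# Stand-in for a vendor signing key; a real boot chain would verify public-key
# signatures rather than an HMAC with a shared secret.
SIGNING_KEY = b"hypothetical-signing-key"

BOOT_ORDER = ["bios", "bootloader", "kernel", "base_image"]


def sign(image: bytes) -> bytes:
    """Produce a 'signature' over the SHA-256 digest of a component image."""
    digest = hashlib.sha256(image).digest()
    return hmac.new(SIGNING_KEY, digest, hashlib.sha256).digest()


def verify_boot_chain(images: dict, signatures: dict) -> bool:
    """Check every stage in order; refuse to continue at the first bad signature."""
    for stage in BOOT_ORDER:
        image = images.get(stage)
        sig = signatures.get(stage)
        if image is None or sig is None:
            return False  # missing stage or signature: do not boot
        if not hmac.compare_digest(sign(image), sig):
            return False  # tampered or unsigned component
    return True
```

If an attacker replaces only the kernel image, its digest changes, the recomputed signature no longer matches the stored one, and `verify_boot_chain` returns `False` before the base image is ever reached.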

The corporation pays special attention to protecting drives, trying to prevent malicious scripts and other software from accessing its systems at both the hardware and software levels. Google uses hardware encryption almost everywhere and tracks the life cycle of each drive very carefully. When a drive is decommissioned, it goes through a multi-stage wiping procedure and independent checks by specialists. Drives that fail the wiping procedure are physically destroyed.
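The retirement rule described above can be modeled as a small state machine. This is a hypothetical sketch: the state names are ours, and the two boolean inputs simply stand in for the outcome of the multi-stage wipe and the independent inspection. The point it captures is that a drive leaves the process either verified clean or destroyed, with no third exit.

```python
from enum import Enum, auto


class DriveState(Enum):
    IN_SERVICE = auto()
    DECOMMISSIONED = auto()
    WIPED = auto()
    VERIFIED_CLEAN = auto()
    DESTROYED = auto()


def retire_drive(wipe_ok: bool, inspection_ok: bool) -> list:
    """Return the sequence of states a retired drive passes through.

    wipe_ok and inspection_ok are hypothetical inputs standing in for the result
    of the multi-stage erasure and of the independent check.
    """
    history = [DriveState.IN_SERVICE, DriveState.DECOMMISSIONED]
    if wipe_ok:
        history.append(DriveState.WIPED)
        if inspection_ok:
            history.append(DriveState.VERIFIED_CLEAN)
            return history
    # Any drive that fails wiping or verification is physically destroyed.
    history.append(DriveState.DESTROYED)
    return history
```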

The company reviews its source code especially carefully. The review team includes specialists from various fields, among them experts in cryptography and in web and OS security. All of the company's source code is stored in a dedicated repository, and specialists can thoroughly audit any part of it when needed. Every modification must be approved by the owner of the system in question. As a result, the company believes, the ability of an insider or an attacker to make malicious changes is severely limited.
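A rule like "every modification must be approved by the system's owner" is easy to express as a submit check. The sketch below is hypothetical: the ownership map, the names and the longest-prefix matching are our assumptions, and so is the requirement that the approving owner be someone other than the author, which we add because that is what stops a single insider from approving their own change.

```python
# Hypothetical ownership map: path prefix -> set of owners allowed to approve changes.
OWNERS = {
    "crypto/": {"alice"},
    "web/frontend/": {"bob", "carol"},
}


def owners_for(path: str) -> set:
    """Return the owners of the longest matching path prefix (empty set if none)."""
    matches = [prefix for prefix in OWNERS if path.startswith(prefix)]
    if not matches:
        return set()
    return OWNERS[max(matches, key=len)]


def can_submit(author: str, changed_paths: list, approvers: set) -> bool:
    """Allow a change only if every touched path has an approving owner who is not the author."""
    for path in changed_paths:
        if not owners_for(path) & (approvers - {author}):
            return False  # no independent owner approved this path
    return True
```

With this check, `alice` cannot land a change to `crypto/` on her own approval, and a change touching both `crypto/` and `web/frontend/` needs an owner's approval for each.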

For machine learning projects, the company now uses processors such as the Tensor Processing Unit (TPU). Google has been using these chips for a couple of years, and they have proven highly efficient. Measured as performance per watt, they are much more energy-efficient than conventional chips. However, they are used for tasks where high computational precision is not critical: it is by giving up some precision that TPUs achieve better performance than traditional chips.
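The trade-off between precision and efficiency can be seen with reduced-precision arithmetic; the first-generation TPU, for example, ran inference on 8-bit integers. The sketch below shows symmetric linear quantization of floats to `int8`, one common scheme (not necessarily the one Google uses): each value is stored in a single byte, and the rounding error per element is at most half the scale factor.

```python
def quantize_int8(values: list) -> tuple:
    """Symmetric linear quantization of floats to signed 8-bit integers."""
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero input.
    scale = max(abs(v) for v in values) / 127 or 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list, scale: float) -> list:
    """Recover approximate float values from the 8-bit codes."""
    return [x * scale for x in q]
```

For inputs such as `[0.1, -0.5, 0.33, 1.27]` the scale is about 0.01, so each recovered value is within roughly 0.005 of the original: too coarse for many scientific workloads, but usually acceptable for neural network inference.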

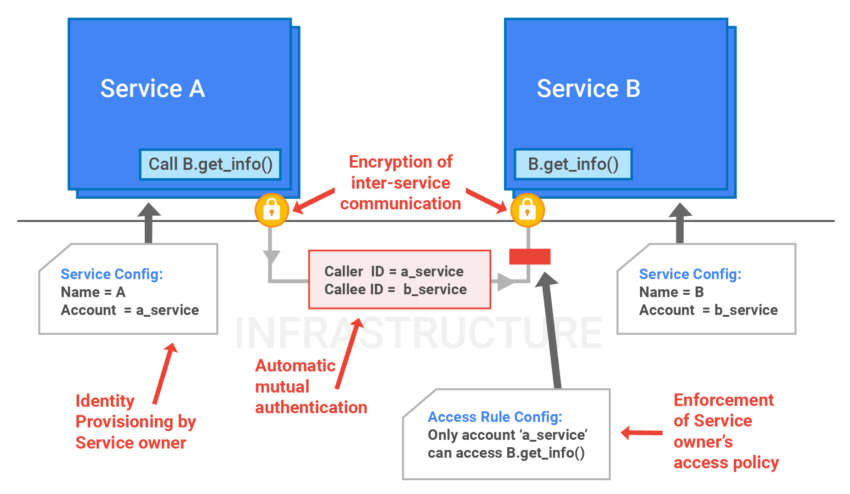

On top of all this, every company service, whether public or internal, can be configured so that only specific people have access to its administrative part (there is nothing new here, in fact). What stands out is that every server, service and engineer gets its own identifier. The relationships between human operators, hardware and software are encoded and distributed in a global namespace.
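Giving every engineer, service and machine its own identifier turns access control into a lookup of a principal name. The following is a minimal sketch under assumed conventions: the `kind/name` naming scheme, the `Identity` class and the ACL contents are all hypothetical, not Google's actual format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Identity:
    kind: str  # "engineer", "service" or "machine"
    name: str

    @property
    def principal(self) -> str:
        # Hypothetical naming scheme for a global namespace.
        return f"{self.kind}/{self.name}"


# Hypothetical ACL: service -> principals allowed into its administrative interface.
ADMIN_ACL = {
    "billing-service": {"engineer/alice", "service/billing-deployer"},
}


def may_administer(who: Identity, service: str) -> bool:
    """Grant admin access only to principals explicitly listed for the service."""
    return who.principal in ADMIN_ACL.get(service, set())
```

Because the identifier includes the kind of principal, a machine that happens to be named `alice` does not inherit the engineer `alice`'s rights.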

Source: https://habr.com/ru/post/320750/
