The best of the Lean Startup methodology, and how testers live with it

“This should have been done yesterday,” “Just test it quickly somehow,” “The time from the start of development to the production release should be minimal, and if possible, even less”: many of you have probably heard something like this. And since we testers are one of the last links in the development chain, we are usually the ones who have to balance the speed of feature releases against their quality.

In this article I want to share how our company applies successful practices from Lean Startup (even though many of our projects are mature and well established), what problems testers face with this methodology, and how we cope with them.

A few words about myself: I am a tester. I have worked on projects of various scales, I have been the only tester on a project, and I have worked in teams that used different approaches and methodologies. In my experience, working by Lean Startup is great, but it has pitfalls for testing that are worth knowing about in advance.

First, a little about what our company does. Tutu.ru is a service for buying train, air and bus tickets, tours and other travel-related things online. One of our projects, Tours, is still under active development; it is very dynamic, and its functionality changes rapidly. Our POs (product owners) practice Lean Startup, and in particular we run a lot of experiments.

Lean Startup, the desires of managers and the reality of an ordinary tester

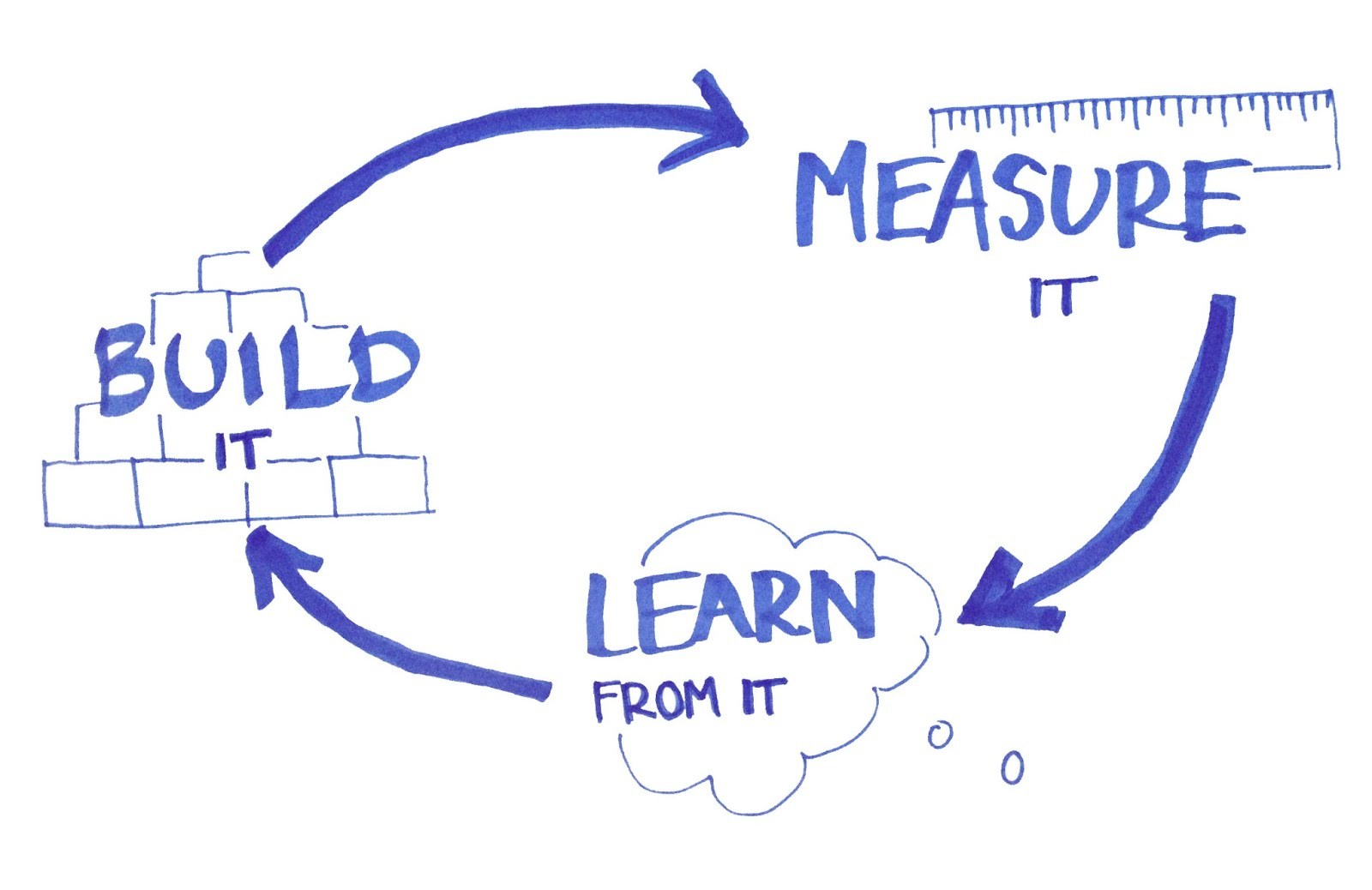

Of course, we are not exactly a startup, but the Lean Startup concept has a lot of interesting ideas, for us included. What does Lean Startup mean?

- Minimize unnecessary costs and save all possible funds.

- Involving every employee in creating the product. In other words, it is not only managers, POs or some other mythical people who should think about how much a decision costs, when something can be done faster, and when it is worth adding more functions and checking the result carefully, but also we ourselves. Each of us. Before testing, you should ask yourself: what is the purpose of this feature or project? Do not test for the sake of testing; test in line with the goals of the feature.

- Focus on the user and on getting the maximum amount of information about the customer per unit of time. Yes, we think about our users and care about them. We try to build things not just the way that is convenient for us, the engineers, from the technical side, but the way that is convenient for them: the people who will use our final product.

And to understand who our users are, what they want, what they like and what they don't, we use experiments with fast iterations.

The point of all this is to build the minimum viable functionality to test a hypothesis, and then use the analytics to find out whether the hypothesis (feature) is worth developing further. By experimenting, we reduce the risk of building features that nobody needs. At the same time, we need iterations to be as short as possible: get the necessary information as quickly as possible, then rework everything according to the data obtained, again as quickly as possible.

From the management's point of view, all this is of course great fun: we quickly build the necessary functionality, quickly check hypotheses and variants, and quickly change the functionality based on the results. But what are we testers supposed to do with all this, when we constantly hear: “Faster, even faster! This feature should have been in production yesterday!”

We can use many different techniques and keep increasing coverage indefinitely; that would ensure the maximum level of quality. And of course we would like our project and our new features to be of the highest quality. But all of this costs resources: effort, money and time.

With frequent experiments, we usually do not have these resources: we have to release quickly and analyze the results quickly. Besides, at this stage the project may not need the maximum level of quality that we would like to provide in our dreams. At the same time, we cannot throw testing out entirely or cut it drastically, because the new feature still has to meet a certain level of quality. But where is that boundary? How do we keep the balance? How do we scale testing to the needs of the project?

To do this, we use the following methods and tools:

- Business analysis of the task

- Risk management

- Gradations of quality

- Checklists for developers

- “Deferred” documentation

Let's look at each of these in more detail.

Business analysis of the task

Of course, we testers do not conduct a full-fledged business analysis: there are dedicated people for that, and it is a big job. But we try to apply it to our realities and our situation. We concentrate on the goals of the project.

For example, if we know that the number of users who buy tours for two adults and two infants (children under 2 years old) is close to zero, we most likely will not check what type of room the tour operator offers in this case, because nobody will be any better off for it. But we will definitely check the search and the offered room type for two adults and one child, because this happens much more often, and because searches with children tend to produce errors in general; you could say it is a boundary case.

Or, for example, when testing the API integration of a new supplier, we will not pay special attention to the prices of tours with business class flights (the main thing is that they are not substituted by default for economy ones!), because we know that in the entire history of our section only one tourist has wanted to fly business class when buying a tour.

The temptation to check clever cases is always great for a tester, but the main thing is not to lose touch with reality.

Risk management

The goal of quality assurance is to reduce risk. Even with unlimited resources, we would not be able to eliminate all risks completely.

When we are limited by time, money or anything else, we divide risks into two groups:

- Acceptable

- Unacceptable

The first category includes things that may not work or may work incorrectly; we may not even know that something is wrong with them (this is the worst part!), and we are fine with that. We can accept these risks for the sake of releasing tasks faster or for some other goal.

What belongs here? Some additional scenarios, places where, according to statistics, “almost no one clicks” (0.1% of users, for example), behavior in unpopular browsers, and so on.

The second category, unacceptable risks, covers what must definitely work and what we have to be sure of. We need to give the user minimal but working functionality. If we build a feature that should help the user cross a ravine and end up releasing half a bridge, that is no good, because it does not solve the user's problem. Such an experiment will always show a negative result; this is one of our unacceptable risks. But if we stretch a rope bridge with hand ropes across the ravine, the user can get to the other side, which means the user's problem is solved.

We manage acceptable risks and reduce unacceptable ones.
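The split into two groups can be sketched in code. This is only an illustration, not our actual tooling: the `Scenario` type, the `classify_risk` function and the sample shares are hypothetical, and the 0.1% threshold is simply borrowed from the example above. The rule that everything above the threshold counts as must-work is also an assumption made for the sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    user_share: float    # fraction of users who reach this scenario (0.001 = 0.1%)
    core_function: bool  # True if the feature does not solve the user's problem without it


def classify_risk(scenario: Scenario, rare_threshold: float = 0.001) -> str:
    """Sort a scenario into one of the two risk groups (assumed rule, not a standard)."""
    if scenario.core_function:
        return "unacceptable"   # must work, so it is always tested
    if scenario.user_share <= rare_threshold:
        return "acceptable"     # "almost no one clicks": may be skipped
    return "unacceptable"       # assumption: anything else is treated as must-work


# Made-up sample data, for illustration only.
scenarios = [
    Scenario("main purchase flow", 0.60, True),
    Scenario("unpopular browser", 0.001, False),
]
for s in scenarios:
    print(f"{s.name}: {classify_risk(s)}")
```

In practice the decision is made by people during planning, not by a function; the sketch only shows that the two inputs are how often a scenario happens and whether the feature is useless without it.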

Gradations of quality

The gradations follow from the previous section and were introduced for the convenience of both testers and POs. They set the rules of the game between us. We have 5 gradations of quality, each corresponding to a certain set of things we look at.

For example, at gradation 2 we need to make sure that the functionality behaves adequately in one browser, the most popular among our users, that the main user scenarios work, and that the main business function works.

At quality level 5, in addition to all of the above, we pay attention to:

- additional user scenarios and business functions;

- UI;

- display in all supported browsers and mobile devices;

- the maximum level of automation of the main and additional user scenarios;

- testability

This is also convenient because you can set a different, well-defined level of quality for different projects and even for different tasks.
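The two gradations described above can be written down as data, which makes the "rules of the game" easy to share between testers and POs. This is a minimal sketch: the names `LEVEL_2_CHECKS`, `LEVEL_5_EXTRA_CHECKS` and `checks_for_level` are hypothetical, and levels 1, 3 and 4 are left out because they are not described in this article.

```python
from typing import Tuple

# Checks named in the text for gradation 2.
LEVEL_2_CHECKS: Tuple[str, ...] = (
    "one browser, the most popular among our users",
    "main user scenarios",
    "main business function",
)

# Checks that level 5 adds "in addition to all of the above".
LEVEL_5_EXTRA_CHECKS: Tuple[str, ...] = (
    "additional user scenarios and business functions",
    "UI",
    "display in all supported browsers and mobile devices",
    "maximum automation of main and additional user scenarios",
    "testability",
)


def checks_for_level(level: int) -> Tuple[str, ...]:
    """Return the list of things to look at for a quality gradation."""
    if level == 2:
        return LEVEL_2_CHECKS
    if level == 5:
        return LEVEL_2_CHECKS + LEVEL_5_EXTRA_CHECKS
    # Levels 1, 3 and 4 are not described in the article, so they are not guessed here.
    raise ValueError(f"gradation {level} is not described in this sketch")


for check in checks_for_level(5):
    print("-", check)
```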

For experimental tasks, the quality level is set lower, and if we decide to develop such tasks further, the quality gradation grows with them. For example, we assume that users will find it interesting and useful if we show them prices for the coming days and months, as well as for similar countries (compared to the current search). But technically this task is quite complex, so we build and launch it in demo mode, so to speak, to see whether our users will be interested. Suppose the task reaches testing in this form. And we suddenly find that if the search is for two people (our default and most popular option), the prices for the coming months and similar countries are adequate and correct. But if you search for three people or one, then, oops, things are not so rosy. At this stage we, as testers, consult the developers, learn that the fix is quite laborious, and decide to release the task as it is.

Next, we collect analytics and see that about 40% of our visitors use this new block. Then we start the next iteration of the task, and this time the quality gradation will be higher: we have realized that the functionality needs to be developed, and we can no longer afford “wrong prices” when searching for a different number of people.

Here another question may arise: which scenario is the main one in this case, and which is additional?

If we go back to Lean, it all depends very much on what stage the project is at.

The figure shows the stages of a project according to Lean Analytics on the left, and on the right the so-called “gates” that you must pass to move on to the next stage. Here is a brief description of these stages:

- Empathy. The purpose of this stage is to tell potential customers about the product you are developing and your experiments. The gate here is a clear definition of a real, current and unsolved problem. Once you have settled on this, you can move on.

- Stickiness. Now build the kind of feature that will make users come back. The gate is a formulated solution to the problem that people are ready to pay for.

- Virality. Maximize the number of users (preferably with no or minimal use of paid channels). The gate: the number of users is growing, as are the quality of and demand for the features.

- Revenue. The project should bring in money, and more than you spend on it. The gate: you have found your niche in the market.

Thus, to understand which scenarios currently count as main and which as additional, consider what stage the project is at now.

For example, at the Stickiness stage various “hooks” and distinctive features will be important to us, while at the Virality stage we cannot do without SEO. If we know what the project's priorities are at the moment, we can approach quality assurance more effectively and put in the effort only where it is really needed.

Checklists for developers (acceptance criteria)

Here we will talk about speeding up the whole cycle of work on a task.

In a perfect world, every team member is responsible for quality. No raw tasks reach testing. Testers do not find critical bugs, because the developers have already taken everything into account. Our world is not perfect. We kept running into tasks that arrived with what seemed like obvious bugs. But nothing is obvious: such situations were obvious only to us, the testers.

Developers think differently, which is normal and natural. That is why we would like to bring a part of our “vision” of the project in as early as possible, preferably before testing even starts.

We found a way: we use so-called “acceptance criteria”.

This checklist is a list of checks with specific parameters, data and links, along with a description of the expected behavior. We try to minimize the number of passes and pack as many checks as possible into each one, so that the checklist is easier, faster and more fun to use. The checklist should be simple and concise enough that developers do not skip items. It almost never contains negative checks. Writing a checklist makes sense only while the developer has not yet finished the task, because our goal is the minimum number of switches from task to task. If we did not manage to write the checklist in time, we simply do not write it at all.

Also, the fact that a developer has passed the checklist does not mean that we can skip those things during testing. It is always worth a quick run through them, just in case. This is where the difference in how we perceive the product comes into play.

An example of a fragment of one of our checklists:

- Go to /hotel/3442/ (page without search parameters)

- There is no "Send a link to tours" banner

- Go to XXX (sample link to a hotel page with parameters)

- The banner is present

- You can go to the next step by clicking on the offer price

- There are notes about the possibility of partial payment and about what is included in the tour

- Opposite them, under the price buttons, there is a banner with the pseudo-link “Find out if the price decreases”; the percentage in it is active and turns red on hover

- Click on the link “Show more N offers”

- Below the subscription, already outside the locale in the right column, there is a banner with the text "Send a link to tours to this hotel by e-mail"
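One way to read the fragment above is as a small number of passes (pages to open or actions to perform), each carrying several checks. The sketch below models that shape; the `ChecklistPass` type and the helper functions are hypothetical, the grouping of checks under passes is our reading of the fragment, and `XXX` is kept as the placeholder it is in the original.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ChecklistPass:
    action: str                                      # what the developer does
    checks: List[str] = field(default_factory=list)  # what should be true afterwards


# Two passes taken from the fragment above.
checklist = [
    ChecklistPass(
        "Go to /hotel/3442/ (page without search parameters)",
        ["no banner offering to send a link to tours"],
    ),
    ChecklistPass(
        "Go to XXX (hotel page with search parameters)",
        [
            "the banner is present",
            "clicking an offer price leads to the next step",
        ],
    ),
]


def total_checks(passes: List[ChecklistPass]) -> int:
    """Count all checks; few passes with many checks each is the goal."""
    return sum(len(p.checks) for p in passes)


def render(passes: List[ChecklistPass]) -> str:
    """Render the checklist as an indented plain-text list."""
    lines = []
    for p in passes:
        lines.append(f"- {p.action}")
        lines.extend(f"    - {c}" for c in p.checks)
    return "\n".join(lines)


print(render(checklist))
print("checks:", total_checks(checklist), "in", len(checklist), "passes")
```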

We have been using checklists for more than a year and have already been able to draw some conclusions. Both testers and developers say that checklists are definitely useful and convenient. So what are the advantages?

Why do testers like it?

- Fewer raw tasks.

- Testers start working on the task earlier.

When a development methodology is agile, the project usually has very little documentation and few specifications. If the project is dynamic and the POs are constantly experimenting (as in our case), then “upfront” documentation does not make much sense: usually we only have user experience and approximate solutions. Therefore, “requirements testing” as a separate stage simply does not exist. But it is important for testers to take part in shaping requirements, and we do this exactly at the stage of creating checklists for developers. Of course, there are also separate meetings to discuss tasks and mockups, but even that is not enough.

- Testing tasks with checklists takes less time than testing similar ones without checklists.

- We learn to formulate things concretely and clearly, and we highlight problem areas in the product.

- We have the opportunity to look at the problem from different points of view.

It often happens that one person tests a task and another writes the checklist for it. We all have slightly different styles, views and testing approaches. Getting to look at a task with “fresh” eyes is very healthy and useful.

Why do developers like it?

- a clear list of scenarios with specific data to check;

- preliminary analysis of the task;

- developers get statistics and feedback.

We keep track of the nature of the bugs found via checklists and can say that, for example, someone tends to make mistakes of a certain kind: logic, code, and so on. When people know where they go wrong, they have something to work on, and it is easier to fix their mistakes.

What we all like: we do not toss the task between development and testing a hundred thousand times. Many things get fixed at the checklist stage. And we are getting closer to the ideal world, where everything is taken into account and done right the first time. Maybe one day.

On the downside, time is still spent on writing checklists and going through them. But judging by our statistics, this takes less time than bouncing tasks between testing and development without them. Testers write one checklist in half an hour on average, and developers go through it in 10-30 minutes (depending on the complexity of the task).

In addition, not all tasks in our sprints “deserve” checklists. During planning, we assess whether a task needs a checklist or not. As a result, we have 6-7 checklists per sprint (on average, we do about 30 tasks per sprint). About 67% of checklists were passed successfully (the developers did not find bugs with them). In 35% of tasks with checklists, no bugs were found during testing.
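A rough back-of-the-envelope estimate of what checklists cost per sprint follows from the averages above. The sketch uses only the figures already given (half an hour to write, 10-30 minutes to pass, 6-7 checklists, about 30 tasks); the constant and function names are made up for the example.

```python
WRITE_MINUTES = 30               # a tester writes one checklist in about half an hour
PASS_MINUTES = (10, 30)          # a developer passes it in 10-30 minutes
CHECKLISTS_PER_SPRINT = (6, 7)   # checklists per sprint
TASKS_PER_SPRINT = 30            # average number of tasks per sprint


def sprint_cost_hours():
    """Lower and upper estimate of total checklist time per sprint, in hours."""
    low = CHECKLISTS_PER_SPRINT[0] * (WRITE_MINUTES + PASS_MINUTES[0]) / 60
    high = CHECKLISTS_PER_SPRINT[1] * (WRITE_MINUTES + PASS_MINUTES[1]) / 60
    return low, high


def checklist_share():
    """Lower and upper share of sprint tasks that get a checklist."""
    return tuple(n / TASKS_PER_SPRINT for n in CHECKLISTS_PER_SPRINT)


low, high = sprint_cost_hours()
share_low, share_high = checklist_share()
print(f"Checklist cost per sprint: {low:.1f}-{high:.1f} hours")
print(f"Tasks with a checklist: {share_low:.0%}-{share_high:.0%}")
```

That comes to roughly 4 to 7 hours of combined tester and developer time per sprint, spent on about 20-23% of the tasks.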

"Deferred" documentation

We do not aim to write detailed test cases for all new features. The reason is the same: experimental tasks. We do not want to write documentation that nobody will need. It is better to describe the functionality once it is known for sure that it will be developed further. We write cases without too much detail to make them easier to maintain. In addition, we think twice before writing a case, again to avoid wasting time.

All of the tools above really work in our reality; we have checked.

They help us maintain a balance between quality and speed.

→ Based on my talk at SQA Days-19

A video of the talk can be seen here:

Source: https://habr.com/ru/post/320326/