Multithreading (concurrency) in Swift 3. GCD and Dispatch Queues

It must be said that multithreading (concurrency) in iOS always comes up in interviews for iOS developer positions, and concurrency mistakes are among the most common errors programmers make when developing iOS applications. Therefore, it is important to master this tool thoroughly.




So, you have an application that runs on the main thread, which is responsible for executing the code that displays your user interface (UI). As soon as you start adding time-consuming work to the main thread, such as loading data from the network or processing images, your UI starts to slow down noticeably and may even freeze completely.

How can you change the architecture of the application so that such problems do not arise? This is where multithreading (concurrency) comes to the rescue: it allows you to perform two or more independent tasks simultaneously, such as calculations, downloading data from the network or from disk, and image processing. At any given moment a processor core executes one of your tasks on the thread allocated to it.

On a single-core processor, multithreading (concurrency) is achieved by rapidly switching between the threads on which tasks are performed, which creates the illusion that the tasks run simultaneously. On a multi-core processor, each thread associated with a task can be given its own core, so the tasks really do run at the same time. Both approaches rely on the same general concept of concurrency.

A kind of fee for introducing multithreading in your application is the difficulty of ensuring safe execution of code on different threads (

thread safety ). As soon as we allow tasks to work in parallel, problems arise because different tasks will want to access the same resources, for example, they will want to change the same variable in different threads, or they will want to access to resources that are already blocked by other tasks. This can lead to the destruction of the resources used by tasks on other threads.In iOS programming, multi-threading is provided to developers in the form of several tools: Thread , Grand Central Dispatch (GCD for short) and Operation - and is used to increase the performance and responsiveness of the user interface. We will not consider

Thread , since this is a low-level mechanism, and focus on GCD in this article and Operation (object-oriented API built on top of GCD ) in further publication.I must say that before

Swift 3 such a powerful framework as Grand Central Dispatch (GCD ) had an API based on C, which at first glance seems like a book of spells, and it is not immediately clear how to mobilize its ability to perform useful user tasks.In

Swift 3 everything changed dramatically. GCD has a completely Swift syntax that is very easy to use. If you are at least a little familiar with the old API GCD , then the whole new syntax will seem just a cakewalk; if not, then you just have to learn another ordinary programming section on iOS . The new GCD framework works in Swift 3 on all Apple devices, starting with Apple Watch , including all iOS devices, and ending with Apple TV and Mac .Another good news is that since

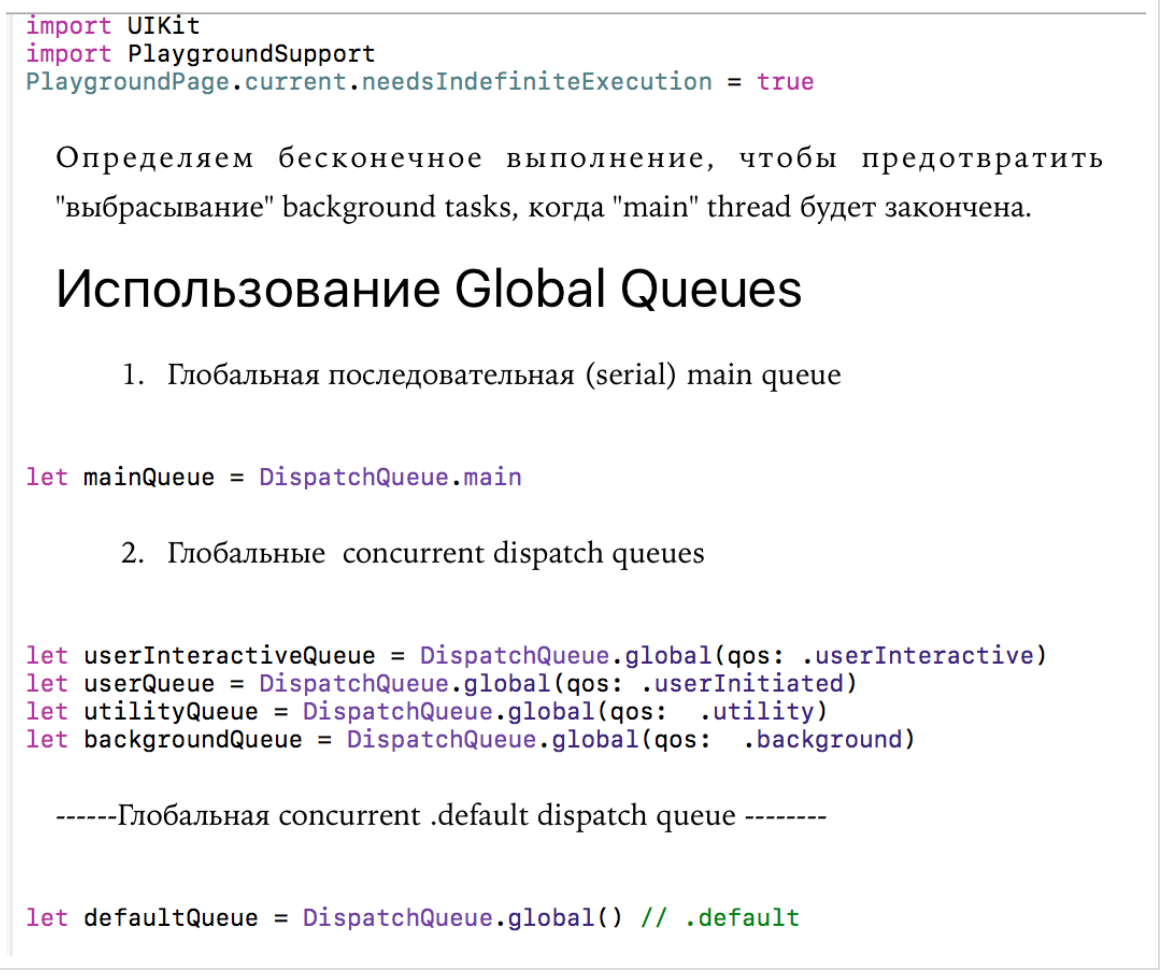

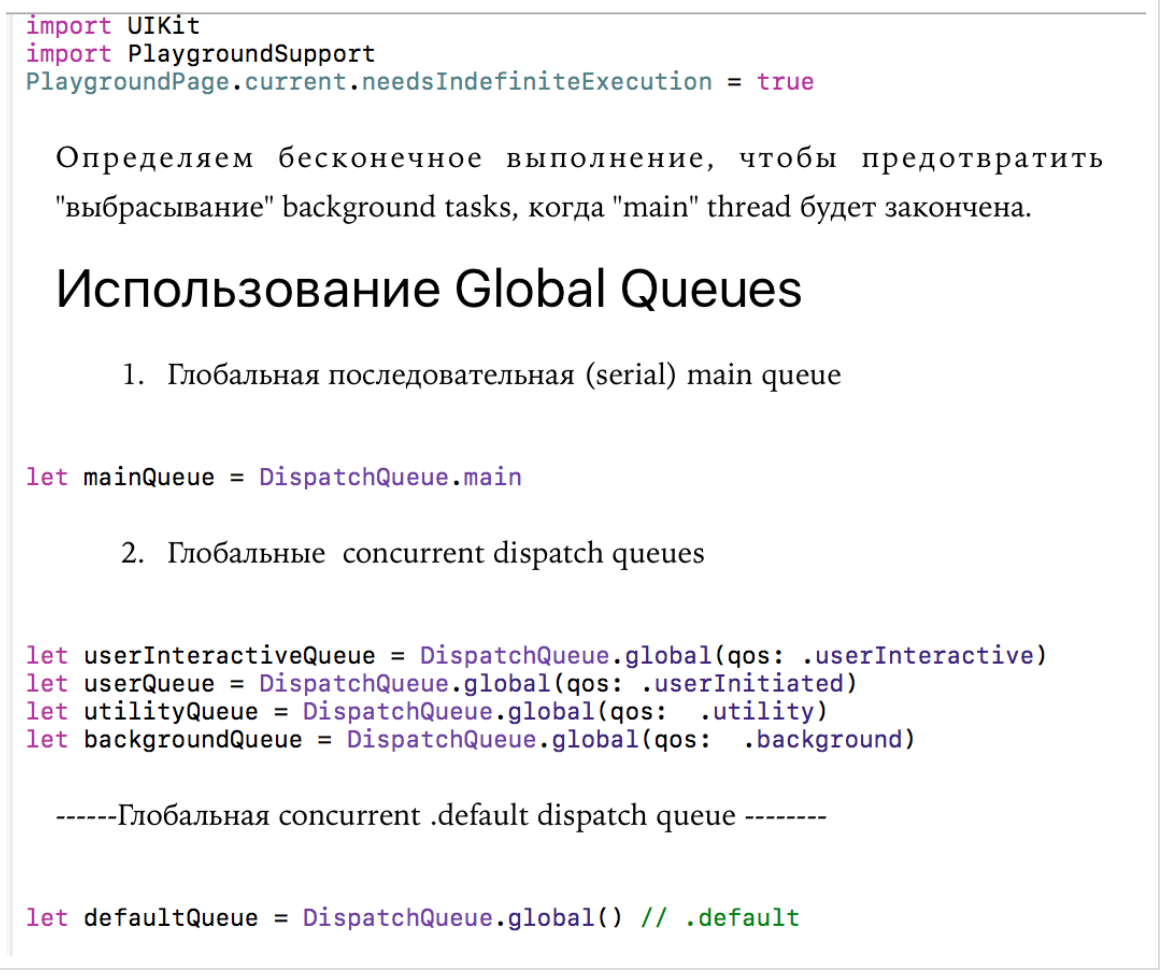

Xcode 8 , you can use such a powerful and visual tool as Playgroud to learn GCD and Operation . In Xcode 8 a new auxiliary class PlaygroudPage , which has a function that allows Playgroud to live for an unlimited time. In this case, the DispatchQueue queue may work until the work is completed. This is especially important for network requests. In order to use the PlaygroudPage class, you need to import the PlaygroudSupport module. This module also allows you to access the run loop , display the live UI , and perform asynchronous operations on Playgroud . Below we will see how this setting looks like in the work. This new Playground feature in Xcode 8 makes learning to use multithreading in Swift 3 very simple and intuitive.For a better understanding of multithreading (

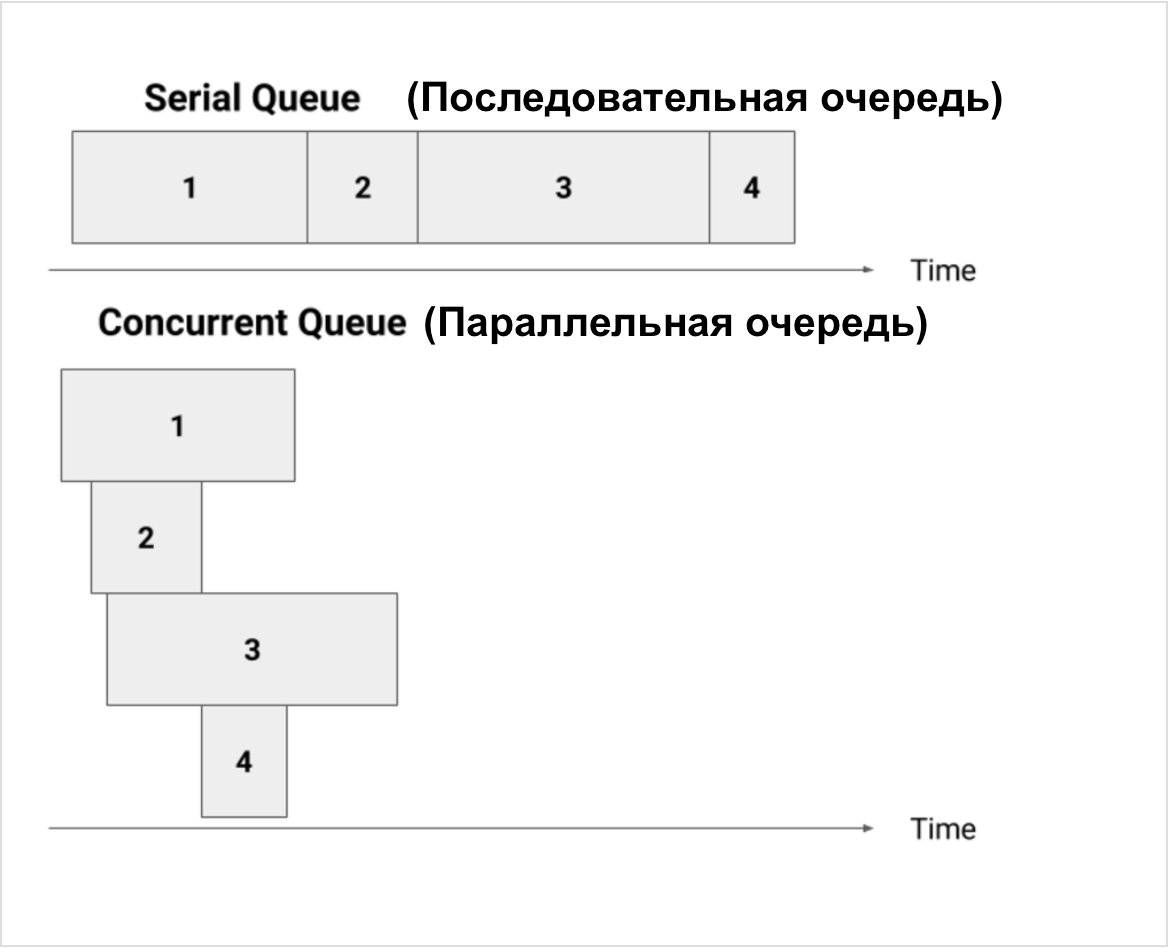

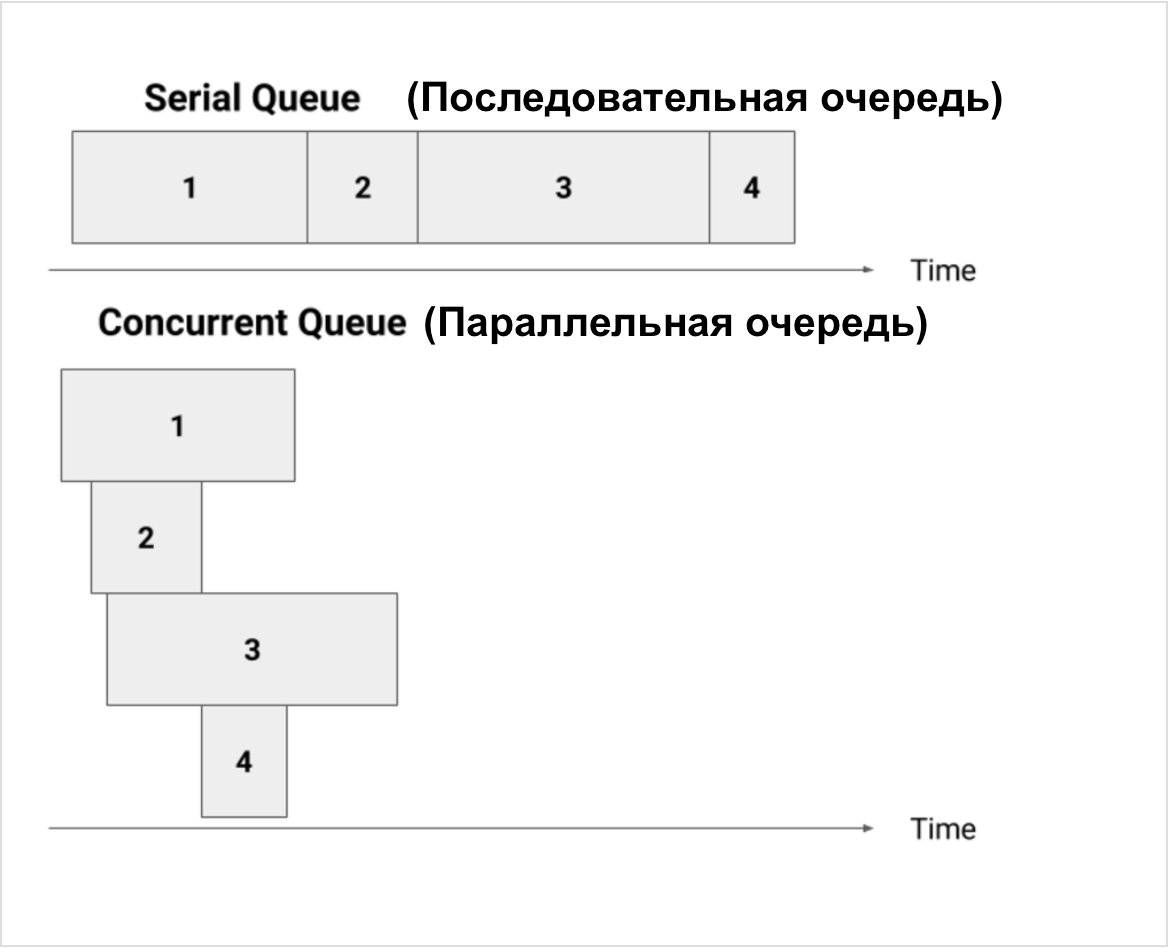

concurrency ), Apple introduced some abstract concepts with which both tools operate - GCD and Operation . The basic concept is the queue . Therefore, when we talk about multithreading in iOS from the point of view of an iOS application developer, we are talking about queues. Queues are the usual queues in which people line up to buy, for example, a cinema ticket, but in our case closures are lined up ( closure — anonymous code blocks). The system simply executes them according to the queue, “pulling out” the next in turn and starting it up for execution in the thread corresponding to this queue. Queues follow the FIFO pattern ( First In, First Out ), which means that the one who was first put in the queue will be the first to be sent. You can have multiple queues ( queues ) and the system “pulls” the closures one at a time from each queue and starts them to run in their own threads. So you get multithreading.But this is just a general idea of how multithreading (

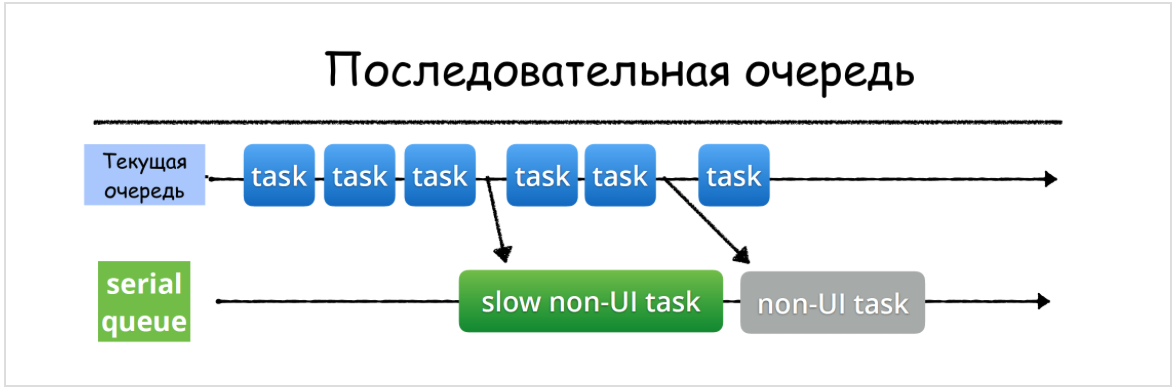

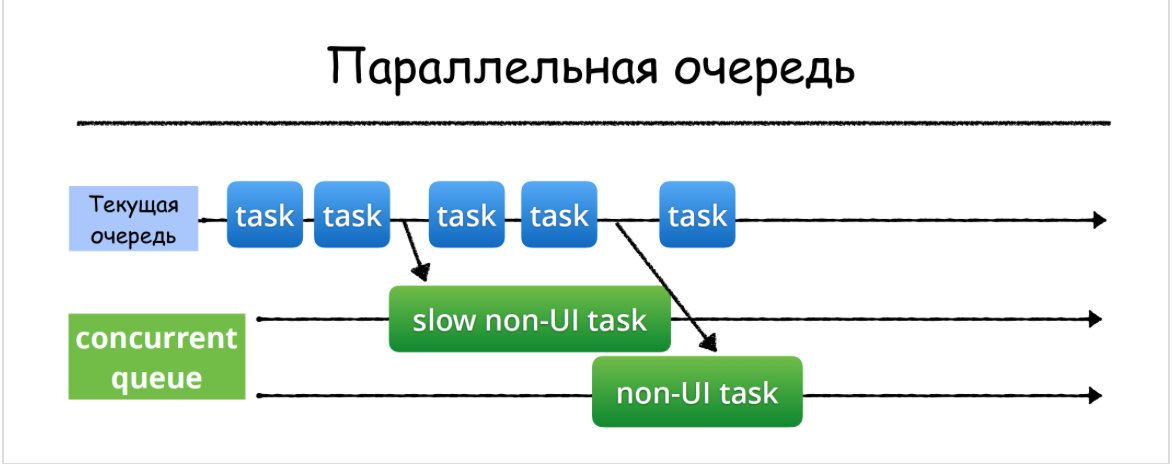

oncurrency ) works in iOS . The intrigue lies in the fact that they represent these queues in the sense of performing tasks in relation to each other (serial or parallel) and with the help of which function (synchronous or asynchronous) these tasks are placed in the queue, thereby blocking or not blocking the current queue.Serial ( serial ) and parallel ( concurrent ) queues

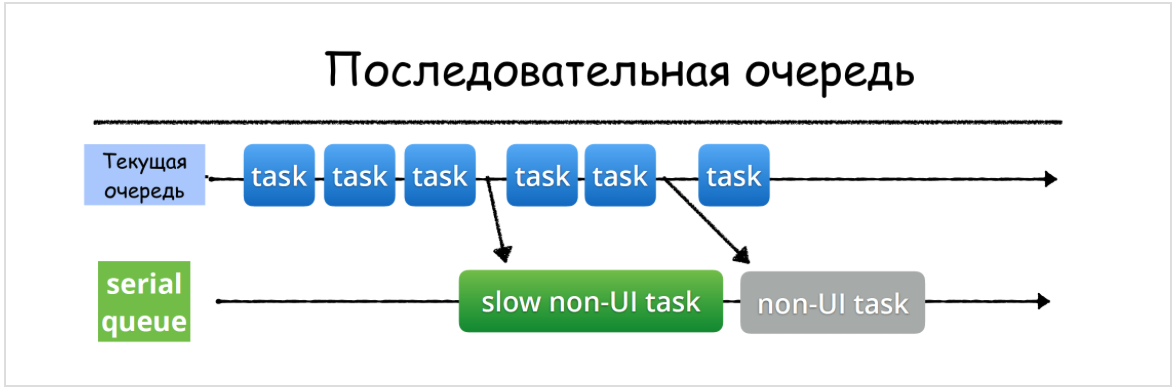

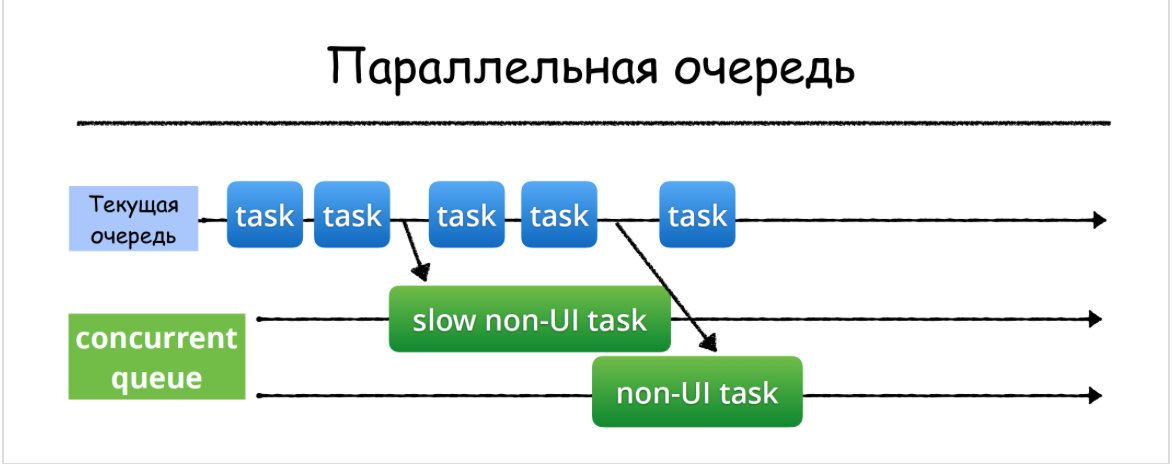

Queues can be “

serial ” (consecutive) when a task (closure) that is at the top of the queue is pulled out by iOS and works until it ends, then the next item is pulled from the queue, etc. This is a serial queue or serial queue. Queues can be “c oncurrent ” (multi-threaded) when the system “pulls” the closure located at the top of the queue and starts it to run on a specific thread. If the system still has resources, then it takes the next element from the queue and starts it to run on another thread while the first function is still running. And so the system can extend a range of functions. In order not to confuse the general concept of multithreading with "concurrent queues" (multi-threaded queues), we will call the "concurrent queue" parallel queue, meaning the order of execution of tasks on it relative to each other, without going into the technical implementation of this parallelism.
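A minimal sketch of the difference between the two kinds of queue, assuming a command-line Swift program rather than a Playground (the queue labels are made up for the example). Each task sleeps a little less than the one submitted before it, so on the serial queue they still finish in submission order, while on the parallel queue the later, shorter tasks can finish first.

```swift
import Foundation
import Dispatch

// Hypothetical labels chosen for this sketch.
let serialQueue = DispatchQueue(label: "com.example.serial")   // serial by default
let concurrentQueue = DispatchQueue(label: "com.example.concurrent",
                                    attributes: .concurrent)

let lock = NSLock()
var serialFinished: [Int] = []
var concurrentFinished: [Int] = []
let group = DispatchGroup()

for i in 0..<5 {
    // Serial queue: each closure starts only after the previous one ends.
    serialQueue.async(group: group) {
        usleep(UInt32(10_000 * (5 - i)))   // earlier tasks sleep longer
        lock.lock(); serialFinished.append(i); lock.unlock()
    }
    // Parallel queue: closures may overlap, so shorter (later) tasks
    // can finish before longer (earlier) ones.
    concurrentQueue.async(group: group) {
        usleep(UInt32(10_000 * (5 - i)))
        lock.lock(); concurrentFinished.append(i); lock.unlock()
    }
}

group.wait()   // block until all ten closures have finished
print("serial:    ", serialFinished)       // always [0, 1, 2, 3, 4]
print("concurrent:", concurrentFinished)   // order depends on scheduling
```

The lock only protects the two result arrays; it does not affect the order in which the queues run their closures.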

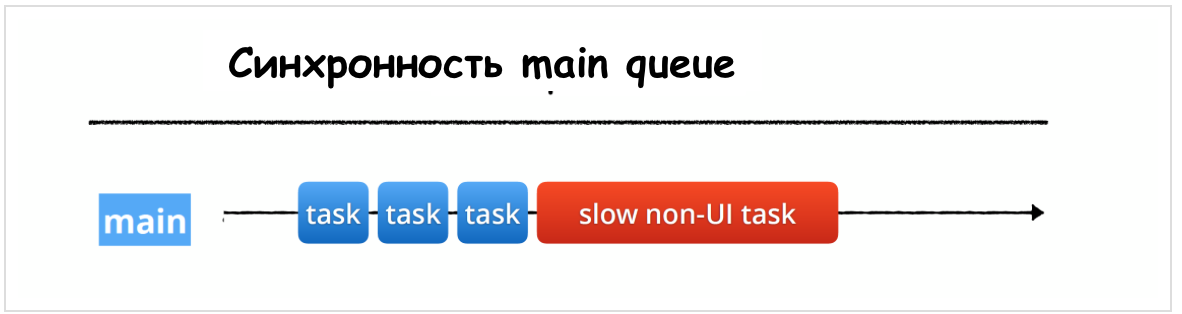

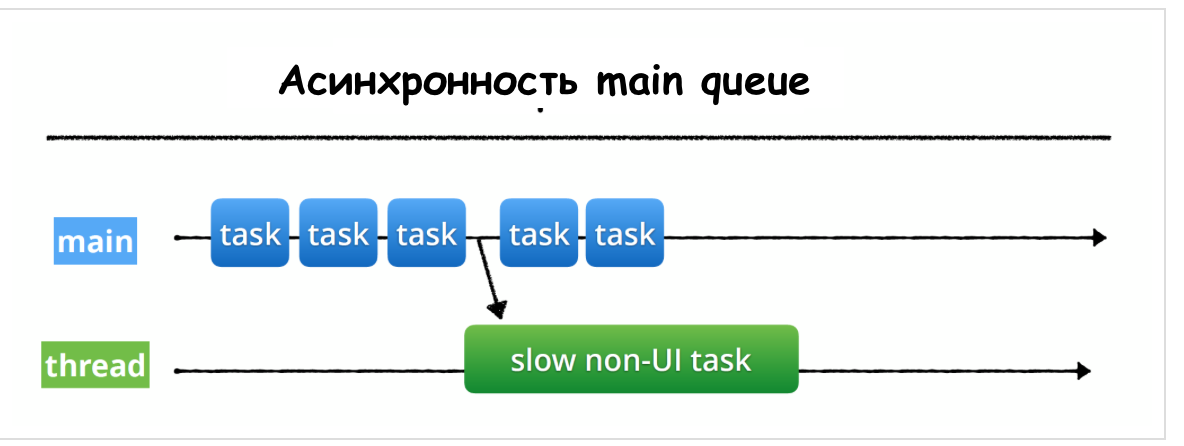

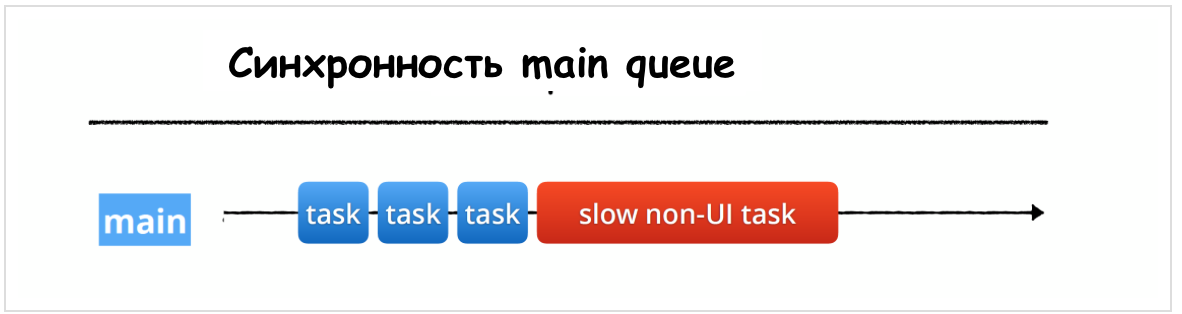

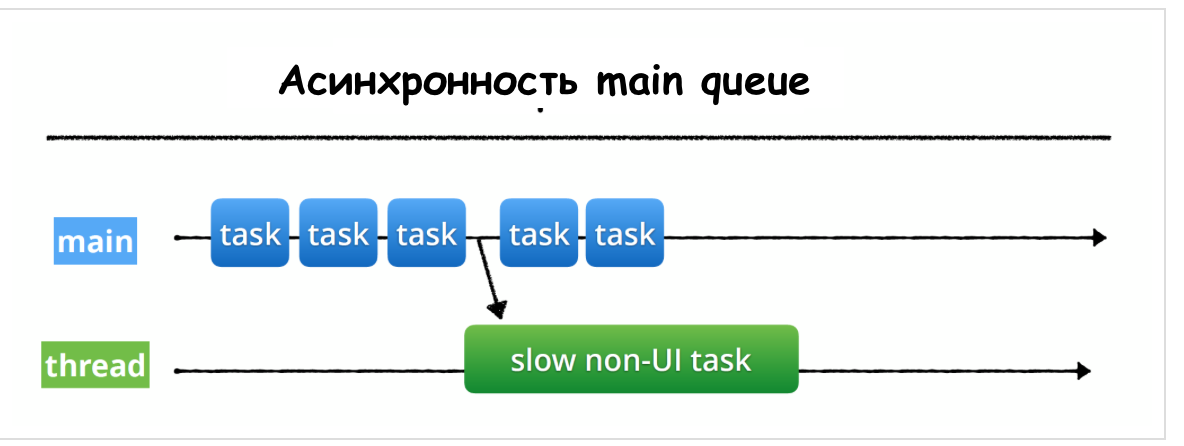

We see that on the

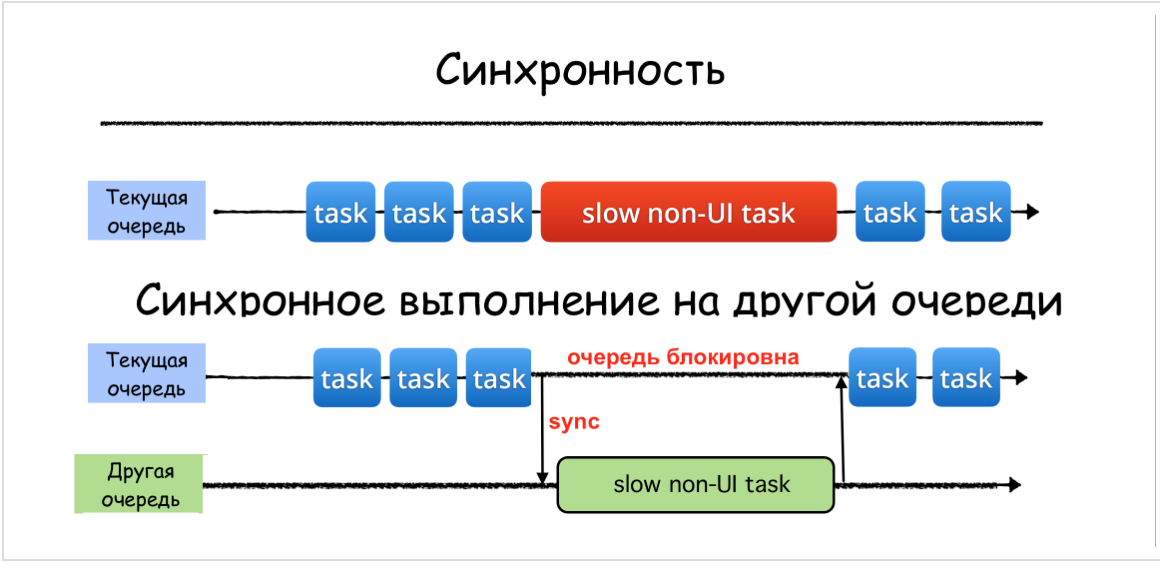

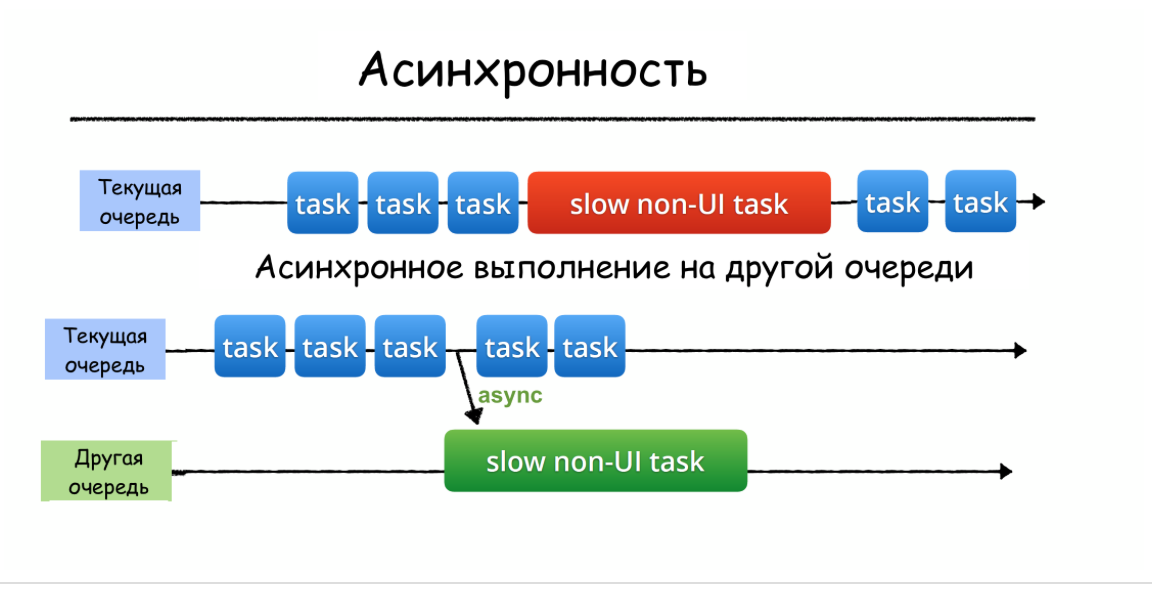

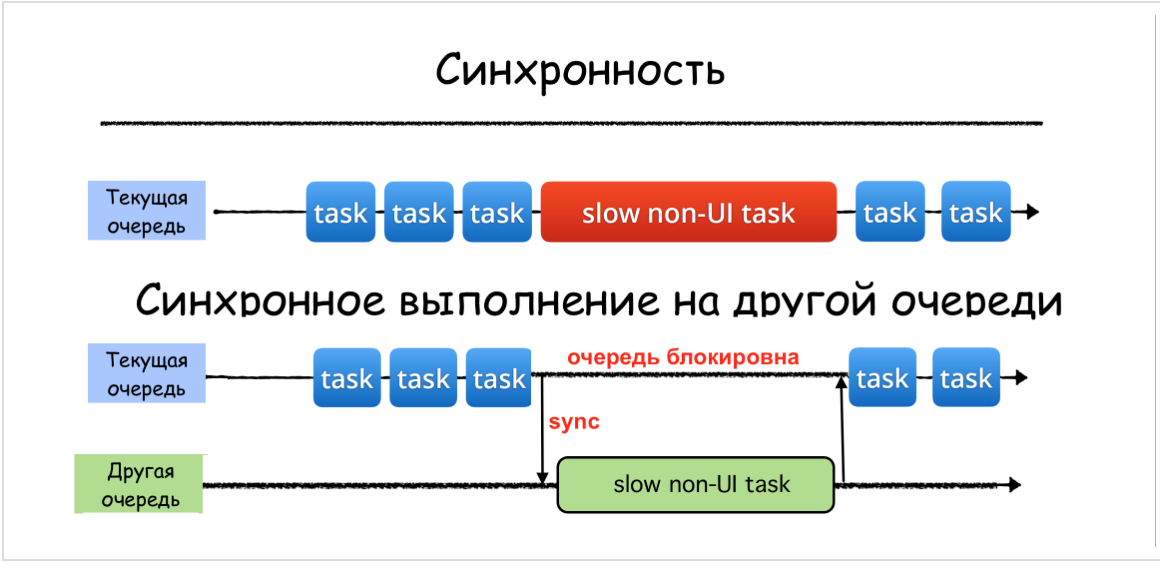

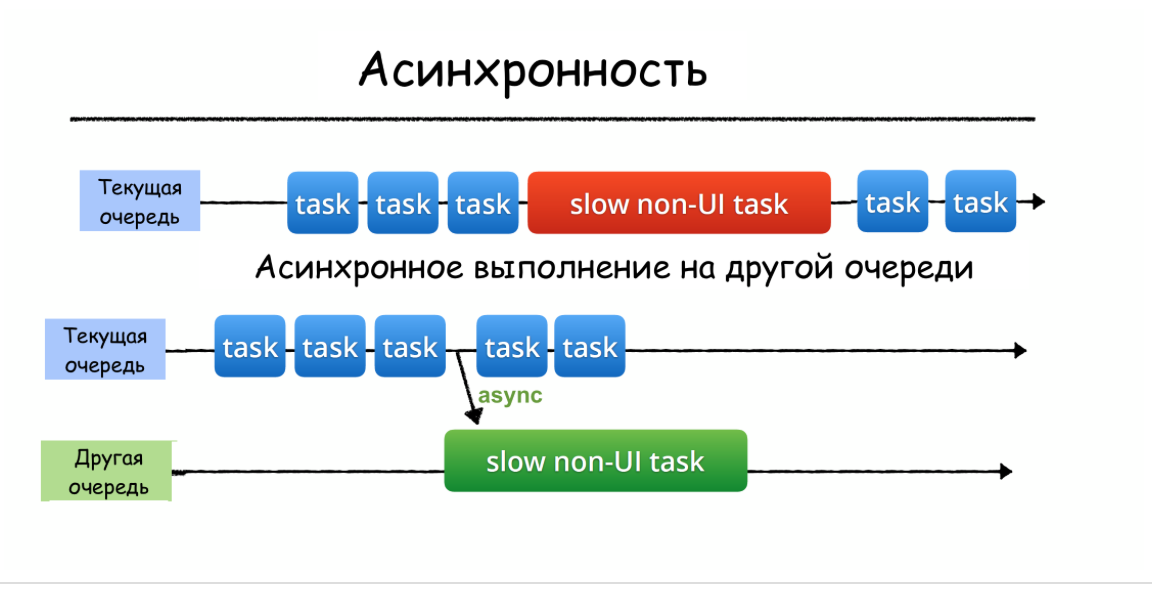

serial (sequential) queue, the completion of closures occurs strictly in the order in which they arrived at execution, while on the concurrent (parallel) queue, the tasks end in an unpredictable way. In addition, you can see that the total execution time of a certain group of tasks on the serial queue is much greater than the time to complete the same group of tasks on a concurrent queue. On the serial (serial) queue at any current time, only one task is executed, and on a concurrent (parallel) queue, the number of tasks at any current time can vary.Synchronous and asynchronous execution of tasks

Once the

queue created, the task can be placed on it using two functions: sync - synchronous execution with respect to the current queue and async - asynchronous execution with respect to the current queue.The

sync function returns control to the current queue only after the task has completed, thereby blocking the current queue:

The async function, in contrast to sync, returns control to the current queue immediately after starting the task on another queue, without waiting for it to complete. Thus, async does not block the execution of tasks on the current queue.
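The difference between sync and async can be sketched as follows, again assuming a command-line program and a made-up queue label. The semaphore is there only so that the example can observe the async result before the program exits.

```swift
import Foundation
import Dispatch

let otherQueue = DispatchQueue(label: "com.example.other")   // hypothetical label

var syncDone = false
otherQueue.sync {
    usleep(50_000)        // simulate work
    syncDone = true
}
// sync has returned, so its closure is guaranteed to have finished.
print("after sync:", syncDone)      // after sync: true

var asyncDone = false
let finished = DispatchSemaphore(value: 0)
otherQueue.async {
    usleep(50_000)
    asyncDone = true
    finished.signal()
}
// async returned immediately; its closure is most likely still running here.
print("right after async returned")
finished.wait()                     // wait only to observe the result
print("after waiting:", asyncDone)  // after waiting: true
```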

With asynchronous execution, the “other queue” can be either a serial queue or a parallel (concurrent) queue.

The task of the developer is only to select the queue and add the task (usually the closure) to this queue synchronously using the

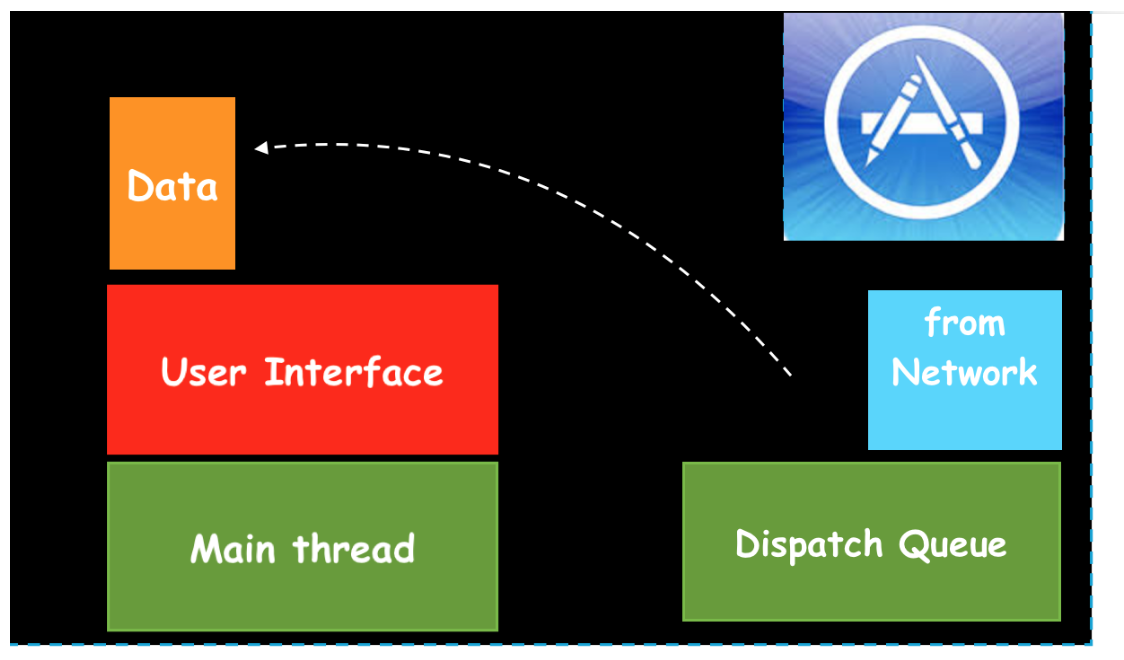

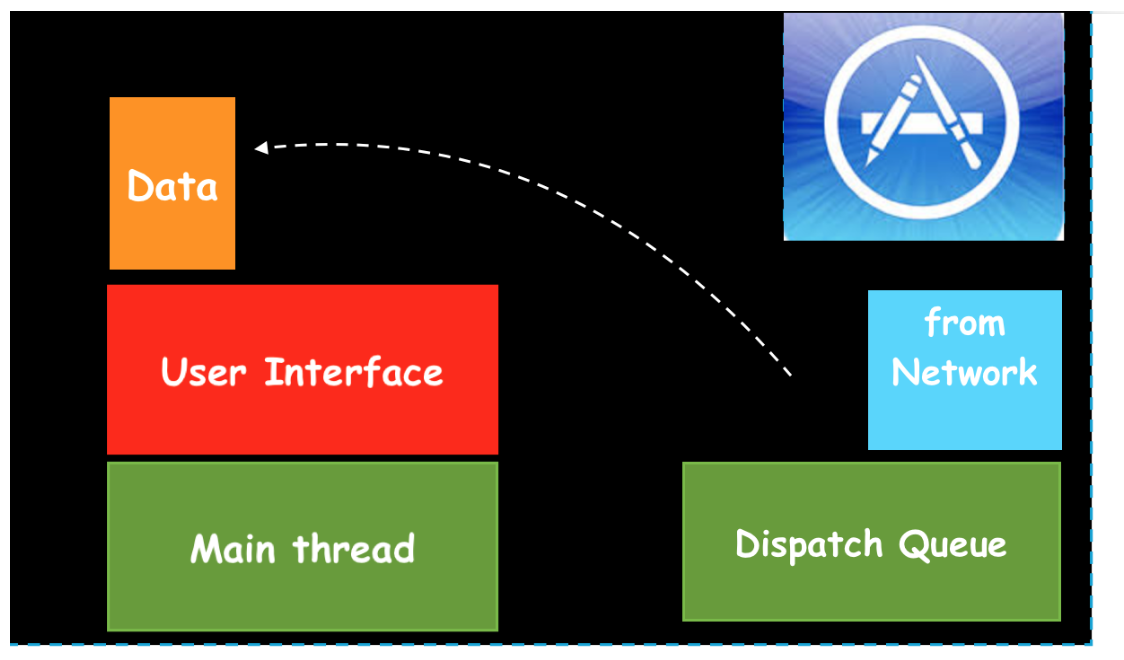

sync function or asynchronously using the async function, then iOS only works.Returning to the task presented at the very beginning of this post, we will switch the task of receiving data from the Data from Network to another queue:

After getting the

Data from the network to another Dispatch Queue , we send it back to the Main thread .

When we receive

Data data from the network on another Queue DispatchQueue , Main thread is free and serves all events that occur on the UI . Let's see what the real code looks like for this case:

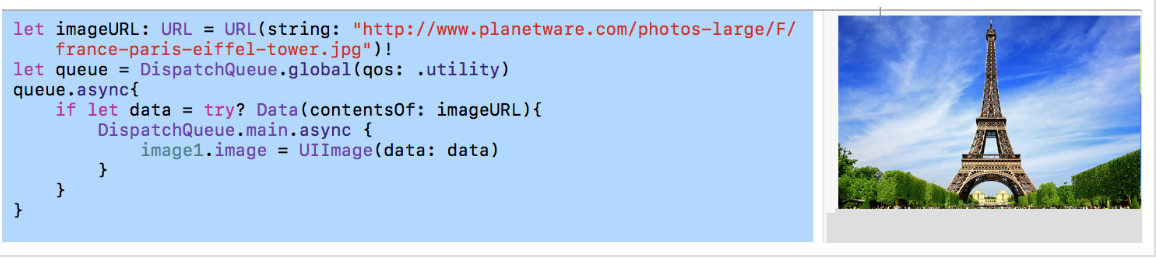

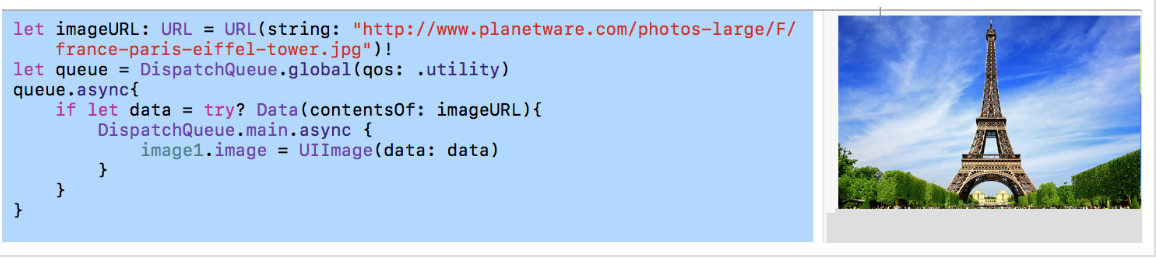

To load imageURL, which can take considerable time and block the Main queue, we ASYNCHRONOUSLY switch this resource-intensive task to a global parallel queue with quality of service (qos) .utility (more on this later):

```swift
import UIKit

// Assumption: `image` is the UIImageView displayed in the playground.
let image = UIImageView()

let imageURL: URL = URL(string: "http://www.planetware.com/photos-large/F/france-paris-eiffel-tower.jpg")!
let queue = DispatchQueue.global(qos: .utility)
queue.async {
    // Background thread: the blocking download does not freeze the UI.
    if let data = try? Data(contentsOf: imageURL) {
        // Back on the Main queue to update the UI.
        DispatchQueue.main.async {
            image.image = UIImage(data: data)
            print("Show image data")
        }
        print("Did download image data")
    }
}
```

After receiving the data we return to the Main queue to update our UI (image.image) with it. You can see how easy it is to build a chain that switches to another queue in order to “divert” “expensive” tasks away from the Main queue and then return to it. The code is in EnvironmentPlayground.playground on Github.

Notice that switching expensive jobs from the Main queue to another thread is always ASYNCHRONOUS. You need to be very careful with the sync method on queues, because the “current thread” has to wait for the job on the other queue to complete. NEVER call the sync method on the Main queue from the Main queue itself, because it will deadlock your application! (More on that below.)

Global queues

In addition to custom queues, which have to be created explicitly, the iOS system provides the developer with ready-made (out-of-the-box) global queues. There are five of them:

1.) the serial Main queue, in which all operations with the user interface (UI) take place:

```swift
let main = DispatchQueue.main
```

If you want to perform a function or closure that does something with the user interface (UI), for example with a UIButton or any other UI element, you must put this function or closure on the Main queue. This queue has the highest priority among global queues.

2.) four background concurrent (parallel) global queues with different quality of service (qos) and, accordingly, different priorities:

```swift
// Global concurrent queues, from highest to lowest priority
let userInteractiveQueue = DispatchQueue.global(qos: .userInteractive)
let userInitiatedQueue = DispatchQueue.global(qos: .userInitiated)
let utilityQueue = DispatchQueue.global(qos: .utility)
let backgroundQueue = DispatchQueue.global(qos: .background)
// Global concurrent queue with the default qos
let defaultQueue = DispatchQueue.global()
```

Apple assigned each of these queues an abstract “quality of service”, qos (short for Quality of Service), and we must decide which one fits our tasks. Below are the various qos values and what they are for:

- .userInteractive: for tasks that interact with the user right now and take very little time, such as animations that must happen instantly. The user does not want this done on the Main queue, but it should be done as quickly as possible, because the user is interacting with the app at this very moment. Imagine a user dragging a finger across the screen while you need to compute something involving intensive image processing, and you put that computation on this queue. The user keeps moving the finger; the result lags a little behind the finger's position because the calculation takes time, but at least the Main queue keeps “listening” to the touches and reacting to them. This queue has a very high priority, but lower than that of the Main queue.
- .userInitiated: for tasks that are initiated by the user and require feedback, but not inside an interactive event; the user is waiting for the result to continue interacting. Such tasks may take a few seconds; the priority is high, but lower than that of the previous queue.
- .utility: for tasks that take some time to complete and do not require immediate feedback, for example loading data or cleaning up a database. The user did not ask for this work, but the application needs it. Such a task can take from a few seconds to a few minutes; the priority is lower than that of the previous queue.
- .background: for tasks that are not related to visualization and are not time-critical, for example backups or synchronization with a web service. This is what usually runs in the background, when nobody needs anything done urgently. Such a task can take considerable time, from minutes to hours; it has the lowest priority among all global queues.
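Outside a Playground, the download-then-update chain shown earlier can be sketched without UIKit. In this sketch (an assumption, not the article's code) fakeDownload is a hypothetical stand-in for Data(contentsOf:), and a private serial queue plays the role of DispatchQueue.main, because in a command-line program the main queue only runs its closures if the main thread calls dispatchMain() or runs a run loop.

```swift
import Foundation
import Dispatch

// Hypothetical stand-in for DispatchQueue.main in a command-line program.
let uiQueue = DispatchQueue(label: "com.example.ui")
let done = DispatchGroup()
var shownBytes = 0

// Hypothetical stand-in for a slow Data(contentsOf: imageURL) call.
func fakeDownload() -> Data {
    usleep(100_000)
    return Data(repeating: 0xFF, count: 1024)
}

done.enter()
DispatchQueue.global(qos: .utility).async {
    let data = fakeDownload()     // expensive work off the "UI" queue
    uiQueue.async {               // hop back to update the "UI"
        shownBytes = data.count
        done.leave()
    }
}

done.wait()
print("UI updated with", shownBytes, "bytes")   // UI updated with 1024 bytes
```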

There is also a global concurrent queue with the default quality of service, .default, which signals that there is no qos information. It is obtained with DispatchQueue.global(). If qos information can be determined from other sources, it is used; if not, a qos between .userInitiated and .utility is used.

It is important to understand that all these global queues are SYSTEM-wide queues and our tasks are not the only tasks on them! It is also important to know that all global queues except one are concurrent (parallel) queues.

Global serial queue for the user interface: the Main queue

Apple provides us with a single GLOBAL serial queue: the Main queue mentioned above. Resource-intensive operations that do not change the UI (for example, downloading data from the network) should not be performed on this queue, so that the UI does not freeze for the duration of the operation and the user interface stays responsive to user actions, such as gestures, at all times.
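Since all UI work has to end up on the Main queue, code that may be called from any thread often uses a small helper to get there. A minimal sketch; performOnMain is a hypothetical name, not a GCD API:

```swift
import Foundation
import Dispatch

/// Hypothetical helper: runs `block` immediately when already on the main
/// thread, otherwise hands it to the Main queue asynchronously.
func performOnMain(_ block: @escaping () -> Void) {
    if Thread.isMainThread {
        block()
    } else {
        DispatchQueue.main.async { block() }
    }
}

var label = ""
// Top-level code of a command-line program runs on the main thread,
// so here the block runs synchronously.
performOnMain { label = "updated" }
print(label)   // updated
```

Dispatching asynchronously (never with sync) in the off-main branch keeps the helper from blocking a background thread on the Main queue.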

It is strongly recommended to “divert” such resource-intensive operations to other threads or queues.

There is one more strict requirement: UI elements may be changed ONLY on the Main queue. This is because we want the Main queue not only to be “responsive” to UI actions (yes, this is the main reason), but also to protect the user interface from conflicts in a multithreaded environment, so that reactions to user actions are performed strictly sequentially, in order. If we allowed UI elements to perform their actions on different queues, drawing could happen at different speeds and actions could intersect, leading to completely unpredictable results on the screen. We use the Main queue as a kind of “synchronization point” to which everyone who wants to “draw” on the screen returns.

Multithreading problems

As soon as we allow tasks to run in parallel, problems arise because different tasks want to access the same resources.

There are three main problems:

- race condition: an error in the design of a multithreaded system or application in which the behavior of the system or application depends on the order in which parts of the code are executed;
- priority inversion: a situation in which a higher-priority task has to wait while a lower-priority task is running;
- deadlock: a situation in a multithreaded system in which several threads wait indefinitely for resources held by those same threads.

Race condition

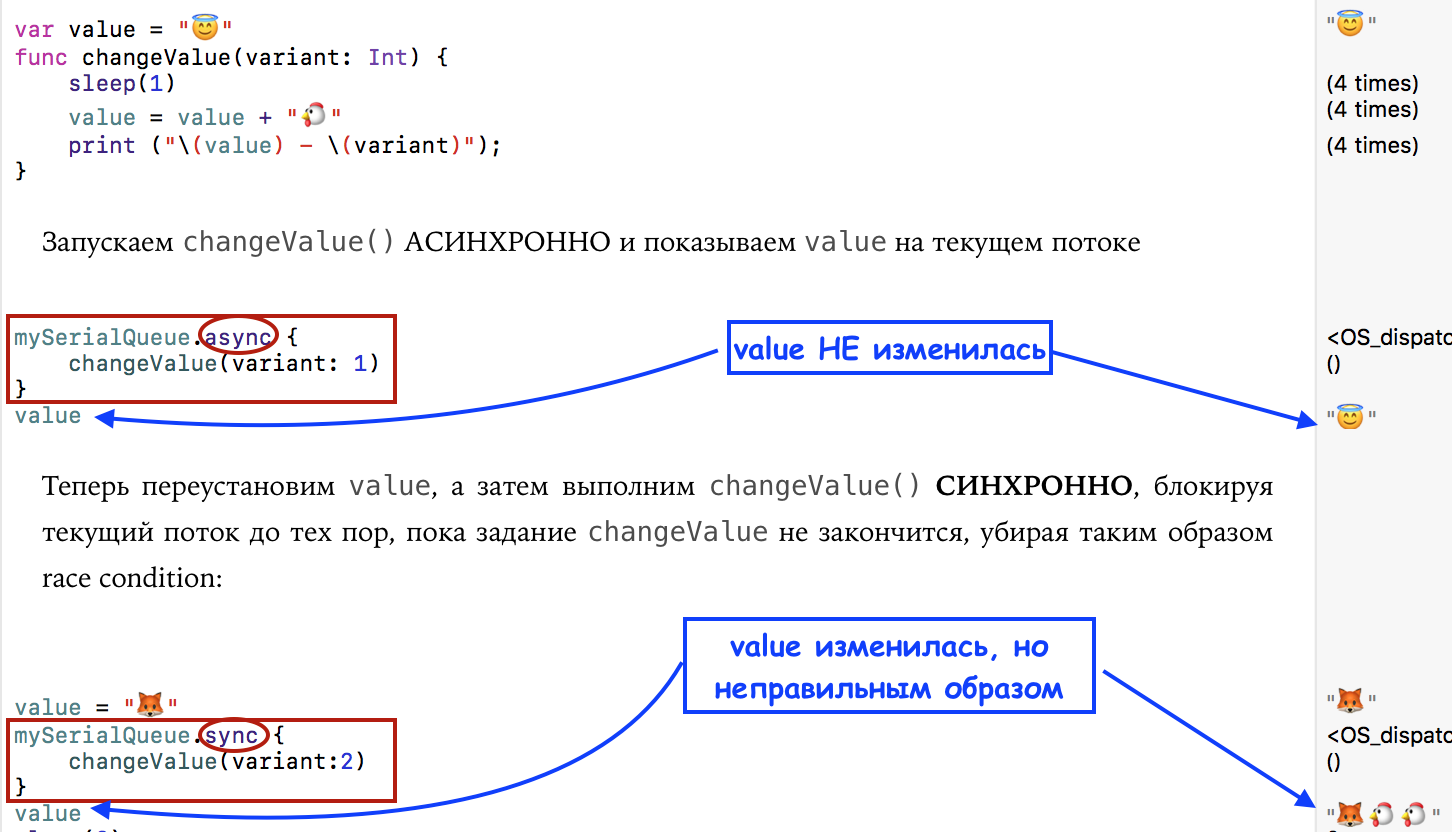

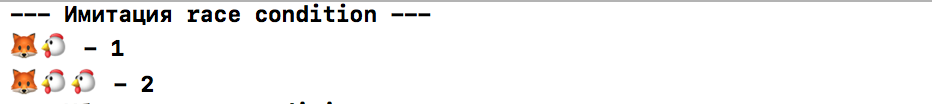

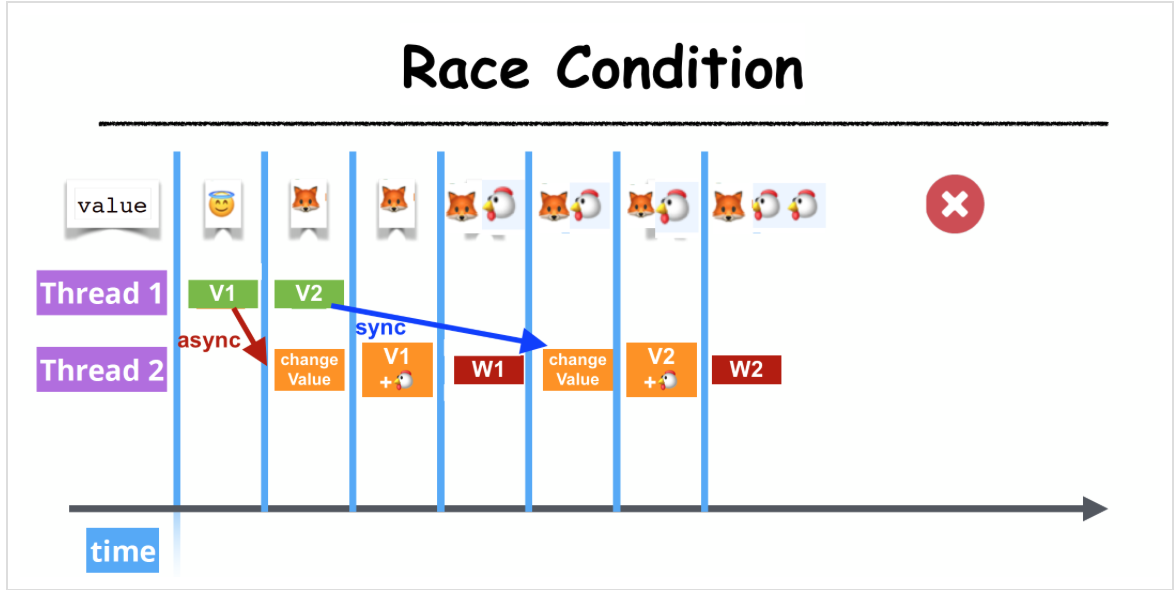

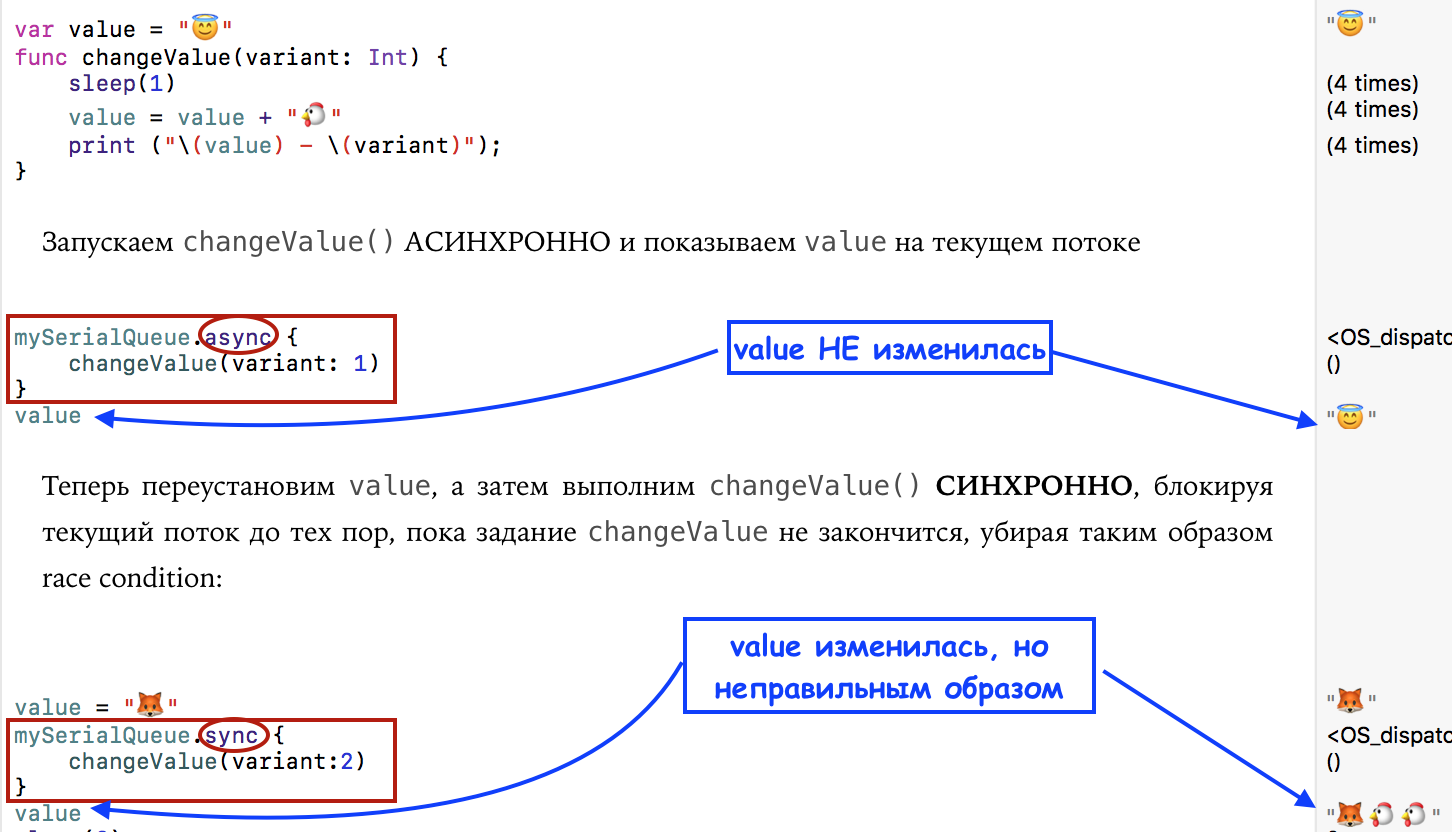

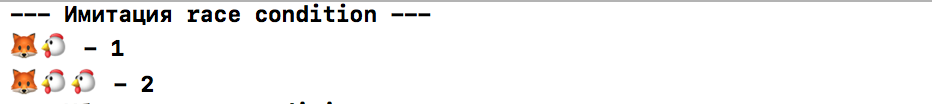

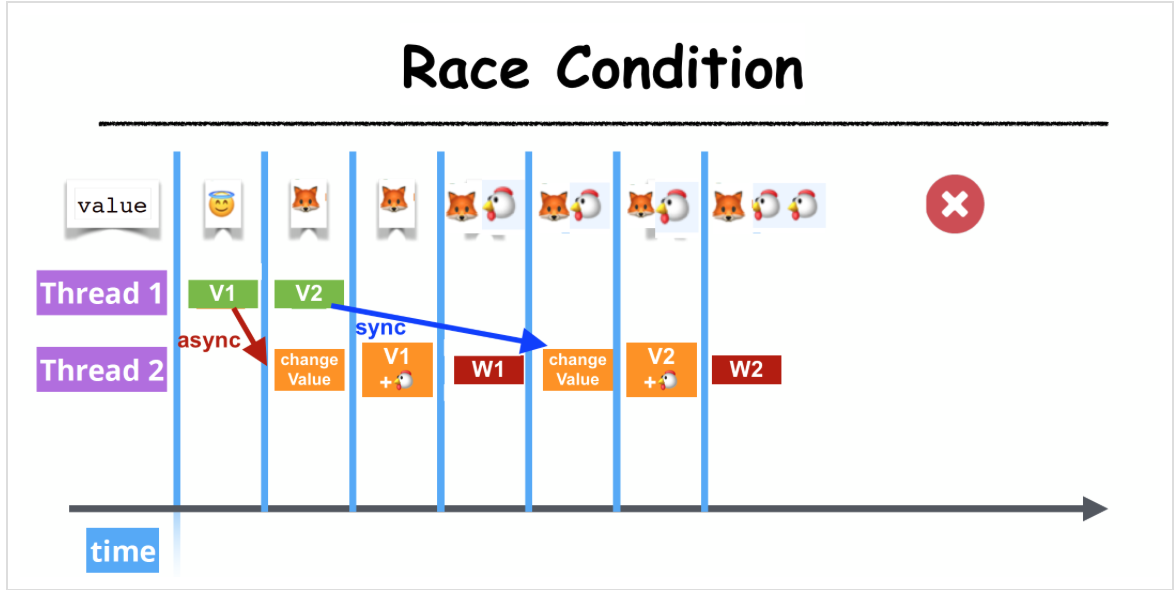

We can reproduce the simplest case of a race condition if we change the value variable asynchronously on a private queue and show the value on the current thread.

We have an ordinary value variable and an ordinary changeValue function that changes it; we deliberately added a sleep(1) call so that changing value takes a considerable amount of time. If we run changeValue using async, then before it gets to storing the changed value in the value variable, value can be set to a different value on the current thread: this is the race condition. This code produces the printed output and the diagram below, which clearly show the race condition:

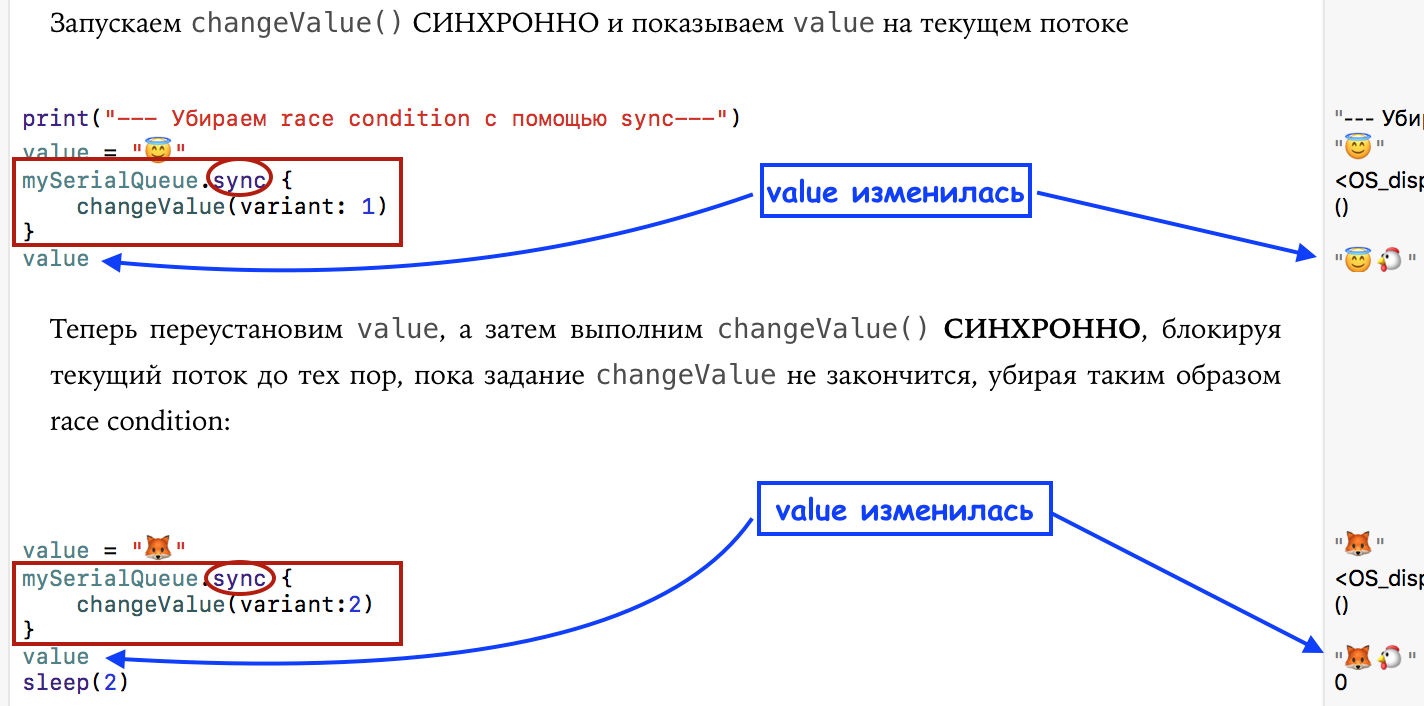

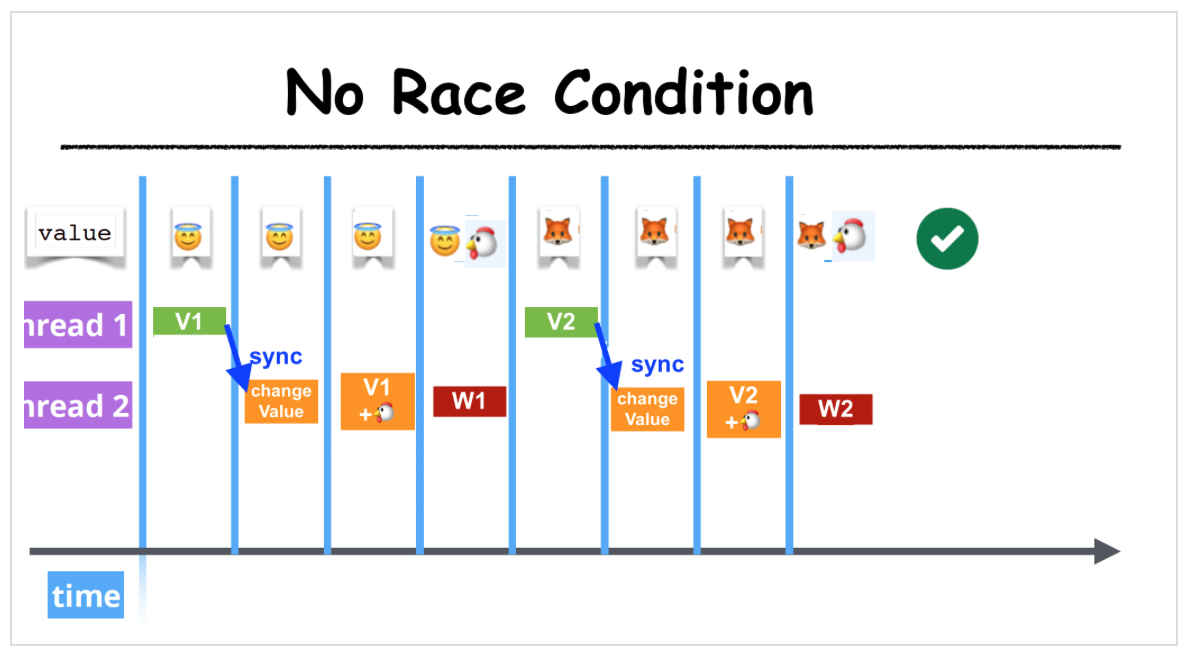

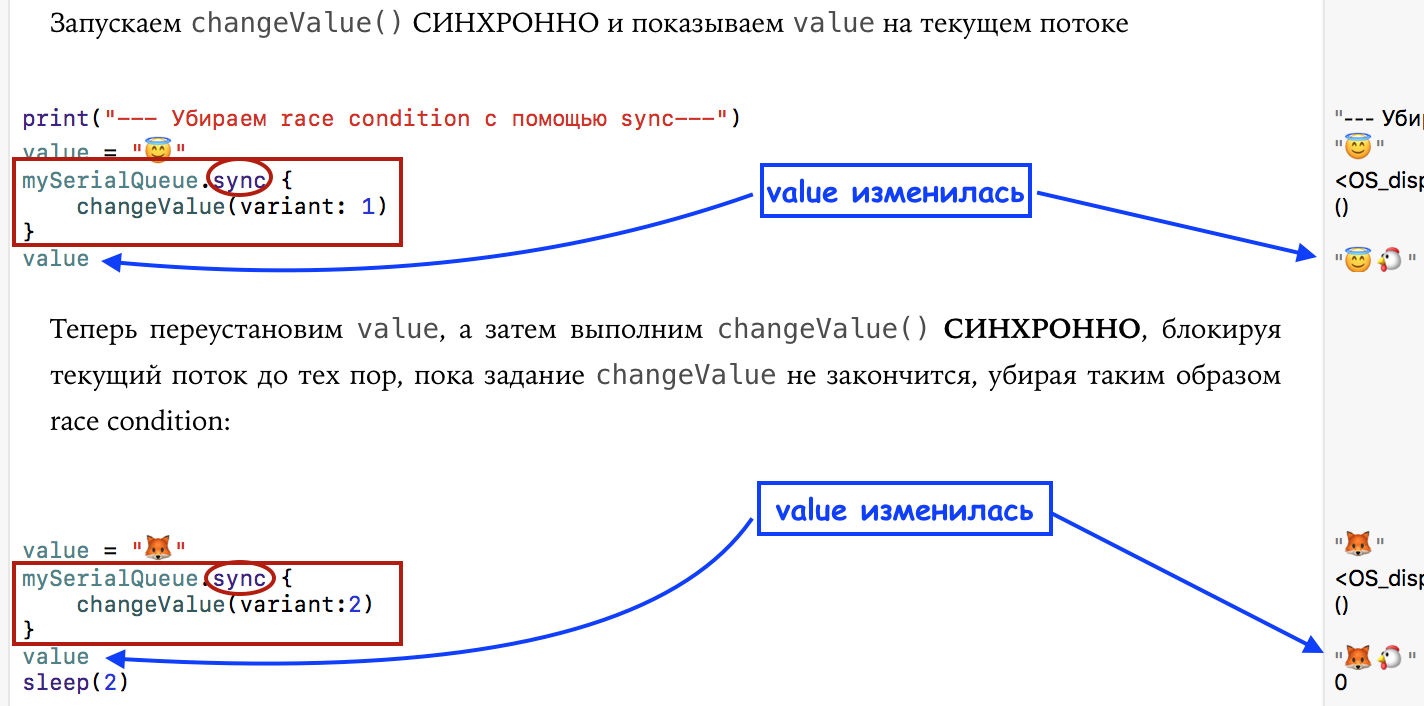
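The experiment described above can be sketched in a command-line program roughly as follows. This is a hypothetical reconstruction, not the article's Playground code: the strings are placeholders and usleep(100_000) replaces sleep(1) to keep the run short. The unsynchronized access to value is deliberate; Thread Sanitizer would report it.

```swift
import Foundation
import Dispatch

let mySerialQueue = DispatchQueue(label: "com.example.race")   // hypothetical label
var value = "A"

func changeValue(variant: Int) {
    usleep(100_000)              // make the change deliberately slow
    value = value + "B"
    print("\(value) - \(variant)")
}

mySerialQueue.async { changeValue(variant: 1) }
print("current thread sees:", value)   // most likely still "A"
value = "C"                            // overwritten before changeValue finishes

// An empty sync on a serial queue returns only after everything
// enqueued before it has run.
mySerialQueue.sync {}
print("final:", value)                 // typically "CB", not "AB"
```

Swapping the async call for mySerialQueue.sync makes the current thread wait for changeValue, so the read and the overwrite can no longer interleave with it.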

Let's replace the

async method with sync :

Both print and result have changed:

<img

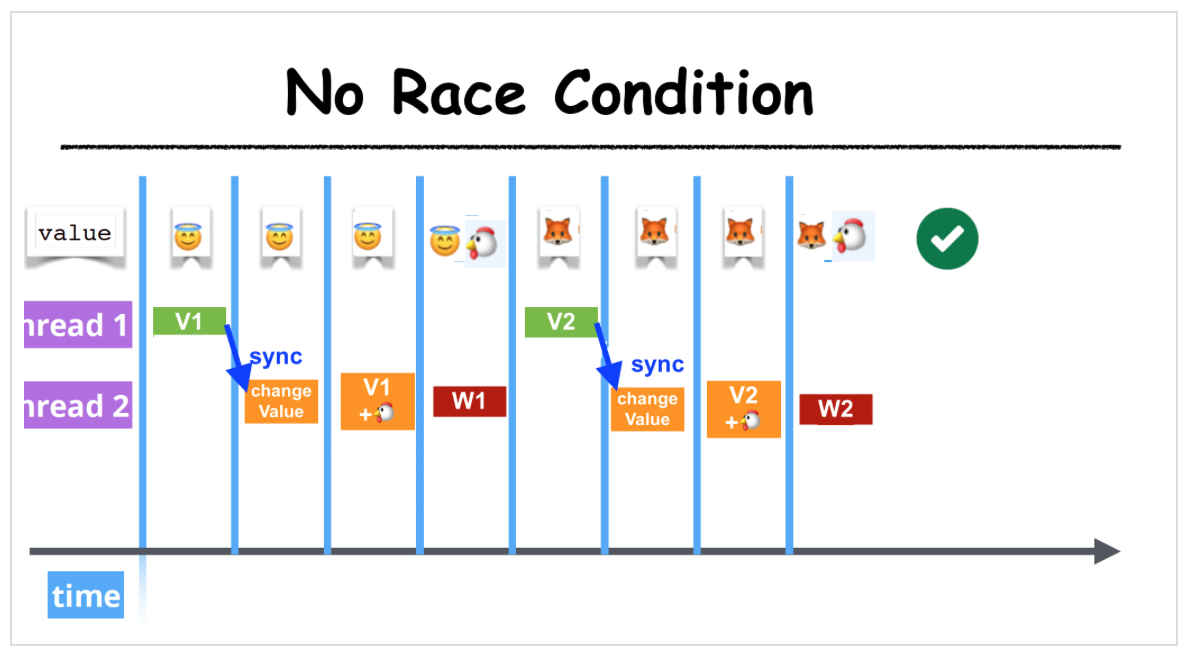

<imgand a diagram that lacks a phenomenon called "

race condition ":
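Replacing async with sync works because the caller waits until the change has finished. The same idea, routing every access to shared state through one private serial queue with sync, can be packaged into a small type. A minimal sketch; SafeCounter and its queue label are hypothetical names:

```swift
import Foundation
import Dispatch

// Every read and write of `count` goes through one private serial queue
// with sync, so no two accesses overlap even when callers run on many
// threads at once.
final class SafeCounter: @unchecked Sendable {
    private let queue = DispatchQueue(label: "com.example.counter")
    private var count = 0

    func increment() {
        queue.sync { count += 1 }
    }

    var value: Int {
        return queue.sync { count }
    }
}

let counter = SafeCounter()
// concurrentPerform calls the closure from several threads in parallel.
DispatchQueue.concurrentPerform(iterations: 1_000) { _ in
    counter.increment()
}
print(counter.value)   // 1000
```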

We see that although you have to be very careful with the sync method, because the “current thread” has to wait for the task on the other queue to complete, sync turns out to be very useful for avoiding race conditions. The code simulating the race condition can be found in firstPlayground.playground on Github. Later we will show a real race condition when building a string from characters received on different threads, and we will propose an elegant way of building the string using “barriers”, which avoids race conditions and makes the string thread-safe.

Priority inversion

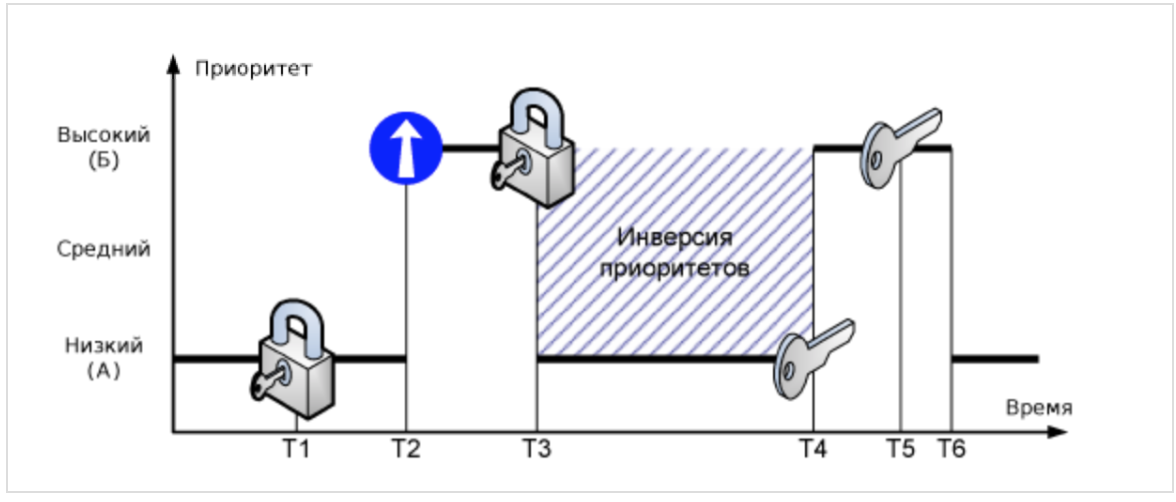

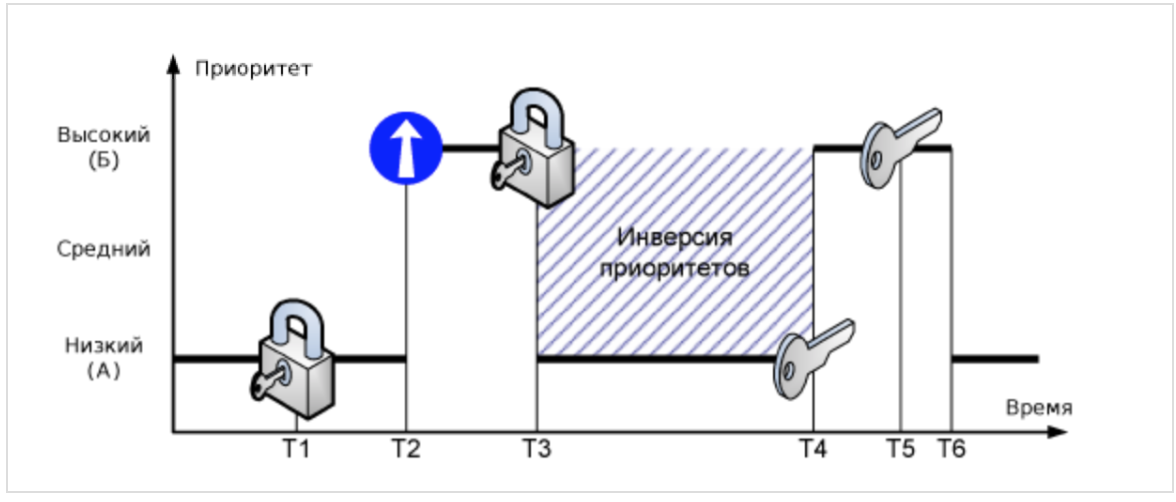

The concept of priority inversion is closely related to resource locking, so let's look at how it arises.

Suppose there are two tasks in the system with low (A) and high (B) priority. At time T1, task (A) locks the resource and starts using it. At time T2, task (B) preempts the low-priority task (A) and tries to acquire the resource at time T3. But since the resource is locked, task (B) is put into a waiting state, and task (A) continues executing. At time T4, task (A) finishes using the resource and unlocks it. Since task (B) is waiting for the resource, it starts executing immediately.

The time interval (T4-T3) is called bounded priority inversion. During this interval the scheduling rules are logically violated: a higher-priority task is waiting while a lower-priority task is running.

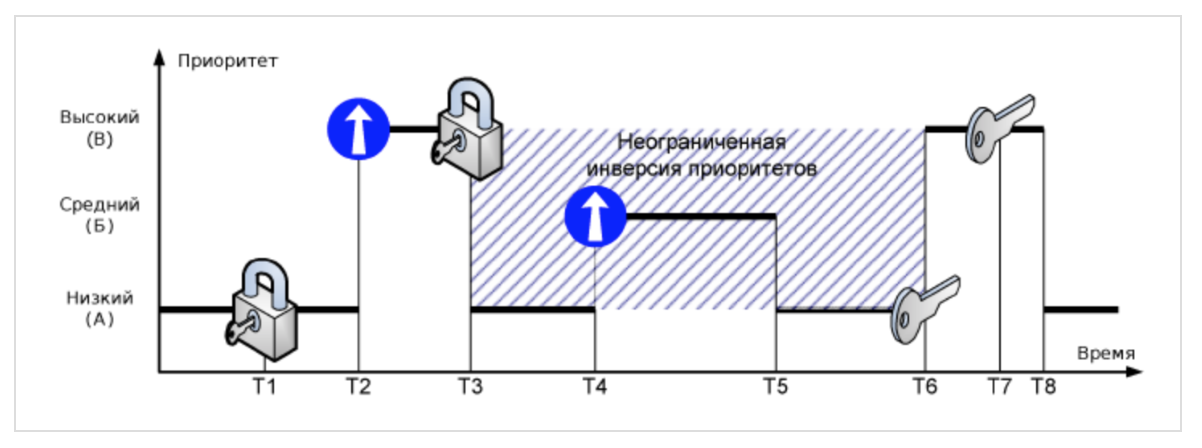

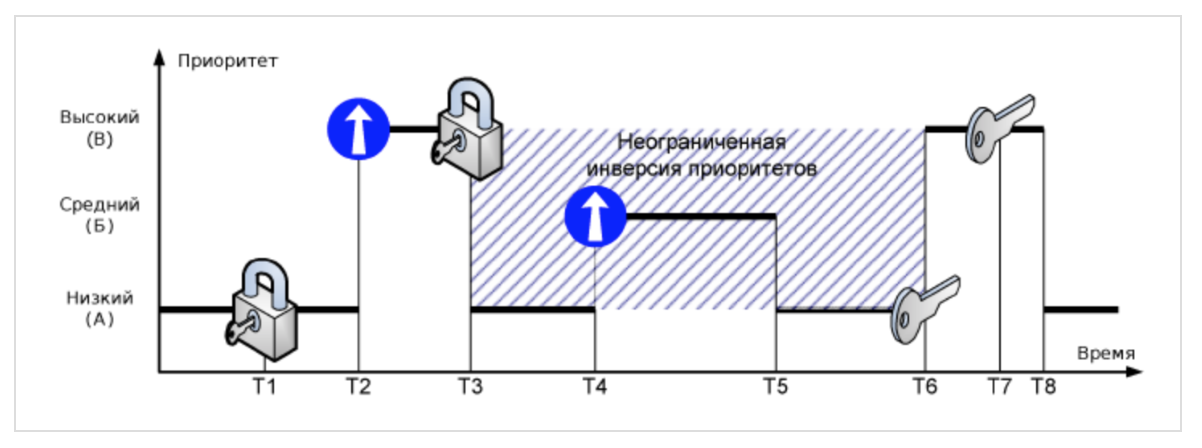

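But this is not the worst case. Let's complicate the situation: suppose the system has three tasks, with low (A), medium (B) and high (C) priority.

If the resource is locked by task (A) and task (C) needs it, we observe the same situation: the high-priority task is blocked. But suppose task (B) preempts (A) after (C) has started waiting for the resource. Task (B) knows nothing about the conflict, so it can run for an arbitrarily long time during the interval (T5-T4). Moreover, besides (B), there may be other tasks in the system with priorities higher than (A) but lower than (C). Therefore, the length of the period (T6-T3) is, in general, undetermined. This situation is called unbounded priority inversion.

Bounded priority inversion generally cannot be avoided, but it is not as dangerous for a multitasking system as unbounded inversion. Unbounded priority inversion is addressed with “priority inheritance”.

We will return to priority management in GCD later, when discussing the DispatchWorkItem class.

Deadlock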

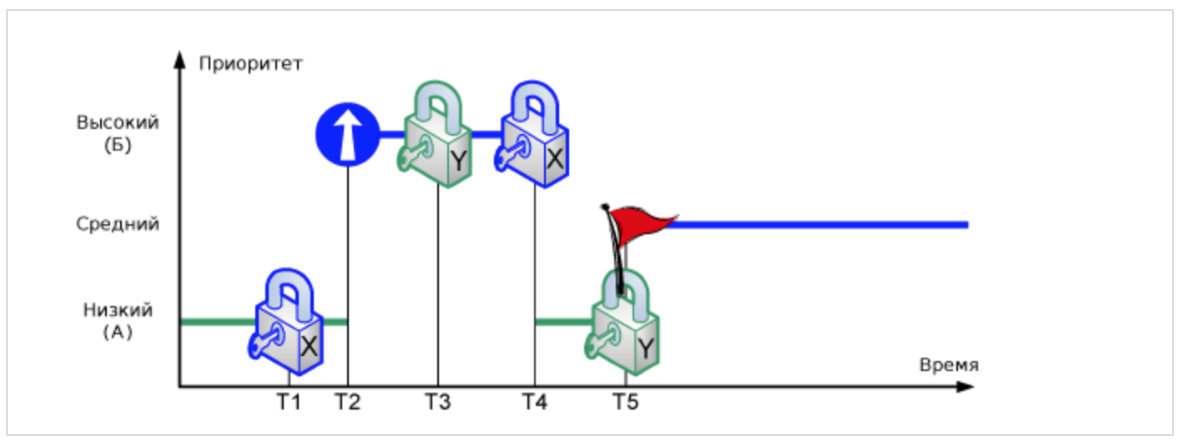

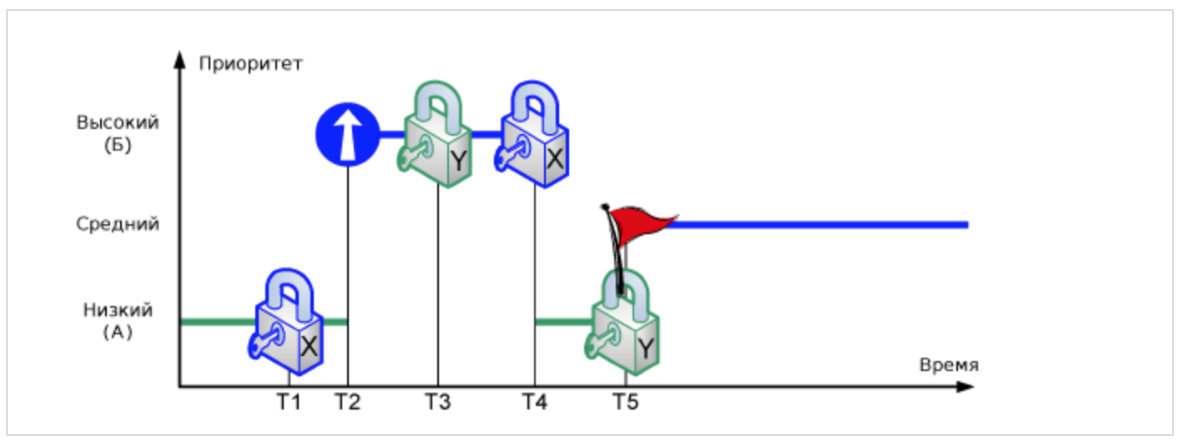

A deadlock is an abnormal state of the system that can occur with nested resource locks. Suppose the system has two tasks with low (A) and high (B) priority that use two resources, X and Y.

At time T1, task (A) locks resource X. Then, at time T2, the higher-priority task (B) preempts task (A) and, at time T3, locks resource Y. If task (B) tries to lock resource X (T4) without releasing resource Y, it is put into a waiting state, and task (A) continues. If, at time T5, task (A) tries to lock resource Y without releasing X, a deadlock occurs: neither task (A) nor task (B) can proceed.
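One standard way to prevent this kind of deadlock (a general technique, not something the article prescribes) is to make every task acquire nested locks in the same fixed order. A minimal sketch, modelling resources X and Y as NSLock instances and the two tasks as closures on global queues with different priorities:

```swift
import Foundation
import Dispatch

// Resources X and Y from the scenario above, modelled as locks.
let lockX = NSLock()
let lockY = NSLock()
let completedLock = NSLock()
var completed: [String] = []

// If task A took X then Y while task B took Y then X, each could end up
// holding one lock and waiting forever for the other. Here both tasks
// acquire the locks in the same order (X before Y), so that cycle
// cannot form.
func useBothResources(_ name: String) {
    lockX.lock()
    lockY.lock()
    completedLock.lock(); completed.append(name); completedLock.unlock()
    lockY.unlock()
    lockX.unlock()
}

let group = DispatchGroup()
DispatchQueue.global(qos: .background).async(group: group) { useBothResources("A") }
DispatchQueue.global(qos: .userInitiated).async(group: group) { useBothResources("B") }
group.wait()
print(completed.sorted())   // ["A", "B"]
```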

, , , main queue sync, (

deadock ).sync main queue , ( deadlock ) !For the experiments, we will use the

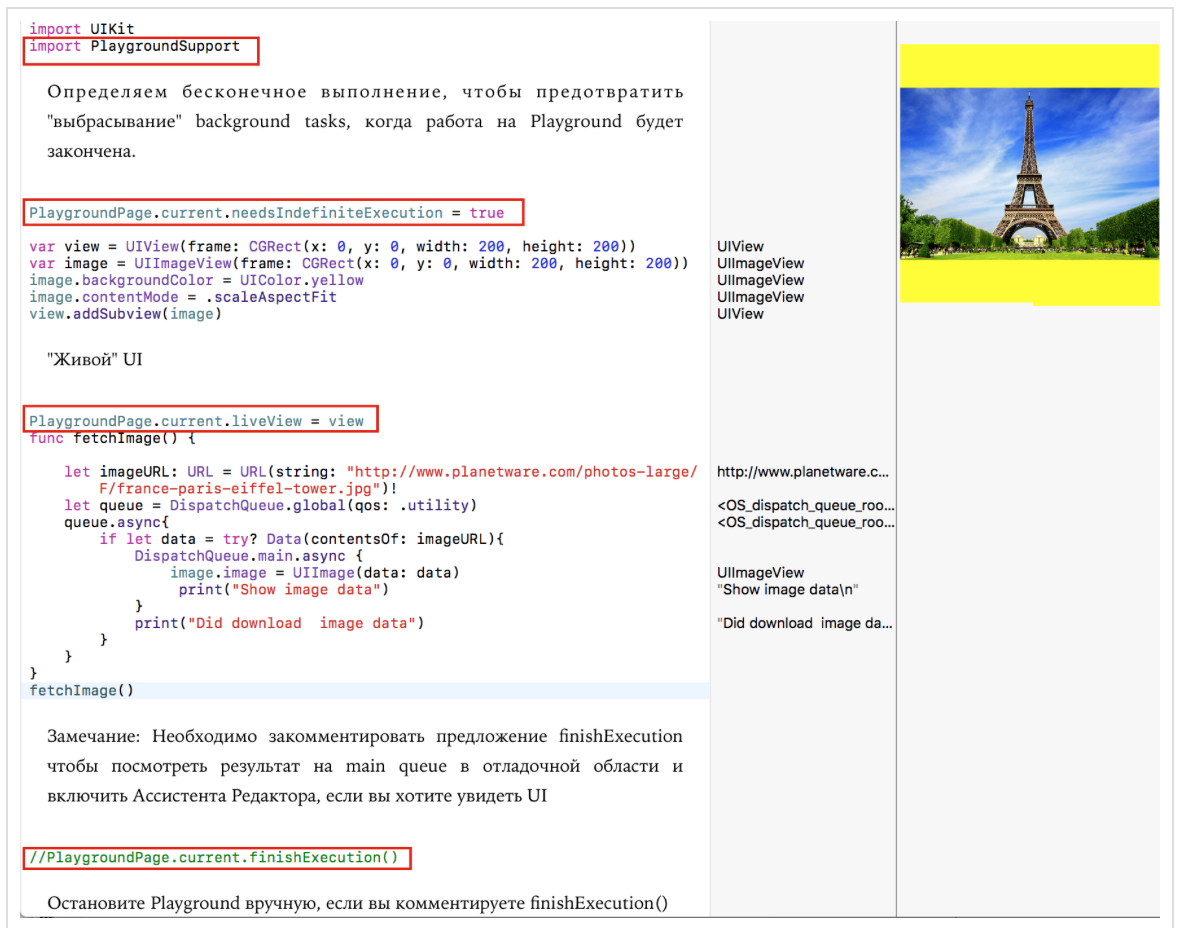

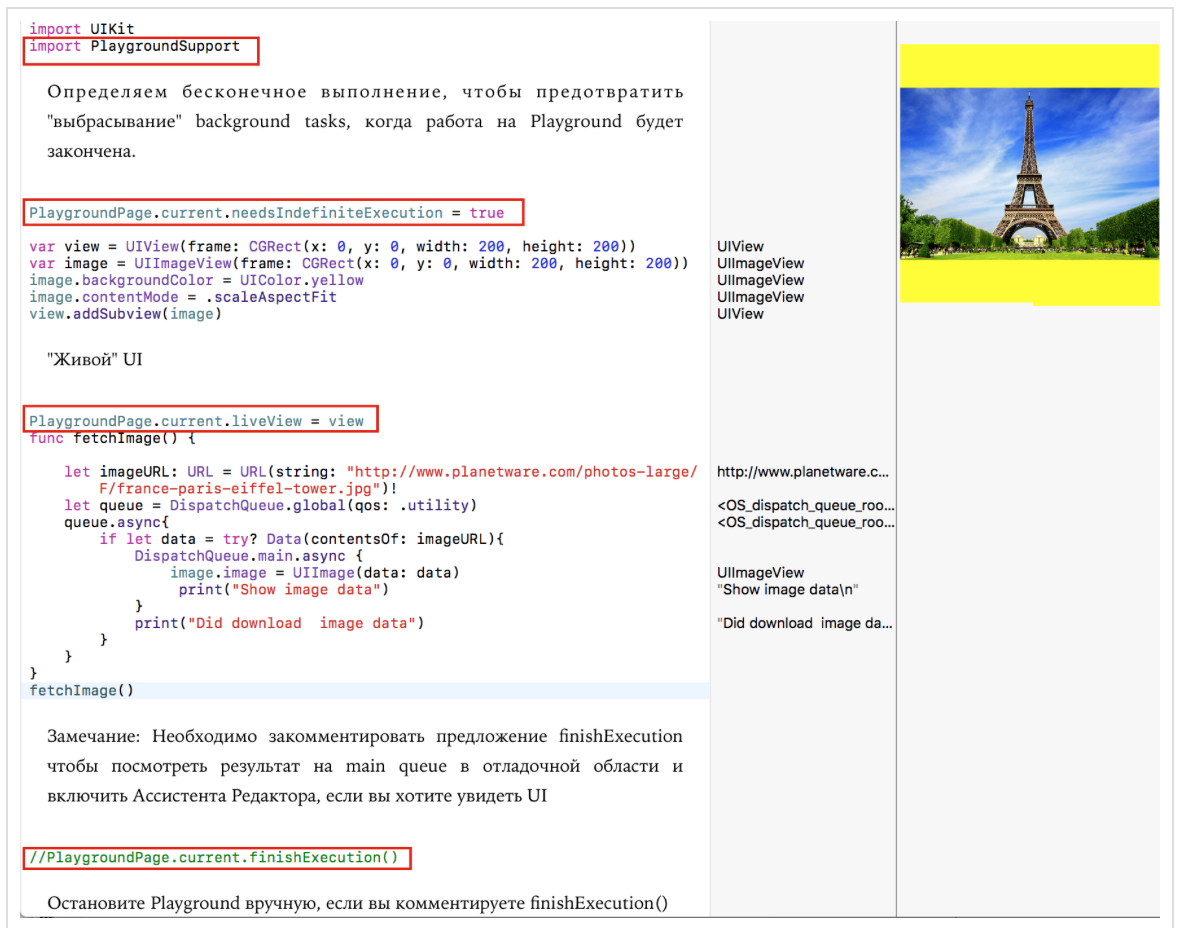

Playgroundmodule PlaygroundSupportand the class that is configured for an infinite amount of time using the module PlaygroudPageso that we can complete all the tasks placed in the queue and get access to main queue. We can stop waiting for some event on the Playgroundc using the command PlaygroundPage.current.finishExecution().There is another cool opportunity to

Playground- the ability to interact with the "live" UIwith the command PlaygroundPage.liveView = viewController and Editor's Assistant (

Assistant Editor). If, for example, you create viewController, in order to see yours viewController, you just need to configure Playgroundfor unlimited code execution and enable the Editor Assistant ( Assistant Editor). You have to comment out the command PlaygroundPage.current.finishExecution()and stop it Playgroundmanually.

The Playground with the experimental environment code is named EnvironmentPlayground.playground and is on Github.

1. First experiment. Global queues and tasks

Let's start with simple experiments. We define a set of global queues: one serial queue, mainQueue, which is the main queue, and four parallel (concurrent) queues: userInteractiveQueue, userQueue, utilityQueue and backgroundQueue. You can also use the default concurrent queue, defaultQueue:

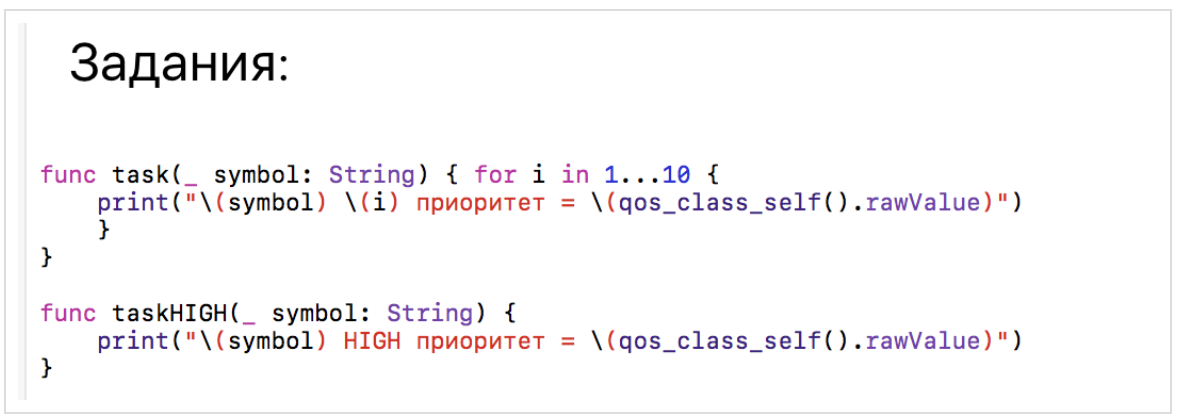

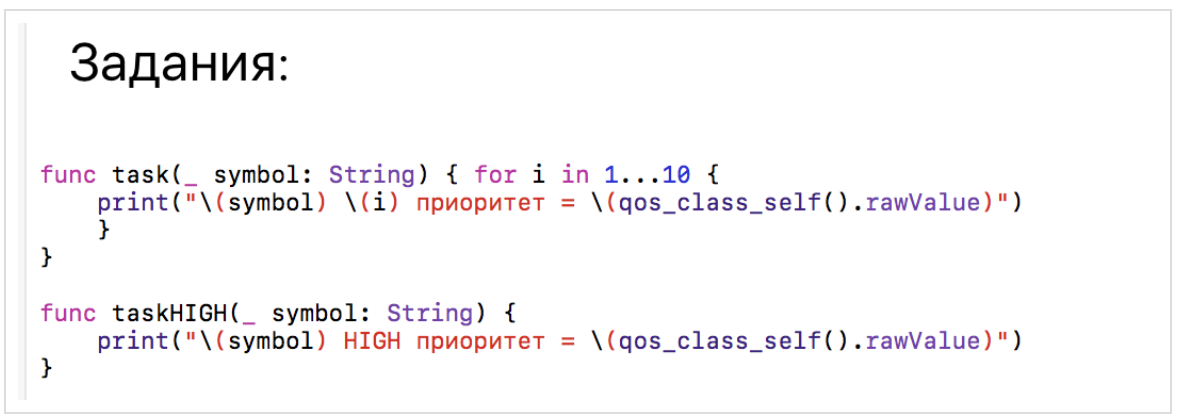

As a job, task prints any ten identical characters and the priority of the current queue. We will also launch another task, taskHIGH, which prints one character with high priority:

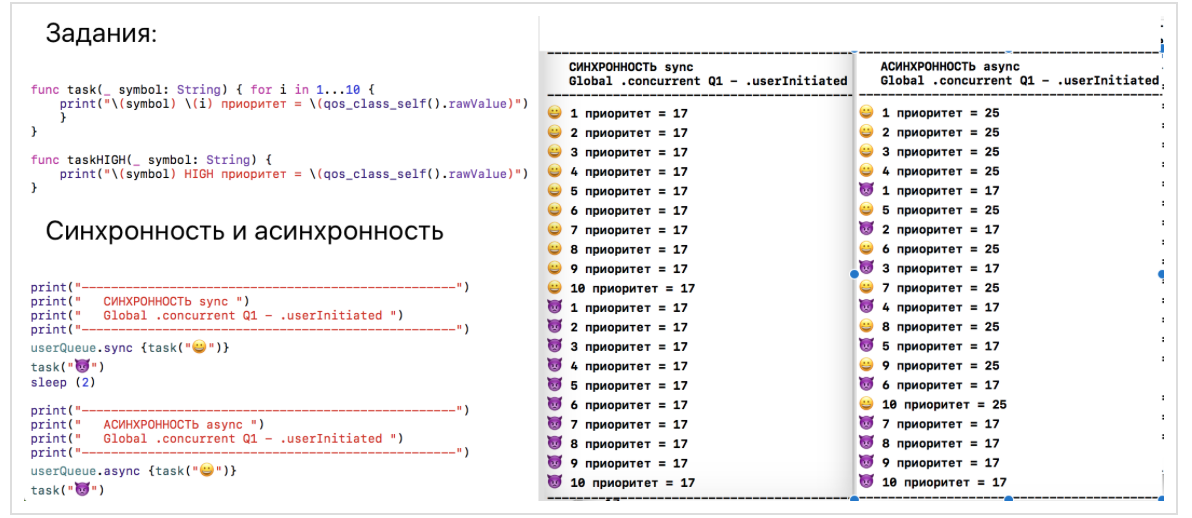

2. Second experiment. Synchronous and asynchronous execution on a global queue

Once you have a global queue, for example userQueue, you can perform tasks on it either SYNCHRONOUSLY, using the sync method, or ASYNCHRONOUSLY, using the async method.
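The second experiment can be approximated in a command-line program as follows. This is a hypothetical stand-in for the Playground code: userQueue is assumed to have qos .userInitiated, and task appends symbols to a lock-protected string instead of printing emoji and queue priorities.

```swift
import Foundation
import Dispatch

// Assumption: userQueue is the global queue with qos .userInitiated.
let userQueue = DispatchQueue.global(qos: .userInitiated)
let outputLock = NSLock()
var output = ""

// Hypothetical stand-in for the experiment's task: append the same
// symbol ten times to a shared, lock-protected string.
func task(_ symbol: String) {
    for _ in 0..<10 {
        outputLock.lock()
        output += symbol
        outputLock.unlock()
    }
}

// sync: the current thread waits until the "x" task has finished,
// so all "x" characters come before all "o" characters.
userQueue.sync { task("x") }
task("o")
let syncOutput = output
print(syncOutput)   // xxxxxxxxxxoooooooooo

// async: the current thread continues immediately, so the two
// tasks may interleave.
output = ""
let group = DispatchGroup()
userQueue.async(group: group) { task("x") }
task("o")
group.wait()
print(output)       // 10 "x" and 10 "o" in some order
```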

In the case of synchronous

syncexecution, we see that all tasks start sequentially, one after another, and the next one clearly waits for the completion of the previous one. Moreover, as an optimization, the sync function can trigger a closure on the current thread, if possible, and the priority of the global queue will not matter. This is what we see.In the case of asynchronous

asyncexecution, we see that tasksstart without waiting for the tasks to complete

, and the priority of the global queue

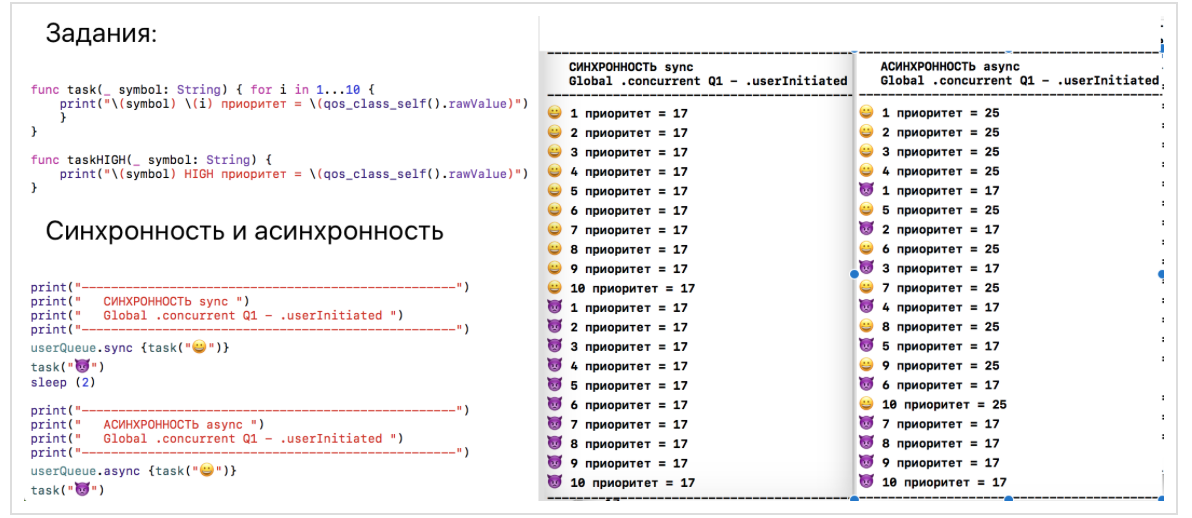

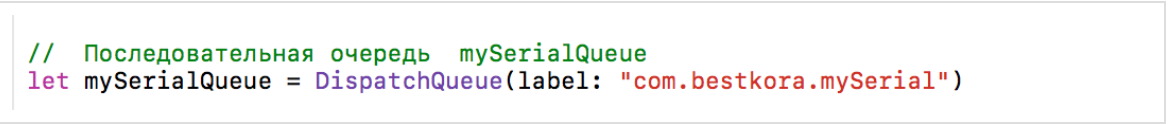

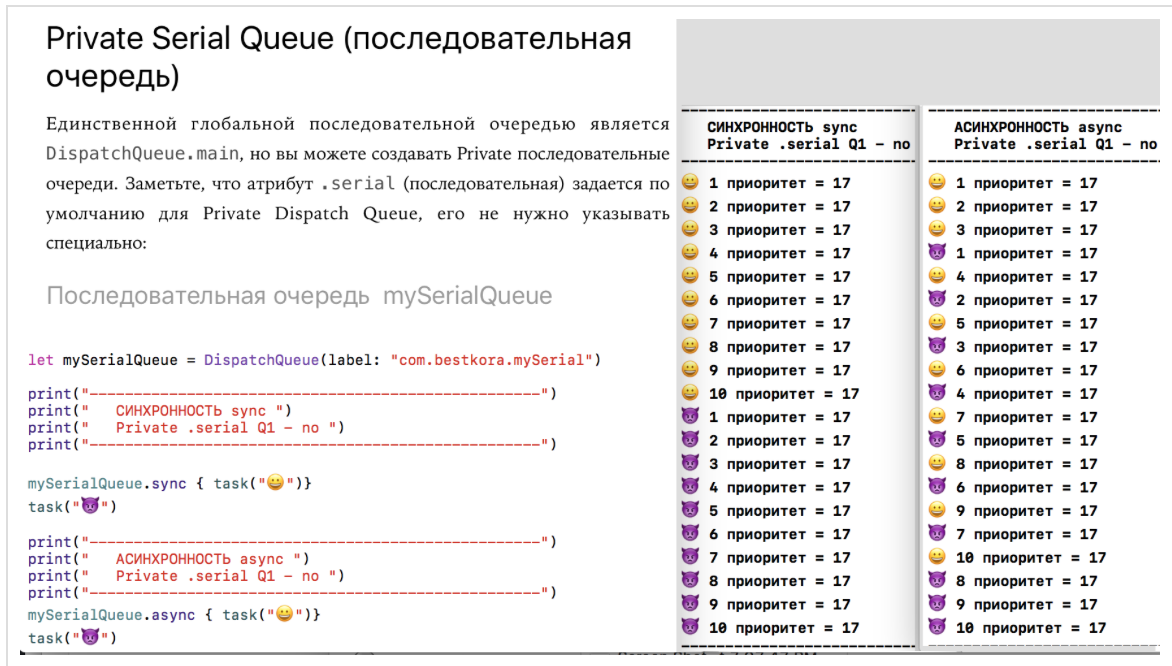

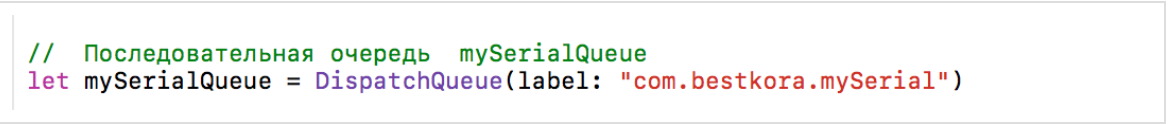

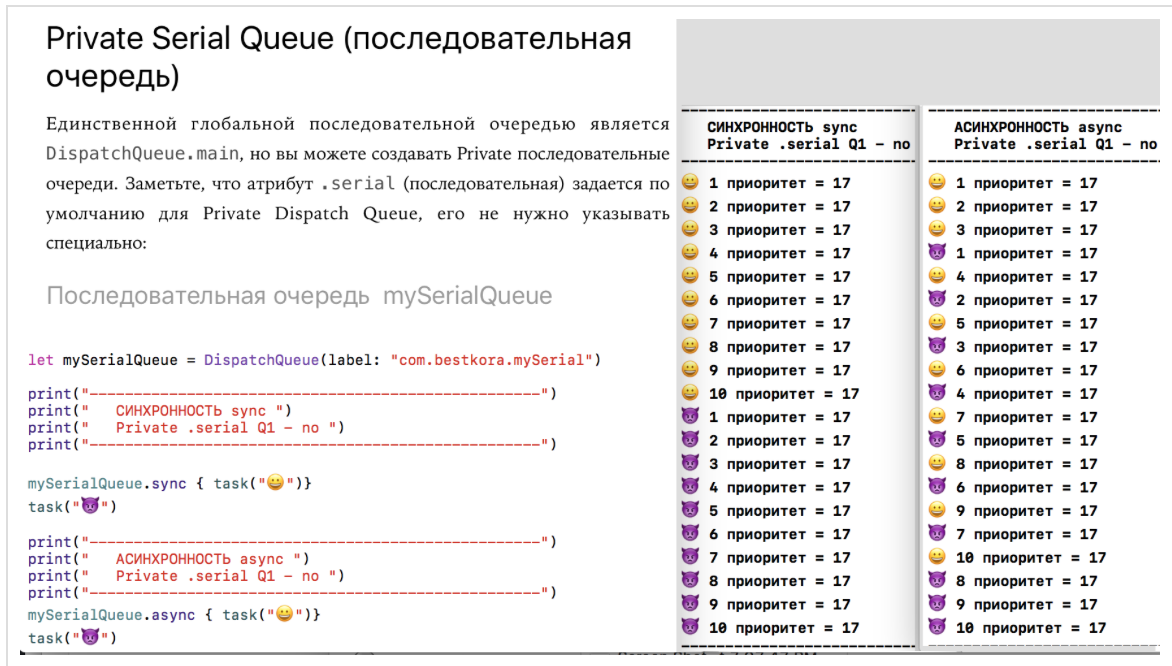

userQueuehigher priority code execution on Playground. Therefore, tasks userQueueare performed more often.3. The third experiment. Private serial queues

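To initialize a Private DispatchQueue, it is enough to specify a label, which Apple recommends writing in reverse DNS notation (“com.bestkora.mySerial”). If you specify no arguments other than label when initializing a Private queue, it is serial (.serial) by default.

We look at how the Private serial queue mySerialQueue works when using the sync and async methods: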

In the synchronous sync case, we see the same situation as in experiment 2: the type of queue does not matter, because, as an optimization, the sync function can run the closure on the current thread. This is what we see.

What happens if we use the async method and let the serial queue mySerialQueue execute tasks asynchronously with respect to the current queue? In this case, the program does not stop and does not wait until the task in mySerialQueue is completed; control immediately moves on to the following tasks and executes them at the same time as the tasks in mySerialQueue.
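A sketch of this experiment that records events in a list instead of printing symbols. The label comes from the text; the record helper and the eventsLock queue are hypothetical additions that keep the list safe from concurrent writes.

```swift
import Dispatch

// A Private queue created with only a label is serial by default
let mySerialQueue = DispatchQueue(label: "com.bestkora.mySerial")

// Hypothetical helper: a second serial queue guards `events` against concurrent writes
let eventsLock = DispatchQueue(label: "com.example.events")
var events: [String] = []
func record(_ event: String) {
    eventsLock.sync { events.append(event) }
}

// sync: the current code waits until the closure has finished
mySerialQueue.sync { record("sync task") }
record("after sync")

// async: the current code does not wait and moves on immediately
mySerialQueue.async { record("async task") }
record("after async")

// An empty sync closure returns only after the earlier async task has run
mySerialQueue.sync {}
print(events)
```

The first two entries always appear in this order; the order of the last two can vary from run to run, which is exactly the effect of async.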

4. QoS

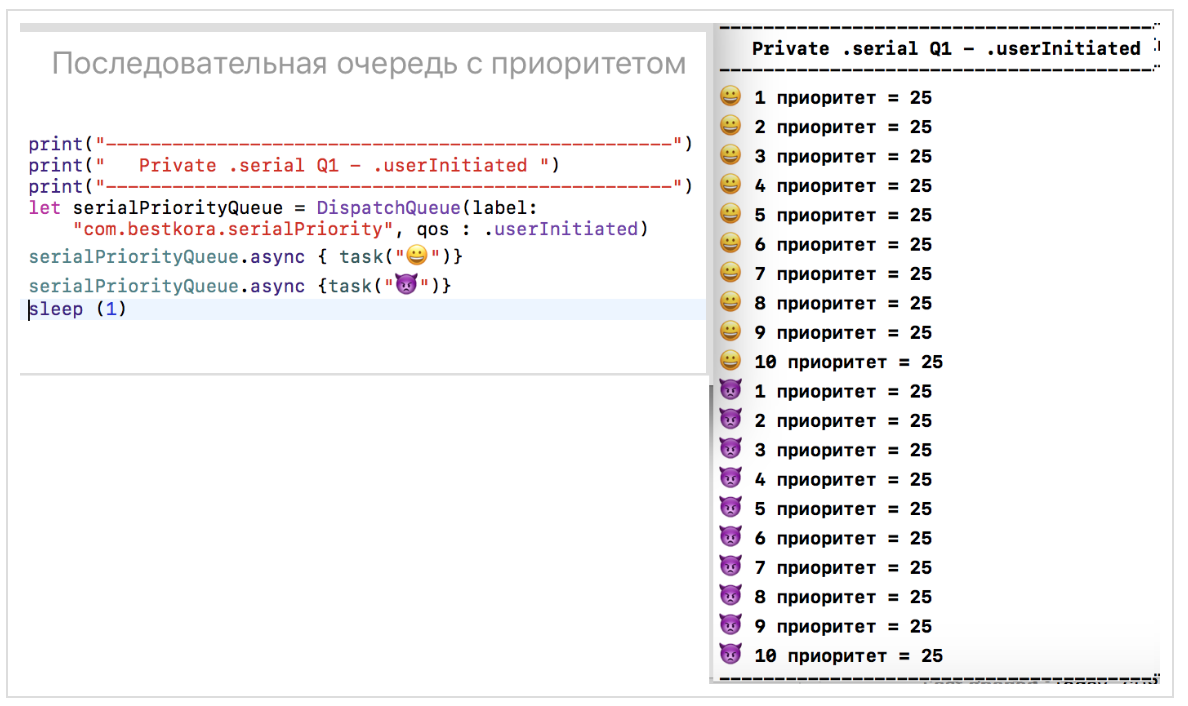

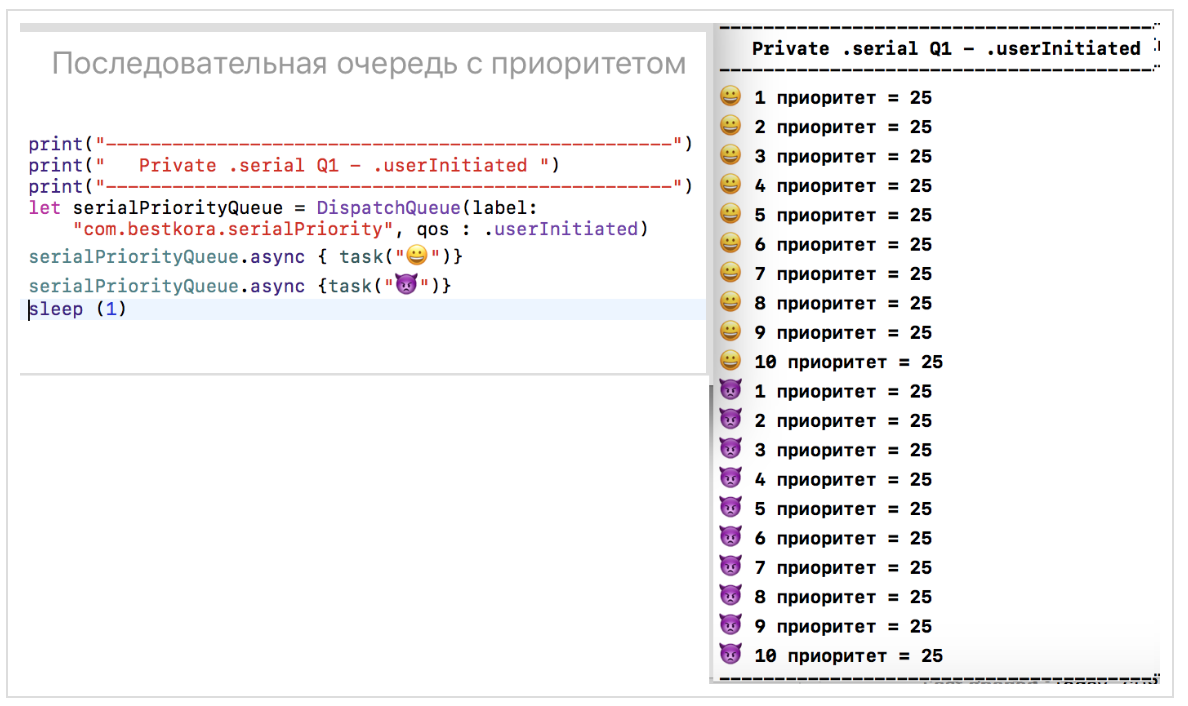

Let's assign our Private serial queue serialPriorityQueue the quality of service qos equal to .userInitiated and asynchronously put into this queue first one group of tasks and then another.

This experiment will convince us that our new queue serialPriorityQueue is indeed serial: despite using the async method, the tasks are performed sequentially, in the order in which they were received.
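A small sketch of the same FIFO behaviour, with integers standing in for the tasks; the queue label is hypothetical.

```swift
import Dispatch

// Serial (the default) Private queue with a raised quality of service
let serialPriorityQueue = DispatchQueue(label: "com.example.serialPriority", qos: .userInitiated)

var order: [Int] = []

for task in 1...5 {
    // async returns at once, but a serial queue still runs the closures one by one, in FIFO order
    serialPriorityQueue.async {
        order.append(task) // only this serial queue touches `order` until the sync below
    }
}

serialPriorityQueue.sync {} // wait for everything queued above
print(order) // [1, 2, 3, 4, 5]
```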

Thus, for multi-threaded code execution it is not enough to use the method

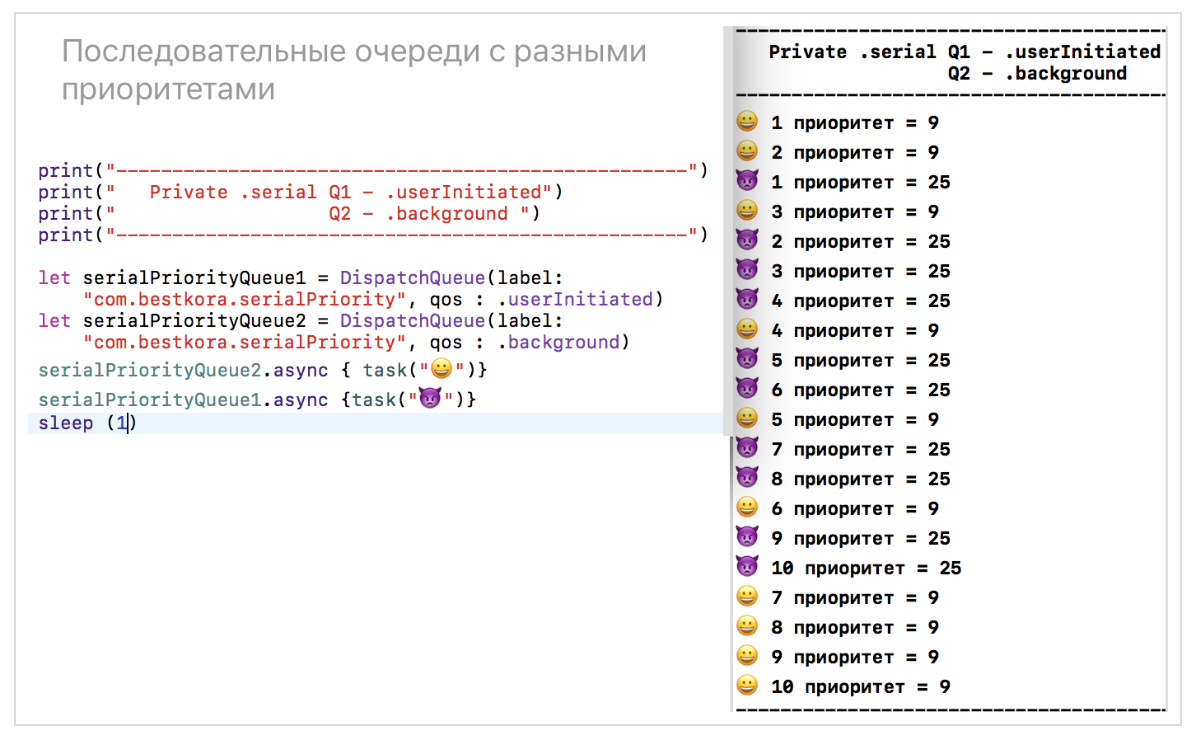

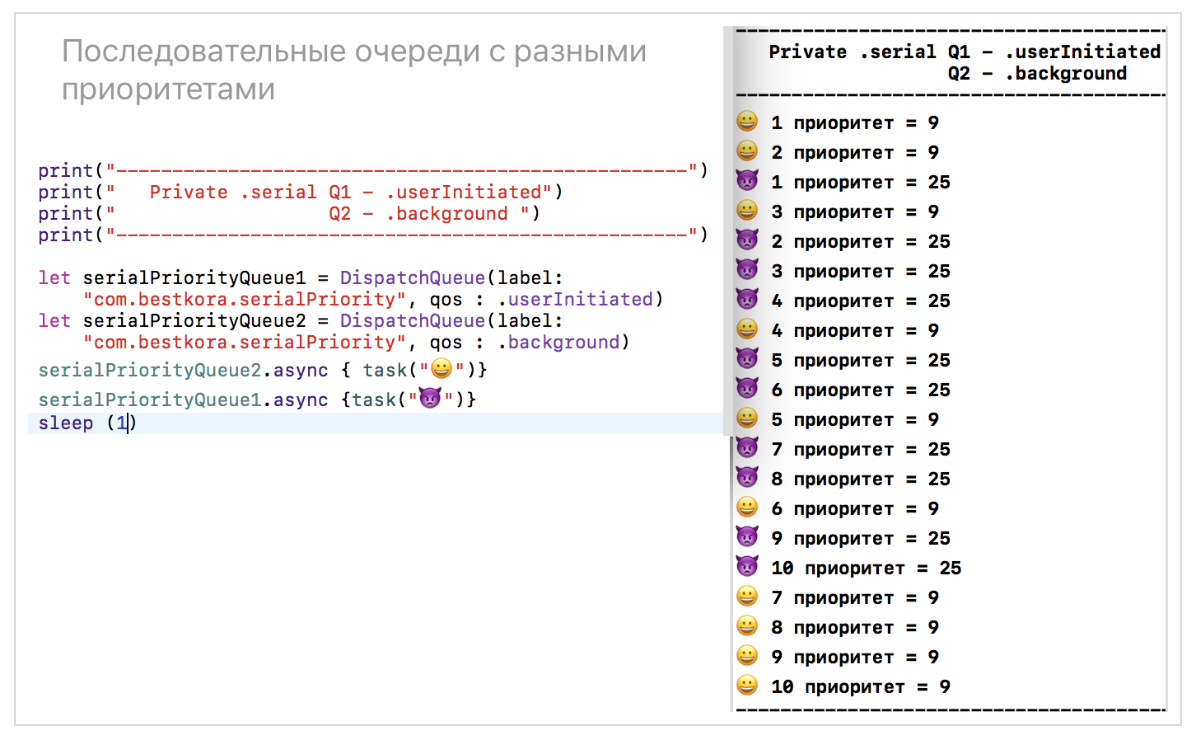

async, you need to have a lot of threads either due to different queues, or due to the fact that the queue itself is I am parallel ( .concurrent). Below in experiment 5 with parallel ( .concurrent) queues we will see a similar experiment with Private parallel ( .concurrent) queueworkerQueue, but there will be a completely different picture, when we put the same tasks in this queue.Let's use sequential

Privatequeues with different priorities for asynchronous setting of tasks in this queue, and then

queue of tasks

serialPriorityQueue1c qos .userInitiatedqueue

serialPriorityQueue2c qos .background

Here, multithreaded tasks are executed, and tasks are more often executed on queues

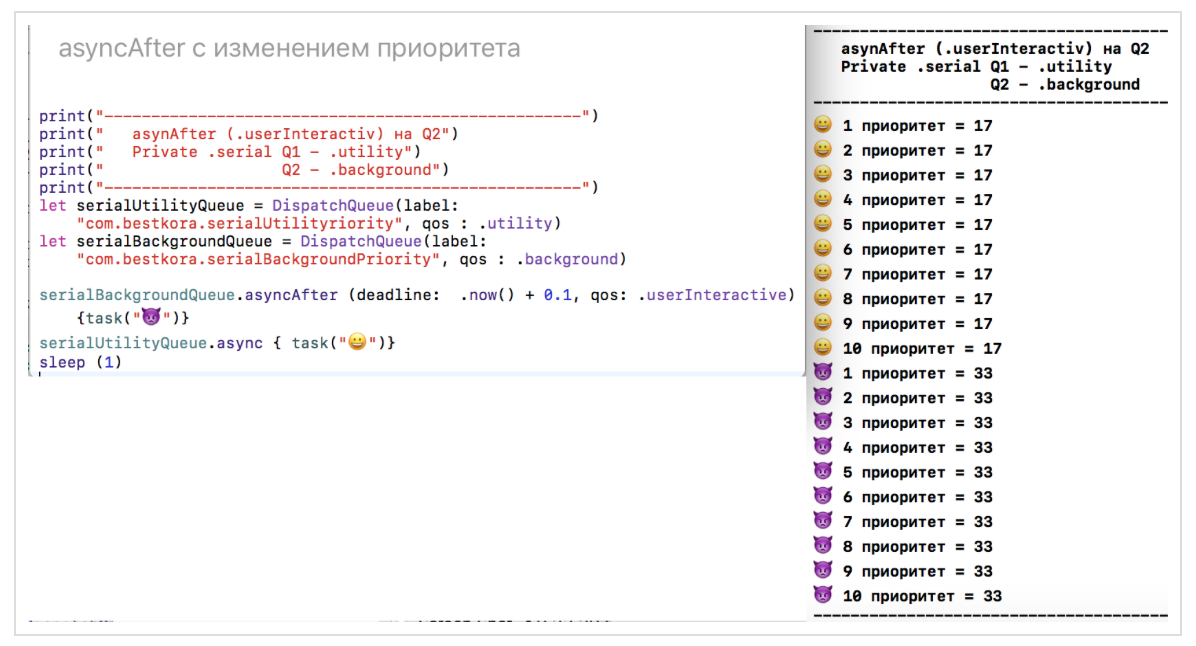

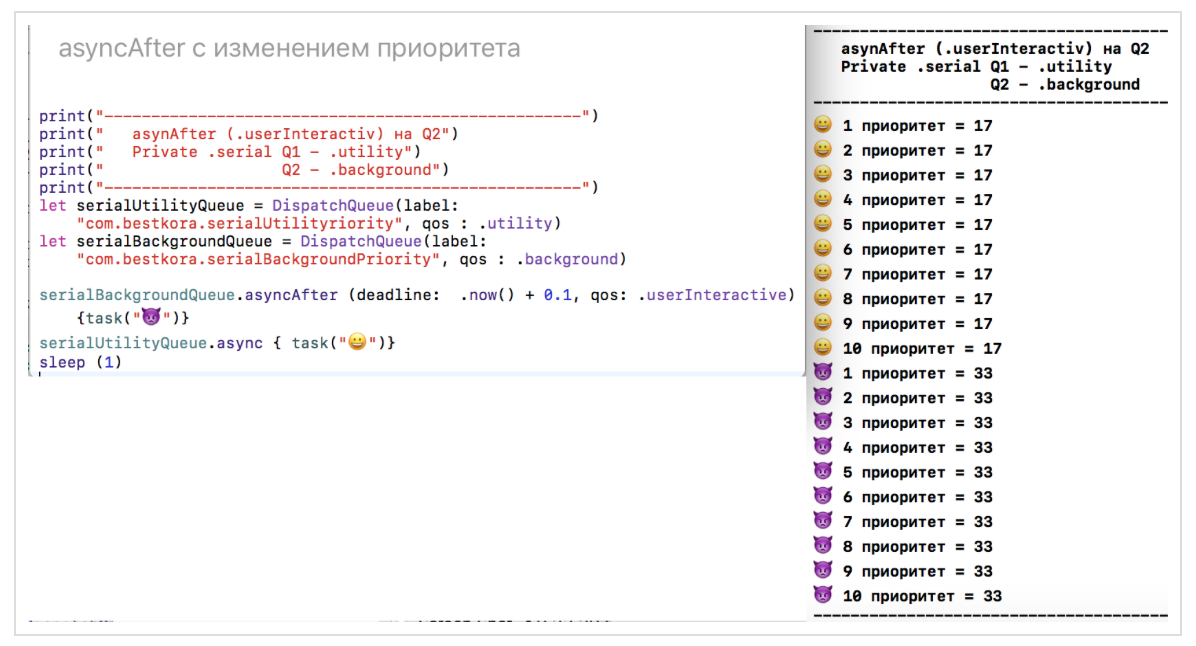

serialPriorityQueue1with a higher priority quality of service qos: .userIniatated.You can delay the execution of tasks on any queue

DispatchQueuefor a specified time, for example, by now() + 0.1using the function asyncAfterand also change the quality of service qos:

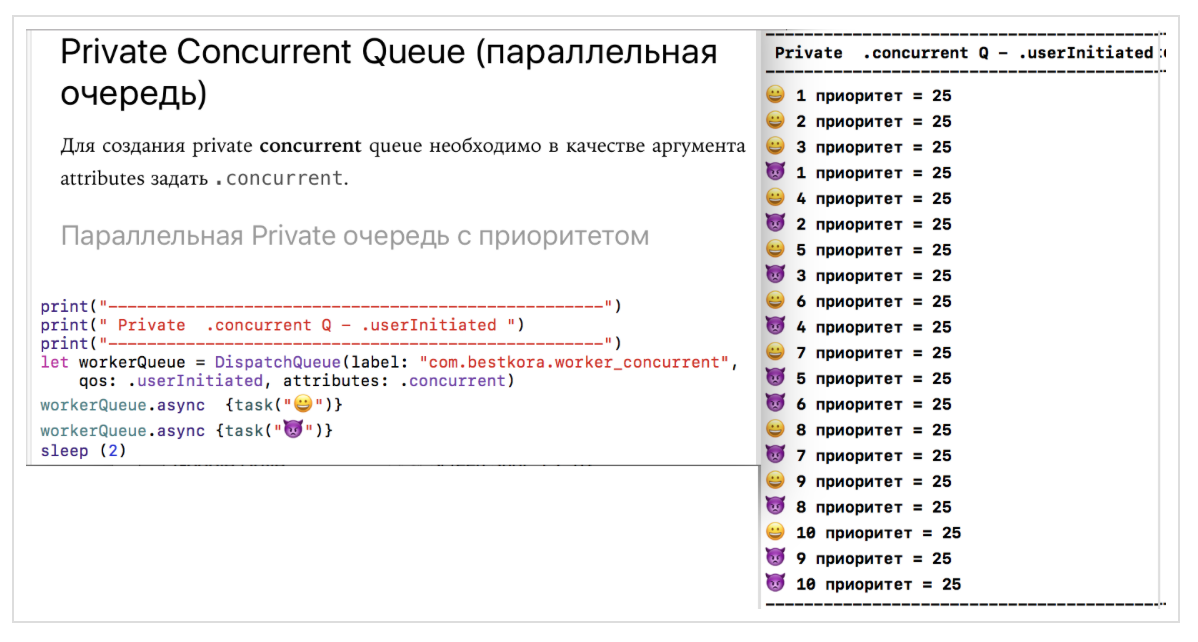

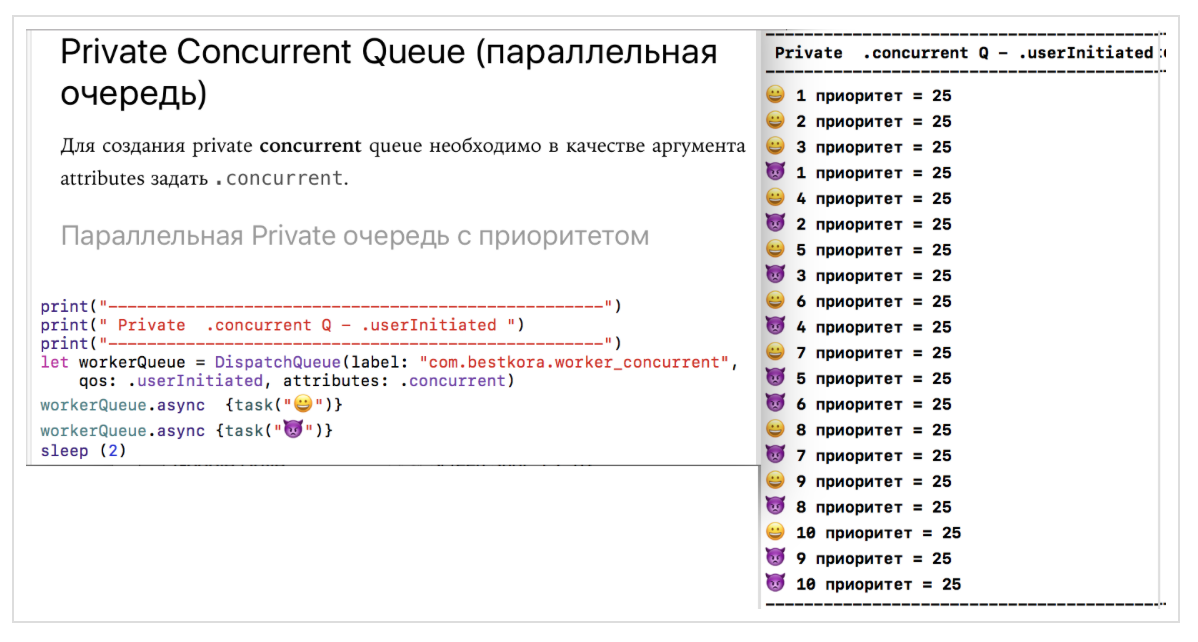
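A sketch that measures the delay, using a semaphore so that a command-line script waits for the result; the queue label is hypothetical.

```swift
import Dispatch

let delayedQueue = DispatchQueue(label: "com.example.delayed") // hypothetical label
let finished = DispatchSemaphore(value: 0)
let start = DispatchTime.now()
var elapsed = 0.0

// Run the closure no earlier than 0.1 s from now, with the QoS raised to .userInteractive
delayedQueue.asyncAfter(deadline: .now() + 0.1, qos: .userInteractive) {
    let nanoseconds = DispatchTime.now().uptimeNanoseconds - start.uptimeNanoseconds
    elapsed = Double(nanoseconds) / 1_000_000_000
    finished.signal()
}

finished.wait() // block until the delayed closure has run
print("ran after about \(elapsed) s")
```

The deadline is a lower bound: the closure may start somewhat later than 0.1 s, depending on system load.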

5. The fifth experiment will concern private parallel (concurrent) queues.

To initialize a Private parallel (.concurrent) queue, it is enough to pass the value .concurrent as the attributes argument when initializing the Private queue. If you do not specify this argument, the Private queue will be serial (.serial). The qos argument is also optional and can be omitted without any problems.

Let's assign our parallel queue workerQueue the quality of service qos equal to .userInitiated and asynchronously put into this queue first one group of tasks and then another.
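A sketch of such a parallel Private queue. The label is hypothetical, the DispatchGroup is used only to wait for the tasks, and a separate serial queue protects the shared array because the tasks may really run at the same time.

```swift
import Dispatch

// attributes: .concurrent makes the Private queue parallel; qos is optional
let workerQueue = DispatchQueue(label: "com.example.worker",   // hypothetical label
                                qos: .userInitiated,
                                attributes: .concurrent)

let group = DispatchGroup()
let finishedLock = DispatchQueue(label: "com.example.finished") // guards `finished`
var finished: [Int] = []

for task in 1...8 {
    workerQueue.async(group: group) {
        // Several of these closures may run at the same time on different threads,
        // so the completion order is not guaranteed
        finishedLock.sync { finished.append(task) }
    }
}

group.wait() // wait for all eight tasks
print(finished) // some permutation of 1...8
```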

Our new parallel queue is

workerQueueindeed parallel, and the tasks in it are executed simultaneously, although everything we did compared with the fourth experiment (one consistent turnserialPriorityQueue), this set the argument to be attributesequal .concurrent:

The picture is completely different compared to one consecutive queue. If there all tasks are performed strictly in the order in which they are submitted for execution, then for our parallel (multi-threaded) queue

workerQueue, which can be “split” into several threads, the tasks are actually executed in parallel: some tasks with the symbolbeing later queued

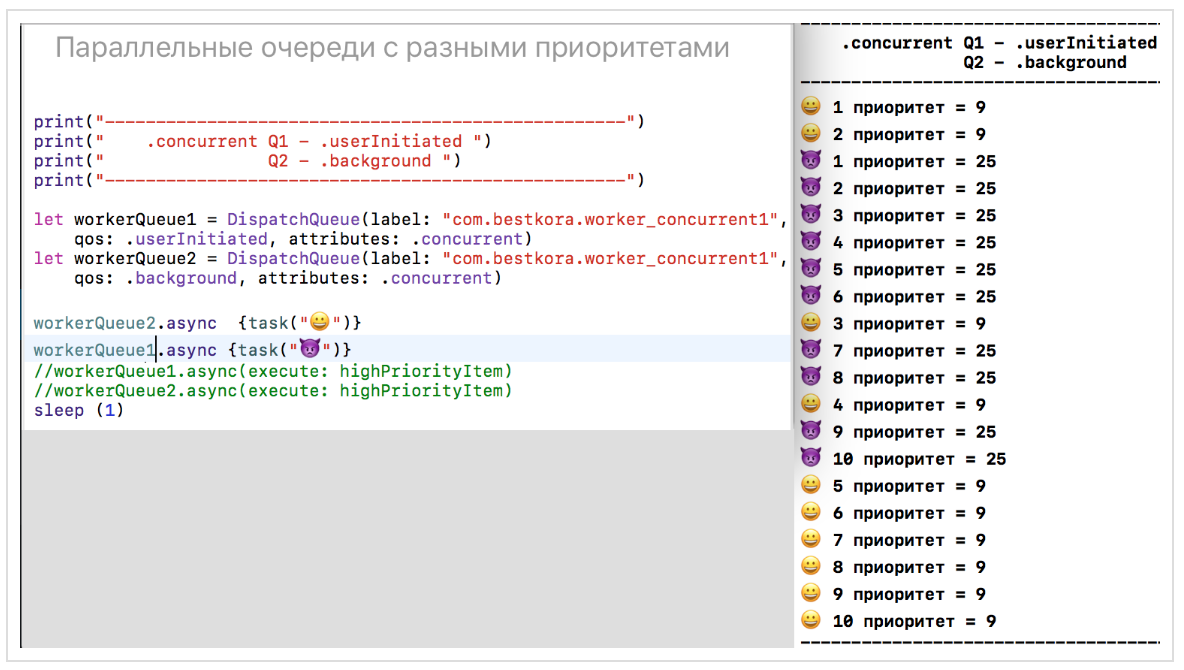

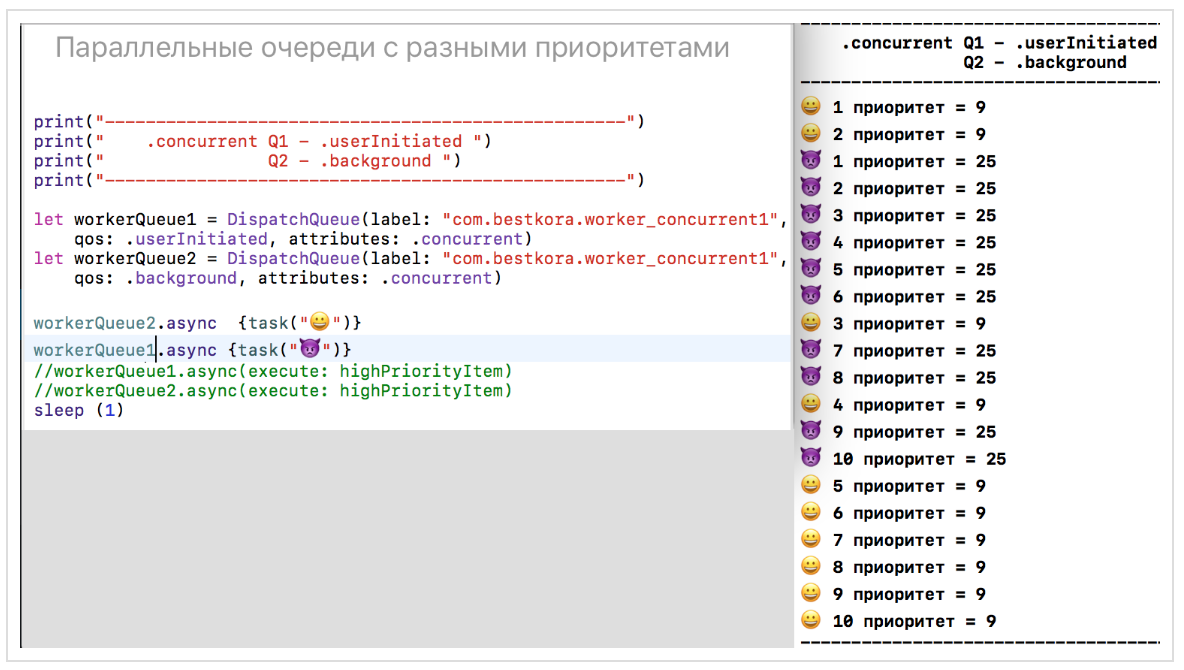

workerQueue, run faster on parallel thread.Let's use parallel

Privatequeues with different priorities:queue

workerQueue1c qos .userInitiatedqueue

workerQueue2c qos .background

Here is the same picture as with different consecutive

Privatebursts in the second experiment. We see that tasks are more often executed on the waiting list workerQueue1, which has a higher priority.You can create queues with deferred execution using an argument

attributes, and then activate the execution of tasks on it at any suitable time using the method activate():
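A sketch of a queue created inactive and activated later; the label is hypothetical. The timed wait shows that the queued task does not start before activate() is called.

```swift
import Dispatch

// .initiallyInactive: tasks can be queued, but nothing runs until activate() is called
let inactiveQueue = DispatchQueue(label: "com.example.inactive",   // hypothetical label
                                  qos: .userInitiated,
                                  attributes: [.concurrent, .initiallyInactive])

let group = DispatchGroup()
var ran = false
inactiveQueue.async(group: group) { ran = true }

// While the queue is inactive, the task does not start, so this wait times out
let finishedBeforeActivation = group.wait(timeout: .now() + 0.2) == .success

inactiveQueue.activate() // now the queued task may run
group.wait()
print(finishedBeforeActivation, ran) // false true
```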

6. The sixth experiment involves the use of DispatchWorkItem objects.

If you want additional ways to manage the execution of tasks on Dispatch queues, you can create a DispatchWorkItem, for which you can set the quality of service qos, and it will affect how the task is executed.

By setting the flag [.enforceQoS] when creating the DispatchWorkItem, we give the task highPriorityItem a higher priority than the other tasks on the same queue.

This lets you forcibly raise the priority of a specific task on a Dispatch Queue with a certain quality of service qos and thus deal with the phenomenon of “priority inversion”. We see that although the two highPriorityItem tasks are started last, they are performed at the very beginning thanks to the flag [.enforceQoS] and the priority increase to .userInteractive. In addition, the task highPriorityItem can be run multiple times on different queues.

If we remove the flag [.enforceQoS], the highPriorityItem tasks take the quality of service qos that is set for the queue on which they are launched. But they still get to the very beginning of the corresponding queues. The code for all of these experiments is in firstPlayground.playground on Github.
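A sketch of creating a DispatchWorkItem with its own quality of service and the [.enforceQoS] flag and submitting it twice; the queue label is hypothetical. On a serial queue the closures still run in FIFO order, so this only shows the API, not the reordering described above, which depends on queue priorities.

```swift
import Dispatch

let queue = DispatchQueue(label: "com.example.items", qos: .utility) // hypothetical label
var log: [String] = []

// A work item with its own QoS; .enforceQoS asks GCD to prefer the item's QoS
// over the QoS of the queue it is submitted to
let highPriorityItem = DispatchWorkItem(qos: .userInteractive, flags: [.enforceQoS]) {
    log.append("high")
}

queue.async { log.append("normal") }
queue.async(execute: highPriorityItem)
queue.async(execute: highPriorityItem) // the same item may be submitted more than once

queue.sync {} // wait for the three closures
print(log)
```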

The DispatchWorkItem class has an isCancelled property and a number of methods, among them perform(), wait(), notify(queue:execute:) and cancel().
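A sketch of cancel() and isCancelled: a work item cancelled before it is queued is skipped. The queue label is hypothetical.

```swift
import Dispatch

let queue = DispatchQueue(label: "com.example.cancel") // hypothetical label
var executed = false

let workItem = DispatchWorkItem {
    executed = true
}

// Marked as cancelled before it is queued, so GCD skips it when its turn comes
workItem.cancel()
queue.async(execute: workItem)

queue.sync {} // let the queue reach (and skip) the cancelled item
print(workItem.isCancelled, executed) // true false
```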

Despite having a cancel() method, DispatchWorkItem in GCD still does not let you remove closures that have already started on a particular queue. What we can currently do is mark a DispatchWorkItem as “cancelled” with the cancel() method. If cancel() is called before the DispatchWorkItem is queued with the async method, the DispatchWorkItem will not be executed. One of the reasons why it is sometimes necessary to use the Operation mechanism rather than GCD is that GCD cannot remove closures that have already started on a particular queue.

You can use the DispatchWorkItem class and its notify(queue:execute:) method, together with the DispatchQueue instance method async(execute workItem: DispatchWorkItem), to solve the problem posed at the very beginning of the post: downloading images from the network.

We form a synchronous task as workItem, an instance of the DispatchWorkItem class, which receives data from the "network" at the given address imageURL. We execute workItem asynchronously on the parallel global queue queue with quality of service qos: .utility using queue.async(execute: workItem). Using

workItem.notify(queue: DispatchQueue.main) { if let imageData = data { eiffelImage.image = UIImage(data: imageData) } }

we wait for the notification that loading into data has finished. Once this happens, we update the image of the UI element eiffelImage.

The code is in LoadImage.playground on Github.
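A sketch of the workItem and notify combination that runs outside an app. Assumptions: fetchImageData is a hypothetical stand-in for Data(contentsOf: imageURL) that does not touch the network, a private serial queue plays the role of DispatchQueue.main (a command-line script has no running main loop), and shownByteCount stands in for eiffelImage.image.

```swift
import Foundation

// Hypothetical stand-in for Data(contentsOf: imageURL); no real network access
func fetchImageData(from url: URL) -> Data? {
    return Data(url.absoluteString.utf8)
}

let imageURL = URL(string: "https://example.com/eiffel.jpg")!  // placeholder address
let queue = DispatchQueue.global(qos: .utility)
let uiQueue = DispatchQueue(label: "com.example.ui")            // plays the role of DispatchQueue.main
let updated = DispatchSemaphore(value: 0)

var data: Data?
var shownByteCount = 0

// The synchronous job, packed into a work item
let workItem = DispatchWorkItem {
    data = fetchImageData(from: imageURL)
}

// Run the job in the background...
queue.async(execute: workItem)

// ...and get notified on the "UI" queue once it has finished
workItem.notify(queue: uiQueue) {
    if let imageData = data {
        shownByteCount = imageData.count // in the app: eiffelImage.image = UIImage(data: imageData)
    }
    updated.signal()
}

updated.wait()
print(shownByteCount)
```

If the work item has already finished when notify is registered, the notification closure is still submitted.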

Pattern 1. Code options for downloading images from the network

We have two synchronous tasks:

- retrieving data from the network: let data = try? Data(contentsOf: imageURL)
- updating the user interface (UI) based on data: eiffelImage.image = UIImage(data: data)

This is a typical pattern implemented with the GCD multithreading mechanisms: you need to do some work on a background thread and then return the result to the main thread for display, because UIKit components can be used only from the main thread. This can be done either in the classical way:

or using the ready-made asynchronous URLSession API:

or using DispatchWorkItem:

Finally, we can always “wrap” our synchronous task in an asynchronous “shell” and execute it:

The code for this pattern is on LoadImage.playground on github .
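A sketch of such an asynchronous “shell” around a synchronous job: the work runs on a global queue and the result is delivered on a callback queue. runInBackground is a hypothetical helper name, the sum stands in for the slow download, and a private serial queue replaces DispatchQueue.main so that the sketch runs as a script.

```swift
import Foundation

// Hypothetical helper: runs a synchronous job on a global queue and delivers the
// result on `callbackQueue` (DispatchQueue.main in an iOS app)
func runInBackground<T>(qos: DispatchQoS.QoSClass = .userInitiated,
                        callbackQueue: DispatchQueue,
                        work: @escaping () -> T,
                        completion: @escaping (T) -> Void) {
    DispatchQueue.global(qos: qos).async {
        let result = work()        // the slow, synchronous part
        callbackQueue.async {
            completion(result)     // the UI part
        }
    }
}

let uiQueue = DispatchQueue(label: "com.example.ui") // plays the role of the main queue
let delivered = DispatchSemaphore(value: 0)
var received = 0

runInBackground(callbackQueue: uiQueue, work: { (1...100).reduce(0, +) }) { sum in
    received = sum
    delivered.signal()
}

delivered.wait()
print(received) // 5050
```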

Pattern 2. Features of downloading images from the network for Table View and Collection View using GCD

Consider as an example a very simple application consisting of only one Image Table View Controller, whose table cells contain only images downloaded from the Internet and an activity indicator that shows the loading process:

Here’s what the ImageTableViewController class that serves the Image Table View Controller screen fragment looks like:

and the ImageTableViewCell class for the table cell into which the image is loaded:

The image is loaded in the usual classical way. The model for the ImageTableViewController class is an array of 8 URLs:

- The Eiffel Tower

- Venice

- Scottish castle

- Cassini satellite (takes much longer to load from the network than the rest)

- The Eiffel Tower

- Venice

- Scottish castle

- Arctic

If we launch the application and scroll down quickly enough to see all 8 images, we will find that the Cassini satellite image does not finish loading before its cell leaves the screen. Obviously, it takes significantly longer to load than all the others.

But, having scrolled to the end and seen “Arctic” in the last cell, we suddenly find that after a very short time it is replaced by the Cassini satellite:

Such a simple application works incorrectly. What is the matter?

The fact is that table cells are reused thanks to the dequeueReusableCell method. Each time a cell (new or reused) appears on the screen, the image download from the network starts asynchronously (the “wheel” spins), and as soon as the download completes and the image is received, the UI of this cell is updated. But we do not wait for the image to load: we keep scrolling the table, and the cell (Cassini) leaves the screen without updating its UI. However, a new image must appear at the bottom, and the same cell that left the screen is reused, but for a different image (“Arctic”), which loads quickly and updates the UI. At that moment the Cassini download started in this cell returns and updates the screen, which is wrong. This happens because we launch different network operations on different threads, and they return at different times:

How can we remedy the situation? Within GCD we cannot cancel the image download of a cell that has left the screen, but when imageData arrives from the network, we can check the URL url that caused the download of this data and compare it with the imageURL that the user wants to have in this cell at the moment:

Now everything works correctly. Multithreaded programming thus requires some unconventional imagination, because some things in multithreaded code happen in a different order than the code is written. The GCDTableViewController application is on Github.
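A UIKit-free sketch of the URL check. ImageCell, didFinishLoading, displayedURL and the example URLs are hypothetical; the two calls at the end replay the problematic order of events, with the slow Cassini result arriving after the cell has been reused for “Arctic”.

```swift
import Foundation

// Hypothetical model of a reusable table cell (no UIKit needed)
final class ImageCell {
    // The image the user wants to see in this cell right now
    var imageURL: URL?
    // The URL whose data was actually put on screen (stands in for the cell's UIImage)
    private(set) var displayedURL: URL?

    // Called on the main queue when the download for `url` finishes
    func didFinishLoading(_ imageData: Data, from url: URL) {
        // A late answer for a URL this cell no longer shows is simply dropped
        guard url == imageURL else { return }
        displayedURL = url
    }
}

let cassini = URL(string: "https://example.com/cassini.jpg")!
let arctic = URL(string: "https://example.com/arctic.jpg")!

let cell = ImageCell()
cell.imageURL = cassini                          // slow Cassini download starts
cell.imageURL = arctic                           // the cell is reused before it finishes
cell.didFinishLoading(Data(), from: arctic)      // Arctic arrives first
cell.didFinishLoading(Data(), from: cassini)     // Cassini arrives late and is ignored
print(cell.displayedURL == arctic) // true
```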

Pattern 3. Using DispatchGroup groups

If you have several tasks that must be performed asynchronously and you need to wait until all of them complete, use a DispatchGroup, which is very easy to create: let imageGroup = DispatchGroup(). Suppose we need to load 4 different images “from the network”:

The method queue.async(group: imageGroup) allows you to add to the group any (synchronous) task executed on any queue queue:

We create the group imageGroup and, using the async(group: imageGroup) method, place two asynchronous image-loading tasks on the global parallel queue DispatchQueue.global() and two more on the global parallel queue DispatchQueue.global(qos: .userInitiated) with quality of service .userInitiated. It is important that tasks running on different queues can be added to the same group. When all the tasks in the group are completed, the notify function is called; it is a kind of callback block for the whole group, which places all the images on the screen at the same time.
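A sketch of this group, with a hypothetical loadImage function that sleeps briefly instead of downloading, image names instead of images, and a private serial queue instead of DispatchQueue.main for notify.

```swift
import Foundation

let imageGroup = DispatchGroup()
let imagesLock = NSLock()
var images: [String] = []

// Hypothetical synchronous "download" that just sleeps briefly
func loadImage(_ name: String) -> String {
    Thread.sleep(forTimeInterval: 0.05)
    return name
}

func store(_ image: String) {
    imagesLock.lock()
    images.append(image)
    imagesLock.unlock()
}

// Two tasks on the default global queue...
for name in ["eiffel", "venice"] {
    DispatchQueue.global().async(group: imageGroup) { store(loadImage(name)) }
}
// ...and two on the .userInitiated global queue, all in the same group
for name in ["scotland", "arctic"] {
    DispatchQueue.global(qos: .userInitiated).async(group: imageGroup) { store(loadImage(name)) }
}

// notify runs once, after every task in the group has finished
let uiQueue = DispatchQueue(label: "com.example.ui") // plays the role of DispatchQueue.main
let shown = DispatchSemaphore(value: 0)
imageGroup.notify(queue: uiQueue) {
    print("all images at once:", images.sorted())
    shown.signal()
}
shown.wait()
```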

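Adding tasks with async(group: imageGroup) works as long as each task is itself synchronous: the group considers a task finished as soon as its closure returns. The Playground code is in GroupSyncTasks.playground on Github. If the tasks are themselves asynchronous, you must tell the group explicitly when each of them starts and finishes, calling enter() before starting a task and leave() when it completes. The Playground code is in GroupAsyncTasks.playground on Github.

For comparison, here are the function asyncGroup(), which loads the images using a group, and the function asyncUsual(), which loads them without one: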

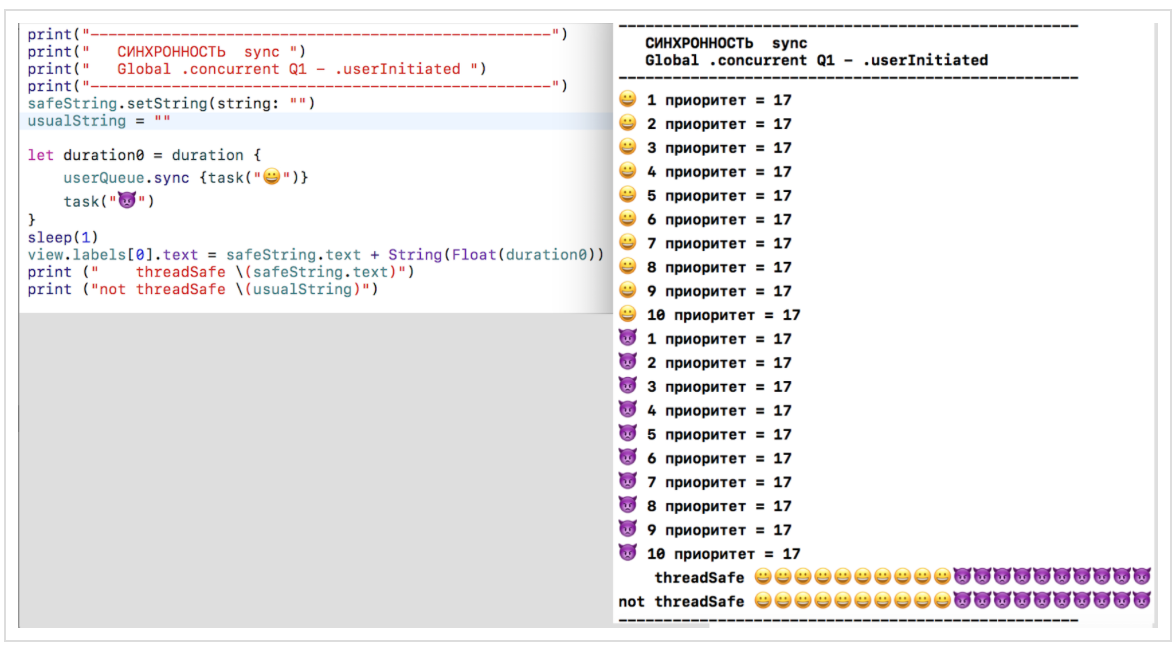
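A sketch of enter() and leave() for tasks that are themselves asynchronous. loadImageAsync is a hypothetical callback-based API simulated with asyncAfter; group.wait() is used so that the script can check the result.

```swift
import Foundation

// Hypothetical asynchronous API: returns at once and calls `completion` later
func loadImageAsync(_ name: String, completion: @escaping (String) -> Void) {
    DispatchQueue.global().asyncAfter(deadline: .now() + 0.05) {
        completion(name)
    }
}

let group = DispatchGroup()
let loadedLock = NSLock()
var loaded: [String] = []

for name in ["eiffel", "venice", "cassini"] {
    group.enter()                     // one enter() for every asynchronous task started
    loadImageAsync(name) { image in
        loadedLock.lock()
        loaded.append(image)
        loadedLock.unlock()
        group.leave()                 // the matching leave() once it really has finished
    }
}

group.wait() // in an app, group.notify(queue: .main) avoids blocking
print(loaded.sorted())
```

With async(group:) alone, the group would consider each task finished as soon as loadImageAsync returned, before any image had arrived.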

Pattern 4. Thread-safe (thread-safe) variables

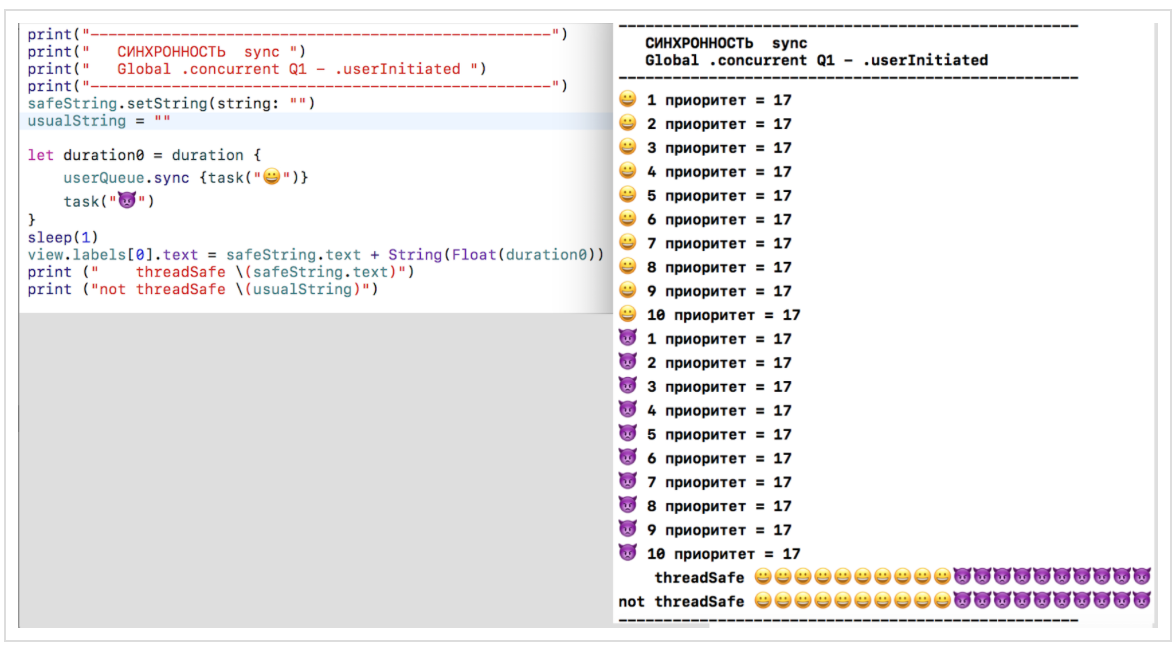

Let's go back to our first experiment with GCD queues in Swift 3 and try to preserve the chronological (vertical) sequence of tasks in a single line, thereby presenting to the user the results of tasks from different lines horizontally:

I should say that to store the results I used both an ordinary, non-thread-safe Swift 3 string usualString: String and a thread-safe string safeString: ThreadSafeString:

var safeString = ThreadSafeString("")
var usualString = ""

The purpose of this section is to show how a thread-safe string should be built in Swift 3, so more about that later. All experiments with the thread-safe string take place in the Playground GCDPlayground.playground on Github.

I will slightly change the tasks so that they accumulate information in both strings, usualString and safeString:

In Swift, any variable declared with the let keyword is a constant and therefore thread-safe. Declaring a variable with the var keyword makes it mutable and not thread-safe unless it is designed in a special way. If two threads start changing the same memory block at the same time, that memory block may be corrupted. In addition, if you read a variable on one thread while its value is being updated on another thread, you risk reading the “old value”, that is, there is a race condition.

Ideally, thread safety would mean that:

- reads happen synchronously and may run concurrently with each other
- writes are asynchronous and are the only task working with this variable at that moment

Fortunately, GCD provides an elegant way to achieve this using barriers (barrier) and isolation queues.

GCD barriers do one interesting thing: they wait until all closures submitted to the queue before them have finished before executing their own closure. As soon as the barrier starts executing its closure, it ensures that the queue does not perform any other closures during that time and essentially works as a synchronous function. As soon as the barrier closure ends, the queue returns to its normal operation. This guarantees that no write is carried out simultaneously with a read or another write.

Let's see what the thread-safe class ThreadSafeString looks like.
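A sketch of ThreadSafeString that follows the description in the next paragraphs: synchronous reads and asynchronous barrier writes on a concurrent isolation queue. The names isolationQueue and internalString come from the text; the queue label and the names addString, setString and text are hypothetical.

```swift
import Dispatch

final class ThreadSafeString {
    private var internalString = ""
    // Concurrent isolation queue: reads may run together, barrier writes run alone
    private let isolationQueue = DispatchQueue(label: "com.example.isolation", // hypothetical label
                                               attributes: .concurrent)

    init(_ string: String) {
        // Initialization is a write, so it goes through a barrier as well
        isolationQueue.async(flags: .barrier) {
            self.internalString = string
        }
    }

    // Writes: asynchronous, and exclusive thanks to the barrier
    func addString(_ string: String) {
        isolationQueue.async(flags: .barrier) {
            self.internalString += string
        }
    }

    func setString(_ string: String) {
        isolationQueue.async(flags: .barrier) {
            self.internalString = string
        }
    }

    // Read: synchronous, may overlap with other reads
    var text: String {
        var result = ""
        isolationQueue.sync {
            result = self.internalString
        }
        return result
    }
}

// 100 concurrent appends through the barrier never lose a character
let safeString = ThreadSafeString("")
DispatchQueue.concurrentPerform(iterations: 100) { _ in
    safeString.addString("x")
}
print(safeString.text.count) // 100
```

The final read is submitted after all the barrier writes, so it waits for them and sees all 100 characters.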

The function isolationQueue.sync sends the “read” closure {result = self.internalString} to our isolation queue isolationQueue and waits for it to finish before returning result. After that we have the result of the read. If you do not make the call synchronous, you need to introduce a callback block. Because isolationQueue is parallel (.concurrent), several such synchronous reads can run simultaneously.

The function isolationQueue.async(flags: .barrier) sends a “write”, “append” or “initialization” closure to the isolation queue isolationQueue. The async function means that control returns before the “write”, “append” or “initialization” closure is actually executed. The barrier part (flags: .barrier) means that the closure will not be executed until every closure previously placed in the queue has completed. Other closures are placed after the barrier one and executed after the barrier completes.

Now let's run experiments on various DispatchQueues with the thread-safe string safeString: ThreadSafeString and the ordinary string usualString: String. The code is in the Playground GCDPlayground.playground on Github.

1.

sync DispatchQueue.global(qos: .userInitiated) Playground :

-

usualString , - safeString .2.

async DispatchQueue.global(qos: .userInitiated) Playground :

-

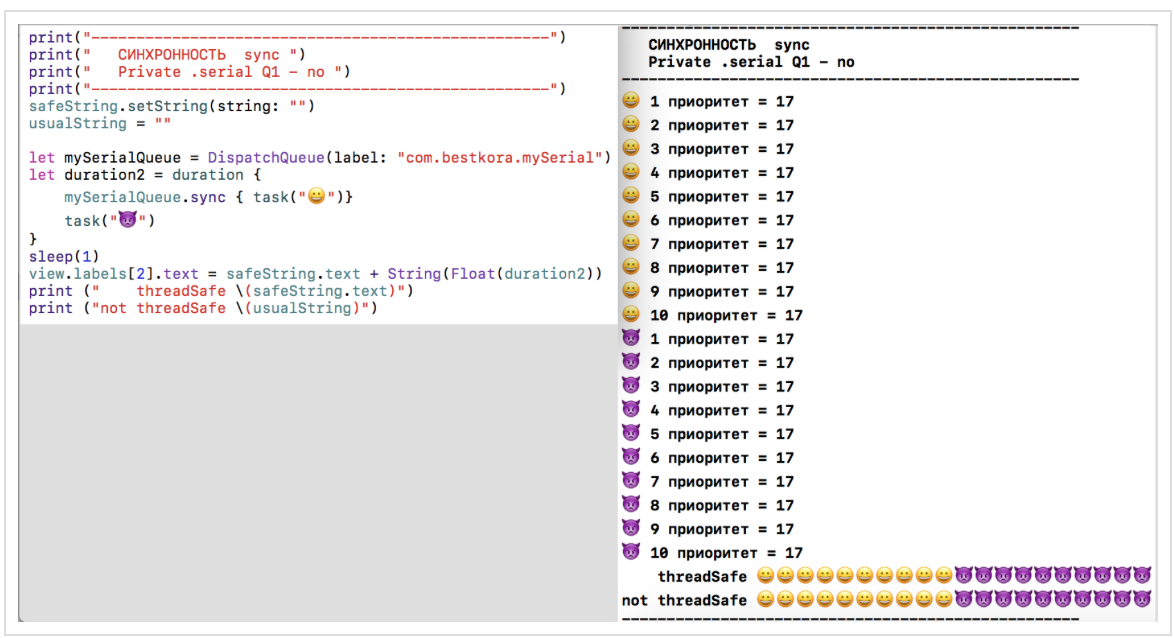

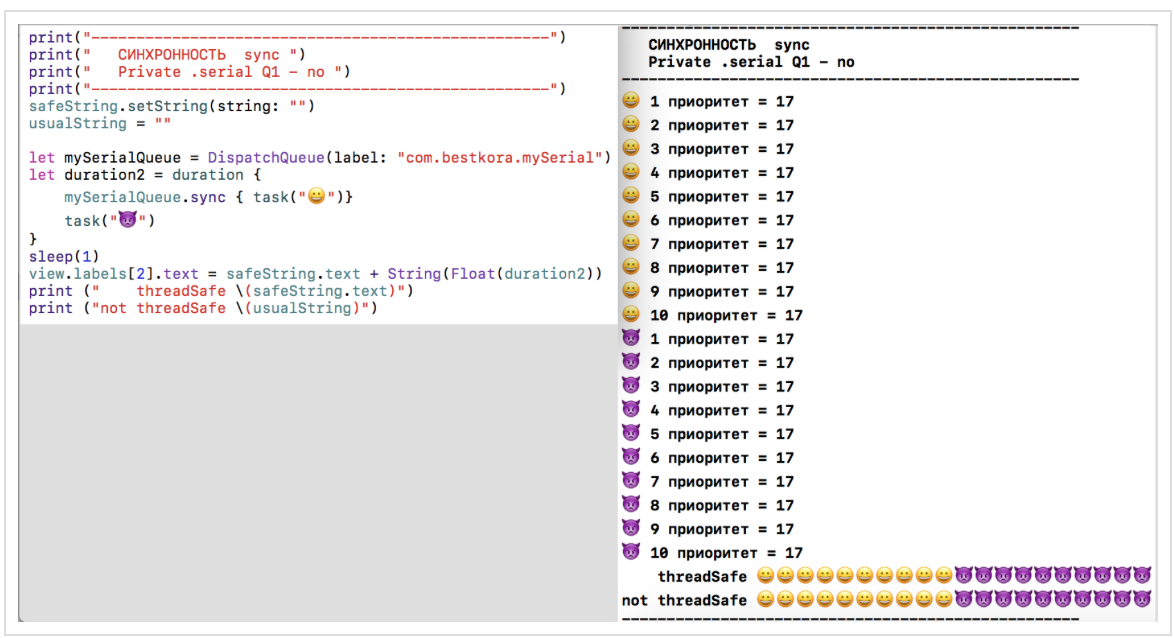

usualString , - safeString .3.

sync Private DispatchQueue (label: "com.bestkora.mySerial") Playground:

-

usualString , - safeString .4.

async Private DispatchQueue (label: "com.bestkora.mySerial") Playground :

-

usualString , - safeString .5.

asyncand

Private DispatchQueue (label: "com.bestkora.mySerial", qos : .userInitiated) :

-

usualString , - safeString .6.

asyncand

Private DispatchQueue (label: "com.bestkora.mySerial", qos : .userInitiated) DispatchQueue (label: "com.bestkora.mySerial", qos : .background) :

-

usualString , - safeString .7.

asyncand

Private DispatchQueue (label: "com.bestkora.mySerial", qos : .userInitiated, attributes: .concurrent) :

-

usualString , - safeString .8.

asyncand

Private qos : .userInitiated qos : .background :

-

usualString , - safeString .9.

asyncAfter (deadline: .now() + 0.0, qos: .userInteractive) c :

-

usualString , - safeString .10.

asyncAfter (deadline: .now() + 0.1, qos: .userInteractive) c :

-

usualString , - safeString ,and

.

,

and

, , (

.concurrent ) , usualString - safeString .-

safeString sync async, , « », :

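Besides the Playground, Xcode 8 offers the Thread Sanitizer, which detects race conditions. The Thread Sanitizer is enabled in the scheme (Scheme) settings, on the Diagnostics tab:

It detects the race condition. The Tsan project is on Github.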


This concludes our look at GCD in Swift 3. Operations in Swift 3 will be covered separately.

PS

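Although the GCD API has become much more convenient in Swift 3, Swift itself does not yet have language-level support for concurrency. Such support was being discussed for Swift 5 (2018), and a concurrency “manifesto” appeared in 2017.

With the GCD API, nested async calls easily turn into a “pyramid of doom”.

One of the approaches under discussion is actor models.

An actor is, roughly speaking, a DispatchQueue + the data that this queue protects + the messages (methods) that are executed on this queue.

The manifesto discusses actors, async/await, atomicity and memory models. Meanwhile, the Stanford iOS course in Swift, CS193p Winter 17 (iOS 10 and Swift 3), is available on iTunes.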


Source: https://habr.com/ru/post/320152/
