Application Insights. About analytics and other new tools

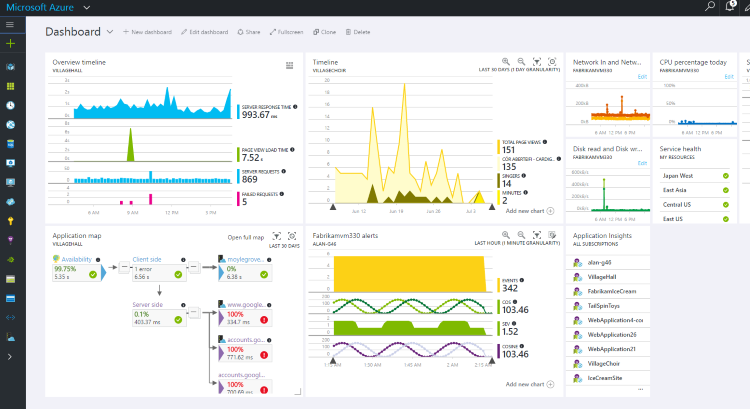

About a year ago I wrote a short article about using the preview of the Azure version of the Application Insights (AI) diagnostics and monitoring service. Since then, AI has gained many interesting additions, and just over a month ago it finally reached General Availability.

In this article I review AI again, taking the new additions into account, and share our experience of using it on real projects.

First, some context: I work at Kaspersky Lab, in a team that develops .NET services. We mainly host them on the Azure and Amazon cloud platforms. Our services handle a fairly high load from millions of users and must deliver high performance. It is important for us to maintain the good reputation of our services, so we have to react to problems very quickly and find bottlenecks that may affect performance. Such problems can be caused by anomalously high load or unusual user activity, by failures of infrastructure (for example, a database) or of external services, and, of course, by ordinary bugs in the service logic.

We have tried various diagnostic systems, but so far AI has proven to be the simplest and most flexible tool for collecting and analyzing telemetry.

AI is a cross-platform tool for collecting and visualizing diagnostic telemetry. If you have, for example, a .NET application, then to connect AI you only need to create an AI resource on the Microsoft Azure portal and then add the ApplicationInsights NuGet package to the application.

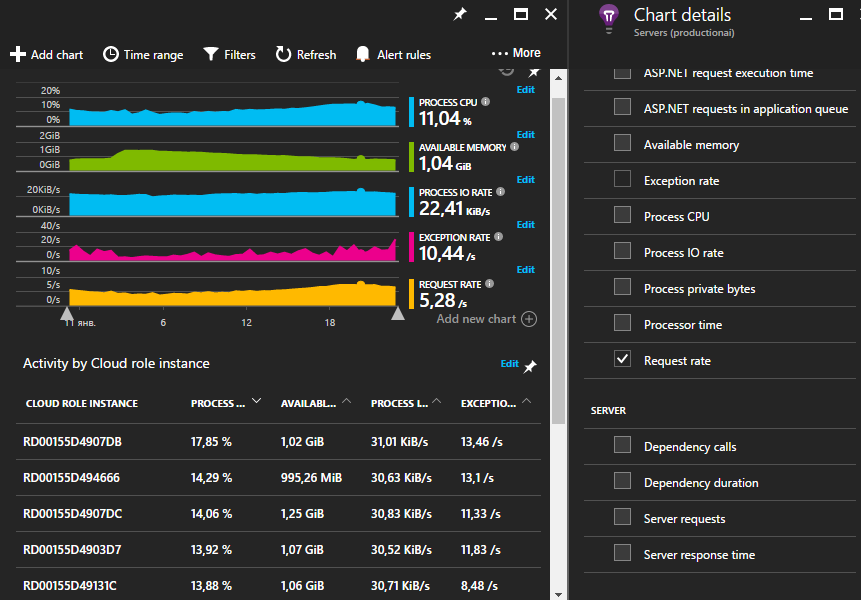

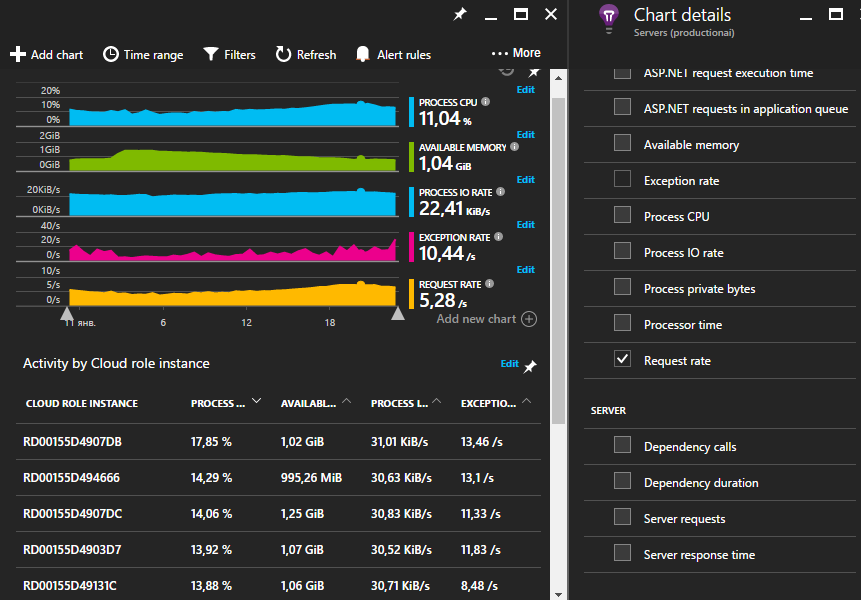

Literally out of the box, AI starts collecting the main performance counters (memory, processor) of the machine on which your application is running.

You can start collecting such server telemetry without modifying the application code: to do this, install a special diagnostic agent on the machine. The list of collected counters can be changed by editing the ApplicationInsights.config file.

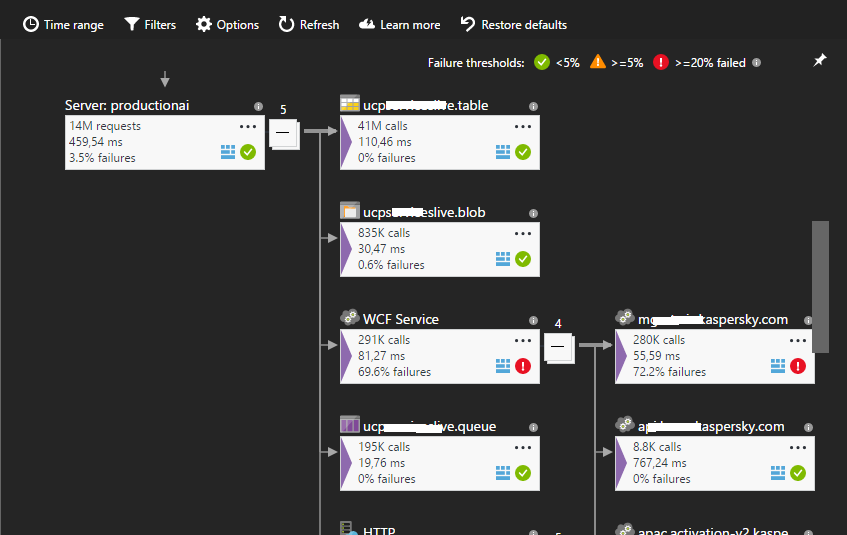

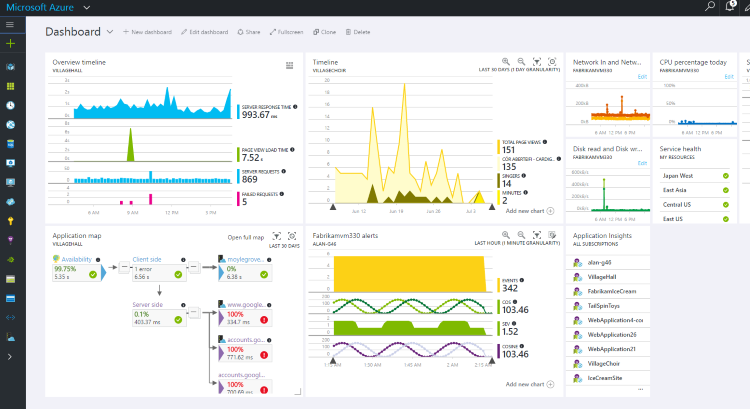

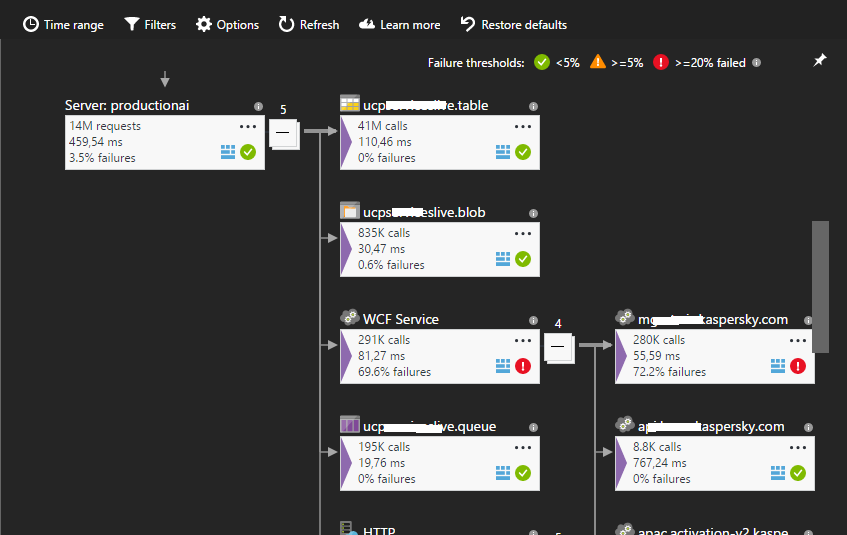

The next interesting feature is "dependency monitoring". AI keeps track of all outgoing external HTTP calls made by your application. External calls, or dependencies, are your application's calls to databases and other third-party services. If your application is a service hosted in IIS, AI intercepts telemetry for all requests to your services, including all the external requests they make (this works by forwarding additional diagnostic information through the CallContext flow). Thanks to this, you can find the request you are interested in and see all of its dependencies. Application Map lets you view a complete map of your application's external dependencies.

If there are obvious problems with external services in your system, this map can, in theory, give you some information about them.

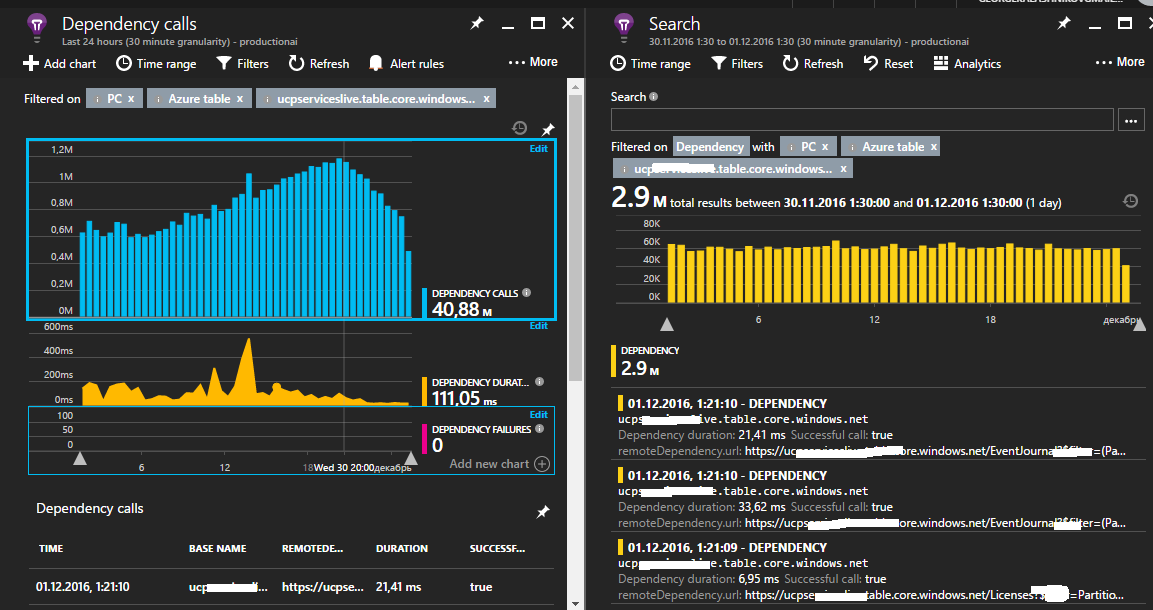

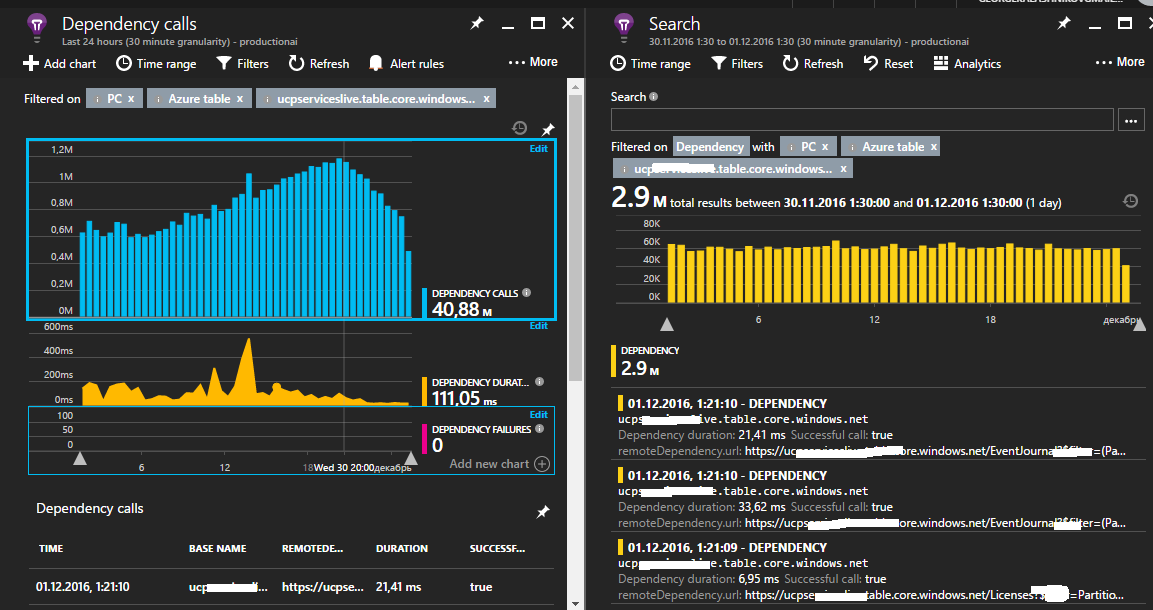

You can drill down to study individual external requests in more detail.

Regardless of the platform, you can add the extensible Application Insights API to your application, which lets you save arbitrary telemetry, such as logs or custom performance counters.

For example, we write aggregated information to AI for all the main methods of our services: the number of calls, the percentage of errors, and the time operations take to complete. In addition, we store information on critical areas of the application, such as the performance and availability of external services, the size of message queues (Azure Queue, ServiceBus), their processing throughput, and so on.
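The per-method aggregation described above could look roughly like the following. This is a minimal Python sketch, not the code we run and not the AI SDK: the `MethodStats` class, its field names, and the sample durations are all hypothetical and only illustrate folding many calls into one telemetry item per reporting interval.

```python
from dataclasses import dataclass


@dataclass
class MethodStats:
    """Running totals for one service method within one reporting interval."""
    calls: int = 0
    errors: int = 0
    total_ms: float = 0.0

    def record(self, duration_ms: float, ok: bool) -> None:
        """Fold a single call into the running totals."""
        self.calls += 1
        self.total_ms += duration_ms
        if not ok:
            self.errors += 1

    def snapshot(self) -> dict:
        """Return the aggregated values that would be sent as one telemetry item."""
        if self.calls == 0:
            return {"calls": 0, "errorPercent": 0.0, "avgDurationMs": 0.0}
        return {
            "calls": self.calls,
            "errorPercent": 100.0 * self.errors / self.calls,
            "avgDurationMs": self.total_ms / self.calls,
        }


# Hypothetical sample: four calls, one of which failed.
stats = MethodStats()
for duration_ms, ok in [(12.0, True), (30.0, False), (18.0, True), (20.0, True)]:
    stats.record(duration_ms, ok)

summary = stats.snapshot()
print(summary)
```

In a real service the snapshot would be handed to the telemetry client once per interval and the totals reset afterwards.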

Dependency monitoring, described above, is quite a powerful tool, but at the moment it can automatically intercept only outgoing HTTP requests, so we have to write telemetry ourselves for dependencies called over other transports. In our case these are Azure ServiceBus and RabbitMQ (RMQ), which use their own protocols.
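Recording such a dependency by hand comes down to timing the call and noting whether it succeeded. The Python sketch below shows one way to do that with a context manager. It is an illustration only: `track_dependency`, the `recorded` list that stands in for a telemetry sink, and the queue and topic names are hypothetical, not part of any AI API.

```python
import time
from contextlib import contextmanager

recorded = []  # hypothetical stand-in for the real telemetry sink


@contextmanager
def track_dependency(kind: str, target: str):
    """Time the wrapped call and record one dependency item, even on failure."""
    start = time.perf_counter()
    success = True
    try:
        yield
    except Exception:
        success = False
        raise  # the caller still sees the original error
    finally:
        recorded.append({
            "kind": kind,
            "target": target,
            "durationMs": (time.perf_counter() - start) * 1000.0,
            "success": success,
        })


# A successful call: the sleep is a placeholder for the real publish.
with track_dependency("RabbitMQ", "orders-queue"):
    time.sleep(0.01)

# A failing call: the item is still recorded, with success=False.
try:
    with track_dependency("ServiceBus", "billing-topic"):
        raise TimeoutError("broker did not respond")
except TimeoutError:
    pass

for item in recorded:
    print(item["kind"], item["target"], item["success"])
```

Recording in `finally` means failed calls are measured too, which is usually where the interesting data is.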

The telemetry that you collect does not need to have a flat structure (counterName-counterValue). It may contain a layered structure with different nesting. This is achieved by using a dynamic data type.

AI already let you write such complex telemetry a year ago, but it was practically impossible to use, because at that time graphs could be built only from simple metrics. The only way to use this telemetry was custom queries, which could only estimate how often something occurred. Now there is a very powerful tool for this: Analytics.

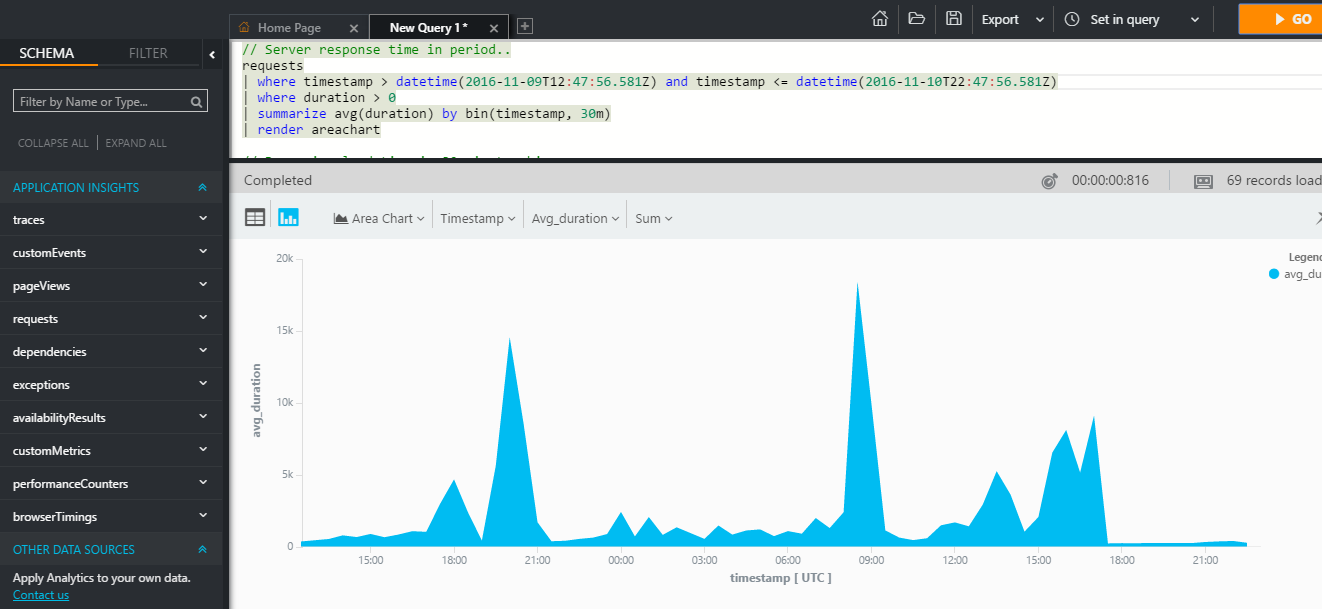

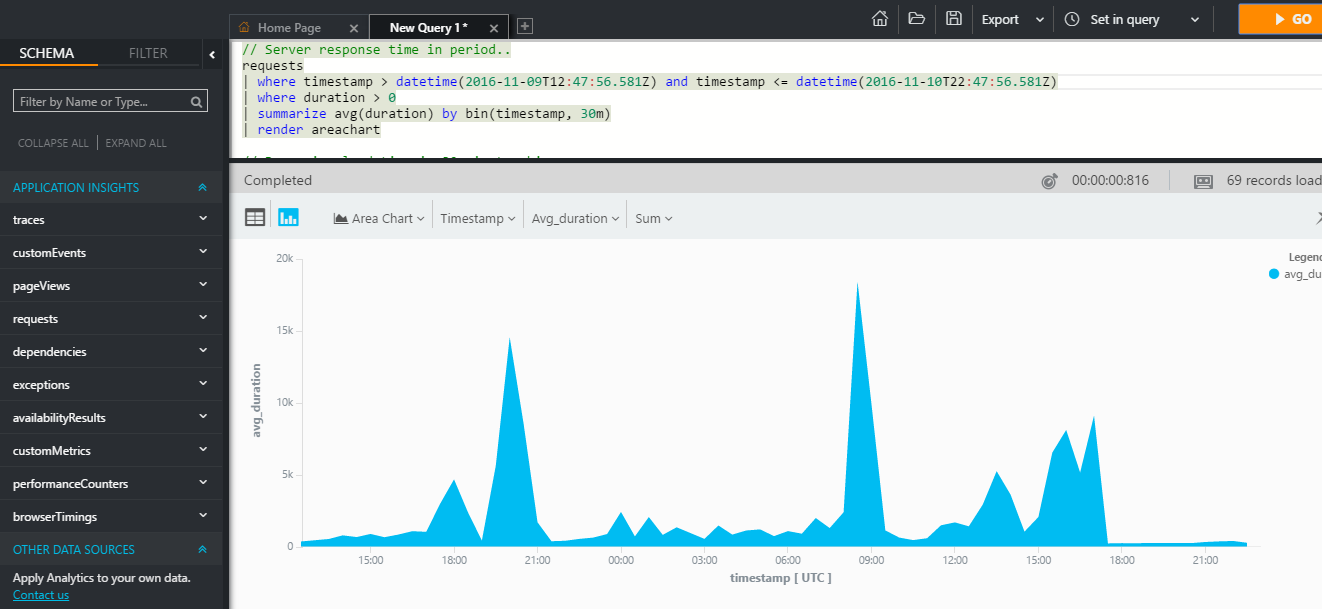

This tool allows you to write various queries to your telemetry, referring to the properties of arbitrary events using a special (SQL-like) language.

A simple query can, for example, display any 10 successful requests to your web application from the last 10 minutes.

The language has many ready-made operators for analysis (aggregate functions and joins, percentile and median calculations, report building, and so on) and for visualizing telemetry as graphs.

Such information can be used both for performance analysis and for business analytics. For example, if you are developing an online store, AI analytics can, out of the box, give you an idea of customer preferences depending on their geographic location.

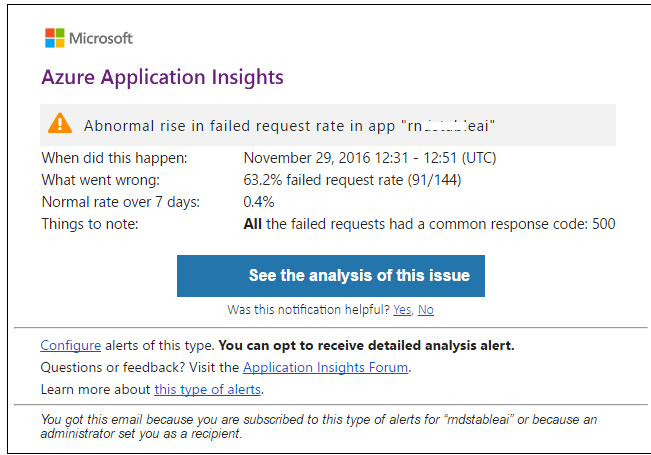

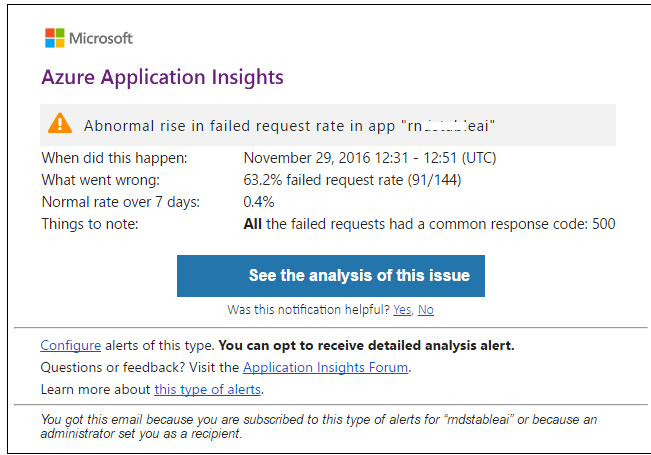

AI includes the Proactive Detection component, which uses machine learning algorithms to detect anomalies in the collected telemetry: for example, a sharp rise or drop in the number of requests to your services, or an increase in the number of errors or in the total duration of some operations.

You can also set up alerts for the telemetry counters you need.

AI keeps telemetry for 30 days. If that is not enough, you can use the Continuous Export feature, which exports telemetry to Azure Blobs. A good practice is to use Azure Stream Analytics to search the exported telemetry for the patterns you are interested in.
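To make "searching exported telemetry for patterns" concrete, here is a small local Python sketch. It is not Azure Stream Analytics and does not read real blobs. It assumes the exported data is text with one JSON object per line, and the event fields (`name`, `durationMs`, `success`) are a simplified, hypothetical shape rather than the actual export schema.

```python
import json

# Hypothetical contents of one exported blob: one JSON event per line.
exported_blob = "\n".join([
    '{"name": "GET /orders", "durationMs": 120.5, "success": true}',
    '{"name": "GET /orders", "durationMs": 2300.0, "success": true}',
    '{"name": "POST /pay", "durationMs": 90.0, "success": false}',
])


def find_matches(blob_text, predicate):
    """Parse each non-empty line as JSON and keep the events the predicate accepts."""
    events = (json.loads(line) for line in blob_text.splitlines() if line.strip())
    return [event for event in events if predicate(event)]


# Pattern of interest: failed requests or requests slower than one second.
problems = find_matches(
    exported_blob,
    lambda e: not e["success"] or e["durationMs"] > 1000,
)

for event in problems:
    print(event["name"], event["durationMs"], event["success"])
```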

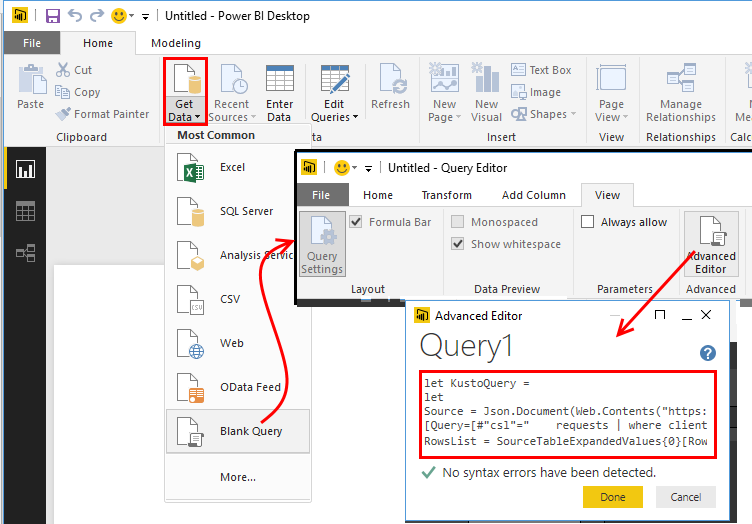

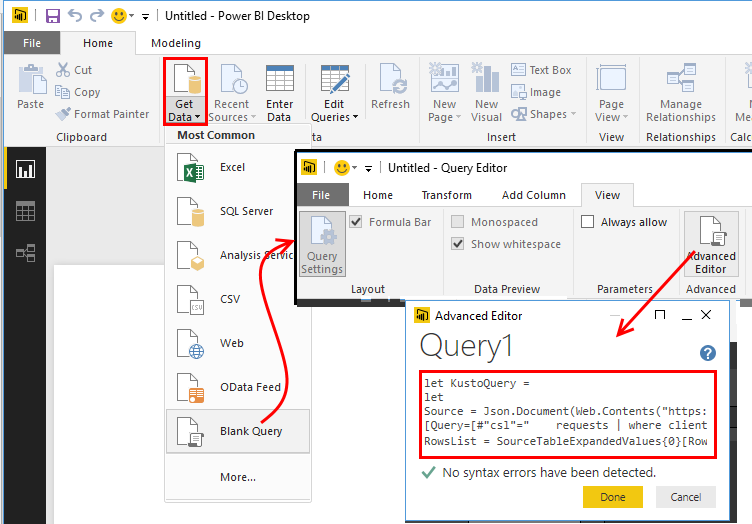

Power BI is a powerful tool for data visualization and analysis. You can connect a special Application Insights Power BI adapter to it and automatically forward some diagnostics to Power BI. To do this, simply build the required query in Analytics and click the export button. As a result, you get a small M script that serves as the AI data source in Power BI.

Sometimes it is convenient to observe the health of the system in real time, especially right after installing updates. The recently added Live Metrics Stream tool in AI makes this possible.

AI can also act as a monitoring service. You can import tests from Visual Studio that check the health of your application. Alternatively, directly from the AI portal you can create a set of checks that validate the availability of the endpoints you care about from geographically distributed locations. AI runs the checks on a schedule and plots the results on the corresponding chart.

There is also a special plugin that displays telemetry from the application being debugged right in Visual Studio.

These are not all of the tools. AI is also recommended for analyzing user telemetry.

Currently, AI is billed by the number of so-called data points; a million of them costs about 100 rubles. One data point corresponds to one diagnostic event. Since the AI client does not aggregate telemetry itself, it is advisable for the application to take care of aggregation.

Another reason to follow this recommendation is that AI throttling will not let you save more than 300 diagnostic events per second. With dependencies, things are more complicated.
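A quick back-of-the-envelope calculation shows why aggregation helps with the throttling limit. In the Python sketch below, only the 300 events per second figure comes from the text; the call rate, the number of distinct metrics, and the flush interval are hypothetical values chosen for illustration.

```python
THROTTLE_EVENTS_PER_SECOND = 300  # limit quoted above


def events_per_second(distinct_metrics: int, flush_interval_s: float) -> float:
    """Telemetry rate when each aggregated metric is sent once per flush interval."""
    return distinct_metrics / flush_interval_s


# Hypothetical service: 5,000 calls per second spread over 50 methods.
raw_rate = 5_000  # one event per call, no aggregation
aggregated_rate = events_per_second(distinct_metrics=50, flush_interval_s=60)

print(f"raw: {raw_rate}/s, over the limit: {raw_rate > THROTTLE_EVENTS_PER_SECOND}")
print(f"aggregated: {aggregated_rate:.2f}/s, "
      f"over the limit: {aggregated_rate > THROTTLE_EVENTS_PER_SECOND}")
```

With aggregation, the event rate depends on the number of metrics and the flush interval, not on the load.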

For example, our services call the database about 10 thousand times per second. AI saves the total request rate, but detailed information (duration, URL, return code, and so on) is saved for only a few hundred requests; the data for the rest is lost. Despite this, we still have enough data to localize the problems that arise.

Thanks to Analytics and the other new features, AI has already helped us identify several serious performance problems in our services.

We continue to follow the further development of this tool.

Example structure of a stored metric:

```json
{
  "metric": [ ],
  "context": {
    ...
    "custom": {
      "dimensions": [
        { "ProcessId": "4068" }
      ],
      "metrics": [
        {
          "dispatchRate": {
            "value": 0.001295,
            "count": 1.0,
            "min": 0.001295,
            "max": 0.001295,
            "stdDev": 0.0,
            "sampledValue": 0.001295,
            "sum": 0.001295
          }
        },
        {
          "durationMetric": {
            "name": "contoso.org",
            "type": "Aggregation",
            "value": 468.71603053650279,
            "count": 1.0,
            "min": 468.71603053650279,
            "max": 468.71603053650279,
            "stdDev": 0.0,
            "sampledValue": 468.71603053650279
          }
        }
      ]
    }
  }
}
```
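Nested telemetry like this is easy to navigate once parsed. The Python sketch below loads a trimmed copy of the structure above and merges the metric entries into one dictionary keyed by metric name. It assumes that `metrics` is a list of single-key objects, which is my reading of the example; `flatten_metrics` is a hypothetical helper.

```python
import json

# Trimmed copy of the example; the list-of-single-key-objects shape is an assumption.
record = json.loads("""
{
  "context": {
    "custom": {
      "dimensions": [{"ProcessId": "4068"}],
      "metrics": [
        {"dispatchRate": {"value": 0.001295, "count": 1.0}},
        {"durationMetric": {"name": "contoso.org", "type": "Aggregation",
                            "value": 468.71603053650279, "count": 1.0}}
      ]
    }
  }
}
""")


def flatten_metrics(rec):
    """Merge the list of single-key metric objects into one name -> fields dict."""
    merged = {}
    for item in rec["context"]["custom"]["metrics"]:
        merged.update(item)
    return merged


metrics = flatten_metrics(record)
duration = metrics["durationMetric"]["value"]

print(sorted(metrics))
print(metrics["durationMetric"]["name"], duration)
```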

For an example of the Analytics query syntax, here is a query that displays any 10 successful requests to your web application from the last 10 minutes:

```
requests
| where success == "True" and timestamp > ago(10m)
| take 10
```

Source: https://habr.com/ru/post/320058/
