Containers and virtualization: faster, more efficient, more reliable

Modern virtualization technologies all search for the same middle ground: the fastest possible operation of systems, efficient use of hardware, and security. Today we want to share our view of how virtualization has developed and where it is heading in the near future.

As you know, virtualization in its usual form (that is, running a full-fledged operating system inside another operating system) was not always as fast and efficient as it is now. The first hypervisors were relatively slow and caused a serious drop in performance compared with the same operating systems and applications running on "real hardware".

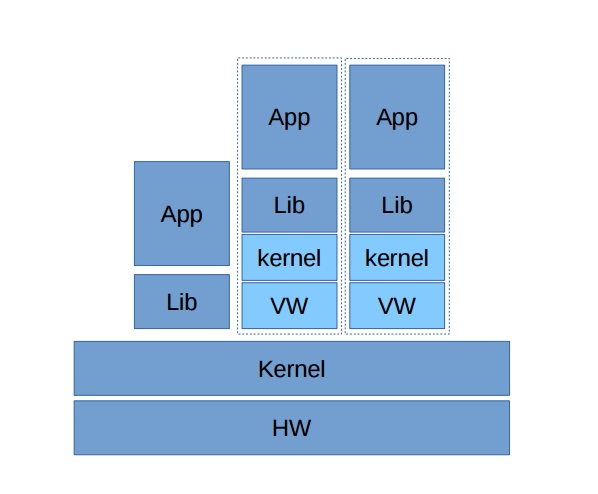

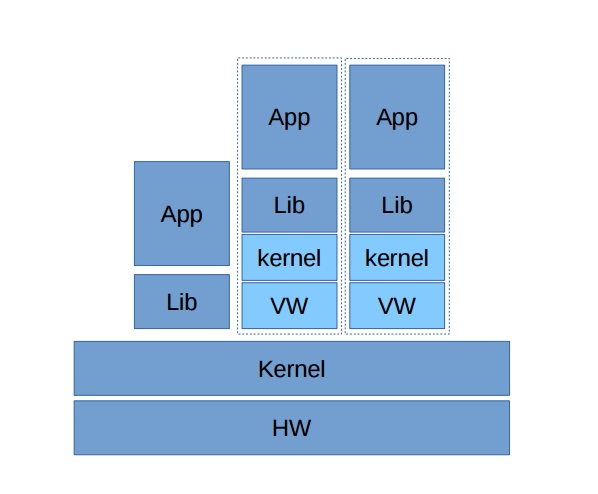

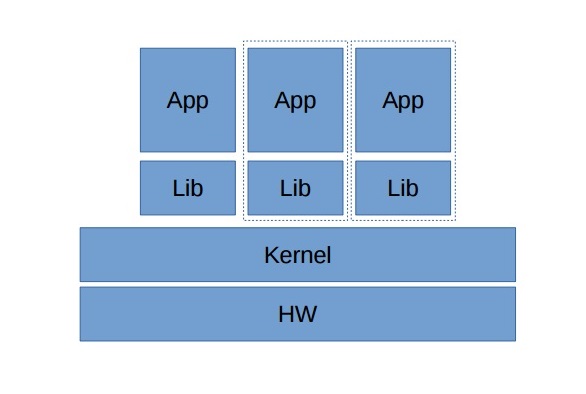

That is why, when our company was just starting work on the product now called Virtuozzo, we chose the direction now known as “OS containers” or “Light VMs”. What is the difference? Our light VMs did not, and still do not, require a separate kernel for each “entity” running on the server. Instead, we take one Linux kernel and have it share all resources among different users. Of course, this was not a stock Linux kernel but a modified version that could isolate users from one another, giving each of them access, for example, only to its own part of the process tree, to individual network adapters, and so on. All this was in great demand, as it worked ten times faster than full virtualization.

At the same time, each user effectively received an isolated Linux distribution of their own: when logging in via SSH, the user sees only the resources and processes allowed to them and cannot affect other users. This is exactly how the virtual hosting model based on OS containers (or “Light VMs”) took shape - maximum efficiency with minimal server load.
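The shared-kernel idea described above can be illustrated with a toy model. This is only a sketch under stated assumptions, not how Virtuozzo or the Linux kernel implements isolation: the `SharedKernel` class, its methods and the container names are all hypothetical. It shows one global process table from which every container can see and signal only its own entries.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SharedKernel:
    """Toy stand-in for one kernel serving many containers (hypothetical)."""

    # One global process table: pid -> (owning container, command).
    processes: dict[int, tuple[str, str]] = field(default_factory=dict)
    next_pid: int = 1

    def spawn(self, container: str, command: str) -> int:
        """Start a process on behalf of a container and return its pid."""
        pid = self.next_pid
        self.processes[pid] = (container, command)
        self.next_pid += 1
        return pid

    def visible(self, container: str) -> list[str]:
        """Return only the commands that belong to the asking container."""
        return [cmd for owner, cmd in self.processes.values() if owner == container]

    def kill(self, container: str, pid: int) -> None:
        """Remove a process; foreign or unknown pids are refused."""
        owner, _ = self.processes.get(pid, (None, None))
        if owner != container:
            raise PermissionError(f"{container} cannot signal pid {pid}")
        del self.processes[pid]


kernel = SharedKernel()
kernel.spawn("alice", "nginx")
bob_pid = kernel.spawn("bob", "mysqld")
alice_view = kernel.visible("alice")  # alice does not see bob's mysqld
```

There is a single kernel object and a single table, so no per-tenant kernel has to be booted, yet each tenant's view is filtered. That is the trade-off the article attributes to “Light VMs”.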

Progress is inevitable

A lot of time has passed since the first version of Virtuozzo was introduced back in 1999. Over that time virtualization technologies, both container-based and full, have changed considerably, and the main directions in the search for the Holy Grail of modern cloud-native workloads have been outlined.

Throughout this time, container virtualization was criticized mostly over security, while full virtualization was criticized for insufficient performance and low density of resource allocation.

Meanwhile, the tools that run full guest operating systems have made great strides, as VMware products in particular show. Because modern processors support a number of optimizations for virtual environments, the number of operations that have to be emulated has dropped significantly. As a result, the guest kernel increasingly talks to the hardware directly, and requests pass through the hypervisor only when necessary.

Why emulate the simplest instructions? Arithmetic or moving bytes, for example, can be executed by the processor directly. The same goes for the ret instruction (return from a procedure). Because there is no need to call into the hypervisor every time, virtual environments became faster. And processor manufacturers continue to add virtualization support to their products, making virtualization systems even more efficient.

Container technology has matured as well. For example, in our own Virtuozzo Linux we implemented ReadyKernel technology, which lets you install security updates without restarting services, and therefore at any time, including immediately after they are announced. Another example is encryption of container disks, which prevents data theft while a “Light VM” is inactive. So while full virtualization became faster, its alternative in the form of OS containers became more secure.
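The effect of executing simple instructions directly can be sketched with a small counting model. It is an illustration only: the `INTERCEPTED` set is an assumed, simplified choice of instructions that the hypervisor handles in this model, and real processors decide this through configurable controls rather than a fixed list.

```python
# Instructions that this toy model assumes the hypervisor must handle itself.
# The set is an illustrative assumption, not a statement about any real CPU.
INTERCEPTED = {"out", "hlt", "lgdt"}


def hypervisor_exits(instructions: list[str], hardware_assist: bool) -> int:
    """Count how many instructions end up being handled by the hypervisor."""
    if not hardware_assist:
        # Pure emulation: every instruction goes through the hypervisor.
        return len(instructions)
    # With hardware support, only the intercepted instructions leave the guest.
    return sum(1 for op in instructions if op in INTERCEPTED)


stream = ["mov", "add", "out", "ret", "hlt"]
emulated = hypervisor_exits(stream, hardware_assist=False)
assisted = hypervisor_exits(stream, hardware_assist=True)
```

In this stream, `mov`, `add` and `ret` run directly when hardware assistance is assumed, so only two of the five instructions reach the hypervisor instead of all five.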

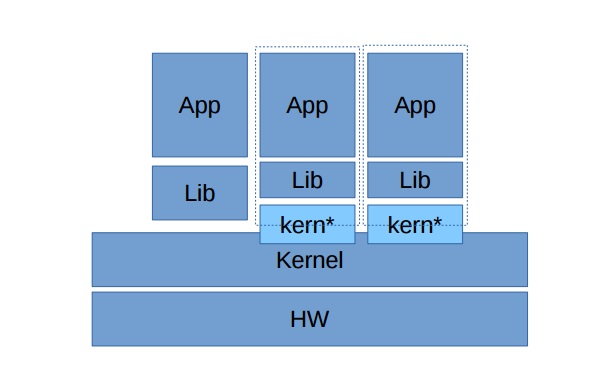

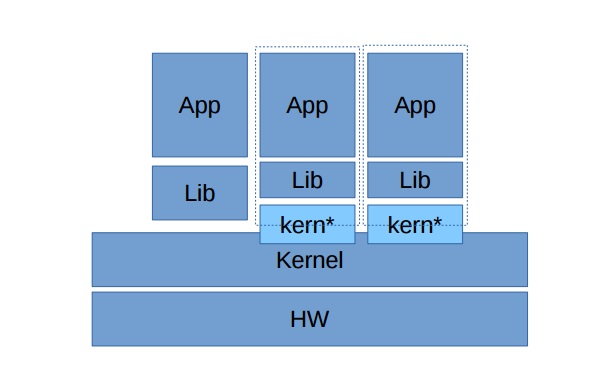

Paravirtualization

At the same time, operating system vendors themselves began to help virtualization along. Today both Linux and Windows are paravirtualized systems. This means that, when running as a guest, the OS "knowingly" addresses the hypervisor rather than real hardware. The guest can therefore hand a whole series of privileged operations to the hypervisor in one go, saving the time that used to be spent emulating them. Memory management and I/O in particular have become much better and faster, because the “virtual hardware” layer has effectively been removed from the system.
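The benefit of handing over a series of privileged operations at once can be shown with a toy comparison. Everything here is hypothetical: `ToyHypervisor`, `unmodified_guest` and `paravirtual_guest` are invented names, and a "call" stands for one transition into the hypervisor rather than any real hypercall interface.

```python
class ToyHypervisor:
    """Hypothetical hypervisor that counts how often the guest calls into it."""

    def __init__(self) -> None:
        self.calls = 0
        self.page_table: dict[int, int] = {}

    def hypercall(self, updates: list[tuple[int, int]]) -> None:
        """Apply a list of (virtual page, physical page) mappings in one call."""
        self.calls += 1
        for virtual_page, physical_page in updates:
            self.page_table[virtual_page] = physical_page


def unmodified_guest(hv: ToyHypervisor, updates: list[tuple[int, int]]) -> None:
    # Each privileged update is caught and handled separately.
    for update in updates:
        hv.hypercall([update])


def paravirtual_guest(hv: ToyHypervisor, updates: list[tuple[int, int]]) -> None:
    # A hypervisor-aware guest submits the whole series at once.
    hv.hypercall(list(updates))


mappings = [(0, 100), (1, 101), (2, 102)]
slow, fast = ToyHypervisor(), ToyHypervisor()
unmodified_guest(slow, mappings)
paravirtual_guest(fast, mappings)
```

Both hypervisors end up with the same page table, but the unmodified guest needed three transitions and the paravirtual one needed a single transition. The real cost of a transition depends on the hardware and is not modeled here.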

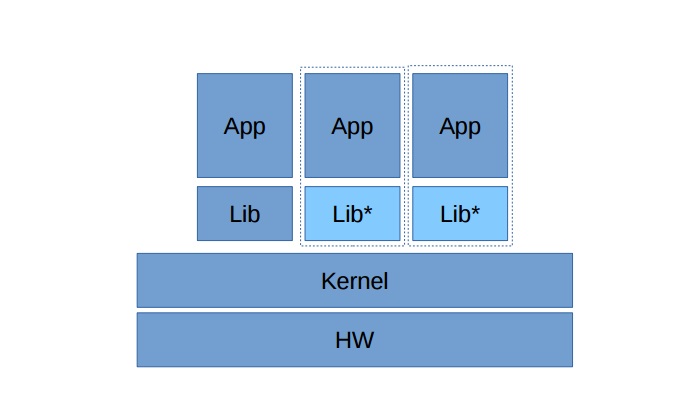

Proxying

Another interesting technology that is only now gaining momentum, and that can give a big boost to the development of “Light VMs”, is proxying. The first company to announce such a solution was Apporeto. Its developers analyzed a vulnerability database and concluded that vulnerabilities in file systems and the network stack are found only 1-2 times a year, while in the interface part they turn up much more often. They therefore proposed that all clients share the same layer for working with the network, files and other "deep" OS services, while everything related to interfaces is moved into the guest system. Thus, even if an attacker manages to exploit a fresh vulnerability, they cannot get beyond the specific "Light VM". At the moment the approach requires a special Linux distribution, but given how promising it is, we expect to see this principle implemented in most standard distributions soon.

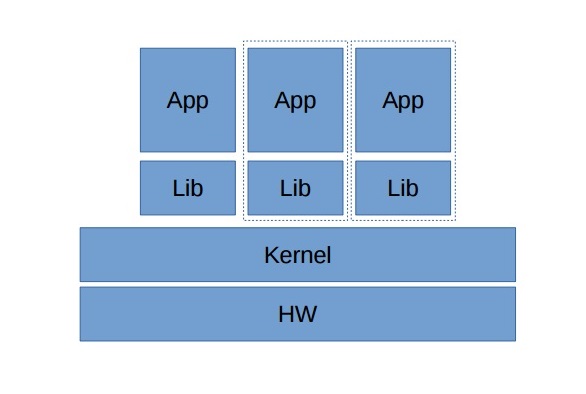

With an eye to the application

However, the victorious march of Docker has revealed another very interesting trend: many people want to run ready-made microservices, that is, separate applications, and building a whole virtual machine for this does not seem to make sense. In this case, you can let the owner choose which functions are available to the application. This approach became very popular in the absence of other ready-made solutions, but it only suits applications that do not need many functions. Otherwise something has to be sacrificed, which is not an attractive option.
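Choosing which functions an application may use amounts to an allowlist. The sketch below is a generic illustration with hypothetical names (`RestrictedApp`, `request`, and the function labels); it does not reproduce Docker's actual mechanisms.

```python
class RestrictedApp:
    """Hypothetical application wrapper that permits only listed functions."""

    def __init__(self, name: str, allowed: set[str]) -> None:
        self.name = name
        self.allowed = frozenset(allowed)

    def request(self, function: str) -> str:
        """Grant the request if the owner allowed it, otherwise refuse."""
        if function not in self.allowed:
            raise PermissionError(f"{self.name} may not use {function}")
        return f"{self.name}: {function} granted"


# The owner decides up front what this microservice is allowed to do.
web = RestrictedApp("web", allowed={"network", "read_files"})
granted = web.request("network")
```

An application that later needs something outside its list, for example `web.request("mount")`, is refused. This is the sacrifice the paragraph above refers to.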

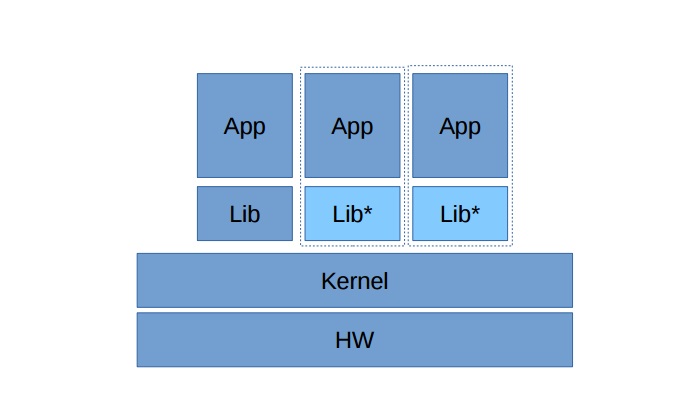

Instead, it became possible to launch an application in a hypervisor without a conventional kernel, supplying it only with the libraries it needs. Such solutions are called unikernels. They include modified system libraries that are aware from the start that they run under a hypervisor. For the hypervisor, a guest OS is just a single process. So what is the difference? Why not launch our application as that process?

The only difference is that the application and its libraries are built as a single "monolith" that contains just the modules it needs. Today there are enough automated tools to assemble such bundles and run them directly on the hypervisor, providing both strong isolation of processes from one another and high performance.

Prospects

To sum up, we are confident that virtualization technologies will keep developing in both directions, each specializing further to solve different problems. Ever deeper paravirtualization and new proxying methods will give guest operating systems in the hypervisor higher performance and better resource efficiency, while additional protection will make “Light VMs” even more attractive for hosting providers and corporate environments. Thus, in the near future, a full-fledged virtualization ecosystem will have to support full guest VMs, light VMs, and ready-made applications in the form of unikernels or Docker containers.

Source: https://habr.com/ru/post/319998/