Trucks and refrigerators in the cloud

In this article, we share the story of creating a basic gateway prototype and migrating it to the Microsoft Azure cloud platform using the Software as a Service (SaaS) model.

In order to make it clear what will be discussed below - a little help:

The AutoGRAPH system is a classic client-server application with data access and analytics capabilities. Its current architecture makes it costly to scale. That is why the key idea for collaboration was an attempt to rethink the architecture, bringing it to the SaaS model to solve scalability problems and create innovative supply for the market.

')

Before the start of the project, it was obvious that carrying out technological interaction in the Internet of Things paradigm can be a very difficult task, as it includes, in addition to integrating Azure services into already existing software, issues related to obtaining data from the equipment installed on the transport satellite AutoGRAPH transport monitoring (hereinafter referred to as the “SMT terminals”). And only appropriate training greatly simplifies the task. Therefore, preparation began about a month before the event itself. As a result, several key questions were discussed, which are listed below with the answers to them.

Has the system been commissioned? What condition do devices have, what can they do and what can't they do?

Today the system works for several years. Since it was intended for use with vehicles from different manufacturers / partners, there are differences in equipment. Almost all devices are similar only in one thing - changing the firmware even on a small number of them is not possible because of their work in a productive environment. For this reason, the use of the Field Gateway model (placing or installing a gateway close to devices) is impossible.

What protocol do devices use to communicate with the server?

Binary protocol proprietary company TehnoKom.

Is it possible to create a new version of the server part without disturbing the processes taking place and redirect them there from the old version?

Difficult, but real. But since such an approach requires serious and long-term testing and development, it was decided to exclude it from this iteration.

Do I need to control devices remotely?

Perhaps in the future, but not now.

In the planning process, it was decided to expand the list of tasks in connection with the addition of components to the system for processing the collected data. Accordingly, the current monolithic architecture should have been broken into modules. An incoming stream of data from AutoGRAPH was organized, so questions regarding device emulation were closed.

For hosting a gateway that listens on a TCP port and sends data to the data processing subsystem, the Microsoft Azure Cloud Services cloud service was chosen.

In order to implement the prototype, the team had 5 hours and 3 developers for 6 tasks:

Initially we decided to focus on the question of how to break a monolithic application into components.

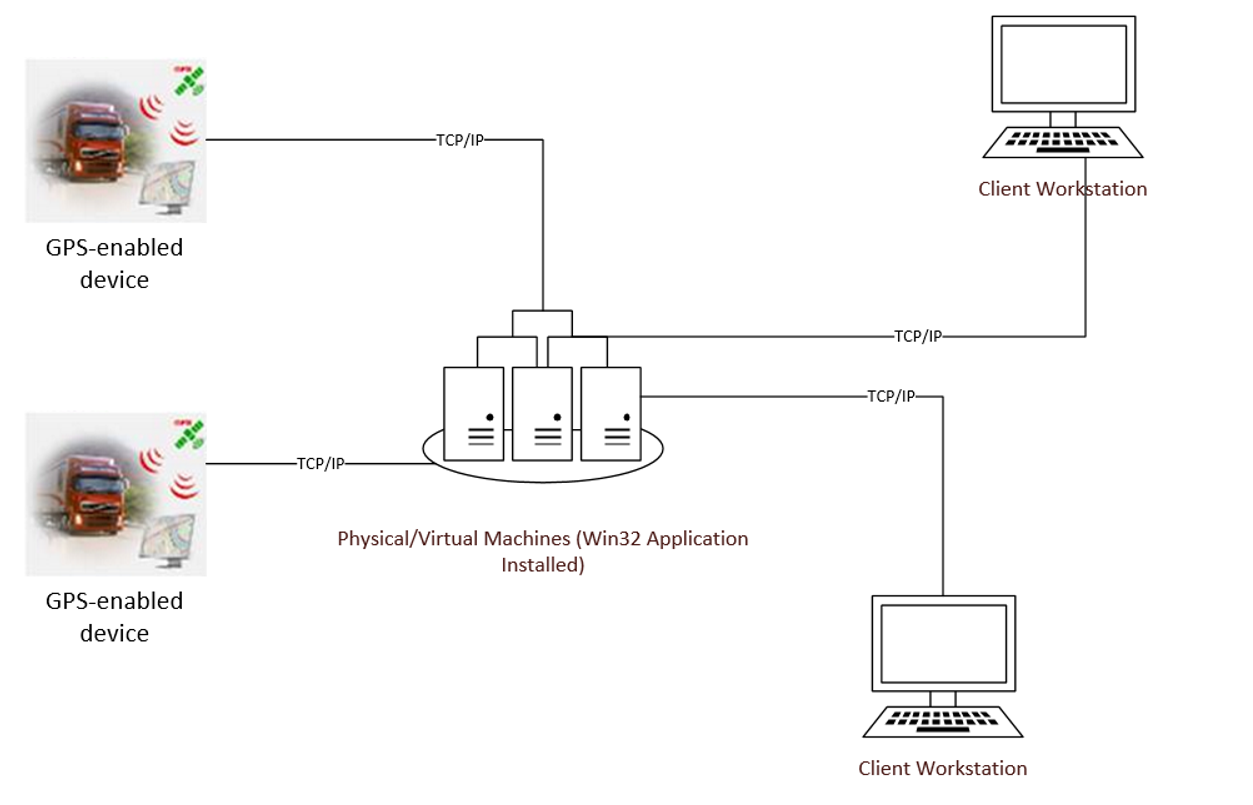

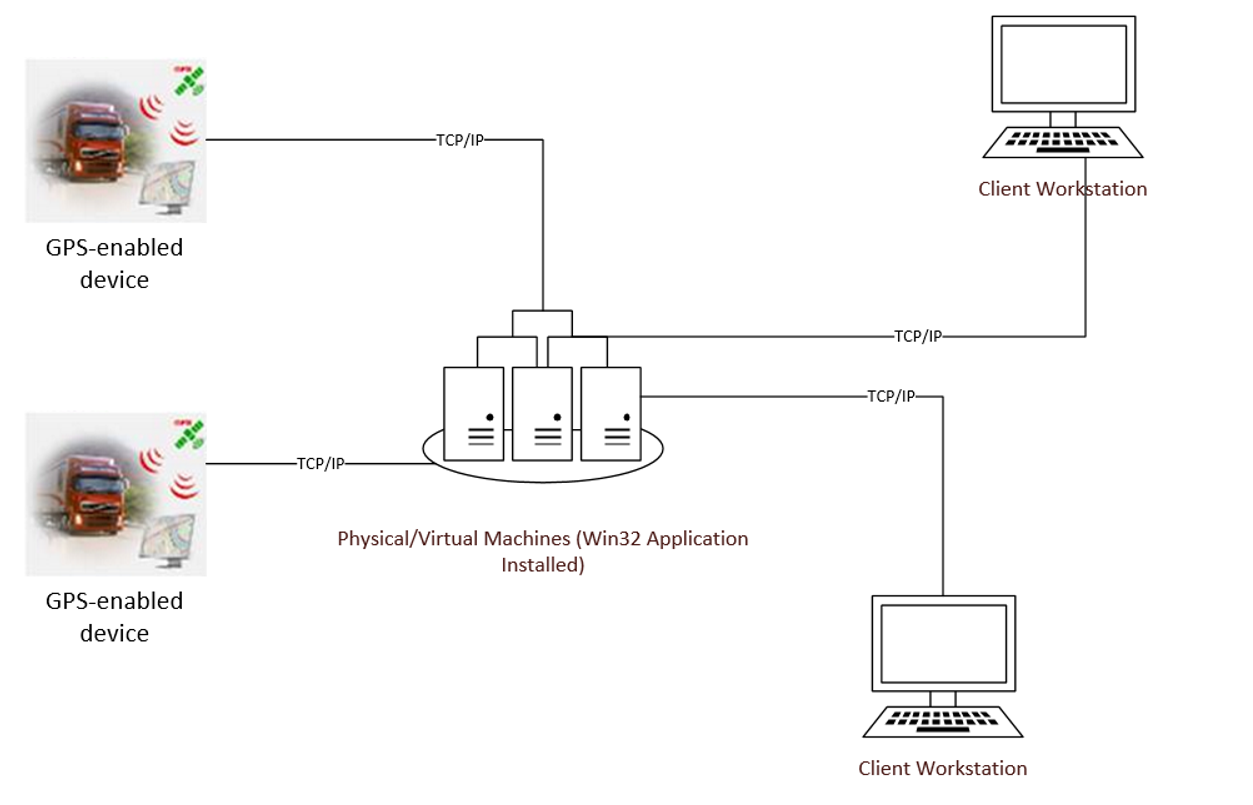

The old architecture was a classic two-tier client-server architecture, a monolithic application written in C ++ and running in the Windows Server environment. His job was to receive data packets from a monitoring system installed on devices, save these packets to local storage and be a front-end for data access for external users. Inside the application there were several modules: network, data storage, data decryption and work with the database.

Data between the server and devices was transmitted via a proprietary binary TCP protocol. To establish a connection, the client sends a handshake-packet, the server had to respond to it with a confirmation packet.

In its work, the application used a set of Win32API system calls. Data is stored in binary form as files.

The problem of scaling this lies in the limited means of implementation: increasing server resources / adding new ones and setting up a load balancer and performing other tasks.

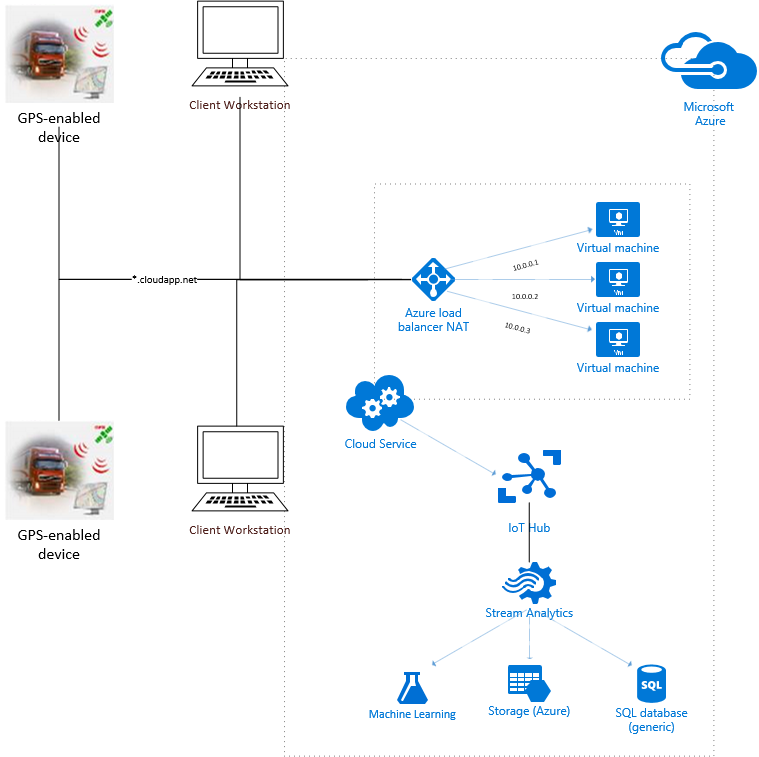

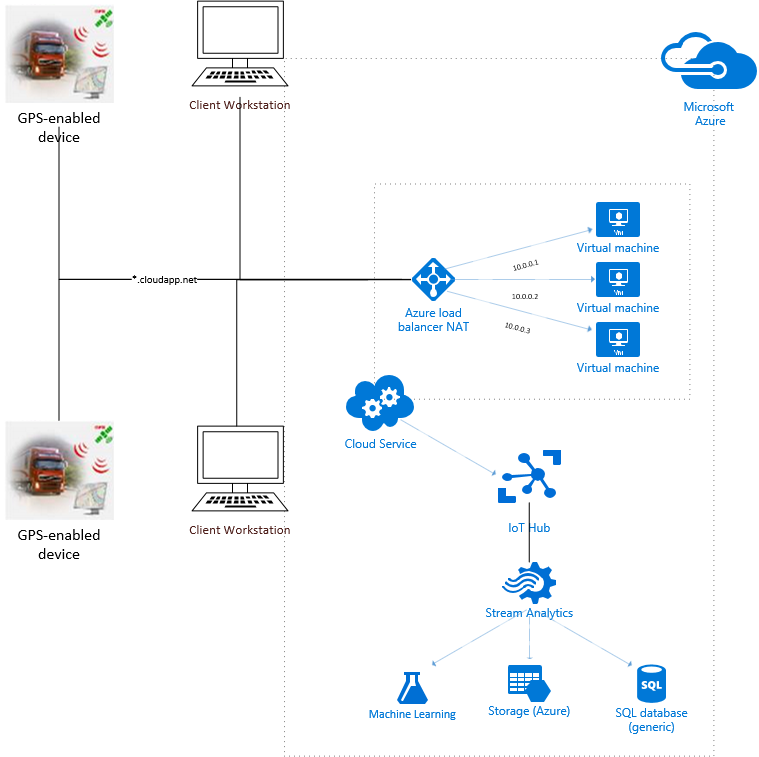

After the application of the new architecture, the application lost its relevance, since in fact it was a gateway and performed several office tasks (for example, to save data without changing it). In the new architecture, all this was replaced by Azure services (the developers evaluated which option is better to choose: Azure IoT Gateway or a self-written gateway; and chose the second option with hosting in Azure Cloud Services).

The principles of operation of the new system are similar to the principles of the old, but provide additional opportunities for scaling (including automatic) and remove infrastructure tasks, for example, to configure the load balancer. Several instances (instances) of the Cloud Service with the gateway listen on a special port, connect devices and transfer data to the processing subsystem.

It is important to start with small steps and not implement everything at once in the cloud. The cloud has its advantages and features that may complicate prototyping (for example, opening ports, debugging a remote project, deployment, and so on). Therefore, in this case, the first stage of development was to create a local version of the gateway:

In the process of creating a prototype, a problem arose - the developers worked in the Microsoft office, where the network security perimeter has a certain number of different kinds of policies, which could lead to the complication of prototyping the local gateway. Since there was no opportunity to change policies, the team used ngrok , which allows you to create a secure tunnel on localhost.

During development, there were several other issues that were decided to work out deeper - writing to the local storage, getting the address and ports of the instance on which the gateway works. Since many variables in the cloud change dynamically, it was important to resolve these issues before proceeding to the next stage.

Of course, compared to local storage of data on the instance, Azure has better ways to store information, but the old architecture meant local storage, so it was necessary to check whether the system can work in this mode. By default, everything that works in the Cloud Service does not have access to the file system - that is, it is not possible to simply capture and write information to c: / temp. Since this is PaaS, you need to set up a special space called Local Storage in the cloud service configuration and set the cleanOnRoleRecycle flag, which with a certain degree of confidence ensures that information from the local storage will not be deleted when the role is restarted. Below is the code that was used to solve this problem.

After testing, it turned out that the data remains in the repository, so it can be a good way to store temporary data.

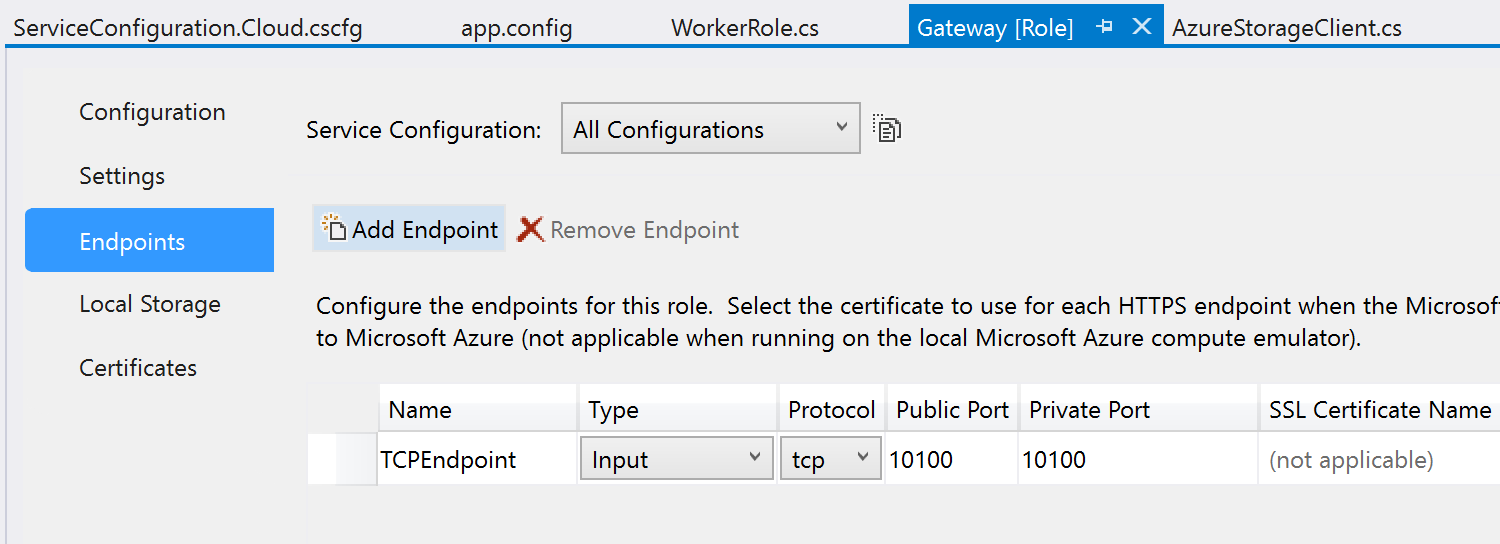

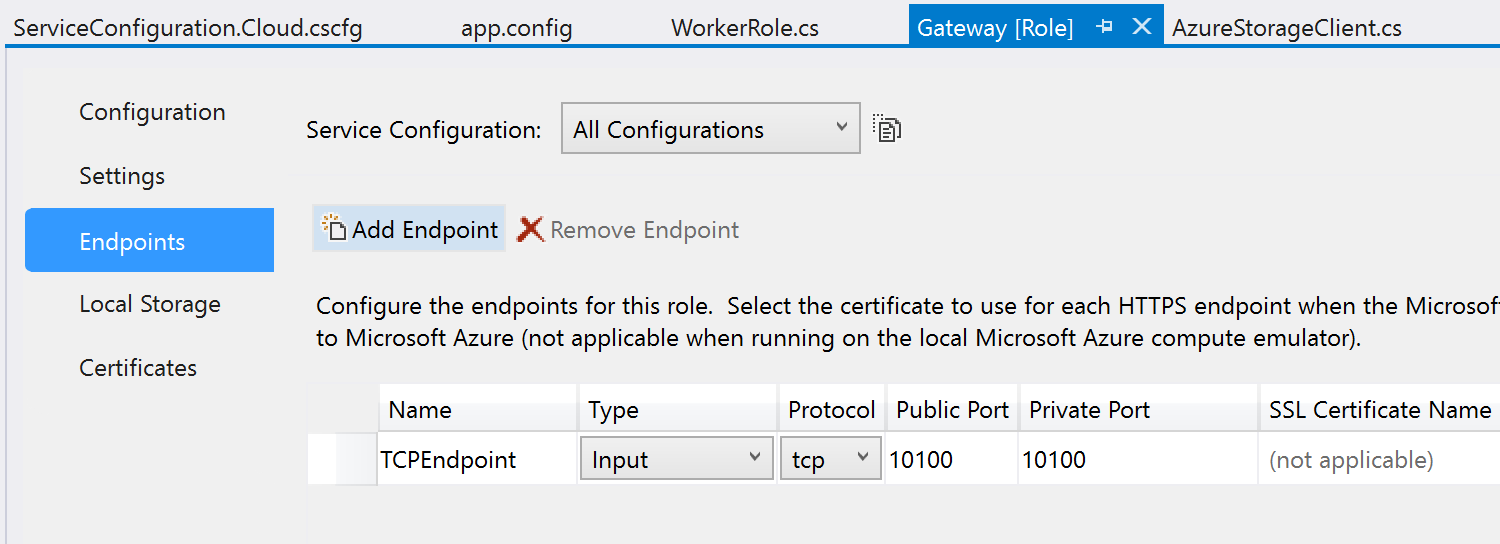

In order to get data at runtime, you must define the Endpoint inside the configuration of the cloud service and access it in code.

Now the automatically configured load balancer will manage requests between instances, the developer can scale the number of these instances, with each instance will receive the necessary data in runtime.

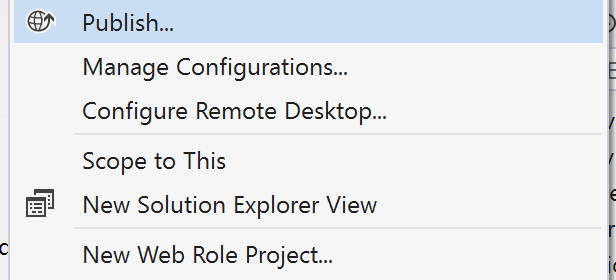

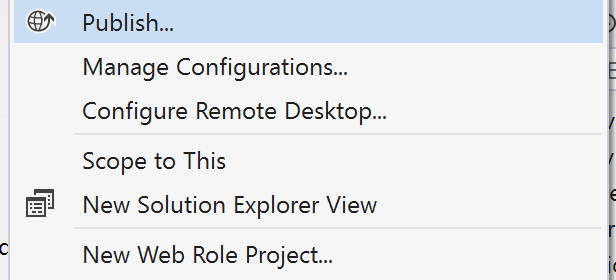

So, the local gateway was launched and tested on Cloud Services (which can be emulated locally), now it is the turn to deploy the project to the cloud. The Visual Studio Toolkit allows you to do this in a few clicks.

The issue of further data transfer from the gateway instance was successfully closed using official code samples.

When planning, the team was worried that one day would not be enough to develop a working prototype of the system, especially in the presence of various environments, devices and requirements (outdated devices, protocols, monolithic C ++ application). Something really did not have time to do, but the basic prototype was created and worked.

Using the PaaS model for this kind of task is a great way:

In just a few hours, it was possible to create a basis for developing an end-to-end solution in the IoT paradigm using real data. Future plans include using machine learning to take greater advantage of the data, migrate to a new protocol, and test the solution on the Azure Service Fabric platform.

Thank you for participating in the creation of the material by Alexander Belotserkovsky and the team of Quart Technology.

To give some context for what follows, here is a brief introduction to the participants:

- Quarta Technologies supplies core IT infrastructure solutions and is a leader on the Russian IT market in comprehensive information and technical support for embedded systems based on Microsoft Windows Embedded.

- TechnoCom develops and manufactures GLONASS/GPS satellite monitoring systems for vehicles and personnel, fuel sensors, and software for companies in all sectors of transport, industry, and agriculture. The company's best-known product is the AutoGRAPH series of navigation terminals.

- iQFreeze is a solution from Quarta Technologies that collects, processes, and transmits information about the status of cargo and vehicles in real time.

Creating a basic gateway prototype

The AutoGRAPH system is a classic client-server application with data access and analytics capabilities. Its current architecture makes it costly to scale. That is why the key idea behind the collaboration was to rethink the architecture and move it to a SaaS model, solving the scalability problems and creating an innovative offering for the market.

Before the project started, it was clear that technical integration in the Internet of Things paradigm could be a very difficult task: besides integrating Azure services into existing software, it involves obtaining data from the equipment of the AutoGRAPH satellite vehicle monitoring system installed on the vehicles (hereinafter, the “SMT terminals”). Only proper preparation makes such a task much simpler, so preparation began about a month before the event itself. As a result, several key questions were discussed; they are listed below together with their answers.

Has the system been commissioned? What condition are the devices in, and what can and can't they do?

The system has been in operation for several years. Since it was intended for use with vehicles from different manufacturers and partners, the equipment varies. Almost all devices share only one trait: their firmware cannot be changed, even on a small number of them, because they operate in a production environment. For this reason, the Field Gateway model (placing a gateway close to the devices) cannot be used.

What protocol do the devices use to communicate with the server?

A proprietary binary protocol developed by TechnoCom.

Is it possible to create a new version of the server side without disrupting running processes and then redirect traffic to it from the old version?

Difficult, but feasible. However, since such an approach requires serious, long-term development and testing, it was excluded from this iteration.

Do the devices need to be controlled remotely?

Perhaps in the future, but not now.

During planning, it was decided to expand the scope to include adding components for processing the collected data. Accordingly, the monolithic architecture had to be broken into modules. A stream of real data from AutoGRAPH was set up, so there was no need to emulate devices.

Microsoft Azure Cloud Services was chosen to host the gateway, which listens on a TCP port and sends data to the data processing subsystem.

To implement the prototype, the team had 3 developers and 5 hours for 6 tasks:

- Evaluate the current architecture and rethink it using PaaS.

- Implement a test gateway as a console application.

- Evaluate existing options for creating a cloud gateway (for example, Azure IoT Gateway, Cloud Services, and so on).

- Migrate the gateway to the cloud.

- Connect the gateway to the data processing subsystem (that is, write the code for this).

- Add monitoring capabilities to the prototype using Application Insights.

We first focused on how to break the monolithic application into components.

Architecture before

The old architecture was a classic two-tier client-server architecture: a monolithic C++ application running on Windows Server. Its job was to receive data packets from the monitoring terminals installed on the vehicles, save these packets to local storage, and act as the front end through which external users accessed the data. The application consisted of several modules: networking, data storage, data decryption, and database access.

Data was exchanged between the server and the devices over a proprietary binary protocol on top of TCP. To establish a connection, the client sent a handshake packet, and the server had to respond with an acknowledgement packet.
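A minimal C# sketch of the server side of this handshake follows, assuming .NET 5 or later (top-level statements). The TechnoCom protocol is proprietary and its packet layout is not described here, so the handshake marker `0x01`, the 8-byte handshake length, the one-byte ACK `0x06`, and the helper name `TryHandshake` are hypothetical placeholders, not the real format.

```csharp
using System;
using System.IO;

// Hypothetical packet constants: the real TechnoCom layout is proprietary and not given in the article.
const byte HandshakeMarker = 0x01;   // assumed first byte of a handshake packet
const int HandshakeLength = 8;       // assumed: marker + 7-byte device identifier
byte[] ackPacket = { 0x06 };         // assumed acknowledgement packet

// Reads one handshake packet from the device and, if it is valid, replies with the ACK.
// Returns the (hypothetical) device identifier bytes, or null if the handshake failed.
byte[]? TryHandshake(Stream fromDevice, Stream toDevice)
{
    var packet = new byte[HandshakeLength];
    int received = 0;
    // Read may return fewer bytes than requested, so keep reading until the packet is complete.
    while (received < HandshakeLength)
    {
        int n = fromDevice.Read(packet, received, HandshakeLength - received);
        if (n == 0) return null;                   // device disconnected mid-handshake
        received += n;
    }
    if (packet[0] != HandshakeMarker) return null; // not a handshake packet: no ACK is sent

    toDevice.Write(ackPacket, 0, ackPacket.Length);
    toDevice.Flush();
    return packet[1..];                            // identifier part of the packet
}
```

The loop around `Read` is deliberate. A stream may return fewer bytes than requested even if the device sent the whole packet at once, so a single `Read` call is not enough to receive a fixed-size packet.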

The application relied on a set of Win32 API system calls, and data was stored as binary files.

This design could be scaled only by limited means: adding resources to the server or adding new servers, configuring a load balancer, and handling other infrastructure tasks.

Architecture after

With the new architecture, the application itself became unnecessary: in effect, it was a gateway that also performed several auxiliary tasks (for example, saving data without modifying it). In the new architecture, all of this was replaced by Azure services. The developers evaluated whether to use Azure IoT Gateway or a custom-built gateway, and chose the latter, hosted in Azure Cloud Services.

The new system works on the same principles as the old one, but offers additional scaling options (including autoscaling) and removes infrastructure tasks such as configuring the load balancer. Several instances of the Cloud Service running the gateway listen on a dedicated port, accept device connections, and forward data to the processing subsystem.

Migrating the prototype to the Microsoft Azure cloud platform using the Software as a Service (SaaS) model

It is important to start with small steps rather than implementing everything in the cloud at once. The cloud has its own advantages and specifics that can complicate prototyping (for example, opening ports, debugging a remote project, deployment, and so on). Therefore, the first stage of development was to create a local version of the gateway:

- The gateway listens on a TCP port and accepts a connection when it receives a handshake packet from a device.

- The gateway responds with an ACK packet and establishes the connection. When data packets arrive, it parses them into ready-to-use content according to the specification.

- The gateway forwards the data further.
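The parsing step above has to cope with the fact that TCP delivers a byte stream rather than discrete packets. One `Read` call can return half a packet or several packets at once. The sketch below assumes .NET 5 or later. It buffers bytes per connection and forwards only complete packets. The framing and the helper name `FeedPackets` are assumptions for illustration: each packet is taken to be a 2-byte little-endian length prefix followed by the payload. The real TechnoCom specification is not described here, and `forward` merely stands in for sending data to the processing subsystem.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical framing, assumed for illustration: [payload length: 2 bytes, little-endian][payload].
// Appends `count` freshly received bytes to this connection's `pending` buffer and passes every
// complete payload to `forward` (a stand-in for sending data to the processing subsystem).
// Returns the number of packets forwarded; an incomplete tail stays in `pending` for the next read.
int FeedPackets(List<byte> pending, byte[] chunk, int count, Action<byte[]> forward)
{
    for (int k = 0; k < count; k++) pending.Add(chunk[k]);
    int forwarded = 0;
    while (pending.Count >= 2)
    {
        int length = pending[0] | (pending[1] << 8);
        if (pending.Count < 2 + length) break;   // packet not complete yet: wait for more data
        forward(pending.GetRange(2, length).ToArray());
        pending.RemoveRange(0, 2 + length);
        forwarded++;
    }
    return forwarded;
}
```

In a read loop, each connection would keep its own `List<byte>` and call `FeedPackets(pending, bytes, i, payload => ...)` after every `stream.Read`, with a callback that sends the payload onward. Keeping the buffer per connection matters, because bytes from different devices must never be mixed.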

While building the prototype, a problem arose. The developers were working in the Microsoft office, where the network security perimeter enforces a number of policies that could complicate prototyping a local gateway. Since the policies could not be changed, the team used ngrok, which creates a secure tunnel to localhost.

During development, several other issues were worked out in more depth: writing to local storage, and obtaining the address and port of the instance on which the gateway runs. Since many values in the cloud change dynamically, it was important to resolve these issues before moving on to the next stage.

Writing to Cloud Service local storage

Of course, Azure offers better ways to store information than local storage on an instance. However, the old architecture relied on local storage, so it was necessary to check whether the system could work in this mode. By default, code running in a Cloud Service cannot simply write to arbitrary locations on the file system; for example, you cannot just write data to C:\temp. Since this is PaaS, you need to declare a dedicated space called Local Storage in the cloud service configuration and set its cleanOnRoleRecycle attribute to false. With some degree of confidence, this ensures that the data in local storage is not deleted when the role is restarted. Below is the code that was used to solve this problem.

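```csharp
using System;
using System.IO;
using System.Net.Sockets;
using Microsoft.WindowsAzure.ServiceRuntime;

// Name of the Local Storage resource declared in the cloud service definition.
const string azureLocalResourceNameFromServiceDefinition = "LocalStorage1";
var azureLocalResource = RoleEnvironment.GetLocalResource(azureLocalResourceNameFromServiceDefinition);
// Path.Combine does not depend on whether RootPath ends with a separator.
var filepath = Path.Combine(azureLocalResource.RootPath, "telemetry.txt");

Byte[] bytes = new Byte[514];
String data = null;

while (true)
{
    // `server` is the TcpListener bound to the instance endpoint (see the next section).
    TcpClient client = server.AcceptTcpClient();
    data = null;
    int i;
    NetworkStream stream = client.GetStream();
    while ((i = stream.Read(bytes, 0, bytes.Length)) != 0)
    {
        …
        // Write only the i bytes received by this read, not the whole 514-byte buffer.
        System.IO.File.AppendAllText(filepath, BitConverter.ToString(bytes, 0, i));
        …
    }
    client.Close();
}
```

Testing showed that the data persisted in local storage, so it can be a good place to keep temporary data.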
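`RoleEnvironment` is available only inside a Cloud Service or its emulator. The file-writing part of the local storage code can therefore be tried out on its own, with an ordinary directory standing in for `azureLocalResource.RootPath`. The sketch below assumes .NET 5 or later. The helper name `AppendTelemetry` is hypothetical. Writing one line per read is a formatting choice made here so that the file is easy to inspect.

```csharp
using System;
using System.IO;

// Hypothetical helper for trying the write path outside Azure: `rootPath` stands in for
// azureLocalResource.RootPath, so any writable directory will do locally.
string AppendTelemetry(string rootPath, byte[] buffer, int count)
{
    string filePath = Path.Combine(rootPath, "telemetry.txt");
    // Encode only the `count` bytes received in this read; the rest of the buffer
    // may still hold bytes left over from an earlier, longer read.
    string line = BitConverter.ToString(buffer, 0, count);
    // One line per read (an assumption made so the file is easy to inspect).
    File.AppendAllText(filePath, line + Environment.NewLine);
    return filePath;
}
```

`BitConverter.ToString` produces hyphen-separated hex pairs such as `0A-FF`. Passing the byte count keeps stale buffer contents out of the file.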

Obtaining the instance address and port

To get these values at runtime, define an Endpoint in the cloud service configuration and read it in code.

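```csharp
using System.Net;
using System.Net.Sockets;
using Microsoft.WindowsAzure.ServiceRuntime;

// "TCPEndpoint" is the endpoint name declared in the cloud service definition.
IPEndPoint instEndpoint = RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["TCPEndpoint"].IPEndpoint;
// IPEndpoint already holds the address and port assigned to this instance; no need to re-parse the address.
TcpListener server = new TcpListener(instEndpoint);
server.Start();   // the listener must be started before AcceptTcpClient() is called
```

The automatically configured load balancer now distributes incoming connections among the instances. The developer can change the number of instances, and each instance obtains its own address and port at runtime.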
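The accept loop in the local storage code serves one device at a time. `AcceptTcpClient` is not called again until the current device disconnects, so a device that stays connected keeps every other device on that instance waiting. A minimal sketch of an alternative follows, assuming .NET 6 or later for `AcceptTcpClientAsync(CancellationToken)`. It accepts connections asynchronously and serves each one on its own task. The names `RunGatewayAsync` and `handle` are hypothetical. `handle` would contain the handshake, parsing, and forwarding logic.

```csharp
using System;
using System.Net;
using System.Net.Sockets;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical gateway loop: accepts devices until `token` is cancelled and serves each
// connection on its own task, so one long-lived connection does not block the others.
async Task RunGatewayAsync(TcpListener listener, Func<NetworkStream, Task> handle, CancellationToken token)
{
    listener.Start();
    try
    {
        while (!token.IsCancellationRequested)
        {
            TcpClient client = await listener.AcceptTcpClientAsync(token);
            // Fire-and-forget per-connection task; errors are logged instead of stopping the loop.
            _ = Task.Run(async () =>
            {
                using (client)
                {
                    try { await handle(client.GetStream()); }
                    catch (Exception ex) { Console.Error.WriteLine($"Device connection failed: {ex.Message}"); }
                }
            });
        }
    }
    catch (OperationCanceledException)
    {
        // Cancellation is the normal shutdown path.
    }
    finally
    {
        listener.Stop();
    }
}
```

In the Cloud Service role, `listener` would be the `TcpListener` created from the `TCPEndpoint` instance endpoint. A production gateway would also need a cap on concurrent connections and read timeouts for silent devices. This sketch omits both.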

So, once the gateway had been launched and tested as a Cloud Service locally (Cloud Services can be emulated on a local machine), it was time to deploy the project to the cloud. The Visual Studio tooling lets you do this in a few clicks.

Forwarding data from the gateway instances onward was handled successfully using the official code samples.

Conclusions

During planning, the team worried that one day would not be enough to develop a working prototype of the system, especially given the variety of environments, devices, and requirements (outdated devices, protocols, a monolithic C++ application). Some things indeed could not be finished in time, but the basic prototype was created and worked.

For this kind of task, the PaaS model is well suited to:

- Rapid prototyping of solutions.

- Using services and settings that make the solution scalable and flexible from the very beginning.

In just a few hours, it was possible to create a basis for developing an end-to-end IoT solution using real data. Future plans include using machine learning to get more value from the data, migrating to a new protocol, and testing the solution on the Azure Service Fabric platform.

Thanks to Alexander Belotserkovsky and the Quarta Technologies team for their help in creating this material.

Source: https://habr.com/ru/post/319856/