Terraform, Azure, Irkutsk and another 1207 words about transferring the game to the cloud

We had load balancers, several application servers, 5 databases, 24 cores, 32 gigabytes of RAM, nginx, PHP, Redis, memcached and a whole bunch of other network technologies of all shapes and colors. Not that all of this was the bare minimum for a backend, but once you start making great online games, it becomes hard to stop. We knew that sooner or later we would move to the cloud.

Now we are doing a backend for games based on microservices - everything used to be completely different. There was a fixed hardware setup, constant risks, that here, a little bit more, and everything would break down due to the influx of players. The year 2013 began. Then we released the game 2020: My Country .

A lot of time passed, the project grew and developed, the load gradually increased, and at some point we decided to transfer the backend to the Azure cloud - we can. The clouds provide a good margin of computing power, so we decided to start with a smaller number of gigabytes and gigahertz than in our data center. The basis of the new backend became less powerful machines with newer processors. Most of all, we were worried about the load on the new database servers and even prepared to partition the bases, but we did, as it turned out, in vain.

')

Fig. 1 - Microsoft Azure Portal

On the Azure portal, we raised the required number of machines, added a security group with access settings to the machines, installed the necessary packages via Ansible, set up configs, drank some more coffee, turned on new load balancers and exhaled. There remained a couple of things to do.

But before that - attention, dear experts, - a question is asked by a novice systems engineer from Irkutsk: “Well, this is one project. But what if there are a lot of them, and there will be dozens and hundreds of hosts? ”We answer: in such cases, a terraform from HashiCorp comes to the rescue, which already successfully works in AWS and also supports Azure.

In the first approach to terraform, the documentation helps us, where there is an example of creating a resource group, its network, and a file with credentials for the terraform. We brought this data to a file with the .tf extension in the directory from which the scripts are run.

The terraform plan team shows previews of future actions - it is planned to add resources that have already been created manually and exist.

Fig. 2 - The result of the terraform plan command.

This is not exactly what we would like to see, so we recall our experience with AWS - there is an import command in terraform, for obviously importing existing infrastructure.

When importing, you must enter the identifiers of existing resources from Azure. By default, they are quite long and complex, so (probably) the portal has a button Copy to clipboard, which greatly simplifies the whole process.

Fig. 3 - Import Result

Another point: terraform does not yet know how to automatically generate the configuration of imported resources and suggests that we do it manually. By default, if there is no configuration for a resource, it is marked for deletion upon the next launch.

Add a configuration and look at the terraform plan .

Fig. 4 - terraform plan after importing resources from Azure

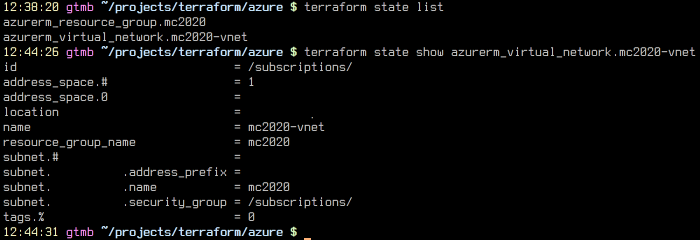

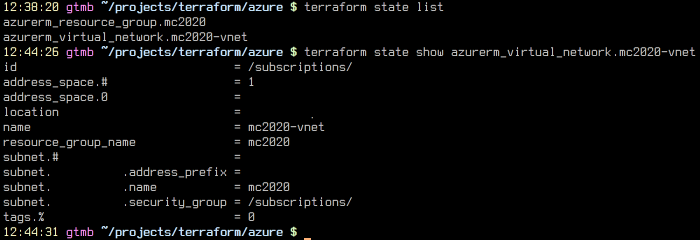

The tilde and yellow color mean the change of the resource - terraform wants to re-create the subnet mc2020 without being tied to the security group. This is probably due to the fact that we did not specify the security group for the subnet. To find out the reason for sure, let's look at how an existing virtual network is imported. This can be done using the terraform.tfstate file (unreadable JSON sheet) or the state command. It is obvious what method we have chosen.

Fig.5 - Again terraform state

Indeed, there is a link to security_group, but in the initial configuration we forgot about it. To fix, we go through all the steps anew - we find the group on the Azure portal, copy the id, import it again and write it into the configuration.

Note: when importing resources, you need to carefully look at their attributes - they are case-sensitive, and it is important not to be mistaken when typing them. All of this is the cost of importing an existing infrastructure and encoding it.

After all edits, the terraform plan command gives us what we expected to see initially.

(Here was the boring process of importing / recording the infrastructure of the entire project, which we missed for obvious reasons)

We recommend creating the infrastructure immediately as a code. Magic is done as follows:

Like that. Thanks for the question, attentive reader! Let's return to the pair of things promised earlier.

First, we needed to transfer a large amount of data from the battle iron. To update xtrabackup, I had to upgrade MySQL and temporarily disable some of the network functionality. During the development of the game, it was provided with an offline mode, so we did it painlessly and started transferring data.

Figure 6 - Summary of the infrastructure in Azure

At the time of transfer, there were about 500 gigabytes of data in several databases. We transferred data from the two largest servers between data centers via xtrabackup at a speed of 1 Gbit / s, and to dump the necessary databases from the third server we used myloader, which runs faster than the standard mysqldump. After that, we finished the remaining coffee and made the final settings for everything-that-could-was-configured.

Secondly, we began to slowly transfer traffic to the new load balancers, watching for errors and load. To process all the traffic, we added another app-server and eventually got ~ 30% of the load on the app- and 8-15% db-servers.

In the example above, we used state from the local computer of one of our engineers and could lose all the work done if the file was lost. Therefore, of course, we have made backups, saved our code in git and fully take advantage of the version control system.

Planning and storage of code and steates depends on the specifics of the project, so there may be several approaches - creation in relation to projects, regions, even to a separate state for each resource. In general, you need to start writing the infrastructure as a code, and everything will work out.

For full transfer left:

The whole process of transferring the backend to Azure (along with the preparation and reading of the documentation for terraform) took a little less than a week, and the transfer itself took about two days. The Azure cloud out of the box allows you to feel the benefits of automatic scaling, gives you the ability to instantly add resources in the event of an increase in load and all the rest is good and positive, which cloud services bring to life of developers.

With the right approach to the organization of the process and the choice of effective tools, the most crucial and difficult part - moving and launching the existing backend in the cloud infrastructure - becomes simple, predictable and understandable, which we enjoyed and used. Having documented the process of simply moving to this technology, we will continue to transfer our other projects to the cloud.

We are waiting for your questions in the comments. Thanks for attention!

These days we build game backends on microservices, but it used to be completely different. We had a fixed hardware setup and the constant risk that just a few more players would arrive and everything would break under the load. That was how 2013 began, the year we released the game 2020: My Country.

A lot of time passed, the project grew and developed, the load gradually increased, and at some point we decided to move the backend to the Azure cloud, simply because we could. The cloud gives a good reserve of computing power, so we decided to start with fewer gigabytes and gigahertz than we had in our data center. The new backend was built on less powerful machines with newer processors. Most of all we worried about the load on the new database servers and even prepared to partition the databases, but that preparation turned out to be unnecessary.

Fig. 1 - Microsoft Azure Portal

On the Azure portal we spun up the required number of machines, added a security group with access rules for them, installed the necessary packages via Ansible, set up the configs, drank some more coffee, turned on the new load balancers and exhaled. A couple of things remained to be done.

But before that - attention, dear experts - a question from a novice systems engineer from Irkutsk: "Well, this is one project. But what if there are many of them, with dozens or hundreds of hosts?" We answer: in such cases Terraform from HashiCorp comes to the rescue. It already works well with AWS and also supports Azure.

For a first approach to Terraform the documentation is a good guide: it has an example of creating a resource group and its network, plus a file with credentials for Terraform. We put this data into a file with the .tf extension in the directory from which the commands are run.

    provider "azurerm" {
      subscription_id = "xxxx-xxxx"
      client_id       = "xxxx-xxxx"
      client_secret   = "xxxx-xxxx"
      tenant_id       = "xxxx-xxxx"
    }

The terraform plan command shows a preview of the upcoming actions: it plans to add resources that already exist, because we created them manually.

Fig. 2 - The result of the terraform plan command.

This is not exactly what we would like to see, so we recall our experience with AWS: Terraform has an import command which, unsurprisingly, imports existing infrastructure.

When importing, you must supply the identifiers of the existing resources in Azure. They are quite long and complex, which is (probably) why the portal has a Copy to clipboard button that greatly simplifies the whole process.
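As an illustration of what such an identifier looks like, here is a minimal sketch for the mc2020 resource group that appears in the final configuration later in this article. The subscription ID is a placeholder, not a real value. The article itself used the terraform import command, shown in the comment; the `import` block is an alternative that only exists in newer Terraform releases (1.5 and later), so treat it as an assumption about your tooling rather than what was done here.

```hcl
# Sketch only: declarative import, available in Terraform 1.5 and later.
# The subscription ID is a placeholder; copy the real resource ID from the portal.
import {
  to = azurerm_resource_group.mc2020
  id = "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/mc2020"
}

# The CLI form of the same import, which is what the article relies on:
#   terraform import azurerm_resource_group.mc2020 \
#     /subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/mc2020
```

Either way, the resource address on the left must match a resource block in the configuration, and the ID must match the existing Azure resource.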

Fig. 3 - Import Result

Another point: at the time of writing, Terraform cannot generate the configuration of imported resources automatically and suggests that we write it by hand. By default, if a resource has no configuration, it is marked for deletion on the next run.

We add the configuration and look at terraform plan.

Fig. 4 - terraform plan after importing resources from Azure

The tilde and the yellow color mean that a resource will be changed: Terraform wants to modify the subnet mc2020 so that it is no longer tied to the security group. This is probably because we did not specify the security group for the subnet. To find out for sure, let's look at how the existing virtual network was imported. This can be done by reading the terraform.tfstate file (a hard-to-read wall of JSON) or by using the state command. It is obvious which method we chose.

Fig. 5 - terraform state again

Indeed, the state contains a link to security_group, but we forgot about it in the initial configuration. To fix this, we go through all the steps again: find the group on the Azure portal, copy its id, import it and write it into the configuration.

In the end, the configuration looks like this:

    resource "azurerm_resource_group" "mc2020" {
      name     = "mc2020"
      location = "${var.location}"
    }

    resource "azurerm_virtual_network" "mc2020-vnet" {
      name                = "mc2020-vnet"
      address_space       = ["XX.XX.XX.XX/24"]
      location            = "${var.location}"
      resource_group_name = "${azurerm_resource_group.mc2020.name}"
    }

    # The subnet is explicitly attached to the security group declared below.
    resource "azurerm_subnet" "mc2020-snet" {
      name                      = "mc2020"
      resource_group_name       = "${azurerm_resource_group.mc2020.name}"
      virtual_network_name      = "${azurerm_virtual_network.mc2020-vnet.name}"
      address_prefix            = "XX.XX.XX.XX/24"
      network_security_group_id = "${azurerm_network_security_group.mc2020-nsg.id}"
    }

    resource "azurerm_network_security_group" "mc2020-nsg" {
      name                = "mc2020-nsg"
      location            = "${var.location}"
      resource_group_name = "${azurerm_resource_group.mc2020.name}"

      # SSH from the office network only
      security_rule {
        name                       = "default-allow-ssh"
        priority                   = 1000
        direction                  = "Inbound"
        access                     = "Allow"
        protocol                   = "TCP"
        source_port_range          = "*"
        destination_port_range     = "22"
        source_address_prefix      = "XX.XX.XX.XX/24"
        destination_address_prefix = "*"
      }

      # Everything from a single trusted address
      security_rule {
        name                       = "trusted_net"
        priority                   = 1050
        direction                  = "Inbound"
        access                     = "Allow"
        protocol                   = "*"
        source_port_range          = "*"
        destination_port_range     = "0-65535"
        source_address_prefix      = "XX.XX.XX.XX/32"
        destination_address_prefix = "*"
      }

      # Public HTTP
      security_rule {
        name                       = "http"
        priority                   = 1060
        direction                  = "Inbound"
        access                     = "Allow"
        protocol                   = "TCP"
        source_port_range          = "*"
        destination_port_range     = "80"
        source_address_prefix      = "*"
        destination_address_prefix = "*"
      }

      # Public HTTPS
      security_rule {
        name                       = "https"
        priority                   = 1061
        direction                  = "Inbound"
        access                     = "Allow"
        protocol                   = "TCP"
        source_port_range          = "*"
        destination_port_range     = "443"
        source_address_prefix      = "*"
        destination_address_prefix = "*"
      }
    }

Note: when importing resources, look carefully at their attributes. They are case-sensitive, so it is important not to make mistakes when typing them. All of this is the price of importing an existing infrastructure and describing it as code.
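The configuration refers to var.location, but the article does not show where that variable is declared. A minimal sketch of such a declaration might look like the following; the description and the region are assumptions made for illustration, not values from the original project.

```hcl
# Assumed declaration: the article uses var.location but never shows it.
variable "location" {
  description = "Azure region for all mc2020 resources"

  # Placeholder region, not taken from the article.
  default = "West Europe"
}
```

Keeping the region in one variable means a single edit moves every resource that references it, and the default can be overridden per environment with -var or a .tfvars file.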

After all edits, the terraform plan command gives us what we expected to see initially.

    No changes. Infrastructure is up-to-date.

    This means that Terraform could not detect any differences between your
    configuration and the real physical resources that exist. As a result,
    Terraform doesn't need to do anything.

(Here was the boring process of importing and describing the infrastructure of the entire project, which we have skipped for obvious reasons.)

We recommend describing the infrastructure as code from the very start. The magic works like this:

- Add a resource;

- Look at terraform plan;

- Run terraform apply;

- Watch the wonders of automatic resource reconfiguration.
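To make the first step concrete, here is a sketch of adding one new resource in the same style as the configuration above. The availability set and its name are hypothetical and are not part of the original project. The block reuses var.location and the mc2020 resource group from the article's configuration.

```hcl
# Hypothetical new resource: an availability set for the app servers.
# The name "mc2020-app-avset" is made up for this example.
resource "azurerm_availability_set" "mc2020-app-avset" {
  name                = "mc2020-app-avset"
  location            = "${var.location}"
  resource_group_name = "${azurerm_resource_group.mc2020.name}"
}
```

With this block saved next to the rest of the configuration, terraform plan should report one resource to add and nothing to change or destroy, and terraform apply then creates it.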

That's it. Thanks for the question, attentive reader! Now back to the couple of things promised earlier.

First, we needed to move a large amount of data off the production hardware. To update xtrabackup, we had to upgrade MySQL and temporarily disable some of the network functionality. The game was designed with an offline mode from the start, so this went painlessly and we began transferring the data.

Fig. 6 - Summary of the infrastructure in Azure

At the time of the transfer there were about 500 gigabytes of data in several databases. We moved the data from the two largest servers between data centers with xtrabackup at 1 Gbit/s, and to dump and restore the necessary databases from the third server we used mydumper and myloader, which run faster than the standard mysqldump. After that we finished the remaining coffee and made the final adjustments to everything that could be configured.

Second, we began to slowly move traffic to the new load balancers, watching for errors and load. To handle all the traffic we added one more app server and ended up with about 30% load on the app servers and 8-15% on the DB servers.

In the example above we used a state file on the local computer of one of our engineers and could have lost all the work done if that file had been lost. So, of course, we made backups, saved our code in git and now take full advantage of the version control system.

How to plan and store code and state depends on the specifics of the project, so several approaches are possible: a state per project, per region, or even a separate state for each resource. In general, you just need to start describing the infrastructure as code, and everything will work out.
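One way to avoid keeping state on a single laptop is a remote backend. The sketch below uses Terraform's azurerm backend, which stores the state file in an Azure Storage container. This is an illustration and not the setup described in the article: the resource group, storage account, container and key names are all hypothetical, and the storage account must already exist.

```hcl
# Sketch of remote state in Azure Storage; every name here is hypothetical.
terraform {
  backend "azurerm" {
    resource_group_name  = "mc2020-tfstate"
    storage_account_name = "mc2020tfstate"
    container_name       = "tfstate"

    # One key per project keeps each project's state in a separate blob.
    key = "mc2020/terraform.tfstate"
  }
}
```

The storage access key can be supplied through the ARM_ACCESS_KEY environment variable so that it stays out of git, and after adding the block terraform init offers to copy the existing local state to the new backend.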

To complete the migration, it remained to:

- Patch the clients so that they point to the new hosts (“az-”);

- Move the static files, which are uploaded to one of the old servers that serves as the origin for the Akamai CDN;

- After a while, turn off the old server.

The whole process of moving the backend to Azure, including preparation and reading the Terraform documentation, took a little less than a week, and the transfer itself took about two days. Out of the box, the Azure cloud lets you feel the benefits of automatic scaling, makes it possible to add resources instantly when the load grows, and brings all the other good things that cloud services add to developers' lives.

With a well-organized process and the right tools, the most critical and difficult part - moving the existing backend to the cloud infrastructure and launching it there - becomes simple, predictable and understandable, and we took full advantage of that. Now that we have documented how straightforward the move is, we will continue transferring our other projects to the cloud.

We look forward to your questions in the comments. Thanks for your attention!

Source: https://habr.com/ru/post/319760/