Crysis at maximum speed, or why a server needs a video card

Besides playing Battlefield 1 and having fun with VR headsets, a discrete video card in a server is useful for working with graphics in a virtual environment and for mathematical calculations.

Using a GPU in a server has its own nuances in each scenario, so let's look at them in more detail.

Mathematics - the Queen of Sciences

With the rise of cryptocurrencies and mining, it turned out that cryptographic calculations run faster on video cards than on ordinary CPUs.

There are several reasons for this:

- A video card has more arithmetic logic units than a central processor, so it can run more tasks in parallel;

- The GPU is served by faster memory;

- The GPU's logic is simpler, which reduces the cost of each operation.

For example, an Intel Xeon v4 with AVX 2.0 support can execute 16 32-bit operations per core per clock, while a single NVIDIA GeForce GTX 1080 video card, with its 2,560 CUDA cores, is rated at about 8,228 GFLOPS of single-precision performance.
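The comparison above can be sanity-checked with back-of-the-envelope arithmetic: peak throughput is roughly the number of compute units times operations per clock times clock speed. The sketch below is only an estimate under stated assumptions. The GTX 1080 figures (2,560 CUDA cores, 1.607 GHz base clock, two floating-point operations per fused multiply-add) and the choice of a 22-core, 2.2 GHz Xeon E5-2699 v4 as the example CPU come from public spec sheets, not from this article. With these inputs the GPU result rounds to 8,228, which suggests that the 8,228 figure is the card's peak rate in billions of operations per second rather than a per-clock count.

```python
# Back-of-the-envelope peak throughput: units x operations per clock x clock (GHz).
# All hardware figures below are assumptions taken from public spec sheets,
# not from this article.

def peak_gops(units: int, ops_per_clock: int, clock_ghz: float) -> float:
    """Peak billions of 32-bit operations per second."""
    return units * ops_per_clock * clock_ghz

# GTX 1080: 2560 CUDA cores, one fused multiply-add (counted as 2 operations)
# per core per clock, 1.607 GHz base clock.
gpu = peak_gops(units=2560, ops_per_clock=2, clock_ghz=1.607)

# Xeon E5-2699 v4 (an assumed example of a "Xeon v4"): 22 cores, 2.2 GHz base,
# 16 32-bit operations per core per clock as quoted in the text.
cpu = peak_gops(units=22, ops_per_clock=16, clock_ghz=2.2)

print(f"GPU peak: {gpu:.0f} G ops/s")
print(f"CPU peak: {cpu:.0f} G ops/s")
print(f"Ratio:    {gpu / cpu:.1f}x")
```

These are theoretical peaks; real applications rarely reach them on either device.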

Today, calculations on video cards are used for more than mining. In the corporate sector, for example, there are more general tasks:

- Calculations for certain business applications (Big Data analysis);

- Graphics tasks. For batch rendering of images from 3D models, a dedicated server or render farm is more convenient than a designer's computer;

- Resource-intensive information security tasks. Brute-forcing passwords on video cards is a great help in penetration testing.

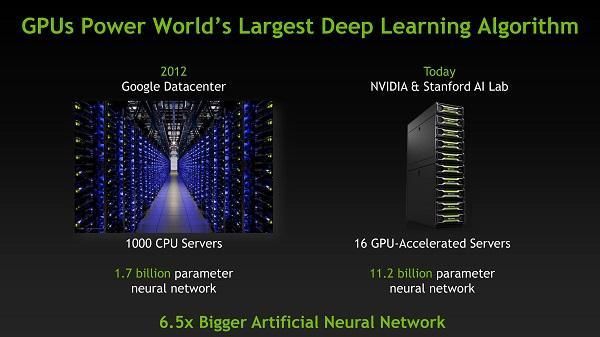

In recent years, video cards have been increasingly used to train neural networks, as a subcategory of Big Data work. The popular Caffe framework runs 10 times faster on Amazon instances with a GPU than on a CPU.

Not long ago, an organization that had caught ransomware came to me for help. Fortunately, the virus was old and the files were encrypted with a pre-shared key (PSK) rather than a certificate. It took two days of brute-forcing with Hashcat on several colleagues' gaming computers, and the ten-digit key was found.
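To get a feel for the scale of such a search, here is a minimal sketch. It assumes the key consisted of decimal digits only, which the story does not state, and it uses a SHA-256 comparison as a stand-in for the real key check, which depends on the particular malware. The function names are hypothetical and this is not how Hashcat works internally. The toy search runs on a 4-digit key so that it finishes instantly on a CPU.

```python
import hashlib
from itertools import product
from typing import Optional

def keyspace(alphabet_size: int, length: int) -> int:
    """Number of candidate keys of exactly `length` symbols."""
    return alphabet_size ** length

def required_rate(total_keys: int, seconds: float) -> float:
    """Candidates per second needed to exhaust the keyspace in `seconds`."""
    return total_keys / seconds

def brute_force_digits(target_sha256_hex: str, length: int) -> Optional[str]:
    """Try every all-digit key of the given length; return the match or None."""
    for combo in product("0123456789", repeat=length):
        candidate = "".join(combo)
        if hashlib.sha256(candidate.encode()).hexdigest() == target_sha256_hex:
            return candidate
    return None

# Assumption: ten decimal digits, searched exhaustively over two days.
total = keyspace(10, 10)
rate = required_rate(total, 2 * 24 * 3600)
print(f"{total} candidates, about {rate:.0f} per second for a full sweep")

# Toy demo: recover a 4-digit key from its SHA-256 hash.
secret_hash = hashlib.sha256(b"4071").hexdigest()
found = brute_force_digits(secret_hash, 4)
print("recovered key:", found)
```

If the key had also contained letters, the keyspace would grow by many orders of magnitude, which is where the parallelism of video cards starts to matter.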

The difficulty in moving work from the processor to the GPU is that you can't simply tell the operating system "please do your calculations on the video card." To work directly with the video card's compute units, the specific application has to support it. The principle itself is called GPGPU (general-purpose computing on graphics processing units), so that is what you should look for in the description of the software's features.

Two technologies let applications use the video card's compute units, CUDA and OpenCL:

- CUDA. NVIDIA's hardware and software technology. The SDK lets you develop in a dialect of C. NVIDIA produces both regular PCI-E video cards and the Tesla server line. There are also video cards with an MXM interface, optimized for blade servers;

- OpenCL. An open framework, originally developed by Apple and now maintained by the Khronos Group consortium. It is supported by both NVIDIA and AMD video cards.
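Both technologies share the same basic programming model: a small function, called a kernel, is executed once per data element by a large number of lightweight threads. The sketch below imitates that model on the CPU in plain Python, so it only illustrates the idea and uses no CUDA or OpenCL API. The names `saxpy_kernel` and `launch` are hypothetical.

```python
from typing import Callable, List

def saxpy_kernel(i: int, a: float, x: List[float], y: List[float],
                 out: List[float]) -> None:
    """Body executed by one logical GPU thread: it handles exactly one element."""
    if i < len(x):  # bounds guard, needed when more threads than elements are launched
        out[i] = a * x[i] + y[i]

def launch(kernel: Callable[..., None], n_threads: int, *args) -> None:
    """CPU stand-in for a kernel launch.

    A GPU would run these indices in parallel; here they run one by one.
    """
    for i in range(n_threads):
        kernel(i, *args)

x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)

# Launching 8 "threads" for 4 elements is harmless thanks to the bounds guard.
launch(saxpy_kernel, 8, 2.0, x, y, out)
print(out)
```

An application supports GPGPU when its hot loops have been rewritten in this per-element form for one of the two technologies, which is why that support cannot be switched on from the operating system.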

Plenty of experimental comparisons of the two technologies can be found. With AMD and NVIDIA video cards of roughly the same class, AMD comes out ahead under OpenCL. But if the application was developed with CUDA support, NVIDIA takes the lead. The choice of a particular video card is therefore determined by the technology the application was built on.

Besides heavy mathematical workloads, a video card can also serve a more specific purpose: running "heavy" graphics applications.

Virtualize it

Terminal servers and thin clients have long been valued for their ease of maintenance and independence from client machines. In recent years, virtual desktop infrastructure (VDI) has been gaining popularity; it gives each user their own virtual machine in the data center.

Moving an ordinary office employee to a thin client takes little effort and has practically no effect on the quality of their work, but one category of users consistently causes headaches: designers, architects and other professionals who use serious graphics programs.

Besides graphics applications, GPU acceleration is also used by ordinary office suites such as MS Office and LibreOffice. You can speed them up on a regular terminal server by installing a powerful video card. For example, with RemoteFX an AMD FirePro S10000 card supports up to 70 users. If employees complain about the performance of office applications, installing a video card can fix the situation.

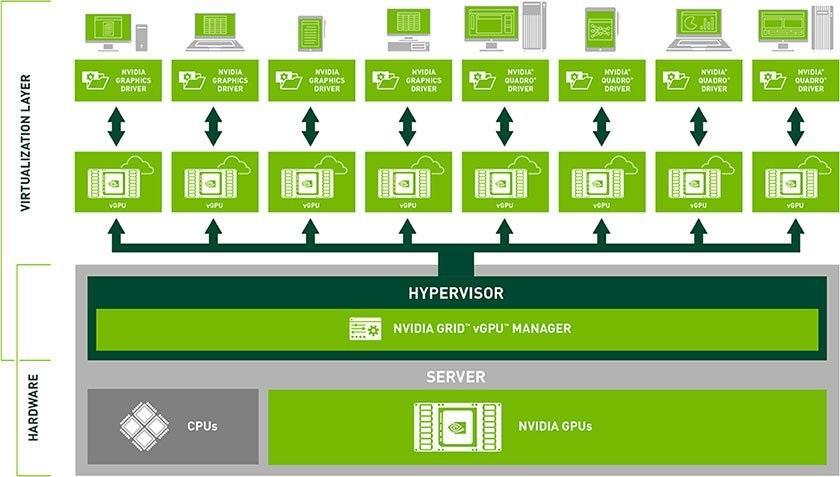

One solution is to move demanding users to VDI instead of a simple terminal. This requires a hypervisor that supports hardware-accelerated graphics. As of 2016, most hypervisors can do this, even free ones. The hardware also has to meet a few requirements:

- The processor supports hardware virtualization and IOMMU (Intel VT-d or AMD-Vi);

- The motherboard supports IOMMU;

- The video card BIOS supports UEFI.
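The first two requirements in the list above can be checked from software. The sketch below assumes a Linux host, which the article does not specify: the `vmx` and `svm` flags in `/proc/cpuinfo` indicate Intel VT-x and AMD-V, and a populated `/sys/kernel/iommu_groups` directory indicates that the IOMMU is enabled in both firmware and kernel. The function names are hypothetical, and the check says nothing about UEFI support in the video card BIOS.

```python
import os

def cpu_virtualization_flags(cpuinfo_text: str) -> set:
    """Return the hardware-virtualization flags found in /proc/cpuinfo text.

    'vmx' marks Intel VT-x, 'svm' marks AMD-V.
    """
    found = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, rest = line.partition(":")
            found.update(flag for flag in rest.split() if flag in ("vmx", "svm"))
    return found

def iommu_groups_present(sysfs_dir: str = "/sys/kernel/iommu_groups") -> bool:
    """True if the kernel has populated at least one IOMMU group."""
    return os.path.isdir(sysfs_dir) and len(os.listdir(sysfs_dir)) > 0

# Only attempt the real check where the Linux-specific files exist.
if os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        flags = cpu_virtualization_flags(f.read())
    print("CPU virtualization flags:", sorted(flags) or "none found")
    print("IOMMU groups present:", iommu_groups_present())
```

An empty result does not always mean the hardware lacks the feature: both options are often disabled in the firmware setup by default.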

If many users want to enjoy the benefits of discrete graphics, you can buy special video cards that support resource sharing.

NVIDIA and AMD have models that can share resources:

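| | NVIDIA | NVIDIA | NVIDIA | AMD | AMD | AMD |
|---|---|---|---|---|---|---|
| Technology name | GRID 2.0 | GRID 2.0 | GRID 2.0 | Multi User GPU (MxGPU) | Multi User GPU (MxGPU) | Multi User GPU (MxGPU) |
| Name | Tesla M10 | Tesla M60 | Tesla M6 | FirePro S7150 | FirePro S7150 x2 | FirePro S7100X |
| Interface | PCI-E | PCI-E | MXM | PCI-E | PCI-E | MXM |
| Number of users per GPU | 16 | 32 | 16 | 16 | 32 | 16 |
| VMware support | Yes | Yes | Yes | Yes | Yes | Yes |
| Citrix Xen support | Yes | Yes | Yes | In development | In development | In development |
| MS Hyper-V support | Yes, starting with 2016 | Yes, starting with 2016 | Yes, starting with 2016 | In development | In development | In development |
| NICE support | Yes | Yes | Yes | No | No | No |
| How the technology works | Via a special driver | Via a special driver | Via a special driver | In hardware | In hardware | In hardware |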

NVIDIA's lineup also includes the GRID K1 and GRID K2 video cards, which are left out of the table because they are obsolete.

Support for resource-sharing cards is not yet available in free virtualization systems. Every edition of XenServer used to support "multi-user" cards, but now the Enterprise edition is required. VMware requires Enterprise Plus or higher.

Besides desktop virtualization, there is also application virtualization (VMware Horizon, Citrix XenApp). You don't have to give a designer a full-fledged workstation; you can virtualize, for example, only Photoshop.

When designing a virtual environment with accelerated graphics, pay attention not only to hypervisor support but also to compatibility with the specific server model. You can check the compatibility of NVIDIA GRID video cards with specific server models on the NVIDIA website. In particular, among HPE servers only the ProLiant DL380 Gen9 is certified to work with the modern Tesla M60. The same model is approved by AMD.

For blade servers, AMD and NVIDIA offer solutions with an MXM interface whose characteristics are similar to those of the cards for regular servers.

The main drawback of graphics acceleration in a virtual environment is the price. A single video card costs about $3,000, which is comparable to the cost of an entire server. On top of that, a new project will require a hypervisor license and, most likely, a specific server model (remember compatibility). And if you choose NVIDIA cards, you will also need a driver license.

NVIDIA GRID allows you to use a shared video card in three ways:

| | Virtual application | Virtual PC | Virtual workstation |
|---|---|---|---|
| Remote desktop | | | |
| Remote application | | | |
| Guest OS Windows | | | |
| Guest OS Linux | | | |
| Max. number of monitors | application dependent | 2 | 4 |
| Max. resolution | application dependent | 2560x1600 | 4096x2160 |
| CUDA and OpenCL support | | | |
| Video memory size per client | 1 GB, 2 GB, 4 GB, 8 GB | 512 MB, 1 GB | 512 MB, 1 GB, 2 GB, 4 GB, 8 GB |
| Price per year, per license + SUMS * | $10 | $50 | $250 |
| Perpetual license ** | $20 | $100 | $450 |
| SUMS | $5 | $25 | $100 |

* SUMS (Support, Update and Maintenance Subscriptions) - support and updates from NVIDIA.

** To purchase a perpetual license, you must purchase a SUMS for at least the first year.
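The prices in the table make it easy to compare licensing options for a given team. The sketch below is a rough estimate only. It assumes that the SUMS price is charged per year, which the table does not state explicitly, and it takes the figure of about $3,000 per card from the text and 32 users per card from the Tesla M60 row of the comparison table. The function names and the team of 40 users over 3 years are hypothetical.

```python
import math

# Prices copied from the licensing table (USD per license).
ANNUAL = {"app": 10, "pc": 50, "workstation": 250}      # per year, SUMS included
PERPETUAL = {"app": 20, "pc": 100, "workstation": 450}  # one-time payment
SUMS = {"app": 5, "pc": 25, "workstation": 100}         # assumed to be per year

def annual_cost(edition: str, users: int, years: int) -> int:
    """Total cost of yearly subscriptions."""
    return ANNUAL[edition] * users * years

def perpetual_cost(edition: str, users: int, sums_years: int = 1) -> int:
    """Perpetual licenses plus SUMS; at least one SUMS year is mandatory."""
    if sums_years < 1:
        raise ValueError("SUMS is required for at least the first year")
    return (PERPETUAL[edition] + SUMS[edition] * sums_years) * users

def cards_needed(users: int, users_per_card: int) -> int:
    """Number of shared video cards required, rounded up."""
    return math.ceil(users / users_per_card)

users, years = 40, 3  # hypothetical team of designers
print("annual licenses:        ", annual_cost("workstation", users, years))
print("perpetual + SUMS yearly:", perpetual_cost("workstation", users, sums_years=years))
print("perpetual + 1 year SUMS:", perpetual_cost("workstation", users))
print("video cards (hardware): ", cards_needed(users, 32) * 3000)
```

Under these assumptions a perpetual license only pays off over three years if support is not renewed after the mandatory first year.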

The license price itself is modest, but rolling out the entire hardware and software solution will be quite expensive. Still, if a large share of a company's employees work with heavy graphics applications, introducing VDI can be cheaper than maintaining a fleet of powerful computers.

Besides mathematical calculations and an infrastructure of powerful virtual machines for graphics applications, video cards can also be used for games. For example, you could build a cloud service for playing The Witcher 3 at high settings on any device.

Have you used powerful discrete video cards in servers? What were your impressions?



Source: https://habr.com/ru/post/319712/
