Load balancing with Pacemaker and IPaddr (Active/Active cluster)

I want to describe another way to do load balancing. A lot has already been written about Pacemaker and the IPaddr resource agent and about setting them up for an Active/Passive cluster, but I found very little information on building a full Active/Active cluster with this agent. I will try to fill that gap.

First, a few words about what makes this balancing method attractive:

- No external balancer - One common virtual IP address is configured on all nodes of the cluster, and all requests are sent to it. The nodes answer requests to this address in a seemingly random order, following a rule they all agree on.

- High availability - If one node fails, its share of the work is picked up by the others.

- Easy setup - The whole setup takes just 3-5 commands.

Input data

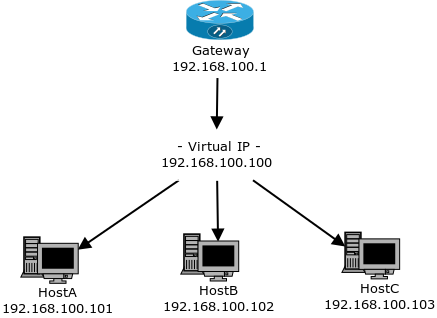

The picture above shows the following devices:

- Gateway - IP: 192.168.100.1

- HostA - IP: 192.168.100.101

- HostB - IP: 192.168.100.102

- HostC - IP: 192.168.100.103

Clients connect to the external address of our gateway, which forwards all requests to the virtual IP 192.168.100.100. This address will be configured on all three nodes of the cluster.

Preparation

First of all, we need to make sure that every node can reach the others by hostname. For reliability, it is best to add the node addresses to /etc/hosts right away:

    192.168.100.101 hostA
    192.168.100.102 hostB
    192.168.100.103 hostC

Install all the necessary packages:

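    yum install pcs pacemaker corosync       # CentOS, RHEL
    apt-get install pcs pacemaker corosync   # Ubuntu, Debian

Installing pcs creates the hacluster user; let's set a password for it:

    echo CHANGEME | passwd --stdin hacluster     # CentOS, RHEL
    echo hacluster:CHANGEME | chpasswd           # Ubuntu, Debian (passwd there has no --stdin)

The pcs commands below talk to the pcsd daemon, so make sure it is running on every node:

    systemctl start pcsd
    systemctl enable pcsd

The remaining operations are performed on a single node.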

Configure authentication:

    pcs cluster auth hostA hostB hostC -u hacluster -p CHANGEME --force

Create and start a cluster named "Cluster" of three nodes:

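    pcs cluster setup --force --name Cluster hostA hostB hostC
    pcs cluster start --all

These commands use the pcs syntax of the time. In pcs 0.10 and later, authentication is done with pcs host auth instead of pcs cluster auth, and pcs cluster setup takes the cluster name without --name.

Check the result: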

    pcs cluster status

    Cluster Status:
     Last updated: Thu Jan 19 12:11:49 2017    Last change: Tue Jan 17 21:19:05 2017 by hacluster via crmd on hostA
     Stack: corosync
     Current DC: hostA (version 1.1.14-70404b0) - partition with quorum
     3 nodes and 0 resources configured
     Online: [ hostA hostB hostC ]

    PCSD Status:
      hostA: Online
      hostB: Online
      hostC: Online

Some of these steps were borrowed from an article by Lelik13a; thanks to him for that.
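If you want to check the Online line from a script rather than by eye, a small filter can pull out the node names. This is a minimal sketch: the helper name online_nodes is hypothetical, and it assumes the "Online: [ ... ]" layout shown above, which may look different in other pcs versions.

```shell
# Hypothetical helper: read `pcs cluster status` output on stdin and print
# the node names from the "Online: [ ... ]" line, one per line.
# Assumes the bracketed layout shown above; other pcs versions may differ.
online_nodes() {
  # Keep only the text inside "Online: [ ... ]", split it into one name
  # per line, and drop empty lines left by repeated spaces.
  sed -n 's/.*Online: \[ \(.*\) \].*/\1/p' | tr ' ' '\n' | sed '/^$/d'
}
```

For example, `pcs cluster status | online_nodes | wc -l` should print 3 on the cluster above.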

In our case the cluster needs neither quorum nor STONITH, so we simply disable both:

    pcs property set no-quorum-policy=ignore
    pcs property set stonith-enabled=false

If you later add resources that do need quorum or STONITH, see the article by Silvar.

A few words about MAC addresses

Before we begin, we need to understand that all our nodes will be configured with the same IP address and the same MAC address, and they will take turns answering the requests sent to it.

The problem is that a switch builds its own switching table as it operates, associating each MAC address with a specific physical port. The table is built automatically and keeps the network free of "unnecessary" L2 frames.

So, if a MAC address is in the switching table, frames for it are sent only to the one port associated with that address.

Unfortunately, this does not suit us: we need all hosts in the cluster to "see" all these packets at the same time. Otherwise, this scheme will not work.
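The forwarding behaviour described above can be sketched as a toy model. This is an illustration under simplifying assumptions, not how any particular switch is implemented: the helpers learn and forward_ports are hypothetical, and features such as VLANs or IGMP snooping are ignored.

```shell
# Toy model of a learning switch (hypothetical, not a vendor implementation).
# MAC_TABLE holds one "MAC PORT" pair per line, learned from source addresses.
MAC_TABLE=""

# upper STRING: print STRING in upper case so MAC comparisons ignore case.
upper() { printf '%s' "$1" | tr '[:lower:]' '[:upper:]'; }

# learn MAC PORT: remember the port on which a source MAC was seen.
learn() {
  MAC_TABLE="$MAC_TABLE$(upper "$1") $2
"
}

# forward_ports DST_MAC IN_PORT PORT...: print the egress port(s) for a frame.
forward_ports() {
  dst=$(upper "$1"); in_port=$2; shift 2
  first_octet=$(( 0x${dst%%:*} ))
  if [ $(( first_octet % 2 )) -eq 0 ]; then
    # Unicast: if the destination has been learned, use only that port.
    port=$(printf '%s' "$MAC_TABLE" | awk -v m="$dst" '$1 == m { p = $2 } END { print p }')
    if [ -n "$port" ]; then echo "$port"; return 0; fi
  fi
  # Group (multicast) address or unknown unicast: flood all other ports.
  out=""
  for p in "$@"; do
    [ "$p" = "$in_port" ] || out="$out${out:+ }$p"
  done
  echo "$out"
}
```

In this model a frame for a learned unicast MAC reaches a single port, so only one cluster node would see it, while a frame whose first octet has the group bit set is flooded to every port except the one it came from.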

First we need to make sure that the MAC address we use is a multicast address, that is, one in the range 01:00:5E:00:00:00 - 01:00:5E:7F:FF:FF. When the switch receives a frame for such an address, it forwards it to all ports except the one it came from. In addition, some managed switches let you assign several ports to a specific MAC address.
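A quick way to check that a chosen MAC lies in this range is a regular expression on the address. The helper is_ipv4_mcast_mac is hypothetical, and it only checks the 01:00:5E:00:00:00 - 01:00:5E:7F:FF:FF range named above, not every possible multicast MAC.

```shell
# Hypothetical helper: succeed (exit 0) if $1 is a MAC address in the range
# 01:00:5E:00:00:00 - 01:00:5E:7F:FF:FF. The match is case-insensitive;
# the fourth octet must start with 0-7, which keeps it at or below 7F.
is_ipv4_mcast_mac() {
  printf '%s\n' "$1" |
    grep -Eqi '^01:00:5e:[0-7][0-9a-f]:[0-9a-f]{2}:[0-9a-f]{2}$'
}
```

For example, `is_ipv4_mcast_mac 01:00:5E:21:E3:0B && echo ok` prints ok, while 01:00:5E:80:00:00 is rejected because its fourth octet is above 7F.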

You may also have to disable Dynamic ARP Inspection if your switch supports it, as it may block ARP replies from your hosts.

Configuring the IPaddr Resource

Now we get to the most interesting part.

There are currently two versions of the IPaddr agent that support cloning:

- IPaddr2 (ocf:heartbeat:IPaddr2) - the standard resource agent for creating and running a virtual IP address. It is usually installed along with the standard resource-agents package.

- IPaddr3 (ocf:percona:IPaddr3) - an improved version of IPaddr2 from Percona. It includes fixes aimed at running in clone mode and requires separate installation.

To install IPaddr3, run these commands on each host:

    curl --create-dirs -o /usr/lib/ocf/resource.d/percona/IPaddr3 \
        https://raw.githubusercontent.com/percona/percona-pacemaker-agents/master/agents/IPaddr3
    chmod u+x /usr/lib/ocf/resource.d/percona/IPaddr3

The remaining operations are performed on a single node.

Create a resource for our virtual IP address:

    pcs resource create ClusterIP ocf:percona:IPaddr3 \
        params ip="192.168.100.100" cidr_netmask="24" nic="eth0" clusterip_hash="sourceip-sourceport" \
        op monitor interval="10s"

The clusterip_hash parameter sets how requests are distributed among the nodes.

It accepts three values:

- sourceip - distribution by source IP address only. All requests from one source always go to the same host.

- sourceip-sourceport - distribution by source IP address and source port. Each new connection is hashed separately, so connections from one client are spread across the hosts. The best option.

- sourceip-sourceport-destport - distribution by source IP address, source port and destination port. Gives the most even distribution, which matters if you run several services on different ports.
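To see which header fields each mode takes into account, the sketch below builds a textual flow key for each value. It is illustrative only: the kernel hashes the binary header fields rather than a string, and flow_key is a hypothetical name.

```shell
# Hypothetical helper: flow_key MODE SRC_IP SRC_PORT DST_PORT
# Prints the fields that the given clusterip_hash mode feeds into the hash.
flow_key() {
  case $1 in
    sourceip)                     printf '%s\n' "$2" ;;
    sourceip-sourceport)          printf '%s:%s\n' "$2" "$3" ;;
    sourceip-sourceport-destport) printf '%s:%s:%s\n' "$2" "$3" "$4" ;;
    *) echo "unknown hash mode: $1" >&2; return 1 ;;
  esac
}
```

Two connections from 10.0.0.5 with source ports 40000 and 40001 produce the same key under sourceip but different keys under sourceip-sourceport, which is why the first mode pins a client to one node.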

For IPaddr2, you must set the mac parameter to an address from the multicast range, mac=01:00:5E:XX:XX:XX. IPaddr3 sets it automatically.
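If you use IPaddr2, a suitable address can be generated once and then passed to the resource. This is a sketch assuming od and /dev/urandom are available; random_cluster_mac is a hypothetical helper.

```shell
# Hypothetical helper: print a random MAC in 01:00:5E:00:00:00 - 01:00:5E:7F:FF:FF.
random_cluster_mac() {
  # Read three random bytes as unsigned decimal numbers.
  set -- $(od -An -N3 -tu1 /dev/urandom)
  # Mask the first byte with 127 so the fourth octet stays within 00-7F.
  printf '01:00:5E:%02X:%02X:%02X\n' $(( $1 & 127 )) "$2" "$3"
}
```

All clones must share the same address, which is the case when it is set once as a parameter of the ClusterIP resource, for example by adding mac="$(random_cluster_mac)" to the pcs resource create command for ocf:heartbeat:IPaddr2.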

Now let's clone our resource:

    pcs resource clone ClusterIP \
        meta clone-max=3 clone-node-max=3 globally-unique=true

This creates the following rule in iptables:

    Chain INPUT (policy ACCEPT)
    target     prot opt source               destination
               all  --  anywhere             192.168.100.100      CLUSTERIP hashmode=sourceip-sourceport clustermac=01:00:5E:21:E3:0B total_nodes=3 local_node=1 hash_init=0

As you can see, the CLUSTERIP module is used here.

It works as follows:

Every packet arrives at all three nodes. All three Linux kernels know how many nodes receive the packets (total_nodes) and their own node number (local_node), and they all hash incoming packets by the same rule. As a result, each server processes only its own share of the packets and ignores the rest, which are handled by the other servers.
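The ownership rule described above can be modelled in a few lines. This is a simplified sketch: the real CLUSTERIP target hashes the header fields with the kernel's jhash, seeded with hash_init, and maps the result onto one of total_nodes buckets; here cksum and a modulo stand in for that, and owner_node and node_accepts are hypothetical helpers.

```shell
# Hypothetical helper: owner_node KEY TOTAL_NODES
# Prints the 1-based node number responsible for a flow key.
# cksum is only a stand-in for the kernel's jhash.
owner_node() {
  h=$(printf '%s' "$1" | cksum | cut -d' ' -f1)
  echo $(( h % $2 + 1 ))
}

# Hypothetical helper: node_accepts KEY TOTAL_NODES LOCAL_NODE
# Succeeds if the node with number LOCAL_NODE should handle the flow;
# every other node would silently drop the packet.
node_accepts() {
  [ "$(owner_node "$1" "$2")" -eq "$3" ]
}
```

For every flow exactly one of the three node numbers is accepted, so each request is answered once. This is also why the clone is created with clone-node-max=3: if a node fails, Pacemaker can start its instance on a surviving node, which then answers for that share as well.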

You can read more about this in this article.
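To confirm on each node which share it handles, you can read the CLUSTERIP options back from the rule listing. A minimal sketch: clusterip_opt is a hypothetical helper that expects the key=value layout shown in the iptables output above.

```shell
# Hypothetical helper: clusterip_opt KEY
# Reads an iptables rule listing on stdin and prints the value of the first
# KEY=VALUE field (e.g. total_nodes, local_node, clustermac).
clusterip_opt() {
  awk -v key="$1" '{
    for (i = 1; i <= NF; i++)
      if (index($i, key "=") == 1) { print substr($i, length(key) + 2); exit }
  }'
}
```

Running `iptables -L INPUT -n | clusterip_opt local_node` on every node should print a different number from 1 to 3.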

Let's look at our cluster again:

    pcs cluster status

    Cluster Status:
     Cluster name: cluster
     Last updated: Tue Jan 24 19:38:41 2017    Last change: Tue Jan 24 19:25:44 2017 by hacluster via crmd on hostA
     Stack: corosync
     Current DC: hostA (version 1.1.14-70404b0) - partition with quorum
     3 nodes and 0 resources configured
     Online: [ hostA hostB hostC ]

    Full list of resources:

     Clone Set: ClusterIP-clone [ClusterIP-test] (unique)
         ClusterIP:0 (ocf:percona:IPaddr3): Started hostA
         ClusterIP:1 (ocf:percona:IPaddr3): Started hostB
         ClusterIP:2 (ocf:percona:IPaddr3): Started hostC

    PCSD Status:
      hostA: Online
      hostB: Online
      hostC: Online

The IP address instances have started successfully. Now try to reach the address from outside.

If everything works as expected, the setup is complete.
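To re-check the clone placement from a script, for example after stopping one node with pcs cluster stop hostC, the clone instances can be counted per node. This sketch assumes the status lines look like the "ClusterIP:N ... Started hostX" lines above; started_per_node is a hypothetical helper.

```shell
# Hypothetical helper: read pcs status output on stdin and print
# "<node> <number of started ClusterIP instances>" per node, sorted by name.
started_per_node() {
  awk '/ClusterIP:[0-9]+/ && $(NF-1) == "Started" { count[$NF]++ }
       END { for (n in count) print n, count[n] }' | sort
}
```

On the healthy cluster shown above every node should report 1; after a node is stopped, one of the survivors should report 2.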

Links

- High Availability Cluster (HA-cluster) based on Pacemaker

- Pacemaker-based HA-Cluster for LXC and Docker container virtualization

- pacemaker: how to kick a node when it's down

- The use of iptables load balancer for PXC, PRM, MHA and NDB

- Pacemaker load-balancing with Clone

- Load sharing on LDAP servers using iptables CLUSTERIP

- pacemaker / Ch-Active-Active.txt at master · ClusterLabs / pacemaker

- 9.5. Clone the IP address


Source: https://habr.com/ru/post/319550/
