Graal and Truffle (Graal & Truffle)

From the translator

A word of warning: in places this article reads like a big-company presentation, with epithets in the spirit of “change the industry”, “the best on the market”, “breakthrough technologies”, and so on. If you look past this emotional style, what remains is an overview of new compiler and virtual machine technologies.

Introduction

Since the early days of the computer industry, many people have been fascinated by the quest for the perfect programming language. The quest is a hard one: creating a new language is no easy task. Very often it fragments an established programming ecosystem, and the basic tools have to be rebuilt for the new language: the compiler, debugger, HTTP stack, IDE, libraries, and countless other building blocks are written from scratch each time. Perfection in programming language design is unattainable, and new ideas arise constantly. We are like Sisyphus, sentenced by the gods to push a boulder up a mountain forever, only to watch it roll back down again and again.

How can we break this vicious cycle? Let's dream about what we would like.

We need a special tool that gives us the following:

- A way to create a new language in just a week

- The language automatically runs as fast as other languages

- It gets a quality debugger, automatically (ideally, without slowing down the program)

- It gets profiling support, automatically

- It gets a quality garbage collector, automatically ... but only if we need one

- The language can use all existing code, regardless of what language that code was written in

- The language can support any programming style, from low-level C or FORTRAN to Java, Haskell, and fully dynamic scripting languages such as Python and Ruby

- It supports both just-in-time and ahead-of-time compilation

- And finally, it supports hot-swapping code (hotswap) in an already running program

And, of course, we want this magic tool to be open source. And let there be ponies. That seems to be everything.

A clever reader has, of course, guessed that I would not have started this article if such a tool did not exist. Its name is a little strange: Graal & Truffle. The name may sound like a pretentious hipster restaurant, but it is in fact a significant research project involving more than 40 scientists from industry and universities. Together they are building new compiler and virtual machine technologies that implement every item on our list.

They have invented a new way to create programming languages quickly, without the usual problems with libraries, optimizing compilers, debuggers, profilers, bindings to C libraries, and the other things a modern programming language needs. This tool promises to set off a wave of innovation in programming languages and, I hope, to reshape the entire IT industry.

That is what we will talk about.

What is Graal and Truffle?

Graal is a research compiler. And Truffle is ... it's such a thing that is difficult to compare with anything. In short, the following comes to mind: Truffle is a framework for creating programming languages by writing an interpreter for an abstract syntax tree.

When you create a new programming language, the first thing to define is its grammar. A grammar is a description of the syntax rules of your language. From the grammar, a tool like ANTLR generates a parser. The output of the parser is a parse tree.

The picture shows a tree, which was obtained after the work of ANTLR for the following line of code:

Abishek AND (country = India OR City = BLR) LOGIN 404 | show name

A parse tree, and the structure derived from it called the abstract syntax tree (AST), is a natural way to express programs. Once you have built such a tree, a running program is only one simple step away: add an "execute" method to the tree node class. When "execute" runs, each node can call its child nodes and combine their results to compute the value of an expression or carry out a statement. And, in principle, that's all!
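To make the "execute" idea concrete, here is a minimal sketch of an AST interpreter in Python. It is not Truffle code: the node classes (`Literal`, `Variable`, `Add`, `Assign`) and the dictionary used as the environment are assumptions made up for this illustration.

```python
from dataclasses import dataclass


class Node:
    """Base class for AST nodes; every node knows how to execute itself."""

    def execute(self, env):
        raise NotImplementedError


@dataclass
class Literal(Node):
    value: object

    def execute(self, env):
        return self.value


@dataclass
class Variable(Node):
    name: str

    def execute(self, env):
        return env[self.name]


@dataclass
class Add(Node):
    left: Node
    right: Node

    def execute(self, env):
        # Call the child nodes and combine their results.
        return self.left.execute(env) + self.right.execute(env)


@dataclass
class Assign(Node):
    name: str
    expr: Node

    def execute(self, env):
        env[self.name] = self.expr.execute(env)
        return env[self.name]


# Hand-built AST for the two statements: x = 2 + 3; x + 10
env = {}
Assign("x", Add(Literal(2), Literal(3))).execute(env)
result = Add(Variable("x"), Literal(10)).execute(env)
print(result)
```

In a real language the tree would come from the parser rather than being built by hand, but the execution model stays the same.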

Take, for example, dynamic programming languages such as Python, JavaScript, PHP, and Ruby. Their dynamism was the result of following the path of least resistance. When you create a language from scratch, burdening it with a static type system or an optimizing compiler can greatly slow its development. The consequence of that choice is poor performance. Even worse, there is a temptation to keep adding features quickly to the simple, slow AST interpreter, and optimizing the performance of those features later will be very difficult.

Truffle is a framework for writing interpreters using annotations and a small amount of additional code. Paired with Graal, Truffle lets you turn such interpreters into JIT-compiling virtual machines (VMs) ... automatically. At peak performance, the resulting runtime can compete with the best existing compilers (hand-tuned and built for one particular language). For example, the JavaScript implementation built this way, TruffleJS, is competitive with V8 in benchmarks.

The RubyTruffle engine is faster than all other Ruby implementations. The TruffleC engine is roughly competitive with GCC. Several languages have already been implemented on Truffle, in varying degrees of readiness:

- JavaScript

- Python 3

- Ruby

- LLVM bitcode, which lets you run programs written in C/C++/Objective-C/Swift

- Another engine that interprets C source code directly, without compiling it to LLVM (read below for the advantages of this approach)

- R

- Smalltalk

- Lua

- Many small experimental languages

To make it clear how easy it is to create these engines, the source code for TruffleJS is only about 80,000 lines, compared to 1.7 million lines of code in V8.

To hell with the details, how can I play with all this?

Graal and Truffle come from Oracle Labs, the part of the Java team that does virtual machine research. GraalVM can be downloaded here. It is an extended Java Development Kit that contains some of the languages from the list above, as well as drop-in replacements for the NodeJS, Ruby, and R command-line tools. The package also includes the teaching language “SimpleLanguage”, which is a good place to start getting to know Graal and Truffle.

What is Graal for?

If Truffle is a framework for creating AST interpreters, then Graal is the thing that makes such interpreters fast. Graal is a state-of-the-art optimizing compiler. It has the following features:

- Can be run in just-in-time and ahead-of-time modes.

- Very advanced optimizations, including partial escape analysis. Escape analysis avoids allocating objects on the heap when that is not really necessary. It was popularized by the JVM, but the optimization is very complex and only a few virtual machines support it. The JavaScript compiler TurboFan used in the Chrome browser started to get escape analysis only at the end of 2015. Graal's version is more advanced and covers a wider range of cases.

- Works together with Truffle-based languages and converts a Truffle AST into optimized native code by means of partial evaluation. Partially evaluating an interpreter with respect to a specific program is called the “first Futamura projection”. [As far as I understand from Wikipedia, the first Futamura projection specializes the interpreter for the source code it runs. That is, if the code does not use some features of the language, those features are “cut out” of the interpreter. The interpreter then “turns into” a compiler that generates native code. Correct me if I got this wrong.]

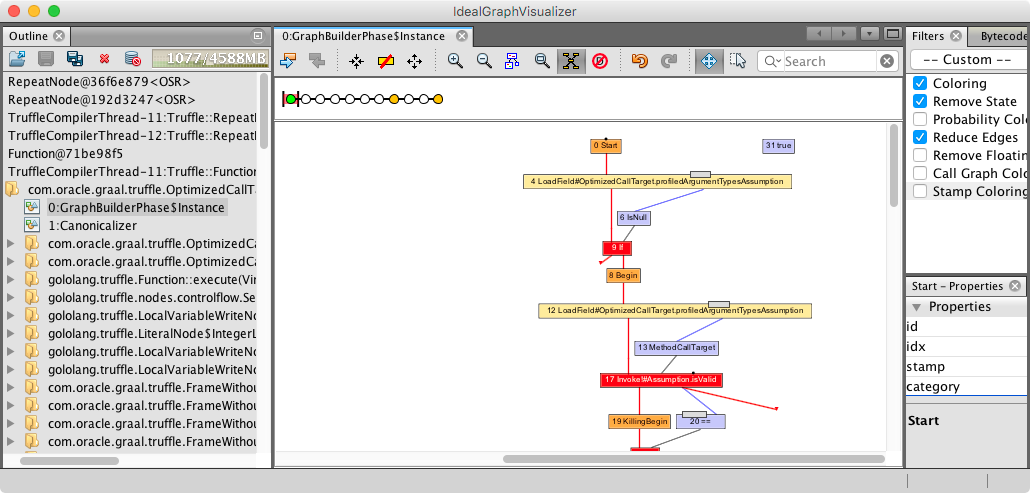

- Contains advanced visualization tools that allow you to study the intermediate representation of the compiler at each stage of optimization.

- Written in Java. So it is much easier to study and experiment with than traditional compilers written in C or C++.

- Starting with Java 9, Graal can be used as a JVM plugin.

IGV visualization
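The partial evaluation item in the list above can be illustrated with a toy example. The sketch below is not how Graal works internally: the specializer `residual` is written by hand for this one tiny interpreter, whereas a real partial evaluator derives the specialized code from the interpreter automatically. The tuple-based program format and all function names are assumptions made up for the illustration.

```python
def interpret(node, env):
    """Generic interpreter: inspects the node kind on every single call."""
    kind = node[0]
    if kind == "lit":
        return node[1]
    if kind == "var":
        return env[node[1]]
    if kind == "add":
        return interpret(node[1], env) + interpret(node[2], env)
    if kind == "mul":
        return interpret(node[1], env) * interpret(node[2], env)
    raise ValueError(f"unknown node kind: {kind}")


def residual(node):
    """Specialize the interpreter to one fixed program.

    All dispatch on the node kind happens here, once. Only the work that
    depends on the runtime environment is left in the generated source.
    """
    kind = node[0]
    if kind == "lit":
        return repr(node[1])
    if kind == "var":
        return f"env[{node[1]!r}]"
    if kind in ("add", "mul"):
        op = "+" if kind == "add" else "*"
        return f"({residual(node[1])} {op} {residual(node[2])})"
    raise ValueError(f"unknown node kind: {kind}")


def compile_program(node):
    """Return the residual source and a callable built from it."""
    source = f"lambda env: {residual(node)}"
    return source, eval(source)


# Program: x * 2 + 1
program = ("add", ("mul", ("var", "x"), ("lit", 2)), ("lit", 1))
source, compiled = compile_program(program)
print(source)
print(compiled({"x": 5}), interpret(program, {"x": 5}))
```

The generated function contains no trace of the interpreter's dispatch logic, which is the effect the first Futamura projection describes: interpreter plus program yields a compiled program.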


From the very beginning, Graal was designed as a multi-language compiler, but its optimizations are particularly well suited to languages with a high level of abstraction and dynamism. Java programs run on it about as fast as with the existing JVM compilers, while Scala programs run about 20% faster. Ruby programs gain 400% over the best other implementation to date (and that is not MRI).

Polyglot

Not tired yet? But this is only the beginning.

Truffle contains a special cross-language framework called Polyglot, which allows Truffle languages to call each other. There is also the Truffle Object Storage Model, which standardizes and optimizes much of the behavior of objects in dynamically typed languages and, at the same time, lets languages share such objects. Do not forget that Graal and Truffle are built on Java technology and can talk to JVM languages such as Java, Scala, and even Kotlin. Moreover, this communication is two-way: Graal-Truffle code can call Java libraries, and Java code can call Graal-Truffle libraries.

Polyglot works in a very unusual way. As you remember, Truffle is a framework for describing abstract syntax tree (AST) nodes. Calls into another language therefore do not go through an extra layer of abstractions and wrappers; instead, the two ASTs are merged into one. The resulting tree is compiled and optimized by Graal as a whole, so any complexity that appears at the boundary between two programming languages can be analyzed and simplified.
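A rough sketch of the tree-merging idea, under loud assumptions: the two toy "languages" A and B, their node classes, and the `languages_in` helper are invented for this illustration and are not the Polyglot API. The point is only that a call node of one language can hold the root node of a function from another language directly, so the whole program is one tree.

```python
class Node:
    language = "?"

    def children(self):
        return []

    def execute(self, args):
        raise NotImplementedError


# --- nodes of hypothetical language A ---
class AConst(Node):
    language = "A"

    def __init__(self, value):
        self.value = value

    def execute(self, args):
        return self.value


class AAdd(Node):
    language = "A"

    def __init__(self, left, right):
        self.left, self.right = left, right

    def children(self):
        return [self.left, self.right]

    def execute(self, args):
        return self.left.execute(args) + self.right.execute(args)


class ACall(Node):
    """Call node of language A; the target may be a root node of any language."""
    language = "A"

    def __init__(self, target, arg_nodes):
        self.target, self.arg_nodes = target, arg_nodes

    def children(self):
        return [self.target, *self.arg_nodes]

    def execute(self, args):
        return self.target.execute([n.execute(args) for n in self.arg_nodes])


# --- nodes of hypothetical language B ---
class BArg(Node):
    language = "B"

    def __init__(self, index):
        self.index = index

    def execute(self, args):
        return args[self.index]


class BMul(Node):
    language = "B"

    def __init__(self, left, right):
        self.left, self.right = left, right

    def children(self):
        return [self.left, self.right]

    def execute(self, args):
        return self.left.execute(args) * self.right.execute(args)


def languages_in(tree):
    """Collect the languages of all nodes reachable from the root."""
    found = {tree.language}
    for child in tree.children():
        found |= languages_in(child)
    return found


b_square = BMul(BArg(0), BArg(0))                         # B: square(x) = x * x
program = AAdd(ACall(b_square, [AConst(7)]), AConst(1))   # A: square(7) + 1
result = program.execute([])
print(result, sorted(languages_in(program)))
```

Because the B function is an ordinary subtree of the A program, a compiler that optimizes whole trees sees both languages at once and has no wrapper layer to look through.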

Because cross-language calls can be optimized this way, the researchers decided to implement a C interpreter on Truffle. We usually think of C as a compiled language, but there is no fundamental reason for that. History knows cases where a C interpreter was used in real programs. For example, the special-effects video editor Shake let users write scripts in C.

Because of the well-known performance problems of scripting languages, critical parts of programs are often rewritten in C using the interpreter's internal APIs. This makes it much harder to speed up the language, because a faster implementation must run not only the interpreter itself but also the C extensions. Global optimization is complicated further by the fact that these extensions rely on assumptions about the internal implementation of the language.

When the authors of RubyTruffle ran into this problem, they came up with an elegant solution: write a special C interpreter that understands not only plain C but also the macros and other constructs specific to Ruby extensions. Once the Ruby and C interpreters are merged through Truffle, the result is a single body of code, and the cost of cross-language calls is eliminated by the optimizer. The result is TruffleC.

You can read a great explanation of how it all works in an article by one of the researchers on this project, Chris Seaton. Or you can study a scientific paper with a detailed description.

Let's do secure memory management in C

C programs run fast. But there is a downside: hackers love them, because it is all too easy to shoot yourself in the foot when working with memory.

The ManagedC language is a continuation of TruffleC that replaces standard memory management with managed allocations and a garbage collector. ManagedC supports pointer arithmetic and other low-level constructs while ruling out a whole class of errors. The price of this reliability is a 15% performance drop compared to GCC. ManagedC also takes full advantage of the “undefined behavior” rules that many C compilers love so much: a program that happens to work with GCC is not guaranteed to run under ManagedC, even though ManagedC itself fully implements the C99 standard.
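As an illustration of how pointer arithmetic and memory safety can coexist, here is a minimal sketch that models a pointer as a pair of a managed buffer and an offset and checks bounds on every access. This representation and the names `ManagedPointer`, `malloc`, and `MemorySafetyError` are assumptions for the example; the sketch does not claim to reproduce ManagedC's actual implementation.

```python
class MemorySafetyError(Exception):
    """Raised when a pointer is dereferenced outside its allocation."""


class ManagedPointer:
    """A C-like pointer represented as (managed buffer, offset)."""

    def __init__(self, buffer, offset=0):
        self.buffer = buffer
        self.offset = offset

    def __add__(self, n):
        # Pointer arithmetic only moves the offset. The check happens on
        # dereference, so forming an out-of-range pointer is not an error yet.
        return ManagedPointer(self.buffer, self.offset + n)

    def _check(self):
        if not 0 <= self.offset < len(self.buffer):
            raise MemorySafetyError(
                f"offset {self.offset} outside allocation of {len(self.buffer)} cells"
            )

    def load(self):
        self._check()
        return self.buffer[self.offset]

    def store(self, value):
        self._check()
        self.buffer[self.offset] = value


def malloc(cells):
    """Allocate a zeroed buffer; the host garbage collector frees it later."""
    return ManagedPointer([0] * cells)


p = malloc(4)
(p + 3).store(42)            # last valid cell: fine
overflow_caught = False
try:
    (p + 4).store(1)         # one past the end: caught instead of corrupting memory
except MemorySafetyError:
    overflow_caught = True
print((p + 3).load(), overflow_caught)
```

A buffer overflow that would silently corrupt memory in ordinary C becomes a well-defined error here, at the cost of a check on each access.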

You can find more information in the paper “Memory safe execution of C on a Java VM”.

Debugging and profiling for free

All developers of programming languages face the problem of missing quality tools. Take Go, for example. For many years the Go ecosystem suffered from poor, primitive, and barely portable debuggers and profilers.

Another problem is adding debugger support to an interpreter. The native code has to stay close to the source code so that a one-to-one correspondence can be established between the current state of the virtual machine and the code the developer wrote. This usually means giving up compiler optimizations and accepting a general slowdown while debugging.

This is where Truffle comes to the rescue. It offers a simple API that lets you build an advanced debugger into the interpreter ... without slowing down the program. The compiler still applies its optimizations, and the state of the program during debugging still looks the way the programmer expects. All of this is possible thanks to the metadata that Graal and Truffle generate while compiling to native code. That metadata is later used to deoptimize parts of the running program and recover the original interpreter state. When a breakpoint, watchpoint, profiling point, or other debugging mechanism is used, the virtual machine rolls the program back to its slow form, adds nodes to the AST to implement the required functionality, and recompiles everything to native code, which is swapped in on the fly.
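The "adds nodes to the AST" step can be sketched as follows. This toy version only shows the tree rewriting; it has no compiler, so the deoptimize and recompile steps described above are absent. The classes `Assign`, `Block`, and `BreakpointNode` and the helper functions are assumptions invented for the example, not the Truffle instrumentation API.

```python
class Assign:
    def __init__(self, name, compute):
        self.name = name
        self.compute = compute        # function: env -> value

    def execute(self, env):
        env[self.name] = self.compute(env)


class Block:
    def __init__(self, statements):
        self.statements = list(statements)

    def execute(self, env):
        for statement in self.statements:
            statement.execute(env)


class BreakpointNode:
    """Wrapper inserted into the tree only while a breakpoint is set."""

    def __init__(self, wrapped, on_hit):
        self.wrapped = wrapped
        self.on_hit = on_hit

    def execute(self, env):
        self.on_hit(dict(env))        # hand the debugger a snapshot of the state
        self.wrapped.execute(env)


def set_breakpoint(block, index, on_hit):
    block.statements[index] = BreakpointNode(block.statements[index], on_hit)


def clear_breakpoint(block, index):
    node = block.statements[index]
    if isinstance(node, BreakpointNode):
        block.statements[index] = node.wrapped


program = Block([
    Assign("x", lambda env: 1),
    Assign("y", lambda env: env["x"] + 1),
    Assign("z", lambda env: env["y"] * 10),
])

hits = []
set_breakpoint(program, 2, hits.append)
env = {}
program.execute(env)                  # breakpoint fires before z is assigned

clear_breakpoint(program, 2)
program.execute({})                   # runs at full speed again, no hook
print(hits, env)
```

Code without a breakpoint contains no debugging hooks at all, which is why an untouched program pays nothing for being debuggable.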

Of course, a debugger is not only a feature of the runtime. Users also need a UI. For that, there is a plugin for the NetBeans IDE that supports any Truffle language.

For details, I refer you to the paper “Building debuggers and other tools: we can have it all”.

LLVM support

Truffle is mainly used to create interpreters for source code. But nothing prevents you from using the framework for other purposes. The Sulong project is a Truffle interpreter for LLVM bitcode.

The Sulong project is still at an early stage of development and has a number of limitations. But in theory, running bitcode with Graal and Truffle will let you work not only with C, but also with C++, Objective-C, FORTRAN, Swift, and maybe even Rust.

Sulong also contains a simple C API for interacting with other Truffle languages via Polyglot. Again, because this API is independent of the programming language and completely dynamic, the resulting code will be aggressively optimized and the overhead will be minimal.

Hot Swap (HotSwap)

Hot swap is the ability to change a program's code while it is running, without a restart. It is one of the main advantages of dynamic programming languages and makes programmers highly productive. There is a scientific paper on implementing hot swap in the Truffle framework (I am not sure whether the support has already been added to Truffle itself). As with the debugger, profiler, and optimizer, developers of Truffle languages will just need to use the new API to support hot swap. That API is much more convenient than reinventing the wheel.
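The basic mechanism behind hot swap can be shown with a small sketch: callers hold an indirection cell instead of the function body, so replacing the body takes effect immediately in code that is already running. The `FunctionSlot` class and the pricing example are assumptions made up for this illustration and say nothing about the API proposed in the paper.

```python
class FunctionSlot:
    """Indirection cell: callers keep the slot, not the function body."""

    def __init__(self, name, body):
        self.name = name
        self.body = body
        self.version = 1

    def call(self, *args):
        return self.body(*args)

    def swap(self, new_body):
        """Replace the implementation without restarting any caller."""
        self.body = new_body
        self.version += 1


def make_handler(price_slot):
    """A long-lived piece of the 'running program' that uses the slot."""
    def handle(quantity):
        return price_slot.call(quantity)
    return handle


price = FunctionSlot("price", lambda quantity: quantity * 10)
handle = make_handler(price)

before = handle(3)                           # uses version 1
price.swap(lambda quantity: quantity * 9)    # new code arrives at run time
after = handle(3)                            # same handler, new behavior
print(before, after, price.version)
```

The handler created before the swap picks up the new code on its next call, without being rebuilt.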

Where is the catch?

As you know, nothing in life is perfect. Graal and Truffle offer a solution for almost every wish on our original list, and developers of programming languages should be delighted. But this convenience has a price:

- Warm up time

- Memory consumption

Converting an interpreter into optimized native code requires observing how the program behaves under real conditions. This is, of course, nothing new. The term “warm-up” (getting faster as the program runs) is familiar to everyone who uses modern virtual machines such as HotSpot or V8. But Graal pushes this further and takes profile-guided optimization to a new level. As a result, GraalVM depends very heavily on profiling information.

It is for this reason that researchers measure only peak performance: they let the program run for some time first, and the time spent warming up is not counted. For server applications this is not a big problem, since only peak performance matters there. In other applications, though, a long warm-up can rule Graal out. In practice you can see this with the Octane benchmark (you can find it in the JDK technical preview): the final score is slightly lower than Chrome's, even though Graal's rather long warm-up (15-60 seconds) is not counted in the score.

The second problem is memory consumption. If a program relies heavily on speculative optimizations, the VM has to store tables of compiler metadata for deoptimization, that is, for the reverse mapping from machine state back to the abstract interpreter. This metadata takes up as much space as the compiled code itself, even with all the packing and compression. Do not forget that the original AST or bytecode must also be kept in case the speculative assumptions in the native code are violated. All of this clutters RAM mercilessly.

Add to this the fact that Graal, Truffle, and the Truffle-based languages are themselves written in Java. This means the compiler, too, needs time to warm up before it works at full speed. And, of course, memory consumption for basic data structures grows, and these basic compiler structures are subject to garbage collection.
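Both costs come from the same cycle of profiling, speculating, and falling back. The sketch below imitates that cycle for a single addition node: it runs a generic path while collecting a type profile, switches to a guarded fast path after a warm-up threshold, and "deoptimizes" when the guard fails. The class `AddNode`, its states, and the threshold of three calls are assumptions for the illustration; no real compilation takes place.

```python
class AddNode:
    """Starts generic, specializes to ints after warm-up, falls back on guard failure."""

    WARMUP = 3   # hypothetical number of profiled calls before specializing

    def __init__(self):
        self.state = "interpreted"
        self.calls = 0
        self.only_ints = True
        self.deopts = 0

    def execute(self, a, b):
        if self.state == "int-specialized":
            if type(a) is int and type(b) is int:   # guard for the speculation
                return a + b                        # fast path
            # Assumption violated: throw the fast path away and start over.
            self.state = "interpreted"
            self.calls = 0
            self.only_ints = True
            self.deopts += 1
        return self._generic(a, b)

    def _generic(self, a, b):
        # The slow path doubles as the profiler.
        self.calls += 1
        self.only_ints = self.only_ints and type(a) is int and type(b) is int
        if self.calls >= self.WARMUP and self.only_ints:
            self.state = "int-specialized"
        if isinstance(a, str) or isinstance(b, str):
            return str(a) + str(b)
        return a + b


node = AddNode()
for _ in range(3):
    node.execute(1, 2)                 # warm-up: profile says "ints only"
state_after_warmup = node.state
fast = node.execute(4, 5)              # served by the guarded fast path
slow = node.execute("a", 1)            # guard fails, node deoptimizes
print(state_after_warmup, fast, slow, node.state, node.deopts)
```

The generic path has to stay around for the fallback, which is the toy counterpart of keeping the original AST and the deoptimization metadata in memory.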

The people behind Graal and Truffle are, of course, aware of these problems and are already working on solutions. One of them is called SubstrateVM. It is a virtual machine written entirely in Java and compiled ahead of time using the AOT mode of Graal and Truffle. Functionally, SubstrateVM is not as advanced as HotSpot: it cannot dynamically load code from the Internet, and its garbage collector is fairly simple. But because this virtual machine is compiled once, in advance, it saves a lot of warm-up time later.

One more subtlety cannot be passed over in silence. Our initial wish list included an item about open source. Graal and Truffle are large and very expensive projects written by experienced people, so their development cannot be cheap. To date, only some of the parts described above are distributed as open source.

All code can be found on GitHub or other mirrors:

- Graal & Truffle

- Extensible version of HotSpot (the basis of the project)

- RubyTruffle

- Sulong (LLVM bitcode support)

- R, Python 3, and Lua implementations (some of them were created as hobby or research projects)

The following parts are not open source:

- TruffleC / ManagedC

- TruffleJS / NodeJS API

- SubstrateVM

- AOT support

TruffleJS can be downloaded for free as part of the GraalVM preview release. I do not know how to play around with TruffleC or ManagedC. The Sulong project covers some of this functionality.

What to read

The canonical, comprehensive, all-in-one tutorial on Graal and Truffle is this talk: "One VM to rule them all". It takes three hours, you have been warned. Only for real enthusiasts.

There are more tutorials on using Truffle:

What's next?

At the beginning, I said that we can remove the barriers to creating new programming languages. That will open the door to a new wave of language innovation. Here is a short list of experimental languages. I hope the list will grow in the future.

If you try the new ideas offered by Graal and Truffle, you will find that your own language is usable for real work from the first days of its existence. That, in turn, will grow a community of people who can deploy your language in their projects. This creates a virtuous cycle: the community gives feedback and ideas, and you implement improvements. Overall, this shortens the path from experiment to finished implementation. That is exactly what I expect.

Source: https://habr.com/ru/post/319424/
