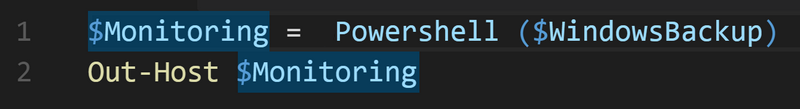

PowerShell Practice: Monitoring Windows Backup Storage

This article describes a PowerShell script that monitors the storage of backups created by Windows Backup, checking how recent the backups are and how much data they occupy. It also gives examples of some useful PowerShell programming techniques.

Long intro

It is good to have a backup management system that runs on a schedule and promptly informs the administrator about the state of his sometimes complicated household. Ideally it should also be inexpensive, and at first glance that is easy: the choice of both commercial and free systems of this kind is quite wide nowadays. However, the effort needed to deploy such a system and keep it running, as well as the hardware it requires, only pays off above a certain minimum complexity of the IT infrastructure whose data is to be backed up. Deploying, say, Bacula for a small office with about a dozen Windows workstations and two or three servers seems an unproductive use of time and money relative to what the business actually produces. The only positive result would be the practical experience gained by the system administrator. Looking at decentralized backup systems that offer a good feature set and relatively high autonomy leads to commercial products such as Veeam Endpoint Backup.

Unfortunately, whatever advantages the free editions of such programs may have over the built-in Windows Backup, those advantages are not relevant to everyone or in every case, and they are not always obvious. Meanwhile, Windows Backup's "native" support for the shadow copy mechanism is, frankly, a major advantage. In short, one sometimes feels that all roads lead to Windows Backup itself. In reality, choosing and organizing a backup system is an individual matter in each specific case, and subjective factors play a part in the decision. In any case, though, one wants a reliable, stable system that is also easy to maintain. Standalone backup agents, however, cannot offer organization-wide monitoring. With that, I think, it is time to end this preface before it gets boring.


Our setting is as follows: about a dozen computers run Windows 7 and Windows 10, and one server runs Windows Server 2008. There is also a NAS purchased for use as backup storage. The task is to provide simple but reasonably reliable backups without any additional budget. This is harder than it sounds, partly because the organization has no full-time system administrator: all IT problems are handled by a part-time administrator with whatever help some users can offer. It was decided to use Windows Backup. Each computer saves its data to a dedicated folder named “netbackup” on a remote machine with the network name “SA-NAS”, following its own local schedule. This solution works, and works rather stably. Each computer regularly sends backup reports to the system administrator's e-mail (a script reads them from the system logs). But analyzing the size and “freshness” of the created archives remains an open question, since Windows Backup shares this information only interactively. The system administrator rolled up his sleeves and started writing another script. To do so, he first had to figure out the structure of the backup data created by Windows Backup. So…

What does Windows Backup create and how is it organized?

To answer this question, one has to ask a counter-question, in true Odessa fashion: “What exactly do you mean?” As is well known, Windows Backup can create (a) backup copies of selected elements of a computer's file system, i.e. file archives (file backup), and (b) images of disk volumes (image backup). We choose the backup type for a specific computer when configuring it with the corresponding Control Panel applet. We can specify archiving of particular folders and files, creation of images of selected disk volumes, or a combination of the two. Describing this trivial operation would take us away from the topic of the article, so I refer the reader to the relevant documentation and to Internet search engines. Below I present the results of my own study of Windows Backup data structures. Given the relative simplicity of the problem, it would be immodest to call this by the fashionable term “reverse engineering”. Note also that in the rest of the text I use the term “folder” for file system directories. This avoids confusion with the term “catalog”, which is reserved for Windows Backup catalog files.

The data sets that Windows Backup creates on the target media differ in structure between the two backup modes.

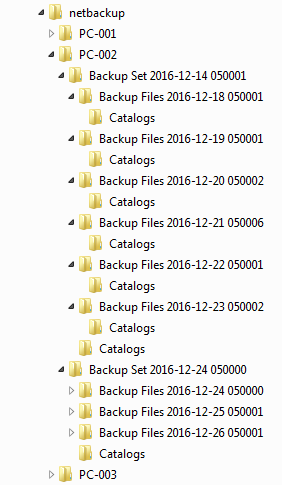

The first figure shows a typical folder structure created in the target network folder “netbackup” by a file-mode backup. First, the service file “MediaID.bin” appears in the root folder. As can be seen, a separate subfolder is created for each computer (“PC-001”, “PC-002”, etc.) at the first level of the hierarchy, the machine level. Each of these folders contains at least two service files: “MediaID.bin” and “Desktop.ini”.

As Windows Backup runs, second-level subfolders (the archive level) appear in each computer's folder, with names like “Backup Set <date> <time>”. Each of them contains one archive: the initial full backup of the selected data and the subsequent incremental backups based on it. Each archive-level folder also contains a subfolder named “Catalogs” with a file named “GlobalCatalog.wbcat”, which holds the catalog of this archive.

This file is very useful for analyzing the data structure and its state. First, its mere presence in a “Catalogs” folder indicates that this folder and its siblings lie inside an archive-level folder, i.e. at the volume level. Second, its last modification time tells us when the last backup operation that updated this archive was performed.

Both the initial full copy and the subsequent incremental copies are stored in separate volume-level subfolders with names like “Backup Files <date> <time>”. As at the archive level, each volume-level folder contains a “Catalogs” subfolder. Unlike its namesake at the archive level, however, it holds quite a few files with the “.wbcat” and “.wbverify” extensions, which make up the catalog of that specific archive volume. Apart from the “Catalogs” subfolder, each folder at this level contains only an arbitrary number of ZIP archives, which together hold the data of that volume. Everything is quite simple and, pleasantly, in an emergency the data can be extracted manually by unpacking the ZIP archives.
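You can inspect such a chain of volumes with a small helper along the following lines. It is a sketch that assumes the layout described above. The function name Get-FileBackupVolumeChain is hypothetical and not part of the attached script. Sorting by folder name also assumes that the <date> <time> part of the names sorts chronologically.

```powershell
# Sketch: list the volume folders ("Backup Files <date> <time>") of one
# file-mode archive folder, with the number and total size of their ZIP files.
# Assumes the layout described above; the function name is hypothetical.
function Get-FileBackupVolumeChain ([string] $ArchiveFolder) {
    # Sorting by name assumes the <date> <time> suffix sorts chronologically
    Get-ChildItem -Path $ArchiveFolder -Directory -Filter "Backup Files*" |
        Sort-Object Name |
        ForEach-Object {
            # ZIP files lie directly in the volume folder, next to "Catalogs"
            $zips = @(Get-ChildItem -Path $_.FullName -File -Filter "*.zip")
            $bytes = 0L
            foreach ($zip in $zips) { $bytes += $zip.Length }
            [pscustomobject]@{
                Volume   = $_.Name
                ZipCount = $zips.Count
                Bytes    = $bytes
            }
        }
}

# Usage (example path):
# Get-FileBackupVolumeChain "\\SA-NAS\netbackup\PC-001\Backup Set <date> <time>"
```

The first row of the output is then the initial full backup, and the remaining rows are the incremental volumes that followed it.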

Once Windows Backup has been configured and started, you can influence it about as much as a stone already released from a slingshot. Decisions to stop extending the current archive with incremental volumes and to start a new one are made somewhere deep in the program's code. They become visible from outside only when a new folder appears at the archive level. I was unable to figure out the algorithm behind these decisions. A logical assumption, and one consistent with experience, is that the chain of incremental volumes can only be continued if the last backup operation completed successfully. Moreover, having spawned a new archive, Windows Backup completely forgets about its predecessor and silently leaves its fate to the system administrator. This, of course, immediately eats into free disk space until the administrator deletes the outdated archive folders one way or another. Presumably this behavior is meant to keep multiple backup versions of the same file (see the “Previous Versions” tab of the file properties window in Explorer). Well, Windows Backup is what it is: one can hardly expect a virtually free product to have no limitations.

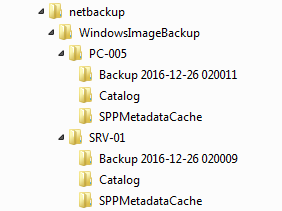

With the storage structure of file-mode archives now reasonably clear, we turn to disk volume images. The folder structure in this case (see the figure) is quite similar to that of Windows Backup file archives, but there are also significant differences:

- The first level of the folder hierarchy is the “WindowsImageBackup” folder, which is created in the target folder (“netbackup”) when backing up in disk image mode. The subfolders for individual computers are created inside “WindowsImageBackup” and form the machine level.

- Inside each computer's subfolder (at the archive level) there are only three folders. Two of them have fixed names, “Catalog” and “SPPMetadataCache”. The third, the archive folder, has a name like “Backup <date> <time>”.

Besides these three subfolders, each machine-level folder always contains the service file “MediaID”, which has no extension. The “Catalog” subfolder, in turn, always contains two files: “GlobalCatalog” and “BackupGlobalCatalog”.

The archive folder (named like “Backup <date> <time>”) is renamed every time this computer is backed up in disk image mode. This is logical, since each such backup is a full backup of the volumes, not an incremental one. It remains a mystery, however, why in this mode the outdated archive is deleted automatically right away, while outdated file-mode archives stay where they are. In any case, this folder contains a set of VHD (virtual hard disk) image files and a group of auxiliary XML files, of which we single out “BackupSpecs.xml”. It apparently stores the parameters of a specific archive. It is also written after the disk volume image files themselves have been created, so its timestamp tells us when the backup operation was completed.
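A quick way to check that a machine folder really has this layout is sketched below. The helper Test-ImageBackupLayout is hypothetical and not taken from the attached script. It tests for the elements listed above, using the “Catalog” subfolder name, and returns the ones it could not find.

```powershell
# Sketch: check a machine-level folder under "WindowsImageBackup" against the
# layout described above and return the elements that are missing.
# Hypothetical helper; an empty result means the layout looks as expected.
function Test-ImageBackupLayout ([string] $MachineFolder) {
    $missing = @()
    $expected = @(
        "MediaID",
        (Join-Path "Catalog" "GlobalCatalog"),
        (Join-Path "Catalog" "BackupGlobalCatalog"),
        "SPPMetadataCache"
    )
    foreach ($item in $expected) {
        if (-not (Test-Path -LiteralPath (Join-Path $MachineFolder $item))) {
            $missing += $item
        }
    }
    # The archive folder "Backup <date> <time>" should hold BackupSpecs.xml
    $archives = @(Get-ChildItem -Path $MachineFolder -Directory -Filter "Backup *" |
        Where-Object { Test-Path -LiteralPath (Join-Path $_.FullName "BackupSpecs.xml") })
    if ($archives.Count -eq 0) { $missing += "Backup <date> <time>/BackupSpecs.xml" }
    # The leading comma keeps an empty array from being unrolled to $null
    return ,$missing
}

# Usage (example path):
# Test-ImageBackupLayout "\\SA-NAS\netbackup\WindowsImageBackup\PC-001"
```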

That is essentially all the information about the storage structure of Windows Backup archives that matters for our purpose. I see no point in examining the “Desktop.ini” files that Windows habitually creates in some folders, since they play no role in our monitoring.

Our algorithms

Let us define the information we would like to obtain automatically. Let it be the following set of attributes:

- the list of machines for which Windows Backup archives exist, and the archive types;

- the date/time of the last update of the most recent archive;

- the size of the most recent archive;

- the folder name/path of the most recent archive.

For Windows Backup archives created in file mode, the information-gathering algorithm is as follows:

- Enumerate the first-level subfolders of the target folder and identify those that contain the file “MediaID.bin”. This file marks the root folder of an individual machine's archives (hereinafter, for brevity, the CPAM).

- In each CPAM found, look among its subfolders for “Catalogs” folders containing the file “GlobalCatalog.wbcat”.

- Select the newest of the “GlobalCatalog.wbcat” files found in the previous step. Take the folder that contains its parent “Catalogs” folder as the location of the most recent file-mode archive (hereinafter, the CAA). Take the last modification time of this file as the time when the last volume of this archive was completed.

- Sum the sizes of the ZIP files in the subfolders of the CAA folder and take this value as the size of the CAA.

- Build and return a human-readable representation of the collected information: the name (path), size and creation time of the CAA for each machine in the CPAM list.

For disk volume image archives, the information-gathering algorithm is as follows:

- Enumerate the first-level subfolders of the “WindowsImageBackup” folder inside the target folder and identify those that contain the file “MediaID”. This file marks the root folder of an individual machine's archives (again, the CPAM).

- In each CPAM, look for files named “BackupSpecs.xml” in its subfolders. Just in case, we allow for the possibility of several archive folders rather than just one.

- Select the newest of the “BackupSpecs.xml” files found in the previous step and take the folder containing it as the location of the most recent disk image archive (again, the CAA). Take the last modification time of this file as the time when this archive was completed.

- Sum the sizes of the VHD files in the CAA folder and take this value as the size of the CAA.

- Build and return a human-readable representation of the collected information: the name (path), size and creation time of the CAA for each machine in the CPAM list.

So far everything looks simple enough to implement, including as a PowerShell script, so let's get started.

PowerShell Script

In this section we look at the key elements of the script. For the implementation details omitted here, please refer to its source code, which is attached to the article. Note that the user-interface strings in the attached script are in English. The script was developed for an English-language environment, and I did not want to maintain separate versions of the code for different languages. A multilingual interface, in turn, would be unnecessarily complicated for such a simple tool.

Processing file mode archives

First, we get the list of CPAMs:

```powershell
function Get-MachineListFB ($WinBackupRoot) {
    $MachineRoots = @{}
    Get-ChildItem -Path $WinBackupRoot -Recurse -Depth 1 -File -Filter "MediaID.bin" |
        Where-Object {$_.Directory.FullName -ne $WinBackupRoot} |
        foreach {
            $MachineRoots[$_.Directory.Name]=$_.Directory.FullName
        }
    return $MachineRoots
}
```

The Get-MachineListFB function takes one parameter: the full path of the root folder of the Windows Backup storage. As you can see, there is nothing intricate in its code. The Get-ChildItem cmdlet returns the “MediaID.bin” files located in the specified folder and one level below it. The pipeline passes this list to the Where-Object cmdlet. Where-Object lets through only the files that lie in subfolders rather than in the root of the specified folder, since, as we remember, the root folder of the Windows Backup storage also contains a “MediaID.bin” file. Finally, the returned hash table is filled with the names and full paths of the folders containing the files found.

A remark is in order about using the Get-ChildItem cmdlet to select files and folders by a given criterion. As you can see, the name criterion is passed in the "-Filter" parameter. However, Get-ChildItem has another parameter that can do the same job: "-Include". What is the difference, and how should you choose between them? With "-Filter", PowerShell delegates the selection to the provider that gives access to the particular data store (in our case, the disk file system). With "-Include", PowerShell uses its own built-in filtering mechanism instead. For us as users, the difference shows up in:

- Speed: filtering by the provider can be (and in our case definitely is) faster than PowerShell's own filtering;

- Capabilities: when "-Include" is used together with "-Recurse", the "-Depth" parameter has no effect (at least in PowerShell 5 with the file system provider; this was established experimentally).
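You can check the difference on your own data with a sketch like the one below. It runs the same search both ways and reports the number of hits and the elapsed time. The function name Compare-FilterInclude is hypothetical. Timings will depend on the storage, and the "-Include" hit count with "-Depth" may differ between PowerShell versions, which is exactly what the experiment is meant to reveal.

```powershell
# Sketch: run the same name-based search with -Filter and with -Include
# and report hit counts and elapsed time. Hypothetical helper.
function Compare-FilterInclude ([string] $Root, [string] $Name = "MediaID.bin", [int] $Depth = 1) {
    $sw = [System.Diagnostics.Stopwatch]::StartNew()
    # -Filter: the pattern is handed to the FileSystem provider
    $byFilter = @(Get-ChildItem -Path $Root -Recurse -Depth $Depth -File -Filter $Name)
    $filterMs = $sw.ElapsedMilliseconds

    $sw.Restart()
    # -Include: PowerShell filters the returned items itself
    $byInclude = @(Get-ChildItem -Path $Root -Recurse -Depth $Depth -File -Include $Name)
    $includeMs = $sw.ElapsedMilliseconds

    [pscustomobject]@{
        FilterHits  = $byFilter.Count
        FilterMs    = $filterMs
        IncludeHits = $byInclude.Count   # more hits than FilterHits means -Depth was ignored
        IncludeMs   = $includeMs
    }
}

# Usage: Compare-FilterInclude "\\SA-NAS\netbackup"
```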

Next, we implement the CAA detection function:

```powershell
function Get-LastFileBackupSet ($MachineRoot) {
    $objLB = $null
    try {
        $LastWbcat = Get-ChildItem -Path $MachineRoot -Recurse -Depth 2 `
                -File -Filter "GlobalCatalog.wbcat" |
            Where-Object {$_.Directory.Name -eq "Catalogs"} |
            Sort-Object LastWriteTime |
            Select-Object -Last 1 LastWriteTime, Directory, `
                @{Name="Machine";Expression={$_.Directory.Parent.Parent.Name.ToUpper()}}, `
                @{Name="Path";Expression={$_.Directory.Parent.FullName}}, `
                @{Name="Name";Expression={$_.Directory.Parent.Name}}
        $ZIPFiles = ( Get-ChildItem $LastWbcat.Directory.Parent.FullName -Recurse -Depth 2 `
                -File -Filter "*.zip" |
            Select-Object Length |
            Measure-Object -Property Length -Sum )
    }
    finally {
        if (($ZIPFiles) -and ($LastWbcat)) {
            $objLB = @{
                Machine = $LastWbcat.Machine
                Path    = $LastWbcat.Path
                Name    = $LastWbcat.Name
                Updated = $LastWbcat.LastWriteTime
                Size    = $ZIPFiles.Sum
            }
        }
    }
    return $objLB
}
```

A note for PowerShell newcomers: the backtick character at the end of some lines is PowerShell's standard line-continuation character. Here it is used only to make the code examples in the article easier to read.

Let us walk through the function's logic. Like the previous function, it takes a single parameter: the full path of the root folder of an individual machine's file-mode archives. We determine these paths in advance, with the Get-MachineListFB function, for all machines whose archives were found. The call to Get-ChildItem returns a list of objects describing the files named "GlobalCatalog.wbcat" found in the subfolders of the specified folder. This list is passed to the Where-Object cmdlet, which keeps only the files whose parent folder has the given name ("Catalogs"). The next stage of our pipeline is the Sort-Object cmdlet, which sorts the remaining files by last write time in ascending order. The Select-Object cmdlet then picks the last (i.e. newest) element of the sorted list. To save machine resources, it also narrows the properties of the returned object to LastWriteTime and Directory.

Everything up to this point could be called “simple”. Next, the Select-Object cmdlet attaches additional custom properties to each returned object (PowerShell, it turns out, lets you do this on the fly). The returned object therefore has two “built-in” properties of the source item, Directory and LastWriteTime. It also has the custom properties Machine, Path and Name, which we define and compute on the fly (in hash table form). Custom properties of this kind are defined in the following format:

```powershell
@{Name = "<property_name>"; Expression = {$_.<property>}}
```

The expression that computes the property value can be arbitrary and unrelated to the data being processed (for example, Get-Date). It can also use the properties and methods of the current pipeline item $_, as this function's code does. As the function code shows, such constructs are simply listed among the properties passed to the Select-Object cmdlet.
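Here is a minimal, self-contained illustration of the same technique, applied to a single file created in a temporary folder. The folder names are made up to mimic a file-mode archive. The third calculated property shows an expression that does not depend on $_ at all.

```powershell
# Minimal demo of Select-Object calculated properties, mirroring the pattern
# in Get-LastFileBackupSet. The folder names are made up for illustration.
$demoRoot = Join-Path ([System.IO.Path]::GetTempPath()) ("calcprop_" + [guid]::NewGuid())
$catalogs = Join-Path $demoRoot (Join-Path "pc-001" (Join-Path "Backup Set 2020-01-01 100000" "Catalogs"))
New-Item -ItemType Directory -Path $catalogs -Force | Out-Null
$file = New-Item -ItemType File -Path (Join-Path $catalogs "GlobalCatalog.wbcat")

$info = $file | Select-Object LastWriteTime,
    @{Name = "Machine"; Expression = { $_.Directory.Parent.Parent.Name.ToUpper() }},
    @{Name = "Name";    Expression = { $_.Directory.Parent.Name }},
    @{Name = "Checked"; Expression = { Get-Date }}   # does not use $_ at all

$info | Format-List
```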

Returning the result as a composite object is convenient because the different parameters derived from the same input data do not have to be computed separately. In particular, the function we have just reviewed tells us not only the name of the CAA folder but also its full path, the timestamp of the archive's last update, and its size.

Next, the function computes the total size of the ZIP files that make up this archive. It selects files with the .zip extension in all subfolders of the archive folder, i.e. the parent of the “Catalogs” folder that contains GlobalCatalog.wbcat. The call to Get-ChildItem returns a list of files, which is passed to the Select-Object cmdlet. Select-Object keeps only the Length property (for file system objects, this is the file size) and passes the result on to the Measure-Object cmdlet, which sums the sizes.

The construct

```powershell
($ZIPFiles) -and ($LastWbcat)
```

checks whether the $ZIPFiles and $LastWbcat variables hold values, as a basic way to detect error situations. This is a common PowerShell technique. We did not initialize these variables beforehand, so if either of them has not received a valid value by the time of the check (i.e. is $null), the expression evaluates to false. It works much like the If Not IsNull(…) construct in Visual Basic. If the check passes, the computed values are assigned to the fields of the hash table $objLB, which our function returns.
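The following snippet shows how a few typical values behave in a Boolean context, which is what this check relies on. It is a general illustration rather than code from the script.

```powershell
# How some typical values behave in a Boolean context
# (the basis of checks like ($ZIPFiles) -and ($LastWbcat)).
$samples = [ordered]@{
    'null'         = $null
    'empty string' = ''
    'zero'         = 0
    'empty array'  = @()
    'text'         = 'GlobalCatalog.wbcat'
    'date'         = Get-Date
}
foreach ($key in $samples.Keys) {
    '{0,-12} -> {1}' -f $key, [bool]($samples[$key])
}
```

The first four values come out as False and the last two as True. Any variable that was never assigned behaves like the first one.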

Determining the CAA parameters for a particular machine essentially completes our computational algorithm. Everything about the desired CAA is now known, and this “everything” is stored in the fields of the object that the Get-LastFileBackupSet function returns:

- Machine: the network name of the computer,

- Path: the full path of the CAA folder for this computer,

- Name: the name of the CAA folder (it is meaningful in itself and identifies the archive),

- Updated: the date of the last update,

- Size: the total size of the archive, in bytes.
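As a design alternative (not what the attached script does), the same five fields could be returned as a [pscustomobject] instead of a hash table. That makes it easy to sort several results or display them with Format-Table. The helper New-BackupSetInfo and the sample values are hypothetical.

```powershell
# Alternative sketch: the five fields as a [pscustomobject] instead of a hash
# table. The helper and the sample values are hypothetical.
function New-BackupSetInfo ([string] $Machine, [string] $Path, [string] $Name,
                            [datetime] $Updated, [long] $Size) {
    [pscustomobject]@{
        Machine = $Machine
        Path    = $Path
        Name    = $Name
        Updated = $Updated
        Size    = $Size
    }
}

$now  = Get-Date
$sets = @(
    New-BackupSetInfo "PC-002" "\\SA-NAS\netbackup\PC-002\Backup Set B" "Backup Set B" $now (2GB)
    New-BackupSetInfo "PC-001" "\\SA-NAS\netbackup\PC-001\Backup Set A" "Backup Set A" $now.AddDays(-3) (1GB)
)
# Oldest archive first; Size is in bytes
$sets | Sort-Object Updated | Format-Table Machine, Name, Updated, Size
```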

It remains to present the collected information. Since the consumer is the system administrator, we will not invent any visual refinements. We simply provide two output formats: plain text and an HTML table. For each format we create two functions: the first builds a data row for an individual computer, and the second assembles a titled table from such rows. In the script, the row function is defined before the table function that calls it. PowerShell executes a script from top to bottom, so a function must be defined before it is called, and there is no way to forward-declare function prototypes.

```powershell
function Get-LastFileBackupSetSummaryTXTRow ($MachineRoot) {
    $ret = ""
    $Now = Get-Date
    $LastBT = Get-LastFileBackupSet ($MachineRoot)
    if ($LastBT) {
        $ret = "Machine: " + $LastBT.Machine + "; Archive: " + $LastBT.Name + `
            "; Age: " + ($Now - $LastBT.Updated).Days + " days (" + `
            $LastBT.Updated + "); Size: " + ('{0:0} {1}' -f ($LastBT.Size/1024/1024), "MB")
    }
    else {
        $ret = "No file backup archives found"
    }
    return $ret
}

function Get-LastFileBackupDatesTXT ($WinBackupRoot) {
    $table = "File backup archives:`r`n"
    $MachineRoots = Get-MachineListFB ($WinBackupRoot)
    foreach ($machine in $MachineRoots.Keys) {
        $line = Get-LastFileBackupSetSummaryTXTRow($MachineRoots[$machine])
        $table += -join($line, "`r`n")
    }
    return $table
}
```

The collector function Get-LastFileBackupDatesTXT is quite trivial: it builds, step by step, a text string containing a titled table. The table rows are produced by the Get-LastFileBackupSetSummaryTXTRow function and "glued together" with the "-join" operator. Sources describe "-join" variously as an operator or a method, and it has several syntactic forms. Here we use the unary, "function-like" form, which concatenates the elements of the list without a separator. The second element is a string constant made up of the carriage-return and line-feed characters ("`r" and "`n"), i.e. the standard Windows line break for text files.
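A short example (general PowerShell, not from the script) shows how the unary form used here differs from the more familiar binary form of "-join":

```powershell
# Unary -join concatenates the list elements with no separator;
# binary -join puts the right-hand string between elements.
$rows  = @("Machine: PC-001", "Machine: PC-002")
$table = "Report:`r`n"
foreach ($line in $rows) {
    $table += -join($line, "`r`n")     # the same as $line + "`r`n"
}
$joined = $rows -join "`r`n"          # separator only between rows, none at the end
```

The unary form therefore leaves a line break after every row, including the last one, while the binary form does not.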

The Get-LastFileBackupSetSummaryTXTRow function receives the path to a CPAM and determines the CAA for the corresponding machine. Since this result is a composite value, the function extracts the individual data elements from it, labels them, and assembles a readable string. Here PowerShell again lets us benefit from the object nature of its values. The difference between two date/time values is itself an object (a TimeSpan), obtained by simple subtraction without calling any extra functions. We only need to specify the unit in which we want the result. We do that by reading the Days property of the resulting object, since days are what interest us.
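Here is a tiny illustration of this date arithmetic with fixed timestamps. Note that Days is only the whole-day component of the interval, while TotalDays keeps the fractional part.

```powershell
# Subtracting two [datetime] values gives a [timespan].
$updated = [datetime]"2020-03-01 08:00"
$now     = [datetime]"2020-03-04 20:00"
$age     = $now - $updated             # 3 days 12 hours
"Whole days: {0}" -f $age.Days         # Days is the whole-day component (3 here)
"Total days: {0}" -f $age.TotalDays    # TotalDays keeps the fraction (3.5 here)
```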

To represent the archive size in megabytes, the following construct was used:

```powershell
('{0:0} {1}' -f ($LastBT.Size/1024/1024), "MB")
```

This is the format operator, the counterpart of the formatting functions found in many programming languages. Inside the outer parentheses (kept here for readability) come, in order: the format string, the operator itself ("-f"), and the list of values to insert. Our format string contains two format items, each enclosed in curly braces: a number with no fractional part, and a value with no formatting specified. Since there is a space between the format items, the result string also has a space between the values. The operator is followed by two values: an expression that computes the size in megabytes, and a unit string. The output is a string of the form "123 MB". In other words, PowerShell converts the numeric value to text according to the specified number format.
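Wrapped in a small function (the name Format-SizeMB is hypothetical), the same format expression behaves as follows:

```powershell
# '{0:0}' prints a number without fractional digits (rounded);
# '{1}' inserts the second value unchanged.
function Format-SizeMB ([long] $Bytes) {
    '{0:0} {1}' -f ($Bytes / 1024 / 1024), "MB"
}

Format-SizeMB 5242880      # 5 MB
Format-SizeMB 1234567890   # 1177 MB
```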

If the CAA value is empty, Get-LastFileBackupSetSummaryTXTRow returns a string reporting the error.

The HTML representations are built in a similar way. In essence, they differ only in that simple HTML tags are inserted into the generated lines. We do not show that code here, since it adds nothing new to the algorithms of our problem, but you can study it in the source code of the script attached to the article. We only note that the HTML formatting highlights in color the rows whose CAA is more than one day old, to draw the administrator's attention.
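For readers who want an idea of what such a row might look like, here is a sketch. It is not the author's code, which is in the attached script. The function name New-BackupHtmlRow, the table layout and the highlight color are all assumptions. Only the rule "highlight archives older than one day" comes from the text above.

```powershell
# Hypothetical sketch of one HTML table row; not the author's implementation.
# Rows whose archive is more than one day old get a highlighted background.
function New-BackupHtmlRow ([string] $Machine, [string] $Name, [datetime] $Updated,
                            [long] $Size, [datetime] $Now = (Get-Date)) {
    $age   = $Now - $Updated
    $style = if ($age.TotalDays -gt 1) { ' style="background-color:#ffcccc"' } else { '' }
    $cells = @(
        [System.Net.WebUtility]::HtmlEncode($Machine),
        [System.Net.WebUtility]::HtmlEncode($Name),
        $age.Days,
        ('{0:0} MB' -f ($Size / 1MB))
    )
    "<tr$style><td>" + ($cells -join '</td><td>') + "</td></tr>"
}
```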

Processing disk volume image archives

We get the list of CPAMs as in the previous case, taking into account how archives of this mode are stored:

```powershell
function Get-MachineListIB ($WinBackupRoot) {
    $MachineRoots = @{}
    $ImageBackupRoot = $WinBackupRoot+"\WindowsImageBackup"
    Get-ChildItem -Path $ImageBackupRoot -Recurse -Depth 1 -File -Filter "MediaID" |
        foreach {
            $MachineRoots[$_.Directory.Name]=$_.Directory.FullName
        }
    return $MachineRoots
}
```

That is, we scan the “WindowsImageBackup” subfolder of the storage's root folder one level deep, looking for marker files named "MediaID" that indicate a CPAM.

As with file-mode archives, we determine the CAA, this time with the Get-LastImageBackupSet function. The report representations are built by the Get-LastImageBackupSetSummaryTXTRow and Get-LastImageBackupDatesTXT functions for plain text, and by their HTML counterparts (such as Get-LastImageBackupSetSummaryHTMLRow). Again, the pair of functions that generates the HTML representation is not shown here (see the attached source code).

```powershell
function Get-LastImageBackupSet ($MachineRoot) {
    $objLB = $null
    try {
        $LastBSXML = Get-ChildItem -Path $MachineRoot -Recurse -Depth 1 `
                -File -Filter "BackupSpecs.xml" |
            Sort-Object LastWriteTime |
            Select-Object -Last 1 LastWriteTime, Directory, `
                @{Name="Machine";Expression={$_.Directory.Parent.Name.ToUpper()}}, `
                @{Name="Path";Expression={$_.Directory.FullName}}, `
                @{Name="Name";Expression={$_.Directory.Name}}
        $VHDFiles = ( Get-ChildItem $LastBSXML.Directory.FullName -File -Filter "*.vhd" |
            Select-Object Length |
            Measure-Object -property length -sum -ErrorAction SilentlyContinue)
    }
    finally {
        if (($VHDFiles) -and ($LastBSXML)) {
            $objLB = @{
                Machine = $LastBSXML.Machine
                Path    = $LastBSXML.Path
                Name    = $LastBSXML.Name
                Updated = $LastBSXML.LastWriteTime
                Size    = $VHDFiles.Sum
            }
        }
    }
    return $objLB
}

function Get-LastImageBackupSetSummaryTXTRow ($MachineRoot) {
    $ret = ""
    $Now = Get-Date
    $LastBT = Get-LastImageBackupSet ($MachineRoot)
    if ($LastBT) {
        $ret = "Machine: " + $LastBT.Machine + "; Archive: " + `
            $LastBT.Name + "; Age: " + ($Now - $LastBT.Updated).Days + `
            " days (" + $LastBT.Updated + "); Size: " + `
            ('{0:0} {1}' -f ($LastBT.Size/1024/1024), "MB")
    }
    else {
        $ret = "No image backup archives found."
    }
    return $ret
}

function Get-LastImageBackupDatesTXT ($WinBackupRoot) {
    $table = "Disk image archives:`r`n"
    $MachineRoots = Get-MachineListIB ($WinBackupRoot)
    foreach ($machine in $MachineRoots.Keys) {
        $line = Get-LastImageBackupSetSummaryTXTRow($MachineRoots[$machine])
        $table += -join($machine, " => ", $line, "`r`n")
    }
    return $table
}
```

This completes the code that analyzes the storage. We now move on to the general part of the script, which assembles and outputs the report as a whole.

Building and outputting the report

As agreed, the script should be able to produce the report both as plain text and as HTML. In addition, the report should be deliverable in two ways: (a) as a text stream to standard output and (b) by e-mail to a given address. The script should therefore accept the following command-line parameters:

- root - (string) path to the root folder of the Windows Backup storage.

- txt - (flag) use plain text format; if this parameter is absent, HTML is used.

- mail - (flag) send the report by e-mail; if this parameter is absent, output the report "as is" to stdout.

- smtpsrv - (string) the network name or IP address of the SMTP server used to send the report.

- port - (integer) the TCP port used to send the report by e-mail.

- to - (string) the e-mail address of the recipient.

- fm - (string) the e-mail address of the sender (also used as the SMTP server user name).

- pwd - (string) the password of the SMTP server user (passing it on the command line is not ideal from a security standpoint).

- sub - (string) the subject of the message.
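To illustrate how the mail-related parameters fit together, here is a sketch that maps them onto the standard Send-MailMessage cmdlet via splatting. This is an assumption about one possible implementation, not the attached script's code. The helper name New-ReportMailParams is hypothetical. Inside the helper, the parameter is named Password rather than Pwd, to avoid any confusion with PowerShell's automatic $PWD variable.

```powershell
# Hypothetical sketch: collect the e-mail settings into a hash table that can
# be splatted onto Send-MailMessage. Not the attached script's actual code.
function New-ReportMailParams ([string] $SmtpServer, [int] $Port = 25,
                               [string] $To, [string] $From, [string] $Password,
                               [string] $Subject, [string] $Body, [switch] $Html) {
    $params = @{
        SmtpServer = $SmtpServer
        Port       = $Port
        To         = $To
        From       = $From
        Subject    = $Subject
        Body       = $Body
        BodyAsHtml = [bool]$Html
    }
    if ($Password) {
        # The sender address doubles as the SMTP user name (see the fm parameter)
        $secure = ConvertTo-SecureString -String $Password -AsPlainText -Force
        $params.Credential = New-Object System.Management.Automation.PSCredential ($From, $secure)
    }
    return $params
}

# Usage (sends a real message, so it is left commented out here):
# $mailParams = New-ReportMailParams -SmtpServer "smtp.example.com" -To "admin@example.com" -From "backup@example.com" -Password "secret" -Subject "Backup report" -Body $report -Html
# Send-MailMessage @mailParams
```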

At the beginning of the script we create a header that describes the command-line parameters. The mechanism for handling parameters was rudimentary in CMD.EXE scripts. It was significantly improved in Windows Script Host scripts, but it remained quite inconvenient and required a lot of extra work to implement typed parameters and parse them. In PowerShell it was radically reworked into a full-fledged, convenient tool: the Param block, which lets PowerShell's built-in machinery process parameters based on their declarations. Let's put it to use:

Param(
    [Parameter(Mandatory)][string] $Root,
    [switch] $txt=$false,
    [switch] $mail=$false,
    [string] $SmtpSrv,
    [int] $Port=25,
    [string] $To,
    [string] $Fm,
    [string] $Pwd,
    [string] $Sub
)

As the fragment shows, all command line parameters are described by a single Param block. Inside its parentheses, our parameters are listed, separated by commas, with their data types and attributes. The simplest parameter declaration is just an identifier starting with the "$" symbol. The next step is to specify a data type: the type name in square brackets to the left of the identifier. A default value is set by adding an assignment and a value to the right of the identifier. A parameter without an explicit default value gets the empty value of its type (an empty string for [string], 0 for [int]).
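The effect of types and defaults in a Param block can be checked on a small function. Show-ParamDefaults is a hypothetical name. It mirrors a few of the script's parameters, plus a made-up [int] $Retries with no default that shows the zero value of an untyped-default integer.

```powershell
function Show-ParamDefaults {
    Param(
        [string] $SmtpSrv,      # no default: empty string
        [int]    $Port = 25,    # explicit default
        [int]    $Retries,      # hypothetical, no default: 0 for [int]
        [switch] $txt           # not given: false
    )
    # Return a compact description of what the parameters ended up holding
    "SmtpSrv='{0}' Port={1} Retries={2} txt={3}" -f $SmtpSrv, $Port, $Retries, [bool]$txt
}

Show-ParamDefaults
Show-ParamDefaults -SmtpSrv mail.example.com -Port 587 -txt
```

The first call prints `SmtpSrv='' Port=25 Retries=0 txt=False`, and the second prints `SmtpSrv='mail.example.com' Port=587 Retries=0 txt=True`.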

A parameter of type switch works as follows: if it is simply mentioned on the command line, it is set to true; if it is absent, it is false. The explicit =$false defaults above are therefore redundant, though harmless.
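A switch can also be given an explicit value after a colon. This is handy when one script or function forwards a flag it received itself. A minimal sketch, with Test-Switch as a hypothetical name:

```powershell
function Test-Switch {
    Param([switch] $mail)
    # $mail is a SwitchParameter; IsPresent tells whether it is on
    $mail.IsPresent
}

Test-Switch               # False: not mentioned
Test-Switch -mail         # True: simply mentioned
Test-Switch -mail:$false  # False: explicit value after a colon
```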

To specify additional attributes of a parameter, such as whether it is mandatory, add another block in square brackets to the left. It starts with the keyword Parameter, followed by a list of attribute arguments (and, possibly, their values) in parentheses. In particular, whether a parameter is mandatory is controlled by its Boolean Mandatory argument. Starting with PowerShell 3.0, the value True need not be specified explicitly: if the value is omitted, True is assumed.
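The two spellings of the Mandatory argument are equivalent. This can be confirmed by reading the parameter metadata through Get-Command. The function names below are hypothetical.

```powershell
function Use-ShortForm { Param([Parameter(Mandatory)][string] $Root) $Root }
function Use-LongForm  { Param([Parameter(Mandatory = $true)][string] $Root) $Root }

# Read the Mandatory flag from the ParameterAttribute attached to $Root
function Get-MandatoryFlag ($Name) {
    (Get-Command $Name).Parameters['Root'].Attributes |
        Where-Object { $_ -is [System.Management.Automation.ParameterAttribute] } |
        ForEach-Object { $_.Mandatory }
}

Get-MandatoryFlag Use-ShortForm   # True
Get-MandatoryFlag Use-LongForm    # True
```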

In our case, for example, the Root parameter is declared as mandatory, of type string, with no default value. If the script is launched without it, PowerShell prompts for its value at the console and does not run the script code until a value is supplied. Parameters that are not declared mandatory may be omitted without preventing the script from starting.

The Param block in PowerShell is not limited to command line parameters: it also applies to function parameters. It is perhaps more accurate to put it the other way around. The block applies not only to function parameters but also to the script's command line parameters, since the script body is analogous to the top-level function of the program (like, for example, main() in C). With it, we could rewrite our Get-LastImageBackupDatesTXT function as follows:

function Get-LastImageBackupDatesTXT {
    Param(
        [Parameter(Mandatory)][string] $WinBackupRoot
    )
    $table = "Disk Volume Images:`r`n"
    $MachineRoots = Get-MachineListIB ($WinBackupRoot)
    foreach ($machine in $MachineRoots.Keys) {
        $line = Get-LastImageBackupSetSummaryTXTRow($MachineRoots[$machine])
        $table += -join($machine, " => ", $line, "`r`n")
    }
    return $table
}

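PowerShell also makes it possible to document parameters. A parameter is described with the ".PARAMETER" keyword of Comment-Based Help, and the description is then shown by «Get-Help <script_name>».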
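Here is a minimal sketch of documenting parameters with Comment-Based Help. The function and its help text are hypothetical. In a script, the same comment block would go at the top of the file, before the Param block.

```powershell
function Get-BackupReportSketch {
    <#
    .SYNOPSIS
    Reports the state of a Windows Backup storage (illustrative only).

    .PARAMETER Root
    Path to the root folder of the Windows Backup storage.

    .PARAMETER txt
    Produce plain text instead of HTML.
    #>
    Param(
        [Parameter(Mandatory)][string] $Root,
        [switch] $txt
    )
    "Report for $Root"
}

# Shows the .PARAMETER text for Root together with its metadata
Get-Help Get-BackupReportSketch -Parameter Root
```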

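Next come the functions that assemble the complete report in HTML and in plain text, and the function that sends it by e-mail: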

function Get-LastWinBackupDatesHTML ($WinBackupRoot) {
    $Now = Get-Date
    $table = '<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">' + "`n"
    $table += '<html xmlns="http://www.w3.org/1999/xhtml"><head><title>Backup copies in "' + `
        $WinBackupRoot + '"</title></head><body>' + "`n"
    $table += '<h3>Backup copies in "' + $WinBackupRoot + '"' + "</h3>`n"
    $table += '<h3>Report generated: ' + $Now + "</h3>`n"
    $table += Get-LastFileBackupDatesHTML ($WinBackupRoot)
    $table += Get-LastImageBackupDatesHTML ($WinBackupRoot)
    $table += "</body>`n</html>"
    return $table
}

function Get-LastWinBackupDatesTXT ($WinBackupRoot) {
    $Now = Get-Date
    $table = 'Backup copies in "' + $WinBackupRoot + '"' + "`r`n"
    $table += 'Report generated: ' + $Now + "`r`n`r`n"
    $table += Get-LastFileBackupDatesTXT ($WinBackupRoot)
    $table += "`r`n"
    $table += Get-LastImageBackupDatesTXT ($WinBackupRoot)
    return $table
}

function Send-Email-Report ($SendInfo, $TXTonly=$false) {
    $Credential = New-Object -TypeName "System.Management.Automation.PSCredential" `
        -ArgumentList $SendInfo.Username, $SendInfo.SecurePassword
    if ($TXTonly) {
        Send-MailMessage -To $SendInfo.To -From $SendInfo.From `
            -Subject $SendInfo.Subject -Body $SendInfo.MsgBody `
            -SmtpServer $SendInfo.SmtpServer -Credential $Credential `
            -Port $SendInfo.SmtpPort -Encoding UTF8
    }
    else {
        # -BodyAsHtml is a switch: the HTML text goes to -Body;
        # UTF8 is set here too so that non-ASCII text survives
        Send-MailMessage -To $SendInfo.To -From $SendInfo.From `
            -Subject $SendInfo.Subject -Body $SendInfo.MsgBody -BodyAsHtml `
            -SmtpServer $SendInfo.SmtpServer -Credential $Credential `
            -Port $SendInfo.SmtpPort -Encoding UTF8
    }
}

Note that the plain-text report separates lines with CR/LF, while the HTML report uses LF only.
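The plain-text functions end lines with "`r`n" (CR/LF) and the HTML ones with "`n" (LF). That choice could be isolated in one helper. Join-ReportLines is a hypothetical name; the article's functions append the separators inline instead.

```powershell
function Join-ReportLines {
    Param(
        [string[]] $Lines,
        [switch]   $Html   # set: LF only (HTML report); not set: CR/LF (text report)
    )
    $nl = if ($Html) { "`n" } else { "`r`n" }
    # Every line, including the last one, ends with the chosen separator
    ($Lines -join $nl) + $nl
}

$txtReport  = Join-ReportLines -Lines "Line 1", "Line 2"
$htmlReport = Join-ReportLines -Lines "<h3>Line 1</h3>", "<h3>Line 2</h3>" -Html
```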


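Send-Email-Report is a wrapper around the Send-MailMessage cmdlet. To authenticate on the SMTP server, Send-MailMessage expects credentials as a .NET object of type «System.Management.Automation.PSCredential». The function therefore creates one (in $Credential) from the user name and password pair. Such an object can also be obtained with the Get-Credential cmdlet, but that requires interactive input.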
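Here is a standalone sketch of building a PSCredential from a user name and a plain-text password, the same way the script does. The account name and password are placeholders. GetNetworkCredential() is called only to show that the password round-trips.

```powershell
$user   = "report@example.com"                     # placeholder account
$secure = ConvertTo-SecureString -String "placeholder-pass" -AsPlainText -Force
$cred   = New-Object -TypeName System.Management.Automation.PSCredential `
                     -ArgumentList $user, $secure

$cred.UserName                          # report@example.com
$cred.GetNetworkCredential().Password   # placeholder-pass (demonstration only)
```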

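The function takes two parameters. $SendInfo is a hashtable with the message and SMTP server details, and $TXTonly is a flag that selects plain-text format (otherwise the message is sent as HTML).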
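The two nearly identical Send-MailMessage calls in Send-Email-Report could be folded into one by splatting a hashtable and adding -BodyAsHtml only when it is needed. The sketch below only builds the argument table, so it runs without an SMTP server. New-MailArgs is a hypothetical helper, and its field names follow the $SendInfo hashtable used in the script.

```powershell
function New-MailArgs ($SendInfo, [bool] $TXTonly = $false) {
    # Arguments shared by both formats
    $mailArgs = @{
        To         = $SendInfo.To
        From       = $SendInfo.From
        Subject    = $SendInfo.Subject
        Body       = $SendInfo.MsgBody
        SmtpServer = $SendInfo.SmtpServer
        Port       = $SendInfo.SmtpPort
        Encoding   = [System.Text.Encoding]::UTF8
    }
    # A switch is splatted as a key with the value $true
    if (-not $TXTonly) { $mailArgs['BodyAsHtml'] = $true }
    $mailArgs
}
```

With a real server, the call would then be `Send-MailMessage @mailArgs -Credential $Credential`, where $mailArgs holds the result of New-MailArgs.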

Finally, the main body of the script, which ties everything together:

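if ($txt) {
    $Ret = Get-LastWinBackupDatesTXT ($Root)
}
else {
    $Ret = Get-LastWinBackupDatesHTML ($Root)
}
if ($mail) {
    if (($SmtpSrv) -and ($Port) -and ($To) -and ($Fm) -and ($Pwd) -and ($Sub) -and ($Ret)) {
        $objArgs = @{
            SmtpServer     = $SmtpSrv
            SmtpPort       = $Port
            To             = $To.Split(',')
            From           = $Fm
            Username       = $Fm
            SecurePassword = ConvertTo-SecureString -String $Pwd -AsPlainText -Force
            Subject        = $Sub
            MsgBody        = $Ret
        }
        # Arguments are separated by spaces: "($objArgs, $txt)" would bind one array to $SendInfo
        Send-Email-Report $objArgs $txt
    }
    else {
        Write-Output $Ret
    }
}
else {
    Write-Output $Ret
}

The logic is simple. Depending on the $txt switch, one of the Get-LastWinBackupDatesXXX functions is called, and the finished report is stored in $Ret. If -mail is given and all mail parameters are present, a hashtable with the sending parameters and the report body is built and passed to Send-Email-Report. Otherwise the report is written to standard output as is.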
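A common PowerShell pitfall is calling a function C-style, with parentheses and commas. In PowerShell, `f ($a, $b)` passes a single array as the first parameter and leaves the second at its default. A call such as `Send-Email-Report ($objArgs, $txt)` would therefore never set $TXTonly. Here is a small demonstration with a hypothetical function Show-Args:

```powershell
function Show-Args ($First, $Second = "default") {
    # Report the type of the first argument and the value of the second
    "{0} / {1}" -f $First.GetType().Name, $Second
}

Show-Args (1, "x")               # Object[] / default : one array argument
Show-Args 1 "x"                  # Int32 / x : two positional arguments
Show-Args -First 1 -Second "x"   # Int32 / x : named arguments
```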

It remains to show examples of the generated reports:

Hypertext format:

Backup copies in "\\sa-nas\netbackup"

Report generated: 01/10/2017 09:00:01

File Mode Archives:

| Machine | Most recent archive | Age | Updated | Size |
|---|---|---|---|---|
| SA-CHENG | Backup Set 2017-01-10 040000 | 0 days | 01/10/2017 04:16:23 | 6446 MB |
| SA-DOCTOR | Backup Set 2017-01-10 080002 | 0 days | 01/10/2017 08:10:09 | 2431 MB |
| SA-BAR | Backup Set 2016-12-28 033001 | 0 days | 01/10/2017 02:36:35 | 248028 MB |
| SA-RECEPTION | Backup Set 2017-01-10 060737 | 0 days | 01/10/2017 06:16:29 | 1264 MB |
| SA-ECR | Backup Set 2017-01-08 220005 | 0 days | 01/09/2017 22:03:22 | 2863 MB |
| SA-HOTELMGR | Backup Set 2017-01-08 020003 | 0 days | 01/09/2017 22:11:02 | 26788 MB |
| SA-ITO | Backup Set 2017-01-06 230005 | 0 days | 01/09/2017 23:08:48 | 17486 MB |
| SA-CHEF | Backup Set 2017-01-05 230002 | 0 days | 01/09/2017 23:02:11 | 3791 MB |
| SA-MASTER | Backup Set 2017-01-09 102958 | 0 days | 01/09/2017 23:12:51 | 8441 MB |
| SA-CONTROLLER | Backup Set 2017-01-09 000007 | 0 days | 01/10/2017 00:17:04 | 9788 MB |

Disk Volume Images:

| Machine | Most recent archive | Age | Updated | Size |
|---|---|---|---|---|
| SA-ITO | Backup 2017-01-10 020013 | 0 days | 01/09/2017 23:12:44 | 47534 MB |
| POSSERVER | Backup 2017-01-10 020006 | 0 days | 01/09/2017 23:07:36 | 23138 MB |

Plain text format:

Backup copies in "\\sa-nas\netbackup"

Report generated: 10.01.2017 09:00:01

File Mode Archives:

Machine: SA-CHENG; Archive name: Backup Set 2017-01-10 040000; Updated: 0 days ago. (01/10/2017 04:16:23); Size: 6446 MB

Machine: SA-DOCTOR; Archive name: Backup Set 2017-01-10 080002; Updated: 0 days ago. (01/10/2017 08:10:09); Size: 2431 MB

Machine: SA-BAR; Archive name: Backup Set 2016-12-28 033001; Updated: 0 days ago. (01/10/2017 02:36:35); Size: 248028 MB

Machine: SA-RECEPTION; Archive name: Backup Set 2017-01-10 060737; Updated: 0 days ago. (01/10/2017 06:16:29); Size: 1264 MB

Machine: SA-ECR; Archive name: Backup Set 2017-01-08 220005; Updated: 0 days ago. (01/09/2017 22:03:22); Size: 2863 MB

Machine: SA-HOTELMGR; Archive name: Backup Set 2017-01-08 020003; Updated: 0 days ago. (01/09/2017 22:11:02); Size: 26788 MB

Machine: SA-ITO; Archive name: Backup Set 2017-01-06 230005; Updated: 0 days ago. (01/09/2017 23:08:48); Size: 17486 MB

Machine: SA-CHEF; Archive name: Backup Set 2017-01-05 230002; Updated: 0 days ago. (01/09/2017 23:02:11); Size: 3791 MB

Machine: SA-MASTER; Archive name: Backup Set 2017-01-09 102958; Updated: 0 days ago. (01/09/2017 23:12:51); Size: 8441 MB

Machine: SA-CONTROLLER; Archive name: Backup Set 2017-01-09 000007; Updated: 0 days ago. (01/10/2017 00:17:04); Size: 9788 MB

Disk Volume Images:

Machine: SA-ITO; Archive name: Backup 2017-01-10 020013; Updated: 0 days ago. (01/09/2017 23:12:44); Size: 47534 MB

Machine: POSSERVER; Archive name: Backup 2017-01-10 020006; Updated: 0 days ago. (01/09/2017 23:07:36); Size: 23138 MB

Conclusion


The script could be extended with add-ons for monitoring the archives of other backup tools, such as NT Backup and ghettoVCB (for VMware ESXi).

Source: https://habr.com/ru/post/319378/
