Why is throwing in VR so bad, and what can be done about it?

Throwing is one of the first things players try in virtual reality. We pick up a virtual coffee mug and toss it away. The mug, donut, or ball starts spinning wildly. Before you know it, the player is already throwing flower pots at the tutorial bot.

Job Simulator

Some throws are too strong. Others are extremely weak. Once or twice one actually hits the NPC you were aiming at. Probably the very first lesson of VR is that throwing is quite difficult. When I first experienced this, I assumed I was just bad at VR. We accept that mastering a control scheme is part of a game's learning curve. But when the same throwing motion produces completely different results, it is very frustrating.

A hard throw with a full swing ends in a short flight...

Trying to throw a water bottle with a full swing

... while a slight flick of the wrist can send an item on a long flight.

I just moved my wrist slightly ...

Rescuties: tossing babies

This summer I worked on a casual action game called Rescuties. It is a VR game about throwing and catching babies and other cute animals.

When the virtual world reacts to our actions counterintuitively, it breaks the player's immersion or frustrates them. The whole gameplay of Rescuties is about throwing, so it could not rely on such an inherently uncomfortable mechanic.

Rescuties

The frustration is built into the way such games are designed. In short, throwing in VR is difficult because you cannot feel the weight of a virtual object. Approaches differ, but most games try to make the physics of the virtual object as plausible as possible. The player grabs the object, gives it a virtual impulse, and it flies away.

But here's the problem: there is a difference between what the player feels in their hand and what is happening in the virtual world. When a virtual object is picked up, its center of gravity and the center of gravity felt in the hand do not match. The muscles get the wrong data.

If the player simply pushed their hand in some direction during a throw, this discrepancy would not matter much. But when throwing, the player bends the arm and rotates the wrist. (What is the secret of a good throw? “It's all in the wrist!”) Rotating the real hand gives the virtual object extra leverage, as if it were being flung from a small spoon.

This effect is unforgiving. In many VR games, the user can “pick up” an object that is half a meter away from the hand. When the button is pressed, the object does not fly into the hand; it stays at a fixed distance from it, turning the hand into a catapult. The difference between seven and thirty centimeters can decide whether a flick of the wrist sends the object across the room or just nudges it forward.

Wrist movement like a catapult
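The catapult effect can be put in numbers with a small sketch. This is not code from Rescuties; it only applies the standard relation between angular speed and the linear speed of a point at a given distance from the pivot. The wrist flick speed of 600 degrees per second is an assumed, illustrative value.

```python
import math


def tip_speed(angular_speed_deg_per_s: float, lever_arm_m: float) -> float:
    """Linear speed (m/s) of a point rotating at lever_arm_m from the pivot."""
    return math.radians(angular_speed_deg_per_s) * lever_arm_m


# Assumed illustrative wrist flick; the same motion is used for both cases.
wrist_flick = 600.0  # degrees per second

near = tip_speed(wrist_flick, 0.07)  # object held 7 cm from the wrist
far = tip_speed(wrist_flick, 0.30)   # object held 30 cm from the wrist

print(f"{near:.2f} m/s at 7 cm, {far:.2f} m/s at 30 cm")
```

The same wrist motion launches the farther object more than four times faster, which is why the grab distance matters so much.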

In Rescuties, the player catches flying babies and tries to get them to safety as quickly as possible. The traditional approach led to finicky controls and annoying gameplay. “Why can't I just throw the baby the way that feels right?”

Physical and virtual weight

The key to a good throwing mechanic is to respect what the user feels, not what the game's physics says.

Instead of measuring the throw velocity from the virtual object the player is holding, you need to measure it from the real object the player is holding, i.e. the HTC Vive or Oculus Touch controller. That is the weight and momentum the player feels in the hand. And the player's muscle memory (the physical skill and instinct developed over a lifetime) responds to that weight and momentum.

The center of gravity felt by the player does not change, no matter what virtual object they pick up

You can link physical and virtual weight by using the center of gravity of the physical controller to determine the in-game velocity. First, you need to find where this real center of gravity is in the game. Controllers report their location in game space, and you can peek out from under the head-mounted display to calibrate the position of the center of gravity. Then track that point relative to the controller and compute the velocity from the change in its position.
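A minimal sketch of that measurement, under stated assumptions: the controller pose is given as a position plus a 3x3 rotation matrix, and the center of gravity is a fixed offset in the controller's local frame. `Pose`, `world_point`, `cog_velocity` and the 5 cm offset are hypothetical names and values chosen for illustration, not part of any VR SDK or of the Rescuties code.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]  # rotation matrix stored as three rows


@dataclass
class Pose:
    position: Vec3  # controller origin in world space
    rotation: Mat3  # controller orientation in world space


def world_point(pose: Pose, local_offset: Vec3) -> Vec3:
    """Transform a point given in controller-local space into world space."""
    rotated = tuple(
        sum(row[i] * local_offset[i] for i in range(3)) for row in pose.rotation
    )
    return tuple(pose.position[i] + rotated[i] for i in range(3))


def cog_velocity(prev: Pose, curr: Pose, local_offset: Vec3, dt: float) -> Vec3:
    """Finite-difference velocity of the calibrated center-of-gravity point."""
    p0 = world_point(prev, local_offset)
    p1 = world_point(curr, local_offset)
    return tuple((p1[i] - p0[i]) / dt for i in range(3))


# Illustrative data: the controller origin stays put while the wrist rotates
# 90 degrees about the vertical axis. The calibrated point still moves.
IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
QUARTER_TURN_Z: Mat3 = ((0.0, -1.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
COG_OFFSET: Vec3 = (0.05, 0.0, 0.0)  # assumed calibration result, in meters

velocity = cog_velocity(
    Pose((0.0, 0.0, 0.0), IDENTITY),
    Pose((0.0, 0.0, 0.0), QUARTER_TURN_Z),
    COG_OFFSET,
    dt=0.05,
)
```

Because the offset is fixed to the controller, the measured velocity no longer depends on which virtual object is held or how far from the hand it sits.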

After I made these changes, the testers played Rescuties much better, but I still saw and felt a noticeable inconsistency.

(This article has interesting thoughts on conveying virtual weight to players. That approach is the opposite of mine: instead of making the most of the physical feel of the controller's weight, it gives the user virtual cues about how virtual objects behave.)

Timing

At what exact moment does the player intend to throw the object?

In real life, when throwing, we relax our fingers, the object slips out of the grip, and the fingertips keep pushing it in the right direction until we lose control of it entirely. We can spin or roll the object up to the very last split second.

Most VR games have no such tactile feedback and use the trigger under the index finger instead. This is better than a button: in Rescuties, pressing the trigger 20% closes the virtual glove by about 20%, and a 100% press closes it into a full fist. But the player does not feel the object slipping out of the grip and sliding along the fingers. The finger release described above is represented simply by releasing the trigger (possibly gradually). I found that the throw signal has to be detected as soon as the player starts to relax the fingers. A throw is recognized when the pressure on the trigger eases, not all the way to 0% or to some small value, but by an amount found experimentally.

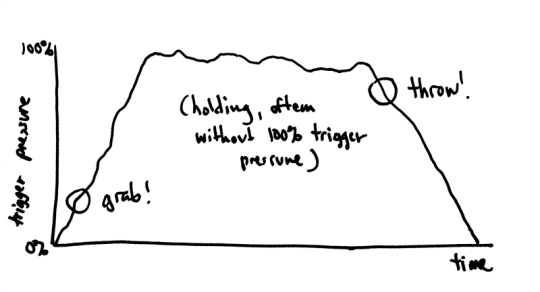

The graph shows how the pressure on the trigger changes over a "grab-hold-throw" cycle. In this case, the user does not press the trigger all the way to 100%. This is common, because on HTC controllers the trigger is easy to press to about 80%, and pressing it to 100% takes much more effort. First, the player presses the trigger to pick up the object. Then the pressure stays more or less constant while the player holds the object and winds up for the throw. There is some slight noise from the trigger sensor. Finally, the player releases the trigger to throw or drop the object.

Signal noise and the player's pulse can make the trigger pressure fluctuate, so recognizing the player's actions requires a threshold. Specifically, the game registers a release when the pressure on the trigger is (for example) 20% below the peak pressure recorded while the player was holding the object. The threshold must be large enough that the player does not drop the baby by accident. We found the right value with testers by trial and error. In addition, if a grab is recognized at too low a pressure, there is not enough range left to reliably detect a throw or release. You end up with what I had in one of the failed iterations: a strange, super-fast "grab-throw-grab-throw" cycle.
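The threshold logic described above can be sketched as a small state machine. This is an illustration, not the Rescuties implementation. It assumes the trigger is reported as a value from 0.0 to 1.0, and it reads "20% less than the peak" as an absolute drop of 0.2 on that scale. The grab threshold of 0.5 and the re-arming rule (the trigger must fall below the grab threshold before a new grab counts) are my own assumptions, added to avoid the rapid grab-throw cycle.

```python
from typing import Optional


class ThrowDetector:
    """Detects grab and throw events from a stream of trigger values (0.0 to 1.0)."""

    def __init__(self, grab_threshold: float = 0.5, release_drop: float = 0.2):
        self.grab_threshold = grab_threshold  # assumed value, tune with testers
        self.release_drop = release_drop      # drop below peak that means "throw"
        self.holding = False
        self.armed = True  # a new grab is allowed only after the trigger was let go
        self.peak = 0.0

    def update(self, trigger: float) -> Optional[str]:
        """Feed one trigger sample; returns "grab", "throw", or None."""
        if self.holding:
            # Track the highest pressure seen while holding the object.
            self.peak = max(self.peak, trigger)
            if trigger <= self.peak - self.release_drop:
                self.holding = False
                return "throw"
            return None
        if trigger < self.grab_threshold:
            self.armed = True
            return None
        if self.armed:
            self.holding = True
            self.armed = False
            self.peak = trigger
            return "grab"
        return None


# Illustrative trigger samples for one grab-hold-throw cycle with sensor noise.
samples = [0.0, 0.3, 0.7, 0.8, 0.78, 0.81, 0.79, 0.6, 0.3, 0.0]
detector = ThrowDetector()
events = [detector.update(s) for s in samples]
```

In this run the small wobble around 0.8 is ignored, and the throw fires at the 0.6 sample, well before the trigger is fully released.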

Velocity noise

Measuring the right velocity and improving the timing went a long way toward reducing inconsistent throws. But the raw data itself, the velocity measurements coming from the hardware sensors, is quite noisy. The noise is especially noticeable when the head-mounted display or controllers move quickly. (Say, when a player throws something!)

Working with noise requires smoothing.

I tried smoothing the velocity with a moving average (a simple low-pass filter), but that just averaged the slowest (wind-up) and fastest (release) stages of the throw together. To my testers, throwing felt very hard, as if they were under water. (This is what I used to feel in Rec Room.)

Averaging the controller's velocity gives reliable but far too slow results, nothing like the feel of Job Simulator
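A short sketch shows why averaging feels sluggish. The speed samples below are made up for illustration; they stand for an accelerating throw measured over the last few frames, and the window of four frames is an arbitrary choice.

```python
from typing import Sequence


def moving_average(samples: Sequence[float], window: int) -> float:
    """Mean of the most recent `window` samples."""
    recent = samples[-window:]
    return sum(recent) / len(recent)


# Made-up speeds in m/s for an accelerating throw; the last one is at release.
speeds = [0.5, 1.0, 2.0, 3.5, 5.0]

smoothed = moving_average(speeds, window=4)
print(f"release speed {speeds[-1]} m/s, smoothed {smoothed} m/s")
```

Because the hand is still accelerating at release, the average always lags behind the speed the player actually intended.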

I tried taking the peak of the most recent velocity measurements. At least the testers' babies now flew at the speed they wanted, but not always in the right direction, because the last measured direction was also affected by noise.

What I needed was to take the last few frames of measurements and look at how they were developing, i.e. draw a trend line. A simple linear regression over the measurements gave a much more reliable result. At last I could throw the babies when and where I wanted!

The debug visualization shows the last four frames of measured velocity in red and the regression result used in the game in yellow.
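The trend-line idea can be sketched with an ordinary least-squares fit per axis. The article does not say exactly how the fitted line is turned into a throw velocity, so evaluating it at the time of the latest frame is my assumption, and `fit_line` and `regressed_velocity` are hypothetical helper names.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def fit_line(ts: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept) of y = slope * t + intercept."""
    n = len(ts)
    mean_t = sum(ts) / n
    mean_y = sum(ys) / n
    var_t = sum((t - mean_t) ** 2 for t in ts)
    if var_t == 0.0:
        # All samples share one timestamp: fall back to the plain mean.
        return 0.0, mean_y
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(ts, ys))
    slope = cov / var_t
    return slope, mean_y - slope * mean_t


def regressed_velocity(times: Sequence[float], velocities: Sequence[Vec3]) -> Vec3:
    """Fit a trend line to each velocity axis and evaluate it at the latest frame."""
    t_release = times[-1]
    result = []
    for axis in range(3):
        slope, intercept = fit_line(times, [v[axis] for v in velocities])
        result.append(slope * t_release + intercept)
    return (result[0], result[1], result[2])


# Made-up samples: the last four frames of a noisy, accelerating throw.
frame_times = [0.000, 0.011, 0.022, 0.033]
frame_velocities = [
    (1.0, 0.5, 0.0),
    (2.2, 0.9, 0.1),
    (2.8, 1.6, -0.1),
    (4.0, 2.0, 0.0),
]
throw_velocity = regressed_velocity(frame_times, frame_velocities)
```

Unlike a moving average, the fit keeps the upward trend of the throw, and unlike taking the last sample, a single noisy frame cannot swing the direction on its own.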

How I improved throwing in Rescuties (tl;dr)

- Measure the throw velocity from the center of gravity the user actually feels, that is, the center of gravity of the controller.

- Recognize the throw at the exact moment the player intends to throw, i.e. on a partial release of the trigger.

- Make the most of the measured velocity data by using regression to better estimate the player's intent.

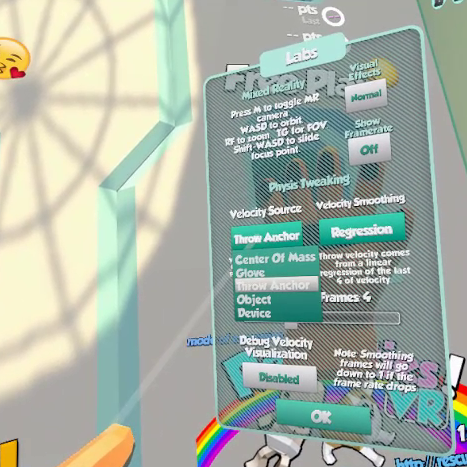

If you want to try these approaches and compare them, Rescuties has a “Labs!” menu where you can switch between throw modes, choose how velocity is measured, and set the number of measurement frames used for regression or smoothing.

But this problem is by no means solved. VR games take many different approaches to throwing, and players enjoy them. With the throws in Rescuties, I wanted the physical expectations of muscle memory to match the in-VR feel of flying babies as closely as possible. The throws are much better now, but I want to keep improving them.

So any suggestions or criticism of my approach are welcome: you can reach me on Twitter or by email at any time.

Source: https://habr.com/ru/post/319250/