Bitrix Start Performance on Proxmox and Virtuozzo 7 & Virtuozzo Storage

This article tests the performance of Bitrix Start on two fundamentally different platforms. We measure it with the built-in Bitrix performance panel.

On one side is the free version of Proxmox 4.4 with LXC containers on the ZFS file system and SSD drives.

On the other side is licensed Virtuozzo 7 with containers (CT) and Virtuozzo Storage. In this setup we use regular SATA drives plus SSDs for the write and read caches.


Keep in mind that Virtuozzo 7 is a commercial system that requires a license, while Proxmox 4 can be used for free, but without technical support.

For this reason a full comparison of the two platforms is, of course, not quite fair. But if you are interested in how to increase site performance using the same hardware and the same configuration of virtual machines and services, this article may be useful to you.

My other publications

- Zabbix 2.2 riding on nginx + php-fpm and mariadb

- HAProxy for Percona or Galera on CentOS. Its configuration and monitoring in Zabbix

- “Perfect” www cluster. Part 1. Frontend: NGINX + Keepalived (vrrp) on CentOS

- "Perfect" cluster. Part 2.1: Virtual hetzner cluster

- "Perfect" cluster. Part 2.2: Highly available and scalable web server, the best technologies to guard your business

- "Perfect" cluster. Part 3.1 Implementing MySQL Multi-Master Cluster

- Acceleration and optimization of PHP-site. What technologies should be chosen when setting up a server for PHP

- Comparison of Drupal code execution speed for PHP 5.3-5.6 and 7.0. "Battle of code optimizers" apc vs xcache vs opcache

The purpose of this test is to measure the performance of the Bitrix Start 16.5.4 site installed inside Proxmox LXC and Virtuozzo 7 containers.

Background

Our team maintains various web projects.

Our main task is to design and implement environments for web systems. We keep them fast and available, and we handle their monitoring and backups.

One of the major problems in running projects is the quality of the hosting where our clients' sites live. Sometimes a VPS goes down or becomes slow. Sometimes a server is unavailable for no apparent reason or reboots unexpectedly.

At such moments we become hostages of the hosting company's technical support: we have to spend time diagnosing problems, collecting statistics, and passing information to the support team. Resolving such problems sometimes takes days.

To eliminate this problem, we decided to build a small hosting platform for our clients. We bought servers and started looking for a suitable data center to place them in. We settled on Selectel: the servers in their Moscow data center run without interruption, and technical support answers very quickly and resolves every issue that comes up.

Initially we chose the simplest solution: Proxmox 3 servers with OpenVZ containers and SSD drives in RAID. This solution was very fast and fairly reliable.

As time went on, Proxmox 4 appeared, and we gradually switched to it in combination with ZFS. We immediately ran into a lot of problems related to LXC. For example, screen and multitail did not work in containers without first running the following command in the container:

exec </dev/tty

In CentOS 6, ssh did not work until we ran this command:

sed -i -e 's/start_udev/false/g' /etc/rc.d/rc.sysinit

pure-ftpd did not work either, and so on. After studying everything thoroughly and refining the configuration, we got Proxmox working and were satisfied.

Among the benefits of ZFS, we made use of compression:

- It saved a lot of disk space for our users.

- It solved the problem of file systems overflowing, for example because of logs.

- It eliminated the problem where a MySQL database on the InnoDB engine did not shrink on disk after data was deleted from it, but was simply filled with empty blocks.

- We could move client VPSes from server to server in real time using pve-zsync.

In general, the transition was successful, but it was difficult. Everything was tested on test servers and, ultimately, we safely moved to a new architecture.

At some point, it became necessary to think about increasing the performance of virtual machines and sites of our clients.

We started trying various alternatives. For the tests we bought licenses for Virtuozzo 7 and Cloud Storage. We assembled a test Virtuozzo Storage cluster, set up caching, and started the first test virtual machines. Virtuozzo technical support assisted us at every stage.

We were very satisfied with the result and moved our customers to the new architecture. In one of the following articles I will describe the main points of the implementation and the pitfalls we encountered.

Proxmox 4.4-5 / c43015a5 is used as the first test subject.

The sites are located on the zfs pool of 2 SSD disks in mirror mode, via the Proxmox driver:

zpool create -f -o ashift=12 zroot raidz /dev/sdb3 /dev/sda3
zfs set atime=off zroot
zfs set compression=lz4 zroot
zfs set dedup=off zroot
zfs set snapdir=visible zroot
zfs set primarycache=all zroot
zfs set aclinherit=passthrough zroot
zfs inherit acltype zroot

Everything else in the Proxmox setup is completely standard.

Option with Virtuozzo 7:

We have Virtuozzo release 7.0.3 (550) as the hypervisor, and Virtuozzo 7 Storage is used as the disk array. 3 servers are used as MDS:

   2 avail 0.0% 1/s 0.0% 100m 17d 6h msk-01:2510
   3 avail 0.0% 0/s 0.0% 102m 5d 1h msk-03:2510
M  1 avail 0.0% 1/s 1.8% 99m 17d 5h msk-06:2510

On the test bench, the chunks are located on 2 ordinary SATA drives.

1025 active 869.2G 486.2G 1187 0 29% 1.15/485 1.0 msk-06
1026 active 796.6G 418.8G 1243 0 17% 1.18/440 0.4 msk-06

Each chunk server also has its own SSD journal of approximately 166GB:

vstorage -c livelinux configure-cs -r /vstorage/livelinux-cs1/data -a /mnt/ssd2/livelinux-cs0-ssd/ -s 170642
vstorage -c livelinux configure-cs -r /vstorage/livelinux-cs2/data -a /mnt/ssd2/livelinux-cs1-ssd/ -s 170642

Cluster clients have a local cache on SSD, about 370GB in size:

vstorage://livelinux /vstorage/livelinux fuse.vstorage _netdev,cache=/mnt/ssd1/client_cache,cachesize=380000 0 0

The test cluster runs without replication, i.e. in 1:1 mode. The production servers use 3:2 mode, i.e. all data is stored on 3 servers of the cluster simultaneously, which avoids data loss if up to 2 servers of the cluster fail.
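The replication arithmetic can be sketched in a few lines of Python. This is only an illustrative model, assuming that a mode such as 3:2 reads as "normal replicas : minimum replicas"; the function names are hypothetical and are not part of Virtuozzo Storage.

```python
def parse_replicas(mode):
    """Split a 'normal:min' replication string such as '3:2' into two integers."""
    normal, minimum = (int(part) for part in mode.split(":"))
    if not 1 <= minimum <= normal:
        raise ValueError(f"invalid replication mode: {mode}")
    return normal, minimum


def survivable_failures(mode):
    """Number of servers that can be lost while at least one copy survives."""
    normal, _ = parse_replicas(mode)
    return normal - 1


for mode in ("1:1", "3:2"):
    print(mode, "->", survivable_failures(mode), "server failure(s) without data loss")
```

Under this model the test cluster (1:1) tolerates no failures, while the production mode (3:2) keeps a copy of the data after losing two servers.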

Test Bitrix VPS have the following characteristics:

- CPU 4 cores

- RAM 4GB

- CentOS 7

- Nginx as the frontend and for serving static files

- Apache 2.4 prefork to handle .htaccess and rewrite rules

- PHP 5.6 in php-fpm mode

- Opcache

- MariaDB 10.1 with databases stored in InnoDB

- Redis to store user sessions

Test Server Features

Platform Supermicro SYS-6016T-U

2x Intel Xeon E5620

12x 8GB DDR3-1066, ECC, Reg

2x Intel S3510 Series, 800GB, 2.5" SATA 6Gb/s, MLC

2x Hitachi Ultrastar A7K2000, 1TB, SATA III, 7200rpm, 3.5", 32MB cache


/etc/sysctl.conf

net.netfilter.nf_conntrack_max = 99999999

fs.inotify.max_user_watches = 99999999

net.ipv4.tcp_max_tw_buckets = 99999999

net.ipv4.tcp_max_tw_buckets_ub = 65535

net.ipv4.ip_forward = 1

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 65536

net.core.somaxconn = 65535

fs.file-max = 99999999

kernel.sem = 1000 256000 128 1024

vm.dirty_ratio = 5

fs.aio-max-nr = 262144

kernel.panic = 1

net.ipv4.conf.all.rp_filter = 1

kernel.sysrq = 1

net.ipv4.conf.default.send_redirects = 1

net.ipv4.conf.all.send_redirects = 0

net.ipv4.ip_dynaddr = 1

kernel.msgmn = 1024

fs.inotify.max_user_instances = 1024

kernel.msgmax = 65536

kernel.shmmax = 4294967295

kernel.shmall = 268435456

kernel.shmmni = 4096

net.ipv4.tcp_keepalive_time = 15

net.ipv4.tcp_keepalive_intvl = 10

net.ipv4.tcp_keepalive_probes = 5

net.ipv4.tcp_fin_timeout = 30

net.ipv4.tcp_window_scaling = 0

net.ipv4.tcp_sack = 0

net.ipv4.tcp_timestamps = 0

vm.swappiness = 10

vm.overcommit_memory = 1
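One way to check that settings like these are actually in effect is to compare the file with the live values under /proc/sys. The sketch below is illustrative only: the sample excerpt, the function names, and the report format are assumptions, not part of the original setup.

```python
from pathlib import Path

# Short excerpt of the settings listed above, used as sample input.
SAMPLE = """\
# excerpt of /etc/sysctl.conf
net.core.somaxconn = 65535
vm.swappiness = 10
kernel.sem = 1000 256000 128 1024
"""


def parse_sysctl(text):
    """Parse 'key = value' lines, skipping blank lines and comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue
        # Normalize whitespace so 'a  b' and 'a\tb' compare equal.
        settings[key.strip()] = " ".join(value.split())
    return settings


def proc_path(key):
    """Map a sysctl key to its file under /proc/sys (dots become slashes)."""
    return Path("/proc/sys") / key.replace(".", "/")


def report(settings):
    """Yield (key, wanted, live, status) for every configured key."""
    for key, wanted in settings.items():
        try:
            live = " ".join(proc_path(key).read_text().split())
        except OSError:
            live = None  # key missing on this kernel or not readable
        if live is None:
            status = "unavailable"
        elif live == wanted:
            status = "ok"
        else:
            status = "differs"
        yield key, wanted, live, status


if __name__ == "__main__":
    for row in report(parse_sysctl(SAMPLE)):
        print(*row)
```

Inside a container some keys are not exposed or cannot be changed, so an "unavailable" or "differs" status there is not necessarily a misconfiguration.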


nginx -V

nginx version: nginx/1.11.8

built by gcc 4.8.5 20150623 (Red Hat 4.8.5-4) (GCC)

built with OpenSSL 1.0.1e-fips 11 Feb 2013

TLS SNI support enabled

configure arguments: --prefix=/etc/nginx --sbin-path=/usr/sbin/nginx --modules-path=/usr/lib64/nginx/modules --conf-path=/etc/nginx/nginx.conf --error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log --pid-path=/var/run/nginx.pid --lock-path=/var/run/nginx.lock --http-client-body-temp-path=/var/cache/nginx/client_temp --http-proxy-temp-path=/var/cache/nginx/proxy_temp --http-fastcgi-temp-path=/var/cache/nginx/fastcgi_temp --http-uwsgi-temp-path=/var/cache/nginx/uwsgi_temp --http-scgi-temp-path=/var/cache/nginx/scgi_temp --user=nginx --group=nginx --with-compat --with-file-aio --with-threads --with-http_addition_module --with-http_auth_request_module --with-http_dav_module ... --with-http_sub_module --with-http_v2_module --with-mail --with-mail_ssl_module --with-stream --with-stream_realip_module --with-stream_ssl_module --with-stream_ssl_preread_module --with-cc-opt='-O2 -g -pipe -Wall -Wp,-D_FORTIFY_SOURCE=2 -fexceptions -fstack-protector-strong --param=ssp-buffer-size=4 -grecord-gcc-switches -m64 -mtune=generic'


/etc/nginx/nginx.conf

worker_processes auto;
timer_resolution 100ms;
pid /run/nginx.pid;
thread_pool default threads=32 max_queue=655360;

events {
    worker_connections 10000;
    multi_accept on;
    use epoll;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$host - $remote_user [$time_local] "$request"' '$status $body_bytes_sent "$http_referer"' '"$http_user_agent" "$http_x_forwarded_for"';
    log_format defaultServer '[$time_local] [$server_addr] $remote_addr ($http_user_agent) -> "$http_referer" $host "$request" $status';
    log_format downloadsLog '[$time_local] $remote_addr "$request"';
    log_format Counter '[$time_iso8601] $remote_addr $request_uri?$query_string';

    access_log off;
    access_log /dev/null main;
    error_log /dev/null;

    connection_pool_size 256;
    client_header_buffer_size 4k;
    client_max_body_size 2048m;
    large_client_header_buffers 8 32k;
    request_pool_size 4k;
    output_buffers 1 32k;
    postpone_output 1460;

    gzip on;
    gzip_min_length 1000;
    gzip_proxied any;
    gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript text/x-javascript application/javascript;
    gzip_disable "msie6";
    gzip_comp_level 6;
    gzip_http_version 1.0;
    gzip_vary on;

    sendfile on;
    aio threads;
    directio 10m;
    tcp_nopush on;
    tcp_nodelay on;
    server_tokens off;
    keepalive_timeout 75 20;
    server_names_hash_bucket_size 128;
    server_names_hash_max_size 8192;
    ignore_invalid_headers on;
    server_name_in_redirect off;

    ssl_stapling on;
    ssl_stapling_verify on;
    resolver 8.8.4.4 8.8.8.8 valid=300s;
    resolver_timeout 10s;
    ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
    ssl_ciphers 'AES128+EECDH:AES128+EDH';
    ssl_session_cache shared:SSL:50m;
    ssl_prefer_server_ciphers on;

    proxy_http_version 1.1;
    proxy_set_header Connection "";
    proxy_buffering off;
    proxy_buffer_size 8k;
    proxy_buffers 8 64k;
    proxy_connect_timeout 300m;
    proxy_read_timeout 300m;
    proxy_send_timeout 300m;
    proxy_store off;
    proxy_ignore_client_abort on;
    fastcgi_read_timeout 300m;

    open_file_cache max=200000 inactive=20s;
    open_file_cache_valid 30s;
    open_file_cache_min_uses 2;
    open_file_cache_errors on;

    proxy_cache_path /var/cache/nginx/page levels=2 keys_zone=pagecache:100m inactive=1h max_size=10g;
    fastcgi_cache_path /var/cache/nginx/fpm levels=2 keys_zone=FPMCACHE:100m inactive=1h max_size=10g;
    fastcgi_cache_key "$scheme$request_method$host";
    fastcgi_cache_use_stale error timeout invalid_header http_500;
    fastcgi_ignore_headers Cache-Control Expires Set-Cookie;
    fastcgi_buffers 16 16k;
    fastcgi_buffer_size 32k;

    upstream memcached_backend { server 127.0.0.1:11211; }

    real_ip_header X-Real-IP;
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    allow all;

    server {
        listen 80 reuseport;
    }

    include /etc/nginx/conf.d/*.conf;
}


/etc/nginx/sites-enabled/start.livelinux.ru.vhost

upstream start.livelinux.ru {
    keepalive 10;
    server 127.0.0.1:82;
}

server {
    listen 80;
    server_name start.livelinux.ru www.start.livelinux.ru;
    root /var/www/start.livelinux.ru/web;

    error_page 404 = @fallback;

    location @fallback {
        proxy_pass http://start.livelinux.ru;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Real-IP $remote_addr;
    }

    error_log /var/log/ispconfig/httpd/start.livelinux.ru/nginx_error.log error;
    access_log /var/log/ispconfig/httpd/start.livelinux.ru/nginx_access.log combined;

    location ~* ^.+\.(ogg|ogv|svg|svgz|eot|otf|woff|mp4|ttf|css|rss|atom|js|jpg|jpeg|gif|png|ico|zip|tgz|gz|rar|bz2|doc|xls|exe|ppt|tar|mid|midi|wav|bmp|rtf)$ {
        root /var/www/start.livelinux.ru/web;
        access_log off;
        expires 30d;
    }

    location ^~ /error { root /var/www; }

    location / {
        index index.php index.html index.htm;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_pass http://start.livelinux.ru;
    }

    include /etc/nginx/locations.d/*.conf;
}
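To see which requests this virtual host serves directly from disk and which go to Apache on 127.0.0.1:82, the location matching can be approximated in Python. This is a simplified sketch under stated assumptions: it takes a URI path without a query string, it ignores the error_page 404 handoff to the backend, and the returned labels are made up for illustration.

```python
import re

# Extension list copied from the static-file location of the virtual host.
STATIC_EXTENSIONS = (
    "ogg|ogv|svg|svgz|eot|otf|woff|mp4|ttf|css|rss|atom|js|jpg|jpeg|gif|png|"
    "ico|zip|tgz|gz|rar|bz2|doc|xls|exe|ppt|tar|mid|midi|wav|bmp|rtf"
)
# '~*' in nginx means a case-insensitive regular expression.
STATIC_RE = re.compile(r"^.+\.(" + STATIC_EXTENSIONS + r")$", re.IGNORECASE)


def handler_for(uri_path):
    """Return a label for the location that would handle the given path."""
    # A '^~' prefix match wins before any regex location is tried.
    if uri_path.startswith("/error"):
        return "nginx: /error files"
    if STATIC_RE.match(uri_path):
        return "nginx: static file"
    # Everything else falls through to 'location /' and is proxied.
    return "apache: 127.0.0.1:82"


for path in ("/bitrix/js/main/core/core.js", "/upload/Photo.JPG",
             "/index.php", "/error/404.html"):
    print(path, "->", handler_for(path))
```

With this split, only dynamic pages reach Apache and PHP, which is why the storage layer matters mostly for PHP files, caches, and the database.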


php -v

PHP 5.6.29 (cli) (built: Dec 9 2016 07:40:09)

Copyright © 1997-2016 The PHP Group

Zend Engine v2.6.0, Copyright © 1998-2016 Zend Technologies

with the ionCube PHP Loader (enabled) + Intrusion Protection from ioncube24.com (unconfigured) v5.1.2, Copyright © 2002-2016, by ionCube Ltd.

with Zend OPcache v7.0.6-dev, Copyright © 1999-2016, by Zend Technologies


/etc/php.ini

[PHP]

engine = On

short_open_tag = On

asp_tags = Off

precision = 14

output_buffering = 4096

zlib.output_compression = Off

implicit_flush = Off

unserialize_callback_func =

serialize_precision = 17

disable_functions =

disable_classes =

realpath_cache_size = 4096k

zend.enable_gc = On

expose_php = off

max_execution_time = 600

max_input_time = 600

max_input_vars = 100000

memory_limit = 512M

error_reporting = E_ALL & ~E_NOTICE & ~E_STRICT & ~E_DEPRECATED

display_errors = Off

display_startup_errors = Off

log_errors = On

log_errors_max_len = 1024

ignore_repeated_errors = Off

ignore_repeated_source = Off

report_memleaks = On

track_errors = Off

html_errors = On

variables_order = "GPCS"

request_order = "GP"

register_argc_argv = Off

auto_globals_jit = On

post_max_size = 500M

auto_prepend_file =

auto_append_file =

default_mimetype = "text/html"

default_charset = "UTF-8"

always_populate_raw_post_data = -1

doc_root =

user_dir =

enable_dl = Off

cgi.fix_pathinfo = 1

file_uploads = On

upload_max_filesize = 500M

max_file_uploads = 200

allow_url_fopen = On

allow_url_include = Off

default_socket_timeout = 60

[CLI Server]

cli_server.color = On

[Date]

date.timezone = Europe/Moscow

[Pdo_mysql]

pdo_mysql.cache_size = 2000

pdo_mysql.default_socket =

[Phar]

[mail function]

sendmail_path = /usr/sbin/sendmail -t -i

mail.add_x_header = On

[SQL]

sql.safe_mode = Off

[ODBC]

odbc.allow_persistent = On

odbc.check_persistent = On

odbc.max_persistent = -1

odbc.max_links = -1

odbc.defaultlrl = 4096

odbc.defaultbinmode = 1

[Interbase]

ibase.allow_persistent = 1

ibase.max_persistent = -1

ibase.max_links = -1

ibase.timestampformat = "%Y-%m-%d %H:%M:%S"

ibase.dateformat = "%Y-%m-%d"

ibase.timeformat = "%H:%M:%S"

[Mysql]

mysql.allow_local_infile = On

mysql.allow_persistent = On

mysql.cache_size = 2000

mysql.max_persistent = -1

mysql.max_links = -1

mysql.default_port =

mysql.default_socket =

mysql.default_host =

mysql.default_user =

mysql.default_password =

mysql.connect_timeout = 60

mysql.trace_mode = Off

[Mysqli]

mysqli.max_persistent = -1

mysqli.allow_persistent = On

mysqli.max_links = -1

mysqli.cache_size = 2000

mysqli.default_port = 3306

mysqli.default_socket =

mysqli.default_host =

mysqli.default_user =

mysqli.default_pw =

mysqli.reconnect = Off

[mysqlnd]

mysqlnd.collect_statistics = On

mysqlnd.collect_memory_statistics = Off

[OCI8]

[PostgreSQL]

pgsql.allow_persistent = On

pgsql.auto_reset_persistent = Off

pgsql.max_persistent = -1

pgsql.max_links = -1

pgsql.ignore_notice = 0

pgsql.log_notice = 0

[Sybase-ct]

sybct.allow_persistent = On

sybct.max_persistent = -1

sybct.max_links = -1

sybct.min_server_severity = 10

sybct.min_client_severity = 10

[bcmath]

bcmath.scale = 0

[Session]

session.save_handler = redis

session.save_path = "tcp://127.0.0.1:6379"

session.use_strict_mode = 0

session.use_cookies = 1

session.use_only_cookies = 1

session.name = SESSIONID

session.auto_start = 0

session.cookie_lifetime = 0

session.cookie_path = /

session.cookie_domain =

session.cookie_httponly =

session.serialize_handler = php

session.gc_probability = 1

session.gc_divisor = 1000

session.gc_maxlifetime = 1440

session.referer_check =

session.cache_limiter = nocache

session.cache_expire = 180

session.use_trans_sid = 0

session.hash_function = 0

session.hash_bits_per_character = 5

url_rewriter.tags = "a=href,area=href,frame=src,input=src,form=fakeentry"

[MSSQL]

mssql.allow_persistent = On

mssql.max_persistent = -1

mssql.max_links = -1

mssql.min_error_severity = 10

mssql.min_message_severity = 10

mssql.compatibility_mode = Off

mssql.secure_connection = Off

[Tidy]

tidy.clean_output = Off

[soap]

soap.wsdl_cache_enabled = 1

soap.wsdl_cache_dir = "/tmp"

soap.wsdl_cache_ttl = 86400

soap.wsdl_cache_limit = 5

[sysvshm]

[ldap]

ldap.max_links = -1


/etc/php.d/10-opcache.ini

zend_extension = opcache.so

opcache.enable = 1

opcache.enable_cli = 1

opcache.memory_consumption = 512

opcache.interned_strings_buffer = 8

opcache.max_accelerated_files = 130987

opcache.fast_shutdown = 1

opcache.blacklist_filename = /etc/php.d/opcache*.blacklist


/etc/php-fpm.d/bitrix.conf

[bitrix]

listen = /var/lib/php5-fpm/web1.sock

listen.owner = web1

listen.group = apache

listen.mode = 0660

user = web1

group = client1

pm = dynamic

pm.max_children = 10

pm.start_servers = 2

pm.min_spare_servers = 1

pm.max_spare_servers = 5

pm.max_requests = 0

chdir = /

env[HOSTNAME] = $HOSTNAME

env[tmp] = /var/www/clients/client1/web1/tmp

env[TMPDIR] = /var/www/clients/client1/web1/tmp

env[TEMP] = /var/www/clients/client1/web1/tmp

env[PATH] = /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

php_admin_value[open_basedir] = none

php_admin_value[session.save_path] = "tcp://127.0.0.1:6379"

php_admin_value[upload_tmp_dir] = /var/www/clients/client1/web1/tmp

php_admin_value[sendmail_path] = "/usr/sbin/sendmail -t -i -f webmaster@biz.livelinux.ru"

php_admin_value[max_input_vars] = 100000

php_admin_value[mbstring.func_overload] = 2

php_admin_value[mbstring.internal_encoding] = utf-8

php_admin_flag[opcache.revalidate_freq] = off

php_admin_flag[display_errors] = on
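As a rough sanity check of the pool size, the worst-case PHP memory footprint can be estimated from pm.max_children and memory_limit. The sketch below is a back-of-the-envelope upper bound only, assuming every worker reaches its memory_limit at the same time, which rarely happens in practice; the helper names are hypothetical.

```python
UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def parse_size(value):
    """Convert a php.ini style size such as '512M' into bytes."""
    value = value.strip().upper()
    if value[-1] in UNITS:
        return int(value[:-1]) * UNITS[value[-1]]
    return int(value)


def worst_case_php_memory(max_children, memory_limit):
    """Upper bound: every worker uses its full memory_limit at once."""
    return max_children * parse_size(memory_limit)


ram = parse_size("4G")                      # RAM of the test VPS
peak = worst_case_php_memory(10, "512M")    # pm.max_children, memory_limit
print(f"worst case: {peak / 1024 ** 3:.1f} GiB of {ram / 1024 ** 3:.1f} GiB RAM")
```

With the values above the theoretical ceiling (5 GiB) exceeds the 4 GB of the container, so the limits only hold because typical requests stay far below memory_limit.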


httpd -V

Server version: Apache/2.4.6 (CentOS)

Server built: Nov 14 2016 18:04:44

Server's Module Magic Number: 20120211:24

Server loaded: APR 1.4.8, APR-UTIL 1.5.2

Compiled using: APR 1.4.8, APR-UTIL 1.5.2

Architecture: 64-bit

Server MPM: prefork

threaded: no

forked: yes (variable process count)

Server compiled with ...

-D APR_HAS_SENDFILE

-D APR_HAS_MMAP

-D APR_HAVE_IPV6 (IPv4-mapped addresses enabled)

-D APR_USE_SYSVSEM_SERIALIZE

-D APR_USE_PTHREAD_SERIALIZE

-D SINGLE_LISTEN_UNSERIALIZED_ACCEPT

-D APR_HAS_OTHER_CHILD

-D AP_HAVE_RELIABLE_PIPED_LOGS

-D DYNAMIC_MODULE_LIMIT=256

-D HTTPD_ROOT="/etc/httpd"

-D SUEXEC_BIN="/usr/sbin/suexec"

-D DEFAULT_PIDLOG="/run/httpd/httpd.pid"

-D DEFAULT_SCOREBOARD="logs/apache_runtime_status"

-D DEFAULT_ERRORLOG="logs/error_log"

-D AP_TYPES_CONFIG_FILE="conf/mime.types"

-D SERVER_CONFIG_FILE="conf/httpd.conf"

/etc/httpd/conf.modules.d/00-mpm.conf

LoadModule mpm_prefork_module modules/mod_mpm_prefork.so

<IfModule mpm_prefork_module>

StartServers 8

MinSpareServers 5

MaxSpareServers 20

ServerLimit 256

MaxClients 256

MaxRequestsPerChild 4000

</IfModule>

/etc/httpd/conf.modules.d/00-mutex.conf

Mutex posixsem

EnableMMAP Off

/etc/httpd/conf.modules.d/10-fcgid.conf

LoadModule fcgid_module modules/mod_fcgid.so

FcgidMaxRequestLen 20000000

/etc/httpd/conf/sites-enabled/100-start.livelinux.ru.vhost

<Directory /var/www/start.livelinux.ru>

AllowOverride None

Require all denied

</Directory>

<VirtualHost *:82>

DocumentRoot /var/www/clients/client1/web3/web

ServerName start.livelinux.ru

ServerAlias www.start.livelinux.ru

ServerAdmin webmaster@start.livelinux.ru

ErrorLog /var/log/ispconfig/httpd/start.livelinux.ru/error.log

<Directory /var/www/start.livelinux.ru/web>

<FilesMatch ".+\.ph(p[345]?|t|tml)$">

SetHandler None

</FilesMatch>

Options +FollowSymLinks

AllowOverride All

Require all granted

</Directory>

<Directory /var/www/clients/client1/web3/web>

<FilesMatch ".+\.ph(p[345]?|t|tml)$">

SetHandler None

</FilesMatch>

Options +FollowSymLinks

AllowOverride All

Require all granted

</Directory>

<IfModule mod_suexec.c>

SuexecUserGroup web3 client1

</IfModule>

<IfModule mod_fastcgi.c>

<Directory /var/www/clients/client1/web3/cgi-bin>

Require all granted

</Directory>

<Directory /var/www/start.livelinux.ru/web>

<FilesMatch "\.php[345]?$">

SetHandler php5-fcgi

</FilesMatch>

</Directory>

<Directory /var/www/clients/client1/web3/web>

<FilesMatch "\.php[345]?$">

SetHandler php5-fcgi

</FilesMatch>

</Directory>

Action php5-fcgi /php5-fcgi virtual

Alias /php5-fcgi /var/www/clients/client1/web3/cgi-bin/php5-fcgi-*-82-start.livelinux.ru

FastCgiExternalServer /var/www/clients/client1/web3/cgi-bin/php5-fcgi-*-82-start.livelinux.ru -idle-timeout 300 -socket /var/lib/php5-fpm/web3.sock -pass-header Authorization

</IfModule>

<IfModule mod_proxy_fcgi.c>

<Directory /var/www/clients/client1/web3/web>

<FilesMatch "\.php[345]?$">

SetHandler "proxy:unix:/var/lib/php5-fpm/web3.sock|fcgi://localhost"

</FilesMatch>

</Directory>

</IfModule>

<IfModule mpm_itk_module>

AssignUserId web3 client1

</IfModule>

<IfModule mod_dav_fs.c>

<Directory /var/www/clients/client1/web3/webdav>

<ifModule mod_security2.c>

SecRuleRemoveById 960015

SecRuleRemoveById 960032

</ifModule>

<FilesMatch "\.ph(p3?|tml)$">

SetHandler None

</FilesMatch>

</Directory>

DavLockDB /var/www/clients/client1/web3/tmp/DavLock

</IfModule>

</VirtualHost>
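In this vhost the pattern `\.php[345]?$` selects the files that are handed to PHP-FPM through the `web3.sock` socket. As a minimal sketch, the same pattern can be tried against a few file names with Python's `re` module. The helper name `goes_to_php_fpm` and the sample file names are made up for illustration, and Python's regex engine is assumed to behave like Apache's for a pattern this simple.

```python
import re

# Pattern from the FilesMatch blocks that set the PHP handler.
PHP_HANDLER = re.compile(r"\.php[345]?$")


def goes_to_php_fpm(filename):
    """Return True if the file name would match the PHP FilesMatch pattern."""
    return PHP_HANDLER.search(filename) is not None


for name in ["index.php", "legacy.php5", "page.phtml", "index.php.bak", "style.css"]:
    print(name, goes_to_php_fpm(name))
```

Only names ending in `.php`, `.php3`, `.php4` or `.php5` match, so a backup such as `index.php.bak` is not passed to the PHP handler.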

10.1.20-MariaDB MariaDB Server

symbolic-links = 0

default_storage_engine = InnoDB

innodb_file_per_table = 1

innodb_large_prefix = on

datadir = /var/lib/mysql

socket = /var/lib/mysql/mysql.sock

connect_timeout = 600000

wait_timeout = 28800

max_connections = 800

max_allowed_packet = 512M

max_connect_errors = 10000

net_read_timeout = 600000

connect_timeout = 600000

net_write_timeout = 600000

innodb_open_files = 512

innodb_buffer_pool_size = 2G

innodb_buffer_pool_instances = 4

innodb_file_format = barracuda

innodb_locks_unsafe_for_binlog = 1

innodb_flush_log_at_trx_commit = 2

transaction-isolation = READ-COMMITTED

innodb-data-file-path = ibdata1:10M:autoextend

innodb-log-file-size = 256M

innodb_log_buffer_size = 32M

skip-name-resolve

skip-external-locking

skip-innodb_doublewrite

query_cache_size = 128M

query_cache_type = 1

query_cache_min_res_unit = 2K

join_buffer_size = 8M

sort_buffer_size = 2M

read_rnd_buffer_size = 3M

table_definition_cache = 2048

table_open_cache = 100000

thread_cache_size = 128

tmp_table_size = 200M

max_heap_table_size = 200M

log_warnings = 2

log_error = /var/log/mysql/mysql-error.log

key_buffer_size = 128M

binlog_format = MIXED

server_id = 226

sync-binlog = 0

expire-logs_days = 3

max-binlog-size = 1G

log-slave-updates

binlog-checksum = crc32

slave-skip-errors = 1062,1146

log_slave_updates = 1

slave_type_conversions = ALL_NON_LOSSY

slave-parallel-threads = 4

relay-log = /var/log/mysql/mysql-relay-bin

relay-log-index = /var/log/mysql/mysql-relay-bin.index

relay-log-info-file = /var/log/mysql/mysql-relay-log.info

skip-slave-start

optimizer_switch = 'derived_merge=off,derived_with_keys=off'

sql-mode = ""

innodb_flush_method = O_DIRECT_NO_FSYNC

innodb_use_native_aio = 1

innodb_adaptive_hash_index = 0

innodb_adaptive_flushing = 1

innodb_flush_neighbors = 0

innodb_read_io_threads = 16

innodb_write_io_threads = 16

innodb_max_dirty_pages_pct = 90

innodb_max_dirty_pages_pct_lwm = 10

innodb_lru_scan_depth = 4000

innodb_purge_threads = 4

innodb_max_purge_lag_delay = 10000000

innodb_max_purge_lag = 1000000

innodb_checksums = 1

innodb_checksum_algorithm = crc32

table_open_cache_instances = 16

innodb_thread_concurrency = 32

innodb-use-mtflush = ON

innodb-mtflush-threads = 8

innodb_use_fallocate = ON

innodb_monitor_enable = '%'

performance_schema = ON

performance_schema_instrument = '%sync%=on'

malloc-lib = /usr/lib64/libjemalloc.so.1

port = 3306
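With `max_connections = 800` and fairly large per-connection buffers, it is worth checking how much memory this MariaDB configuration could claim in the worst case. The sketch below uses a common rule of thumb (shared buffers plus per-connection buffers multiplied by the connection limit). The formula is my assumption and is not taken from the article; it ignores temporary tables and other allocations.

```python
# Rough worst-case memory estimate for the MariaDB settings listed above.
# The formula is a rule of thumb, not something the article states.

UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def parse_size(value):
    """Convert a my.cnf size such as '2G' or '128M' to bytes."""
    value = value.strip()
    suffix = value[-1].upper()
    if suffix in UNITS:
        return int(value[:-1]) * UNITS[suffix]
    return int(value)


# Buffers allocated once per server (values from the config above).
GLOBAL_BUFFERS = {
    "innodb_buffer_pool_size": "2G",
    "innodb_log_buffer_size": "32M",
    "key_buffer_size": "128M",
    "query_cache_size": "128M",
}

# Buffers that each connection may allocate (values from the config above).
PER_CONNECTION_BUFFERS = {
    "join_buffer_size": "8M",
    "sort_buffer_size": "2M",
    "read_rnd_buffer_size": "3M",
}

MAX_CONNECTIONS = 800


def worst_case_bytes(global_buffers, per_connection, max_connections):
    """Shared buffers plus per-connection buffers for every allowed connection."""
    shared = sum(parse_size(v) for v in global_buffers.values())
    per_conn = sum(parse_size(v) for v in per_connection.values())
    return shared + per_conn * max_connections


total = worst_case_bytes(GLOBAL_BUFFERS, PER_CONNECTION_BUFFERS, MAX_CONNECTIONS)
print(f"worst case: {total / 1024 ** 2:.0f} MiB")
```

The per-connection term dominates the result. Those buffers are allocated on demand, so the figure is a ceiling rather than expected usage.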

/etc/redis.conf

bind 127.0.0.1

protected-mode yes

port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 0

pidfile /var/run/redis/redis.pid

loglevel notice

logfile /var/log/redis/redis.log

databases 16

save 900 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

dir /var/lib/redis/

unixsocket /tmp/redis.sock

unixsocketperm 777

slave-serve-stale-data yes

slave-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

slave-priority 100

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

aof-rewrite-incremental-fsync yes

maxmemory 32M

maxmemory-policy volatile-lru
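The three `save` lines in this Redis configuration mean that a snapshot is written once a given number of seconds has passed and at least a given number of keys has changed. A simplified sketch of that decision follows. The function name `snapshot_due` is hypothetical, and the real server performs this check internally on its own timer.

```python
# (seconds, changes) pairs from the "save" lines above.
SAVE_RULES = [(900, 1), (300, 10), (60, 10000)]


def snapshot_due(seconds_since_last_save, changes_since_last_save, rules=SAVE_RULES):
    """Return True if any save rule is satisfied."""
    return any(
        seconds_since_last_save >= seconds and changes_since_last_save >= changes
        for seconds, changes in rules
    )


# Five changed keys after two minutes: no rule applies yet.
print(snapshot_due(120, 5))
# The same five keys after sixteen minutes: the "900 1" rule applies.
print(snapshot_due(960, 5))
```

Since Redis holds PHP sessions here (`session.save_path` points at port 6379), these rules bound how much recent session data a crash could lose.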

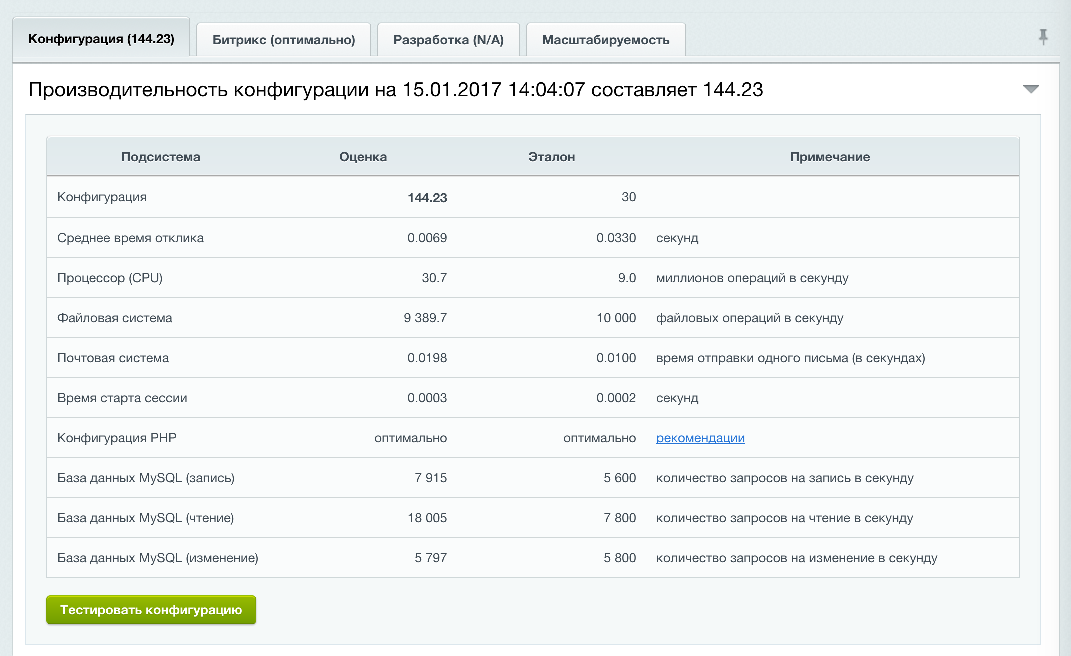

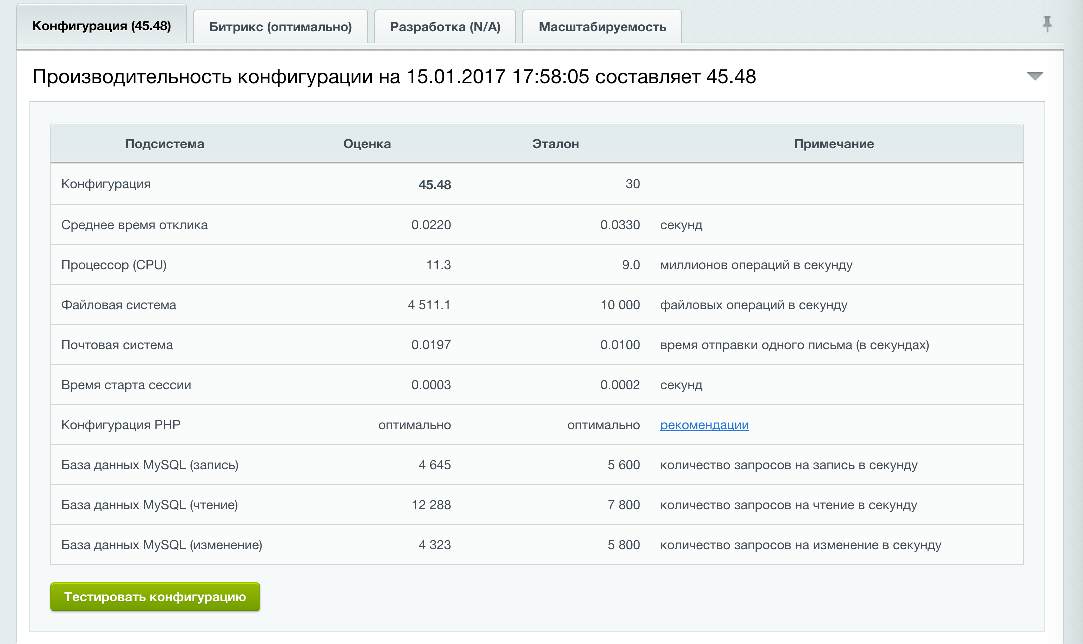

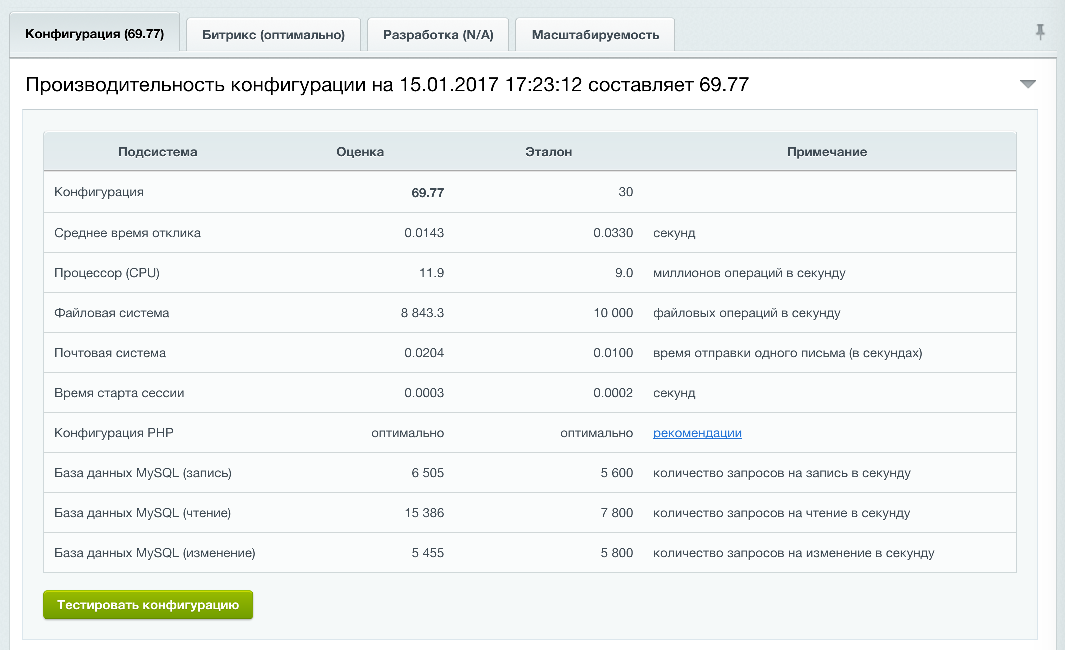

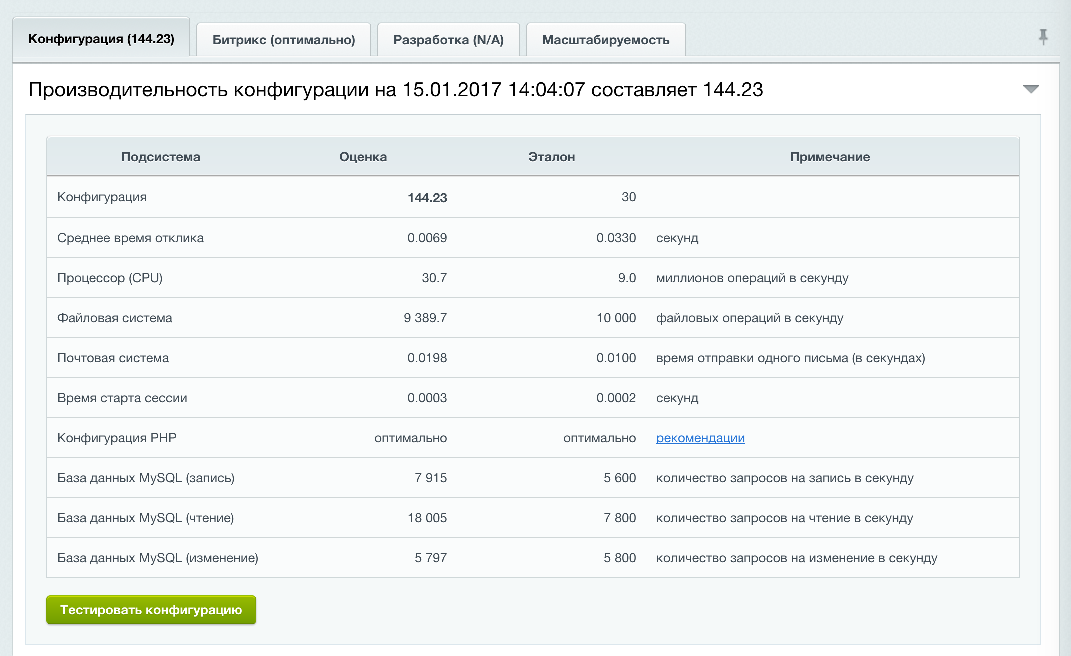

Performance test results:

Proxmox 4

Virtuozzo 7

As a bonus: Virtuozzo 7, site running on PHP 7.0

As a result:

Moving to the new cloud platform gave us high data availability through replication across three servers, but we lost a useful ZFS feature: compression. The Bitrix test site ran about 45% faster on average. On real sites, depending on the number of plugins and the complexity of the code, the gain was up to threefold.
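The two figures above use different units: a 45% gain is a 1.45x speedup, while "up to threefold" corresponds to a 200% gain. A small sketch of the conversion follows. The scores in the example are hypothetical and are not measurements from this test.

```python
def gain_percent(baseline_score, new_score):
    """Relative gain of new_score over baseline_score, in percent."""
    return (new_score / baseline_score - 1.0) * 100.0


def speedup_factor(gain_pct):
    """Convert a percentage gain into a multiplicative speedup."""
    return 1.0 + gain_pct / 100.0


# Hypothetical panel scores, chosen only to illustrate the arithmetic.
print(f"{gain_percent(100.0, 145.0):.0f}% gain")
print(f"{speedup_factor(45.0):.2f}x speedup")
print(f"{gain_percent(100.0, 300.0):.0f}% gain for a threefold improvement")
```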

Among the benefits, we can now migrate virtual machines from server to server quickly, without shutting them down. Among the drawbacks, Virtuozzo 7, unlike Proxmox, does not yet have a reliable and convenient control panel, so everything had to be done by hand or with shell scripts. The existing Automator is still very raw and unpredictable.

Another point in Virtuozzo's favor is its technical support, which is excellent. All components of the system are also well documented, whereas Proxmox has serious problems in this area. Even posting on its forum requires a paid subscription, which in my opinion is absolutely not worth it. A Proxmox cluster works until it breaks, but once it breaks it is very hard to bring back up, and the documentation for doing so is clearly insufficient.

I hope you find the article useful. Next time I will cover switching Bitrix to PHP 7.0 and compare the performance of the various Bitrix editions.

Source: https://habr.com/ru/post/319210/
