Moving from Tarantool 1.5 to 1.6

Hi, Habr! I want to tell the story of how we migrated one of our projects from Tarantool 1.5 to 1.6. Is it worth migrating to a new version if everything already works? How easy is it when you have already written a lot of code? How do you avoid affecting live users? What difficulties can you run into with such changes? What do you gain from the move? You will find answers to all these questions in this article.

Service Architecture

This story is about our push notification service. Habr already has an article about sending messages "across the entire user base", and that mailing is one of the parts of our service. All of the code was originally written in Python 2.7. We use the uwsgi stack, gevent and, of course, Tarantool! The service now sends about one billion push notifications per day. Two clusters of eight servers each handle this load. The architecture of the service is shown in the figure:

Push notification service architecture


Notifications are sent by several servers of the cluster, labeled Node 1 to Node N in the figure. The sending service talks to the Apple Push Notification Service and to Firebase Cloud Messaging. Each server also handles HTTP traffic from mobile apps. The sending servers are completely identical: if one of them fails, its load is automatically distributed among the other servers in the cluster.

Our users, their settings and their push tokens are stored in Tarantool (Tarantool Storage in the figure). Two replicas spread the read load. The service code tolerates temporary unavailability of the master or the replicas: if a replica does not respond, the select query goes to the next available replica or to the master. All writes to the master go through Tarantool Queue. If the master is unavailable, the queue accumulates requests until the master is back in operation.
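The read failover described above can be sketched roughly as follows. This is a minimal illustration, not our production code. The node objects, their `select` method, and the use of `ConnectionError` to signal an unreachable node are assumptions made for the example.

```python
from typing import Any, Callable, Sequence


def select_with_failover(nodes: Sequence[Any], query: Callable[[Any], Any]) -> Any:
    """Run `query` on each node in turn until one of them answers.

    Assumption: `nodes` lists the replicas first and the master last, and an
    unreachable node raises ConnectionError.
    """
    last_error = None
    for node in nodes:
        try:
            return query(node)
        except ConnectionError as exc:
            last_error = exc  # remember the failure and try the next node
    raise ConnectionError("no Tarantool node is available") from last_error


class FakeNode:
    """Hypothetical stand-in for a Tarantool connection, used for the demo."""

    def __init__(self, name: str, up: bool) -> None:
        self.name, self.up = name, up

    def select(self, key: int) -> tuple:
        if not self.up:
            raise ConnectionError(f"{self.name} is down")
        return (self.name, key)


nodes = [FakeNode("replica1", False), FakeNode("replica2", True), FakeNode("master", True)]
print(select_with_failover(nodes, lambda n: n.select(42)))  # ('replica2', 42)
```

The master sits at the end of the list, so it only receives reads when every replica is down.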

For a long time, we had a single Tarantool master that stored the push tokens. At 10,000 write requests per second, one master is enough. To serve 100,000 read requests, we use several replicas. As long as one master copes with the writes, the read load can be spread further by adding new replicas.
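Spreading reads over replicas can be as simple as round-robin. Below is a minimal sketch with hypothetical names. The article does not say which balancing policy the service actually uses.

```python
import itertools
from typing import Any, Sequence


class ReplicaPool:
    """Hypothetical round-robin pool: each read goes to the next replica."""

    def __init__(self, replicas: Sequence[Any]) -> None:
        if not replicas:
            raise ValueError("need at least one replica")
        self._cycle = itertools.cycle(replicas)

    def next_replica(self) -> Any:
        return next(self._cycle)


pool = ReplicaPool(["replica1", "replica2", "replica3"])
print([pool.next_replica() for _ in range(4)])
# ['replica1', 'replica2', 'replica3', 'replica1']
```

Adding a replica to the list lowers each replica's share of the traffic. For example, 100,000 reads per second spread evenly over four replicas is about 25,000 per second each.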

The service architecture was designed from the start for load growth and horizontal scaling. For a while, we could easily grow horizontally by adding new notification-sending servers. But can this architecture grow indefinitely?

The problem is that there is only one Tarantool master instance. It runs on a separate server and has grown to 50 GB. The two replicas live on a second server, so together they take up 50 * 2 = 100 GB. These Tarantool instances turned out quite heavy, and they take a long time to restart. On top of that, the server with the replicas has run out of free memory, which is a clear sign that something needs to change.

Database sharding

The obvious solution is to shard the database: take the one large Tarantool master and turn it into several small instances! There are already ready-made sharding solutions: one and two. But all of them work only with Tarantool 1.6.

As always, there is one more “but”. Mail.Ru Mail generates a huge stream of events: new letters, letters being read or deleted, and so on. Only some of these events need to reach mobile applications as push notifications. Processing this stream is quite resource-intensive, so the Mail.Ru Mail service filters out unnecessary events and sends only the useful traffic to our service. To filter events, Mail.Ru Mail needs to know whether a user has the mobile application installed, and it gets that information by querying our Tarantool replicas. This makes splitting one Tarantool into several harder: we would have to tell every third-party service how our sharding works. That would greatly complicate the system, especially when resharding is required. It would also take a lot of development time, since several services would need to be reworked.

One possible solution is a proxy that pretends to be Tarantool and distributes requests across multiple shards. With a proxy, third-party services do not need to change at all.
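To illustrate what such a proxy has to do, here is a minimal sketch of key-based routing. The article does not describe the distribution scheme tarantool-proxy actually uses. Hashing the key with CRC32 modulo the number of shards is our assumption. We chose it only because it is simple and stable across processes, unlike Python's built-in `hash` for strings. `RoutingProxy` and `DictBackend` are hypothetical names.

```python
import zlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key (e.g. a user ID) to a shard index in [0, shard_count).

    Assumed scheme: CRC32 of the UTF-8 key modulo the number of shards.
    """
    if shard_count <= 0:
        raise ValueError("shard_count must be positive")
    return zlib.crc32(key.encode("utf-8")) % shard_count


class RoutingProxy:
    """Hypothetical proxy: one backend per shard, clients see one endpoint."""

    def __init__(self, backends):
        self.backends = list(backends)

    def select(self, key: str):
        backend = self.backends[shard_for(key, len(self.backends))]
        return backend.select(key)


class DictBackend:
    """Demo backend that just reports which shard served the key."""

    def __init__(self, name: str) -> None:
        self.name = name

    def select(self, key: str):
        return (self.name, key)


proxy = RoutingProxy([DictBackend(f"shard{i}") for i in range(4)])
print(proxy.select("user:1001"))
```

With plain modulo hashing, changing the number of shards remaps most keys. This is one reason why resharding is painful, and why hiding it behind a proxy is attractive.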

So, what do we get from sharding?

- scalability improves, so we can keep growing;

- Tarantool instances become smaller and start faster, which reduces downtime after failures;

- with sharding, we can spread Tarantool's CPU load on a server more efficiently and use more cores;

- we use server memory more efficiently: 100 GB for the masters and 100 GB for the replicas.

Our service uses Tarantool 1.5, and development of that version has stopped. Since we are going to shard and develop a proxy anyway, why not replace the old Tarantool 1.5 with the new Tarantool 1.6?

What other advantages does Tarantool 1.6 have?

Our service is written in Python, and we use the github.com/tarantool/tarantool-python connector to work with Tarantool. For Tarantool 1.5, the connector speaks the iproto protocol and packs and unpacks data in Python with struct.pack / struct.unpack calls. The Tarantool 1.6 connector uses the msgpack library instead. Preliminary benchmarks showed that packing and unpacking with msgpack takes slightly less CPU time than with iproto. Migrating to 1.6 could therefore free up CPU resources in the cluster.
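For illustration, this is roughly what struct-based packing looks like for the Tarantool 1.5 request header. We assume the layout from the 1.5 binary protocol description: three little-endian unsigned 32-bit integers (request type, body length, request ID), with 17 as the SELECT request code. The helper names are hypothetical, not the connector's API.

```python
import struct

# Assumed Tarantool 1.5 iproto header: <type><body_length><request_id>,
# each an unsigned 32-bit little-endian integer (12 bytes in total).
HEADER = struct.Struct("<III")
REQUEST_TYPE_SELECT = 17  # SELECT request code in the 1.5 protocol (assumption)


def pack_header(request_type: int, body: bytes, request_id: int) -> bytes:
    """Build the fixed-size header that precedes a request body."""
    return HEADER.pack(request_type, len(body), request_id)


def unpack_header(data: bytes):
    """Return (request_type, body_length, request_id) from the first 12 bytes."""
    return HEADER.unpack_from(data)


header = pack_header(REQUEST_TYPE_SELECT, b"\x00" * 20, request_id=7)
print(len(header), unpack_header(header))  # 12 (17, 20, 7)
```

Every header and every field goes through explicit struct calls like these in Python code. The 1.6 connector hands serialization to the msgpack library instead.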

A little about the future

Apple has developed a new protocol for sending push notifications to iOS devices. Unlike the previous version, it is based on HTTP/2 and supports hiding push notifications. The maximum payload of a single push notification is 4 KB (2 KB in the old protocol).

To send notifications to Android devices, we use Google's Firebase Cloud Messaging service. It has added support for encrypting push notification payloads for the Chrome browser.

Unfortunately, Python still has no good libraries for HTTP/2, and no libraries that support encrypting Google notifications. Worse, the existing libraries would have to be made to work with the gevent and asyncio frameworks. This made us think about how hard it would be to maintain our service in the future. We considered Go, which has good support for all the new Apple and Google features. But there was a catch again: we did not find an official Go connector for Tarantool 1.5. Sadness, pain, despair? No! That is not our style. :)

So, to keep our service evolving, we need to solve the following tasks:

- support sharding;

- move to Tarantool 1.6;

- create a proxy that handles client requests in the Tarantool 1.5 protocol format;

- move the queue system to Tarantool 1.6;

- update the production cluster without affecting our users.

Developing the proxy

We chose Go as the language for the proxy. It is very convenient for this class of problems. After Python, it feels unusual to write in a compiled language with static type checking. The absence of classes and exceptions raised some doubts at first, but several months of work showed that you can do perfectly well without them. Goroutines and channels are very convenient to develop with. Tools such as benchmark tests, the powerful Go profiler, golint and gofmt help a lot and speed up development. The community support, the conferences, blogs and articles about Go are all simply admirable!

So, we now have tarantool-proxy. It accepts client connections, speaks the Tarantool 1.5 protocol, and distributes requests across several Tarantool 1.6 instances. As before, read load can be scaled with replicas. When rolling out the new solution, we made sure we could roll back. To do this, we modified our Python code to duplicate every write request: each one went both to tarantool-proxy and to the "old" Tarantool 1.5 instance. Otherwise our code did not change, but it started working with Tarantool 1.6 through the proxy. Why so complicated, you ask? Surely there would be no rollback? Oh yes, there was a rollback. More than one.
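The dual-write scheme that made rollback possible can be sketched as a thin wrapper. This is a simplified illustration with hypothetical class and method names. Writes go to both the new path (tarantool-proxy) and the legacy Tarantool 1.5 instance, while reads come from the new path only.

```python
class DualWriteClient:
    """Hypothetical wrapper: reads from the new backend, writes to both.

    Keeping the legacy Tarantool 1.5 instance up to date means that rolling
    back only requires pointing the service at it again.
    """

    def __init__(self, new_backend, legacy_backend):
        self.new = new_backend
        self.legacy = legacy_backend

    def select(self, space, key):
        return self.new.select(space, key)

    def insert(self, space, values):
        result = self.new.insert(space, values)
        self.legacy.insert(space, values)  # mirror the write to Tarantool 1.5
        return result


class MemoryBackend:
    """In-memory stand-in for a Tarantool connection (demo only)."""

    def __init__(self):
        self.spaces = {}

    def insert(self, space, values):
        self.spaces.setdefault(space, {})[values[0]] = values
        return values

    def select(self, space, key):
        row = self.spaces.get(space, {}).get(key)
        return [row] if row is not None else []


new, legacy = MemoryBackend(), MemoryBackend()
client = DualWriteClient(new, legacy)
client.insert(0, (1, "token-abc"))
print(client.select(0, 1), legacy.select(0, 1))  # [(1, 'token-abc')] [(1, 'token-abc')]
```

A real version also has to decide what happens when only one of the two writes fails. This sketch simply lets the exception propagate.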

Even though we had done load testing, tarantool-proxy consumed too much CPU after the first launch. We rolled back, profiled and fixed it. After the second launch, tarantool-proxy consumed a lot of memory, as much as 3 GB. The Go profiler helped us find the problem again.

Enabling the profiler is quite simple:

```go
import (
	"net/http"
	_ "net/http/pprof" // registers the /debug/pprof/ handlers on the default mux
)

// Inside main(): serve the profiler in the background.
// netprofile is the listen address, e.g. "127.0.0.1:8895".
go http.ListenAndServe(netprofile, nil)
```

Take a heap profile:

```
go tool pprof -inuse_space tarantool-proxy http://127.0.0.1:8895/debug/pprof/heap
```

View the profiling results:

```
Entering interactive mode (type "help" for commands)
(pprof) top20
1.74GB of 1.75GB total (99.38%)
Dropped 122 nodes (cum <= 0.01GB)
      flat  flat%   sum%        cum   cum%
    1.74GB 99.38% 99.38%     1.74GB 99.58%  main.tarantool_listen.func1
         0     0% 99.38%     1.75GB 99.89%  runtime.goexit
(pprof) list main.tarantool_listen.func1
Total: 1.75GB
ROUTINE ======================== main.tarantool_listen.func1 in /home/work/src/tarantool-proxy/daemon.go
    1.74GB     1.74GB (flat, cum) 99.58% of Total
         .          .     37:
         .          .     38:		//run tarantool15 connection communicate
         .          .     39:		go func() {
         .          .     40:			defer conn.Close()
         .          .     41:
    1.74GB     1.74GB     42:			proxy := newProxyConnection(conn, listenNum, tntPool, schema)
         .     3.50MB     43:			proxy.processIproto()
         .          .     44:		}()
         .          .     45:	}
         .          .     46:}
         .          .     47:
         .          .     48:func main() {
(pprof)
```

The memory consumption problem that needs fixing is clearly visible. We ran all of this on a production server under load. By the way, there is a great article about profiling in Go; it really helped us find the problem areas in our code.

After fixing all the problems with tarantool-proxy, we watched the service run under the new scheme for another week. Then we finally abandoned Tarantool 1.5 and removed all requests to it from the Python code.

How do you migrate data from Tarantool 1.5 to 1.6?

To migrate data from 1.5 to 1.6, there is a ready-made tool: github.com/tarantool/migrate. We take a 1.5 snapshot and load it into 1.6. For sharding, we then delete the data that does not belong to each shard. A little patience, and we have a new Tarantool storage. All third-party services got access to the new cluster through tarantool-proxy.
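The "delete unnecessary data" step means that each shard keeps only the keys that route to it. Here is a minimal sketch that splits loaded rows into rows to keep and rows to delete. It assumes a hypothetical CRC32-modulo routing rule and rows whose first field is the sharding key. The real rule is whatever the proxy uses, and the article does not specify it.

```python
import zlib
from typing import Iterable, List, Tuple


def shard_for(key: str, shard_count: int) -> int:
    """Hypothetical routing rule: CRC32 of the key modulo the shard count."""
    return zlib.crc32(key.encode("utf-8")) % shard_count


def split_foreign(rows: Iterable[Tuple], shard_id: int, shard_count: int):
    """Split rows loaded from the full snapshot into (keep, delete) lists.

    The first field of each row is assumed to be the sharding key.
    """
    keep: List[Tuple] = []
    delete: List[Tuple] = []
    for row in rows:
        target = keep if shard_for(row[0], shard_count) == shard_id else delete
        target.append(row)
    return keep, delete


rows = [("user:1", "token1"), ("user:2", "token2"), ("user:3", "token3")]
keep, delete = split_foreign(rows, shard_id=0, shard_count=2)
print(len(keep) + len(delete))  # 3: every row lands in exactly one list
```

The cleanup must use exactly the same routing rule as the proxy. Otherwise some keys would end up on a shard that the proxy never queries for them.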

What other difficulties did we run into?

We had to port the code of our Lua procedures to 1.6, but frankly, that did not take much effort. Another peculiarity of 1.6 is that it has no queries of the form

```
select * from space where key in (1,2,3)
```

so we had to rewrite several queries as a loop:

```
for key in (1,2,3): select * from space where key = ?
```

We also switched to the new tarantool-queue, which is maintained by its developers and works with Tarantool 1.6. At times we had to work with both Tarantool 1.5 and Tarantool 1.6 from Python at the same time.
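The loop that replaces an IN query can be wrapped in a small helper. The sketch below assumes a connection object whose `select(space, key)` returns an iterable of tuples. `select_in` and `StubConnection` are hypothetical names.

```python
from typing import Any, Iterable, List


def select_in(conn: Any, space: Any, keys: Iterable[Any]) -> List[tuple]:
    """Emulate `select * from space where key in (...)` with one select per key.

    Results are concatenated in key order; missing keys contribute nothing.
    """
    result: List[tuple] = []
    for key in keys:
        result.extend(conn.select(space, key))
    return result


class StubConnection:
    """Hypothetical in-memory stand-in for a Tarantool 1.6 connection."""

    def __init__(self, rows):
        self.rows = rows

    def select(self, space, key):
        return [row for row in self.rows if row[0] == key]


conn = StubConnection([(1, "a"), (2, "b"), (3, "c")])
print(select_in(conn, "space", (1, 3, 4)))  # [(1, 'a'), (3, 'c')]
```

This makes one round trip per key instead of one per query. For long key lists, one alternative is a Lua procedure that runs the same loop on the server.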

Install the connector for 1.5 with pip and rename the package:

```
pip install tarantool\<0.4
mv env/lib/python2.7/site-packages/tarantool env/lib/python2.7/site-packages/tarantool15
```

Next, install the connector for 1.6:

```
pip install tarantool
```

In the Python code, do the following:

```python
import tarantool15  # the renamed 1.5 connector
import tarantool    # the 1.6 connector

# TODO: switch this code to 1.6
tnt15 = tarantool15.connect(host, port)
tnt15.select(1, "foo", index=0)

tnt16 = tarantool.connect(host, port)
tnt16.select(1, "bar", index="baz")
```

As you can see, supporting several Tarantool versions in Python at the same time does not take much effort. We gradually retired the 1.5 instances one after another and switched completely to Tarantool 1.6.

Production update

If you think we updated production quickly and everything worked right away, that is far from the case. For example, after our first attempt to switch to the new tarantool-queue on 1.6, the average response time graphs of our services looked like this:

Average service response time

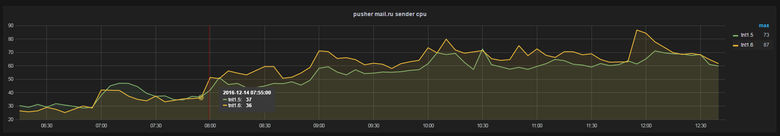

The green graphs clearly show the growth in average HTTP request processing time in uwsgi and elsewhere. After several rounds of hunting for the causes, the graphs returned to normal. This is what the final Load Average and CPU usage graphs on a production server look like:

Load average

CPU usage

The green charts show that we freed up some of our hardware resources. Since our load keeps growing, this gave us a little hardware headroom in the current cluster configuration.

Summing up

We spent several months on the upgrade to Tarantool 1.6, and about one more month on sharding the storage and updating production. Modifying an existing system under heavy load is quite difficult. Our service changes constantly. In a live project, bugs keep appearing that need developers' attention. New product features also keep arriving, and they require changes to existing code too.

You cannot stop development, especially for such a long task. You always have to think about how to roll back to the previous state. And most importantly, the work has to be done in small iterations.

What's next?

Resharding is one of the issues that remain open. It is not urgent yet, so we have time to assess all the work and forecast when resharding will be needed. We also plan to rewrite part of the service in Go. Perhaps the service will then consume even less CPU. In that case, there will be material for another article. :)

Thanks to all the developers, the Mail.Ru Mail engineers, the Tarantool team and everyone who took part in the migration, helped us and inspired us to support and develop our service. It was cool!

Links used in writing this article:

- Tarantool - tarantool.org

- Tarantool Queue - github.com/tarantool/queue

- Asyncio Tarantool Queue, get in line

- Go profiling and optimization

Source: https://habr.com/ru/post/319170/
