Isomorphic React Applications: Performance and Scaling

Denis Izmaylov (DenisIzmaylov)

Hello! Briefly about myself: I am Denis Izmaylov. For the past 5 years I have focused on JS development, built a lot of Single Page Applications, worked with highload and React, spoken at MoscowJS several times, committed to webpack, and so on.

Today I would like to talk about this: why the Single Page Application in its classic form should be abandoned; how isomorphic applications will affect your salary; and what you will do this weekend.


Ideally you already have some experience with React 0.14 and webpack, understand how ES6 works, have built something with express or koa, and have at least a rough idea of what isomorphic (also called universal) applications are.

Part 1.

The web has become very big. Web development now walks a fine line between science and art.

Previously, it was quite simple: you wrote some script on the server, added a couple of JS files, shipped it to production, and everything worked.

The browser made a request to the server, and the server did absolutely everything and returned an HTML page.

CSS and JS were optional, practically unnecessary. It worked. But then Single Page Applications (SPA) began to emerge.

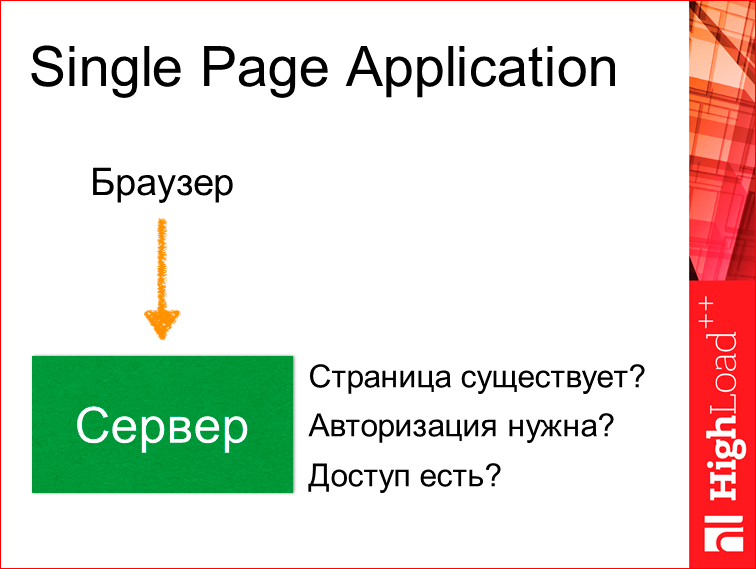

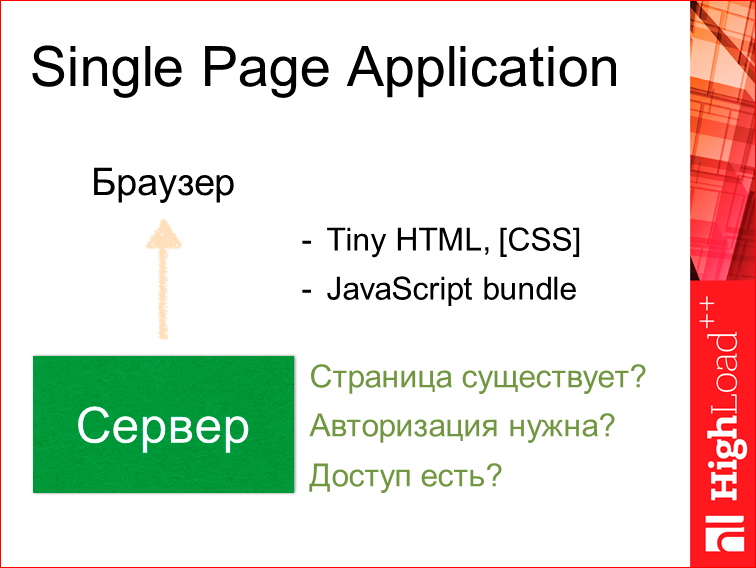

The code on the client began to grow. The server started to play a minor role: it literally just checked whether the page exists, whether authorization is required, and whether the user has access to it.

And the server returned a small HTML page, CSS and a huge JS bundle.

The advantages here are considerable:

- Easy to start. You watch a talk about webpack, set it up, write a little HTML, connect Redux and React, and it all works.

- Rich functionality. With a Single Page Application everything is loaded up front, so we never have to worry about something being missing. We can include absolutely anything: images, even a video stuffed inside the bundle, and it will work.

- Easy to extend quickly. We don’t have to worry about any optimization, everything works fine, so we can just add, add, add.

- Responsive UI. Everything is already loaded, so nothing needs to be reloaded.

- Convenient caching. Once the bundle has been downloaded, it stays cached in the browser and loads quickly next time.

And not a single downside? There are downsides.

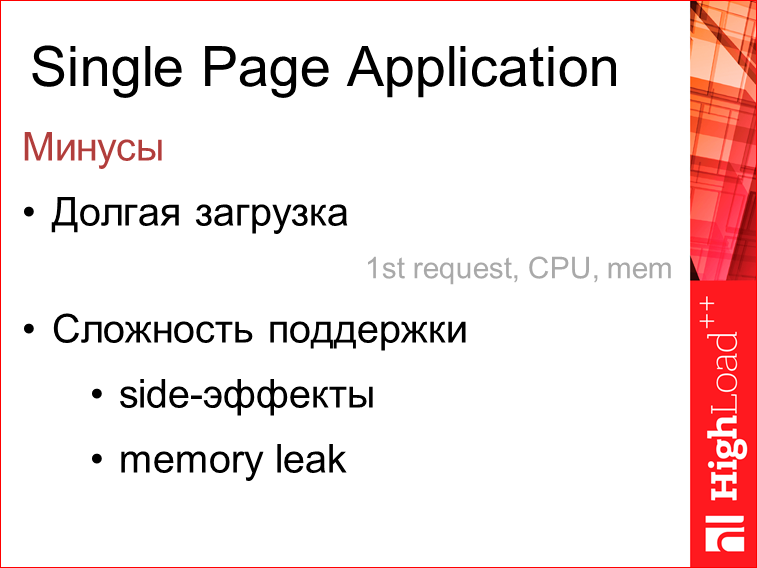

First, because the bundle is very large, sometimes 3-5 MB, it takes a very long time to load the first time. That is, when you visit a site for the first time, or after it has been updated, you often see a spinner, which many people dislike. So the first visit to the application takes a long time. Startup is not quick either. And it consumes a lot of memory.

Difficulty of support. Anyone who has maintained a large Single Page Application knows that plugins added for some individual pages can often affect other pages, and we cannot control this. And memory leaks. Few people test for them, but when the application is large and runs in a tab for a long time, memory often grows and for some reason everything starts to hang. 3-4 hours pass and ... But as a rule we do not test for that.

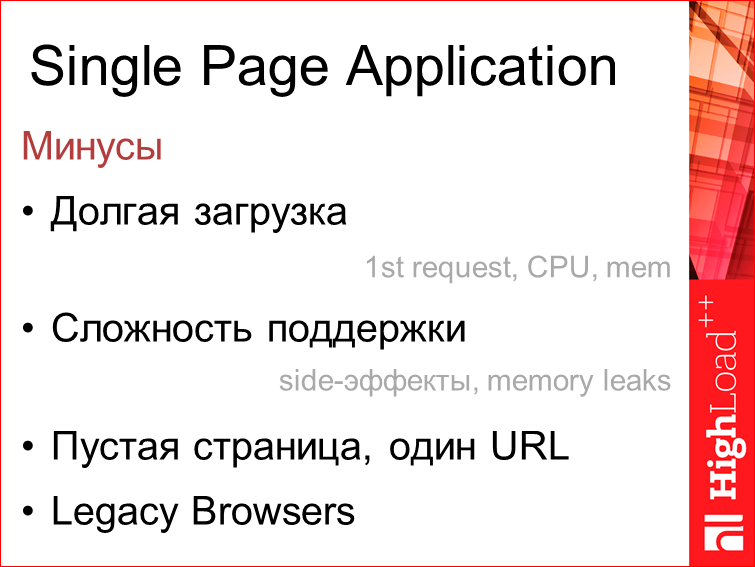

So: long loading, difficult support, a blank page, a single URL (I will explain later why this is a downside), and old browsers. We recently did some research and saw that even now, in 2015-2016, when it would seem that all browsers should have been updated, they have not. 5% is Opera 12 and IE 7-8, and even IE 6 is present. I checked just last week.

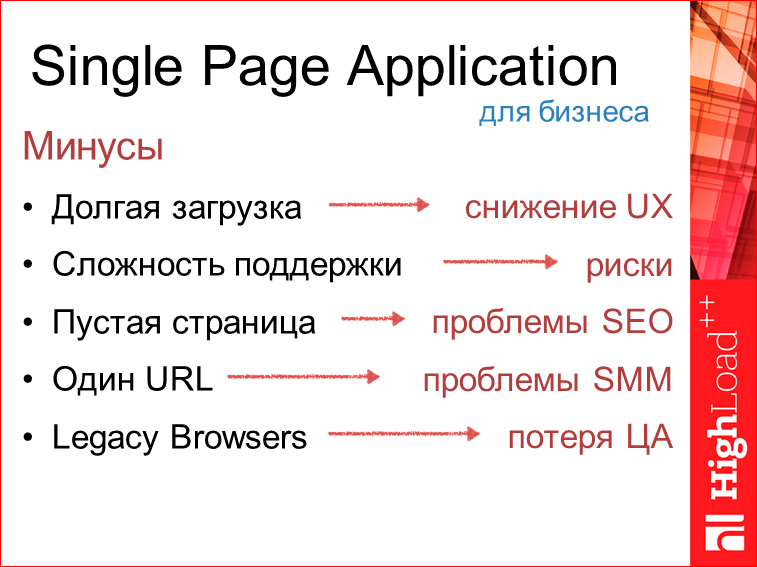

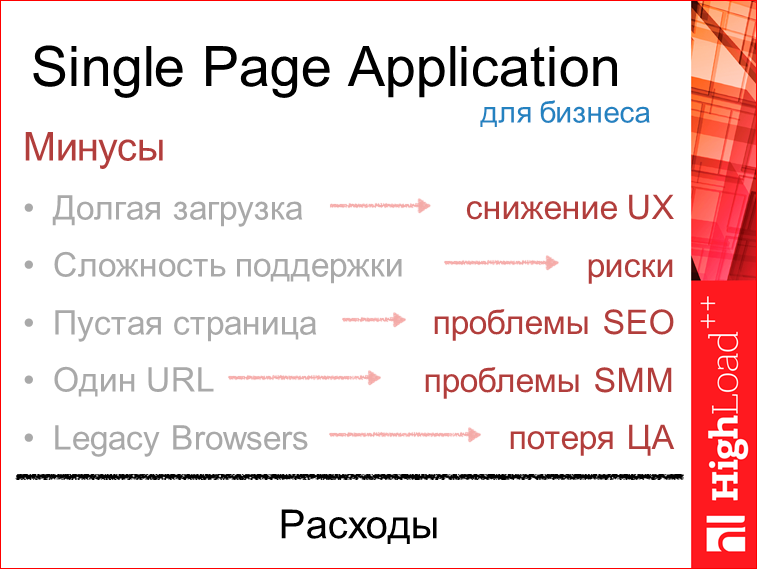

Are these downsides? For business, they are.

Long loading means lost UX, in which big money is invested. Difficulty of support is risk: the risk of missing the deadline and going over budget. A blank page means SEO problems. Many may object here: there are prerenderers, so our Angular application can easily be rendered in advance. But this is essentially a hack, and its capabilities are very limited. A single URL is an SMM problem: the user cannot easily share your page on social networks, because the link will always lead to the same page. And if the page does not render in an old browser and you have no polyfills, which many now drop, then a person on an old browser, in that 5%, will see a blank page. For business, all of these are costs.

What to do? We can now take the best of both worlds and build isomorphic applications.

In 2011, Charlie Robbins formulated it this way: by isomorphic we mean code in which any given line can be executed both on the server and on the client. With a few exceptions.

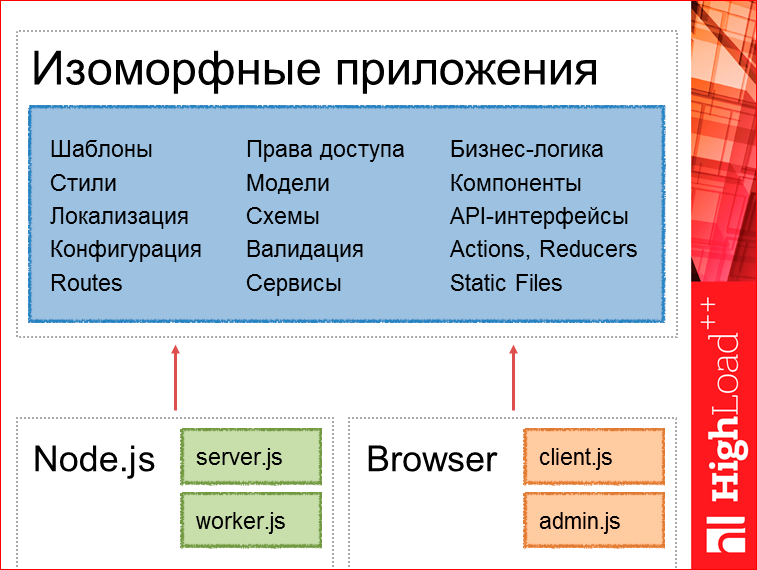

Here it is very important to focus and understand, because the question arises: how to divide and organize an isomorphic application? I tried to formulate it like this: templates, services, static files, Actions and Reducers are fully universal, while the small bootstrap pieces, such as the client file or the admin, that is, whatever initializes the router, can be separate: one for the server part and one for the client part.
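The split described above can be sketched in a few lines. This is a minimal illustration, not the speaker's project layout: the file names in the comments and the functions `counterReducer`, `renderCounter`, `serverEntry` and `clientEntry` are all hypothetical. The reducer and the template are universal, and only the two small entry points differ.

```javascript
// shared/reducer.js (hypothetical): universal, no browser or Node.js specifics.
function counterReducer(state = { count: 0 }, action) {
  switch (action.type) {
    case 'INCREMENT':
      return { count: state.count + 1 };
    default:
      return state;
  }
}

// shared/template.js (hypothetical): universal, a pure function of state.
function renderCounter(state) {
  return '<p>Count: ' + state.count + '</p>';
}

// Server entry (hypothetical): builds the state for a request and returns HTML.
function serverEntry() {
  const state = counterReducer(undefined, { type: 'INCREMENT' });
  return { html: renderCounter(state), state };
}

// Client entry (hypothetical): starts from the state the server produced.
function clientEntry(initialState) {
  const next = counterReducer(initialState, { type: 'INCREMENT' });
  return renderCounter(next);
}

const fromServer = serverEntry();
console.log(fromServer.html);               // <p>Count: 1</p>
console.log(clientEntry(fromServer.state)); // <p>Count: 2</p>
```

The point of the layout is that everything above the two entry functions can be imported unchanged by both sides.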

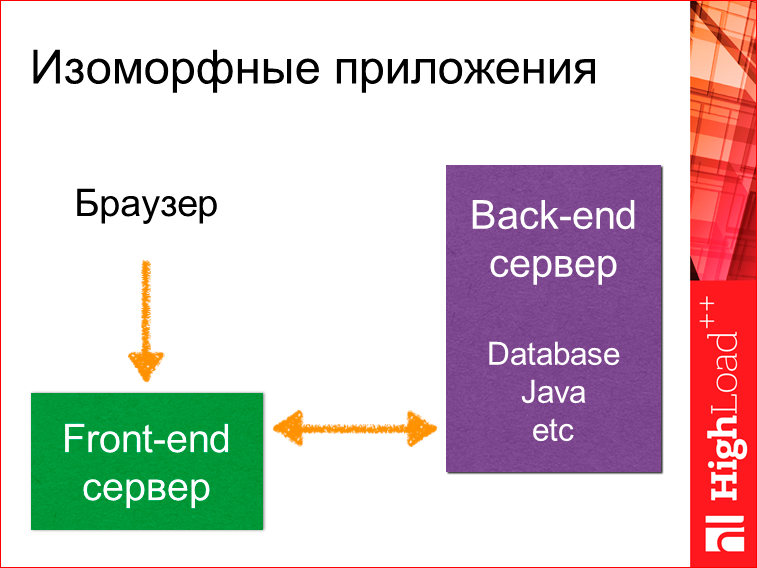

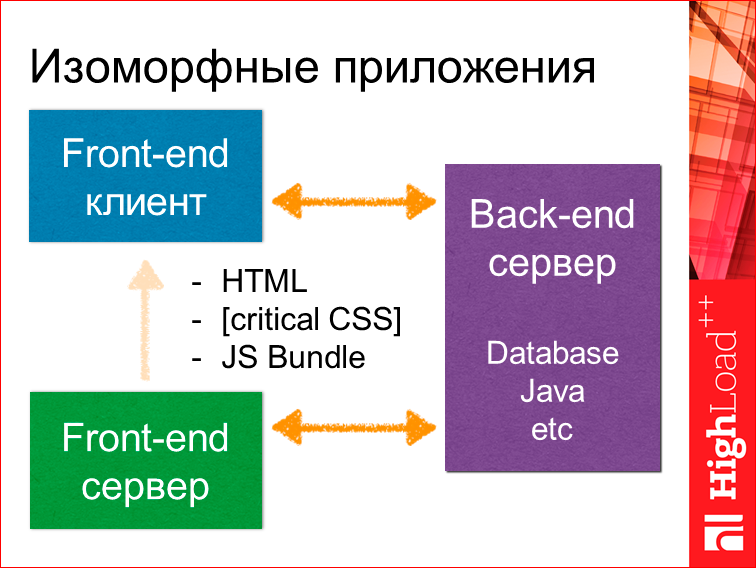

It all looks like this: there is a browser, there is a front-end server (it is a separate piece), and there is the good old back-end server. It may even be a database without any server. The browser accesses the front-end server, which can go to the old server or to the database to get the data.

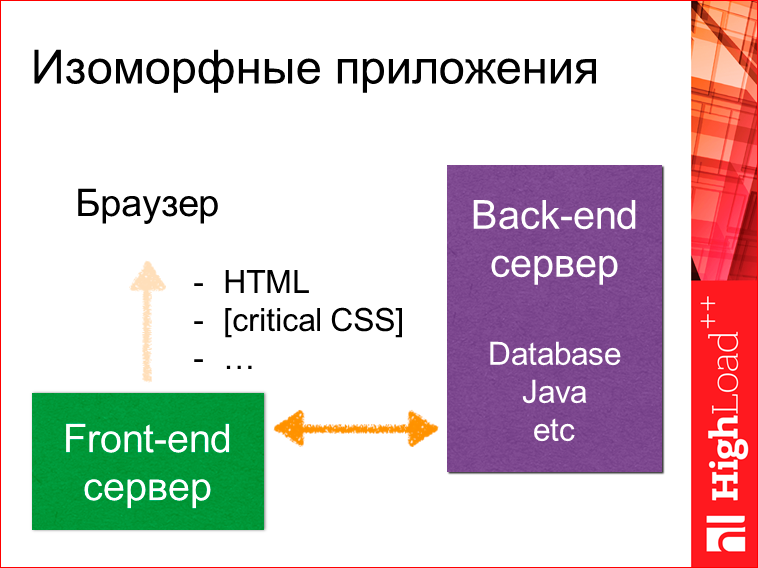

Next, it can generate the full HTML and render the critical CSS needed for this page only, rather than, as with an SPA, for the entire application, much of which you may never need.

At the end it sends the JS bundle. At this point the front-end client is assembled and started on the client side, and it can in turn keep communicating with the same back-end server, say, through a RESTful API.

So we have a unified execution environment, a common code base and full control: when the back-end server is written in, say, Java, it is hard for a front-end developer to influence it or push changes to it. And again, we have an ecosystem, i.e. the node package manager.

How to implement this in real life? Via Server-Side Rendering.

The point of Server-Side Rendering is that the entire application is built on React, for example, and rendered on the front-end server, on Node.js. We get instant display: we render the application and immediately get it as HTML, even before the JS loads. The user sees it instantly, on the very first request. And when the JS is loaded, React simply attaches event handlers, and all this happens quite quickly.

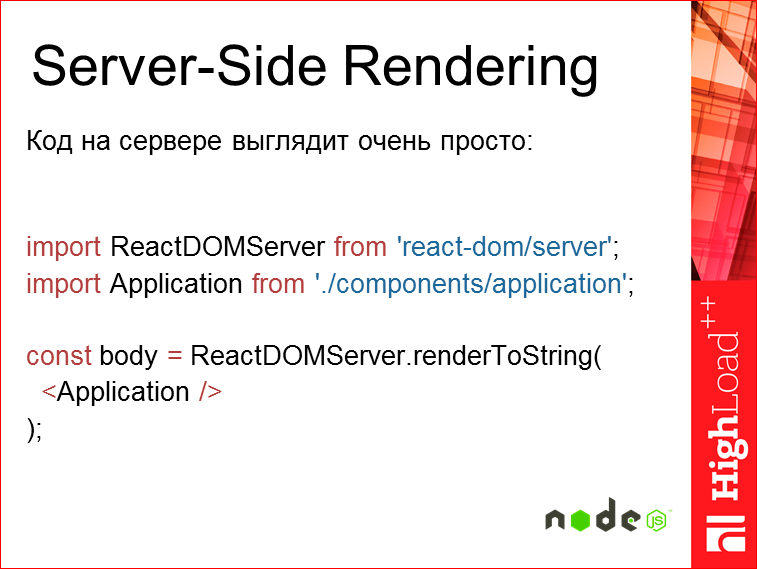

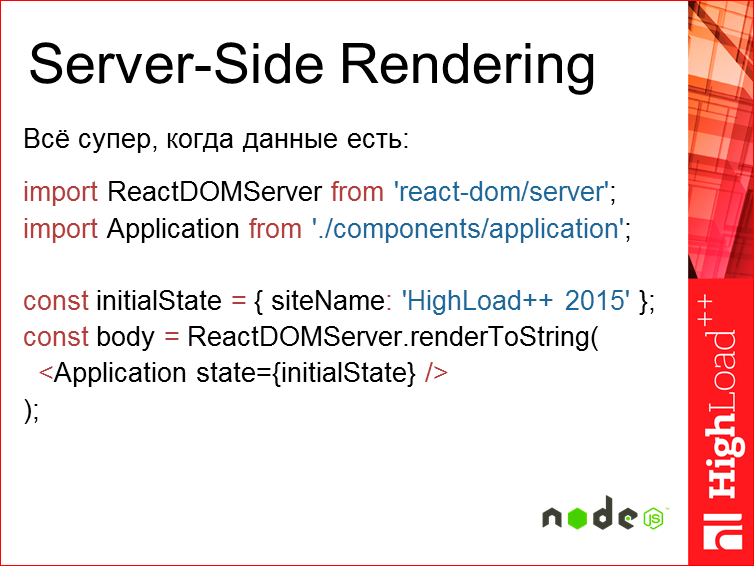

The code on the server side looks like this:

That is, we have a React renderer (this is already React 0.14) and our application. It is rendered to a string and sent to the client. Of course, all the routes are already wrapped inside the application: since the same code is executed, we already know the path and what needs to be displayed for it, and the right tree gets rendered.

In the end, what do we have? The user sees the page instantly, and there are no additional requests for data, because to display the page we have already collected all the data and put it where it is needed. The page can even work without JS. This is very important for those legacy browsers in that 5%. Some things may not work as nicely there as in the latest Chrome, but it will more or less work. We also get full URL navigation and meta tags, i.e. everything that lets us (this is especially important for product projects) share links to individual pages much more effectively than with a hash. And at the same time we keep all the capabilities of JS.
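A minimal sketch of what such server-side code could look like, written without React so that it runs on plain Node.js. `renderAppToString` is a hypothetical stand-in for the application; in a React 0.14 project that step would be a call to `renderToString` from `react-dom/server`. The `window.__INITIAL_STATE__` name and the `/bundle.js` path are assumptions for illustration.

```javascript
// Escape text before putting it into markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Stand-in for the application: the routes live inside it,
// so the same code picks the right tree on the server and on the client.
function renderAppToString(path, state) {
  const routes = {
    '/': () => '<h1>Home</h1>',
    '/profile': () => '<h1>Profile of ' + escapeHtml(state.userName) + '</h1>',
  };
  const route = routes[path];
  return route ? route() : '<h1>Not found</h1>';
}

// Full page: ready markup first, then the state, then the client bundle.
function renderPage(path, state) {
  const appHtml = renderAppToString(path, state);
  // Escape "<" so the JSON cannot close the script tag early.
  const stateJson = JSON.stringify(state).replace(/</g, '\\u003c');
  return '<!doctype html><html><body>' +
    '<div id="root">' + appHtml + '</div>' +
    '<script>window.__INITIAL_STATE__ = ' + stateJson + ';</script>' +
    '<script src="/bundle.js"></script>' +
    '</body></html>';
}

const page = renderPage('/profile', { userName: 'Denis' });
console.log(page);
```

The markup is readable before any script runs; the bundle then starts from the embedded state instead of requesting the data again.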

Part 2. Performance and scaling. Scaling here is not about handling load but about functional scaling: how we will grow.

With Server-Side Rendering, everything is great when we already have all the data. Everyone understands that Node.js is single-threaded, like ordinary JS, so everything is great when we have the data and can render it all in one thread.

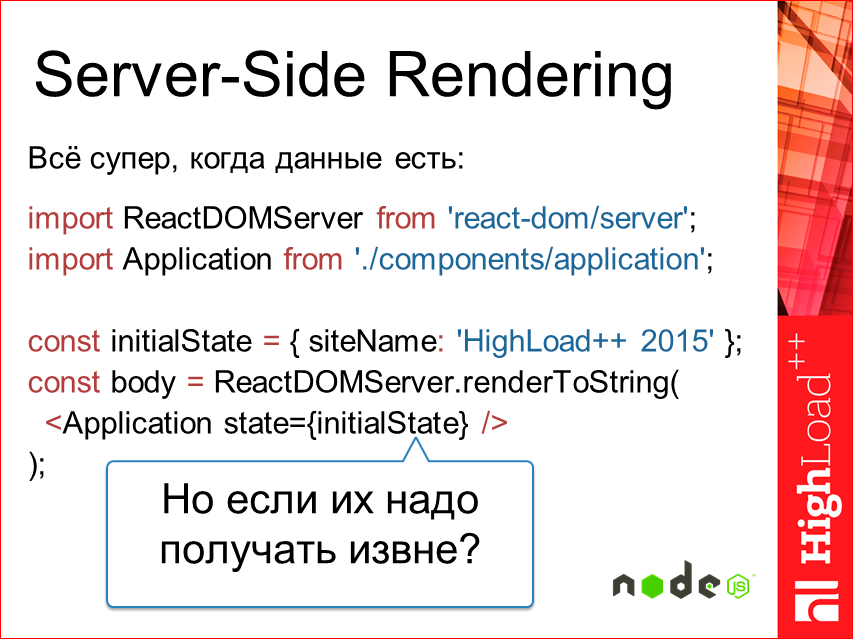

But what if we still need to fetch this data? We have to make a request, and at the same time we have no right to block the thread, the current event loop.

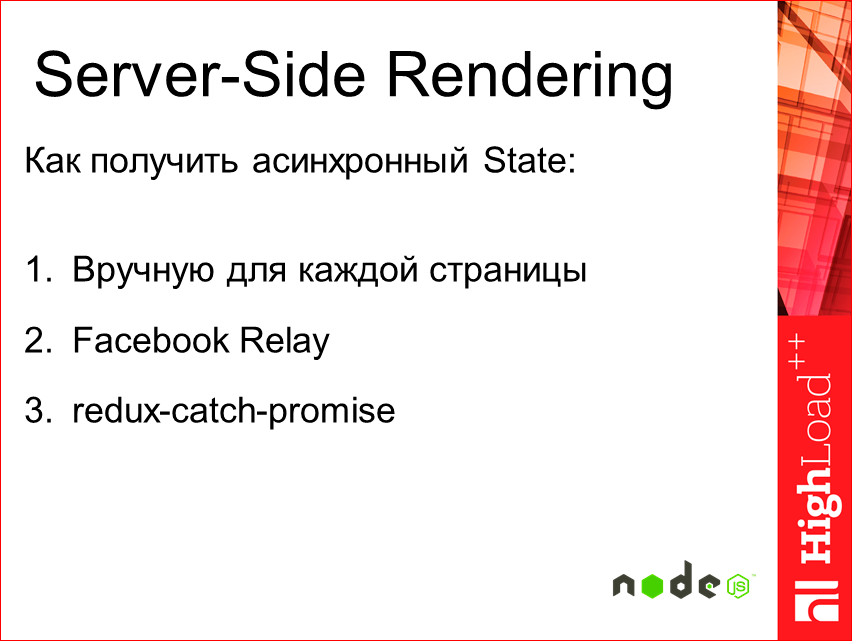

Here we have three main options: fetch the necessary data manually and then pass it in as the initial state; use Facebook Relay; or use redux-catch-promise, a plugin I developed.

Manually for each page: everything is simple enough, we get the state from the database and then just pass it to renderToString. Nothing else changes, but in this case we have to work out for each page how its data gets to the server. We can no longer just add a page; we have to extract the data somehow and introduce additional entities into the project. This is not always convenient.
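The manual approach can be sketched as one loader per page that resolves the state before rendering. Everything here is hypothetical: `fakeDb` stands in for a database or back-end API, `loaders` is the per-page table that has to be maintained by hand, and `renderToHtml` stands in for the `renderToString` call.

```javascript
// Hypothetical data source standing in for a database or back-end API.
const fakeDb = {
  users: { 42: { name: 'Denis' } },
  findUser(id) {
    // Returns a Promise, so the event loop is not blocked while waiting.
    return Promise.resolve(this.users[id] || null);
  },
};

// One loader per page: this table is the manual part.
const loaders = {
  '/': () => Promise.resolve({ title: 'Home' }),
  '/profile': (params) => fakeDb.findUser(params.id)
    .then((user) => ({ title: user ? user.name : 'Unknown user' })),
};

// Stand-in for rendering the application with the loaded state.
function renderToHtml(state) {
  return '<h1>' + state.title + '</h1>';
}

// Load the state first, render only when it has arrived.
function handleRequest(path, params) {
  const load = loaders[path];
  if (!load) {
    return Promise.resolve({ status: 404, body: '<h1>Not found</h1>' });
  }
  return load(params).then((state) => ({ status: 200, body: renderToHtml(state) }));
}

handleRequest('/profile', { id: 42 })
  .then((res) => console.log(res.status, res.body)); // 200 <h1>Denis</h1>
```

Every new page means a new entry in `loaders`, which is exactly the inconvenience described above.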

Facebook Relay, presented a few months ago, is a framework that lets you declare specific data requests in the components. It is quite an interesting story: you declaratively specify the request, what data you need and the conditions it is tied to. Relay collects all these requests and sends them to the server at once, and you get everything back at once. That is, requests are batched, which we talked about. The only problem is that this is not yet available server-side, but Facebook promises to implement it in the first quarter of 2016, and then everything will work. There is a link to the GitHub issue, you can follow it.

Redux-catch-promise is a small real-life project that I made while working on one project. What is Redux? I talked about it at MoscowJS. It is a state container for React. In essence, it is a replacement for Flux, and a much more successful one. There is a link to that talk. Redux-catch-promise is middleware, i.e. a plugin for Redux.

What does it do? We attach a callback that captures Promise actions in the stream, and render the application. While rendering a component we make a request, i.e. we dispatch an action to fetch the data, and it returns a Promise. We catch this Promise at the top level, where we render the application, and as a result we get a collection of Promises. Once they resolve, we render the application again with the obtained data. It turns out quite convenient, a kind of compromise between fetching manually and what is now implemented in Relay.

The GitHub link is here; there is an example, maybe a bit outdated, since everything changes very quickly.
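The render, catch, wait, render-again cycle can be sketched without Redux at all. This is not the redux-catch-promise API: `createStore`, `takeCaught`, `fetchUser` and `renderProfile` are hypothetical names, and the store mutates its state directly only to keep the example short.

```javascript
// Minimal store: holds data and records every Promise dispatched during a render.
function createStore() {
  const state = { user: null };
  const caught = [];
  return {
    getState: () => state,
    dispatch(action) {
      if (action && typeof action.then === 'function') {
        caught.push(action); // the "catch promise" step
      }
      return action;
    },
    // Hand over the caught Promises and reset the list.
    takeCaught: () => caught.splice(0, caught.length),
  };
}

// Hypothetical async action: fetches the user and writes it into the state.
function fetchUser(store) {
  return Promise.resolve({ name: 'Denis' }).then((user) => {
    store.getState().user = user;
  });
}

// Component stand-in: asks for data if it is missing, renders what it has.
function renderProfile(store) {
  const user = store.getState().user;
  if (!user) {
    store.dispatch(fetchUser(store));
    return '<p>Loading...</p>';
  }
  return '<p>' + user.name + '</p>';
}

// First pass collects Promises; after they resolve, render again with data.
function renderWithData(store) {
  const firstPass = renderProfile(store);
  const pending = store.takeCaught();
  if (pending.length === 0) return Promise.resolve(firstPass);
  return Promise.all(pending).then(() => renderProfile(store));
}

renderWithData(createStore()).then((html) => console.log(html)); // <p>Denis</p>
```

The components themselves declare what they need, so adding a page does not require a separate server-side loader.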

Performance is the second part.

When we started looking at how fast Server-Side Rendering is on a regular MacBook ...

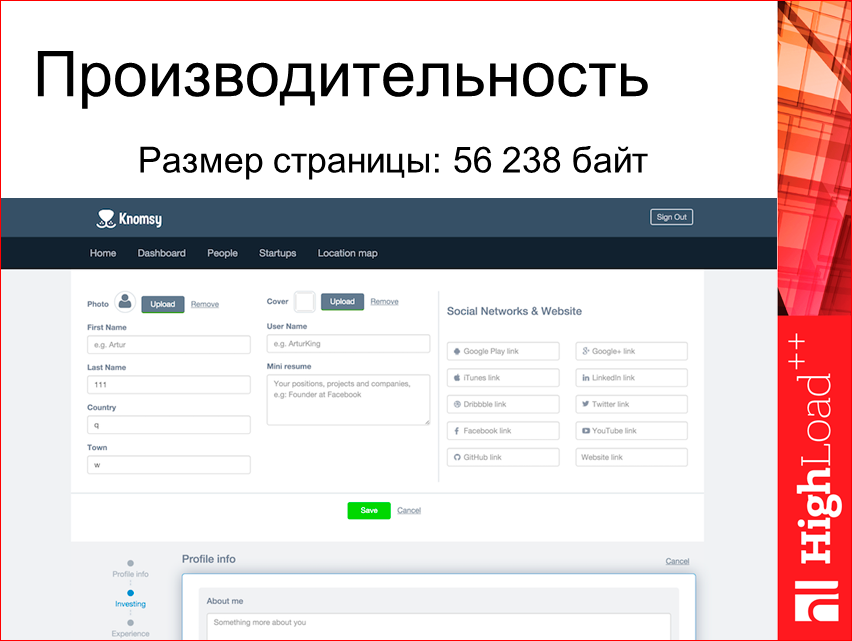

For context: the page is 56 KB and about 4 screens long, a small profile ...

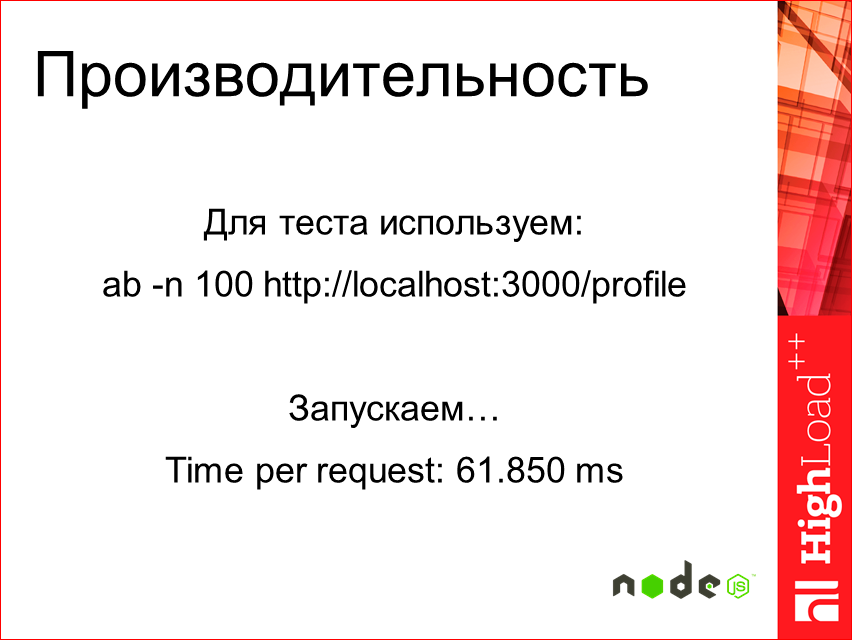

With all the data in place, we take ab for testing and run a full request. It came out at 61 ms.

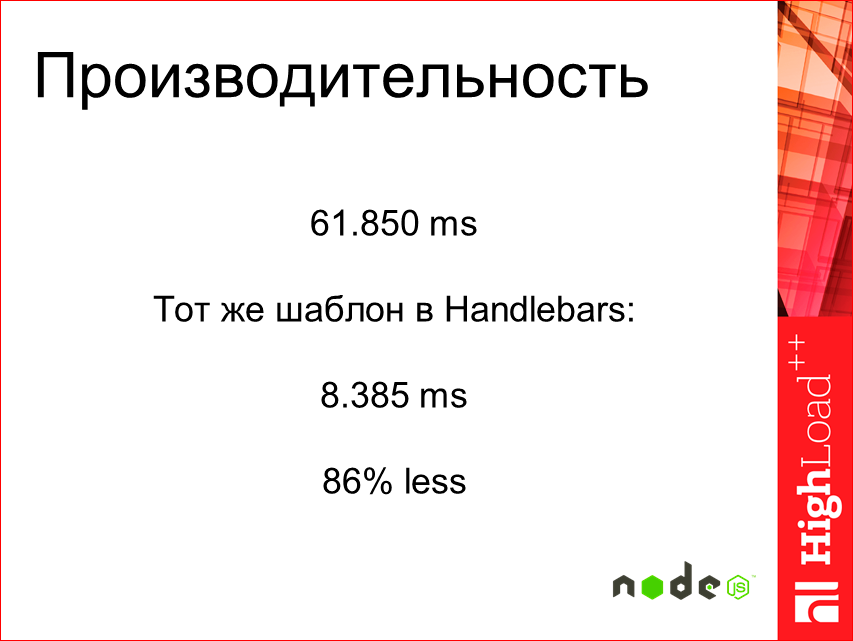

It is a little unclear whether that is a lot or a little. For comparison, if we render the same page with Handlebars, it takes 8 ms.

I think the difference is now more obvious, and it is not great.

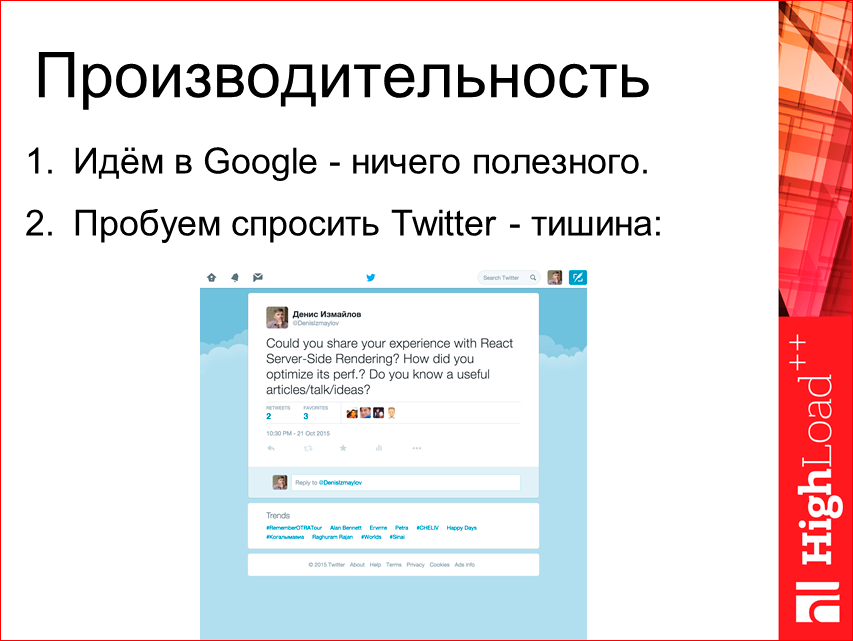

We start searching and go to Google: nothing concrete there. We try asking on Twitter: everyone is silent or retweets, but we find no answers.

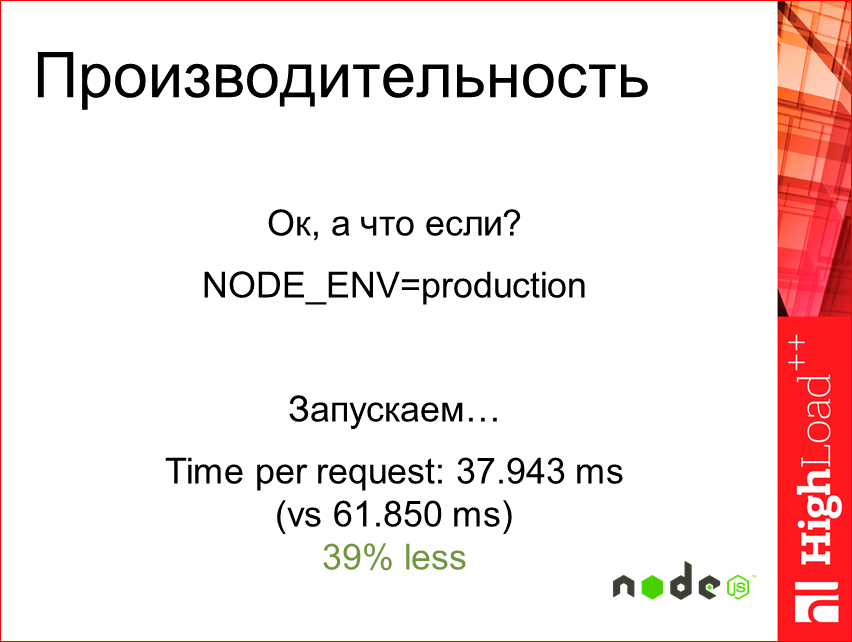

Meanwhile, let's try setting NODE_ENV to production.

We run it and, bang, it is almost twice as fast. Great, interesting. Better, but still not there.
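Why the switch helps can be shown with a small sketch. Development builds of React run extra checks and warnings that are gated on `process.env.NODE_ENV`; the functions `validateProps` and `renderTitle` below are hypothetical and only imitate that pattern, and `server.js` in the comment is an assumed file name.

```javascript
// Hypothetical development-only validation, similar in spirit to prop checks.
function validateProps(props) {
  if (typeof props.title !== 'string') {
    throw new TypeError('title must be a string');
  }
}

function renderTitle(props, env) {
  // Outside production every render pays for the extra checks;
  // with NODE_ENV=production this branch is skipped entirely.
  if (env !== 'production') {
    validateProps(props);
  }
  return '<h1>' + props.title + '</h1>';
}

// The server would be started as: NODE_ENV=production node server.js
console.log(renderTitle({ title: 'Profile' }, process.env.NODE_ENV));
```

The variable has to be set in the environment of the Node.js process that does the rendering, not only in the client build.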

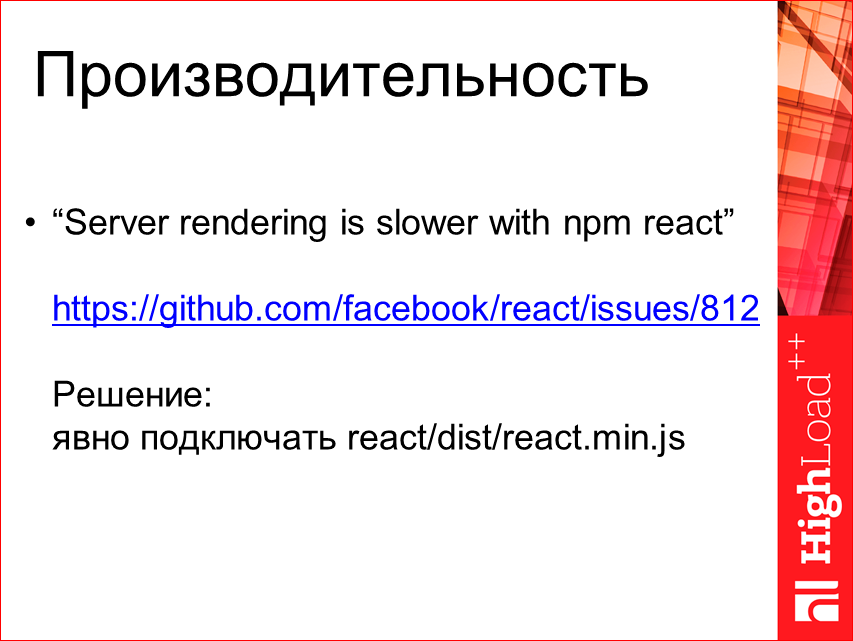

Let's go further and dig into GitHub issues.

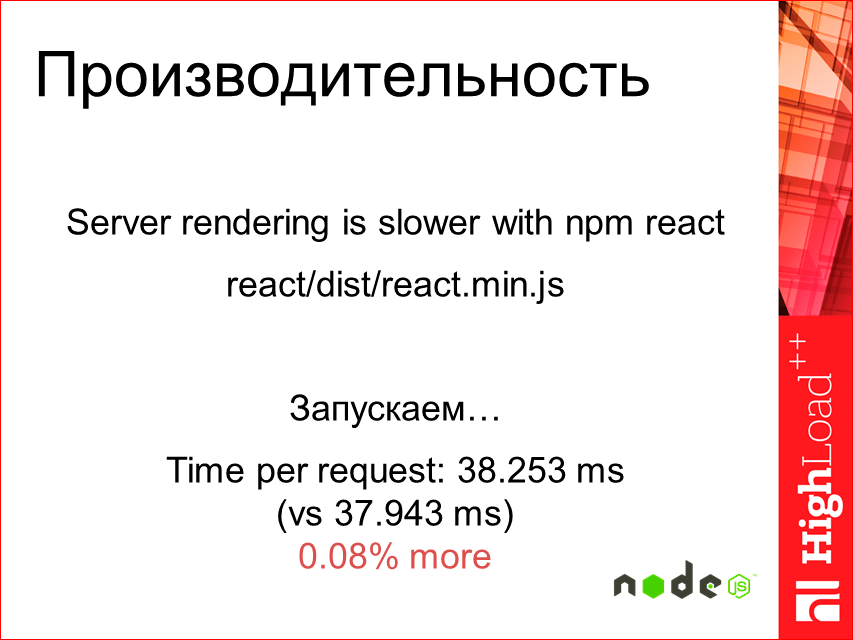

We find an interesting one: “Server rendering is slower with npm react”. The suggested solution is not to simply import react, but to require the react.min.js file explicitly.

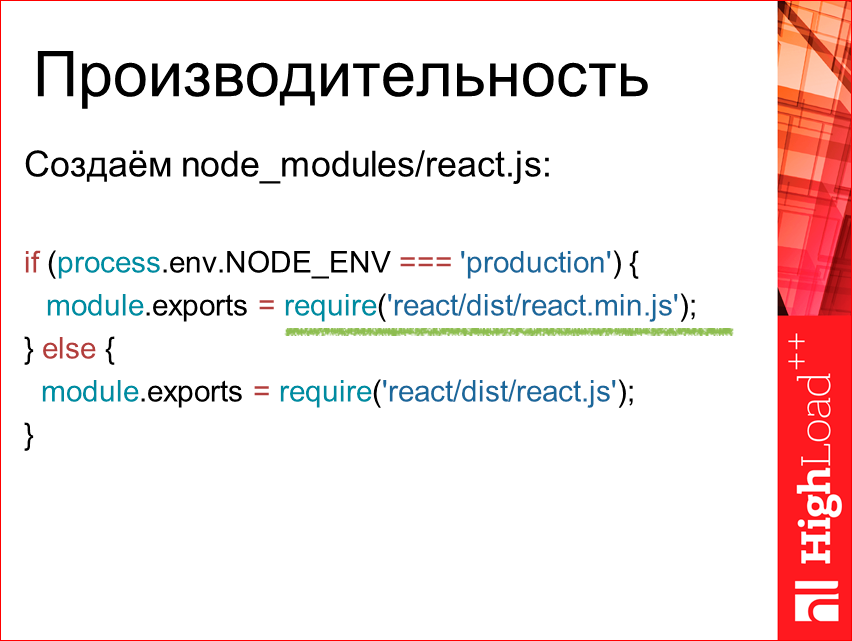

OK, let's try. Let's create a separate node_modules for the test, to keep the experiment clean. Here we explicitly use the min.js in production. And how did this change the result? We run it.

It turned out even slower.

Slide: the test failed.

As it turned out, that advice worked well for earlier versions of React, but it does not work for 0.14. The overall picture looks like this:

That is, 8 ms is Handlebars, and the rest is React: with production specified and, in purple, with react.min.js. Only 39% less.

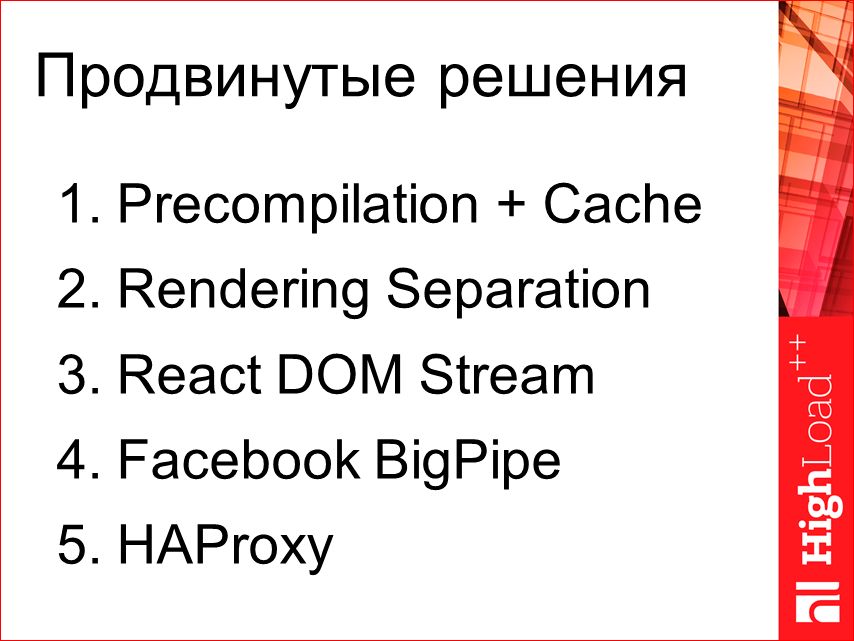

Further research turns up no more hacks for Server-Side Rendering. At the React level there is nowhere left to optimize, for now. Only fairly hardcore options remain.

Broadly, they are: precompilation and caching; split rendering; the recently released React DOM Stream; Facebook BigPipe, a very interesting technique that Facebook has long been using; and HAProxy, which is more of a DevOps topic for other sections of the talk.

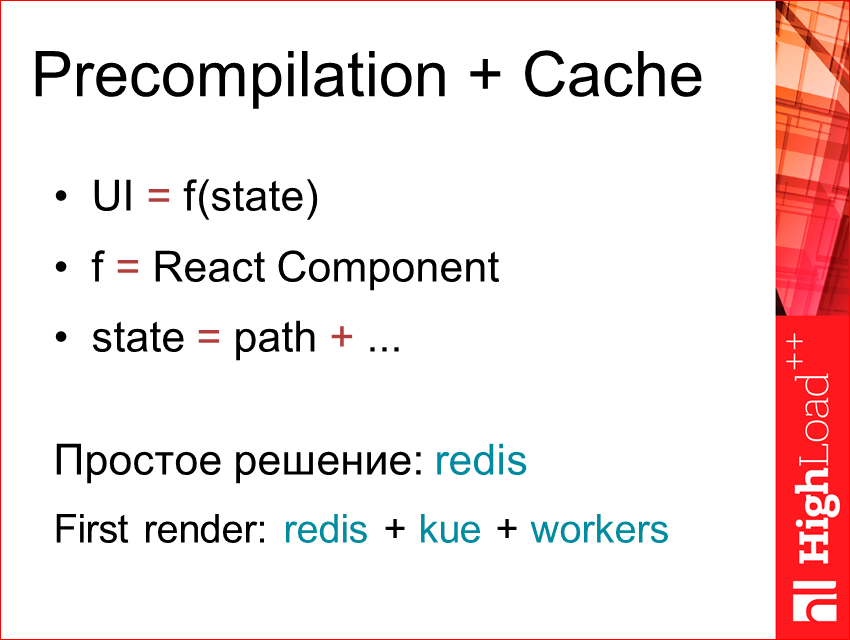

What is the essence of precompilation? We start from the idea that the UI is the result of a function of state, f(state), where f is a React component and state may be, say, just the path, if it is a site or a tree of pages, or it may be tied to something else. So we can quite easily cache the rendered HTML using that state as the key.

The simple solution is just to use redis. If we need a fast response right away, we can use redis plus queues plus workers. That is, we show some kind of placeholder, do a partial load, and workers render our component in the background on each request. So on the first render the page is returned instantly while everything is rendered in the background, and on the next request we can already return the cached components.
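The f(state) idea maps directly onto a cache lookup. In this sketch an in-memory `Map` stands in for redis, and `renderProfilePage`, `cacheKey` and `renderCached` are hypothetical names; the queue and workers are left out.

```javascript
// In-memory stand-in for redis: key -> rendered HTML.
const htmlCache = new Map();
let renderCount = 0;

// f(state): stand-in for an expensive server-side render.
function renderProfilePage(state) {
  renderCount += 1;
  return '<h1>' + state.path + '</h1>';
}

// The key must capture everything the output depends on; here only the path.
function cacheKey(state) {
  return 'page:' + state.path;
}

// Return cached HTML when the same state was rendered before.
function renderCached(state) {
  const key = cacheKey(state);
  if (htmlCache.has(key)) return htmlCache.get(key);
  const html = renderProfilePage(state);
  htmlCache.set(key, html);
  return html;
}

renderCached({ path: '/profile' });
renderCached({ path: '/profile' });
console.log(renderCount); // 1
```

The approach only works while the key really describes the whole state; anything user-specific that is missing from the key would be served to the wrong visitor.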

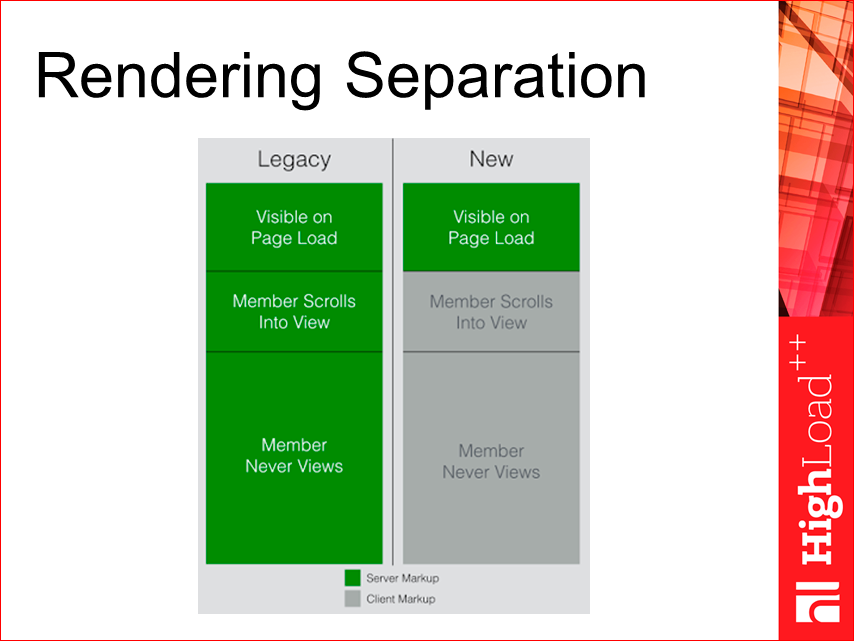

Rendering separation. LinkedIn did research and found that most of their pages (long ones especially) do not need full server rendering; it is enough to show only the first, visible part. Everything else can be added separately, for example by client JS. In most cases their users saw only the first part and nobody scrolled. So we can render the first part on the server and send it immediately, and load the next part programmatically via JS. This gives a fairly large gain in performance and resources.
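A rough sketch of that split, under the assumption that the page is a list of sections and the first few are visible without scrolling. The section list, the `data-deferred` attribute and both function names are hypothetical.

```javascript
// Hypothetical page sections, in the order they appear on the page.
const sections = [
  { id: 'header', render: () => '<header>Profile</header>' },
  { id: 'summary', render: () => '<section>Summary</section>' },
  { id: 'feed', render: () => '<section>Feed</section>' },
  { id: 'comments', render: () => '<section>Comments</section>' },
];

// Render the first `visibleCount` sections on the server and
// leave empty placeholders for the client to fill in later.
function renderAboveTheFold(allSections, visibleCount) {
  return allSections.map((section, index) => {
    if (index < visibleCount) {
      return '<div id="' + section.id + '">' + section.render() + '</div>';
    }
    return '<div id="' + section.id + '" data-deferred="true"></div>';
  }).join('');
}

// The client bundle would render these sections after it starts.
function deferredIds(allSections, visibleCount) {
  return allSections.slice(visibleCount).map((section) => section.id);
}

console.log(renderAboveTheFold(sections, 2));
console.log(deferredIds(sections, 2)); // [ 'feed', 'comments' ]
```

The server does the rendering work only for what the visitor sees first; the deferred sections cost nothing if nobody scrolls to them.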

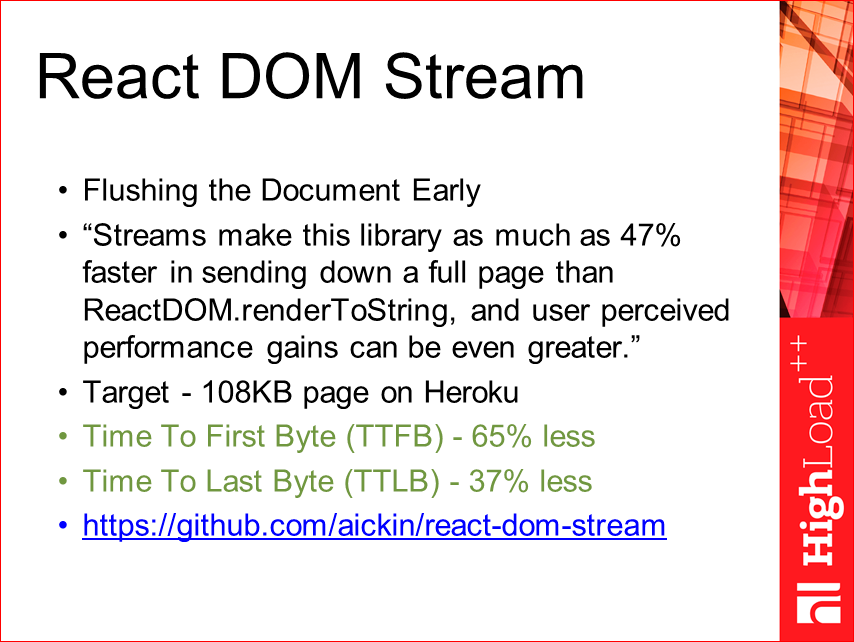

React DOM Stream is the next option; it is a recently published project. Its author (aickin) forked React and implemented early flushing, as in ordinary HTTP. Normally you render and render, and only then send everything at once. Here he managed to make the components drop into the server stream as soon as they are rendered, rather than as one finished HTML string. This gives quite an interesting performance effect: time to first byte is reduced by 65%, i.e. more than halved, and time to last byte by 37%. If we combine these two approaches, it turns out quite well ... The only downside is that React itself had to be slightly modified, so the project uses a fork rather than the official React. There is a discussion about this going on now, you can follow it; it should be ready soon.
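The difference between buffered and streamed output can be illustrated with a generator. This is not the react-dom-stream API; `renderBuffered`, `renderStreamed` and `sendStreamed` are hypothetical, and the `write` callback stands for `response.write` of a Node.js HTTP response.

```javascript
// Stand-in component tree: each part becomes one HTML chunk.
const parts = ['<header>Top</header>', '<main>Content</main>', '<footer>End</footer>'];

// Buffered rendering: nothing is sent until everything is rendered.
function renderBuffered(components) {
  return components.join('');
}

// Streamed rendering: each chunk is handed over as soon as it is ready.
function* renderStreamed(components) {
  for (const html of components) {
    yield html;
  }
}

// Push every chunk to the response as it arrives; returns the chunk count.
function sendStreamed(components, write) {
  let chunks = 0;
  for (const chunk of renderStreamed(components)) {
    write(chunk);
    chunks += 1;
  }
  return chunks;
}

const sent = [];
sendStreamed(parts, (chunk) => sent.push(chunk));
console.log(sent.length);                             // 3
console.log(sent.join('') === renderBuffered(parts)); // true
```

The total output is identical; what changes is that the browser receives the first chunk before the last one has been rendered, which is where the time-to-first-byte gain comes from.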

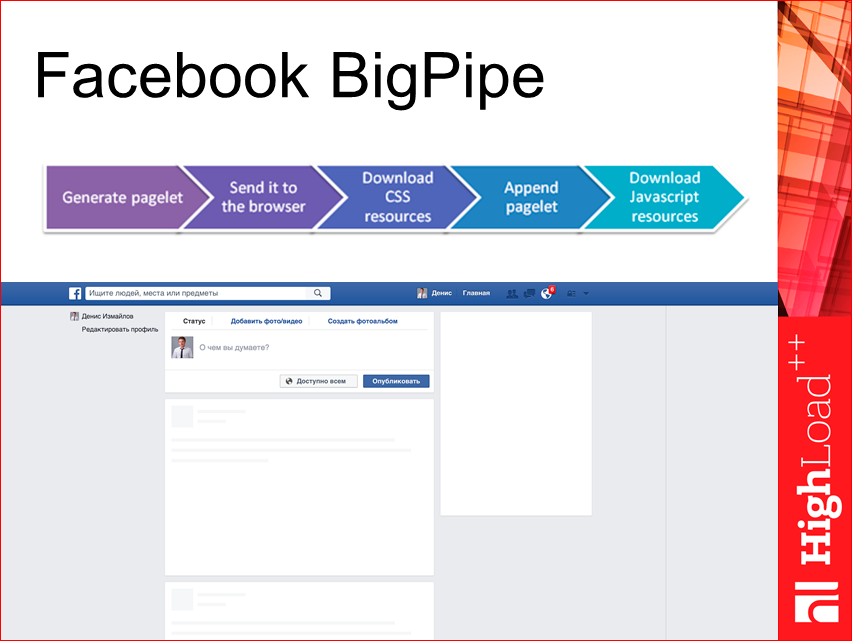

Facebook BigPipe. A very cool technique. They built it a long time ago, 2-3 years ago. The page is assembled as it loads: everything loads in parallel, which also gives us resilience to errors. The point is this: the page has certain parts that we can single out and load progressively. While the page is loading we emit the references to the JS and CSS those blocks need, and the data itself arrives through a kind of JSON pipe.

As a result, on the first render we already see an almost complete page. We can even show the important things first, as Yandex does, for example. In Yandex, when you type a search phrase and press Enter, the top toolbar with your query is displayed first, and only then are the results loaded. The process is shown here.

HAProxy. This is more about DevOps, but I think everyone now has access to a DevOps specialist, or you can configure something yourself. The point is that in production it is better to run several nodes and balance between them.

In conclusion, I want to share some useful materials:
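The flow can be sketched as a skeleton that is flushed at once, followed by blocks that are flushed in the order their data becomes ready. This only illustrates the idea and is not Facebook's implementation: `delay`, `pagelets`, `renderBigPipe` and the client-side `fillPagelet` helper are all hypothetical.

```javascript
// Resolve with `value` after `ms` milliseconds, imitating a slow data source.
function delay(ms, value) {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

// Hypothetical page blocks: the toolbar is fast, the results are slow.
const pagelets = [
  { id: 'results', load: () => delay(30, '<ul><li>Result</li></ul>') },
  { id: 'toolbar', load: () => delay(5, '<input value="query">') },
];

// Flush the skeleton at once, then each block as soon as its data is ready.
function renderBigPipe(items, write) {
  write(items.map((p) => '<div id="' + p.id + '"></div>').join(''));
  const jobs = items.map((p) => p.load().then((html) => {
    // Escape "<" so the payload cannot close the script tag early.
    const payload = JSON.stringify(html).replace(/</g, '\\u003c');
    // fillPagelet is an assumed client-side helper that inserts the HTML.
    write('<script>fillPagelet(' + JSON.stringify(p.id) + ', ' + payload + ');</script>');
  }));
  return Promise.all(jobs).then(() => write('<!-- done -->'));
}

const output = [];
renderBigPipe(pagelets, (chunk) => output.push(chunk)).then(() => {
  console.log(output.length); // 4
});
```

The toolbar reaches the browser before the results even though it is listed second, which matches the search page behaviour described above.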

- Supercharging page load (100 Days of Google Dev) https://youtu.be/d5_6yHixpsQ

- Making Netflix.com Faster http://techblog.netflix.com/2015/08/making-netflixcom-faster.html

- New technologies for the new LinkedIn home page https://engineering.linkedin.com/frontend/new-technologies-new-linkedin-home-page

- Improving performance on twitter.com https://blog.twitter.com/2012/improving-performance-on-twittercom

- Scaling Isomorphic Javascript Code https://blog.nodejitsu.com/scaling-isomorphic-javascript-code/

- From AngularJS to React: The Isomorphic Way https://blog.risingstack.com/from-angularjs-to-react-the-isomorphic-way/

- Isomorphic JavaScript: The Future of Web Apps http://nerds.airbnb.com/isomorphic-javascript-future-web-apps/

- React server side rendering performance http://www.slideshare.net/nickdreckshage/react-meetup

- The Lost Art of Progressive HTML Rendering https://blog.codinghorror.com/the-lost-art-of-progressive-html-rendering/

And as a recommendation, join the MoscowJS community and stay tuned.

Something interesting is always happening there.

The most important thing is to improve your English, come to English-language talks and "do not read Soviet newspapers." Read the originals and technical blogs. Companies like LinkedIn, Facebook and Netflix write very relevant things. You can always see all these announcements on Twitter. Twitter and GitHub are now probably the main tools for keeping up with what is happening in the frontend world.

I want to give two quotes that I really liked in this regard:

"Most of the problems of algorithms can be solved by changing the data structure." Andrey Sitnik said this in one of the RadioJS episodes. And from one of the videos: “Changes is our work”, i.e. change is our job. Jake from Google said that.

I hope I have now answered the question of why the classic Single Page Application should be abandoned and where it is worth moving. It is actually not as difficult as it seems, and I urge you to keep moving in this direction.

Contacts

» DenisIzmaylov

» Github

This is a transcript of one of the best talks at HighLoad++, the conference for developers of high-load systems, from the special "Frontend performance" section.


The main news is that we have begun preparations for the spring festival "Russian Internet Technologies", which includes eight conferences, among them Frontend Conf. This is a professional conference for developers of high-load systems. And Denis, by the way, is on its Program Committee.

Source: https://habr.com/ru/post/319038/
