Training a TensorFlow neural network in the cloud with Google Cloud ML and Cloud Shell

In the previous article, we discussed how to train a chat bot based on a recurrent neural network on an AWS GPU instance. Today we will see how easy it is to train the same network using Google Cloud ML and Google Cloud Shell. Thanks to Google Cloud Shell, you will need to do almost nothing on your local computer! By the way, the network from the previous article is only an example; you can safely take any other network that uses TensorFlow.

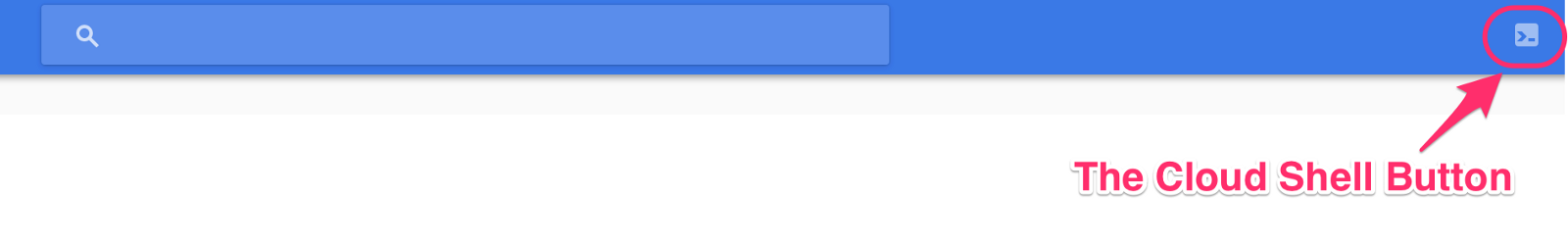

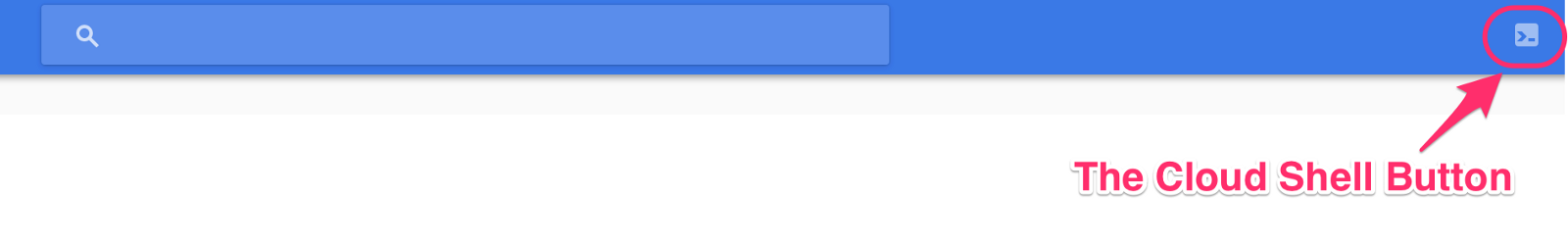

In place of a preface

Special thanks to my patrons who made this article possible: Aleksandr Shepeliev, Sergei Ten, Alexey Polietaiev, Nikita Penzin, Karnaukhov Andrey, Matveev Evgeny, Anton Potemkin.

I tried to make the article a self-contained guide, but I strongly advise you to follow each link in order to understand what is happening under the hood, and not just copy and paste the commands step by step.

Prerequisites

There is only one requirement for following all the steps described in this article: a Google Cloud account with billing enabled, since we will use paid functionality.

Let's begin our journey with answers to two main questions:

- What is Google Cloud ML?

- What is Google Cloud Shell?

What is Google Cloud ML?

The official definition says the following:

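Google Cloud Machine Learning brings the power and flexibility of TensorFlow to the cloud. You can use its components to select and extract features from your data, train your machine learning models, and get predictions using the managed resources of Google Cloud Platform.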

I do not know about you, but this definition tells me very little. Let me explain what Google Cloud ML lets you do:

- deploy your code to a machine in the cloud that has everything needed to train a TensorFlow model;

- give your code on that machine access to Google Cloud Storage buckets;

- run your training code;

- store a model in the cloud;

- use a trained model to make predictions on new data.

This article focuses on the first 3 points. Later, in subsequent articles, we will look at how to deploy a trained model in Google Cloud ML and how to get predictions from the model hosted in the cloud.

What is Google Cloud Shell?

And again, the official definition:

Google Cloud Shell is a shell environment for Google Cloud Platform.

And again, I'll add a few details. Google Cloud Shell is:

- a cloud instance (of unknown type) provided to you,

- with Debian OS on board,

- whose shell you can access via the Web,

- with everything you need to work with Google Cloud already installed.

Yes, you understood correctly: you get a completely free instance with shell access, which you can reach from your Web console.

But nothing comes for free, and Cloud Shell has some annoying limitations: you can access it only through the Web console, not via ssh (I personally don't like to use any terminal other than iTerm). I asked on StackOverflow whether Cloud Shell can be used via ssh, and it looks like it cannot. But at least there is a way to make your life easier: a special Chrome plugin that, at a minimum, gives you normal key bindings, so that the terminal behaves like a terminal and not like a browser window =)

More information about Cloud Shell features can be found here.

The steps we have to go through:

- Preparing the Cloud Shell environment for training

- Cloud Storage preparation

- Preparation of data for training

- Preparation of the training script

- Testing the training process locally

- Training

- Conversation with the bot

Preparing the Cloud Shell environment for training

It's time to open Cloud Shell. If you haven't done this before, it's very simple: open the console at console.cloud.google.com and click the Shell icon in the upper right corner.

In case of any problems, here is a short guide that describes in detail how to start the console.

All subsequent examples are run in Cloud Shell.

In addition, if this is your first time using Cloud ML with Cloud Shell, you need to install all the necessary dependencies. To do this, run just one line right in the shell:

    curl https://raw.githubusercontent.com/GoogleCloudPlatform/cloudml-samples/master/tools/setup_cloud_shell.sh | bash

It will install all the necessary packages.

If at this stage the installation fails on pillow with an error like this:

    Command "/usr/bin/python -u -c "import setuptools, tokenize;__file__='/tmp/pip-build-urImDr/olefile/setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" build_ext --disable-jpeg install --record /tmp/pip-GHGxvS-record/install-record.txt --single-version-externally-managed --compile --user --prefix=" failed with error code 1 in /tmp/pip-build-urImDr/olefile/

then a manual installation helps:

    pip install --user --upgrade pillow

Thanks to @Sp0tted_0wl for the tip. Next, update the PATH variable:

    export PATH=${HOME}/.local/bin:${PATH}

If this is the first time you use Cloud ML with the current project, you need to initialize the ML module. This can be done in one line:

    ➜ gcloud beta ml init-project
    Cloud ML needs to add its service accounts to your project (ml-lab-123456) as Editors. This will enable Cloud Machine Learning to access resources in your project when running your training and prediction jobs.
    Do you want to continue (Y/n)?
    Added serviceAccount:cloud-ml-service@ml-lab-123456-1234a.iam.gserviceaccount.com as an Editor to project 'ml-lab-123456'.

To check that everything was installed successfully, run one simple command:

    ➜ curl https://raw.githubusercontent.com/GoogleCloudPlatform/cloudml-samples/master/tools/check_environment.py | python
    ...
    You are using pip version 8.1.1, however version 9.0.1 is available.
    You should consider upgrading via the 'pip install --upgrade pip' command.
    You are using pip version 8.1.1, however version 9.0.1 is available.
    You should consider upgrading via the 'pip install --upgrade pip' command.
    Your active configuration is: [cloudshell-12345]
    Success! Your environment is configured

Now it's time to decide which Google Cloud project you will use for training the network. I have a dedicated project for all my ML experiments. This is up to you, but here are the commands I use to switch between projects:

    ➜ gprojects
    PROJECT_ID     NAME    PROJECT_NUMBER
    ml-lab-123456  ml-lab  123456789012
    ...
    ➜ gproject ml-lab-123456
    Updated property [core/project].

If you want to use the same magic, add the following to your .bashrc / .zshrc / other rc file:

    function gproject() {
      gcloud config set project $1
    }

    function gprojects() {
      gcloud projects list
    }

All right, if you have made it this far, Cloud Shell is prepared and switched to the right project, so we can move on to the next step with a clear conscience.
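The rc-file additions from this section can also be collected into one snippet. The following is only a sketch, not my exact setup: `path_prepend` is a hypothetical helper name, and the argument check in `gproject` is an addition; the `gcloud` calls themselves are the ones shown above.

```shell
# Hypothetical helper: prepend a directory to PATH only if it is not there yet,
# so that re-sourcing the rc file does not keep growing PATH.
path_prepend() {
    case ":${PATH}:" in
        *":$1:"*) ;;                 # already present, nothing to do
        *) PATH="$1:${PATH}" ;;
    esac
    export PATH
}

# Same command as above, plus a usage check (an addition of this sketch).
gproject() {
    if [ -z "${1:-}" ]; then
        echo "usage: gproject PROJECT_ID" >&2
        return 1
    fi
    gcloud config set project "$1"
}

gprojects() {
    gcloud projects list
}

path_prepend "${HOME}/.local/bin"
```

The function syntax without the `function` keyword works in both bash and zsh, so the same lines can go into either rc file.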

Cloud Storage Preparation

First of all, why do we need cloud storage at all? Since we will be training the model in the cloud, the training process will not have any access to the local file system of your current machine. This means that all the necessary source data must be stored somewhere in the cloud. The trained model also has to be stored somewhere. That somewhere cannot be the machine on which the training runs, because you have no access to it; and it cannot be your own machine, because the training process has no access to it. This vicious circle is broken by introducing a new link: cloud storage for the data.

Let's create a new cloud bucket that will be used for training:

    ➜ PROJECT_NAME=chatbot_generic
    ➜ TRAIN_BUCKET=gs://${PROJECT_NAME}
    ➜ gsutil mb ${TRAIN_BUCKET}
    Creating gs://chatbot_generic/...

Here I have to tell you something. If you look at the official manual, you will find the following text there:

Warning: You must specify a region (like us-central1) for your bucket, not a multi-region location (like us).

However, if you follow this advice and create a regional bucket, the script will not be able to write anything into it 0_o (no jokes, please: the bug has already been reported).

In an ideal world where everything works, setting the region matters a lot: it should match the region that will be used for training. Otherwise, training speed can suffer.
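For reference, once the bug mentioned above is fixed, a regional bucket could be created roughly as follows. This is a sketch under assumptions: `make_bucket` is a hypothetical wrapper, while `-l` is the `gsutil mb` flag for the bucket location. With the region omitted it falls back to the plain `gsutil mb` call used in this article.

```shell
# Hypothetical wrapper around `gsutil mb`.
# $1 = bucket URL (for example gs://chatbot_generic)
# $2 = optional region (for example us-central1)
make_bucket() {
    if [ -n "${2:-}" ]; then
        gsutil mb -l "$2" "$1"      # regional bucket, same region as training
    else
        gsutil mb "$1"              # default location, as used in this article
    fi
}
```

A call would look like `make_bucket "${TRAIN_BUCKET}" us-central1`.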

Now we are ready to prepare input data for the upcoming training.

Preparation of data for training

If you are using your own network, you can probably skip this part, or read only the bit that describes where the data should be uploaded.

This time (compared to the previous article) we will use a slightly modified version of the script that prepares the input data. I encourage you to read how the script works in the readme file. For now, you can prepare the input data in the following way (you can replace "td src" with "mkdir src; cd src"):

    ➜ td src
    ➜ ~/src$ git clone https://github.com/b0noI/dialog_converter.git
    Cloning into 'dialog_converter'...
    remote: Counting objects: 63, done.
    remote: Compressing objects: 100% (4/4), done.
    remote: Total 63 (delta 0), reused 0 (delta 0), pack-reused 59
    Unpacking objects: 100% (63/63), done.
    Checking connectivity... done.
    ➜ ~/src$ cd dialog_converter/
    ➜ ~/src/dialog_converter$ git checkout converter_that_produces_test_data_as_well_as_train_data
    Branch converter_that_produces_test_data_as_well_as_train_data set up to track remote branch converter_that_produces_test_data_as_well_as_train_data from origin.
    Switched to a new branch 'converter_that_produces_test_data_as_well_as_train_data'
    ➜ ~/src/dialog_converter$ python converter.py
    ➜ ~/src/dialog_converter$ ls
    converter.py  LICENSE  movie_lines.txt  README.md  test.a  test.b  train.a  train.b

Looking at the code above, you may ask: what is "td"? It is just a short form of "to dir", and it is one of the commands I use most often. To use this magic yourself, add the following to your rc file:

    function td() {
      mkdir $1
      cd $1
    }

This time we will improve the quality of our model by dividing the data into 2 samples: a training sample and a test sample. That is why we see four files instead of two, as we did last time.
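To make the train/test split concrete, here is a minimal sketch of how two line-aligned files could be divided into the four files listed above. It is not how converter.py actually does it (see the readme for that); `split_pairs`, its arguments, and the choice of taking the last lines as the test set are all assumptions made for illustration.

```shell
# Hypothetical illustration, not the logic of converter.py.
# $1 = file with questions, $2 = file with answers (same number of lines),
# $3 = how many trailing lines go into the test set.
split_pairs() {
    total=$(wc -l < "$1")
    train=$((total - $3))
    head -n "$train" "$1" > train.a   # first lines: training questions
    head -n "$train" "$2" > train.b   # first lines: training answers
    tail -n "$3" "$1" > test.a        # last lines: test questions
    tail -n "$3" "$2" > test.b        # last lines: test answers
}
```

The important property is that line N of the ".a" file and line N of the ".b" file always end up in the same sample, so question/answer pairs stay aligned.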

Great, we finally have the data, so let's upload it to the bucket:

    ➜ ~/src/dialog_converter$ gsutil cp test.* ${TRAIN_BUCKET}/input
    Copying file://test.a [Content-Type=application/octet-stream]...
    Copying file://test.b [Content-Type=chemical/x-molconn-Z]...
    \ [2 files][ 2.8 MiB/ 2.8 MiB] 0.0 B/s
    Operation completed over 2 objects/2.8 MiB.
    ➜ ~/src/dialog_converter$ gsutil cp train.* ${TRAIN_BUCKET}/input
    Copying file://train.a [Content-Type=application/octet-stream]...
    Copying file://train.b [Content-Type=chemical/x-molconn-Z]...
    - [2 files][ 11.0 MiB/ 11.0 MiB]
    Operation completed over 2 objects/11.0 MiB.
    ➜ ~/src/dialog_converter$ gsutil ls ${TRAIN_BUCKET}
    gs://chatbot_generic/input/
    ➜ ~/src/dialog_converter$ gsutil ls ${TRAIN_BUCKET}/input
    gs://chatbot_generic/input/test.a
    gs://chatbot_generic/input/test.b
    gs://chatbot_generic/input/train.a
    gs://chatbot_generic/input/train.b

Preparation of the training script

Now we can prepare the training script. We will use translate.py. However, its current implementation cannot be used with Cloud ML, so a little refactoring is necessary. As usual, I created a feature request and prepared a branch with all the necessary changes. So let's start by cloning it:

    ➜ ~/src/dialog_converter$ cd ..
    ➜ ~/src$ git clone https://github.com/b0noI/models.git
    Cloning into 'models'...
    remote: Counting objects: 1813, done.
    remote: Compressing objects: 100% (39/39), done.
    remote: Total 1813 (delta 24), reused 0 (delta 0), pack-reused 1774
    Receiving objects: 100% (1813/1813), 49.34 MiB | 39.19 MiB/s, done.
    Resolving deltas: 100% (742/742), done.
    Checking connectivity... done.
    ➜ ~/src$ cd models/
    ➜ ~/src/models$ git checkout translate_tutorial_supports_google_cloud_ml
    Branch translate_tutorial_supports_google_cloud_ml set up to track remote branch translate_tutorial_supports_google_cloud_ml from origin.
    Switched to a new branch 'translate_tutorial_supports_google_cloud_ml'
    ➜ ~/src/models$ cd tutorials/rnn/translate/

Please note that we use a non-master branch!
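Since forgetting the checkout is an easy mistake, the clone and the branch switch can be combined into one step. A small sketch: `clone_branch` is a hypothetical helper name, while `--branch` and `--single-branch` are standard `git clone` options.

```shell
# Hypothetical helper: clone a repository with the given branch checked out.
# $1 = repository URL, $2 = branch name
clone_branch() {
    git clone --branch "$2" --single-branch "$1"
}
```

For this article the call would be `clone_branch https://github.com/b0noI/models.git translate_tutorial_supports_google_cloud_ml`.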

Testing the training process locally

Since remote training costs money, you can simulate the training process locally for testing. The catch is that training our network locally on the machine that hosts Cloud Shell will almost certainly overwhelm it, and you will have to restart the instance without ever seeing a result. But do not worry, even in that case nothing will be lost. Fortunately, our script has a self-test mode, which we can use instead. Here's how:

    ➜ ~/src/models/tutorials/rnn/translate$ cd ..
    ➜ ~/src/models/tutorials/rnn$ gcloud beta ml local train \
    > --package-path=translate \
    > --module-name=translate.translate \
    > -- \
    > --self_test
    Self-test for neural translation model.

Pay attention to the folder from which we execute the command!

It looks like the self-test completed successfully. Let's talk about the flags that we used here:

- package-path - the path to the Python package that must be deployed to the remote machine in order to perform training;

- "--" - everything that follows is passed as input arguments to your module;

- self_test - tells the module to run a self-test without actual training.
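The role of the "--" separator is easy to miss, so here is a toy illustration of the convention. It is not how gcloud is implemented; `split_args` is a made-up function that only labels each argument by who would receive it.

```shell
# Toy illustration only: label arguments before "--" as belonging to gcloud
# and arguments after it as belonging to the Python module.
split_args() {
    target=gcloud
    for arg in "$@"; do
        if [ "$target" = gcloud ] && [ "$arg" = "--" ]; then
            target=module            # everything from here on goes to the module
            continue
        fi
        printf '%s: %s\n' "$target" "$arg"
    done
}

split_args --package-path=translate --module-name=translate.translate -- --self_test
```

The call at the end labels the first two flags with "gcloud:" and `--self_test` with "module:", which mirrors what happens in the local training command above.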

Training

Finally we have reached the most interesting part of the process, the one we started all this for. One small detail remains: we need to prepare all the bucket paths that will be used during training and set all the local variables:

    ➜ ~/src/models/tutorials/rnn$ INPUT_TRAIN_DATA_A=${TRAIN_BUCKET}/input/train.a
    ➜ ~/src/models/tutorials/rnn$ INPUT_TRAIN_DATA_B=${TRAIN_BUCKET}/input/train.b
    ➜ ~/src/models/tutorials/rnn$ INPUT_TEST_DATA_A=${TRAIN_BUCKET}/input/test.a
    ➜ ~/src/models/tutorials/rnn$ INPUT_TEST_DATA_B=${TRAIN_BUCKET}/input/test.b
    ➜ ~/src/models/tutorials/rnn$ JOB_NAME=${PROJECT_NAME}_$(date +%Y%m%d_%H%M%S)
    ➜ ~/src/models/tutorials/rnn$ echo ${JOB_NAME}
    chatbot_generic_20161224_203332
    ➜ ~/src/models/tutorials/rnn$ TRAIN_PATH=${TRAIN_BUCKET}/${JOB_NAME}
    ➜ ~/src/models/tutorials/rnn$ echo ${TRAIN_PATH}
    gs://chatbot_generic/chatbot_generic_20161224_203332

It is important to note that the name of our remote job (JOB_NAME) must be unique each time we start training. Now let's change the current folder to translate (do not ask =)):

    ➜ ~/src/models/tutorials/rnn$ cd translate/

Now we are ready to start training. Let's first write out the command (without executing it yet) and discuss its main flags:

    gcloud beta ml jobs submit training ${JOB_NAME} \
      --package-path=. \
      --module-name=translate.translate \
      --staging-bucket="${TRAIN_BUCKET}" \
      --region=us-central1 \
      -- \
      --from_train_data=${INPUT_TRAIN_DATA_A} \
      --to_train_data=${INPUT_TRAIN_DATA_B} \
      --from_dev_data=${INPUT_TEST_DATA_A} \
      --to_dev_data=${INPUT_TEST_DATA_B} \
      --train_dir="${TRAIN_PATH}" \
      --data_dir="${TRAIN_PATH}" \
      --steps_per_checkpoint=5 \
      --from_vocab_size=45000 \
      --to_vocab_size=45000

First, let's discuss the new flags of the training command:

- staging-bucket - the bucket to be used during deployment; it makes sense to use the same bucket as for training;

- region - the region where you want to start the training process.
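Because JOB_NAME has to be unique for every submission, it is convenient to regenerate it together with TRAIN_PATH right before each run. A sketch, with `new_job` as a hypothetical helper that simply wraps the two assignments used earlier:

```shell
# Hypothetical helper: refresh JOB_NAME and TRAIN_PATH before each submission.
# Expects PROJECT_NAME and TRAIN_BUCKET to be set as earlier in the article.
new_job() {
    JOB_NAME="${PROJECT_NAME}_$(date +%Y%m%d_%H%M%S)"
    TRAIN_PATH="${TRAIN_BUCKET}/${JOB_NAME}"
    echo "${JOB_NAME}"
}
```

Call it directly (not inside `$(...)`), otherwise the variables are set only in a subshell. The timestamp has one-second resolution, so two calls within the same second produce the same name.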

Let's also touch on the new flags that are passed to the script:

- from_train_data / to_train_data - the former en_train_data / fr_train_data; details can be found in the previous article;

- from_dev_data / to_dev_data - the same as from_train_data / to_train_data, but for the test (or "dev", as the script calls it) data that is used to evaluate the loss after training;

- train_dir - the folder where training results are saved;

- steps_per_checkpoint - how many steps are performed before intermediate results are saved. 5 is far too small a value; I set it only to check that the training process runs without problems. Later I will restart the process with a larger value (200, for example);

- from_vocab_size / to_vocab_size - to understand what these are, you need to read the previous article. There you will find out that the default value (40k) is less than the number of unique words in the dialogs, which is why we increased the vocabulary size this time.
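To get a rough feeling for whether 45000 is enough, the number of distinct tokens in a data file can be estimated with standard tools. This sketch only splits on whitespace, whereas the training script has its own tokenizer, so treat the number as an approximation; `count_unique_words` is a hypothetical helper.

```shell
# Hypothetical helper: count distinct whitespace-separated tokens in a file.
# $1 = path to a text file, for example train.a
count_unique_words() {
    tr -s '[:space:]' '\n' < "$1" |   # one token per line
        sed '/^$/d' |                 # drop empty lines
        sort -u |                     # keep each token once
        wc -l | tr -d ' '             # count them, strip padding from wc
}
```

Running it as `count_unique_words train.a` in the dialog_converter folder gives a number to compare against the vocabulary size flags.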

It seems that everything is ready to start training, so let's go (you will need a little patience, as the process takes some time)...

    ➜ ~/src/models/tutorials/rnn/translate$ gcloud beta ml jobs submit training ${JOB_NAME} \
    > --package-path=. \
    > --module-name=translate.translate \
    > --staging-bucket="${TRAIN_BUCKET}" \
    > --region=us-central1 \
    > -- \
    > --from_train_data=${INPUT_TRAIN_DATA_A} \
    > --to_train_data=${INPUT_TRAIN_DATA_B} \
    > --from_dev_data=${INPUT_TEST_DATA_A} \
    > --to_dev_data=${INPUT_TEST_DATA_B} \
    > --train_dir="${TRAIN_PATH}" \
    > --data_dir="${TRAIN_PATH}" \
    > --steps_per_checkpoint=5 \
    > --from_vocab_size=45000 \
    > --to_vocab_size=45000
    INFO 2016-12-24 20:49:24 -0800 unknown_task Validating job requirements...
    INFO 2016-12-24 20:49:25 -0800 unknown_task Job creation request has been successfully validated.
    INFO 2016-12-24 20:49:26 -0800 unknown_task Job chatbot_generic_20161224_203332 is queued.
    INFO 2016-12-24 20:49:31 -0800 service Waiting for job to be provisioned.
    INFO 2016-12-24 20:49:36 -0800 service Waiting for job to be provisioned.
    ...
    INFO 2016-12-24 20:53:15 -0800 service Waiting for job to be provisioned.
    INFO 2016-12-24 20:53:20 -0800 service Waiting for job to be provisioned.
    INFO 2016-12-24 20:53:20 -0800 service Waiting for TensorFlow to start.
    ...
    INFO 2016-12-24 20:54:56 -0800 master-replica-0 Successfully installed translate-0.0.0
    INFO 2016-12-24 20:54:56 -0800 master-replica-0 Running command: python -m translate.translate --from_train_data=gs://chatbot_generic/input/train.a --to_train_data=gs://chatbot_generic/input/train.b --from_dev_data=gs://chatbot_generic/input/test.a --to_dev_data=gs://chatbot_generic/input/test.b --train_dir=gs://chatbot_generic/chatbot_generic_20161224_203332 --steps_per_checkpoint=5 --from_vocab_size=45000 --to_vocab_size=45000
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 Creating vocabulary /tmp/vocab45000 from data gs://chatbot_generic/input/train.b
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 processing line 100000
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 Tokenizing data in gs://chatbot_generic/input/train.b
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 tokenizing line 100000
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 Tokenizing data in gs://chatbot_generic/input/train.a
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 tokenizing line 100000
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 Tokenizing data in gs://chatbot_generic/input/test.b
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 Tokenizing data in gs://chatbot_generic/input/test.a
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 Creating 3 layers of 1024 units.
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 Created model with fresh parameters.
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 Reading development and training data (limit: 0).
    INFO 2016-12-24 20:56:21 -0800 master-replica-0 reading data line 100000

You can monitor the state of your training job. To do this, simply open another tab in your Cloud Shell (or a tmux window), set the necessary variables and run the command:

    ➜ JOB_NAME=chatbot_generic_20161224_213143
    ➜ gcloud beta ml jobs describe ${JOB_NAME}
    ...

Now, if everything is going well, we can stop the job and restart it with a larger number of steps per checkpoint, for example 200, which is the default. Remember that the restarted job needs a new JOB_NAME. The new command looks like this:

    ➜ ~/src/models/tutorials/rnn/translate$ gcloud beta ml jobs submit training ${JOB_NAME} \
    > --package-path=. \
    > --module-name=translate.translate \
    > --staging-bucket="${TRAIN_BUCKET}" \
    > --region=us-central1 \
    > -- \
    > --from_train_data=${INPUT_TRAIN_DATA_A} \
    > --to_train_data=${INPUT_TRAIN_DATA_B} \
    > --from_dev_data=${INPUT_TEST_DATA_A} \
    > --to_dev_data=${INPUT_TEST_DATA_B} \
    > --train_dir="${TRAIN_PATH}" \
    > --data_dir="${TRAIN_PATH}" \
    > --from_vocab_size=45000 \
    > --to_vocab_size=45000

Conversation with the bot

Probably the biggest advantage of using Cloud Storage to save intermediate states of the model during training is that you can start chatting with the bot without interrupting the training process.

As an example, I will show how you can start chatting with the bot after only 1600 training iterations. By the way, this is the only step that needs to be performed on the local machine. I think the reasons are obvious =)

Here's how to do it:

    mkdir ~/tmp-data
    gsutil cp gs://chatbot_generic/chatbot_generic_20161224_232158/translate.ckpt-1600.meta ~/tmp-data
    ...
    gsutil cp gs://chatbot_generic/chatbot_generic_20161224_232158/translate.ckpt-1600.index ~/tmp-data
    ...
    gsutil cp gs://chatbot_generic/chatbot_generic_20161224_232158/translate.ckpt-1600.data-00000-of-00001 ~/tmp-data
    ...
    gsutil cp gs://chatbot_generic/chatbot_generic_20161224_232158/checkpoint ~/tmp-data
    TRAIN_PATH=...
    python -m translate.translate \
      --data_dir="${TRAIN_PATH}" \
      --train_dir="${TRAIN_PATH}" \
      --from_vocab_size=45000 \
      --to_vocab_size=45000 \
      --decode
    Reading model parameters from /Users/b0noi/tmp-data/translate.ckpt-1600
    > Hi there
    you ? . . . . . . . .
    > What do you want?
    i . . . . . . . . .
    > yes, you
    i ? . . . . . . . .
    > hi
    you ? . . . . . . . .
    > who are you?
    i . . . . . . . . .
    > yes you!
    what ? . . . . . . . .
    > who are you?
    i . . . . . . . . .
    > you
    ' . . . . . . . .

The TRAIN_PATH variable should point to the "tmp-data" folder, and the current directory should be "models/tutorials/rnn".
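The four copy commands above differ only in the file name, so they can be wrapped in a loop. This is a sketch under assumptions: `checkpoint_files` and `fetch_checkpoint` are hypothetical helpers, and the file list assumes a single data shard, exactly as in the listing above.

```shell
# Hypothetical helper: list the files that make up one checkpoint,
# assuming a single data shard as in the listing above.
checkpoint_files() {
    step=$1
    echo "translate.ckpt-${step}.meta"
    echo "translate.ckpt-${step}.index"
    echo "translate.ckpt-${step}.data-00000-of-00001"
    echo "checkpoint"
}

# Hypothetical helper: copy one checkpoint from a job folder to a local folder.
# $1 = job folder in the bucket, $2 = step number, $3 = local destination
fetch_checkpoint() {
    mkdir -p "$3"
    for f in $(checkpoint_files "$2"); do
        gsutil cp "$1/$f" "$3"
    done
}
```

For the example above, the call would be `fetch_checkpoint gs://chatbot_generic/chatbot_generic_20161224_232158 1600 ~/tmp-data`.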

As you can see, the chat bot is far from perfect after just 1600 steps. If you want to see how it communicates after 50 thousand iterations, I will refer you again to the previous article, since the goal of this article is not to train the perfect chat bot, but to learn how to train any network in the cloud using Google Cloud ML.

Post factum

I hope that my article has helped you learn the subtleties of working with Cloud ML and Cloud Shell, and that you can now use them to train your own networks. I also hope that you enjoyed reading it; if so, you can support me on my Patreon page and/or by liking the article and helping to share it =)

If you notice any problems in any of the steps, please let me know so that I can fix this promptly.

Source: https://habr.com/ru/post/318922/
