Docker swarm mode

Habr has already covered Docker swarm mode, a new feature introduced in version 1.12. The feature caused some confusion among those familiar with the earlier standalone Docker Swarm implementation, which was widespread before but was not known for being easy to configure or use. However, now that Swarm is built into Docker itself, everything has become much simpler, more obvious and more functional.

Read on to learn how the new Docker container cluster is organized from the user's point of view, and about a simple and convenient way to deploy Docker services on arbitrary infrastructure.

First, as promised in the previous article, a Fabricio release with support for Docker services is out, albeit with a slight delay. The ability to work with individual containers remains, and both the user interface and the interface for writing configurations are unchanged, which greatly simplifies moving from configurations based on individual containers to fault-tolerant, horizontally scalable services.

Activating Docker swarm mode

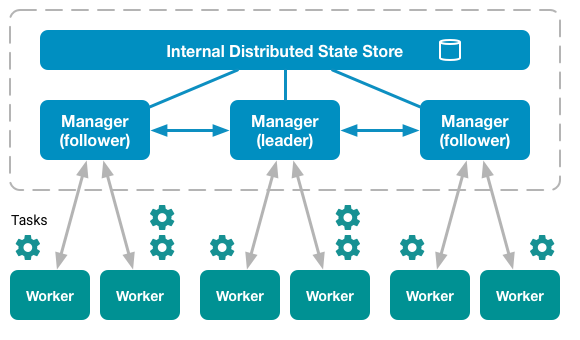

In swarm mode, nodes are of two types: managers and workers. A fully functional cluster can do without worker nodes at all, because managers also act as workers by default.


Among the managers, there is always one that is currently the leader of the cluster. Control commands issued on other managers are automatically forwarded to it.

Sample list of nodes in a running Docker cluster

```
$ docker node ls
ID                           HOSTNAME  STATUS  AVAILABILITY  MANAGER STATUS
6pbqkymsgtnahkqyyw7pccwpz *  docker-1  Ready   Active        Leader
avjehhultkslrlcrevaqc4h5f    docker-2  Ready   Active        Reachable
cg1maoa11ep7h14f2xciwylf3    docker-3  Ready   Active        Reachable
```

To enable swarm mode, simply pick a host that will become the initial leader of the future cluster and run a single command on it:

```
docker swarm init
```

Once the swarm is initialized, it is ready to run any number of services. However, such a cluster has no fault tolerance: its consensus state lives on a single manager, and surviving the loss of a manager requires at least three of them. Naturally, scaling and failover are out of the question in this case as well. To fix this, you need to add at least two more manager nodes to the cluster. You can find out how by running the following commands on the leader:

Adding a manager node

```
$ docker swarm join-token manager
To add a manager to this swarm, run the following command:

    docker swarm join \
    --token SWMTKN-1-1yptom678kg6hryfufjyv1ky7xc4tx73m8uu2vmzm1rb82fsas-c12oncaqr8heox5ed2jj50kjf \
    172.28.128.3:2377
```

Adding a worker node

```
$ docker swarm join-token worker
To add a worker to this swarm, run the following command:

    docker swarm join \
    --token SWMTKN-1-1yptom678kg6hryfufjyv1ky7xc4tx73m8uu2vmzm1rb82fsas-511vapm98iiz516oyf8j00alv \
    172.28.128.3:2377
```

You can add and remove nodes at any time during the cluster's lifetime; this does not significantly affect its operation.
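The three-manager minimum comes from Raft's majority quorum: a group of N managers needs N // 2 + 1 of them to agree, so it keeps working as long as no more than N minus that quorum have failed. The small sketch below only does this arithmetic; the function name is illustrative and not part of Docker.

```python
def manager_fault_tolerance(managers: int) -> tuple[int, int]:
    """Return (quorum size, manager failures tolerated) for a Raft group."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    quorum = managers // 2 + 1       # strict majority of managers
    tolerated = managers - quorum    # managers that may fail while a quorum survives
    return quorum, tolerated


for n in range(1, 8):
    quorum, tolerated = manager_fault_tolerance(n)
    print(f"{n} manager(s): quorum {quorum}, tolerates {tolerated} failure(s)")
```

Note that two managers tolerate no more failures than one, which is why an odd number of managers (3, 5, 7) is the usual recommendation.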

Creating a service

Creating a service in Docker is not fundamentally different from creating a container:

```
docker service create --name nginx --publish 8080:80 --replicas 2 nginx:stable-alpine
```

The differences usually lie in the set of available options. For example, a service has no --volume option but has --mount instead: both let you attach local node resources to containers, but they do so in different ways.
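To make the difference between the two notations concrete, here is a hypothetical helper that converts a container-style `host_path:container_path[:ro]` volume spec into a `--mount` value. The key names (`type`, `source`, `target`, `readonly`) follow the `--mount` syntax in the current Docker documentation; the 1.12 release may accept a slightly different set, so treat this as a sketch rather than a reference.

```python
def volume_to_mount(volume_spec: str) -> str:
    """Convert a `-v` style spec into an equivalent `--mount` value (sketch)."""
    parts = volume_spec.split(":")
    if len(parts) not in (2, 3):
        raise ValueError(f"expected source:target[:mode], got {volume_spec!r}")
    source, target = parts[0], parts[1]
    mode = parts[2] if len(parts) == 3 else "rw"
    # An absolute host path means a bind mount; anything else is a named volume.
    mount_type = "bind" if source.startswith("/") else "volume"
    fields = [f"type={mount_type}", f"source={source}", f"target={target}"]
    if mode == "ro":
        fields.append("readonly")  # --mount uses a flag instead of the :ro suffix
    elif mode != "rw":
        raise ValueError(f"unsupported mode {mode!r}")
    return ",".join(fields)


print(volume_to_mount("/srv/static:/usr/share/nginx/html:ro"))
# type=bind,source=/srv/static,target=/usr/share/nginx/html,readonly
```

The key=value form is more verbose, but it names every field explicitly instead of relying on the position of colon-separated parts.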

Service update

This is where the biggest difference between standalone containers and a cluster of containers (a service) shows up. To update a single container, you usually have to stop the current one and start a new one. This causes downtime for your service, however brief (unless you have taken care of such situations with other tools).

With a service running at least two replicas, there is usually no downtime at all. This is because Docker updates the service's containers one at a time, so at any given moment at least one running container is available to serve user requests.
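The one-at-a-time replacement can be modelled in a few lines. This is a toy simulation, not Docker's scheduler: replicas are plain version strings, and `parallelism` stands for how many tasks are replaced per step (Docker exposes this as the `--update-parallelism` option, whose default is 1). The function reports how many replicas keep serving during each step.

```python
def rolling_update(replicas: list[str], new_version: str, parallelism: int = 1) -> list[int]:
    """Replace replicas in batches; return how many were serving during each batch."""
    if parallelism < 1:
        raise ValueError("parallelism must be at least 1")
    serving_counts = []
    for start in range(0, len(replicas), parallelism):
        batch = range(start, min(start + parallelism, len(replicas)))
        # While a batch is being replaced, its old containers are stopped.
        serving_counts.append(len(replicas) - len(batch))
        for i in batch:
            replicas[i] = new_version  # the new container takes the old one's place
    return serving_counts


tasks = ["v1", "v1"]
print(rolling_update(tasks, "v2"))  # [1, 1]
print(tasks)                        # ['v2', 'v2']
```

With a single replica the list becomes [0], which is exactly the downtime of the stop-and-start approach for a standalone container.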

To update (that is, add or remove) service properties that can have multiple values (for example, --publish or --label), Docker provides special options with the -add and -rm suffixes:

```
docker service update --label-add foo=bar nginx
```

Removing values, however, is less trivial and often depends on the option itself:

```
docker service update --label-rm foo nginx

# --publish-rm takes the target port
docker service update --publish-rm 80 nginx
```

Details on each option can be found in the description of the docker service update command.
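Because multi-value properties are changed through paired -add/-rm options, a deployment tool has to turn the difference between the current and the desired state into such flags. The hypothetical sketch below does this for labels only; it is not taken from Fabricio or Docker, and it assumes that `--label-add` overwrites the value of an existing key while `--label-rm` takes just the key, as in the example above.

```python
def label_update_args(current: dict[str, str], desired: dict[str, str]) -> list[str]:
    """Build `docker service update` label arguments turning `current` into `desired`."""
    args: list[str] = []
    for key in sorted(current.keys() - desired.keys()):
        args += ["--label-rm", key]  # removal needs only the label key
    for key in sorted(desired):
        if current.get(key) != desired[key]:
            args += ["--label-add", f"{key}={desired[key]}"]  # new or changed value
    return args


print(label_update_args({"foo": "bar", "old": "x"}, {"foo": "baz", "team": "web"}))
# ['--label-rm', 'old', '--label-add', 'foo=baz', '--label-add', 'team=web']
```

An empty result means the labels already match and no update is needed.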

Scaling and balancing

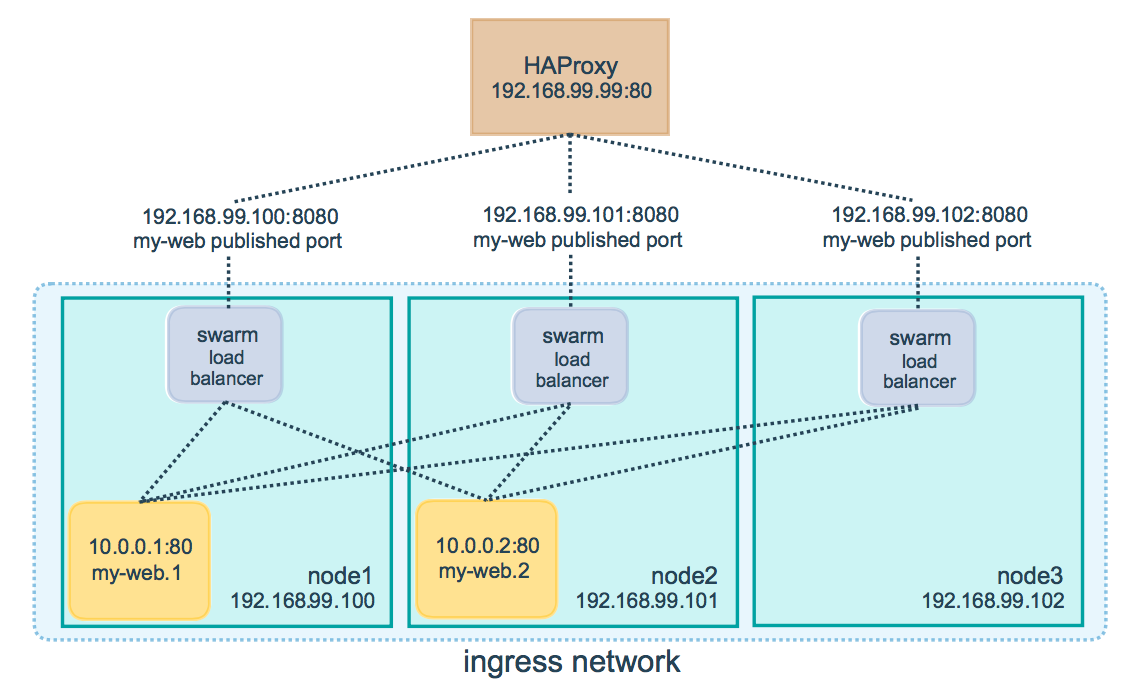

To distribute requests among the Docker nodes, a scheme called ingress load balancing is used. The idea is that no matter which node a user's request arrives at, it first passes through the internal load balancer and is then forwarded to a node that can serve it at that moment. In other words, any node can accept a request for any service in the cluster.
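A toy model of this idea: whichever node receives a request, the request is handed to the next task of the service, wherever that task runs. Docker's real routing mesh works at the network level and is far more involved; the round-robin choice, the class name and the node and task names here are illustrative assumptions.

```python
from itertools import cycle


class IngressModel:
    """Round-robin stand-in for the swarm routing mesh (not Docker's real code)."""

    def __init__(self, nodes: list[str], tasks: dict[str, str]):
        self.nodes = set(nodes)  # every node accepts traffic on the published port
        self._next_task = cycle(sorted(tasks.items()))  # (task id, node it runs on)

    def handle(self, entry_node: str) -> str:
        if entry_node not in self.nodes:
            raise ValueError(f"{entry_node} is not a cluster node")
        task_id, task_node = next(self._next_task)
        return f"{entry_node} -> {task_id}@{task_node}"


mesh = IngressModel(["docker-1", "docker-2", "docker-3"],
                    {"nginx.1": "docker-1", "nginx.2": "docker-2"})
for node in ["docker-3", "docker-3", "docker-1"]:
    print(mesh.handle(node))
# docker-3 -> nginx.1@docker-1
# docker-3 -> nginx.2@docker-2
# docker-1 -> nginx.1@docker-1
```

Note that docker-3 runs no nginx task at all, yet it still serves requests by forwarding them.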

A Docker service is scaled by specifying the required number of replicas. When you need to increase (or decrease) the number of containers serving client requests, you simply update the service with the desired value of the --replicas option:

```
docker service update --replicas 3 nginx
```

Before doing so, check that the number of available nodes is not less than the number of replicas you want. Nothing terrible happens if there are fewer nodes than replicas, though: some nodes will simply run more than one container of the same service (otherwise, Docker tries to place replicas of the same service on different nodes).
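The placement behaviour just described (spread replicas across nodes, double up only when there are more replicas than nodes) can be approximated by always choosing the node that currently runs the fewest tasks of the service. This is a simplification of the swarm scheduler, which also takes resources and constraints into account; the function is illustrative.

```python
def spread_replicas(nodes: list[str], replicas: int) -> dict[str, int]:
    """Assign each replica to the node running the fewest tasks of this service so far."""
    if not nodes:
        raise ValueError("no available nodes")
    placement = {node: 0 for node in nodes}
    for _ in range(replicas):
        # min() returns the first node with the lowest count, so ties follow list order.
        target = min(nodes, key=lambda n: placement[n])
        placement[target] += 1
    return placement


print(spread_replicas(["docker-1", "docker-2", "docker-3"], 3))  # one replica per node
print(spread_replicas(["docker-1", "docker-2"], 3))              # docker-1 gets two
```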

Fault tolerance

Docker takes care of the service's fault tolerance. Among other things, this is achieved by running several manager nodes in the cluster at once, any of which can replace a failed leader. Specifically, Docker uses Raft, a distributed consensus algorithm. For those interested, I recommend this excellent visual demonstration: Raft at work.

Raft: choosing a new leader
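Replacing a leader in Raft rests on a simple voting rule: in each term a node votes for at most one candidate, and a candidate becomes leader only with votes from a majority of all managers. The sketch below models just that rule; it deliberately omits Raft's log up-to-date check, election timeouts and heartbeats, so it illustrates the election idea rather than implementing Raft. The manager names are made up.

```python
from dataclasses import dataclass, field


@dataclass
class Manager:
    name: str
    current_term: int = 0
    voted_for: dict[int, str] = field(default_factory=dict)  # term -> candidate

    def request_vote(self, term: int, candidate: str) -> bool:
        if term < self.current_term:
            return False  # reject a candidate from an older term
        self.current_term = term
        # Grant the vote only if this node has not voted for someone else in this term.
        return self.voted_for.setdefault(term, candidate) == candidate


def run_election(candidate: str, term: int, reachable: list[Manager], cluster_size: int) -> bool:
    """Return True if `candidate` collects votes from a strict majority of the cluster."""
    votes = sum(m.request_vote(term, candidate) for m in reachable)
    return votes > cluster_size // 2


# Leader docker-1 has failed; docker-2 and docker-3 remain (3 managers in total).
m2, m3 = Manager("docker-2"), Manager("docker-3")
print(run_election("docker-2", term=2, reachable=[m2, m3], cluster_size=3))  # True
print(run_election("docker-3", term=2, reachable=[m2, m3], cluster_size=3))  # False
```

The second election fails because both remaining managers have already voted in term 2; a lone surviving manager could never win, since one vote is not a majority of three.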

Automated deployment

Rolling out a new version of an application to production servers always carries the risk that something will go wrong. That is why releasing a new version right before weekends and holidays is considered bad form. Moreover, before long holidays such as the New Year break, all changes to production infrastructure stop a week or even two in advance.

Although Docker services offer a quite reliable way to launch and update an application, a quick rollback to the previous version is often difficult for one reason or another, which can leave users without service for many hours.

The most reliable way to avoid problems when updating an application is automation and testing. This is what automated deployment systems are for. An important feature of such systems is usually the ability to quickly upgrade and roll back to the previous version on any chosen infrastructure.

Fabricio

Most deployment automation tools have you describe the configuration in popular markup languages such as XML or YAML. Some go further and develop their own language for describing configurations (for example, HCL or the Puppet language). I see no need to take either path, for the following reasons:

- XML/YAML will never match full-fledged programming languages in extensibility and expressiveness, and trying to simplify configuration with a simplified markup language often backfires and only complicates things. Besides, few programmers want to program in XML/YAML, and configuration is in fact a special case of programming.

- Developing your own language is an extremely complicated and laborious process that most often fails.

Therefore, Fabricio describes configurations in plain Python, using several reliable, time-tested libraries (including the well-known Fabric).

Of course, some may object that not every developer or DevOps engineer knows Python. First, Python (like Bash) belongs to the standard kit of scripting languages that every (or almost every) self-respecting DevOps engineer should know. Second, paradoxical as it may seem, knowing Python is almost optional. To back this up, here is an example Fabricio configuration for a Django-based service:

fabfile.py

```python
from fabricio import tasks
from fabricio.apps.python.django import DjangoService

django = tasks.DockerTasks(
    service=DjangoService(
        name="django",
        image="project/django",
        options={
            "publish": "8080:80",
            "env": "DJANGO_SETTINGS_MODULE=my_settings",
            "replicas": 3,
        },
    ),
    hosts=["user@manager1", "user@manager2", "user@manager3"],
)
```

You will agree that this example is no more complicated than an equivalent description in YAML. Anyone who knows at least one programming language will understand this config without difficulty.
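To show how directly such an options dictionary maps onto the Docker CLI, here is a hypothetical function that renders it as `docker service create` arguments. It is not Fabricio's actual code; it only assumes that each key corresponds to the long option of the same name and, for multi-value options, that a list value repeats the flag.

```python
import shlex


def service_create_command(name: str, image: str, options: dict) -> str:
    """Render a hypothetical `docker service create` command from an options dict."""
    args = ["docker", "service", "create", "--name", name]
    for key, value in options.items():
        values = value if isinstance(value, (list, tuple)) else [value]
        for item in values:
            args += [f"--{key}", str(item)]  # e.g. "publish" -> --publish 8080:80
    args.append(image)
    return " ".join(shlex.quote(arg) for arg in args)


print(service_create_command(
    "django",
    "project/django",
    {"publish": "8080:80", "env": "DJANGO_SETTINGS_MODULE=my_settings", "replicas": 3},
))
# docker service create --name django --publish 8080:80 --env DJANGO_SETTINGS_MODULE=my_settings --replicas 3 project/django
```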

But enough digressions.

Deployment process

Schematically, the process of deploying a service with Fabricio (after running the fab django command for the configuration described above) looks as shown in the figure below:

Let's go through each step in order. First, note that the diagram applies when parallel execution mode is enabled (the --parallel option). The only difference in sequential mode is that all actions are performed strictly one after another.

Immediately after the deployment command is launched, the following steps are performed in sequence:

- pull: the new Docker image is downloaded on all nodes simultaneously. Note that the configuration only needs the addresses of the manager nodes, and it is not even necessary to list all available managers: Docker updates the unlisted nodes automatically. Nothing prevents you from listing workers in the configuration as well, though (this may be necessary in some cases, for example, when using an SSH tunnel).

- migrate: next, migrations are applied. It is important that this step runs on only one node at a time, so Fabricio uses a special mechanism to ensure that migrations are started on a single node and executed only once.

- update: since running the update command once is enough to update all of the service's containers, Fabricio also ensures at this step that it is not executed twice.
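The "pull everywhere, migrate and update only once" pattern can be sketched with a thread pool standing in for parallel mode and a lock-protected flag standing in for the run-once mechanism. This is a simplification under those assumptions: the host names and the RunOnce helper are hypothetical, and Fabricio's real mechanism has to work across separate SSH sessions rather than threads of one process.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Optional


class RunOnce:
    """Allow exactly one caller to claim a step, however many hosts try concurrently."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._claimed = False

    def claim(self) -> bool:
        with self._lock:
            if self._claimed:
                return False
            self._claimed = True
            return True


def deploy(hosts: list[str]) -> list[str]:
    """Simulate pull on every host, then migrate and update on one host each."""
    log: list[str] = []
    log_lock = threading.Lock()

    def run_step(step: str, host: str, once: Optional[RunOnce]) -> None:
        ran = once is None or once.claim()
        with log_lock:
            log.append(f"{host}: {step}" if ran else f"{host}: {step} skipped")

    steps = [("pull", None), ("migrate", RunOnce()), ("update", RunOnce())]
    with ThreadPoolExecutor(max_workers=len(hosts)) as pool:
        for step, once in steps:
            # All hosts run the current step in parallel...
            futures = [pool.submit(run_step, step, host, once) for host in hosts]
            # ...and the next step starts only after every host has finished this one.
            for future in futures:
                future.result()
    return log


for line in deploy(["manager1", "manager2", "manager3"]):
    print(line)
```

Which host wins the migrate and update steps depends on thread scheduling, so the order of the printed lines may vary between runs.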

Each command (pull, migrate, update) can also be run separately if necessary. Additional steps (prepare, push, backup) can be included in the deployment process as well, as described in this earlier overview article about Fabricio.

All Fabricio commands (except backup and restore) are idempotent, that is, safe when re-executed with the same parameters.
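For the update step, idempotency boils down to comparing the desired service definition with what is currently running and doing nothing when they match; that is what the "No changes detected, update skipped." lines in the logs below report. A minimal sketch of the comparison, assuming the service state can be reduced to a plain dictionary (in reality it comes from commands such as docker service inspect); keys missing from the desired state are ignored here.

```python
def update_service(current: dict, desired: dict, apply) -> bool:
    """Apply `desired` only if it differs from `current`; return True if an update ran."""
    changes = {key: value for key, value in desired.items() if current.get(key) != value}
    if not changes:
        print("No changes detected, update skipped.")
        return False
    apply(changes)           # stands in for running `docker service update`
    current.update(changes)  # the service now matches the desired state
    return True


state = {"image": "nginx:stable-alpine", "replicas": 2}
calls = []
print(update_service(state, {"image": "nginx:stable-alpine", "replicas": 3}, calls.append))  # True
print(update_service(state, {"image": "nginx:stable-alpine", "replicas": 3}, calls.append))  # False
print(calls)  # [{'replicas': 3}]
```

Running the same deployment twice therefore triggers at most one real update.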

Idempotency test

fab --parallel nginx

```
$ fab --parallel nginx
[vagrant@172.28.128.3] Executing task 'nginx.pull'
[vagrant@172.28.128.4] Executing task 'nginx.pull'
[vagrant@172.28.128.5] Executing task 'nginx.pull'
[vagrant@172.28.128.5] run: docker pull nginx:stable-alpine
[vagrant@172.28.128.4] run: docker pull nginx:stable-alpine
[vagrant@172.28.128.3] run: docker pull nginx:stable-alpine
[vagrant@172.28.128.3] out: stable-alpine: Pulling from library/nginx
[vagrant@172.28.128.3] out: Digest: sha256:ce50816e7216a66ff1e0d99e7d74891c4019952c9e38c690b3c5407f7af57555
[vagrant@172.28.128.3] out: Status: Image is up to date for nginx:stable-alpine
[vagrant@172.28.128.3] out:
[vagrant@172.28.128.4] out: stable-alpine: Pulling from library/nginx
[vagrant@172.28.128.4] out: Digest: sha256:ce50816e7216a66ff1e0d99e7d74891c4019952c9e38c690b3c5407f7af57555
[vagrant@172.28.128.4] out: Status: Image is up to date for nginx:stable-alpine
[vagrant@172.28.128.4] out:
[vagrant@172.28.128.5] out: stable-alpine: Pulling from library/nginx
[vagrant@172.28.128.5] out: Digest: sha256:ce50816e7216a66ff1e0d99e7d74891c4019952c9e38c690b3c5407f7af57555
[vagrant@172.28.128.5] out: Status: Image is up to date for nginx:stable-alpine
[vagrant@172.28.128.5] out:
[vagrant@172.28.128.3] Executing task 'nginx.migrate'
[vagrant@172.28.128.4] Executing task 'nginx.migrate'
[vagrant@172.28.128.5] Executing task 'nginx.migrate'
[vagrant@172.28.128.5] run: docker info 2>&1 | grep 'Is Manager:'
[vagrant@172.28.128.4] run: docker info 2>&1 | grep 'Is Manager:'
[vagrant@172.28.128.3] run: docker info 2>&1 | grep 'Is Manager:'
[vagrant@172.28.128.3] Executing task 'nginx.update'
[vagrant@172.28.128.4] Executing task 'nginx.update'
[vagrant@172.28.128.5] Executing task 'nginx.update'
[vagrant@172.28.128.5] run: docker inspect --type image nginx:stable-alpine
[vagrant@172.28.128.4] run: docker inspect --type image nginx:stable-alpine
[vagrant@172.28.128.3] run: docker inspect --type image nginx:stable-alpine
[vagrant@172.28.128.3] run: docker inspect --type container nginx_current
[vagrant@172.28.128.3] run: docker info 2>&1 | grep 'Is Manager:'
[vagrant@172.28.128.4] run: docker inspect --type container nginx_current
[vagrant@172.28.128.3] run: docker service inspect nginx
[vagrant@172.28.128.4] run: docker info 2>&1 | grep 'Is Manager:'
[vagrant@172.28.128.3] No changes detected, update skipped.
[vagrant@172.28.128.4] No changes detected, update skipped.
[vagrant@172.28.128.5] run: docker inspect --type container nginx_current
[vagrant@172.28.128.5] run: docker info 2>&1 | grep 'Is Manager:'
[vagrant@172.28.128.5] No changes detected, update skipped.

Done.
Disconnecting from vagrant@127.0.0.1:2222... done.
Disconnecting from vagrant@127.0.0.1:2200... done.
Disconnecting from vagrant@127.0.0.1:2201... done.
```

fab nginx

```
$ fab nginx
[vagrant@172.28.128.3] Executing task 'nginx.pull'
[vagrant@172.28.128.3] run: docker pull nginx:stable-alpine
[vagrant@172.28.128.3] out: stable-alpine: Pulling from library/nginx
[vagrant@172.28.128.3] out: Digest: sha256:ce50816e7216a66ff1e0d99e7d74891c4019952c9e38c690b3c5407f7af57555
[vagrant@172.28.128.3] out: Status: Image is up to date for nginx:stable-alpine
[vagrant@172.28.128.3] out:
[vagrant@172.28.128.4] Executing task 'nginx.pull'
[vagrant@172.28.128.4] run: docker pull nginx:stable-alpine
[vagrant@172.28.128.4] out: stable-alpine: Pulling from library/nginx
[vagrant@172.28.128.4] out: Digest: sha256:ce50816e7216a66ff1e0d99e7d74891c4019952c9e38c690b3c5407f7af57555
[vagrant@172.28.128.4] out: Status: Image is up to date for nginx:stable-alpine
[vagrant@172.28.128.4] out:
[vagrant@172.28.128.5] Executing task 'nginx.pull'
[vagrant@172.28.128.5] run: docker pull nginx:stable-alpine
[vagrant@172.28.128.5] out: stable-alpine: Pulling from library/nginx
[vagrant@172.28.128.5] out: Digest: sha256:ce50816e7216a66ff1e0d99e7d74891c4019952c9e38c690b3c5407f7af57555
[vagrant@172.28.128.5] out: Status: Image is up to date for nginx:stable-alpine
[vagrant@172.28.128.5] out:
[vagrant@172.28.128.3] Executing task 'nginx.migrate'
[vagrant@172.28.128.3] run: docker info 2>&1 | grep 'Is Manager:'
[vagrant@172.28.128.4] Executing task 'nginx.migrate'
[vagrant@172.28.128.4] run: docker info 2>&1 | grep 'Is Manager:'
[vagrant@172.28.128.5] Executing task 'nginx.migrate'
[vagrant@172.28.128.5] run: docker info 2>&1 | grep 'Is Manager:'
[vagrant@172.28.128.3] Executing task 'nginx.update'
[vagrant@172.28.128.3] run: docker inspect --type image nginx:stable-alpine
[vagrant@172.28.128.3] run: docker inspect --type container nginx_current
[vagrant@172.28.128.3] run: docker service inspect nginx
[vagrant@172.28.128.3] No changes detected, update skipped.
[vagrant@172.28.128.4] Executing task 'nginx.update'
[vagrant@172.28.128.4] run: docker inspect --type image nginx:stable-alpine
[vagrant@172.28.128.4] run: docker inspect --type container nginx_current
[vagrant@172.28.128.4] No changes detected, update skipped.
[vagrant@172.28.128.5] Executing task 'nginx.update'
[vagrant@172.28.128.5] run: docker inspect --type image nginx:stable-alpine
[vagrant@172.28.128.5] run: docker inspect --type container nginx_current
[vagrant@172.28.128.5] No changes detected, update skipped.

Done.
Disconnecting from vagrant@172.28.128.3... done.
Disconnecting from vagrant@172.28.128.5... done.
Disconnecting from vagrant@172.28.128.4... done.
```

Rollback to the previous version

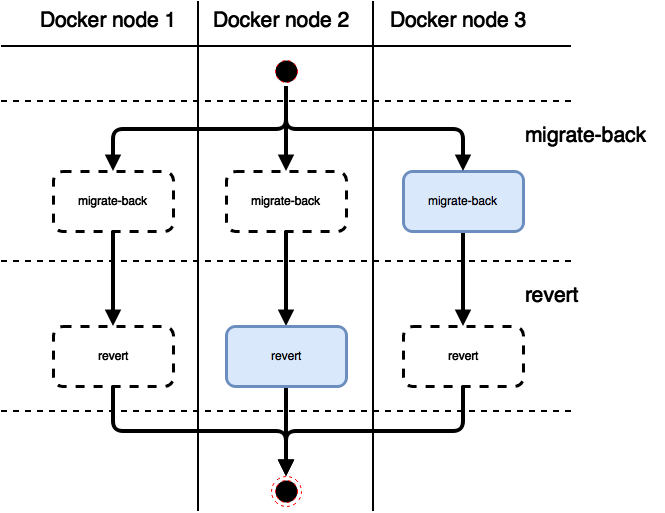

Rolling back to the previous version (the fab django.rollback command for the configuration described above) works in much the same way as deployment.

Both the migration rollback and the rollback of the service itself to its previous state are performed exactly once, on one of the manager nodes.
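A rollback is only possible if the previous state was remembered at deploy time. The following minimal sketch rests on that assumption: a hypothetical Deployment record keeps the current and previous image, deploy shifts them, and rollback restores the previous image once and then forgets it. The image tags are made up, migration rollback is not modelled, and this does not describe how Fabricio actually stores its backup state.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of what a service runs now and what it ran before."""
    current: Optional[str] = None
    previous: Optional[str] = None

    def deploy(self, image: str) -> None:
        if image == self.current:
            return  # redeploying the same image changes nothing (idempotent)
        self.previous, self.current = self.current, image

    def rollback(self) -> str:
        if self.previous is None:
            raise RuntimeError("no previous version to roll back to")
        # Restore the previous image and forget it, so the rollback cannot be applied twice.
        self.current, self.previous = self.previous, None
        return self.current


service = Deployment()
service.deploy("project/django:1.0")
service.deploy("project/django:1.1")
print(service.rollback())  # project/django:1.0
```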

Conclusion

Containerization is the future of server-side development. Those who have not realized this yet will soon face it as a fait accompli. Containers are a convenient, simple and powerful tool in the hands of developers and DevOps engineers.

With the release of Docker 1.12, Kubernetes supporters have practically no arguments left in its favor. Docker services not only provide the same features as Kubernetes services but even have a number of advantages: they are easy to configure on any OS (Linux, macOS, Windows) and require no additional components (containers) to be installed and run.

Fabricio, a tool that helps develop, test and deploy new versions of applications to production and test servers with Docker, now supports deploying scalable, fault-tolerant services. You can explore various ways of using Fabricio on the examples and recipes page (all examples are described in detail and automated with Vagrant).

Source: https://habr.com/ru/post/318866/
