Experience with self-hosted continuous integration systems

Introduction

It's hard to imagine modern development without continuous integration. Many companies ship several releases a day and run thousands of tests. Since the days of Jenkins and Travis CI, a wide variety of tools have appeared on the market. Most of them follow the SaaS model: you pay a fixed fee for the service, or pay per user.

But using hosted platforms is not always possible, for example if you cannot hand your application code to a third party or do not want to depend on external services. In that case, systems that can be installed on your own servers (self-hosted) come to the rescue. As a bonus, you have full control over resources and can scale them to your needs using, for example, Amazon EC2.

This article describes personal experience with several open source self-hosted continuous integration systems. If you have used other systems, tell us about them in the comments.

Basic concepts

The basis of any CI system is the build: a single assembly run of your project. A build can be started by various triggers: a push to the repository, a pull request, or a schedule. A build consists of a set of tasks (jobs). Tasks can run sequentially or in parallel. The set of tasks is defined either by listing every task or by a build matrix, that is, the characteristics that distinguish the tasks from one another. For example, if you specify programming language versions and environment variable values, one task is created for each combination of language version and variable value.
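
The expansion of a build matrix into tasks can be sketched as a Cartesian product. This is a minimal illustration, not the implementation of any particular CI system; the function name `expand_matrix` and the matrix keys are hypothetical.

```python
from itertools import product


def expand_matrix(matrix):
    """Return one job (a dict) per combination of the matrix values."""
    keys = list(matrix)
    combos = product(*(matrix[key] for key in keys))
    return [dict(zip(keys, combo)) for combo in combos]


# Hypothetical matrix: two language versions and two values of one variable.
matrix = {
    "language_version": ["7.0", "7.1"],
    "DB": ["mysql", "postgres"],
}

jobs = expand_matrix(matrix)
for job in jobs:
    print(job)
```

Two versions and two variable values give four tasks, one per combination.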

Some CI systems also have a pipeline: tasks are combined into groups (stages), all tasks in the current stage run in parallel, and the next stage runs only if the previous one has completed successfully. For example, in a test-deploy pipeline, the tasks of the deploy stage run only if all tasks of the test stage have succeeded.
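
The stage logic can be sketched in a few lines. This is an illustration under simple assumptions (each task is a callable that returns True on success, and threads stand in for runners); `run_pipeline` is a hypothetical name, not part of any CI system.

```python
from concurrent.futures import ThreadPoolExecutor


def run_pipeline(stages):
    """Run stages in order; tasks inside one stage run in parallel.

    `stages` is a list of (stage_name, [task, ...]) pairs, where each task
    is a callable returning True on success. Returns the names of the
    stages that were started.
    """
    started = []
    for name, tasks in stages:
        started.append(name)
        with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
            results = list(pool.map(lambda task: task(), tasks))
        if not all(results):
            break  # a failed task stops the pipeline; later stages are skipped
    return started


stages = [
    ("test", [lambda: True, lambda: False]),  # one test task fails
    ("deploy", [lambda: True]),
]
print(run_pipeline(stages))  # prints ['test']
```

Because one task in the test stage fails, the deploy stage is never started.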

For CI to work efficiently, it is important to have a cache: data that is reused to speed up builds. This can be apt packages, the npm cache, or the Composer cache. Without a cache, every task has to download and install all dependencies from scratch, which may take longer than the test run itself. The closer the cache is to the servers that run the tasks, the better; for example, if you use Amazon EC2, storing the cache in Amazon S3 is a good option.

A build can produce artifacts: build results, code quality reports, logs. A CI system that lets you view and download these artifacts makes life much easier.

If you have a large project with many tasks running simultaneously, you cannot do without scaling. Scaling can be manual or automatic. With manual scaling, you start and stop runners on free servers yourself. With automatic scaling, the system decides whether new virtual machines need to be created, and how many. Different CI systems support different providers, usually Amazon, Google Compute Engine, DigitalOcean, and so on.
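
The decision an autoscaler makes can be sketched as a small function. This is a simplified illustration; real systems also take machine start-up time and idle timeouts into account. The function name, the parameters, and the default limits are all assumptions.

```python
import math


def machines_to_create(queued_jobs, idle_machines, total_machines,
                       jobs_per_machine=1, max_machines=10):
    """Return how many new virtual machines to request.

    queued_jobs    -- jobs waiting for a runner
    idle_machines  -- existing machines with no job assigned
    total_machines -- all existing machines, busy and idle
    """
    free_slots = idle_machines * jobs_per_machine
    missing_slots = max(0, queued_jobs - free_slots)
    wanted = math.ceil(missing_slots / jobs_per_machine)
    headroom = max(0, max_machines - total_machines)  # respect the hard limit
    return min(wanted, headroom)


print(machines_to_create(queued_jobs=5, idle_machines=1, total_machines=3))  # prints 4
```

The hard upper limit keeps a burst of queued jobs from creating an unbounded number of machines.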

It also matters how the system runs the commands specified in the build configuration (executors). There are several ways: direct execution (on the host where the runner is running), execution in a virtual machine, in a Docker container, or via SSH. Each method has peculiarities to consider when choosing a CI system. For example, when a task runs in a Docker container there is no session and no terminal, so some things cannot be tested. And when running on the host, do not forget to clean up resources after execution: delete data from the database, remove Docker images, and so on.

Finally, there are features that are not critical but make working with CI more pleasant. Is the log output shown in real time or refreshed at some interval? Can the build be interrupted if something goes wrong? Can you view the configuration of the build and its tasks?

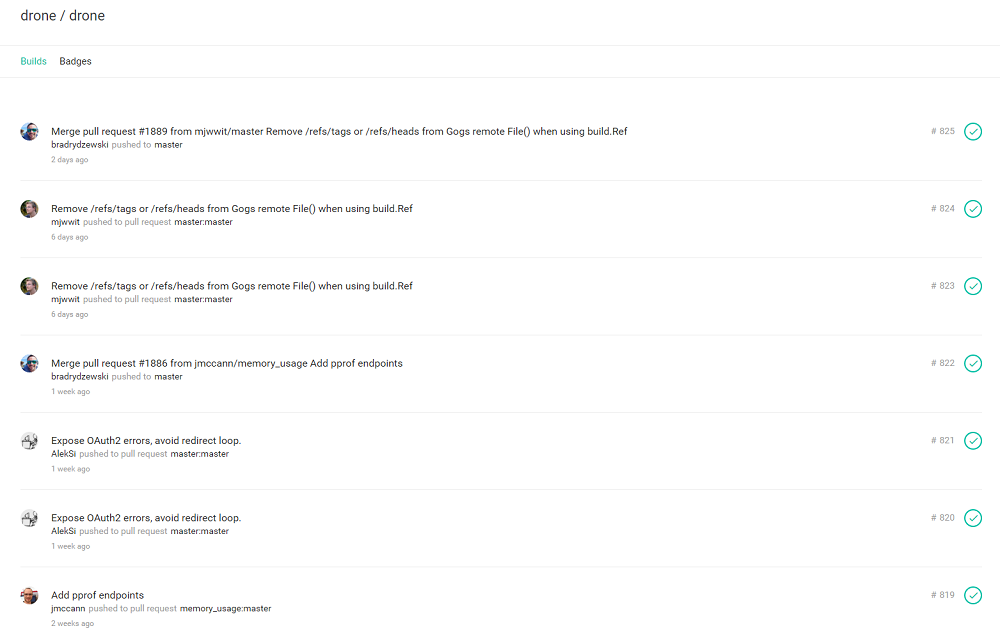

Drone

Drone is a continuous integration system based on Docker containers. It is written in Go. The current version is 0.4; version 0.5 is in beta. This review covers version 0.5.

Drone works with many Git hosting services: GitHub, Bitbucket, Bitbucket Server, GitLab, Gogs. The build is configured in the .drone.yml file in the repository root.

A build consists of several steps, and each step is executed in parallel in a separate Docker container. The build matrix is defined through environment variables. Service containers are supported: for example, if you are testing a web application that works with a database, you specify the database's Docker image in the services section, and it automatically becomes available from the build container. Any Docker image can be used.

Drone consists of a Drone server and Drone agents. The server acts as the coordinator, and one or more agents run the builds. Scaling is done by launching additional agents. There is no way to use cloud resources to start agents automatically. There is no built-in cache support (there are third-party plugins that save the cache to S3 storage and restore it from there).

The commands specified in the build are executed directly in the Docker container by the sh interpreter, which causes problems if you need to run complex commands with conditional logic.

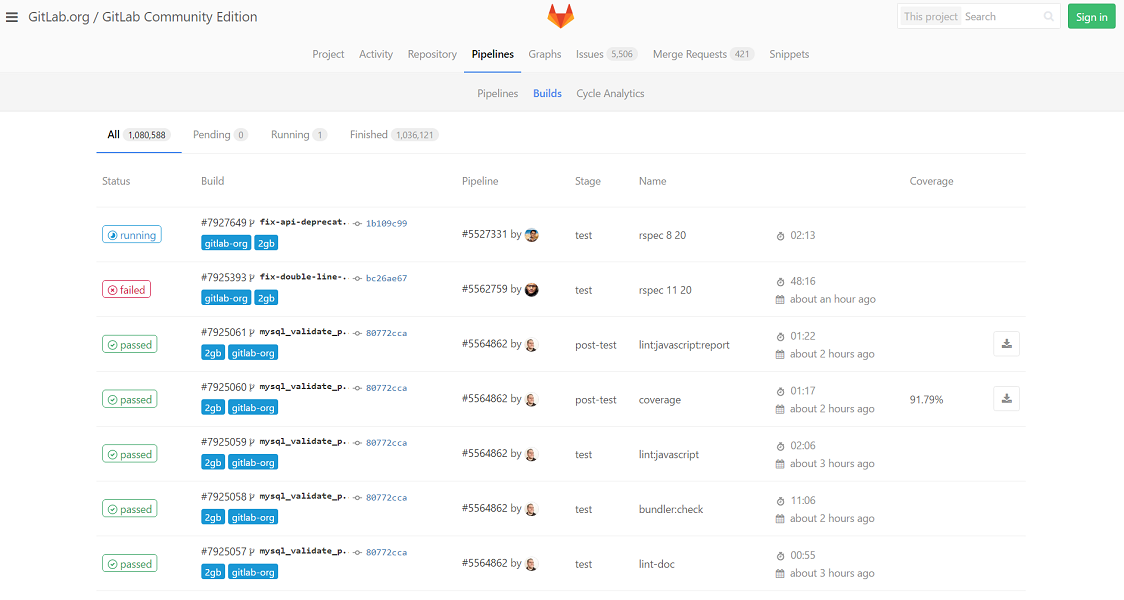

GitLab CI

GitLab CI is part of the GitLab project, a self-hosted analogue of GitHub. GitLab is written in Ruby, and GitLab Runner is written in Go. The current version of GitLab is 8.15, and the current version of GitLab Runner is 1.9.

Since GitLab CI is integrated with GitLab, it works only with GitLab repositories. You can mirror third-party repositories to GitLab, but in my opinion this is not very convenient. The build is organized as a pipeline. You can configure how tasks are started: automatically or manually from the web interface.

GitLab CI consists of a web interface (the coordinator) and runners. The coordinator distributes tasks to the runners, which execute them. There is a wide choice of executors: shell, docker, docker-ssh, ssh, virtualbox, kubernetes. The build log is not real-time: the web interface periodically polls the server, and if a new part of the log has appeared, it is appended to the end.

Caching is supported out of the box, and any S3-compatible storage can serve as the backend. Artifacts are supported too: from the web interface you can view individual files or download the whole artifact.

Work with cloud resources is organized through Docker Machine. When a request for a new build arrives and there is no free machine, Docker Machine creates a new one and starts the build on it. The images required for the build then have to be downloaded again, so GitLab recommends running a separate Docker registry on the same network as the Docker Machine provider.

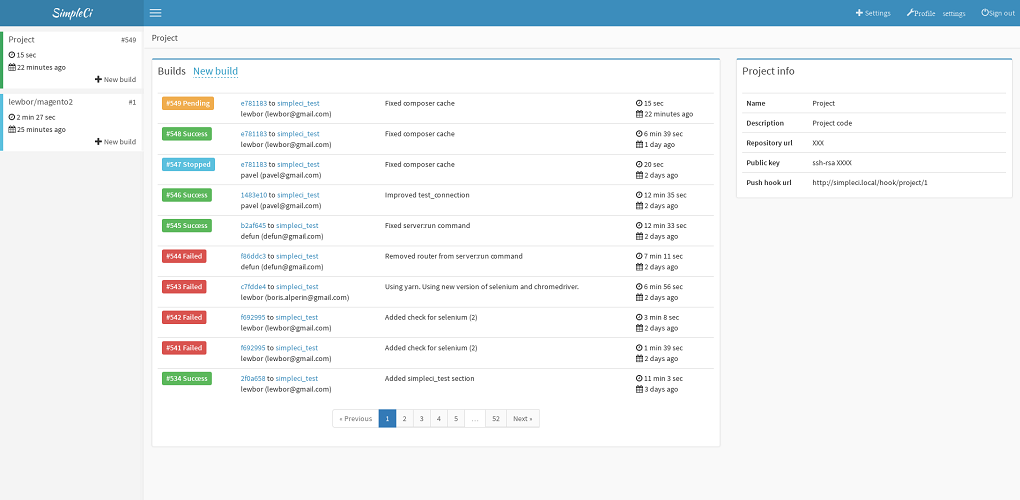

SimpleCI

SimpleCI is a continuous integration system written to use resources as efficiently as possible. The frontend is written in PHP (Symfony 3, ES6), and the backend is written in Java. The current version is 0.6, and the project is under active development.

SimpleCI supports GitHub and GitLab repositories. As in GitLab, the build is organized as a pipeline: if all tasks within one stage complete successfully, the next stage is started.

Caching is supported. The cache is uploaded to storage only if the cache files changed while the task was running. Two types of storage are implemented: S3-compatible storage and Google Cloud Storage.
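
One way to detect whether cache files changed during a task is to compare a digest of the cache directory before and after the run. The sketch below only illustrates the idea; it is not SimpleCI's actual mechanism, and `directory_digest`, `run_with_cache`, and the `upload` callback are hypothetical names.

```python
import hashlib
from pathlib import Path


def directory_digest(root):
    """Hash the relative paths and contents of all files under `root`."""
    root = Path(root)
    digest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        digest.update(path.relative_to(root).as_posix().encode())
        digest.update(b"\0")
        digest.update(path.read_bytes())
        digest.update(b"\0")
    return digest.hexdigest()


def run_with_cache(cache_dir, task, upload):
    """Run `task`, then call `upload(cache_dir)` only if the cache changed."""
    before = directory_digest(cache_dir)
    task()
    changed = directory_digest(cache_dir) != before
    if changed:
        upload(cache_dir)  # e.g. push an archive to S3-compatible storage
    return changed
```

Skipping the upload when nothing changed saves both time and traffic, which matters when the cache is hundreds of megabytes.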

A build is performed by starting Docker containers, connecting to the build container via SSH, and running the build script. The build log is displayed in real time (using WebSockets and Centrifugo).

Autoscaling on cloud provider resources is supported (so far only Google Compute Engine). When setting up scaling, you specify a snapshot to use when creating virtual machines. This lets you prepare a snapshot with the necessary Docker images so that they are not downloaded every time a new machine is created. Artifacts are not supported yet.

Conclusion

This review covered several self-hosted open source continuous integration systems. Besides these, there are many other hosted, commercial, and open source systems. When choosing among the variety of CI systems, pay attention to what you actually need, and the tests will thank you.

Links

- Drone

- GitLab CI

- SimpleCI

- Jenkins

- awesome-ci - a list of CI systems, testing tools and deployment tools

Source: https://habr.com/ru/post/318524/
