Backing up with Commvault: some statistics and cases

In previous posts, we explained how to configure Veeam-based backup and replication. Today we want to talk about backup with Commvault. This time there will be no step-by-step instructions; instead, we'll describe what our customers back up and how.

Commvault-based storage system backup in the OST-2 data center.

How it works

Commvault is a platform for backing up applications, databases, file systems, virtual machines, and physical servers. The source data can reside at any site: in our data center, on the customer's premises, in another commercial data center, or in the cloud.

The customer installs an iData Agent on the objects to be backed up and configures it according to the required backup policies. The iData Agent collects the required data, compresses, deduplicates, and encrypts it, and transfers it to the DataLine backup system.


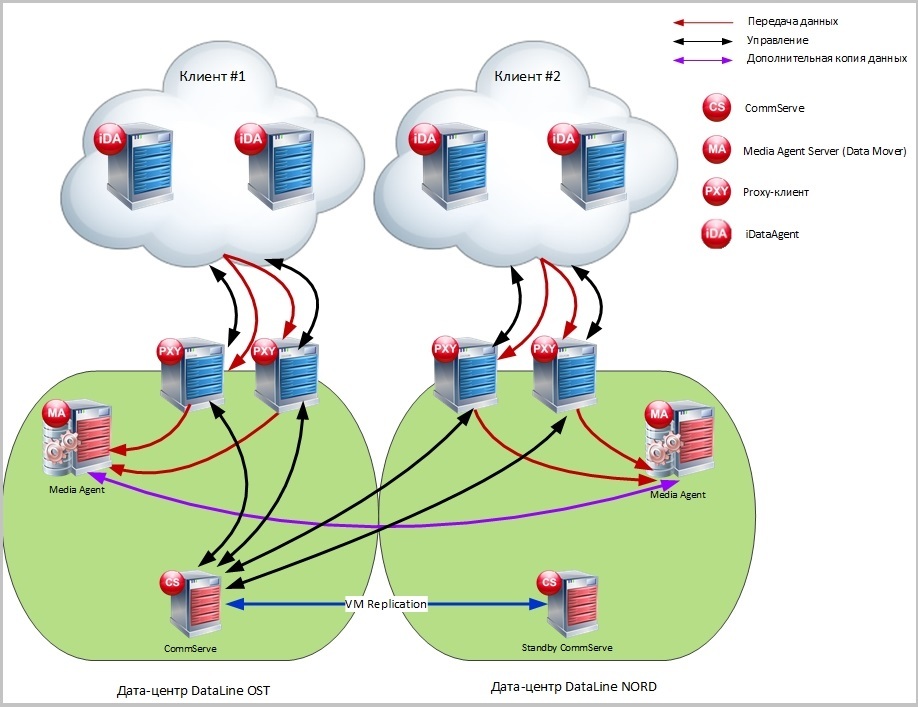

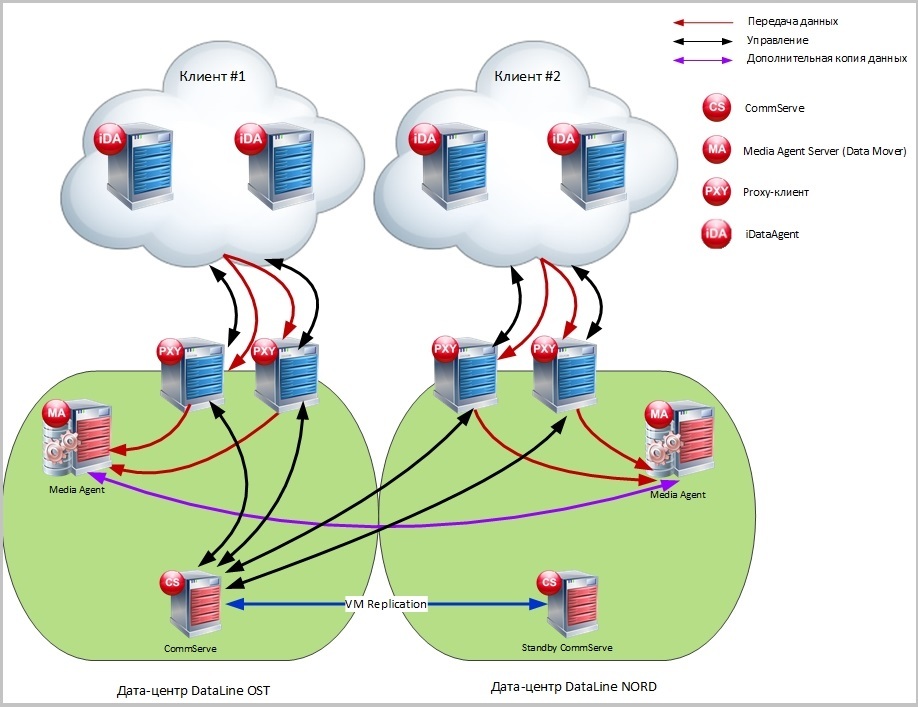

Proxy servers connect the customer's network to ours and isolate the channels over which data is transmitted.

On the DataLine side, data from the iData Agent is received by the Media Agent Server, which writes it to storage systems, tape libraries, and so on. All of this is managed by CommServe. In our configuration, the primary CommServe is located at the OST site and the standby one at the NORD site.

By default, customer data is stored at one site. You can, however, back up to two sites at once or schedule backups to be copied to a second site. This option is called Auxiliary Copy. For example, all month-end full backups can be automatically duplicated or moved to the second site.
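The following is a minimal Python sketch of the month-end rule. Given a list of backup jobs, it picks the last full backup of each calendar month as the candidate for an auxiliary copy. The `Job` record and the `month_end_fulls` function are hypothetical. The code only models the selection logic; it is not Commvault's API, where this behavior is configured rather than coded.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Job:
    """Hypothetical backup job record: completion date and backup level."""
    finished: date
    level: str  # "full" or "incremental"


def month_end_fulls(jobs: list[Job]) -> list[Job]:
    """Return the latest full backup of each (year, month), oldest month first."""
    latest: dict[tuple[int, int], Job] = {}
    for job in jobs:
        if job.level != "full":
            continue  # only full backups are candidates for the auxiliary copy
        key = (job.finished.year, job.finished.month)
        if key not in latest or job.finished > latest[key].finished:
            latest[key] = job
    return [latest[k] for k in sorted(latest)]


if __name__ == "__main__":
    history = [
        Job(date(2016, 11, 6), "full"),
        Job(date(2016, 11, 27), "full"),
        Job(date(2016, 11, 30), "incremental"),
        Job(date(2016, 12, 4), "full"),
    ]
    for job in month_end_fulls(history):
        print(job.finished.isoformat())
```

For this history, the November candidate is the 27 November full. The incremental job on the 30th is skipped.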

Commvault backup system architecture.

The backup system runs mainly on VMware virtualization: the CommServe, Media Agent, and proxy servers are deployed as virtual machines. If the customer uses our hardware, backups are stored on a Huawei OceanStor 5500 V3 storage system. Separate Media Agents on physical servers are used to back up customers' own storage systems and to write backups to tape libraries.

What matters to customers

In our experience, customers who choose Commvault for backup pay attention to the following points.

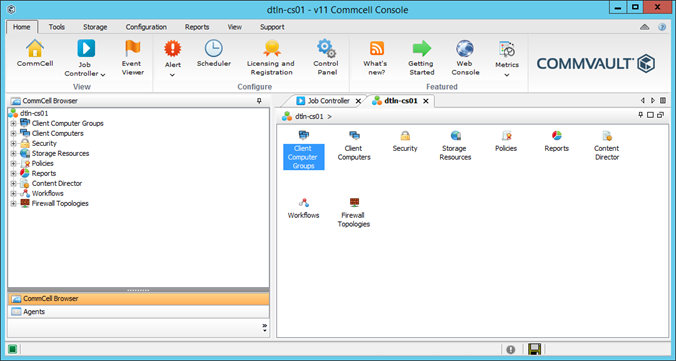

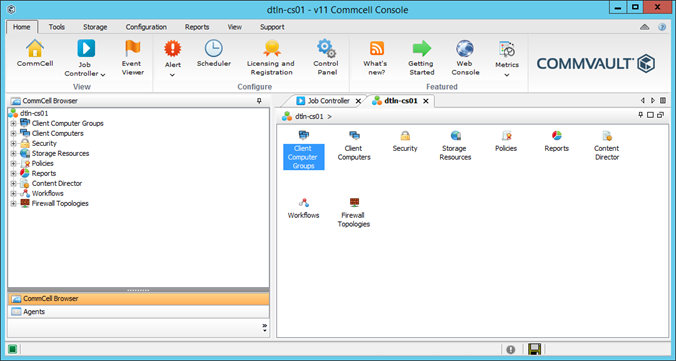

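Console. Customers want to manage backups themselves. All the main operations are available in the Commvault console:

- adding and removing servers for backup;

- configuring the iData Agent;

- creating and manually launching jobs;

- restoring data from backups on their own;

- setting up alerts about the status of backup jobs;

- controlling access to the console by user role and group.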

Deduplication. Deduplication finds and eliminates duplicate data blocks during the backup process. This saves storage space and reduces the amount of data transferred, which lowers the bandwidth requirements for the channel. Without deduplication, backups would take up two to three times as much space as the source data.

In Commvault, deduplication can be configured either on the client side or on the Media Agent side. With client-side deduplication, non-unique blocks are never sent to the Media Agent Server at all. With Media Agent-side deduplication, duplicate blocks are transferred but then discarded instead of being written to the storage system.

This block-level deduplication is based on hash functions. Each block is assigned a hash, which is stored in a hash table: a kind of database called the Deduplication Database (DDB). When data is transferred, each block's hash is looked up in the DDB. If the hash is already there, the block is marked as non-unique. It is then either not sent to the Media Agent Server (client-side deduplication) or not written to the storage system (Media Agent-side deduplication).
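The lookup logic can be sketched as a simplified Python model. This is not Commvault's implementation. It assumes fixed-size blocks, SHA-256 as the hash, and an in-memory `set` standing in for the DDB; the block size, class name, and counters are illustrative. The sketch contrasts the two placements: with client-side deduplication a duplicate block never crosses the network, while with Media Agent-side deduplication it is sent and then dropped before the write.

```python
import hashlib

BLOCK_SIZE = 4  # hypothetical, tiny so the example is easy to follow


def split_blocks(data: bytes, size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut the stream into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


class DedupStore:
    """Toy model: `ddb` stands in for the Deduplication Database of block hashes."""

    def __init__(self) -> None:
        self.ddb: set[str] = set()
        self.bytes_sent = 0     # block data sent from client to Media Agent
        self.bytes_written = 0  # block data actually written to storage

    def _is_new(self, block: bytes) -> bool:
        digest = hashlib.sha256(block).hexdigest()
        if digest in self.ddb:
            return False  # hash already known: non-unique block
        self.ddb.add(digest)
        return True

    def backup(self, data: bytes, client_side: bool) -> None:
        for block in split_blocks(data):
            if client_side:
                # Hash is checked before sending; duplicate data never leaves the client.
                # (Hash lookups themselves still cost traffic; only block data is counted.)
                if self._is_new(block):
                    self.bytes_sent += len(block)
                    self.bytes_written += len(block)
            else:
                # Media Agent side: every block is sent, duplicates are dropped before the write.
                self.bytes_sent += len(block)
                if self._is_new(block):
                    self.bytes_written += len(block)
```

For the stream `b"AAAABBBBAAAAAAAA"`, both modes write 8 bytes to storage. Client-side mode sends only 8 bytes over the network, while Media Agent-side mode sends all 16.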

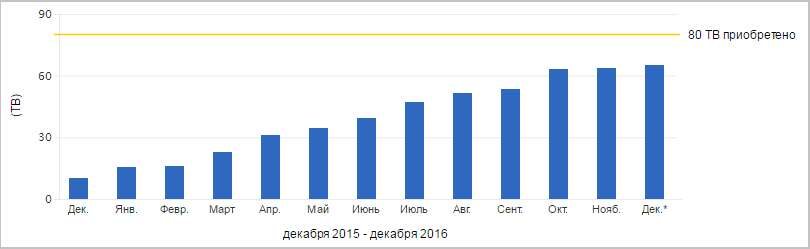

Thanks to deduplication, we save up to 78% of storage space. The storage currently holds 166.4 TB; without deduplication, we would have to store 744 TB.
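A quick check of the arithmetic. The two volumes give the quoted savings and a deduplication ratio of roughly 4.5:1:

```latex
\text{savings} = 1 - \frac{166.4\ \text{TB}}{744\ \text{TB}} \approx 1 - 0.224 = 0.776 \approx 78\%,
\qquad
\frac{744\ \text{TB}}{166.4\ \text{TB}} \approx 4.5
```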

Access control. Commvault lets you set different levels of access to backup management. So-called roles determine which actions a user is allowed to perform on backup objects. For example, developers may only be allowed to restore a database server to a specific location. An administrator, by contrast, can also launch an unscheduled backup of the same server and add new users.
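A role model of this kind can be sketched as a deny-by-default mapping from roles to permitted actions, plus an optional restriction on restore targets. The role names, actions, and host names below are hypothetical and only mirror the example in the text. They are not Commvault's built-in roles or permissions.

```python
from enum import Enum, auto


class Action(Enum):
    RESTORE = auto()
    RUN_BACKUP = auto()    # launch an unscheduled backup
    MANAGE_USERS = auto()


# Hypothetical roles modelled on the example in the text.
ROLES: dict[str, frozenset[Action]] = {
    "developer": frozenset({Action.RESTORE}),
    "administrator": frozenset({Action.RESTORE, Action.RUN_BACKUP, Action.MANAGE_USERS}),
}

# Hypothetical per-role list of allowed restore destinations; absent means unrestricted.
RESTORE_TARGETS: dict[str, frozenset[str]] = {
    "developer": frozenset({"dev-sql-01"}),
}


def is_allowed(role: str, action: Action) -> bool:
    """Deny by default: unknown roles get no permissions."""
    return action in ROLES.get(role, frozenset())


def can_restore_to(role: str, target: str) -> bool:
    """A restore needs the RESTORE permission and, if restricted, an allowed target."""
    if not is_allowed(role, Action.RESTORE):
        return False
    allowed = RESTORE_TARGETS.get(role)
    return allowed is None or target in allowed
```

Denying unknown roles by default means that forgetting to configure a user never grants access by accident.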

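Encryption. With Commvault, data can be encrypted during backup in the following ways:

- on the client agent side: the data is transferred to the backup system already encrypted;

- on the Media Agent side;

- at the channel level: the data is encrypted on the client agent side and decrypted on the Media Agent Server.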

Available encryption algorithms: Blowfish, GOST, Serpent, Twofish, 3-DES, and AES (recommended by Commvault).
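The three options differ in where the data is protected. The sketch below encodes one reading of the list:

- client-side encryption covers data both in transit and at rest;
- Media Agent-side encryption covers it only at rest;
- channel-level encryption covers it only in transit.

The enum names are hypothetical, and the in-transit/at-rest flags are our interpretation, not values taken from Commvault documentation.

```python
from enum import Enum


class EncryptionMode(Enum):
    CLIENT = "client"            # encrypted by the client agent
    MEDIA_AGENT = "media_agent"  # encrypted by the Media Agent before writing
    CHANNEL = "channel"          # encrypted on the client, decrypted on the Media Agent


def protection(mode: EncryptionMode) -> dict[str, bool]:
    """Which legs of the data path carry ciphertext under each mode (assumed reading)."""
    in_transit = mode in (EncryptionMode.CLIENT, EncryptionMode.CHANNEL)
    at_rest = mode in (EncryptionMode.CLIENT, EncryptionMode.MEDIA_AGENT)
    return {"in_transit": in_transit, "at_rest": at_rest}


if __name__ == "__main__":
    for mode in EncryptionMode:
        print(mode.value, protection(mode))
```

Under this reading, client-side encryption is the only one of the three options that covers both the network leg and the stored copy.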

Some statistics

As of mid-December, we were backing up 27 customers with Commvault. Most of them are retailers and financial organizations. The total volume of source data is 65 TB.

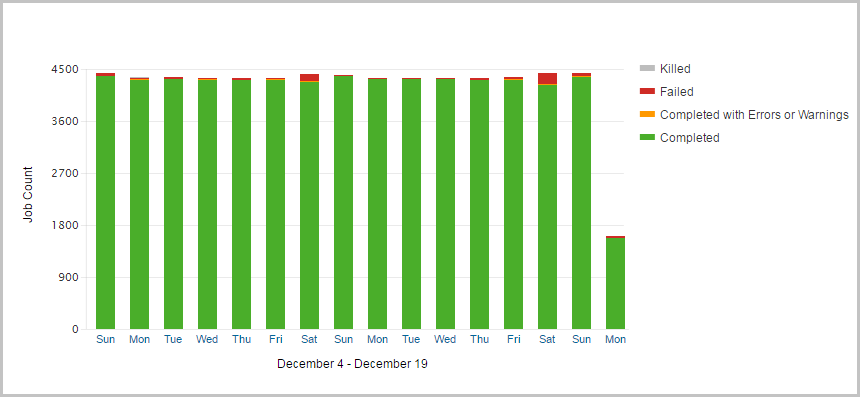

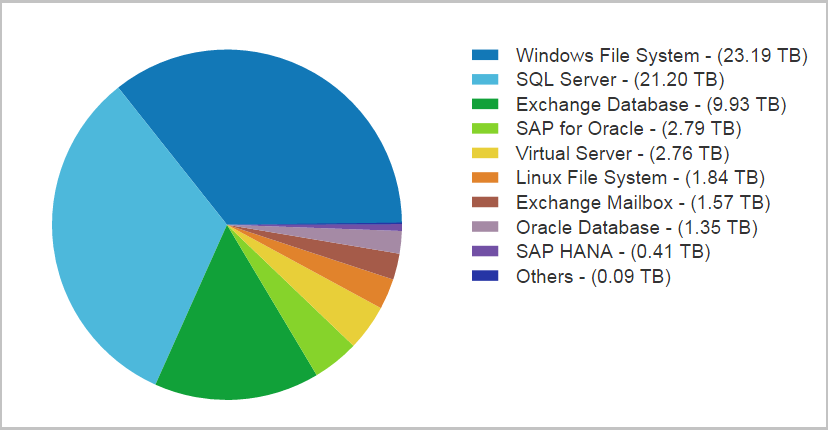

About 4,400 jobs are completed per day. The charts show statistics on completed jobs for the last 16 days.

The workloads most often backed up with Commvault are the Windows file system, SQL Server databases, and Exchange databases.

And now for the promised cases. They are anonymized (NDA, you know :)), but they give an idea of why and how customers use Commvault-based backup. All the cases below are for customers who use our shared backup system, that is, common software, Media Agent Servers, and storage systems.

Customer. Russian trading and manufacturing company of the confectionery market with a distributed network of branches throughout Russia.

Task. Set up backup for Microsoft SQL Server databases, file servers, application servers, and Exchange Online mailboxes.

The source data is located in offices across Russia (more than 10 cities). It had to be backed up to the DataLine site and be restorable to any of the company's offices.

The customer also wanted full, independent control over backups, with access control.

Retention: one year. For Exchange Online: 3 months for online copies and one year for archives.

Solution. For the databases, an auxiliary copy to the second site was set up: the last full backup of each month is transferred to the other site and kept there for a year.

The links from the customer's remote offices were not always good enough to back up and restore within the desired time window. To reduce the amount of transmitted traffic, client-side deduplication was configured. As a result, full backup times became acceptable despite the distance to the offices. For example, a full backup of a 131 GB database from St. Petersburg takes 16 minutes, and a 340 GB database from Yekaterinburg is backed up in 1 hour 45 minutes.
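To put these timings in perspective, here is a small helper that converts them into an average effective rate. It assumes decimal units (1 GB = 1000 MB) and divides the logical database size by wall-clock time. Because deduplication runs on the client, this rate does not directly tell you how much data actually crossed the link. The function name is ours.

```python
def effective_rate_mb_s(size_gb: float, hours: int = 0, minutes: int = 0) -> float:
    """Average logical throughput in MB/s (decimal units) for a job of the given duration."""
    seconds = hours * 3600 + minutes * 60
    if seconds <= 0:
        raise ValueError("duration must be positive")
    return size_gb * 1000 / seconds


if __name__ == "__main__":
    spb = effective_rate_mb_s(131, minutes=16)           # St. Petersburg
    ekb = effective_rate_mb_s(340, hours=1, minutes=45)  # Yekaterinburg
    print(f"St. Petersburg: {spb:.0f} MB/s, Yekaterinburg: {ekb:.0f} MB/s")
```

This works out to about 136 MB/s for St. Petersburg and about 54 MB/s for Yekaterinburg.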

Using roles, the customer configured different permissions for its developers: backup only or restore only.

Customer. Russian network of children's goods stores.

Task. Set up backup for:

- a high-load MS SQL cluster based on 4 physical servers;

- virtual machines hosting a website, application servers, 1C, Exchange, and file servers.

All of this infrastructure is distributed between the OST and NORD sites.

RPO: 30 minutes for the SQL servers, 1 day for everything else.
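An RPO target is a constraint on the backup schedule. Suppose a failure happens just before a job finishes: only the previous job's recovery point is usable. The worst-case loss is then roughly the interval between jobs plus the job's duration. The sketch below checks a schedule against the RPOs from this case, using that conservative estimate. The function names and the schedule values in the usage note are hypothetical.

```python
from datetime import timedelta


def worst_case_loss(interval: timedelta, job_duration: timedelta = timedelta(0)) -> timedelta:
    """Conservative worst-case data loss: a failure just before the next job finishes
    leaves only the previous job's recovery point."""
    return interval + job_duration


def meets_rpo(interval: timedelta, rpo: timedelta,
              job_duration: timedelta = timedelta(0)) -> bool:
    """True if the schedule keeps worst-case data loss within the RPO."""
    return worst_case_loss(interval, job_duration) <= rpo


# RPO targets from this case.
RPO = {"sql": timedelta(minutes=30), "other": timedelta(days=1)}
```

Consider hypothetical SQL backups that take 5 minutes each. Running them every 15 minutes keeps worst-case loss at 20 minutes and meets the 30-minute RPO. Running them every 30 minutes does not. This is why tight RPOs are typically met with frequent transaction log backups rather than frequent full backups.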

Retention: from 2 weeks to 30 days, depending on the data type.

Solution. The customer chose a combination of Veeam and Commvault. Veeam is used for file backup from our cloud, while database servers, Active Directory, mail, and physical servers are backed up with Commvault.

To achieve high backup speeds, the customer dedicated a separate network adapter to backup traffic on the physical MS SQL servers. A full backup of a 3.4 TB database takes 2 hours 20 minutes, and a full restore takes 5 hours 5 minutes.
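Converted into average rates (decimal units, 1 TB = 10^6 MB), these figures give the following. The restore runs at a little under half the backup rate:

```latex
v_{\text{backup}} = \frac{3.4 \times 10^{6}\ \text{MB}}{140 \times 60\ \text{s}} \approx 405\ \text{MB/s},
\qquad
v_{\text{restore}} = \frac{3.4 \times 10^{6}\ \text{MB}}{305 \times 60\ \text{s}} \approx 186\ \text{MB/s}
```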

The customer had a large amount of source data (almost 18 TB). Writing it to a tape library, as the customer had done before, would require several dozen cartridges, which would complicate management of the entire backup system. Therefore, in the final implementation, the tape library was replaced with a storage system.
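A back-of-the-envelope estimate shows how the cartridge count grows with retention. The inputs are assumptions, not figures from the project: LTO-6 native capacity of 2.5 TB per cartridge and four retained full copies. The sketch ignores compression and incrementals, and it does not let a full copy share a partly filled cartridge.

```python
import math


def cartridges_needed(full_size_tb: float, retained_fulls: int, cartridge_tb: float) -> int:
    """Cartridges needed to hold `retained_fulls` full copies.

    Simplification: each full copy occupies whole cartridges (no sharing between copies).
    """
    per_full = math.ceil(full_size_tb / cartridge_tb)
    return per_full * retained_fulls
```

With these assumed inputs, `cartridges_needed(18, 4, 2.5)` gives 8 cartridges per full copy and 32 in total, before any incrementals are added.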

Customer. Chain of supermarkets in the CIS

Task. The customer wanted to back up and restore SAP systems hosted in our cloud. RPO: 15 minutes for the SAP HANA databases and 24 hours for the application server virtual machines. Retention: 30 days. RTO: 1 hour in case of a failure and 4 hours for an on-demand restore.

Solution. SAP HANA was configured to back up data files and log files at a specified frequency. Log files are backed up every 15 minutes or whenever they reach a certain size.
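The trigger condition (time or size, whichever comes first) can be sketched as below. The 15-minute interval comes from the text; the size threshold and the function name are hypothetical. In SAP HANA itself, the time-based interval for log backups is controlled by the `log_backup_timeout_s` parameter, whose default is 900 s. Check the SAP HANA documentation for your release before relying on this.

```python
from datetime import datetime, timedelta

LOG_INTERVAL = timedelta(minutes=15)  # from the case description
LOG_SIZE_LIMIT_MB = 1024              # hypothetical size threshold


def log_backup_due(last_backup: datetime, now: datetime, pending_log_mb: float,
                   interval: timedelta = LOG_INTERVAL,
                   size_limit_mb: float = LOG_SIZE_LIMIT_MB) -> bool:
    """True when either the time interval has elapsed or enough log has accumulated."""
    return now - last_backup >= interval or pending_log_mb >= size_limit_mb
```

Either condition alone is enough. The time limit bounds data loss during quiet periods, which is what makes the 15-minute RPO achievable. The size limit keeps individual log backups small under heavy load.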

To reduce database recovery time, we configured two-tier backup storage based on a storage system and a tape library. Online copies are kept on disk and can be restored at any time during the week. Once a backup is older than one week, it is moved to the archive on the tape library, where it is kept for another 30 days.
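The lifecycle can be expressed as a function of a backup's age. The thresholds (one week on disk, then another 30 days on tape) come from the text; the tier labels and the `storage_tier` function are hypothetical.

```python
from datetime import timedelta

DISK_PERIOD = timedelta(weeks=1)  # online copies on the disk storage system
TAPE_PERIOD = timedelta(days=30)  # additional retention in the tape archive


def storage_tier(age: timedelta) -> str:
    """Where a backup of the given age lives: 'disk', 'tape', or 'expired'."""
    if age < timedelta(0):
        raise ValueError("age cannot be negative")
    if age <= DISK_PERIOD:
        return "disk"
    if age <= DISK_PERIOD + TAPE_PERIOD:
        return "tape"
    return "expired"
```

As described, a backup is therefore kept for up to 37 days in total: 7 on disk plus 30 on tape.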

A full backup of one of the databases (181 GB) takes 1 hour 54 minutes.

The backup was configured using SAP Backint, the interface for integrating third-party backup systems with SAP HANA and SAP HANA Studio. As a result, backups can be managed directly from the SAP console. This makes life easier for SAP administrators, who don't have to get used to a new interface.

The customer can also manage backups through the standard Commvault console.

That's all for today. Ask questions in the comments.

Storage system backup based on Commvault in OST-2 data center.

How it works?

Commvault is a platform for backing up applications, databases, file systems, virtual machines and physical servers. At the same time, the source data can be on any site: here, on the client side, in another commercial data center or cloud.

The client installs an iData Agent for the backup objects and configures it in accordance with the required backup policies. The iData Agent collects the necessary data, compresses, deduplicates, encrypts and transfers them to the DataLine backup system.

')

Proxy servers provide connectivity of the client network and our network, isolation of the channels through which data is transmitted.

On the DataLine side, data from the iData Agent takes up the Media Agent Server and sends it to storage on storage systems, tape libraries, etc. CommServe controls all of this. In our configuration, the main control server is located at the OST site, the backup server at the NORD site.

By default, client data is added to one site, but you can back up to two locations at once or set up a schedule for transferring backups to a second site. This option is called auxiliary copy of data. For example, all full backups at the end of the month will be automatically duplicated or moved to the second site.

Scheme of operation of the backup system Commvault.

The backup system works primarily on VMware virtualization: CommServe, Media Agent and Proxy servers are deployed on virtual machines. If the client uses our equipment, then the backups are placed on the storage system Huawei OceanStor 5500 V3. To back up client storage systems and store backups on tape libraries, separate Media Agents are used on physical servers.

What is important to customers?

From our practice, customers who choose Commvault for backup pay attention to the following points.

Console. Customers want to manage backups themselves. All main operations are available in the Commvault console:

- add and remove servers for backup;

- iData Agent setup;

- creating and manually launching tasks;

- self backup backup;

- setting up alerts about the status of backup tasks;

- access control to the console depending on the role and group of users.

Deduplication Deduplication allows you to find and delete duplicate data blocks during the backup process. Thus, it helps save storage space and reduces the amount of data transferred, reducing channel speed requirements. Without deduplication, backups would take up two to three times the amount of raw data.

In the case of Commvault, deduplication can be configured on the client side or on the Media Agent side. In the first case, non-unique data blocks will not even be transferred to the Media Agent Server. In the second, the duplicate block is discarded and is not written to the storage system.

Such block deduplication is based on hash functions. Each block is assigned a hash, which is stored in a hash table, a kind of database (Deduplication Database, DDB). When transferring data, the hash “breaks through” on this base. If such a hash is already in the database, then the block is marked as non-unique and is not transmitted to the Media Agent Server (in the first case) or is not written to the data storage system (in the second).

Thanks to deduplication, we manage to save up to 78% of storage space. Now on the storage is stored 166.4 TB. Without deduplication, we would have to store 744 TB.

The ability to distinguish between rights. Commvault has the ability to set different levels of access to backup management. The so-called “roles” determine what actions the user will be allowed with respect to the backup objects. For example, developers can only restore a server with a database to a specific location, and an administrator can launch an extraordinary backup for the same server, add new users.

Encryption. Encrypting data during backup via Commvault can be done in the following ways:

- on the client agent side: in this case, the data will be transferred to the backup system in encrypted form;

- on the side of the Media Agent;

- at the channel level: data is encrypted on the client agent side and decrypted on the Media Agent Server.

Available encryption agorhythms: Blowfish, GOST, Serpent, Twofish, 3-DES, AES (recommended by Commvault).

Some statistics

In mid-December, with the help of Commvault, we backed up 27 clients. Most of them are retailers and financial organizations. The total amount of the original copy data is 65 TB.

About 4,400 tasks are completed per day. Below are statistics on completed tasks for the last 16 days.

Most of all through Commvault backup Windows File System, SQL Server and Exchange databases.

And now the promised cases. Although impersonal (NDA says hello :)), they give an idea of why and how clients use a backup based on Commvault. Below are cases for customers who use a single backup system, that is, common software, Media Agent Servers and storage systems.

Case 1

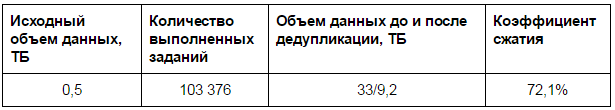

Customer. Russian trading and manufacturing company of the confectionery market with a distributed network of branches throughout Russia.

Task. Backup organization for Microsoft SQL databases, file servers, application servers, Exchange Online mailboxes.

Baseline data are located in offices throughout Russia (more than 10 cities). You need to backup to the DataLine site with the subsequent data recovery in any of the company's offices.

At the same time, the client wanted complete independent control with access control.

Depth of storage - a year. For Exchange Online - 3 months for online copies and a year for archives.

Decision. An additional copy was set up for the second site for the databases: the last full backup of the month is transferred to another site and the year is stored there.

The quality of the channels from the client’s remote offices did not always make it possible to backup and restore at the optimum time. To reduce the amount of transmitted traffic, deduplication was configured on the client side. Thanks to her, the full backup time was acceptable given the remoteness of the offices. For example, a full backup of a 131 GB database from St. Petersburg is done in 16 minutes. From Ekaterinburg, 340 GB database backed up 1 hour 45 minutes.

Using roles, the client has configured different permissions for its developers: only for backup or restore.

Case 2

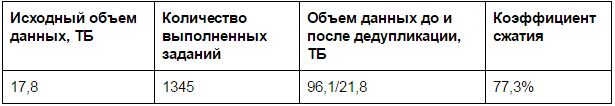

Customer. Russian network of children's goods stores.

Task. Backup organization for:

high-load MS SQL cluster based on 4 physical servers;

virtual machines with a site, application servers, 1C, Exchange and file servers.

All of the specified client infrastructure is spaced between the OST and NORD sites.

RPO for SQL servers - 30 minutes, for the rest - 1 day.

Depth of storage - from 2 weeks to 30 days depending on the type of data.

Decision. Chose a combination of solutions based on Veeam and Commvault. For file backup from our cloud, Veeam is used. Database servers, Active Directory, mail and physical servers are backed up via Commvault.

To achieve high backup speeds, the client allocated a separate network adapter for backup servers on physical servers with MS SQL. A full backup of a 3.4 TB database takes 2 hours and 20 minutes, and a full recovery takes 5 hours and 5 minutes.

The client had a large amount of raw data (almost 18 TB). If you add data to a tape library, as the client did before, you would need several dozen cartridges. This would complicate the management of the entire client backup system. Therefore, in the final implementation, the tape library was replaced with the storage system.

Case 3

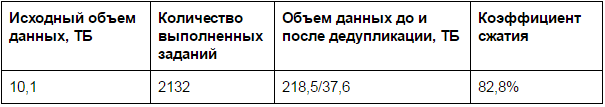

Customer. Chain of supermarkets in the CIS

Task. The customer wanted to back up and restore the SAP systems that were hosted in our cloud. For SAP HANA databases, RPO = 15 minutes, for virtual machines with RPO application servers = 24 hours. The storage depth is 30 days. In case of an accident, RTO = 1 hour, to restore a copy on request RTO = 4 hours.

Decision. HANA DB was configured to backup DATA-files and Log-files with a specified frequency. Log files are archived every 15 minutes or when a certain size is reached.

To reduce the recovery time of the database, we configured a two-level storage of backups based on the storage system and tape library. Online copies are added to the disks with the ability to restore at any time during the week. When the backup gets older than 1 week, it is moved to the archive, to the tape library, where it is stored for another 30 days.

A full backup of one of the 181 GB databases is done in 1 hour 54 minutes.

When configuring the backup, the SAP backint interface was used to integrate third-party backup systems with SAP HANA Studio. Therefore, backups can be managed directly from the SAP console. This simplifies the lives of SAP administrators who do not need to get used to the new interface.

Backup management is also available to the client through the standard Commvault client console.

That's all for today. Ask questions in the comments.

Source: https://habr.com/ru/post/318188/
