A selection of machine learning frameworks

In recent years, machine learning has entered the mainstream with unprecedented force. The trend is fueled not only by low-cost cloud environments and the availability of powerful graphics cards for such computations, but also by the sheer number of machine learning frameworks now available. Almost all of them are open source, but more importantly, they are designed to abstract away the hardest parts of machine learning, making these technologies accessible to a much wider range of developers. Below is a selection of machine learning frameworks, some newly created and some reworked over the past year. If you read English, the original article is available here.

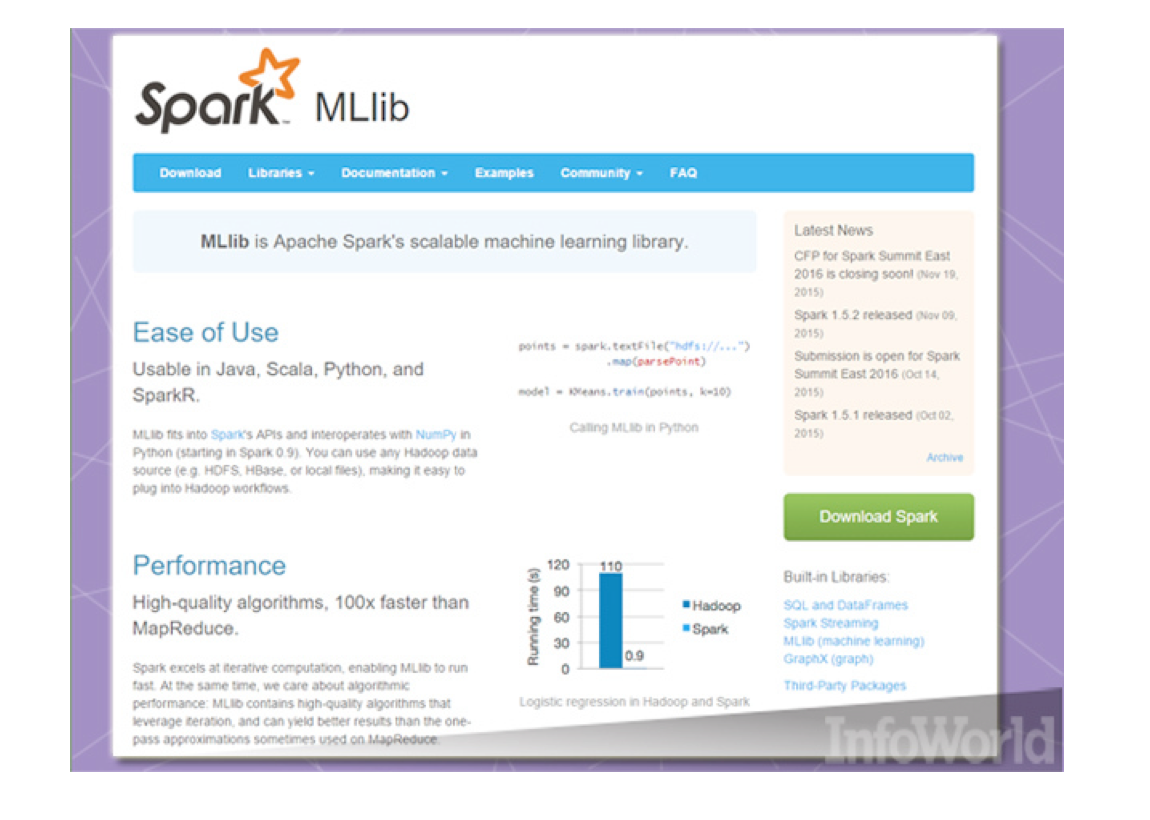

Apache Spark MLlib

Apache Spark may be best known as part of the Hadoop family, but this in-memory data processing framework was born outside Hadoop and continues to earn a reputation beyond that ecosystem. Spark has become a familiar machine learning tool thanks to its growing library of algorithms that can be applied quickly to in-memory data.


Spark is far from standing still: its algorithms are constantly being expanded and revised. Release 1.5 added many new algorithms, improved existing ones, and strengthened MLlib support in Python, a major platform for math and statistics users. Spark 1.6, among other things, makes it possible to suspend and resume Spark ML jobs via persistent pipelines.

Apache Singa

"Deep learning" frameworks are used for heavy-duty machine learning tasks such as natural language processing and image recognition. Singa, an open source framework designed to make it easier to train deep learning models on large volumes of data, was recently accepted into the Apache Incubator.

Singa provides a simple programming model for training networks across a cluster of machines and supports many standard types of training tasks: convolutional neural networks, restricted Boltzmann machines, and recurrent neural networks. Models can be trained synchronously (one after the other) or asynchronously (side by side), depending on what suits the problem best. Singa also simplifies cluster setup with Apache Zookeeper.
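To make the difference between the two update schemes concrete, here is a minimal sketch in plain Python. It is not Singa's API: the toy model (fitting y = w * x), the functions `gradient`, `train_sync` and `train_async`, and the way asynchrony is simulated (every worker in a round starts from the same, possibly stale, copy of the parameter and applies its update without waiting for the others) are all illustrative assumptions.

```python
# Toy data-parallel training: fit y = w * x with squared loss.
# Each "shard" is the slice of the training data held by one worker.

def gradient(w, shard):
    """Gradient of mean((w * x - y) ** 2) over one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def train_sync(shards, lr=0.05, steps=100):
    w = 0.0
    for _ in range(steps):
        # Lockstep: every worker computes its gradient on the same w,
        # the gradients are averaged, and a single update is applied.
        grads = [gradient(w, shard) for shard in shards]
        w -= lr * sum(grads) / len(grads)
    return w


def train_async(shards, lr=0.05, steps=100):
    w = 0.0
    for _ in range(steps):
        # Simulated asynchrony: all workers read w at the start of the round...
        stale_w = w
        for shard in shards:
            # ...and each applies its own update without waiting for the others,
            # even though w has already been changed by earlier workers.
            w -= lr * gradient(stale_w, shard)
    return w


shards = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0), (4.0, 8.0)]]
print(train_sync(shards), train_async(shards))  # both close to the true w = 2
```

Both variants converge to w = 2 on this data, but the asynchronous one takes larger, staler steps: each round effectively applies the sum of the workers' gradients rather than their average, so it diverges at a smaller learning rate than the synchronous version.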

Caffe

Caffe is a deep learning framework "made with expression, speed, and modularity in mind." It was originally created for computer vision projects, but has since grown and is now used for other tasks as well, including speech recognition and multimedia work.

Caffe's main advantage is speed. The framework is written entirely in C++, supports CUDA, and can switch processing between the CPU and the GPU as needed. The distribution includes a set of free, open source reference models for common classification tasks, and the Caffe user community has created many more.

Microsoft Azure ML Studio

Given the enormous amount of data and computing power machine learning requires, the cloud is an ideal environment for ML applications. Microsoft has equipped Azure with its own pay-as-you-go machine learning service, Azure ML Studio, available with monthly and hourly pricing as well as a free tier. The HowOldRobot project, for example, was built with this system.

Azure ML Studio lets you create and train models and then expose them as APIs for other services to consume. A single user account gets up to 10 GB of storage, although you can also connect your own Azure storage. A wide range of algorithms from Microsoft and third parties is available. You don't even need an account to try the service: you can log in anonymously and use Azure ML Studio for up to eight hours.

Amazon Machine Learning

Amazon has a standard approach to cloud services: first it offers an interested audience basic functionality, then that audience builds something with it, and the company finds out what people really need.

The same is true of Amazon Machine Learning. The service connects to data stored in Amazon S3, Redshift, or RDS and can perform binary classification, multiclass classification, and regression on that data to build a model. However, the service is tightly bound to Amazon: it works only with data held in the company's own storage, models cannot be imported or exported, and training datasets cannot exceed 100 GB. Still, it is a good tool to start with, and it illustrates how machine learning is turning from a luxury into a practical tool.

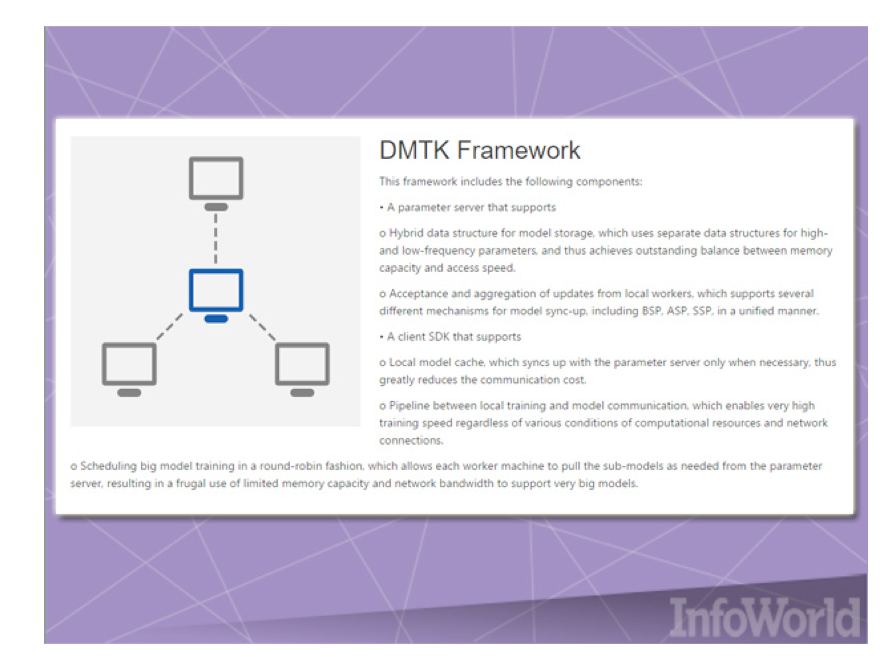

Microsoft Distributed Machine Learning Toolkit

The more computers you can throw at a machine learning problem, the better. But tying together a large fleet of machines and building ML applications that run efficiently on them can be a daunting task. DMTK (Distributed Machine Learning Toolkit) is designed to solve the problem of distributing various ML operations across a cluster of systems.

DMTK is meant as a framework rather than a full-fledged out-of-the-box solution, so it ships with only a small number of algorithms. But its architecture lets you extend it and get the most out of clusters with limited resources. For example, each node in the cluster keeps a local cache, which reduces traffic to the central server node that supplies task parameters on request.
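The caching idea can be sketched as a tiny parameter server in plain Python. This is not DMTK's API: the `ParameterServer` and `Worker` classes and the `refresh_every` staleness bound are hypothetical, chosen only to show how a node-local cache cuts down on requests to the central node.

```python
class ParameterServer:
    """Central node: stores the model parameters and serves them on request."""

    def __init__(self, params):
        self.params = dict(params)
        self.pulls = 0  # how many times workers fetched parameters

    def pull(self, key):
        self.pulls += 1
        return self.params[key]

    def push(self, key, delta):
        self.params[key] += delta


class Worker:
    """Cluster node that caches parameters locally between refreshes."""

    def __init__(self, server, refresh_every=10):
        self.server = server
        self.refresh_every = refresh_every  # hypothetical max staleness, in steps
        self.cache = {}  # key -> (value, step at which it was fetched)
        self.steps = 0

    def get(self, key):
        entry = self.cache.get(key)
        # Go to the server only on a cache miss or when the copy is too old.
        if entry is None or self.steps - entry[1] >= self.refresh_every:
            entry = (self.server.pull(key), self.steps)
            self.cache[key] = entry
        return entry[0]

    def update(self, key, delta):
        value = self.get(key)
        self.server.push(key, delta)
        # Apply our own update to the cached copy instead of re-pulling it.
        self.cache[key] = (value + delta, self.cache[key][1])
        self.steps += 1


server = ParameterServer({"w": 0.0})
worker = Worker(server, refresh_every=10)
for _ in range(100):
    worker.update("w", 0.1)
print(server.pulls, round(server.params["w"], 6))  # 10 pulls for 100 updates
```

With `refresh_every=10`, a worker that performs 100 updates pulls parameters from the server only 10 times (its updates are still pushed every step); the price is that, in a multi-worker cluster, it may compute with values that are up to 10 steps old.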

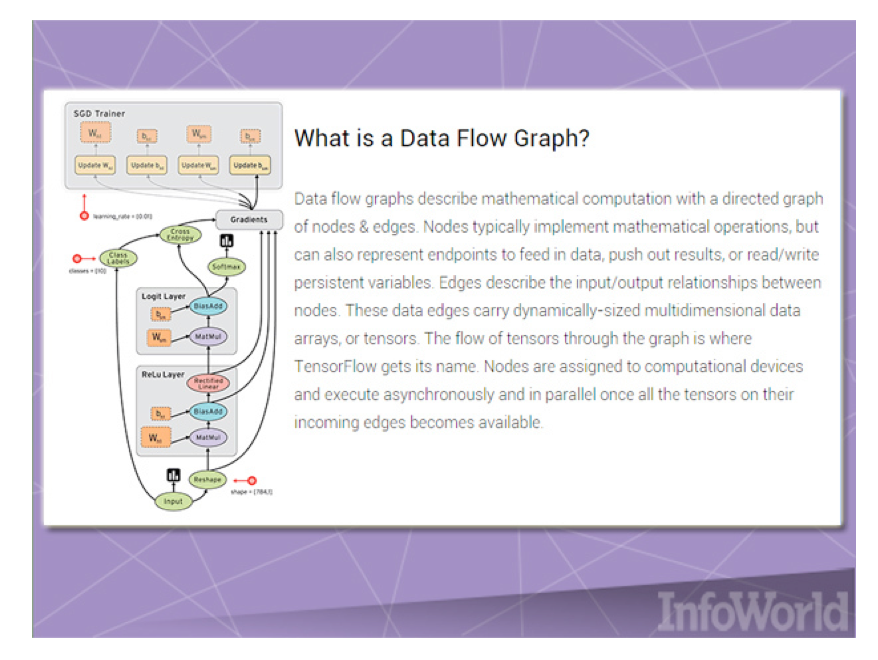

Google TensorFlow

Like Microsoft DMTK, Google TensorFlow is a machine learning framework designed to distribute computation across a cluster. As with Kubernetes, Google developed it to solve its own internal problems and eventually released it as an open source product.

TensorFlow implements data flow graphs, in which batches of data ("tensors") are processed by a series of algorithms described by a graph. The movement of data through the system is called a "flow", hence the name. Graphs can be assembled in C++ or Python and executed on a CPU or a GPU. Google's long-term plan is for TensorFlow to be developed further by third-party contributors.
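The dataflow idea can be illustrated with a miniature graph evaluator in plain Python. This is not TensorFlow's API: `Node`, `placeholder`, `add`, `mul` and `evaluate` are hypothetical names, and the "tensors" here are plain Python lists. The point is only that the graph is described first, and data then flows through it when a result is requested.

```python
class Node:
    """A vertex in the dataflow graph: an operation plus its input edges."""

    def __init__(self, name, op=None, inputs=()):
        self.name = name
        self.op = op  # None marks a placeholder that is fed at run time
        self.inputs = list(inputs)


def placeholder(name):
    return Node(name)


def add(a, b, name):
    return Node(name, lambda u, v: [p + q for p, q in zip(u, v)], [a, b])


def mul(a, b, name):
    return Node(name, lambda u, v: [p * q for p, q in zip(u, v)], [a, b])


def evaluate(node, feeds, cache=None):
    """Compute `node` by pulling values through the graph from its inputs."""
    cache = {} if cache is None else cache
    if node.name in feeds:
        return feeds[node.name]
    if node.op is None:
        raise KeyError(f"no value fed for placeholder {node.name!r}")
    if node.name not in cache:
        # Each node is computed at most once per evaluation, even if shared.
        args = [evaluate(inp, feeds, cache) for inp in node.inputs]
        cache[node.name] = node.op(*args)
    return cache[node.name]


# Build the graph first: y = x * w + b (element-wise)...
x, w, b = placeholder("x"), placeholder("w"), placeholder("b")
y = add(mul(x, w, "xw"), b, "y")

# ...then let data flow through it.
print(evaluate(y, {"x": [1, 2, 3], "w": [2, 2, 2], "b": [1, 1, 1]}))  # [3, 5, 7]
```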

Microsoft Computational Network Toolkit

Hot on the heels of DMTK, Microsoft released another machine learning tool, CNTK.

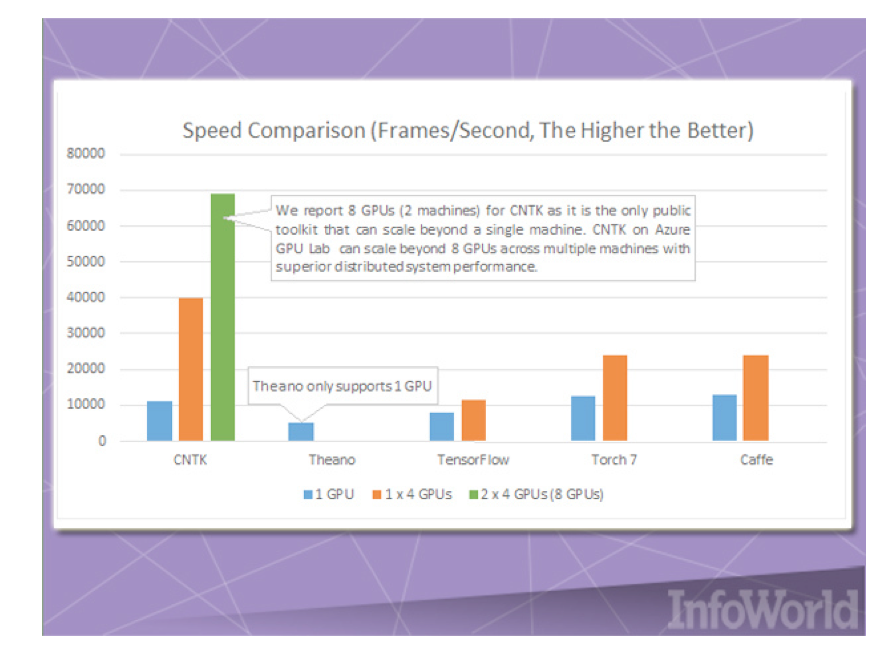

CNTK is similar to Google TensorFlow: it lets you build neural networks as directed graphs. Microsoft compares it to frameworks such as Caffe, Theano, and Torch. Its main advantage is speed, especially when it comes to using multiple CPUs and GPUs in parallel. Microsoft claims that using CNTK together with GPU-based Azure clusters sped up speech recognition training for its Cortana virtual assistant by an order of magnitude.

CNTK was originally developed as part of a speech recognition research program and offered as an open source project, but the company has since re-released it on GitHub under a much more permissive license.

Veles (Samsung)

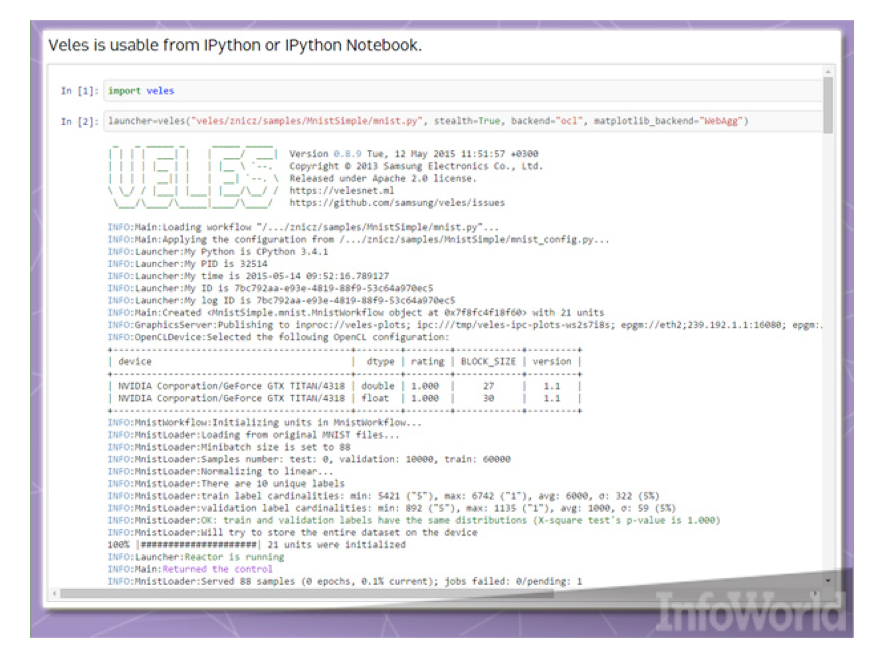

Veles is a distributed platform for building deep learning applications. Like TensorFlow and DMTK, it is written in C++, although Python is used to automate and coordinate the nodes. Data samples can be analyzed and automatically normalized before being fed to the cluster. A REST API lets you use trained models in production projects right away (provided your hardware is powerful enough).
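The article does not say which normalization Veles applies, so as an assumption the sketch below uses z-score normalization, one common way to normalize samples: each feature column is shifted to zero mean and scaled to unit variance. The function name is hypothetical; it also returns the per-column statistics so that the same transform can be reapplied to new samples at inference time.

```python
from statistics import mean, pstdev


def normalize_columns(rows):
    """Z-score each feature column: subtract its mean, divide by its std dev."""
    stats = []
    for column in zip(*rows):
        mu = mean(column)
        sigma = pstdev(column) or 1.0  # constant column: avoid dividing by zero
        stats.append((mu, sigma))
    normalized = [
        [(value - mu) / sigma for value, (mu, sigma) in zip(row, stats)]
        for row in rows
    ]
    return normalized, stats


samples = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
normalized, stats = normalize_columns(samples)
print(stats)       # per-column (mean, std) to reuse on new samples
print(normalized)  # each column now has mean 0 and std 1
```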

Veles uses Python for more than glue code. For example, IPython (now Jupyter), the data visualization and analysis tool, can visualize and analyze data from a Veles cluster. Samsung hopes that releasing the project as open source will help spur further development, including ports to Windows and Mac OS X.

Brainstorm

The Brainstorm project was developed by graduate students at IDSIA (Dalle Molle Institute for Artificial Intelligence) in Switzerland. It was created "to make deep neural networks faster, more flexible, and more fun." It already supports a range of recurrent neural networks, such as LSTM.

Brainstorm uses Python to implement two "handlers", its data management APIs: one for CPU computation with the NumPy library and one for GPU computation with CUDA. Most of the work is done in Python scripts, so don't expect a polished front-end interface unless you build one yourself. But the authors have far-reaching plans to "learn lessons from earlier open source projects" and use "new design elements compatible with various platforms and computing backends."
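The handler design can be sketched as a small backend interface in plain Python. The class and method names below are hypothetical, not Brainstorm's actual API, and the CPU handler uses nested lists rather than NumPy so the sketch runs without dependencies. The point is that layer code talks only to the handler, so a CUDA-backed handler implementing the same methods could be swapped in without touching the layer.

```python
class Handler:
    """Backend interface: layers call these methods instead of a math library."""

    def dot(self, a, b):
        raise NotImplementedError

    def add(self, a, b):
        raise NotImplementedError


class ListHandler(Handler):
    """CPU stand-in using nested lists (a real CPU handler might use NumPy)."""

    def dot(self, a, b):
        # Matrix product: each row of a times each column of b.
        return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

    def add(self, a, b):
        return [[x + y for x, y in zip(r1, r2)] for r1, r2 in zip(a, b)]


def dense_layer(handler, inputs, weights, bias):
    """Fully connected layer written only against the Handler interface."""
    return handler.add(handler.dot(inputs, weights), bias)


# A GPU handler implementing dot/add could be passed here instead.
print(dense_layer(ListHandler(), [[1.0, 2.0]], [[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5]]))  # [[1.5, 2.5]]
```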

mlpack 2

mlpack, a C++ machine learning library created in 2011 and designed for "scalability, speed, and ease of use," is used by many machine learning projects. It can be used through a set of command-line executables for quick-and-dirty "black box" operations, or through its C++ API for more sophisticated work.

mlpack 2.0 brings extensive refactoring and new algorithms, and reworks, speeds up, or removes old, inefficient ones. For example, the Boost library's random number generator was dropped in favor of C++11's native random number functions.

A long-standing drawback of mlpack is the lack of bindings for any language other than C++, so programmers working in other languages cannot use it until someone writes a wrapper. MATLAB support has been added, but projects like this benefit most when they are directly usable in the major environments where machine learning work is done.

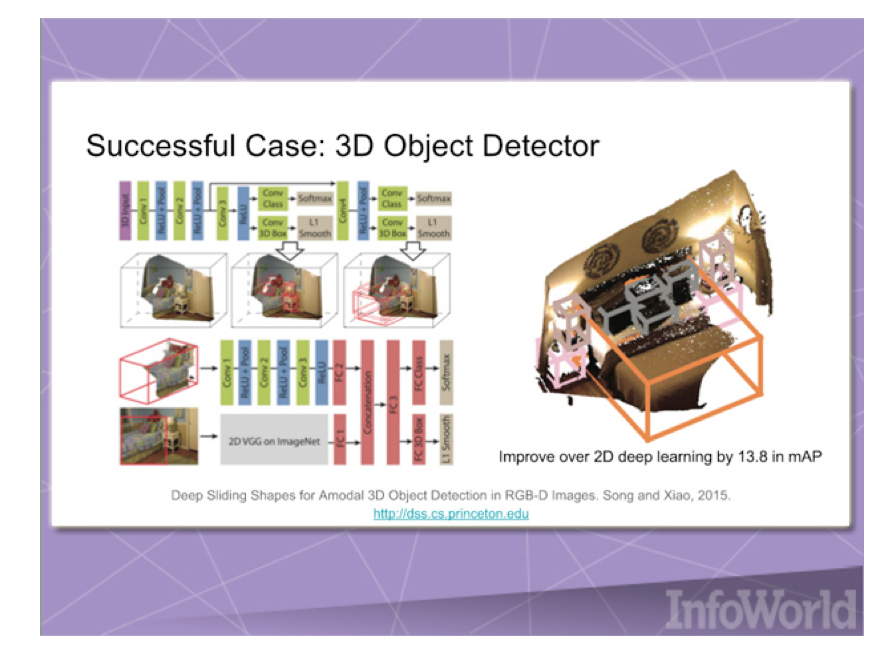

Marvin

Another relatively new product, Marvin is a neural network framework created by the Princeton Vision Group. It consists of just a handful of files written in C++ and CUDA. Despite its minimal code, Marvin ships with a good number of pretrained models, which can be reused with proper citation and contributed to via pull requests, just like the project's code.

Neon

Nervana builds a hardware and software platform for deep learning, and it offers the Neon framework as an open source project. Through plug-ins, Neon can run heavy computations on CPUs, GPUs, or Nervana's own hardware.

Neon is written in Python, with a few pieces in C++ and assembly language. So if you do scientific work in Python, or use another framework with Python bindings, you can start using Neon right away.

In conclusion, this is of course not an exhaustive list of popular frameworks. You surely have a dozen favorite tools of your own. Feel free to share your finds in the comments.

Source: https://habr.com/ru/post/317994/
