Monitoring freelance sites in Slack

In this article we would like to introduce our new platform, Job Freelance Scanner, which is free to use. When looking for a new project, you sometimes have to keep an eye on freelance sites for a suitable offer. This raises several problems: checking different sites, typing in the right keywords, filtering out duplicates; all of this takes time. On top of that, you have to remember whether you have already read a given offer. It would be convenient to monitor all of this with a single tool, in real time.

Our company develops integration solutions and regularly needs to look for offers in this area. This gave us a task: build a platform that monitors job offers from large sites. The platform should track offers containing given keywords and publish them to a Slack channel.

After implementing the application and running it locally, we decided to release it to the community: we provide free access and have published the source code. So, meet the Job Freelance Scanner platform.


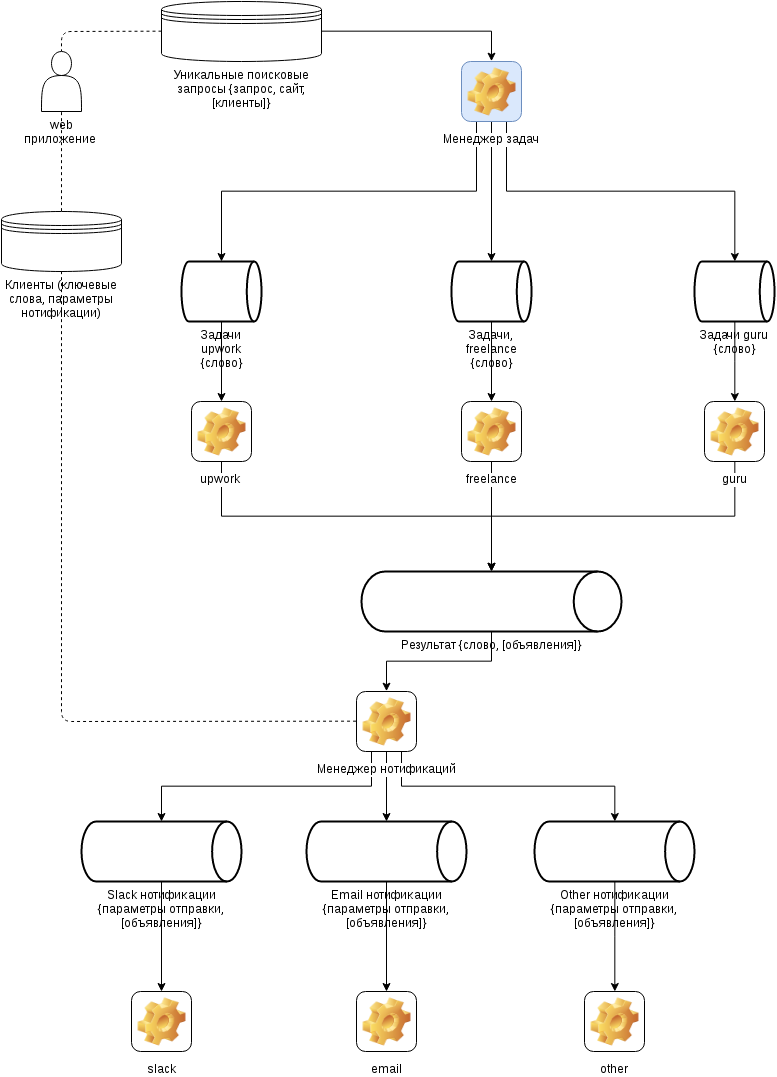

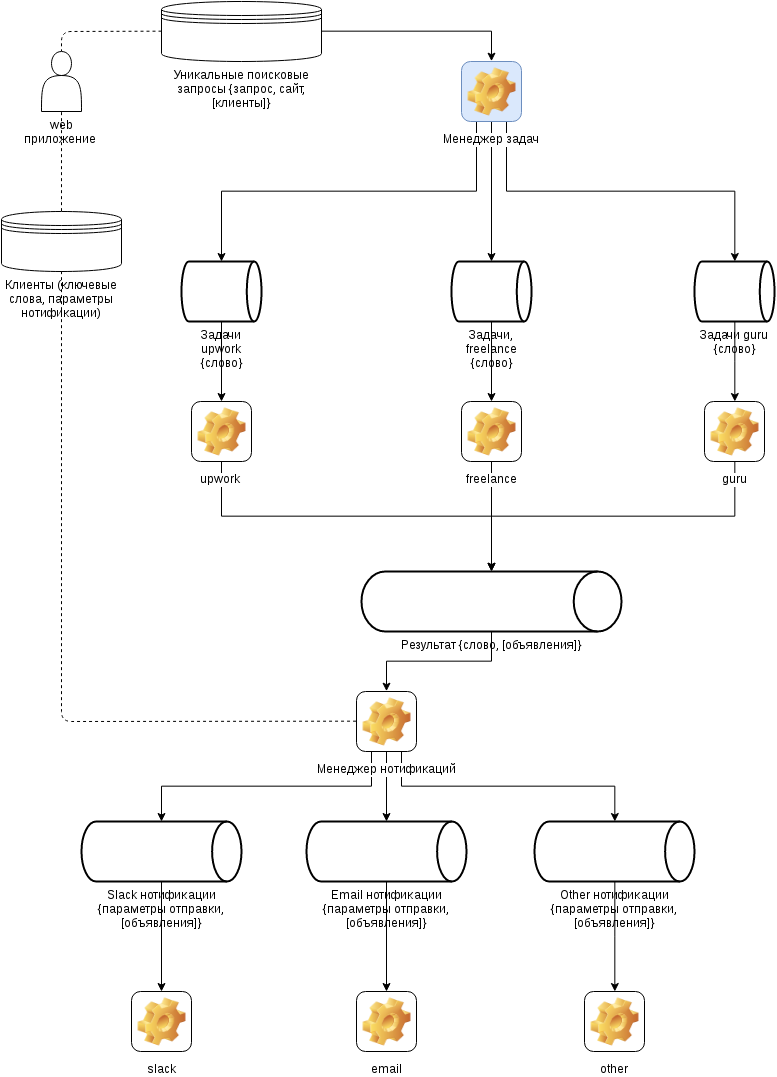



Features

Job Freelance Scanner, developed by Leadex Systems, is a platform for monitoring job offers from large global job search sites. It delivers offers as a single stream to a communication channel (Slack) from sites such as:

A new job offer is announced as soon as possible after it is published, so you can follow the latest offers and be among the first to respond. An example of a job offer message in a Slack channel:

Setup

To start using the platform, you need to:

- follow the link jobfreelancescanner.com;

- sign in with your Google or LinkedIn account;

- enter the search queries (keywords) for which job offers on the corresponding site will be monitored;

- configure the Slack notification settings on the adjacent tab;

- that's it: job offers will start arriving in the specified Slack channel.

Architecture

To solve this task, we developed the following architecture: at a fixed interval, the required sites are searched for job offers matching the keywords, using the sites' internal APIs. Offers that have already been published are filtered out, and the remaining ones are sent to subscribed clients.
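The "filter out already published offers" step can be illustrated with a minimal sketch. It assumes each offer carries a stable identifier from its source site; the `JobOffer` record, its fields and the in-memory set are hypothetical, since the article does not describe how the platform remembers published offers.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical job offer model: site name plus the site's own offer id.
record JobOffer(String site, String id, String title) {
    // Key that identifies the same offer across polling cycles.
    String key() {
        return site + ":" + id;
    }
}

// Remembers which offers were already published and lets only new ones through.
class OfferDeduplicator {
    private final Set<String> publishedKeys = new HashSet<>();

    List<JobOffer> filterNew(List<JobOffer> fetched) {
        List<JobOffer> fresh = new ArrayList<>();
        for (JobOffer offer : fetched) {
            // Set.add returns false when the key is already known.
            if (publishedKeys.add(offer.key())) {
                fresh.add(offer);
            }
        }
        return fresh;
    }
}
```

Calling `filterNew` after each polling cycle returns only offers whose key has not been seen before. Because the set lives in memory, it would be lost on restart, so a real service would need to persist it somewhere.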

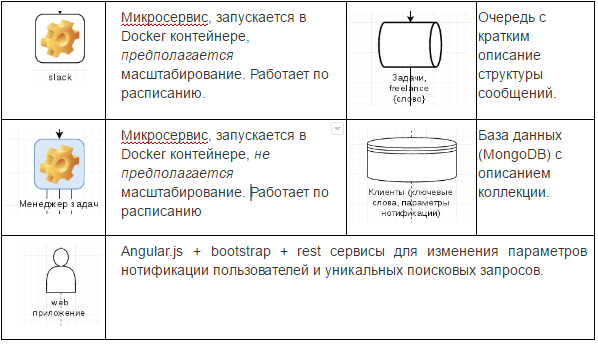

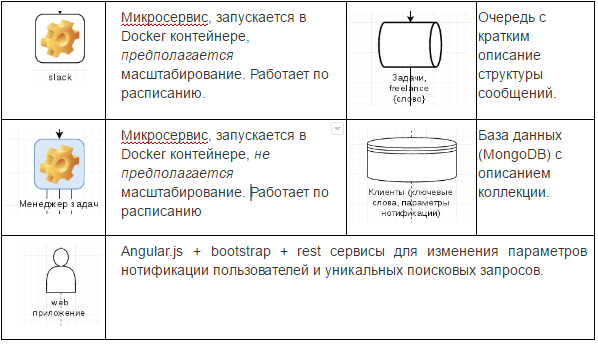

Legend:

As the diagram shows, the project consists of several independent modules. Apache ActiveMQ serves as the message broker for communication between the modules, and MongoDB stores client settings. Apache Camel is used to integrate the modules of our solution.

Slack and ActiveMQ integration example using Apache Camel

Let's take a closer look at the module that converts job offers into Slack messages and sends them using Apache Camel's Slack component. The core of this module is a route described in the Java DSL.

```java
// Inside a RouteBuilder's configure() method.
// Consume notifications from the ActiveMQ queue configured in the settings file.
from("activemq:queue://" + activemqQueue + "?username=" + activemqUser
        + "&password=" + activemqPass + "&disableReplyTo=true")
    .process(jsonToPojoProcessor)        // JSON -> Notification model
    .process(aggregateJobProcessor)      // Notification -> array of jobs; Slack settings -> header
    .split(body())                       // one exchange per job offer
    .process(jobToSlackMessageProcessor) // job offer -> Slack message
    .to("direct:slackOutput");

// Send each message to the Slack endpoint computed from the "uri" header.
from("direct:slackOutput")
    .recipientList(simple("slack:#${header.uri}"));
```

A route describes the path that messages take. It is defined by a starting point, marked by `from` (in our case, the ActiveMQ component), and an end point, marked by `to` (here the Slack component, reached through the `direct:slackOutput` route). Apache Camel provides many integration components (see the full list at camel.apache.org/components).

The activemq component is configured with a URI string. In our case, this string is built by inserting parameters taken from the settings file. Here is an example of the resulting URI:

```java
from("activemq:queue://slackNotify?username=admin&password=admin&disableReplyTo=true")
```

This defines the input endpoint using the generated URI string. Here `activemq` is the component name and `queue` is the message channel type, which can be either `queue` or `topic`. Next comes the channel name, `slackNotify`. The last part of the URI holds the endpoint parameters: `username` and `password` are the user's credentials, and `disableReplyTo=true` tells Camel not to send a reply to the destination given in the `JMSReplyTo` header.
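The URI assembly can be sketched as follows, assuming the settings file is a standard Java properties file. The key names `activemq.queue`, `activemq.user` and `activemq.password` are hypothetical; the concatenation mirrors the `from(...)` call in the route.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

class ActiveMqUriBuilder {
    // Loads settings from properties-formatted text (e.g. the contents of a settings file).
    static Properties parse(String text) {
        Properties settings = new Properties();
        try {
            settings.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return settings;
    }

    // Builds the consumer URI the same way the route does; the key names are hypothetical.
    static String fromSettings(Properties settings) {
        return "activemq:queue://" + settings.getProperty("activemq.queue")
                + "?username=" + settings.getProperty("activemq.user")
                + "&password=" + settings.getProperty("activemq.password")
                + "&disableReplyTo=true";
    }
}
```

With `activemq.queue=slackNotify` and `admin`/`admin` credentials this yields the URI shown above. Plain concatenation breaks if a password contains characters such as `&`; Camel's `RAW(...)` syntax for endpoint parameter values is one way around that.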

Then come several processors and a splitter:

- jsonToPojoProcessor: converts the incoming JSON message into the internal Notification data model (the model contains a list of job offers and the Slack settings). The conversion uses Google's Gson library, which converts JSON to Java objects and back.

- aggregateJobProcessor: converts the Notification model into an array of job offers. It also writes the Slack channel settings into a header.

- split(body()): splits the incoming array of job offers into independent messages.

- jobToSlackMessageProcessor: converts a job offer into a message for the Slack channel.
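To make the steps above concrete, here is a plain-Java sketch of what happens to the data, without Camel or Gson. The `Notification`, `SlackSettings` and `Job` types, their fields and the message text are hypothetical stand-ins for the project's internal model; the JSON parsing done by `jsonToPojoProcessor` is left out, and in the real route each step is a Camel `Processor` while the split is done by `split(body())`.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical stand-ins for the project's internal data model.
record Job(String title, String url) {}
record SlackSettings(String channel, String webhookUrl) {}
record Notification(List<Job> jobs, SlackSettings slack) {}

class SlackPipelineSketch {
    // Like aggregateJobProcessor: take the list of jobs out of the notification.
    // (The real processor also writes the Slack settings to a header; omitted here.)
    static List<Job> aggregate(Notification notification) {
        return notification.jobs();
    }

    // Like jobToSlackMessageProcessor: format one job offer as Slack message text.
    static String toSlackMessage(Job job) {
        return "New job offer: " + job.title() + "\n" + job.url();
    }

    // Like split(body()) followed by the per-job processor: one message per job.
    static List<String> process(Notification notification) {
        return aggregate(notification).stream()
                .map(SlackPipelineSketch::toSlackMessage)
                .collect(Collectors.toList());
    }
}
```

Splitting before formatting means each offer becomes its own Slack message, so one notification with N offers produces N posts.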

Apache Camel's Slack component is also used for the Slack integration. But there is a problem: we do not know the Slack channel settings in advance; we can only learn them from the incoming message. So aggregateJobProcessor builds the part of the URI string that holds the channel settings and writes it to a header, so that the destination endpoint can then be chosen dynamically at runtime.
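One possible way to form that header value is sketched below. The header name `uri` comes from the route; the `ChannelSettings` type is hypothetical, and carrying the Slack component's `webhookUrl` option in the header is an assumption about which settings the project actually uses. The webhook URL is percent-encoded as a precaution so that its `:` and `/` characters stay inside the parameter value. In a real Camel `Processor` the value would be set with `exchange.getIn().setHeader("uri", ...)`; here a plain map stands in for the headers.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

// Hypothetical per-client Slack settings, as read from the incoming message.
record ChannelSettings(String channel, String webhookUrl) {}

class SlackUriHeader {
    static final String URI_HEADER = "uri";

    // Builds the part of the endpoint URI that follows "slack:#".
    static String uriPart(ChannelSettings settings) {
        String encodedHook = URLEncoder.encode(settings.webhookUrl(), StandardCharsets.UTF_8);
        return settings.channel() + "?webhookUrl=" + encodedHook;
    }

    // Stand-in for writing the value into the message headers.
    static Map<String, Object> headersFor(ChannelSettings settings) {
        Map<String, Object> headers = new HashMap<>();
        headers.put(URI_HEADER, uriPart(settings));
        return headers;
    }
}
```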

```java
// Resolve the Slack endpoint for each message from its "uri" header.
from("direct:slackOutput")
    .recipientList(simple("slack:#${header.uri}"));
```

What makes this route special is that it selects its endpoint dynamically: specifically, the Slack component configured for the channel the messages should be sent to. The endpoint is built from data in the message itself (its header), which is read with an expression in Camel's Simple Expression Language and evaluated by the `simple()` function. The Recipient List EIP is what makes such dynamic endpoints possible.
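The Recipient List idea (compute the destination from each message at runtime, then send to whatever endpoints the expression yields) can be illustrated without Camel. In this sketch a tiny resolver substitutes `${header.name}` placeholders, loosely imitating how `simple("slack:#${header.uri}")` is evaluated, and the result is split on commas, the default delimiter `recipientList` uses when the expression returns a string. The `RecipientListSketch` class is hypothetical and supports only this one placeholder form.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class RecipientListSketch {
    private static final Pattern HEADER_REF =
            Pattern.compile("\\$\\{header\\.([A-Za-z0-9_]+)\\}");

    // Replaces ${header.name} placeholders with header values (a tiny subset of Simple).
    static String evaluate(String template, Map<String, String> headers) {
        Matcher m = HEADER_REF.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = headers.getOrDefault(m.group(1), "");
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    // Resolves the recipients for one message: evaluate, then split on commas.
    static List<String> recipients(String template, Map<String, String> headers) {
        List<String> result = new ArrayList<>();
        for (String part : evaluate(template, headers).split(",")) {
            if (!part.isBlank()) {
                result.add(part.trim());
            }
        }
        return result;
    }
}
```

Two messages with different `uri` headers pass through the same route definition yet resolve to different Slack endpoints, which is exactly what lets one route serve every subscribed client.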

Conclusion

This integration example shows how simple and convenient Apache Camel is to use. We hope the Job Freelance Scanner platform will be useful to others. In the future we plan to keep developing the platform, for example by adding email notifications and improving the UI.

References:

→ Link to use: jobfreelancescanner.com/

→ Link to github: github.com/leadex/job-monitor

Source: https://habr.com/ru/post/317752/
