How we have survived four years of two releases a day

Hello, Habr! Today I want to wrap up a series of articles on how we organize testing (it started with articles on studying errors and on experience) by describing how Badoo still ships two high-quality server releases every day. Except on Fridays, when we release only in the morning. Nobody needs a release on Friday evening.

I joined Badoo a little over four years ago. All this time our testing processes and tools have kept evolving and improving. Why? The number of developers and testers has roughly doubled, which means more tasks are prepared for each release. The number of active and registered users has also doubled, which means every mistake we make costs more. To deliver the highest quality product to our users, we need ever more powerful quality control tools, and this race never ends. The goal of this article is not only to show a working example, but also to show that however good your quality control processes are, you can certainly make them even better. Technical details of some of the tools are available in other articles via the links; we have yet to write about others.


Badoo has several different QA flows; they differ according to the development tools and target platforms (though we use common systems for all of them: JIRA, TeamCity, Git, and so on). I will describe the testing and deployment process for our server tasks (and the website along with them). It can be divided into five major stages (although many of my colleagues would, of course, divide it differently), each of which has both a manual and an automated component. I will go through them in turn, highlighting what has changed and developed in recent years.

1. Code Review

Yes, do not be surprised: this is also a quality control stage. It exists to keep architectural and logical errors out of production code and to make sure the task does not violate our standards and rules.

In a sense, code review starts automatically even before the developer pushes the code to the shared repository. When you run git push, pre-receive hooks are launched on the receiving side, and they do a lot of different things:

- check the branch name: every branch in our repository must be tied to a JIRA ticket, and the ticket key must be part of the branch name, for example, HABR-1_make_everything_better;

- check commit messages: every commit must contain the same ticket key in its description. The key is added automatically by client-side hooks, so commit messages always look something like this: [HABR-1]: Initial commit;

- check code formatting: all code in the repository must comply with the standard adopted at Badoo, so every modified line of PHP code is checked by the phpcf utility;

- check statuses in JIRA: commits can be made to a task only when it is in certain statuses; for example, you cannot change a task's code if the task has already been merged into the release branch;

- and many, many others.

If any of these checks fails, the push is rejected and the developer has to fix the reported problems. The number of these hooks keeps growing.
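The first two checks can be sketched in a few lines. This is only an illustration, not Badoo's actual hook: the function names are hypothetical, and the sketch assumes that the ticket key sits at the very start of the branch name and that every commit message starts with the key in square brackets followed by a colon, as in the examples above.

```python
import re

# Assumed shape of a JIRA key: uppercase project code, hyphen, number (e.g. HABR-1).
TICKET_KEY = re.compile(r"[A-Z][A-Z0-9]*-\d+")


def ticket_from_branch(branch):
    """Return the ticket key a branch name starts with, or None."""
    match = TICKET_KEY.match(branch)
    return match.group(0) if match else None


def check_push(branch, commit_messages):
    """Return a list of error strings; an empty list means the push is accepted."""
    ticket = ticket_from_branch(branch)
    if ticket is None:
        return [f"branch '{branch}' does not start with a ticket key"]
    prefix = f"[{ticket}]:"
    return [
        f"commit '{message}' does not start with {prefix}"
        for message in commit_messages
        if not message.startswith(prefix)
    ]


if __name__ == "__main__":
    for error in check_push("HABR-1_make_everything_better", ["fix typo"]):
        print(error)
```

A real pre-receive hook would read the pushed refs from standard input and exit with a non-zero status when the error list is not empty; that wiring is left out here.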

Besides the pre-receive hooks, we also have a growing pool of post-receive hooks that automate our processes: they change fields and task statuses in JIRA, run tests, send signals to our deployment system... In short, they do a great job.

If the code makes it through all the automatic checks, it goes to manual code review, which is carried out in a heavily modified version of GitPHP. A fellow developer checks that the solution is optimal and fits the adopted architecture, and that the business logic is correct overall. At this stage the reviewer also checks that new or modified code is covered by unit tests (tasks without adequate test coverage do not pass review). If the task touched code owned by other departments, their representatives always join the review.

The reviewer can comment on the code directly in GitPHP. All these comments appear as comments in the corresponding JIRA task, so the QA engineer can check during testing that every comment has been addressed (developers sometimes even wage holy wars in these comments, which are a pleasure to read).

2. Testing on devel

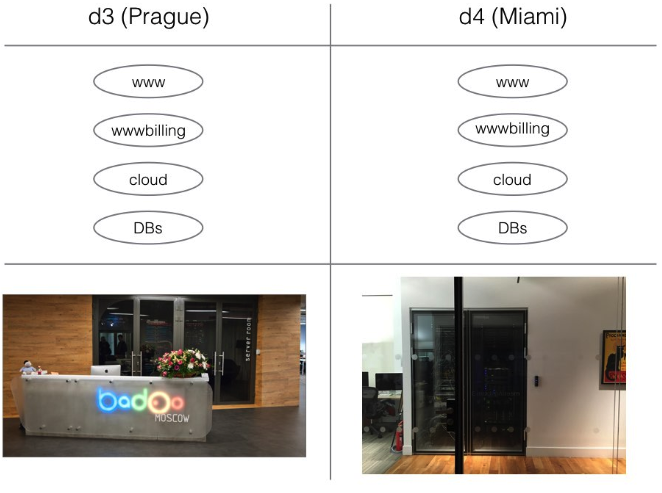

What do we mean by a devel environment? It is a full-fledged platform for development and testing that copies the production architecture as closely as possible on a smaller scale. Devel has its own databases and its own copies of daemons, services, and CLI scripts. It is even split into two completely separate sites so that their interaction can be tested: one is in the Moscow office and emulates our Prague data center, the other is in London and emulates the data center in Miami.

On devel, every developer and tester has a personal sandbox where they can deploy any version of the server code they need; it is reachable from our internal network at its own URL. Setting up a sandbox for each developer on a local machine would unfortunately be too costly: Badoo consists of too many different services and depends on specific software versions.

2.1. Automatic branch testing

It would be great if at least the integration and unit tests were run on a task as soon as the developer finished working on it, right? Let me tell you a short story of a desperate struggle.

- year 2012. 15,000 tests. Run in one thread, or clumsily chopped into several equal threads. Approximately 40 minutes pass (or absolutely infinitely in the case of high server load), there can be no automatic launch of any speech. Sad

year 2013. 25,000 tests. No one is trying to calculate how much they go in one stream. Sad But here we are developing our own multi-threaded start-up ! Now all tests pass in approximately 5 minutes: you can run them immediately after completing the task! Now the tester, taking the task in hand, can immediately assess its performance: all the results of the test runs in a convenient form are fastened to JIRA.

year 2013. 25,000 tests. No one is trying to calculate how much they go in one stream. Sad But here we are developing our own multi-threaded start-up ! Now all tests pass in approximately 5 minutes: you can run them immediately after completing the task! Now the tester, taking the task in hand, can immediately assess its performance: all the results of the test runs in a convenient form are fastened to JIRA.- year 2014. 40,000 tests. Everything was fine, the multi-thread start-up coped ... But then wonderful PHP 5.5 came along, and the runkit framework we used started working ... well, let's say, very slowly. Sad We conclude that we need to split the tests into very small sets. But if you try to run even 100 (instead of the previously used 10) processes on one machine, then it very quickly chokes. Solution to the problem? We take tests to the cloud ! As a result - 2-3 minutes for all the tests, and they just vzhzhzhzhzhzh !

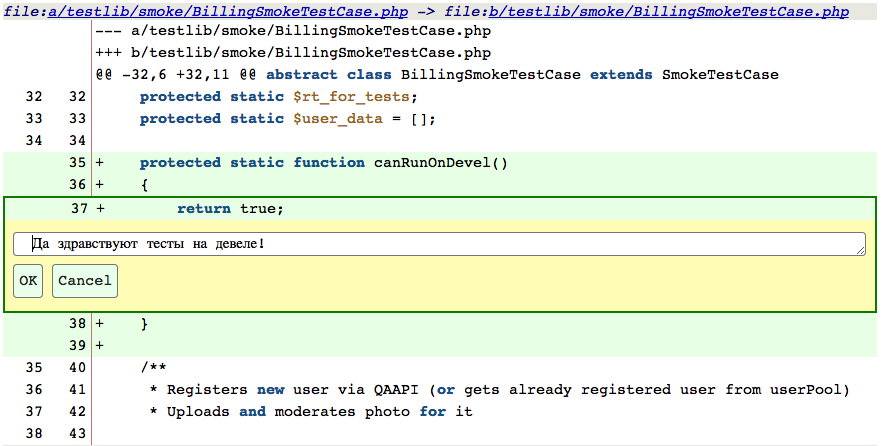

- 2015 55,000 tests. Problems are being solved more and more, tests are multiplying, and even the cloud ceases to cope with them. Sad What can you do about it? And let's try at the end of the task to run only those tests that cover the functionality affected by it, and all tests run at later stages? No sooner said than done! Twice a day we collect information on code coverage with tests, and if we can build a comprehensive test suite that will cover all the code affected in the task, then we run only them (if there are any doubts, new files or large-scale changes in the base classes - still run all). Now we get 1-2 minutes on average for passing all tests on the branch and 4-5 minutes for running all tests in general!

- 2016 year. 75,000 tests . We did not have time to start the tests (and we did not have time to start feeling sad again), as several saviors came to us at once. We switched to PHP7 and replaced the runkit ( which still does not support PHP7 ) that caused us difficulties with our own SoftMocks development. All tests now pass in 3-4 minutes, but who knows what problems we will face next year?
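The selective run introduced in 2015 can be illustrated with a small sketch. It is not the real implementation: the function name, the file and test names, and the shape of the coverage map (source file to the set of tests that execute it) are all assumptions made for the example.

```python
def select_tests(changed_files, coverage_map, all_tests, base_files=frozenset()):
    """Pick the tests to run for a branch.

    coverage_map maps a source file to the set of tests that execute it
    (in the article this data is rebuilt twice a day). Whenever there is
    doubt, the function falls back to the full suite.
    """
    selected = set()
    for path in changed_files:
        # A new file has no coverage data yet, and a change in a base class
        # can affect almost anything: in both cases run everything.
        if path in base_files or path not in coverage_map:
            return sorted(all_tests)
        selected |= coverage_map[path]
    return sorted(selected)


if __name__ == "__main__":
    coverage = {
        "User.php": {"UserTest", "ChatTest"},
        "Chat.php": {"ChatTest"},
    }
    suite = {"UserTest", "ChatTest", "PaymentTest"}
    print(select_tests(["Chat.php"], coverage, suite))
    print(select_tests(["Chat.php", "Brand_new.php"], coverage, suite))
```

The fallback is deliberately conservative: an unnecessary full run costs a few minutes, while a skipped test can let a broken task through.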

Running the tests on the right branches at the right time is the job of our indispensable assistant AIDA (Automated Interactive Deploy Assistant), developed by our release engineers. In fact, most of the automated processes in our development cannot do without its gentle (albeit rather bony) hands.

2.2. Manual testing on devel

So, the tester finally gets the task. All unit tests in it have passed, and testing can begin. What needs to be done? That's right, all the necessary cases have to be reproduced. Four years ago that was a very dreary business.

Badoo is a dating service, which means that all functionality depends heavily on the user. For practically every test (and ideally for every case) we need to register a fresh user. Since most functionality is closed to users without a photo, we also need to upload (and moderate) a few photos. Altogether that is already about 5 minutes of routine work.

Then the test data has to be prepared. Do we want to see the promo window shown to a user who has written to hundreds of other users within 24 hours? Well, we stretch our fingers and start flooding. And crying.

Oh, this case needs a user registered more than a year ago? I don't have one like that. :( Guys, does anyone have one? No? :((( Then we have to go and bother the developers to fix the values in the database...

A very sad story, isn't it? But salvation came: QaApi! What is it? It is a great system that lets you perform various actions on the server side via HTTP requests (of course, all of this is protected by authorization and can only affect users marked as test users. Do not try to find this interface at home).

A QA engineer can send a simple request to QaApi (something like qaapi.example.com/userRegister), pass the required settings (the user's gender, city of registration, age, and so on) as GET parameters, and immediately get authorization data in response! Users can be obtained this way both on devel and in production. At the same time, we do not litter production with bots: test users are not shown to real ones, and instead of registering a new user every time, we take a suitable user (already fully cleaned up after previous tests) from the pool, if one exists.

The same simple requests can change and fill in various data that cannot be changed through the interface: the registration date already mentioned, the number of new contacts or likes per day (hooray!), and anything else you like. And what if there is no QaApi method for changing the parameter you need? In most cases basic knowledge of PHP is enough to add one, and any engineer can do it! Sometimes, however, deeper changes to the code are required, and then you have to turn to the developers responsible for the component.
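To make the registration request more concrete, here is a minimal sketch of how such a URL could be assembled. The host and the userRegister method come from the example above; the https scheme, the helper name, and the parameter names (gender, city, age) are assumptions for illustration, and authorization is left out entirely.

```python
from urllib.parse import urlencode

# Host taken from the example in the text; the scheme is an assumption.
QAAPI_BASE = "https://qaapi.example.com"


def build_qaapi_url(method, **params):
    """Build a QaApi-style GET URL; parameters are sorted for a stable result."""
    query = urlencode(sorted(params.items()))
    url = f"{QAAPI_BASE}/{method}"
    return f"{url}?{query}" if query else url


if __name__ == "__main__":
    # Hypothetical parameter names; the real API may call them differently.
    print(build_qaapi_url("userRegister", gender="F", city="Moscow", age=25))
```

A test would then send this URL with any HTTP client and read the authorization data from the response.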

Naturally, all this beauty was invented not only for manual testing. Our automated tests also started using QaApi extensively: they became faster and more atomic, and they test only what they actually need to test (of course, there are also separate tests for the operations that are shortcut in this way).

And when we decided that this was not enough, we implemented QaApi scripts, which let you write simple scripts in Lua (do not ask) that call various methods and prepare even complex data for complex test cases in one click.

2.3. Improved environment and tools

Besides optimizing and automating testing on devel, it is of course very important to make that testing as complete and as close to production as possible.

Take CLI scripts, for example. Previously they were launched by cron. So if a tester wanted to test a new version of a script, he had to do the following:

- comment out the script launch line in crontab;

- kill all active instances of this script;

- run your version of the script and test it;

- kill all your instances of this script;

- uncomment the line in crontab.

Skipping any of these steps causes problems either for the tester (the version of the script that is running may not be the one he expects) or for everyone around him (the script does not run and nobody knows why).

Now we no longer have such problems: all scripts run inside a common script framework, and on its devel version you can tell a script: “Run from my working directory now, not from the shared one.” Any error or timeout returns the script to the shared flow, and the system makes sure that all workers run only the correct version.

Another problem is the large number of A/B tests. We have literally dozens of them running at any given time. Previously you had to either create a dozen users to hit all the test variants or hack the code to force your users into the variants you were interested in. Now we use a common system, UserSplit, which lets you add QA settings to each specific test, such as “This user always gets this variant”, so you can always be sure you are checking exactly what you need.
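The idea of a per-user QA override can be shown with a short sketch. This is not how UserSplit is implemented: the hash-based bucketing, the function name, and the shape of the override table are assumptions chosen only to illustrate a forced variant taking priority over the normal split.

```python
import hashlib


def assign_variant(test_name, user_id, variants, qa_overrides=None):
    """Return the A/B variant for a user.

    qa_overrides maps (test_name, user_id) to a forced variant, which models
    a setting like "this user always gets this variant". Everyone else is
    split deterministically by hashing the test name and the user id.
    """
    forced = (qa_overrides or {}).get((test_name, user_id))
    if forced is not None:
        if forced not in variants:
            raise ValueError(f"unknown variant {forced!r} for test {test_name!r}")
        return forced
    digest = hashlib.sha256(f"{test_name}:{user_id}".encode()).digest()
    return variants[int.from_bytes(digest[:8], "big") % len(variants)]


if __name__ == "__main__":
    overrides = {("new_promo", 42): "B"}  # hypothetical test name and user id
    print(assign_variant("new_promo", 42, ["A", "B"], overrides))
```

With an override in place the tester no longer needs a dozen users per test: one test user can be moved from variant to variant by changing a setting.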

3. Shot Testing

What do we mean by a “shot”? Essentially, it is the task branch (plus the current master) in the production environment. Technically, it is a directory with the required version of the code on one of our machines in the staging cluster, plus a line in the NGINX config that routes HTTP requests for hosts like habr-1.shot to that directory. A shot can therefore be specified as the server for any client, web or mobile. Any engineer can create a shot for a specific task using a link in JIRA.

A shot is intended primarily for checking that a task works in a real environment (instead of the very similar but still “synthetic” devel), but it also serves other purposes, such as generating and checking translations (so that translators can work with new lexemes before the task reaches staging and do not delay the release of other tasks). A shot can also be marked as external, and then it is available not only from our work network (or via VPN) but also from the Internet. This lets us give our partners the opportunity to test a task, for example when we connect a new payment method.

The main change in recent years is that tests are launched in a shot automatically right after it is generated (a shot is a much more stable environment than devel, so test results here are much more relevant and give false results less often). AIDA runs two types of tests here: Selenium tests and cURL tests. The results of the runs are attached to the task and sent to the tester personally responsible for it.

What if the modified or added functionality is not covered by Selenium tests? First of all, do not panic. Our tests cover only well-established or critical functionality (our project develops very quickly, and covering every experimental feature with tests would slow it down), and tasks for writing new tests are created after the feature has been released to production.

And what if a test exists and it failed? There are two possible reasons. In the first case, there is an error in the task: the programmer got it wrong and has to fix the task, and the tests did their job. In the second case, the functionality works properly, but its logic (or even just the layout) has changed and the test no longer matches the current state of the system. The test has to be repaired.

Previously, this was done by a separate group of QA engineers responsible for automation. They did it well and quickly, but each release still broke a certain number of tests (sometimes a huge number), and the guys spent far more time maintaining the system than developing it. So we decided to change the approach: we held a series of seminars and lectures so that every QA engineer had at least basic skills in writing Selenium tests (and at the same time reworked our libraries to make writing tests easier and more convenient). Now, if a test starts failing in a tester's task, the tester repairs it himself in the task branch. If the case is very complex and requires major changes, he still hands the job over to the experts.

4. Testing on staging

So, the task has passed its three

Here ALL our tests are run. Whenever the staging branch changes, the smoke tests and the full sets of our system, integration, and unit tests are launched. Every failure is reported to the people responsible for the release.

Then the tester checks the task on staging. The main thing here is to check that the task is compatible with the others that have gone to staging (each build contains about twenty of them).

Oh no, something broke after all! We need to figure out as quickly as possible which ticket is to blame! Our simple but powerful system, Selenium manager, helps here. It lets you view the results of the tests run in the shot of every task on staging and launch the tests that were not run there (by default, less than the full suite is run when a shot is created). This often makes it possible to find the guilty ticket... but not always. Then you have to dig through the logs and look for the guilty code yourself: not everything can be optimized yet, after all.

So, we have found the problem ticket. If things are really bad and fixing the problem will take a long time, we roll the ticket back from staging, rebuild the build, and calmly carry on working while the unlucky developer fixes the problem. But what if changing a single character in a single line is enough to fix the error?

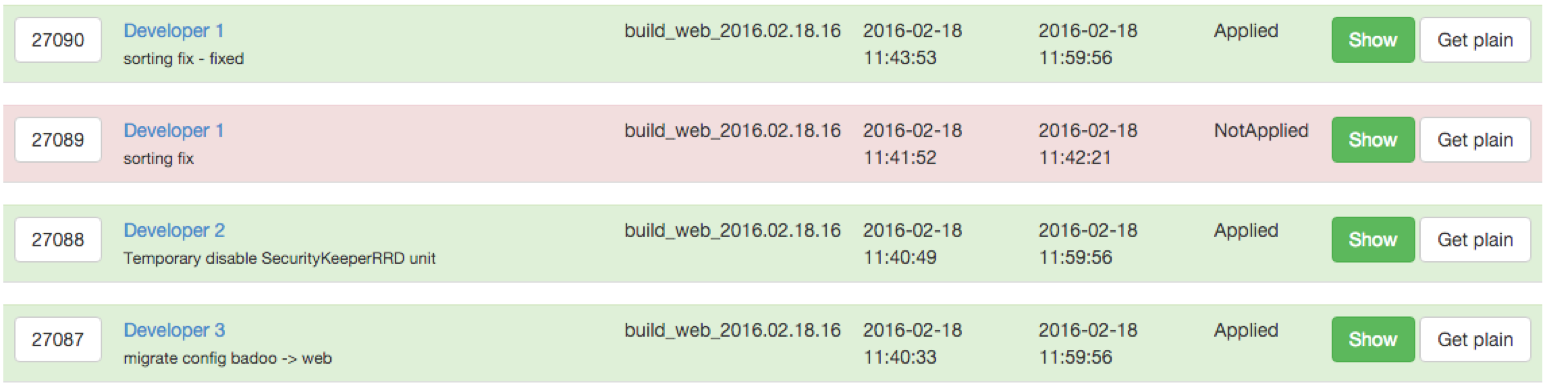

Previously, developers fixed such problems with direct commits to the build branch. That is fast and convenient, but it is a real nightmare from the QA point of view. Such a “plantain leaf on the wound” is rather hard to check, and it is even harder to find all such fixes and track down the culprit if one of them causes a new error somewhere else. We could not go on living like that, so our release engineers wrote their own patch interface.

It lets you attach a Git patch to the current build together with a description of the problem being solved and the author's name, with the option of a preliminary review by another developer or testing by QA. Each patch is tied to a particular task, and when a task is rolled back from the build, all patches associated with it are rolled back automatically as well.
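The link between patches and tasks can be modeled in a few lines. The sketch below is a toy data model, not the real patch interface: the class and field names are hypothetical, and it shows only the rule that a patch belongs to a task in the build and leaves the build together with that task.

```python
from dataclasses import dataclass, field


@dataclass
class Patch:
    task: str          # ticket key the patch is tied to
    author: str
    description: str
    reviewed: bool = False


@dataclass
class Build:
    tasks: set = field(default_factory=set)
    patches: list = field(default_factory=list)

    def add_patch(self, patch):
        """Attach a patch; it must belong to a task that is in the build."""
        if patch.task not in self.tasks:
            raise ValueError(f"task {patch.task} is not in this build")
        self.patches.append(patch)

    def rollback_task(self, task):
        """Remove a task from the build together with all of its patches."""
        self.tasks.discard(task)
        removed = [p for p in self.patches if p.task == task]
        self.patches = [p for p in self.patches if p.task != task]
        return removed


if __name__ == "__main__":
    build = Build(tasks={"HABR-1", "HABR-2"})
    build.add_patch(Patch("HABR-1", "dev_a", "fix a one-character typo"))
    build.add_patch(Patch("HABR-2", "dev_b", "restore a missing translation key"))
    print([p.task for p in build.rollback_task("HABR-1")])
```

Because every fix is a recorded object tied to a ticket, QA can list all patches in a build instead of hunting for direct commits.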

It seems everyone has checked everything. It seems we can go to production. But can we? Previously, the engineer responsible for the release had to find out on his own whether everything was in order: look through the test run results, find out whether all the failed Selenium tests had been repaired, all the tasks checked, all the patches tested and applied... A huge amount of work and a very big responsibility. So we put together a simple interface in which everyone responsible for a component (or a block of tests) can tick “I'm fine”, and as soon as the whole board turns green, we can go.

So, we made it! Time to celebrate, pour the champagne, and dance on the table... Actually, no. Most likely there are still a few working hours ahead. And quite possibly another release. But even that is not the main thing: quality control of a task does not end when it is shipped to users!
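The “all green” rule is simple enough to write down. The sketch assumes that sign-offs are stored as a mapping from a component (or test block) name to a boolean; the function names and the component names in the example are made up.

```python
def release_ready(signoffs):
    """True only when there is at least one sign-off and every one is green."""
    return bool(signoffs) and all(signoffs.values())


def blocking(signoffs):
    """Names of the components that have not signed off yet."""
    return sorted(name for name, ok in signoffs.items() if not ok)


if __name__ == "__main__":
    board = {"unit tests": True, "selenium": False, "patches": True}
    print(release_ready(board), blocking(board))
```

The release engineer then looks at one board instead of polling every team, and the list of blocking components shows exactly who to talk to.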

5. Production Verification

It is imperative to check that the task behaves exactly as expected under the pressure of the production environment and hundreds of thousands (or millions!) of users. We initially roll out large and complex projects only to part of the audience (for example, only within one country) in order to evaluate the performance and stress resistance of the system in real conditions. All participants in the process, including developers, testers, and managers, evaluate the results of such experiments from different angles. And even after such an “experiment” has been declared a success and the new feature has been rolled out to all users, and even though we have a full-fledged monitoring department, it is always useful to look carefully at the state of the system after the release: live users can still generate more cases than the brain of the most sophisticated tester can come up with.

What am I getting at?

There is always room to grow and develop. Four years ago I came to Badoo and was delighted with the way everything was set up here. I took an active part in developing the processes, and a year later I was speaking at conferences about how everything had become EVEN BETTER. Three more years have passed, and I cannot imagine how we lived without QaApi, without such cool autotests, and without other beautiful and convenient things. Sit down somewhere in your free time and dream a little: what would you like to change or improve in your own processes? Unleash your imagination, invent the most incredible features, and share them with your friends. It may well turn out that everyone around badly needs some of them, and that the implementation is far less fantastic than you might think. And then all of you (and eventually your users) will become a little happier.

Kudinov Ilya, Sr. QA Engineer

Source: https://habr.com/ru/post/317700/
