Performance comparison of GPU calculations in Python and C

Python has a number of attractive advantages, which include ease of implementation of software solutions, clarity and conciseness of the code, the presence of a large number of libraries and numerous active community. At the same time, the well-known slowness of the python often limits its applicability to "heavy" calculations. For a number of tasks, it is possible to achieve a significant acceleration of calculations by using the CUDA technology for parallel computing on the GPU. The purpose of this small study is to analyze the possibilities of effectively using python for calculations on GPU and comparing the performance of various python solutions with implementation on C.

Introduction

By the nature of my work, I often do numerical modeling tasks. In many cases, we use CUDA technology to speed up calculations by using the parallel computing capabilities of the GPU, while programs are written in C. At the same time, in some cases, we would like to be able to implement calculations on python, because it is convenient, fast , flexible, concise,

Test task

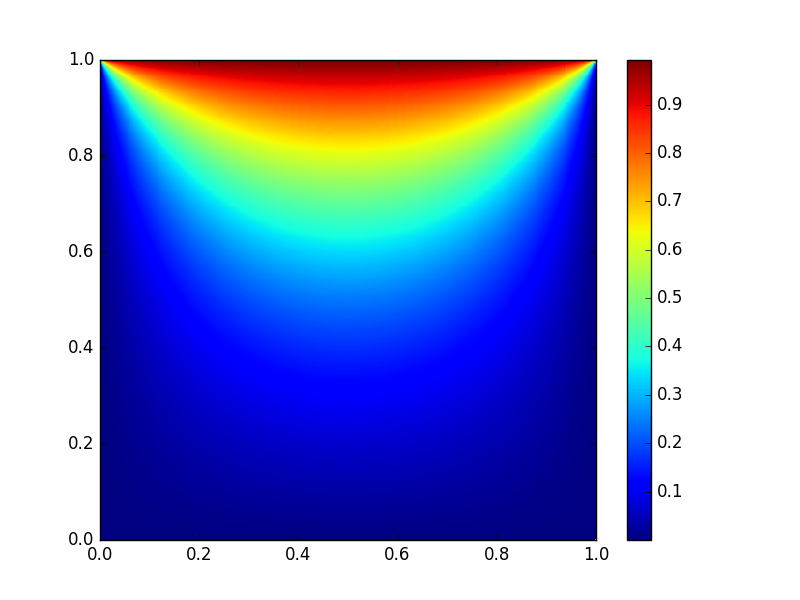

The choice of the test problem and the conditions of testing was determined by the nature of the real problems, for which further use of python is planned. In this case, the simplest problem was chosen, namely the problem of solving the two-dimensional heat conduction equation using an explicit finite-difference scheme . The task was considered on a square domain with given temperature values at the boundaries. The number of nodes of the computational grid in x and y is the same and is equal to n. The figure at the beginning of the article shows the steady-state solution to the test problem.

Algorithm and testing conditions

The algorithm for solving the problem can be represented by the following pseudocode:

')

u0 u, u0 u for(i = 0; i < nstp/2; i++) u , u0 u0 , u (u0) In all tests presented below, the number of square grid nodes in each direction (n) ranged from 512 to 4096, and nstp = 5000.

Software and hardware

Testing was conducted on a personal computer:

Intel® Core (TM) 2 Quad CPU Q9650 @ 3.00GHz, 8 Gb RAM

GPU: Nvidia GTX 580

Operating system: Ubuntu 16.04 LTS with CUDA 7.5 installed

C implementation

All further python implementations were compared with the results obtained using the C program described in this section.

C code

#include <stdio.h> #include <time.h> #include <cuda_runtime.h> #define BLOCK_SIZE 16 int N = 512; //1024; //2048; double L = 1.0; double h = L/N; double h2 = h*h; double tau = 0.1*h2; double th2 = tau/h2; int nstp = 5000; __constant__ int dN; __constant__ double dth2; __global__ void NextStpGPU(double *u0, double *u) { int i = blockDim.x * blockIdx.x + threadIdx.x; int j = blockDim.y * blockIdx.y + threadIdx.y; double uim1, uip1, ujm1, ujp1, u00, d2x, d2y; if (i > 0) uim1 = u0[(i - 1) + dN*j]; else uim1 = 0.0; if (i < dN - 1) uip1 = u0[(i + 1) + dN*j]; else uip1 = 0.0; if (j > 0) ujm1 = u0[i + dN*(j - 1)]; else ujm1 = 0.0; if (j < dN - 1) ujp1 = u0[i + dN*(j + 1)]; else ujp1 = 1.0; u00 = u0[i + dN*j]; d2x = (uim1 - 2.0*u00 + uip1); d2y = (ujm1 - 2.0*u00 + ujp1); u[i + dN*j] = u00 + dth2*(d2x + d2y); } int main(void) { size_t size = N * N * sizeof(double); double pStart, pEnd, pD; int i; double *h_u0 = (double *)malloc(size); double *h_u1 = (double *)malloc(size); for (i = 0; i < N*N; ++i) { h_u0[i] = 0.0; h_u1[i] = 0.0; } pStart = (double) clock(); double *d_u0 = NULL; cudaMalloc((void **)&d_u0, size); double *d_u1 = NULL; cudaMalloc((void **)&d_u1, size); cudaMemcpy(d_u0, h_u0, size, cudaMemcpyHostToDevice); cudaMemcpy(d_u1, h_u1, size, cudaMemcpyHostToDevice); cudaMemcpyToSymbol(dN, &N, sizeof(int)); cudaMemcpyToSymbol(dth2, &th2, sizeof(double)); dim3 dimBlock(BLOCK_SIZE, BLOCK_SIZE, 1); dim3 dimGrid(N/BLOCK_SIZE, N/BLOCK_SIZE, 1); for(i = 0; i < int(nstp/2); i++) { NextStpGPU<<<dimGrid,dimBlock>>>(d_u0, d_u1); NextStpGPU<<<dimGrid,dimBlock>>>(d_u1, d_u0); } cudaMemcpy(h_u0, d_u0, size, cudaMemcpyDeviceToHost); cudaFree(d_u0); cudaFree(d_u1); pEnd = (double) clock(); pD = (pEnd-pStart)/CLOCKS_PER_SEC; printf("Calculation time on GPU = %f sec\n", pD); } free(h_u0); free(h_u1); return 0; } Calculations show that for N = 512, the execution time of the C program parallelized on the GPU is 0.27 seconds versus 33.06 seconds for the sequential implementation of the algorithm on the CPU. That is, the CPU / GPU acceleration is about 120 times. With increasing N, the magnitude of the acceleration does not decrease.

Python with Numba

The Numba library provides the ability to jit (just-in-time) compile python code into byte-code comparable in performance to C or Fortran code. Numba supports compiling and running python code not only on the CPU, but also on the GPU, while the style and appearance of the program using the Numba library remains purely Python.

This is what our program looks like in this case.

from numba import cuda import numpy as np import matplotlib.pyplot as plt from time import time n = 512 blockdim = 16, 16 griddim = int(n/blockdim[0]), int(n/blockdim[1]) L = 1. h = L/n dt = 0.1*h**2 nstp = 5000 @cuda.jit("void(float64[:,:], float64[:,:])") def nextstp_gpu(u0, u): i,j = cuda.grid(2) if i > 0: uim1 = u0[i-1,j] else: uim1 = 0. if i < n-1: uip1 = u0[i+1,j] else: uip1 = 0. if j > 0: ujm1 = u0[i,j-1] else: ujm1 = 0. if j < n-1: ujp1 = u0[i,j+1] else: ujp1 = 1. d2x = (uim1 - 2.*u0[i,j] + uip1) d2y = (ujm1 - 2.*u0[i,j] + ujp1) u[i,j] = u0[i,j] + (dt/h**2)*(d2x + d2y) u0 = np.full((n,n), 0., dtype = np.float64) u = np.full((n,n), 0., dtype = np.float64) st = time() d_u0 = cuda.to_device(u0) d_u = cuda.to_device(u) for i in xrange(0, int(nstp/2)): nextstp_gpu[griddim,blockdim](d_u0, d_u) nextstp_gpu[griddim,blockdim](d_u, d_u0) cuda.synchronize() u0 = d_u0.copy_to_host() print 'time on GPU = ', time() - st Note here are some nice features. First, this implementation is much shorter and clearer. Here we used two-dimensional arrays, which makes the code much more readable. Secondly, if in the C-implementation we were required to pass all constants (for example, N) by executing functions like

cudaMemcpyToSymbol(dN, &N, sizeof(int)); here we simply use global variables as in the usual python functions. Finally, the implementation does not require any knowledge of the C language and the GPU architecture.This code is easy to rewrite and for the case of using one-dimensional arrays of size n * n, as will be shown later, this significantly affects the execution speed.

Here is the code

from numba import cuda import numpy as np import matplotlib.pyplot as plt from time import time n = 512 blockdim = 16, 16 griddim = int(n/blockdim[0]), int(n/blockdim[1]) L = 1. h = L/n dt = 0.1*h**2 nstp = 5000 @cuda.jit("void(float64[:], float64[:])") def nextstp_gpu(u0, u): i,j = cuda.grid(2) u00 = u0[i + n*j] if i > 0: uim1 = u0[i-1 + n*j] else: uim1 = 0. if i < n-1: uip1 = u0[i+1 + n*j] else: uip1 = 0. if j > 0: ujm1 = u0[i + n*(j-1)] else: ujm1 = 0. if j < n-1: ujp1 = u0[i + n*(j+1)] else: ujp1 = 1. d2x = (uim1 - 2.*u00 + uip1) d2y = (ujm1 - 2.*u00 + ujp1) u[i + n*j] = u00 + (dt/h/h)*(d2x + d2y) u0 = np.full(n*n, 0., dtype = np.float64) u = np.full(n*n, 0., dtype = np.float64) st = time() d_u0 = cuda.to_device(u0) d_u = cuda.to_device(u) for i in xrange(0, int(nstp/2)): nextstp_gpu[griddim,blockdim](d_u0, d_u) nextstp_gpu[griddim,blockdim](d_u, d_u0) cuda.synchronize() u0 = d_u0.copy_to_host() print 'time on GPU = ', time() - st PyCUDA

The second python library tested was the PyCUDA library. Unlike Numba, here from the developer you will need to write kernel code in C, therefore you cannot do without knowledge of this language. On the other hand, apart from the actual C kernel, you do not need to write anything.

PyCUDA code is obtained as follows.

import pycuda.driver as cuda import pycuda.autoinit from pycuda.compiler import SourceModule import numpy as np import matplotlib.pyplot as plt from time import time n = 512 blockdim = 16, 16 griddim = int(n/blockdim[0]), int(n/blockdim[1]) L = 1. h = L/n dt = 0.1*h**2 nstp = 5000 mod = SourceModule(""" __global__ void NextStpGPU(int* dN, double* dth2, double *u0, double *u) { int i = blockDim.x * blockIdx.x + threadIdx.x; int j = blockDim.y * blockIdx.y + threadIdx.y; double uim1, uip1, ujm1, ujp1, u00, d2x, d2y; uim1 = exp(-10.0); if (i > 0) uim1 = u0[(i - 1) + dN[0]*j]; else uim1 = 0.0; if (i < dN[0] - 1) uip1 = u0[(i + 1) + dN[0]*j]; else uip1 = 0.0; if (j > 0) ujm1 = u0[i + dN[0]*(j - 1)]; else ujm1 = 0.0; if (j < dN[0] - 1) ujp1 = u0[i + dN[0]*(j + 1)]; else ujp1 = 1.0; u00 = u0[i + dN[0]*j]; d2x = (uim1 - 2.0*u00 + uip1); d2y = (ujm1 - 2.0*u00 + ujp1); u[i + dN[0]*j] = u00 + dth2[0]*(d2x + d2y); } """) u0 = np.full(n*n, 0., dtype = np.float64) u = np.full(n*n, 0., dtype = np.float64) nn = np.full(1, n, dtype = np.int64) th2 = np.full(1, dt/h/h, dtype = np.float64) st = time() u0_gpu = cuda.to_device(u0) u_gpu = cuda.to_device(u) n_gpu = cuda.to_device(nn) th2_gpu = cuda.to_device(th2) func = mod.get_function("NextStpGPU") for i in xrange(0, int(nstp/2)): func(n_gpu, th2_gpu, u0_gpu, u_gpu, block=(blockdim[0],blockdim[1],1),grid=(griddim[0],griddim[1],1)) func(n_gpu, th2_gpu, u_gpu, u0_gpu, block=(blockdim[0],blockdim[1],1),grid=(griddim[0],griddim[1],1)) u0 = cuda.from_device_like(u0_gpu, u0) print 'time on GPU = ', time() - st This all looks like a pure python with the exception of a local C-code insert.

Performance comparison

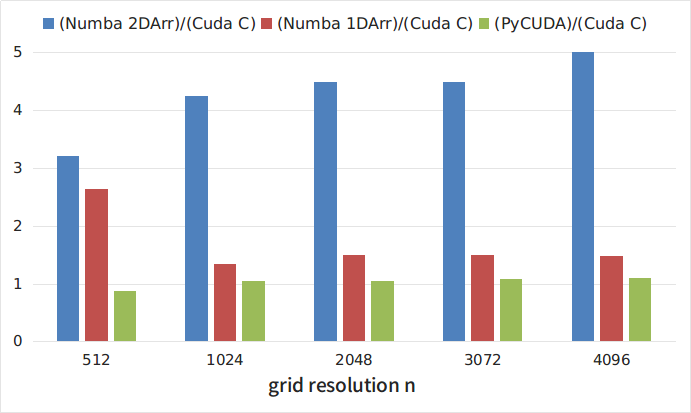

Figure 1 and Table 1 show the dependencies of the test program runtime (in seconds) on the mesh size n, obtained when running the C code (CUDA C curve) and python implementations with the Numba library and two-dimensional arrays (Numba 2DArr), with the library Numba and one-dimensional arrays (Numba 1DArr), with the PyCUDA library (PyCUDA curve).

Picture 1

| n | Cuda c | Numba 2DArr | Numba 1DArr | PyCUDA |

|---|---|---|---|---|

| 512 | 0.25 | 0.8 | 0.66 | 0.216 |

| 1024 | 0.77 | 3.26 | 1.03 | 0.808 |

| 2048 | 2.73 | 12.23 | 4.07 | 2.87 |

| 3073 | 6.1 | 27.3 | 9.12 | 6.6 |

| 4096 | 11.05 | 55.88 | 16.27 | 12.02 |

Figure 2 shows the relationship between the execution times of various python implementations and the execution time of the C code. As can be seen from the figures, the slowest of the considered is the implementation using the Numba library using two-dimensional arrays. At the same time, this approach is the most obvious and simple. Interestingly, the sweep of two-dimensional arrays in one-dimensional leads to approximately threefold acceleration of the code. The quickest solution was to use the PyCUDA library. At the same time, as noted above, using this library is somewhat more laborious, since it requires writing a kernel in C. However, the costs pay off and the execution speed of such a python program is only 5-8% less than a program written entirely in C.

Figure 2

findings

Miracles do not happen and the most simple and visual solutions are at the same time the slowest. At the same time, the available libraries allow you to achieve the speed of execution of python-programs, comparable to the speed of execution of pure C-code. Existing libraries give the developer a choice between more and less high-level solutions. This choice, however, there is always a trade-off between the speed of development and the speed of program execution.

Links

» Numba Library Documentation

» Examples of using Numba

» PyCUDA Library Documentation

» PyCUDA usage examples

Source: https://habr.com/ru/post/317328/

All Articles