An inside look at OpenBMC for OpenPOWER systems

In a previous article, Maxim covered the hardware side of the Baseboard Management Controller (BMC). I want to continue the story and talk about our approach to the BMC and our participation in the OpenBMC project.

For completeness of the story, you will have to start a little from a distance and tell you about the purpose of service processors and the role of BMC in the server’s work, affect the IPMI protocol and the software part. After this, I briefly describe how BMC is involved in booting systems on POWER8. I will finish the review of the OpenBMC project and our attitude to the question. Experienced in the subject of service processors, readers can immediately rewind to the lower sections.

The service processor is a separate specialized controller embedded in the server. Its chip can be soldered to the motherboard, located on a separate card or, for example, placed in a blade chassis to manage the resources of the entire system as a whole (and then it can be called SMC - System Management Controller). BMC is a special case of a service processor to control a particular host, and hereinafter we will only talk about them and use the term “service processor” only in the meaning of “BMC”. In this case, saying "BMC", in general, we mean both the actual chip and the control firmware. In some cases, we will separately indicate that we are talking about the hardware or software part.

The BMC is powered separately from the main system, turns on automatically when the standby power is supplied to the server, and operates until the power is turned off. Almost all service processors can manage host power, provide access to the console of the main operating system via Serial Over LAN (SoL), read system sensors (fan speed, voltage on power supplies and VRM, components temperature), monitor the health of components, store hardware error log (SEL). Many offer remote KVM, virtual media (DVD, ISO), support various out-of-band connection protocols (IPMI / RMCP, SSH, RedFish, RESTful, SMASH) and more.

')

Now remote control has spread everywhere. It facilitates the management of a large fleet of servers, improves availability due to reduced downtime, and improves the operational efficiency of data centers. As a result, the availability of extensive remote management capabilities is taken into account by customers when choosing a supplier of hardware platform.

BMC users are mainly system administrators for remote control, disaster recovery, log collection, OS installation, etc. Data from the service processor uses technical support. For her, BMC is often the only source of information when troubleshooting and identifying faulty components for replacement.

In modern infrastructure, BMC is not just a pleasant additional option of remote server management (although not going to the server room, where it is cold, noisy, no place to sit, and does not catch a mobile well, this is nice). In many situations, this is a critical component of the infrastructure. When the operating system or application does not respond or is in an incomprehensible state, the service processor is the only source of information and a way to quickly recover.

To connect to the service processor using a dedicated network port (out-of-band), or BMC shares a network port with the main system (sideband). That is one physical Ethernet connector, but two independent MACs and two IP addresses. For initial setup often use console RS-232 connection.

Historically, the software part of BMC was developed along with the server hardware platform and the same developers. As a result, for each platform, the software of the service processor was unique. The same vendor could have several versions of BMC firmware for different product lines. Despite the proliferation of open source, the BMC firmware has long remained extremely proprietary.

Typically, the service processor is based on specialized on-chip systems (System-on-Chip, SoC), and the de facto standard for describing hardware architecture requirements is the IPMI (Intelligent Platform Management Interface Interface) specification. This is a fairly old standard. Back in 1998, a group of companies developed the first IPMI specification for standardizing server management.

IPMI provides a generic messaging interface for accessing all of the managed components in a system and describes a large set of interfaces for different types of operations — for example, temperature monitoring, voltage, fan speed, or access to the OS console. It also provides methods for managing the power of the entire complex, obtaining SEL (System Event Log) hardware logs, reading sensor data (SDR), and implementing hardware watchdogs. IPMI provides a replacement or abstraction for individual sensor access methods, such as the System Management Bus (SMBus) or Inter Integrated Circuit (I2C). Most BMCs use a proprietary IPMI stack from a small number of vendors.

The protocol has accumulated a lot of complaints, including in terms of security when accessing the network (IPMI over LAN). Periodically, the network is shaken by stories like this . The thing is - by gaining access to the service processor, we gain complete control over the server. Nothing prevents to reboot into recovery mode and change the password for the 'root'-account. The only reliable means of such a vulnerability is the rule that IPMI traffic (UDP port 623) should not go beyond a dedicated network or VLAN. There must be strict control over the activity in the control network.

In addition to security issues, the hardware landscape of data centers has changed a lot over the years. Spread virtualization, disaggregation of components, clouds. It's hard to add something new to IPMI. The more servers you need to administer, the higher the value of automation procedures. API specifications appear to replace IPMI over LAN. Many pin their hopes on RedFish.

This API uses modern JSON and HTTPS protocols and a RESTful interface for accessing 'out-of-band' data. The goal of developing a new API is to offer the industry a single standard that is suitable for heterogeneous data centers. And for single complex enterprise servers and for cloud data centers from multiple commodity servers. And this API must meet current security requirements.

At the same time, at the hardware level, the standard is IPMI support, which is involved in the entire working cycle of the server, starting from power-up, loading of the operating system, and ending with disaster recovery (hang, panic, etc.).

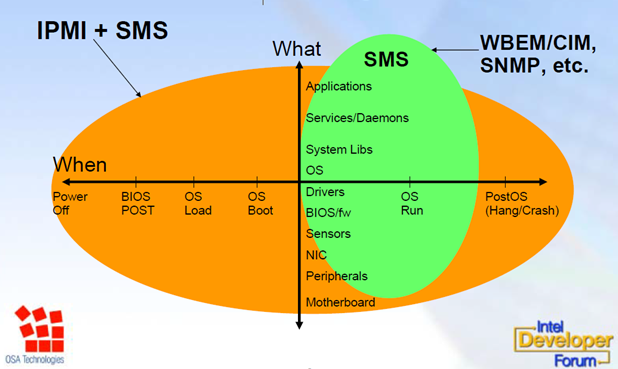

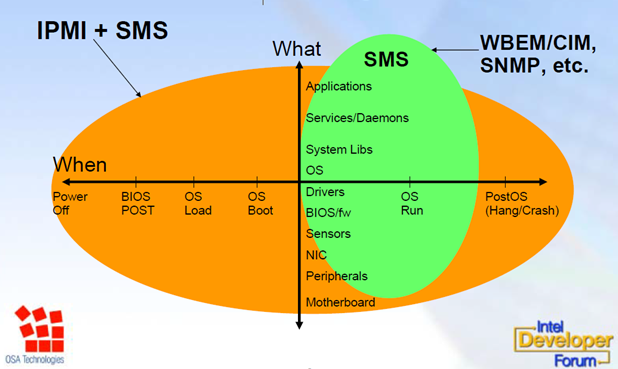

The role of BMC in the life of the server. In this picture, SMS means "System Management Software". The picture is taken from here .

At the heart of the IPMI hardware is the BMC chip. It is involved in the operation of the server, starting from the moment the power is applied, and participates in the server boot process on OpenPOWER. That is, the BMC is required to turn on the system. At the same time, the reboot / crash of the BMC does not affect the operating system already running.

BMC and POWER8 processor are connected by LPC bus (Low Pin Count). This bus is designed to connect the processor with peripheral, relatively slow devices. It operates at a frequency of up to 33 MHz. Through the LPC, the central processor (that is, the Hostboot / OPAL microcode) communicates with the BMI IPMI stack over the BT (p. 104) interface. For the same bus, the POWER8 receives the boot code from the PNOR (Processor NOR chip) via the LPC → SPI (Serial Peripheral Interface) connection.

The first boot phase begins as soon as the power supplies are plugged in and ends at the stage where the BMC is fully turned on and ready to start downloading the entire host. Looking ahead, I note that from here and further I describe the work of BMC software on OpenPOWER systems in general, but specifically in our server, OpenBMC performs these functions. When power is applied, the BMC starts executing the code from the SPI flash, loads the u-boot, and then the Linux kernel. At this stage, the BMC is running IPMI, the LPC bus is prepared for host access to PNOR memory (mounted via mtdblock). The POWER8 chip is off at this stage. In this state, you can connect to the BMC network interface and do something about it.

When the system is in standby mode and the power button is pressed, the BMC initiates the download and launches the “small” SBE (Self Boot Engine) controller inside the POWER8 chip on the master processor to load the Hostcode microcode from the PNOR flash into the L3 cache master processor.

Hostboot microcode is responsible for initializing the processor buses, SDRAM memory, the other POWER8 processors, OPAL (Open Power Abstraction Layer) and another microcontroller built into POWER8, called OCC (On Chip Controller).

We will tell about this controller in more detail, since it has a special meaning for the BMC. When Hostboot finishes, the next component of the Skiboot microcode is started from the PNOR flash. This level synchronizes the processors, initializes the PCIe bus, and also interacts with the BMC via IPMI (for example, it updates the sensor value of “FW Boot progress”). Skiboot is also responsible for running the next boot level of Petitboot, which chooses where the main operating system will be loaded from and starts it via kexec.

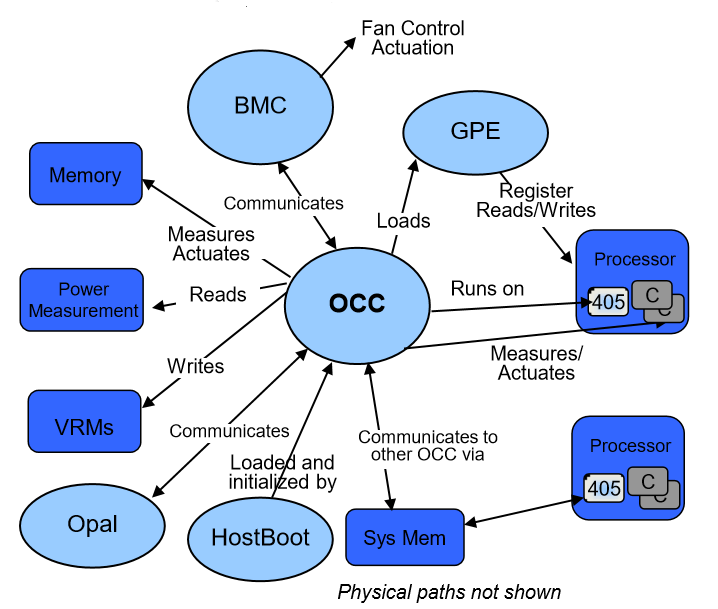

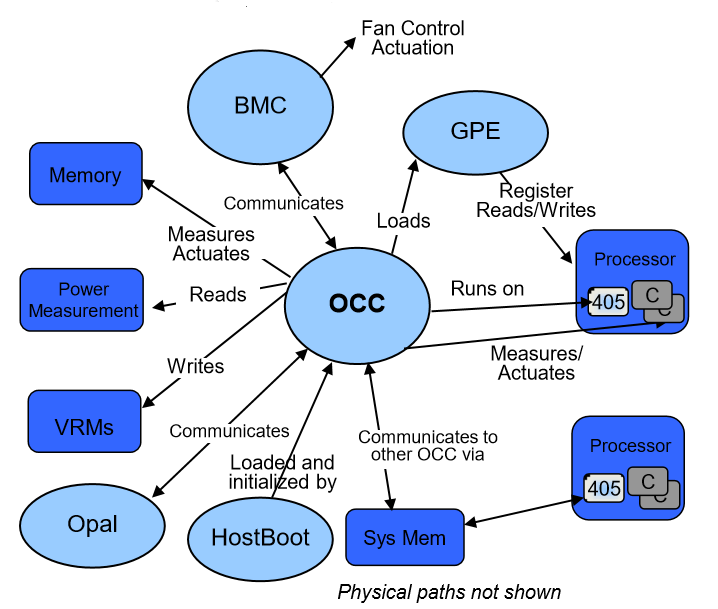

But let's take a step back and return to the OCC. The OCC chip is a PPC 405 core built into the IBM POWER8 processor along with the main POWER8 cores (one OSS per chip). It has its own 512 KB of SRAM, access to main memory. It is a real-time system responsible for temperature control (monitoring of memory and processor core temperatures), memory performance management, monitoring voltage and processor frequency. The OCC provides access to all of this information for the BMC over the I2C bus.

What exactly does the OCC do?

Thus, the OCC is a provider of information for the BMC, which is connected via the I2C bus. The source code for most of the microcode for POWER8 (and in particular for OCC) was discovered by IBM .

In addition to interacting with the OCC and the CPU via the LPC bus, the BMC has other interfaces. For example, GPIO on a BMC chip is used to control power supplies and LEDs, and I2C can be used to read sensors.

The interconnection of all the above is not so complicated.

At the moment, most of the OpenPOWER microcode is open. At the same time, the software part of the service processor and the IPMI stack, until recently, remained proprietary. The first open source project for the service processor was OpenBMC. The community met him with enthusiasm and began to actively develop. About OpenBMC and talk further.

Finally, we come to the story of the emergence of OpenBMC and how we use it.

OpenBMC appeared in Facebook when developing the Wedge switch as part of the OCP community in 2015. Initially, the BMC software was developed by an iron supplier. In the first months of work, many new requirements for BMC emerged, coordination of which with developers was difficult and caused delays. Under the influence of this, on one of the hackathons, four Facebook engineers implemented some of the basic BMC functions in 24 hours. It was very far from productive, but it became clear that the task of implementing the software part of the BMC can be solved separately from the hardware.

A few months later, OpenBMC was officially released along with the Wedge switch, and some time later the OpenBMC source code was opened as part of the OCP partnership.

Next, Facebook adapted OpenBMC for NVMe Flash shelf Lightning, and then for the chassis of Yosemite microservers. In the last change, Facebook abandoned RMCP / RMCP + (IPMI access over LAN), but the REST API via HTTP (S) and console access via SSH appeared. Thus, Facebook has turned out a single API for managing various types of equipment and greater flexibility in implementing new features. With a proprietary approach to BMC, this would be impossible.

In the concept of Facebook, BMC is a regular server, but it works on SoC with limited resources (slow processor, low memory, small flash). With this in mind, OpenBMC was conceived as a specialized Linux distribution, all packages of which are built from source using the Yocto project. The description of all the packages in Yocto are combined into 'prescriptions' (recipes), which in turn are combined into 'layers' (layers).

OpenBMC has three layers:

Facebook is not the first to use Yocto at BMC. Proprietary Dell iDRAC is built on the same assembly system.

OpenBMC is easily ported from one platform to another using rebuilding with several bitbake commands. This allows you to use the same BMC and, as a result, the same API on different hardware platforms. This can break the current tradition of dependence of the software stack on the hardware.

The OCP community quickly became imbued with the idea of OpenBMC, and another OCP member, IBM, was actively involved in the development. Their efforts created a fork of the project under OpenPOWER , and by August 2015 the first version of OpenBMC was released for the Rackspace Barreleye server under the SoC AST2400 . IBM engineers resolutely got down to business and not only adapted OpenBMC for a new platform, but significantly reworked it. At the same time, due to the tight deadlines and for ease of development, they actively used Python.

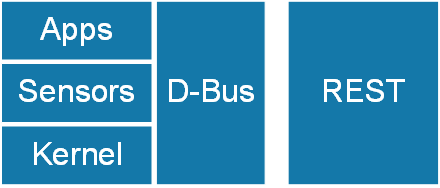

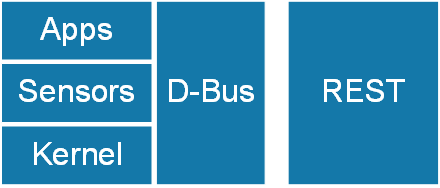

The changes affected all layers of the project, including the redesigned Linux kernel for SoC (installable drivers, device tree added), a D-Bus for interprocess communication appeared at the user level (facebook D-Bus did not exist). It is through D-Bus that all OpenBMC functions are implemented. The main way to work with OpenBMC is a REST interface for accessing bus interfaces. In addition, there is a dropbear ssh.

There is a web-access to the REST API (for debugging, for example) through the Python Bottle framework.

Thanks to the easy portability of OpenBMC from one platform to another, debugging boards, up to RaspberryPI, can be used for development. To simplify development, an assembly for the QEMU emulator is provided.

Now OpenBMC has a fairly austere console interface via SSH. IPMI is minimally supported by the host. The REST interface can be used by applications for remote control and monitoring. Some of the most popular features are implemented through the

Probably, 90% of the operations performed through the BMC is the on / off of the server. In OpenBMC, this is done by the

Also, for example, through

Now, not only IBM is actively involved in the OpenBMC project, but also many other vendors interested in avoiding the closed BMC stack. IBM itself focuses mainly on adapting to the P9 platform.

Most of the development of OpenBMC is under the Apache-2.0 license, but OpenBMC includes many components with different licenses (for example, the Linux kernel and the u-boot under GPLv2). The result is a mix of different open source licenses. In addition, developers can add their own proprietary components to the final assembly, which work in parallel with Open Source.

As is clear from the text above, we design the software part of our service processor based on OpenBMC. The product is still raw, but the very minimum of functions for server administration is already implemented in it, IPMI is partially implemented (for the most basic needs). Service processors in servers with this set of features were on the market several years ago.

OpenBMC is constantly changing and improving, almost every day on the gerrit server you can see new commits. Therefore, to concentrate strongly on the functionality at the moment is not very grateful. Refactoring is continuously performed, the Python code is replaced with C / C ++, more functions are transferred to systemd.

The attitude to the service processor as to a normal server is not typical for BMC because of its important role in the life of the server. The use of systemd and D-Bus has not been common in this area before. New time - new trends.

The first task for us is to adapt the current state of OpenBMC to our platform. Next, we plan to modify it with options and interfaces in which our customers are interested. Given the limited functionality at the moment, there are a great many areas for development. As new features are implemented, we will definitely commit changes to the OpenBMC project for the community to benefit from.

To tell the whole story, I have to start from a distance: first the purpose of service processors and the role of the BMC in a server, then the IPMI protocol and the software side. After that, I briefly describe how the BMC takes part in booting POWER8 systems. I finish with an overview of the OpenBMC project and our view of it. Readers already familiar with service processors can skip ahead to the later sections.

Service processors - what, why and how

A service processor is a separate, specialized controller embedded in the server. Its chip can be soldered onto the motherboard, mounted on a separate card or, for example, placed in a blade chassis to manage the resources of the whole system (in which case it may be called an SMC, System Management Controller). A BMC is the special case of a service processor that controls a single host. From here on we discuss only BMCs and use "service processor" to mean "BMC". By "BMC" we generally mean both the chip itself and its control firmware; where it matters, we say explicitly whether we mean the hardware or the software.

The BMC is powered separately from the main system: it turns on automatically as soon as standby power reaches the server and keeps running until power is removed. Almost all service processors can manage host power, provide access to the main operating system's console via Serial over LAN (SoL), read system sensors (fan speeds, power supply and VRM voltages, component temperatures), monitor component health, and store the hardware event log (SEL). Many also offer remote KVM, virtual media (DVD, ISO), support for various out-of-band protocols (IPMI/RMCP, SSH, Redfish, RESTful, SMASH), and more.


Remote management is now ubiquitous. It simplifies managing a large server fleet, improves availability by reducing downtime, and raises the operational efficiency of data centers. As a result, customers take extensive remote management capabilities into account when choosing a hardware platform vendor.

The main users of the BMC are system administrators, who rely on it for remote control, disaster recovery, log collection, OS installation, and so on. Technical support also uses data from the service processor: for support engineers, the BMC is often the only source of information when troubleshooting and identifying faulty components to replace.

In modern infrastructure, the BMC is more than a nice extra for remote server management (although not having to go to a server room that is cold and noisy, has nowhere to sit, and gets poor mobile reception is certainly nice). In many situations it is a critical infrastructure component: when the operating system or an application stops responding or ends up in an undefined state, the service processor is the only source of information and the fastest way to recover.

The service processor is reached either through a dedicated network port (out-of-band) or through a network port shared with the main system (sideband). In the sideband case there is one physical Ethernet connector but two independent MAC addresses and two IP addresses. An RS-232 serial console is often used for initial setup.

IPMI

Quick reference

Historically, BMC software was developed together with the server hardware platform, by the same developers. As a result, every platform had its own unique service processor software, and a single vendor could maintain several versions of BMC firmware for different product lines. Despite the spread of open source, BMC firmware long remained highly proprietary.

Typically, the service processor is built on a specialized system-on-chip (SoC), and the de facto standard describing the hardware architecture requirements is the IPMI (Intelligent Platform Management Interface) specification. It is a fairly old standard: a group of companies developed the first IPMI specification back in 1998 to standardize server management.

IPMI provides a generic messaging interface for accessing all the managed components in a system and defines a large set of interfaces for different types of operations, such as monitoring temperature, voltage, and fan speed, or accessing the OS console. It also provides methods for managing power for the whole system, retrieving the SEL (System Event Log) hardware logs, reading sensor data records (SDR), and implementing hardware watchdogs. IPMI replaces, or abstracts away, individual sensor access methods such as the System Management Bus (SMBus) or Inter-Integrated Circuit (I2C). Most BMCs use a proprietary IPMI stack from one of a small number of vendors.
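To make the message model above a bit more concrete, here is a minimal Python sketch that lists a few standard IPMI requests behind the operations just mentioned. The NetFn and command codes are taken from the IPMI v2.0 specification; the operation names and the `ipmitool_raw` helper are hypothetical and only build an `ipmitool raw` command line, they do not talk to a BMC.

```python
"""A few standard IPMI requests behind the operations described above.

NetFn/command codes follow the IPMI v2.0 specification. The operation names
and the ipmitool_raw() helper are hypothetical conveniences for illustration.
"""
from typing import NamedTuple


class IpmiCommand(NamedTuple):
    netfn: int  # network function of the request (request NetFns are even)
    cmd: int    # command code within that NetFn


# Illustrative operation name -> (NetFn, Cmd)
COMMANDS = {
    "get_device_id":      IpmiCommand(0x06, 0x01),  # App: Get Device ID
    "reset_watchdog":     IpmiCommand(0x06, 0x22),  # App: Reset Watchdog Timer
    "get_chassis_status": IpmiCommand(0x00, 0x01),  # Chassis: Get Chassis Status
    "chassis_control":    IpmiCommand(0x00, 0x02),  # Chassis: Chassis Control
    "get_sensor_reading": IpmiCommand(0x04, 0x2D),  # Sensor/Event: Get Sensor Reading
    "get_sdr":            IpmiCommand(0x0A, 0x23),  # Storage: Get SDR
    "get_sel_entry":      IpmiCommand(0x0A, 0x43),  # Storage: Get SEL Entry
}

# Request data byte for the Chassis Control command
CHASSIS_POWER_DOWN = 0x00
CHASSIS_POWER_UP = 0x01
CHASSIS_POWER_CYCLE = 0x02
CHASSIS_HARD_RESET = 0x03


def ipmitool_raw(name: str, data: bytes = b"") -> list[str]:
    """Build (but do not run) the argv for `ipmitool raw <netfn> <cmd> [data...]`."""
    c = COMMANDS[name]
    return ["ipmitool", "raw", f"0x{c.netfn:02x}", f"0x{c.cmd:02x}",
            *(f"0x{byte:02x}" for byte in data)]


if __name__ == "__main__":
    print(" ".join(ipmitool_raw("chassis_control", bytes([CHASSIS_POWER_UP]))))
```

For example, `ipmitool_raw("chassis_control", bytes([CHASSIS_POWER_UP]))` yields the arguments for `ipmitool raw 0x00 0x02 0x01`, the raw form of a chassis power-on request.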

Claims against IPMI

The protocol has drawn many complaints, including about the security of network access (IPMI over LAN). Stories like this one periodically shake the internet. The point is that whoever gains access to the service processor gains complete control over the server: nothing prevents them from rebooting it into recovery mode and changing the root password. The only reliable protection against this class of vulnerability is the rule that IPMI traffic (UDP port 623) must never leave a dedicated network or VLAN, and activity on that management network must be strictly controlled.
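One simple way to check the isolation rule above is to probe UDP port 623 from outside the management network. The sketch below, which assumes Python 3 and a placeholder host name, builds the standard RMCP/ASF Presence Ping that IPMI discovery tools send and reports whether a Presence Pong comes back. It is an audit aid under those assumptions, not a complete scanner.

```python
"""Sketch: does a BMC answer RMCP pings on UDP port 623?

Builds the RMCP/ASF Presence Ping used for IPMI-over-LAN discovery.
Run it from a network that should NOT reach the BMC. Host names are
placeholders.
"""
import socket
import struct

RMCP_PORT = 623
ASF_IANA = 4542            # 0x000011BE, the ASF enterprise number
ASF_PRESENCE_PING = 0x80
ASF_PRESENCE_PONG = 0x40


def build_presence_ping(tag: int = 0) -> bytes:
    # RMCP header: version 0x06, reserved, sequence 0xFF (no ACK), class 0x06 (ASF)
    rmcp = struct.pack("!BBBB", 0x06, 0x00, 0xFF, 0x06)
    # ASF message: IANA number, message type, tag, reserved, data length (0)
    asf = struct.pack("!IBBBB", ASF_IANA, ASF_PRESENCE_PING, tag & 0xFF, 0x00, 0x00)
    return rmcp + asf


def answers_rmcp(host: str, timeout: float = 2.0) -> bool:
    """Return True if `host` replies to a Presence Ping with a Presence Pong."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_presence_ping(), (host, RMCP_PORT))
        try:
            reply, _ = sock.recvfrom(512)
        except socket.timeout:
            return False
    # Byte 8 of the reply (after the 4-byte RMCP header and 4-byte IANA) is the type
    return len(reply) > 8 and reply[8] == ASF_PRESENCE_PONG
```

If `answers_rmcp("bmc.example.com")` returns `True` from a general-purpose network, IPMI traffic is not confined to its VLAN. A `False` result is not proof of isolation, since a BMC may simply ignore RMCP pings.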

Beyond security, the hardware landscape of data centers has changed a lot over the years: virtualization, disaggregation of components, and clouds have spread. Adding something new to IPMI is hard, and the more servers you have to administer, the more valuable automation becomes. API specifications are appearing to replace IPMI over LAN, and many pin their hopes on Redfish.

Redfish uses modern formats and protocols (JSON over HTTPS) and a RESTful interface for accessing out-of-band data. The goal of the new API is to offer the industry a single standard suitable for heterogeneous data centers: both for standalone complex enterprise servers and for cloud data centers built from many commodity servers. The API must also meet current security requirements.
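For comparison with IPMI, here is a hedged sketch of what a Redfish power-on request can look like, using only the Python standard library. The `ComputerSystem.Reset` action and its `ResetType` parameter come from the DMTF Redfish schema; the BMC host name, the system id `system`, and the credentials are placeholders, and real services may prefer session tokens over HTTP Basic authentication.

```python
"""Sketch: build a Redfish request that powers a host on.

The action path and ResetType come from the DMTF Redfish schema; the host,
system id ("system") and credentials are placeholders that vary per BMC.
"""
import base64
import json
import urllib.request


def build_reset_request(bmc: str, system_id: str, reset_type: str,
                        user: str, password: str) -> urllib.request.Request:
    url = (f"https://{bmc}/redfish/v1/Systems/{system_id}"
           "/Actions/ComputerSystem.Reset")
    body = json.dumps({"ResetType": reset_type}).encode()
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"})


if __name__ == "__main__":
    req = build_reset_request("bmc.example.com", "system", "On", "admin", "secret")
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would send it; BMCs often use self-signed
    # certificates, so a real client usually needs an SSL context as well.
```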

At the hardware level, however, IPMI support remains the standard, and IPMI is involved in the entire life cycle of the server: from power-up and loading the operating system to disaster recovery (hangs, panics, etc.).

The role of BMC in the life of the server. In this picture, SMS means "System Management Software". The picture is taken from here .

BMC's role in booting the OpenPOWER system

The BMC chip is at the heart of the IPMI hardware. It takes part in the server's operation from the moment power is applied, and on OpenPOWER it participates in the boot process: the BMC is required to power on the system. At the same time, a reboot or crash of the BMC does not affect an operating system that is already running.

The BMC and the POWER8 processor are connected by the LPC (Low Pin Count) bus. This bus is designed to connect the processor to relatively slow peripheral devices and runs at up to 33 MHz. Over LPC, the central processor (that is, the Hostboot/OPAL firmware) communicates with the BMC's IPMI stack through the BT (Block Transfer) interface (p. 104). Over the same bus, the POWER8 fetches its boot code from the PNOR (Processor NOR) flash chip via the LPC → SPI (Serial Peripheral Interface) bridge.
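The BT interface carries ordinary IPMI messages with a small header. The minimal sketch below shows that framing as we read it in the IPMI specification: a length byte counting the bytes after it, the NetFn/LUN byte, a sequence number that matches responses to requests, the command, and, in responses, a completion code. The function names are hypothetical, and the register-level handshake on the LPC bus is not modelled.

```python
"""Sketch of IPMI BT (Block Transfer) message framing.

Request:  Length | NetFn/LUN | Seq | Cmd | Data...
Response: Length | NetFn/LUN | Seq | Cmd | Completion code | Data...
Length counts the bytes after itself. Driving the BT registers over LPC
is out of scope; names here are illustrative.
"""
from dataclasses import dataclass


@dataclass
class BtResponse:
    netfn: int
    lun: int
    seq: int
    cmd: int
    completion_code: int
    data: bytes


def encode_bt_request(netfn: int, cmd: int, seq: int,
                      data: bytes = b"", lun: int = 0) -> bytes:
    # NetFn occupies the upper 6 bits, LUN the lower 2 bits
    body = bytes([(netfn << 2) | (lun & 0x3), seq & 0xFF, cmd]) + data
    return bytes([len(body)]) + body


def decode_bt_response(frame: bytes) -> BtResponse:
    length = frame[0]
    # A response needs at least NetFn/LUN, Seq, Cmd and a completion code
    if length != len(frame) - 1 or length < 4:
        raise ValueError("malformed BT response")
    return BtResponse(netfn=frame[1] >> 2, lun=frame[1] & 0x3, seq=frame[2],
                      cmd=frame[3], completion_code=frame[4], data=frame[5:])
```

The response NetFn is the request NetFn plus one (for example, 0x07 for an App request sent with 0x06), and the sequence number lets the host pair each response with its outstanding request.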

Role of the BMC in the Power off -> Standby phase

The first boot phase begins as soon as the power supplies are plugged in and ends when the BMC is fully up and ready to boot the host. Looking ahead: from here on I describe how BMC software works on OpenPOWER systems in general, but in our server specifically these functions are performed by OpenBMC. When power is applied, the BMC starts executing code from its SPI flash, loads U-Boot, and then the Linux kernel. At this stage the BMC runs its IPMI service and prepares the LPC bus for host access to the PNOR flash (mounted via mtdblock). The POWER8 chip is still off. In this state you can already connect to the BMC's network interface and work with it.

Standby -> OS boot

When the system is in standby and the power button is pressed, the BMC initiates the boot by starting the small SBE (Self Boot Engine) controller inside the master POWER8 chip, which loads the Hostboot firmware from the PNOR flash into the L3 cache of the master processor.

Hostboot microcode is responsible for initializing the processor buses, SDRAM memory, the other POWER8 processors, OPAL (Open Power Abstraction Layer) and another microcontroller built into POWER8, called OCC (On Chip Controller).

We will tell about this controller in more detail, since it has a special meaning for the BMC. When Hostboot finishes, the next component of the Skiboot microcode is started from the PNOR flash. This level synchronizes the processors, initializes the PCIe bus, and also interacts with the BMC via IPMI (for example, it updates the sensor value of “FW Boot progress”). Skiboot is also responsible for running the next boot level of Petitboot, which chooses where the main operating system will be loaded from and starts it via kexec.
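The sequence described in this and the previous section can be summarized as a simple ordered state model. This is purely an illustrative sketch: the stage names follow the text, while the strictly linear flow and the helper functions are our simplification, not OpenBMC or OpenPOWER code.

```python
"""Illustrative model of the OpenPOWER boot flow described in the text.

Stage names follow the article; the strictly linear ordering and the helper
functions are a simplification for illustration, not firmware code.
"""
from enum import IntEnum


class BootStage(IntEnum):
    POWER_OFF = 0    # no power applied
    BMC_BOOT = 1     # BMC runs U-Boot, then Linux, from its SPI flash
    STANDBY = 2      # BMC up, IPMI running, PNOR reachable over LPC; POWER8 off
    SBE = 3          # power button pressed: BMC starts the SBE on the master chip
    HOSTBOOT = 4     # runs from L3 cache: buses, memory, other CPUs, OCC
    SKIBOOT = 5      # OPAL: CPU sync, PCIe init, IPMI boot-progress updates
    PETITBOOT = 6    # chooses the boot device, kexecs the OS kernel
    OS_RUNNING = 7


def next_stage(stage: BootStage) -> BootStage:
    if stage is BootStage.OS_RUNNING:
        raise ValueError("host OS is already running")
    return BootStage(stage + 1)


def boot_path(start: BootStage = BootStage.POWER_OFF) -> list[str]:
    """Names of all stages from `start` up to a running OS."""
    path = [start]
    while path[-1] is not BootStage.OS_RUNNING:
        path.append(next_stage(path[-1]))
    return [s.name for s in path]
```

Modelling the stages as an ordered enum makes it easy to log or assert progress, which is roughly what the "FW Boot Progress" sensor conveys to the BMC.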

Now let's step back to the OCC. The OCC is a PowerPC 405 core built into the IBM POWER8 processor alongside the main POWER8 cores (one OCC per chip). It has its own 512 KB of SRAM and access to main memory. It is a real-time system responsible for thermal control (monitoring memory and processor core temperatures), memory performance management, and monitoring processor voltage and frequency. The OCC makes all of this information available to the BMC over the I2C bus.

What exactly does the OCC do?

- Monitors the power status of server components.

- Monitors and controls component temperatures; if components overheat, it reduces memory performance (memory throttling).

- If necessary, reduces processor frequency and power consumption by lowering the maximum P-state (performance state, see ACPI). The OCC does not set the P-state directly, however: it sets the limits within which the operating system can change the P-state.

- Provides power and temperature information to the BMC for efficient fan control.
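The split of responsibilities in the P-state item above (the OCC sets limits, the OS chooses within them) can be sketched as two small functions. Everything here is hypothetical: frequencies in MHz stand in for real POWER8 P-state indices, and the throttling rule and thresholds are invented only to show the clamping idea.

```python
"""Sketch: the OCC sets the allowed range, the OS picks a point inside it.

Hypothetical model: MHz values stand in for real P-state indices, and the
throttling rule and thresholds are invented for illustration.
"""
from dataclasses import dataclass


@dataclass
class PStateLimits:
    min_mhz: int
    max_mhz: int


def occ_limits(nominal: PStateLimits, temp_c: float,
               throttle_at_c: float = 85.0, step_mhz: int = 200) -> PStateLimits:
    """Hypothetical OCC policy: lower the ceiling one step per whole degree
    above the threshold, but never below the floor."""
    over = max(0.0, temp_c - throttle_at_c)
    ceiling = max(nominal.min_mhz, nominal.max_mhz - int(over) * step_mhz)
    return PStateLimits(nominal.min_mhz, ceiling)


def effective_frequency(os_request_mhz: int, limits: PStateLimits) -> int:
    """The OS request is honoured only within the window set by the OCC."""
    return min(max(os_request_mhz, limits.min_mhz), limits.max_mhz)
```

The OS governor keeps full control inside the window; the OCC only moves the window's edges, which matches the division of labour described in the list.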

Thus, the OCC is an information provider for the BMC, to which it is connected via the I2C bus. The source code of most POWER8 firmware, including the OCC firmware, has been open-sourced by IBM.

Besides talking to the OCC over I2C and to the CPU over the LPC bus, the BMC has other interfaces. For example, GPIOs on the BMC chip are used to control power supplies and LEDs, and I2C can also be used to read sensors.
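On a Linux-based BMC such as OpenBMC, sensors on I2C are usually exposed by kernel drivers through the hwmon sysfs interface. The sketch below reads every `tempN_input` file (values are in millidegrees Celsius) under `/sys/class/hwmon`; which chips and labels actually appear depends on the platform and its device tree, so treat the output format as an assumption.

```python
"""Sketch: read temperature sensors via the Linux hwmon sysfs interface.

tempN_input holds millidegrees Celsius; `name` is the driver/chip name and
tempN_label an optional label. Available sensors depend on the platform.
"""
from pathlib import Path


def read_hwmon_temps(root: str = "/sys/class/hwmon") -> dict[str, float]:
    temps: dict[str, float] = {}
    for hwmon in sorted(Path(root).glob("hwmon*")):
        name_file = hwmon / "name"
        chip = name_file.read_text().strip() if name_file.exists() else hwmon.name
        for inp in sorted(hwmon.glob("temp*_input")):
            sensor = inp.name[: -len("_input")]          # e.g. "temp1"
            label_file = hwmon / f"{sensor}_label"
            label = label_file.read_text().strip() if label_file.exists() else sensor
            try:
                millideg = int(inp.read_text().strip())
            except (OSError, ValueError):
                continue  # sensor unreadable or not present right now
            temps[f"{chip}/{label}"] = millideg / 1000.0
    return temps


if __name__ == "__main__":
    for key, celsius in read_hwmon_temps().items():
        print(f"{key}: {celsius:.1f} °C")
```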

All in all, the way these components are interconnected is not that complicated.

Today, most OpenPOWER firmware is open source. The service processor software and its IPMI stack, however, remained proprietary until recently. OpenBMC was the first open source project for the service processor; the community received it with enthusiasm and began developing it actively. OpenBMC is what we discuss next.

OpenBMC, its history and features

Finally, we come to the story of how OpenBMC emerged and how we use it.

The birth of OpenBMC at Facebook

OpenBMC originated at Facebook in 2015, during the development of the Wedge switch within the OCP community. Initially, the BMC software was developed by the hardware vendor. In the first months of work, many new requirements for the BMC emerged; coordinating them with the vendor's developers was difficult and caused delays. Prompted by this, at one of the hackathons four Facebook engineers implemented some of the basic BMC functions in 24 hours. The result was far from production-ready, but it became clear that BMC software could be developed separately from the hardware.

A few months later, OpenBMC was officially released together with the Wedge switch, and some time after that its source code was opened up as part of the OCP partnership.

Facebook then adapted OpenBMC for the Lightning NVMe flash shelf and later for the Yosemite microserver chassis. In the latter, Facebook dropped RMCP/RMCP+ (IPMI over LAN) in favor of a REST API over HTTP(S) and console access over SSH. As a result, Facebook got a single API for managing different types of equipment and more flexibility in implementing new features, which would have been impossible with a proprietary BMC.

In Facebook's view, the BMC is an ordinary server, just one running on an SoC with limited resources (slow processor, little memory, small flash). With this in mind, OpenBMC was conceived as a specialized Linux distribution whose packages are all built from source with the Yocto Project. In Yocto, package descriptions are grouped into recipes, which in turn are grouped into layers.

OpenBMC has three layers:

- Common layer: user-space applications (hardware independent).

- SoC layer: Linux kernel, bootloader, C library.

- Platform/product layer: packages specific to a particular product, kernel configuration, sensor libraries.

Facebook was not the first to use Yocto for a BMC: Dell's proprietary iDRAC is built with the same build system.

OpenBMC is easy to port from one platform to another: it is rebuilt for the new target with a few bitbake commands. This makes it possible to run the same BMC software, and therefore the same API, on different hardware platforms, which could break the long-standing tradition of tying the software stack to the hardware.

IBM's fork of the project

The OCP community quickly became imbued with the idea of OpenBMC, and another OCP member, IBM, was actively involved in the development. Their efforts created a fork of the project under OpenPOWER , and by August 2015 the first version of OpenBMC was released for the Rackspace Barreleye server under the SoC AST2400 . IBM engineers resolutely got down to business and not only adapted OpenBMC for a new platform, but significantly reworked it. At the same time, due to the tight deadlines and for ease of development, they actively used Python.

The changes touched every layer of the project: the Linux kernel for the SoC was reworked (loadable drivers, device tree support), and D-Bus was introduced in user space for interprocess communication (Facebook's version had no D-Bus). All OpenBMC functions are implemented on top of D-Bus. The main way to work with OpenBMC is a REST interface that exposes the D-Bus interfaces; there is also SSH access via Dropbear.
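To illustrate how the REST interface mirrors D-Bus objects, here is a sketch that only builds requests and does not send them. The `/login` call, the `attr/` and `enumerate` suffixes, and the host-state object follow the REST cheat sheet in later OpenBMC documentation; builds from the era described here used `/org/openbmc/...` object paths, so the exact paths are an assumption for any given firmware, and the host name and credentials are placeholders.

```python
"""Sketch: the OpenBMC REST server exposes D-Bus objects as URLs.

Paths follow later OpenBMC REST documentation (xyz.openbmc_project
namespace); older builds used /org/openbmc/... instead. Host and
credentials are placeholders.
"""
import json
import urllib.request

HOST_STATE = "/xyz/openbmc_project/state/host0"   # a D-Bus object path


def rest_url(bmc: str, object_path: str, suffix: str = "") -> str:
    """A D-Bus object path maps directly onto the URL path."""
    return f"https://{bmc}{object_path}{suffix}"


def login_request(bmc: str, user: str, password: str) -> urllib.request.Request:
    body = json.dumps({"data": [user, password]}).encode()
    return urllib.request.Request(f"https://{bmc}/login", data=body, method="POST",
                                  headers={"Content-Type": "application/json"})


def set_property_request(bmc: str, object_path: str, prop: str,
                         value: object) -> urllib.request.Request:
    """PUT <object>/attr/<Property> with {"data": value} sets a D-Bus property."""
    body = json.dumps({"data": value}).encode()
    return urllib.request.Request(rest_url(bmc, object_path, f"/attr/{prop}"),
                                  data=body, method="PUT",
                                  headers={"Content-Type": "application/json"})


def power_on_request(bmc: str) -> urllib.request.Request:
    return set_property_request(bmc, HOST_STATE, "RequestedHostTransition",
                                "xyz.openbmc_project.State.Host.Transition.On")
```

In a real session the cookie returned by `/login` must accompany later requests (for example through `http.cookiejar`), and BMCs typically present self-signed certificates.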

Web access to the REST API (useful, for example, for debugging) is provided through the Python Bottle framework.

Thanks to OpenBMC's easy portability, development boards, even a Raspberry Pi, can be used for development. To simplify development further, a build for the QEMU emulator is provided.

OpenBMC currently has a fairly austere console interface over SSH. IPMI support is minimal, mostly what the host needs. Applications can use the REST interface for remote control and monitoring. Some of the most popular features are available through the `obmcutil` command.

Probably 90% of the operations performed through a BMC are turning the server on and off. In OpenBMC this is done with `obmcutil poweron` and `obmcutil poweroff`.

`obmcutil` also shows sensor values and detailed information about the server hardware (FRU), if the specific platform supports it:

`obmcutil getinventory`

`obmcutil getsensors`

Today, not only IBM but also many other vendors interested in moving away from closed BMC stacks are actively involved in the OpenBMC project. IBM itself focuses mainly on adapting it to the POWER9 (P9) platform.
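Because `obmcutil` runs on the BMC itself, a management workstation typically reaches it over SSH. Here is a minimal sketch of such a wrapper; the host name, the `root` login, and key-based SSH are assumptions, and the allowed subcommands are just the four named above.

```python
"""Sketch: run obmcutil on a BMC over SSH from an admin workstation.

Assumptions: key-based SSH as `root`; only the subcommands named in the
article are allowed. Host names are placeholders.
"""
import subprocess

ALLOWED = {"poweron", "poweroff", "getinventory", "getsensors"}


def obmcutil_argv(bmc: str, subcommand: str, user: str = "root") -> list[str]:
    if subcommand not in ALLOWED:
        raise ValueError(f"unsupported obmcutil subcommand: {subcommand}")
    return ["ssh", f"{user}@{bmc}", "obmcutil", subcommand]


def obmcutil(bmc: str, subcommand: str, timeout: float = 60.0) -> str:
    """Run the command and return its stdout; raises CalledProcessError on failure."""
    result = subprocess.run(obmcutil_argv(bmc, subcommand), capture_output=True,
                            text=True, timeout=timeout, check=True)
    return result.stdout
```

Whitelisting subcommands keeps a script from sending arbitrary shell input to a component that, as noted earlier, has full control over the server.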

Most OpenBMC code is developed under the Apache-2.0 license, but OpenBMC includes many components under other licenses (for example, the Linux kernel and U-Boot are under GPLv2), so the result is a mix of open source licenses. In addition, developers can add their own proprietary components to the final image, running alongside the open source ones.

Our view on OpenBMC

As is clear from the above, we build the software for our service processor on OpenBMC. The product is still raw, but the bare minimum of server administration functions is already implemented, and IPMI is partially supported (for the most basic needs). Service processors with this feature set were already on the market several years ago.

OpenBMC changes and improves constantly; new commits appear on the Gerrit server almost every day. Therefore, concentrating heavily on functionality right now is not very rewarding. The code is continuously refactored: Python code is being replaced with C/C++, and more functions are moving to systemd.

Treating the service processor as an ordinary server has not been typical for BMCs, given their critical role in the life of a server, and systemd and D-Bus were not common in this area before. New times, new trends.

Our first task is to adapt the current state of OpenBMC to our platform. Next, we plan to extend it with the options and interfaces our customers are interested in. Given how limited the functionality is at the moment, there are a great many directions for development. As we implement new features, we will contribute the changes back to the OpenBMC project so that the community can benefit from them.

Source: https://habr.com/ru/post/317140/
