The Death of Transit Traffic?

Is there a light at the end of the tunnel for transit traffic providers?

I was struck at the recent NANOG meeting by how few talks dealt with the ISP space and ISP operations, and how many dealt with data center environments.

If there is one topic that dominates our conversations, it is the data center environment, which now seems to drive both network design and network operation. At the same time, the function of the Internet service provider (ISP), and in particular the function of the transit provider, appears to be fading. The task of delivering users to content has turned into the task of delivering content to users. Does this mean that transit for Internet users is no longer relevant? Let's take a closer look.

The Internet consists of several tens of thousands of individual networks, each of which plays a role. If we consider the Internet routing system to be a good indicator, then there are 55,400 individual networks (or “autonomous systems” in the language of the routing system).


Most of these networks sit at the periphery of the Internet and evidently provide no packet transit service to any other network; there are currently about 47,700 of them. The remaining 7,700 networks operate in a slightly different capacity. As well as announcing their own set of addresses to the Internet, they also carry traffic for other networks. In other words, they play the role of transit providers.
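The split between edge networks and transit networks can be illustrated with a small sketch. It assumes a simple heuristic that the article does not spell out: an autonomous system counts as "transit" if it appears anywhere in an AS path other than the final (origin) position. The function name and the sample paths are hypothetical, and the AS numbers come from the range reserved for documentation.

```python
from typing import Iterable


def classify_networks(as_paths: Iterable[list[int]]) -> tuple[set[int], set[int]]:
    """Split the ASes seen in a set of AS paths into (stub, transit).

    Assumed heuristic: an AS is 'transit' if it appears in any position
    other than the last (origin) position of at least one path.
    """
    seen: set[int] = set()
    transit: set[int] = set()
    for path in as_paths:
        # Collapse AS-path prepending (the same AS repeated back to back).
        hops = [asn for i, asn in enumerate(path) if i == 0 or asn != path[i - 1]]
        seen.update(hops)
        # Every hop except the origin is carrying someone else's route.
        transit.update(hops[:-1])
    return seen - transit, transit


# Hypothetical paths as a route collector might see them (origin AS is last).
sample_paths = [
    [64500, 64501, 64510],
    [64500, 64502, 64511],
    [64500, 64501, 64501, 64509],  # 64501 is prepended once
]
stub, transit = classify_networks(sample_paths)
```

Applied to a full routing table, a count of this kind is what yields figures such as the 47,700 edge networks and 7,700 transit networks quoted above, although the exact method behind those numbers is not given here.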

Why was this distinction important in the past? What was so special about transit?

A significant part of the answer lay in the financial arrangements between providers. In many activities where several providers contribute to a service delivered to a client, one provider typically receives payment from the client and distributes it among the other providers for their part in the service.

However logical that looks, the Internet never readily accommodated such arrangements. There is no clear model of what the “service” component is, and no general agreement on how to account for services in a way that could serve as the basis for any form of financial settlement between providers.

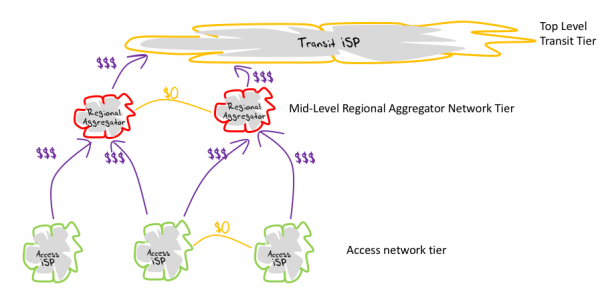

Ultimately, the Internet did without such mechanisms and adopted a simpler model based on just two forms of interconnection. In the first, one provider positions itself as a supplier to others, who become its customers and pay it for services. In the second, two providers position themselves as roughly equal; in this case they must reach a mutually acceptable arrangement for exchanging traffic with each other free of charge (so-called "peering") (Fig. 1).

Fig. 1. Provider/customer and peering models in the world of Internet service providers (ISPs)

In this environment, working out who is the provider and who is the customer can be difficult in some cases. Similarly, it is often hard to tell whether peering with another provider works in your favor or against it. But one criterion seemed largely self-evident: access providers pay transit providers to carry their traffic.

The overall result of these arrangements was a number of “tiers”, where players at the same tier usually settled on some form of peering, while in interactions across tiers the network in the lower tier became the customer and the one in the higher tier the provider. These tiers were not formally defined, and “admission” to any tier was not a predetermined outcome.

In many respects, this tiered model described the outcome of a negotiation process in which each provider's tier was effectively defined by the ISPs it interacted with: those who were its customers and those whose customer it was.

Fig. 2. Tiers in the ISP environment

It is fairly clear where this process led, because at the top of this hierarchy sat the dominant providers of transit services. At the "top" tier were the so-called Tier 1 providers. Together, these providers formed an informal "oligarchy" of the Internet (the Tier 1 club).

To understand why transit played such an important role, it was enough to look at relatively remote parts of the world, where transit costs completely dominated the cost of providing Internet access. In Australia a decade or so ago, about 75% of all data delivered to end users was hauled across the Pacific (and back), with Australian Internet service providers bearing the cost of this transit function. The result was high retail prices for Internet access, the use of data caps, and all sorts of efforts to cache data locally.

Even in markets where transit costs were not so dominant, there was still a clear distinction between tiers, in which the combination of infrastructure assets, the total number of direct and indirect customers (in other words, aggregate market share) and negotiating ability determined a provider's position relative to other ISPs in the same market. In such markets, transit mattered because the assessment of infrastructure assets included their breadth, that is, the span of their coverage. A regional or local ISP would find it hard to obtain peering with a provider that served, for example, the entire country.

But that was then, and it seems that a lot has changed in this model. The original model of the Internet was built around the carriage function, and its role was to carry client traffic in both directions to wherever the servers might be located. To borrow Senator Ted Stevens' phrase (taken out of the context of his monologue), this carriage-oriented model of the Internet was indeed "a series of tubes." Services populated the edge of the network, and the network was uniformly capable of connecting users to services wherever they were located. But there is a difference between capability and actual performance.

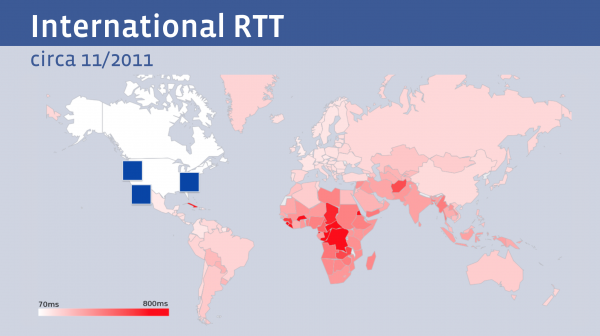

While the model allowed any user to reach any service, the forwarding path through the network differed noticeably between users accessing the same service. This often led to anomalous performance in which the farther the user was from the server, the slower the transfer became. For example, the figure below is taken from a Facebook presentation at NANOG 68 (Fig. 3).

Fig. 3. Internet performance, 2011

Areas served by geostationary satellites, and areas whose routes run over extremely long circuits, naturally saw much higher round-trip times (RTT) for data exchanged between the user and the point of service.

Naturally, this increase in RTT is not incurred just once. If we consider that there may be an extended DNS transaction, then a TCP handshake, potentially followed by a TLS handshake, then a request transaction, and then delivery of the requested content under TCP slow start, the average user sees a transaction delay of at least 3 RTTs, and more likely twice that.

A transaction that takes less than one tenth of a second for a user located in the same city where the server is located may require up to 6 seconds for a remote user.
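The way RTT multiplies across the phases of a transaction can be sketched as simple arithmetic. The per-phase round-trip counts below are illustrative assumptions (in particular, two round trips for the TLS handshake), chosen so that the total matches the "twice 3 RTTs" estimate above. The two RTT values in the usage lines, 15 ms for a same-city user and 1 second for a remote user, are likewise hypothetical.

```python
# Assumed number of round trips spent in each phase of one transaction.
ROUND_TRIPS = {
    "dns_lookup": 1,
    "tcp_handshake": 1,
    "tls_handshake": 2,      # assumption: a full handshake taking two round trips
    "http_request": 1,
    "slow_start_extra": 1,   # assumption: one extra round while TCP ramps up
}


def transaction_time(rtt_seconds: float) -> float:
    """Return the total delay when every phase pays the same RTT."""
    return rtt_seconds * sum(ROUND_TRIPS.values())


local_delay = transaction_time(0.015)   # hypothetical same-city RTT of 15 ms
remote_delay = transaction_time(1.0)    # hypothetical remote RTT of 1 second
```

Under these assumptions the local user waits under a tenth of a second and the remote user waits six seconds, which is the order of magnitude described above.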

These delays are not always the result of poor path selection by the routing system or of sparse underlying connectivity, although these considerations also matter. Part of the problem is simple physical reality: the size of the Earth and the speed of light.

It takes roughly a third of a second for a packet to travel up to a geostationary satellite and back. Satellite delay is determined by the speed of light and the distance from the Earth to a satellite in geostationary orbit.

With a submarine cable the situation is better, but not by much. The speed of light in optical fiber is two-thirds of the speed of light in a vacuum, so a trip across the Pacific Ocean and back can take 160 milliseconds. In a sense, this is the limit that can be reached by organizing an optimal transit topology for the Internet, and there is nowhere further to go after that, unless you are prepared to wait for continental drift to bring us all together again!
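The physical floor on these delays can be checked with a short calculation. This is a minimal sketch under stated assumptions: a geostationary altitude of 35,786 km with the satellite directly overhead, and a one-way trans-Pacific fiber path of 16,000 km, a hypothetical length chosen to be consistent with the 160 ms figure above. Real paths are longer than the straight-line minimum and add equipment delay, so the satellite result is a lower bound that sits somewhat below the rounded figure quoted in the text.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in a vacuum
FIBER_VELOCITY_FACTOR = 2 / 3       # light in fiber travels at about 2/3 c
GEO_ALTITUDE_KM = 35_786            # assumed geostationary altitude


def propagation_ms(path_km: float, velocity_factor: float = 1.0) -> float:
    """Time in milliseconds for a signal to cover path_km at velocity_factor * c."""
    return path_km / (SPEED_OF_LIGHT_KM_S * velocity_factor) * 1000


# Up to the satellite and back down, satellite directly overhead (best case).
satellite_hop_ms = propagation_ms(2 * GEO_ALTITUDE_KM)

# There and back over an assumed 16,000 km one-way trans-Pacific fiber path.
pacific_rtt_ms = propagation_ms(2 * 16_000, FIBER_VELOCITY_FACTOR)
```

No amount of routing optimization reduces either number, which is why the only remaining lever is to shorten the distance itself.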

If you really want better performance for your service, the next step is clear: abandon transit altogether and move a copy of the content closer to the user.

That is what is happening now. Submarine cable projects today are focused more on connecting the data centers of the largest providers than on carrying user traffic to remote data centers. In 2008, Google began taking ownership stakes in submarine cables and currently holds positions in six of them (see, for example, the recent report of a new cable (Fig. 4)).

Fig. 4. CDN cable report

Tim Stronge, vice president of TeleGeography, as reported by WIRED, said that this new cable continues the current trend:

“Large content providers have huge and often unpredictable traffic requirements, especially between their own data centers. Their requirements are such that, on their busiest routes, it makes sense for them to build cables rather than buy capacity. Owning submarine fiber pairs also gives them the freedom to upgrade when they see fit, without depending on a third-party submarine cable operator.”

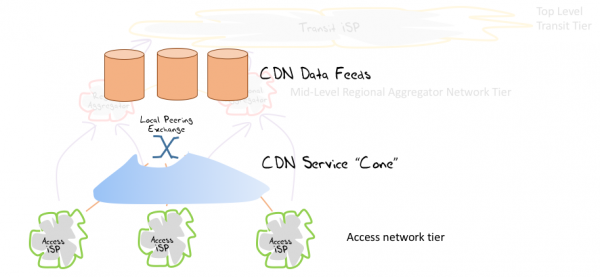

This shift in how content is accessed also changes the role of Internet exchanges.

The initial motivation for such an exchange was to bring together a number of local providers of similar size so that they could exchange traffic with each other directly. The exchange let them avoid paying transit providers to make these cross-connections and, where there was a significant share of local traffic, the exchange met a real need by replacing part of the transit provider’s role with a local switching function.

However, exchange operators quickly realized that when they also hosted data center services at the exchange, local exchange participants saw a larger share of user traffic served at the exchange, which increased its relative importance.

There is another factor pushing content and services toward these specialized content distribution systems: the threat of malicious attack. Some enterprises, especially those whose core business is not the provision of online services, find it difficult to make significant investments in staff skills and infrastructure to withstand network attacks.

The desire to make a service more resilient under attack, combined with users' demands for fast access, calls for some form of anycast service, in which the service is distributed across many access points close to different groups of users. It is therefore not surprising that content distribution companies such as Akamai and Cloudflare (to name just two) see strong demand for their services.

But then, if the content is shifted to data centers located closer to the users, what should transit traffic providers do?

The outlook is not bright and, although this may be only an early warning sign, it is likely that we have seen the last of the long-distance cable infrastructure projects financed by transit ISPs (at least for the time being).

In many developed consumer Internet markets, end users simply no longer need to reach remote services in the volumes they once did. Instead, content providers move their main content much closer to the user, into content distribution systems, and it is these systems that take the lead in financing further expansion of the Internet's long-distance infrastructure.

For the user, this means better performance and potentially lower cost, especially if we look only at the delivery component of the cost. The need for long-distance transit infrastructure still exists, but it is now the content providers who take on the bulk of that need. The remaining user-driven transit component becomes a niche activity, understood only by the initiated and far removed from mainstream data services.

As a result, the overall architecture of the Internet is changing too. From the flat, any-to-any peer-to-peer mesh of the early 1990s Internet, we have moved largely to a client/server architecture, where most traffic flows between clients and servers and traffic between clients themselves is almost absent.

Network Address Translators (NATs) contributed to this by effectively hiding clients until they initiated a connection to a server. It is probably thanks to these translators alone that the exhaustion of IPv4 addresses did not turn into the catastrophe we all expected. By the time the supply of new addresses had substantially dried up, we had largely moved away from the peer-to-peer model of network connectivity, and the growing use of NATs in access networks turned out to be fully compatible with the client/server access model. By the time we began using NAT at full scale, the client/server service model was already firmly established, and the two approaches complemented each other.
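The way a NAT hides clients until they initiate a connection can be shown with a toy translation table. This is a deliberately simplified sketch, not a description of any real NAT implementation: it ignores the remote endpoint, protocols and binding timeouts. The class name, method names and addresses are hypothetical, and the addresses come from private and documentation ranges.

```python
from typing import Optional


class SimpleNat:
    """Toy NAT: bindings exist only after an inside client sends outbound traffic."""

    def __init__(self, public_ip: str, first_port: int = 40000) -> None:
        self.public_ip = public_ip
        self._next_port = first_port
        self._outbound: dict[tuple[str, int], int] = {}   # inside endpoint -> public port
        self._inbound: dict[int, tuple[str, int]] = {}    # public port -> inside endpoint

    def outbound(self, private_ip: str, private_port: int) -> tuple[str, int]:
        """Translate an outgoing packet, creating a binding on first use."""
        key = (private_ip, private_port)
        if key not in self._outbound:
            self._outbound[key] = self._next_port
            self._inbound[self._next_port] = key
            self._next_port += 1
        return self.public_ip, self._outbound[key]

    def inbound(self, public_port: int) -> Optional[tuple[str, int]]:
        """Translate an incoming packet; None means no binding, so it is dropped."""
        return self._inbound.get(public_port)


nat = SimpleNat("203.0.113.1")
before = nat.inbound(40000)                  # unsolicited: no client has spoken yet
mapped = nat.outbound("192.168.1.10", 5555)  # client opens a connection to a server
after = nat.inbound(40000)                   # the reply now finds its way back
```

An outside party has no way to reach the client until the client speaks first, which suits a client/server model and works against a peer-to-peer one.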

It is now quite possible that we will go on to split the client/server model into smaller pieces, effectively directing each client into a service “cone” defined by a set of local data centers (Fig. 5).

Fig. 5. "Service cones" of content delivery networks (CDNs)

If the world of Internet services is in fact defined by a small number of CDN providers (Google, Facebook, Amazon, Akamai, Microsoft, Apple, Netflix, Cloudflare, and perhaps a few more), and all other service providers largely place their services in these huge content clouds, then why should client traffic travel to remote data centers at all? What exactly is the remaining need for long-distance transit? Do transit providers have a future?

It is probably somewhat premature to say so just yet. To paraphrase Mark Twain, reports of the death of Internet transit providers are greatly exaggerated, and that is very likely true today. But it must be remembered that there is no mandate from above that preserves a fully connected Internet or imposes some particular service model on it.

In a world of private investment and private enterprise, it is not uncommon for providers to solve the problems they choose to solve, and to do so in ways that bring them profit. At the same time, they ignore what they see as a hindrance and, given that their time and energy are limited, whatever offers them no commercial benefit. Thus, while “universal service” and “universal connectivity” may have been meaningful qualities in the regulated old world of telephony (and in the explicit social contract that underpinned that system), such notions of social obligation carry no binding force on the Internet. If users do not value an offering enough to pay for it, then service providers have limited incentive to provide it!

If transit is a little-used service with little demand, can we envisage an Internet architecture that eliminates transit altogether?

It is certainly possible to picture an Internet that evolves toward a collection of client “cones” hanging off local data distribution points, in which entirely different mechanisms are used to coordinate the movement of data between centers or to synchronize them. Instead of a single Internet, we would see a distributed structure that is broadly similar to today's client/server environment, but with some level of implicit segmentation between each of these service “clouds”. Admittedly, this is a rather strong extrapolation from the current situation, but the result is not beyond the bounds of possibility.

In such a collection of service cones, is there any value left in a globally consistent address space? If all traffic flows are confined within each service cone, then the remaining addressing requirement is, at best, to distinguish endpoints within the cone, with no specific requirement for uniqueness across the entire network (whatever “the entire network” might mean in such a system). For anyone who still remembers the IETF's work on RSIP (Realm-Specific IP, RFC 3102), that is the kind of thing I have in mind.
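The idea of addresses that need to be unique only within a cone can be sketched as a directory keyed by the pair (cone, address) rather than by address alone. This is purely an illustration of the scoping argument, not an implementation of RSIP or of any existing system. The class, cone names and endpoint labels are hypothetical.

```python
from ipaddress import IPv4Address, IPv6Address, ip_address
from typing import Union

Address = Union[IPv4Address, IPv6Address]


class ConeDirectory:
    """Endpoints are identified by (cone, address), so uniqueness is per cone only."""

    def __init__(self) -> None:
        self._endpoints: dict[tuple[str, Address], str] = {}

    def register(self, cone: str, address: str, endpoint: str) -> None:
        key = (cone, ip_address(address))
        if key in self._endpoints:
            # A clash matters only inside the same cone.
            raise ValueError(f"{address} is already in use within {cone}")
        self._endpoints[key] = endpoint

    def lookup(self, cone: str, address: str) -> str:
        return self._endpoints[(cone, ip_address(address))]


directory = ConeDirectory()
directory.register("cone-a", "10.0.0.1", "client-1")
directory.register("cone-b", "10.0.0.1", "client-2")  # same address, different cone
```

Both registrations succeed because nothing ever needs to compare addresses across cones. The cost is that an address on its own no longer identifies an endpoint.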

But this may only come about some years from now, if it comes about at all ...

Today we see further shifts in the ongoing tension between carriage and content. We see declining use of a model that invests mainly in carriage so that the user is “transported” to the door of the content bunker.

In its place comes a model that pushes a copy of the content toward the user, bypassing many of the carriage functions used previously. In other words, content is now in the ascendant, and the value of carriage services, especially long-distance transit, is declining noticeably.

However, this model also raises some interesting questions about the coherence of the Internet. Not all services are the same, and not all content is delivered in the same way. A content delivery network may make assumptions about the default language in which to present its service, or enforce regional content licensing. For example, Netflix uses different catalogues in different parts of the world. The resulting service differs slightly from place to place.

At a more general level, we see some degree of segmentation or fragmentation in the architecture of the Internet as a result of service specialization. In an environment segmented into almost autonomous service cones, what market force would ensure that every possible service is loaded at every service distribution point? Who will pay for this heavily duplicated provisioning of services? And if no one is prepared to underwrite this model of “universal” content, to what extent will the “Internet” differ from place to place on the planet? Will all domain names be consistently available and uniformly resolved in this segmented environment?

Obviously, such considerations about tailoring the local service delivered in each region lead fairly quickly to Internet fragmentation, so I will stop here and leave them as open questions, whose answers may emerge as a side effect of the possible demise of transit and the growth of content delivery networks.

How long this distribution-oriented delivery model will last is anyone's guess. It may well be just a phase that we are passing through, with today's data-center-centric view an intermediate stage rather than where we eventually end up. So perhaps it is not yet the right time to abandon the traditional concepts of universal connectivity and an all-encompassing transit service, even though today's Internet already seems to be taking a rather large step in a completely different direction!

Source: https://habr.com/ru/post/316754/
