Unity: compressing the already compressed

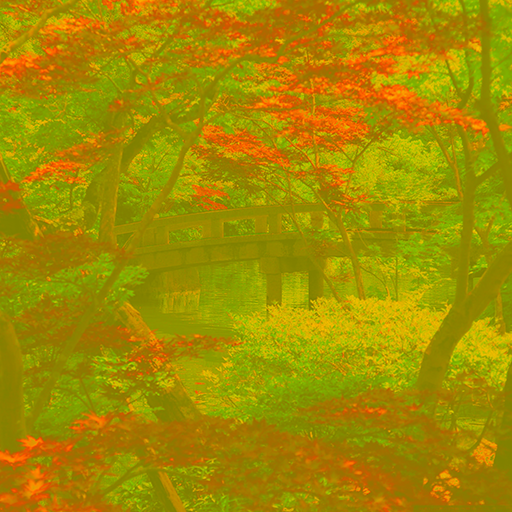

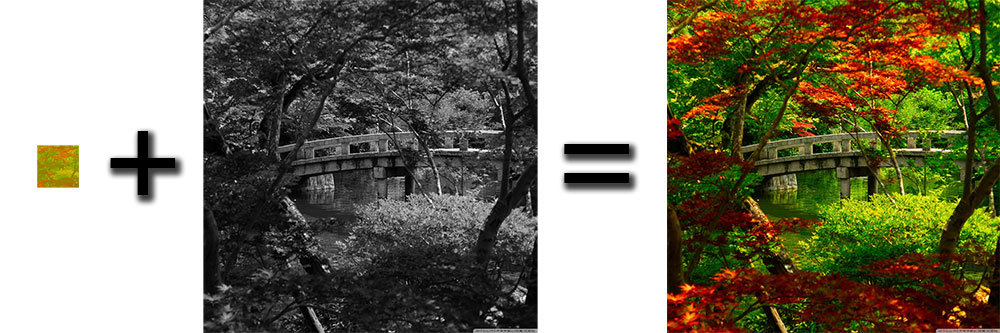

Result: the color information takes up 1/64 of the original area, and the quality of the result is still fairly high. The test image is taken from this site.

Textures are almost always the biggest consumer of space, both on disk and in RAM. Compressing them into one of the supported formats helps considerably, but what if there are still too many textures even then, and you want more?

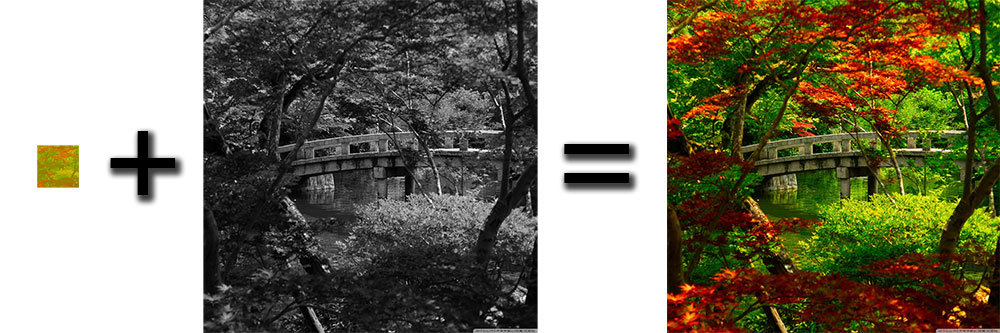

The story began about a year and a half ago, when a game designer (let's call him Akkelman), experimenting with layer blending modes in Photoshop, discovered the following: if you desaturate a texture and overlay a color copy of it that is 2-4 times smaller, with the blending mode set to “Color”, the picture looks very close to the original.

Storage Features

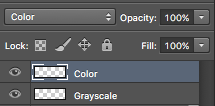

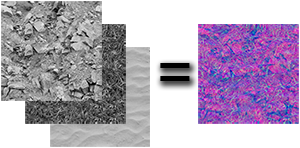

What is the point of this separation? The black-and-white images essentially hold the brightness of the original image (hereinafter “grayscale”); they contain only intensity, so each one fits in a single color channel. That is, an ordinary picture without transparency, with its 3 color channels R, G, B, can hold 3 such grayscales without wasting space. You could also use the 4th channel, A (transparency), but it causes big problems on mobile devices (there is no universal format on Android with GLES2 that supports compression of RGBA textures, compression quality deteriorates a lot, and so on), so for the sake of universality only the 3-channel solution is considered. If this is implemented, we get almost 3-fold compression (plus a negligibly small “color” texture) on top of already compressed textures.
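The packing described above can be sketched in a few lines. This is only an illustration of the idea, not the code from the project: the function names `pack_grayscales` and `select_channel` are hypothetical, and images are modelled as plain nested lists instead of real textures.

```python
def pack_grayscales(gray_r, gray_g, gray_b):
    """Pack three equally sized grayscale images into one RGB image.

    Each input is a list of rows of intensity values; the result is a list
    of rows of (r, g, b) texels, one grayscale per color channel.
    """
    if not (len(gray_r) == len(gray_g) == len(gray_b)):
        raise ValueError("all grayscales must have the same height")
    return [
        list(zip(row_r, row_g, row_b))
        for row_r, row_g, row_b in zip(gray_r, gray_g, gray_b)
    ]


def select_channel(texel, mask):
    """Pick one grayscale back out of a packed texel.

    `mask` is (1, 0, 0), (0, 1, 0) or (0, 0, 1); the dot product is the
    branch-free way a shader would typically do the same selection.
    """
    return sum(channel * weight for channel, weight in zip(texel, mask))


atlas = pack_grayscales([[10, 20]], [[30, 40]], [[50, 60]])
print(select_channel(atlas[0][1], (0, 1, 0)))
```

Selecting with a mask rather than an index keeps the lookup free of conditionals, which matters for the shader cost discussed later in the article.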

Assessment of feasibility

You can roughly estimate the benefit of such a solution. Suppose we have a 3x3 field of textures with a resolution of 2048x2048 without transparency, each compressed with DXT1 / ETC1 / PVRTC4 and taking 2.7 MB (16 MB uncompressed). The total memory used is 9 * 2.7 MB = 24.3 MB. If we can extract the color from each texture, shrink this “color” map to 256x256 at 0.043 MB (it looks quite tolerable, that is, it is enough to store 1/64 of the total texture area), and pack the full-size grayscales three at a time into new textures, the approximate size is 0.043 MB * 9 + 3 * 2.7 MB = 8.5 MB (an estimate, rounded up). That is 2.8x compression, which sounds pretty good given the limited hardware of mobile devices and the unlimited appetites of designers and content creators. You can either cut resource consumption and load time considerably, or ship more content.
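The estimate above is easy to reproduce. The sketch below simply plugs in the per-texture sizes quoted in the text (2.7 MB and 0.043 MB); the function and parameter names are made up for this illustration.

```python
import math


def memory_before(texture_count, full_size_mb):
    """Total size when every texture is stored as a full compressed RGB texture."""
    return texture_count * full_size_mb


def memory_after(texture_count, full_size_mb, color_map_mb, per_atlas=3):
    """Total size with one small color map per texture and the grayscales
    packed `per_atlas` at a time into full-size atlases."""
    atlas_count = math.ceil(texture_count / per_atlas)
    return texture_count * color_map_mb + atlas_count * full_size_mb


before = memory_before(9, 2.7)        # 9 textures, 2.7 MB each
after = memory_after(9, 2.7, 0.043)   # 9 color maps + 3 grayscale atlases
print(f"before: {before:.1f} MB, after: {after:.1f} MB, ratio: {before / after:.2f}x")
```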

First try

Well, let's try. A quick search turned up a ready-made algorithm / implementation of the “Color” blending mode. After studying its source, my hair stood on end: about 40 branches (conditional jumps that hurt performance on less-than-top-end hardware), 160 ALU instructions and 2 texture samples. That much computation is a lot not only for mobile devices but for desktop as well, so it is not suitable for real time at all. The designer was told as much, and the topic was safely closed and forgotten.

Second try

A couple of days ago the topic resurfaced, and it was decided to give it a second chance. We do not need 100% compatibility with Photoshop's implementation (we are not trying to blend several textures in several layers); we need a faster solution with a visually similar result. The basic implementation looked like a round-trip conversion between the RGB and HSL spaces, with calculations in between. Refactoring brought the shader down to 50 ALU instructions and 9 branches, which was already at least 2 times faster, but still not enough. After I asked the audience for help, comrade wowaaa suggested how to rewrite the piece that generated branching so that it used no conditions, for which many thanks to him. Part of the conditional calculations was moved into a lookup texture, which was generated by an editor script and then simply sampled in the shader. After all the optimizations, the complexity dropped to 17 ALU instructions and 3 texture samples, with no branching.

It looks like a victory, but not quite. First of all, such complexity is still excessive for mobile devices; it needs to be at least 2 times lower. Secondly, all of this had been tested only on high-contrast pictures filled with solid colors.
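The article does not show the actual rewrite, so the following is only a generic illustration of the kind of transformation involved: a well-known branch-free formula for turning a hue into a fully saturated RGB color, placed next to a conditional version of the same function. Both function names are hypothetical, and Python stands in for shader code here; `%`, `abs`, `min` and `max` correspond to the shader's mod, abs and clamp operations.

```python
def hue_to_rgb_branchy(hue):
    """Reference version with explicit conditionals (hue in [0, 1))."""
    def channel(t):
        t %= 1.0
        if t < 1 / 6:
            return 6 * t
        if t < 1 / 2:
            return 1.0
        if t < 2 / 3:
            return (2 / 3 - t) * 6
        return 0.0

    return channel(hue + 1 / 3), channel(hue), channel(hue - 1 / 3)


def hue_to_rgb_branchless(hue):
    """Same result using only mod, abs and clamp, with no conditionals."""
    def channel(offset):
        return min(max(abs((hue * 6 + offset) % 6 - 3) - 1, 0.0), 1.0)

    return channel(0), channel(4), channel(2)


for step in range(6):
    print(step / 6, hue_to_rgb_branchless(step / 6))
```

On a GPU the second form compiles to a handful of arithmetic instructions, whereas the first one may turn into real branches or several selects, depending on the compiler.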

Example of artifacts: the incorrect result is on the left, the reference is on the right

After tests on real pictures with gradients and other delights (nature photos), it turned out that this implementation is very sensitive to the combination of the “color” map resolution with the mipmap and filtering settings: obvious artifacts appeared, caused by mixing texture data in the shader and by rounding errors / compression of the textures themselves. Yes, it was possible to use uncompressed textures with POINT filtering and without drastically shrinking the “color” map, but then the experiment lost all meaning.

Third try

Then the audience came to the rescue once more. Comrade Belfegnar, who loves “graphics, next-gen, and all that” and reads all the available research on the subject, suggested a different color space, YCbCr, and contributed patches to my test bench that added support for it. Right away the shader complexity dropped to 8 ALU instructions, with no branching and no lookup textures. I was also sent links to papers with formulas by all sorts of brainy mathematicians who had tested different color spaces for viability. From these, variants for RDgDb, LDgEb and YCoCg were put together (only the last one can readily be found by searching; the first two are described at these links: sun.aei.polsl.pl/~starstar/index.html , sun.aei.polsl.pl/~rstaros/papers/s2014-jvcir-AAM.pdf ). RDgDb and LDgEb are built on a single base channel (used as the full-size grayscale) and the relation of the two remaining channels to it. A person perceives differences in color poorly but differences in brightness quite well. With these two spaces, strong compression of the “color” map lost not only color but also contrast, so the quality suffered badly.

In the end YCoCg “won”: its data is based on brightness, it tolerates compression of the “color” map well (the colors wash out more than with YCbCr, but contrast is preserved better under compression), and its shader is cheaper than the YCbCr one.

“Color” map: the packed data (CoCg) is stored in the RG channels; the B channel is empty (it can be used for user data).

After the basic implementation, another round of trial-and-error optimization followed, but I did not get very far with it.
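For reference, here is a minimal sketch of the standard YCoCg transform and of a color-map layout like the one just described. The article does not list the shader itself, so the details are assumptions: in particular the +0.5 bias that moves Co and Cg from [-0.5, 0.5] into the [0, 1] range of a texture channel, and the function names.

```python
def rgb_to_ycocg(r, g, b):
    """Standard YCoCg transform for RGB components in [0, 1]."""
    y = 0.25 * r + 0.5 * g + 0.25 * b
    co = 0.5 * r - 0.5 * b
    cg = -0.25 * r + 0.5 * g - 0.25 * b
    return y, co, cg


def pack_color_texel(co, cg):
    """Store Co and Cg in the R and G channels; B stays free (assumed layout)."""
    return (co + 0.5, cg + 0.5, 0.0)


def decode(y, color_texel):
    """Rebuild RGB from a brightness sample and a color-map texel.

    This mirrors what a fragment shader would do with its two texture
    samples: a few additions and subtractions, no conditionals.
    """
    co = color_texel[0] - 0.5
    cg = color_texel[1] - 0.5
    tmp = y - cg
    return (tmp + co, y + cg, tmp - co)


y, co, cg = rgb_to_ycocg(0.2, 0.6, 0.9)
print(decode(y, pack_color_texel(co, cg)))
```

The inverse needs only additions and subtractions, which is consistent with the low instruction counts reported in the article.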

Summary

Once again, the picture with the result: the resolution of the color texture can be varied over a wide range without significant loss in quality.
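The effect of shrinking the color texture can be tried offline with a small simulation. This is a simplified sketch under several assumptions: images are nested lists of RGB tuples with dimensions divisible by `factor`, the color map is produced by plain box averaging and read back with nearest-neighbour lookup (a GPU would normally filter bilinearly), and block compression is not modelled. All names are hypothetical.

```python
def rgb_to_ycocg(rgb):
    r, g, b = rgb
    return (0.25 * r + 0.5 * g + 0.25 * b,
            0.5 * r - 0.5 * b,
            -0.25 * r + 0.5 * g - 0.25 * b)


def ycocg_to_rgb(y, co, cg):
    tmp = y - cg
    return (tmp + co, y + cg, tmp - co)


def split_image(image, factor):
    """Return full-resolution brightness and a color map `factor` times
    smaller along each axis (so factor=8 keeps 1/64 of the area)."""
    converted = [[rgb_to_ycocg(px) for px in row] for row in image]
    luma = [[px[0] for px in row] for row in converted]
    chroma = []
    for top in range(0, len(image), factor):
        chroma_row = []
        for left in range(0, len(image[0]), factor):
            block = [converted[y][x]
                     for y in range(top, top + factor)
                     for x in range(left, left + factor)]
            chroma_row.append((sum(p[1] for p in block) / len(block),
                               sum(p[2] for p in block) / len(block)))
        chroma.append(chroma_row)
    return luma, chroma


def reconstruct(luma, chroma, factor):
    """Combine per-pixel brightness with the nearest color-map texel."""
    return [[ycocg_to_rgb(value, *chroma[y // factor][x // factor])
             for x, value in enumerate(row)]
            for y, row in enumerate(luma)]
```

Comparing `reconstruct(*split_image(image, factor), factor)` with the original image for different values of `factor` gives a rough feel for how far the color map can be reduced before the error becomes visible.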

The experiment was quite successful: a shader (without transparency support) with a complexity of 6 ALU instructions and 2 texture samples, and 2.8x memory compression. In each material you can specify which color channel of the grayscale atlas is used as brightness. Likewise, for the shader with transparency support, you select which color channel of the grayscale atlas is used as alpha.

Sources: Github

License: MIT license.

All characters are fictional, and any resemblance to people who live or have ever lived is purely coincidental. No designer was harmed in the course of this experiment.

Source: https://habr.com/ru/post/316672/
