IIS Request Filtering against DDoS attacks

The site is down

A customer whose websites I used to support contacted me because his site was down and returning a 500 error. It is an ordinary ASP.NET WebForms site. I would not call it heavily loaded, but it has had database performance problems before (MS SQL Server runs on a separate server). The database server had recently been replaced and the data migrated.

This site is not the customer's core business, so it is barely maintained. It has no monitoring or metrics collection, and in general nobody keeps a close eye on it.

Telemetry data

The following anomalies stood out:

- The w3wp process was using more than 50% of the CPU (usually much less).

- The number of threads in this process kept growing (the site could not keep up with serving clients).

- The disk on the database server was at 100% utilization (Active Time).

- The disk queue length on the drive holding the project databases was high (it usually stays around zero or one).

- RAM on the database server was fully used.

- The profiler showed a single hot method that queries the database.

Tuning the DBMS

My first hypothesis was that the problem lay on the database server and was caused by the migration: something had been left unconfigured, the jobs that update statistics and rebuild indexes were not running, and so on.

Memory: it was immediately clear that when the DBMS was migrated, nobody had capped its RAM usage on the new server, so we capped it. From past experience, 24 GB (out of 32 GB total) was quite enough for this configuration.

We check the jobs: everything is in order. We run Tuning Advisor and create the missing indexes (among them an index for the hot query from the profiler). The effect is close to zero: the site is still down.

IIS

I log in to the web server and everything immediately becomes clear: it is a DDoS.

This is the first time it has happened, and it is unclear who would want it. There are ten times more requests than usual, so what do the logs say?

The logs show about 200 requests per second (according to the metrics, regular users generate up to ten per minute). The requests all come from different IP addresses; the only thing they share is a similar User-Agent:

| Method | Client IP | User-Agent | Status |
| --- | --- | --- | --- |
| GET | 101.200.177.82 | WordPress/4.2.2; www.renwenqifei.com; +verifying+pingback+from+37.1.211.155 | 503 |
| GET | 54.69.1.242 | WordPress/4.2.10; 54.69.1.242; +verifying+pingback+from+37.1.211.155 | 503 |
| GET | 123.57.33.117 | WordPress/4.0; www.phenomenon.net.cn; +verifying+pingback+from+37.1.211.155 | 503 |
| GET | 54.69.236.133 | WordPress/4.3.1; +http://www.the-call-button.com; +verifying+pingback+from+37.1.211.155 | 503 |
| GET | 52.19.227.86 | WordPress/4.3.6; 52.19.227.86; +verifying+pingback+from+37.1.211.155 | 503 |
| GET | 52.27.233.237 | WordPress/4.1.13; 52.27.233.237; verifying+pingback+from+37.1.211.155 | 503 |
| GET | 202.244.241.54 | WordPress/3.5.1; www.fm.geidai.ac.jp | 503 |
| GET | 52.34.12.105 | WordPress/4.3.6; 52.34.12.105; verifying+pingback+from+37.1.211.155 | 503 |
| GET | 128.199.195.155 | WordPress/4.3.6; www.glamasia.com; +verifying+pingback+from+37.1.211.155 | 503 |
| GET | 61.194.65.94 | WordPress/4.2.10; wpwewill.help; +verifying+pingback+from+37.1.211.155 | 503 |
| GET | 23.226.237.2 | WordPress/4.3.1; hypergridder.com; +verifying+pingback+from+37.1.211.155 | 503 |
| GET | 104.239.228.203 | WordPress/4.2.5; pjtpartners.com; +verifying+pingback+from+37.1.211.155 | 503 |
| GET | 104.239.168.88 | WordPress/4.2.10; creatorinitiative.com; +verifying+pingback+from+37.1.211.155 | 503 |
| GET | 166.78.66.195 | WordPress/3.6; remote.wisys.com/website | 503 |
| GET | 212.34.236.214 | WordPress/3.5.1; nuevavista.am | 503 |

This is a known type of attack: a WordPress site with Pingback enabled (it is enabled by default) can be used in a DDoS attack against other sites. There is a more detailed article on Habr.
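Spotting such a pattern by eye works for a handful of lines, but a short script makes it obvious. The following is a minimal Python sketch, assuming the IIS log is in the W3C extended format with a `#Fields:` directive that includes `cs(User-Agent)`. The function name `count_user_agents` and the sample log lines (dates, URLs, field selection) are made up for illustration and are not taken from the customer's logs.

```python
from collections import Counter


def count_user_agents(lines):
    """Count requests per User-Agent product name in W3C extended log lines."""
    fields = []
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        if line.startswith("#Fields:"):
            # The directive lists the column names used by the lines that follow.
            fields = line[len("#Fields:"):].split()
            continue
        if line.startswith("#") or not fields:
            continue  # other directives, or data before any #Fields line
        values = line.split()
        if len(values) != len(fields):
            continue  # skip malformed lines instead of guessing
        record = dict(zip(fields, values))
        agent = record.get("cs(User-Agent)", "-")
        # Keep only the product name before the first "/", e.g. "WordPress".
        counts[agent.split("/", 1)[0]] += 1
    return counts


# Illustrative sample only; real logs have more fields and many more lines.
SAMPLE_LOG = """\
#Version: 1.0
#Fields: date time c-ip cs-method cs-uri-stem cs(User-Agent) sc-status
2016-12-01 10:00:00 101.200.177.82 GET / WordPress/4.2.2;+verifying+pingback+from+37.1.211.155 503
2016-12-01 10:00:00 54.69.1.242 GET / WordPress/4.2.10;+verifying+pingback+from+37.1.211.155 503
2016-12-01 10:00:01 203.0.113.7 GET /default.aspx Mozilla/5.0+(Windows+NT+10.0) 200
""".splitlines()

if __name__ == "__main__":
    for family, hits in count_user_agents(SAMPLE_LOG).most_common():
        print(family, hits)
```

On a real log file, pass the open file object instead of `SAMPLE_LOG`; a single product name dominating the counts is the signal to look for.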

Configuring request filtering

Requests can be filtered at several levels. The first is the firewall: we extract the offending IP addresses, add them to the firewall, and pick up newly appearing ones on a schedule. Pros: very fast, and no garbage requests in the logs. Cons: you have to write scripts and keep an eye on them.
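To give an idea of what such a script involves, here is a rough Python sketch of the firewall approach. It assumes you already have (client IP, User-Agent) pairs extracted from the logs and that the server uses Windows Firewall, so it builds `netsh advfirewall firewall add rule` commands. The function name `blocking_commands` and the `ddos-block` rule prefix are hypothetical. The script only produces the command text and does not execute anything.

```python
import ipaddress


def blocking_commands(records, marker="WordPress", rule_prefix="ddos-block"):
    """Build one firewall block command per offending client IP.

    records: iterable of (client_ip, user_agent) pairs taken from the logs.
    """
    seen = set()
    commands = []
    for ip, agent in records:
        if marker.lower() not in agent.lower():
            continue  # not part of the attack pattern
        try:
            address = ipaddress.ip_address(ip)
        except ValueError:
            continue  # never pass unvalidated text to a shell command
        if address in seen:
            continue  # one rule per address is enough
        seen.add(address)
        commands.append(
            f'netsh advfirewall firewall add rule name="{rule_prefix}-{address}" '
            f"dir=in action=block remoteip={address}"
        )
    return commands


if __name__ == "__main__":
    sample = [
        ("101.200.177.82", "WordPress/4.2.2; verifying pingback"),
        ("101.200.177.82", "WordPress/4.2.2; verifying pingback"),
        ("203.0.113.7", "Mozilla/5.0"),
    ]
    for command in blocking_commands(sample):
        print(command)
```

With thousands of attacking addresses this produces thousands of rules, which is part of why this level needs ongoing attention.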

The second level is IIS itself (the obvious downside is that garbage requests still end up in the logs). The third level is to write your own module and plug it in. This is the most flexible approach, but it is laborious and performs the worst of the three.

I settled on the second level, because it gave me a solution with the least effort.

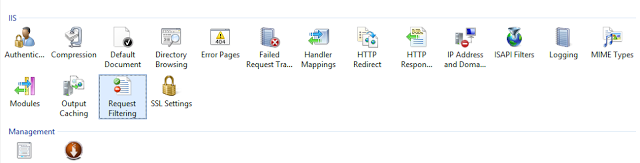

IIS has many filtering capabilities. In this case Request Filtering is the right fit. Its installation and configuration are covered in more detail in the IIS documentation.

Select the site → Request Filtering → Rules → Add Filtering Rule

We specify that we want to reject every request whose User-Agent header contains the word WordPress.

Alternatively, put the equivalent settings in the web.config file:

    <system.webServer>
      <security>
        <requestFiltering>
          <filteringRules>
            <filteringRule name="ddos" scanUrl="false" scanQueryString="false">
              <scanHeaders>
                <clear />
                <add requestHeader="User-Agent" />
              </scanHeaders>
              <denyStrings>
                <clear />
                <add string="WordPress" />
              </denyStrings>
            </filteringRule>
          </filteringRules>
        </requestFiltering>
      </security>
    </system.webServer>

Immediately after this filter was applied, the site came back up and all metrics returned to normal. Had I checked the logs right away, the whole thing would have taken less than half an hour.
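Before deploying a rule like this, it can help to dry-run it against a few sample User-Agent values. The sketch below parses the same configuration with Python's standard XML library and applies the deny strings as a case-insensitive substring match. That matching behaviour is my assumption about how IIS evaluates the rule, not something the module guarantees, and `load_rules` and `is_denied` are hypothetical helper names.

```python
import xml.etree.ElementTree as ET

# The rule from the article, wrapped in a <configuration> root element.
WEB_CONFIG = """
<configuration>
  <system.webServer>
    <security>
      <requestFiltering>
        <filteringRules>
          <filteringRule name="ddos" scanUrl="false" scanQueryString="false">
            <scanHeaders>
              <clear />
              <add requestHeader="User-Agent" />
            </scanHeaders>
            <denyStrings>
              <clear />
              <add string="WordPress" />
            </denyStrings>
          </filteringRule>
        </filteringRules>
      </requestFiltering>
    </security>
  </system.webServer>
</configuration>
"""


def load_rules(config_xml):
    """Return a list of (rule name, scanned headers, deny strings) tuples."""
    root = ET.fromstring(config_xml)
    rules = []
    for rule in root.iter("filteringRule"):
        headers = [e.get("requestHeader") for e in rule.findall("./scanHeaders/add")]
        deny = [e.get("string") for e in rule.findall("./denyStrings/add")]
        rules.append((rule.get("name"), headers, deny))
    return rules


def is_denied(rules, request_headers):
    """Return the name of the first matching rule, or None.

    Assumption: a deny string matches as a case-insensitive substring.
    """
    lowered = {key.lower(): value.lower() for key, value in request_headers.items()}
    for name, headers, deny in rules:
        for header in headers:
            value = lowered.get(header.lower(), "")
            if any(text.lower() in value for text in deny):
                return name
    return None


if __name__ == "__main__":
    rules = load_rules(WEB_CONFIG)
    print(is_denied(rules, {"User-Agent": "WordPress/4.2.2; verifying pingback"}))
    print(is_denied(rules, {"User-Agent": "Mozilla/5.0 (Windows NT 10.0)"}))
```

This only checks the rule's logic on paper; the real verification is still a request against the live site with a matching User-Agent.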

What else can IIS do?

IIS is a very capable and fast web application server. Besides handing requests over to the managed environment, it can do a lot on its own, and in terms of performance it clearly beats managed solutions.

In the Request Filtering section you can configure many more filters: by HTTP method, URL segment, query string parameter, file extension, and so on. In an ASP.NET project you can block PHP-specific requests (for example, attempts to access .htaccess or a password file). You can also reject malicious requests, for example ones containing SQL injection. The point here is not protection against these attacks but saving server resources: IIS discards such requests automatically and does so quickly, with minimal memory and CPU time.

Another mechanism is called IP Address and Domain Restrictions. It lets you build allow lists and deny lists of IP addresses to restrict access to the site. You can block a malicious scraper or, conversely, open a test site or an admin panel only to certain IP addresses. Read more in the official documentation.
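The logic behind such lists is simple enough to sketch in a few lines of Python. This is only an illustration of the allow list and deny list idea, not how IIS implements it. The `make_checker` helper and its `allow_unlisted` parameter are hypothetical names, and the addresses come from documentation-reserved ranges.

```python
import ipaddress


def make_checker(entries, allow_unlisted=True):
    """Build an access check from (network, allowed) pairs.

    entries: list of (address or CIDR string, bool) pairs; the first match wins.
    allow_unlisted: the decision for clients that match no entry.
    """
    parsed = [(ipaddress.ip_network(net), allowed) for net, allowed in entries]

    def is_allowed(client_ip):
        address = ipaddress.ip_address(client_ip)
        for network, allowed in parsed:
            if address in network:
                return allowed
        return allow_unlisted

    return is_allowed


# Deny list: everyone may enter except one scraper.
public_site = make_checker([("203.0.113.7", False)], allow_unlisted=True)

# Allow list: an admin panel reachable only from the office network.
admin_panel = make_checker([("192.0.2.0/24", True)], allow_unlisted=False)

if __name__ == "__main__":
    print(public_site("203.0.113.7"), public_site("198.51.100.1"))
    print(admin_panel("192.0.2.15"), admin_panel("198.51.100.1"))
```

The two examples differ only in the default decision for unlisted clients, which is the main choice to make when setting up either kind of list.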

The third mechanism that can help you counter DDoS attacks and unwanted scrapers is Dynamic IP Address Restrictions.

We cannot always keep up with an attacker's constantly changing IP addresses, but this tool lets us limit the request rate. When a single IP address sends an abnormally large number of requests, IIS quickly responds with 403 or 404. Be careful with search engine bots. See the official documentation.
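The idea of limiting the request rate per address can be illustrated with a small sliding-window counter. The Python sketch below shows the general technique only and makes no claim about how IIS implements it. The `RateLimiter` class, its parameters, and the choice not to count rejected requests are simplifying assumptions.

```python
from collections import defaultdict, deque


class RateLimiter:
    """Allow at most max_requests per client within a sliding time window."""

    def __init__(self, max_requests, period_seconds):
        self.max_requests = max_requests
        self.period = period_seconds
        # Timestamps of recently accepted requests, kept per client IP.
        self.history = defaultdict(deque)

    def allow(self, client_ip, now):
        """Return True if a request arriving at time `now` should be served."""
        window = self.history[client_ip]
        # Forget requests that have fallen out of the window.
        while window and now - window[0] >= self.period:
            window.popleft()
        if len(window) >= self.max_requests:
            return False  # over the limit: the server would answer 403 or 404
        window.append(now)
        return True


if __name__ == "__main__":
    limiter = RateLimiter(max_requests=3, period_seconds=1.0)
    for moment in (0.0, 0.1, 0.2, 0.3, 1.05):
        print(moment, limiter.allow("198.51.100.1", moment))
```

The warning about search engine bots applies here directly: a legitimate crawler that fetches many pages from one address looks exactly like an abuser to this kind of counter, so the threshold needs some headroom.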

Conclusions

Do not disable logging, and set up monitoring that clearly alerts you to anomalies in the metrics. This can be done quickly and cheaply, and it will save you time when investigating incidents. Setting up monitoring, as well as administrative and organizational countermeasures, is beyond the scope of this article.

Source: https://habr.com/ru/post/316550/
