Queues and locks. Theory and practice

We exhaled after HighLoad ++ and continue to publish the best reports of the past. HighLoad ++ turned out great, the number of organizational improvements spasmed into a new product quality. Habr, by the way, led the text translation from the conference ( first , second days).

Dear colleagues! My report will be about the thing, without which not a single HighLoad project can do - about the queue server, and if I have time, I’ll tell you about the blocking (the decryptor’s note had time :).

')

What will the report be about? I will tell you about where and how queues are used, why all this is needed, I will tell you a little bit about protocols.

Since Our conference calls HighLoad Junior, I would like to go from the Junior project. We have a typical Junior project - this is some kind of web page that accesses the database. Maybe this is an electronic store or something else there. And so, the users went and went to us, and at some stage we got a mistake (maybe another error):

We climbed the Internet, began to explore how to scale, we decided to get backends.

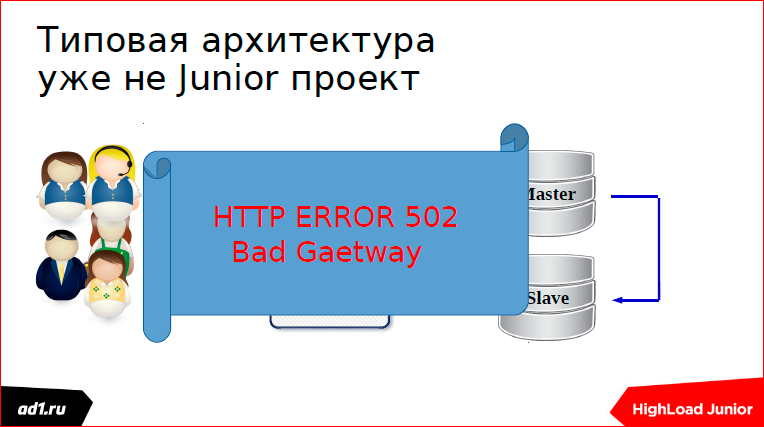

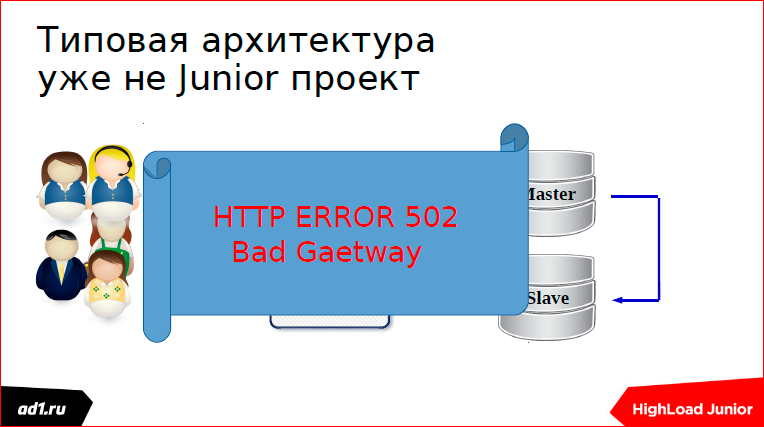

More users and more users went, and we had another mistake:

Then we got into the logs, looked at how you can scale the SQL-server. Found and made replication.

But here we got errors in MySQL:

Well, errors can be in simpler configurations, I showed it here figuratively.

And at this moment we begin to think about our architecture.

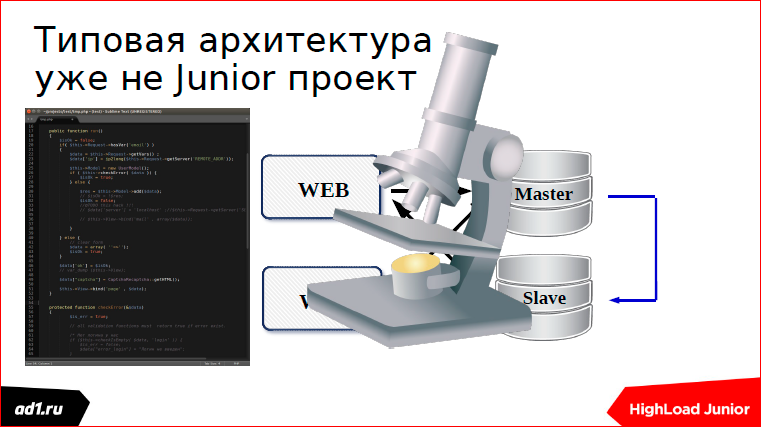

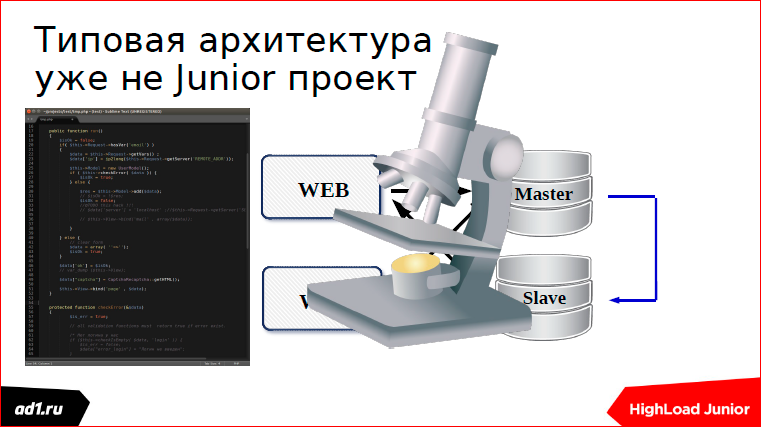

We view our architecture “under the microscope” and single out two things:

The first is some critical elements of our logic that need to be done; and the second - some slow and unnecessary things that can be put off until later. And we are trying to divide this architecture:

We divided it into two parts.

We try to put one part on one server, and another part on another. I call this pattern a “cunning student”.

I myself once “suffered” with it: I told my parents that I had already done my homework and ran for a walk, and the next day I read these homework before homework and told the teachers in two minutes.

There is a more scientific name for this pattern:

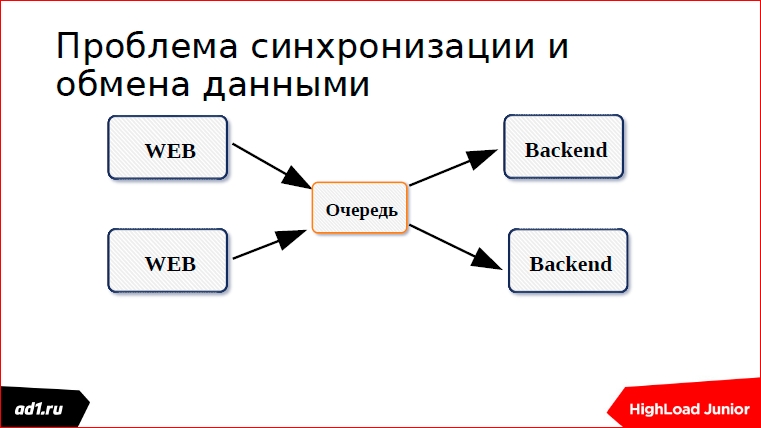

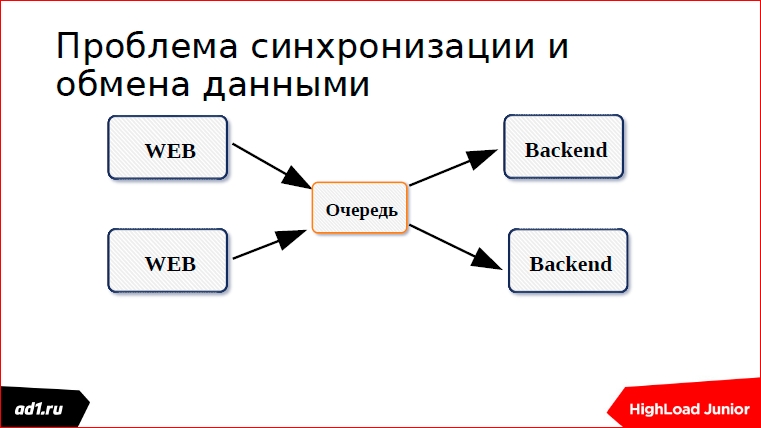

And in the end we come here to this architecture, where there is a web server and a backend server:

Between themselves, they must somehow bind. When these are two servers, it is made easier. When there are several, it is a little more complicated. And we wonder how to connect them? And one of the solutions of communication between these servers is a queue.

What is the queue? The queue is a list.

There is a longer and tedious, but this is just a list where we write the elements, and then we read them and delete them, we execute them. The list goes on, the elements are reduced, the queue is so regulated.

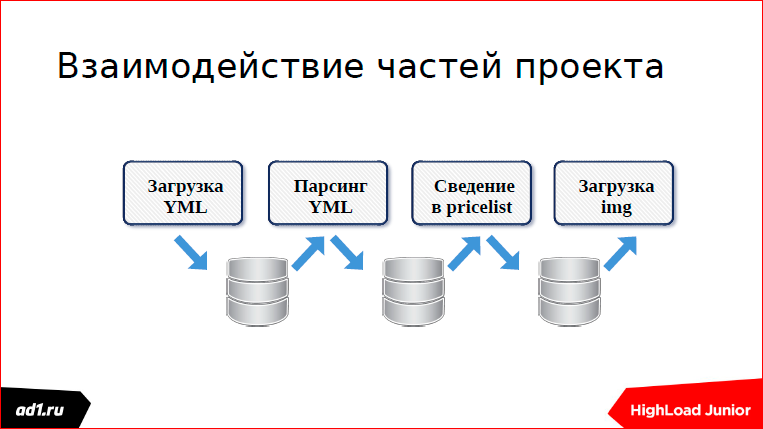

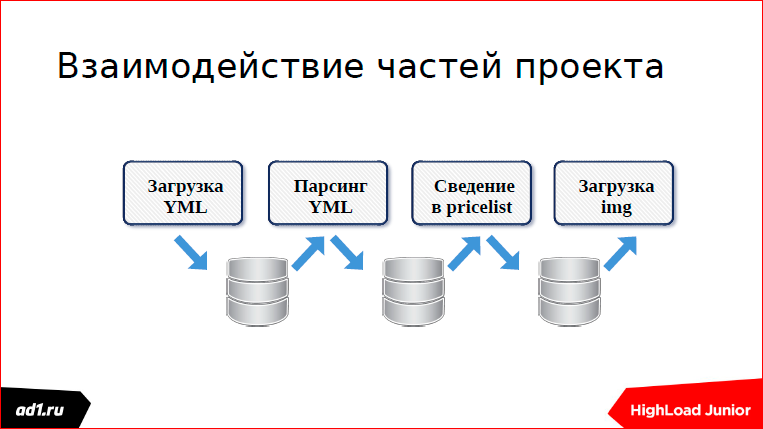

Moving on to the second part - where and how is it used? Once I worked in such a project:

This analogue of Yandex.Market. In this project, there are a lot of different services. And these services somehow had to be synchronized. They synchronized through the database.

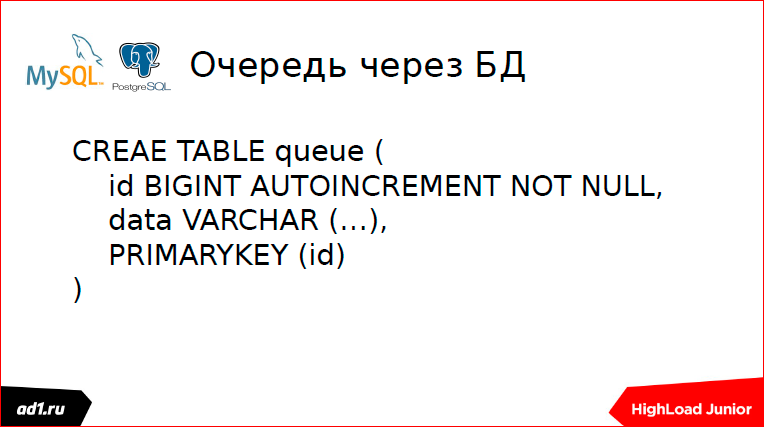

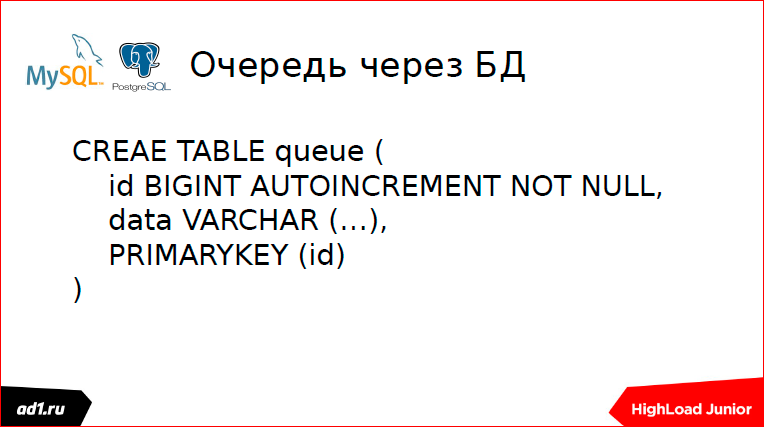

How is the queue on the database?

There is a certain counter - in MySQL it is an auto-increment, in Postgress this is realized through Sikkens; there is some data.

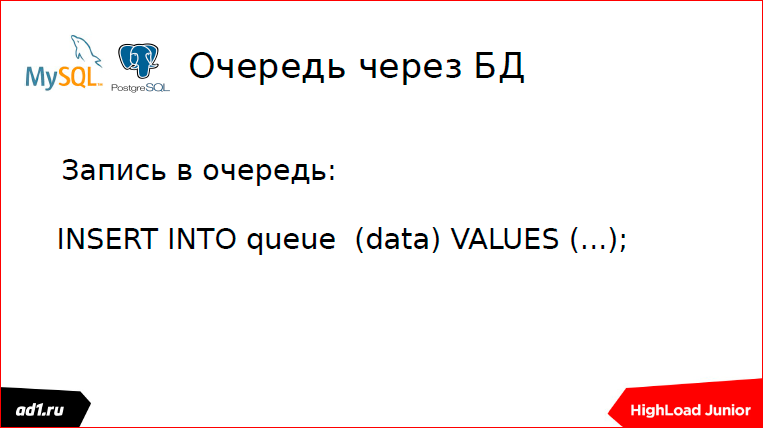

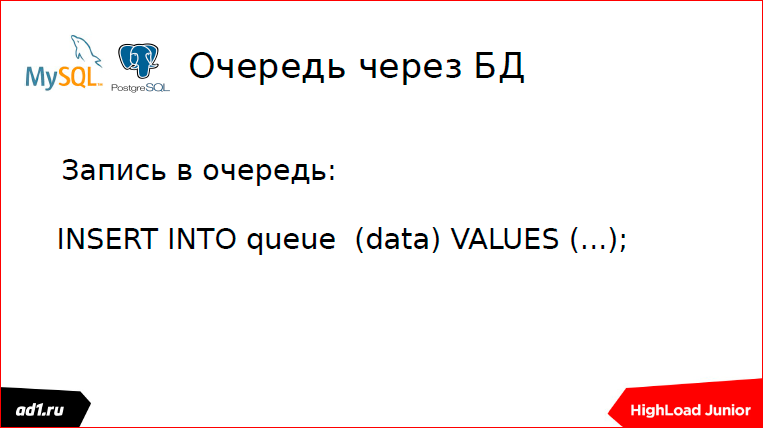

Write the data:

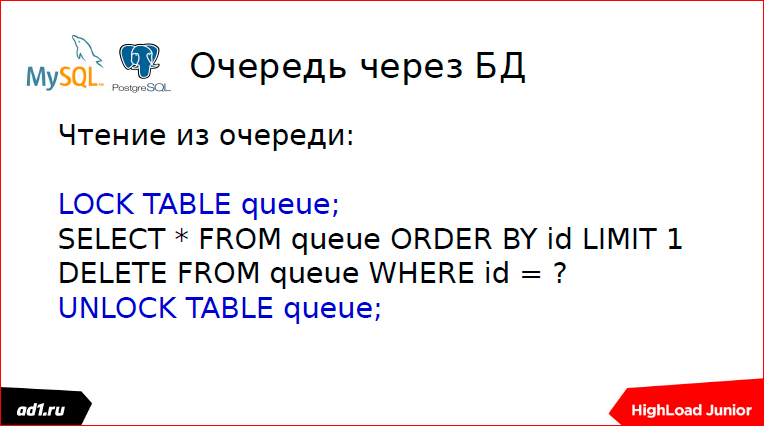

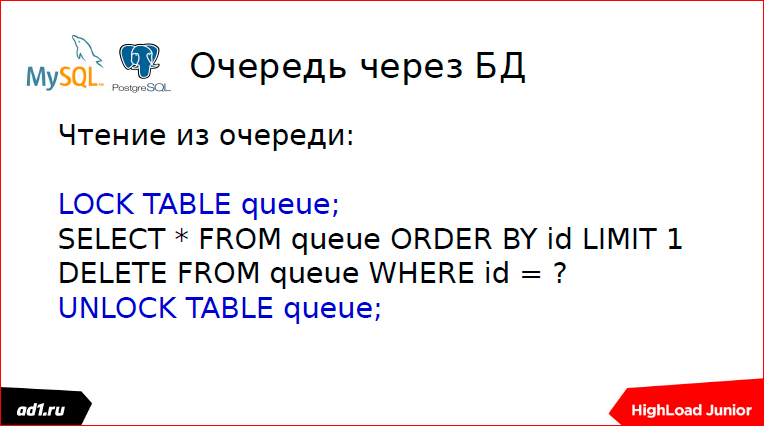

We read data:

We remove from the queue, but for complete happiness you need lock'i.

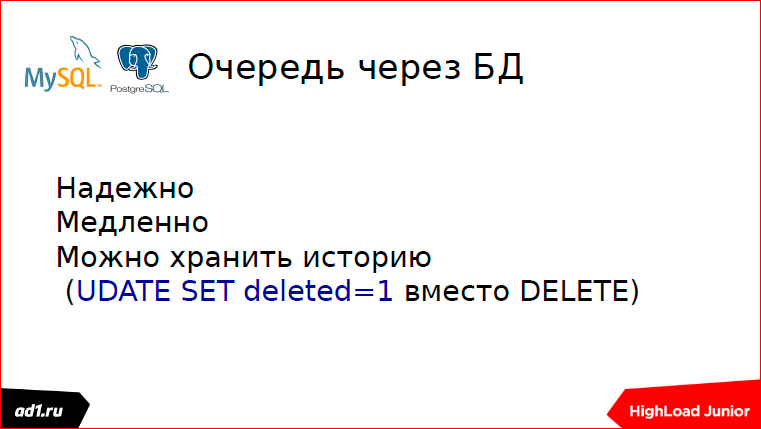

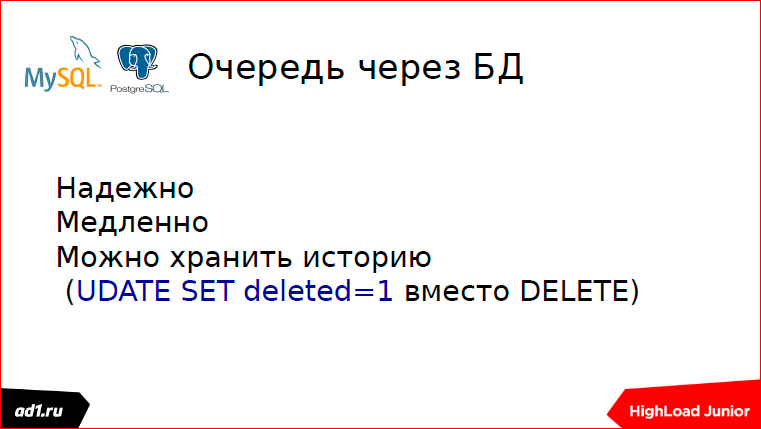

Is it good or bad?

It is slow. My given pattern for this does not exist, but in some cases many people make a queue through the database.

First, through the database you can store history. Then the deleted field is added, it can be both flag and timestamp to write. My colleagues through the line do the communication, which goes through the affiliate network, they record banners - how many clicks, then they have analytics on Vertic find clusters, which groups of users respond to which banners more.

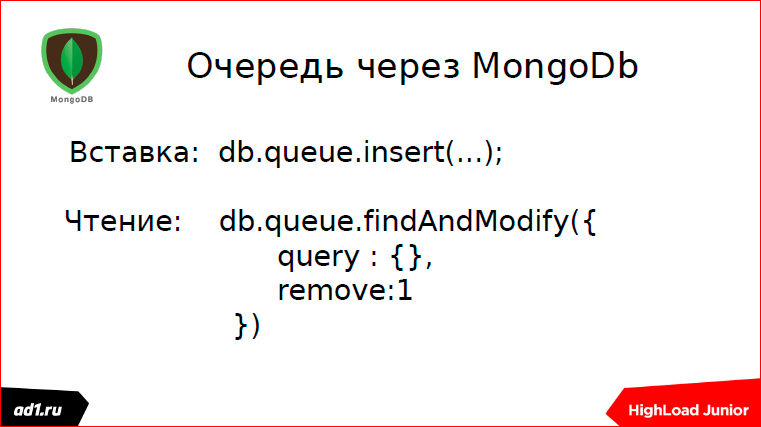

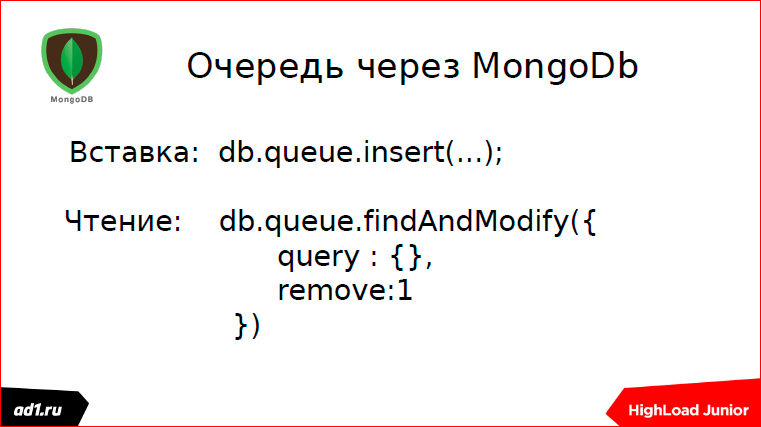

We use MongoDb for this.

In principle, everything is the same, only some collection is used. We write to this collection, we read from this collection. With deletion - read the item, it is automatically deleted.

It is also slow, but still faster than DB. For our needs, we use it in statistics, this is normal.

Further, I worked in such a project, it was a social toy - “one-armed bandit”:

Everyone knows, you press a button, our drums are spinning, coincidences fall out. The toy was implemented in PHP, but I was asked to refactor it because our database could not cope.

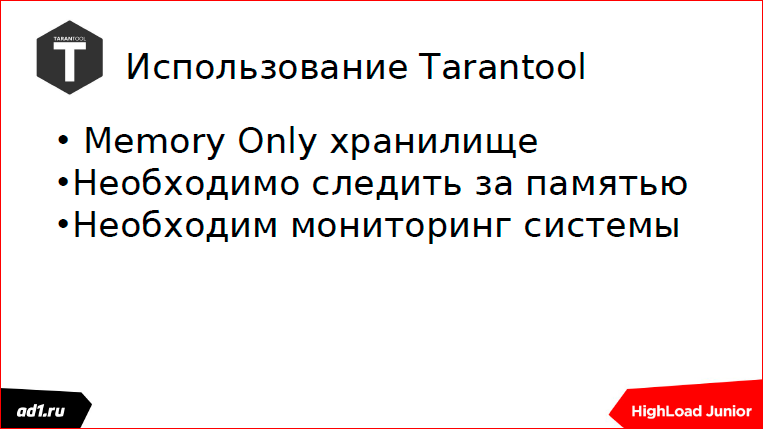

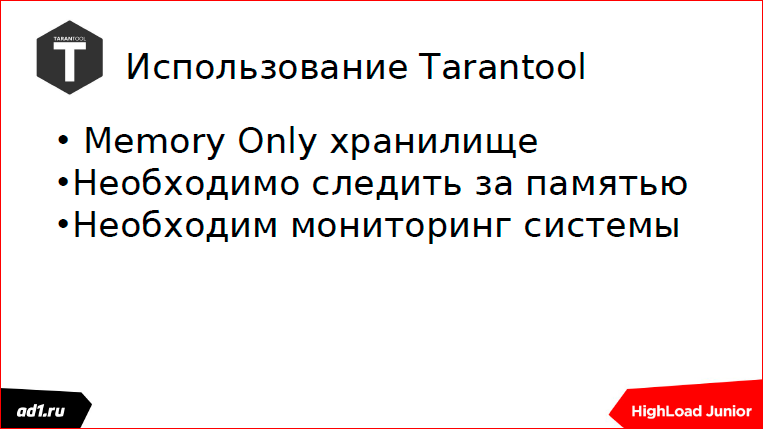

I used Tarantool for this. Tarantool in a nutshell is key value storage, it is now closer to document-oriented databases. The operational cache was implemented on my Tarantool, it only slightly helped. We decided to organize all this through a queue. Everything worked fine, but once we have it all fell. The backend server fell. And in Tarantool, queues began to accumulate, user data accumulate, and memory overflowed because this is Memory Only storage.

Memory overflowed, everything fell, user data lost in half a day. Users are a little unhappy, somewhere they played something, lost, won. Who has lost, to that well, who has won - to that is worse. What is the conclusion? It is necessary to do monitoring.

What is there to monitor? The length of the queue. If we see that it exceeds the average length every 5 or 10, 20 times, then we have to send an SMS - we have such a service done on Telegram. Telegram is free, SMS still costs money.

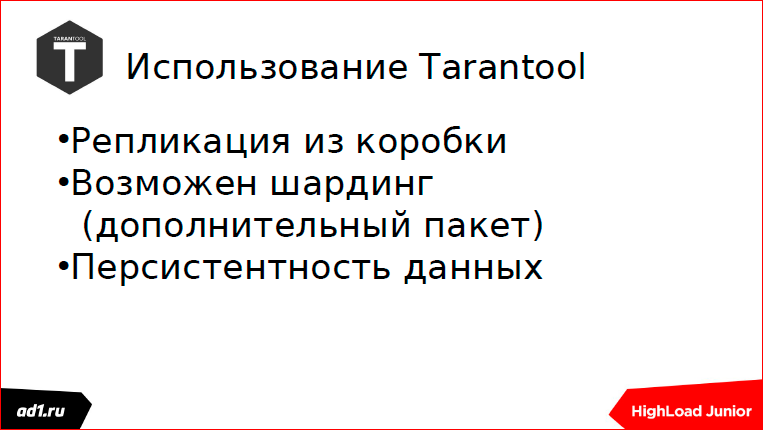

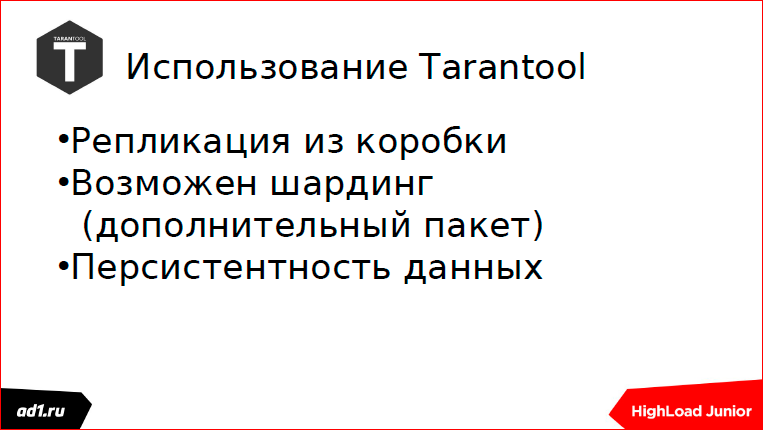

What else does Tarantool give us? Tarantool is a good solution, there is a sharding out of the box, replication out of the box.

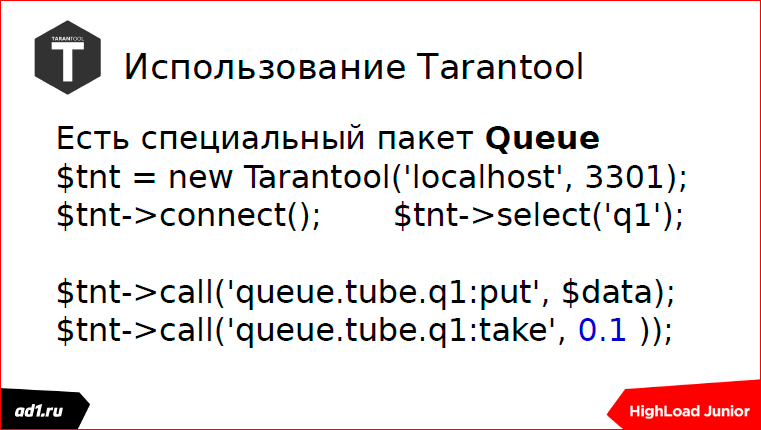

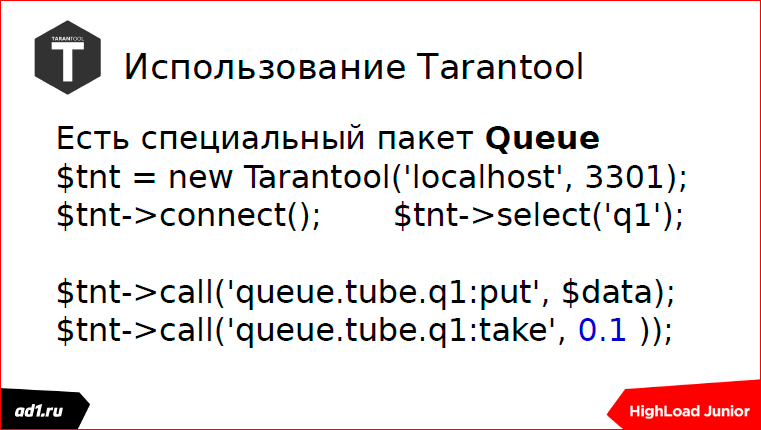

Even in Tarantool there is a wonderful package with Queue.

What I implemented was 4-5 years ago, then there was no such package yet. Now there is a very good API for Tarantool, if anyone is using Python, they have an API, generally honed under the queue. I myself have been in PHP since 2002, 15 years already. Developed a module for Tarantool in PHP, so PHP is a little closer to me.

There are two operations: writing to a queue and reading from a queue. I want to draw attention to this tsiferka (0.1 blue on the slide) - this is our timeout. And, in general, there are two approaches to the approach to writing backend scripts that parse the queue: synchronous and asynchronous.

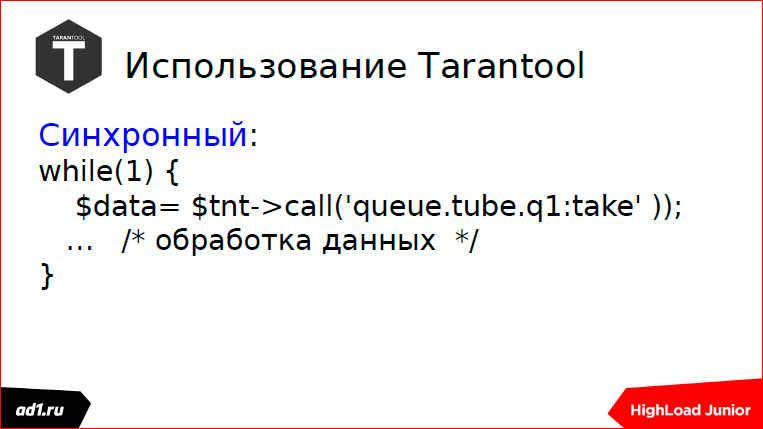

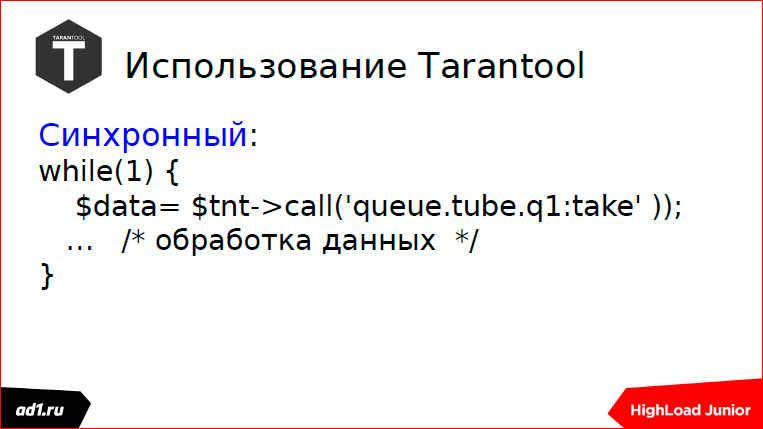

What is a synchronous approach? This is when we read from the queue and, if there is data, then we process it, if there is no data, then we get up in the lock and wait for the data to come. The data came, we went read further.

Asynchronous approach, as is clear, when there is no data, we went further - either we read from another queue, or we do some other operations. If necessary, wait. And again we go to the beginning of the cycle. Everyone understands everything is very simple.

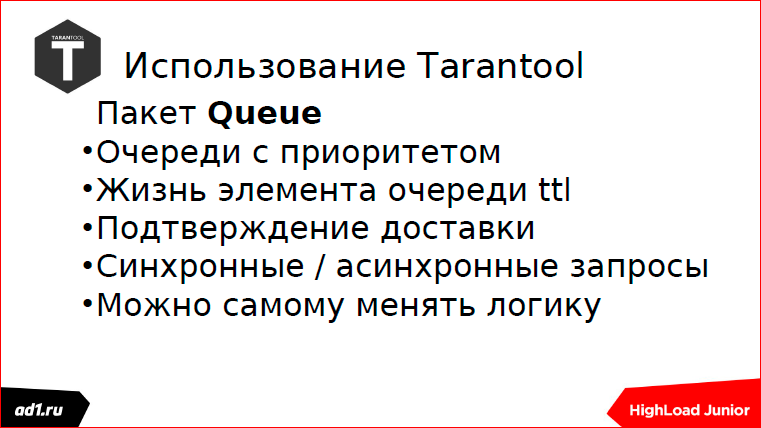

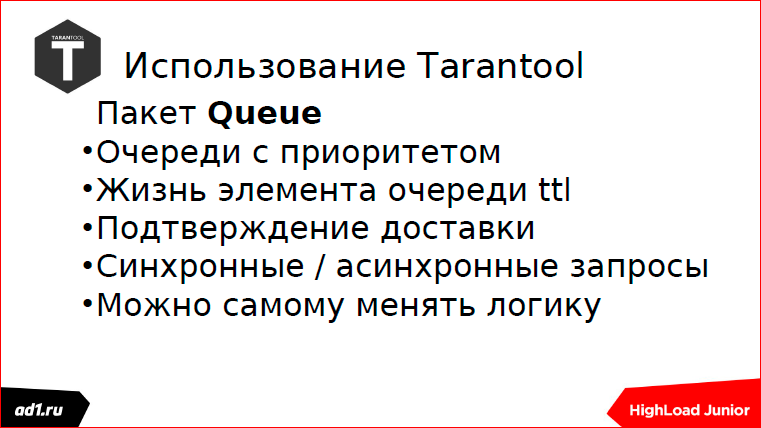

What kind of buns do we give the package Queue? There are queues with priorities, this I have not seen anywhere else among other servers of the queues. There you can still set the life of an element of the queue - sometimes it is very useful. Delivery confirmation - and that's it. I spoke about synchrony and asynchrony.

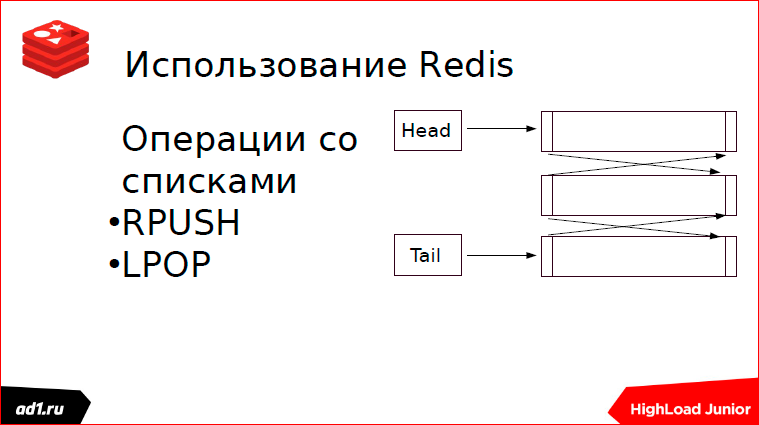

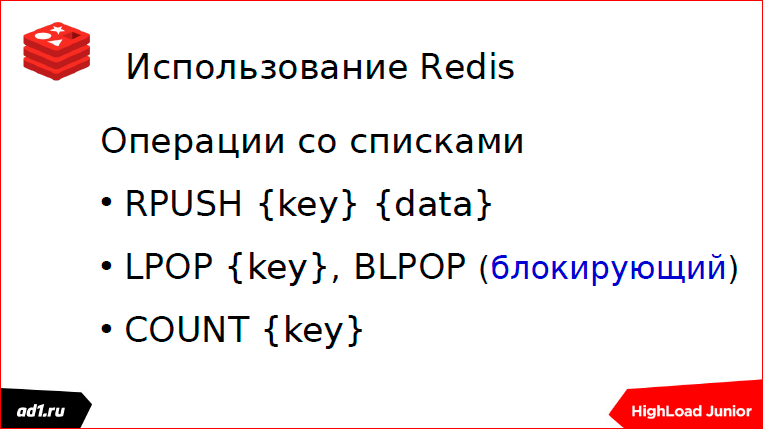

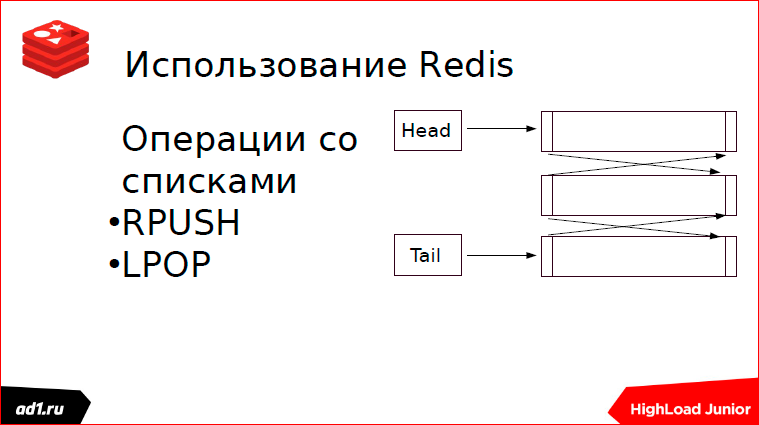

Redis. This is our zoo, where there are many different data structures. The queue is implemented on the lists. What are good lists? They are good because the access time to the first element of the list or to the last one occurs in constant time. How is the queue implemented? From the beginning of the list we write, from the end of the list we read. You can do the opposite, it does not matter.

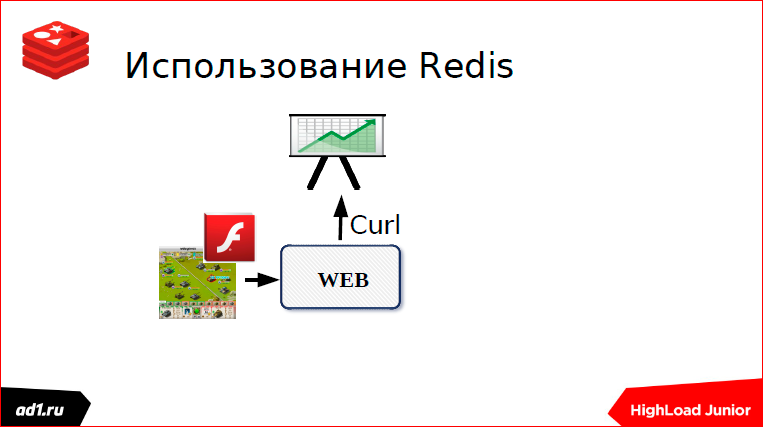

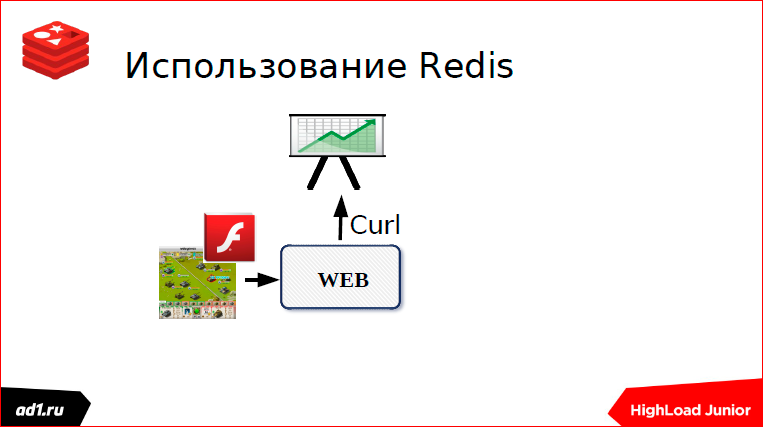

I worked in a toy. This toy was written on VKontakte. The classic implementation was, the toy worked fast, the flash drive communicated with the web server.

Everything was fine, but once we were told from above: “Let's use statistics, our partners want to know how many units we bought, which units we buy most, how many we have left and from what level of users, etc.”. And they suggested that we not reinvent the wheel and use external statistics scripts. And everything was wonderful.

Only my script worked 50 ms, and when they turned to the external script, there was some kind of America, it was at least 250 ms, and then more than 2 seconds ping went there. Accordingly, the whole toy is frozen.

We applied the following scheme:

And everything was good, everything worked quickly. But once our admin went on vacation. The admin went on vacation, everything was fine the first week, and a week later we learned that Redis was flowing. Redis flows, there is no admin, we come in, we look at the console in the morning, we look at how much memory is left, how much is left before the swap'a, sighing: "Oh, how good, you have carried by today." On Friday, many users came to us, especially after lunch, there was not enough memory.

The conclusions are the same as with Tarantool. Another project, the same mistakes. Memory needs to be monitored. The queue length needs to be monitored. Everybody says a lot about monitoring, I will not repeat.

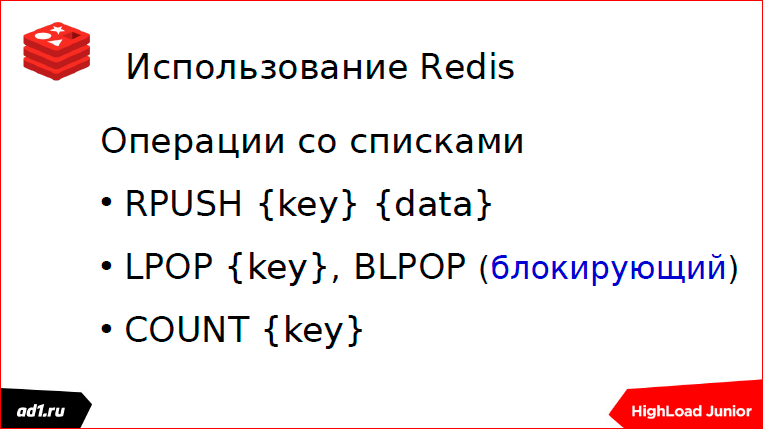

In Redis, you can also perform both blocking and non-blocking read operations; Count operation is needed just for monitoring.

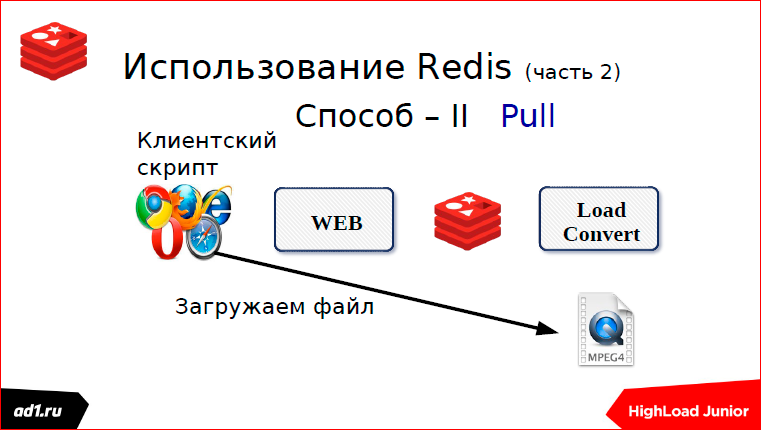

Somehow I also worked remotely in the project of downloading videos from popular video hosting sites:

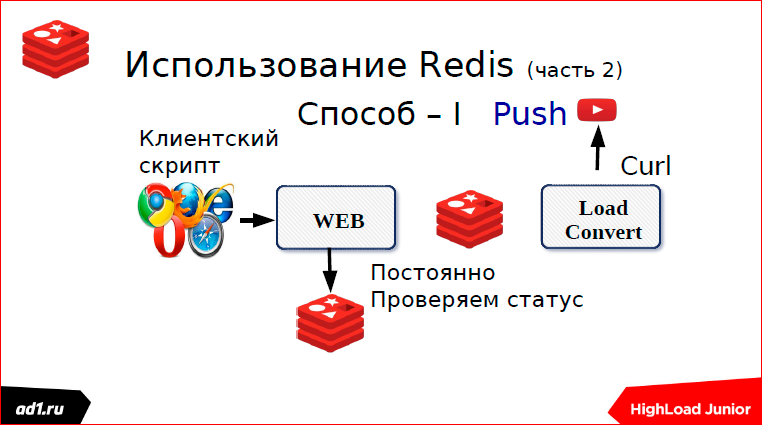

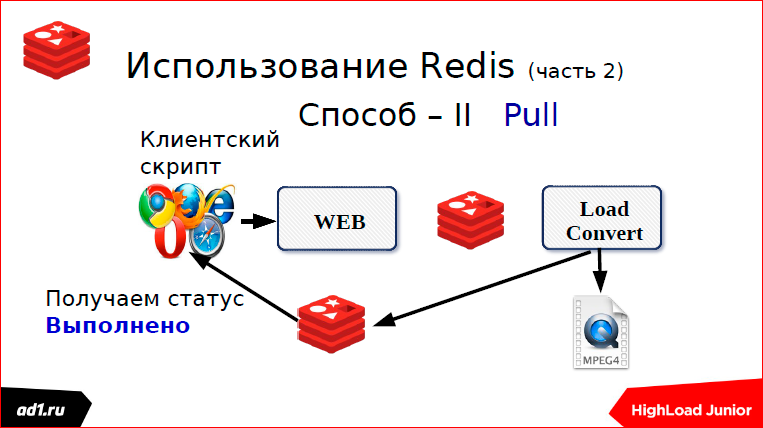

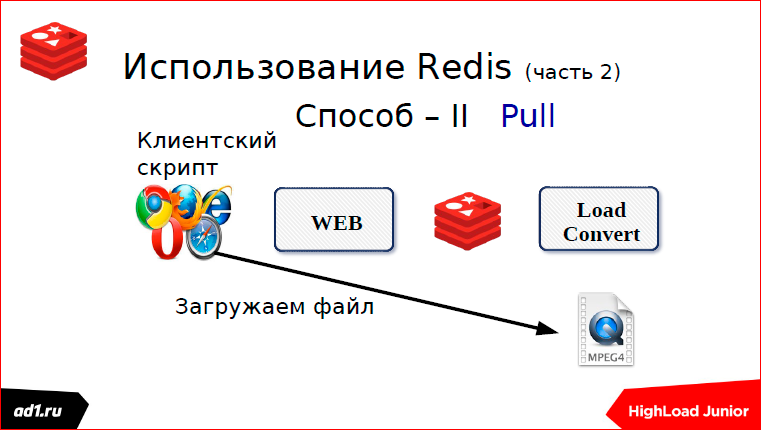

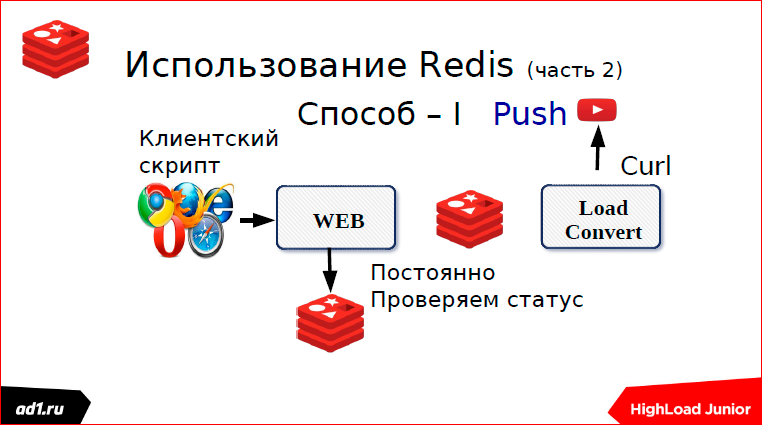

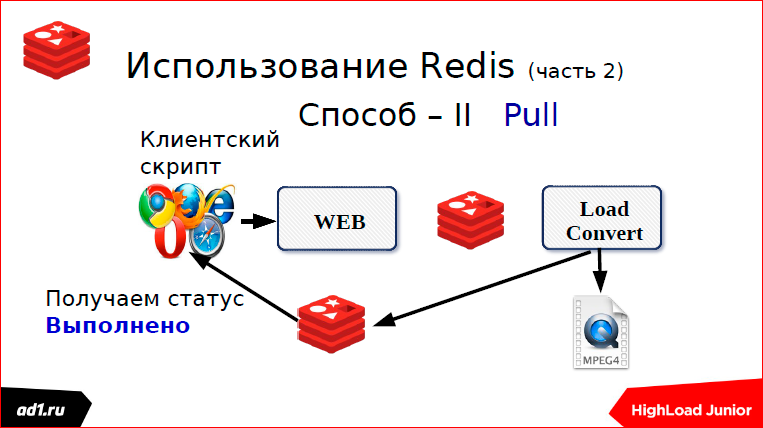

What is the problem with this project? The fact is that if we upload a video, then we convert it, if the video is slightly longer, the web client just falls off. Applied this scheme:

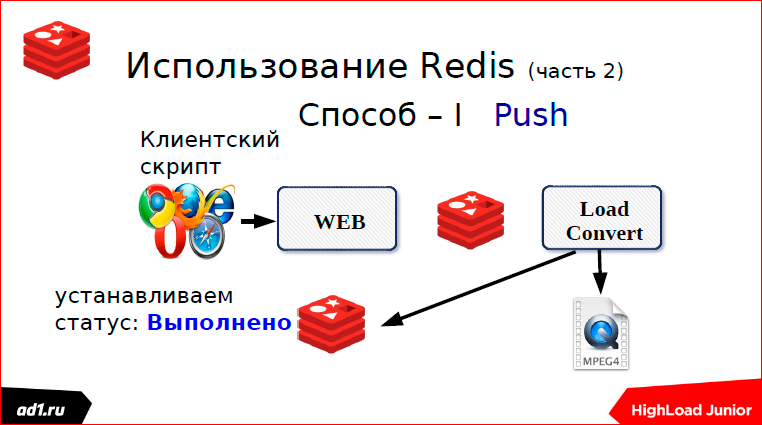

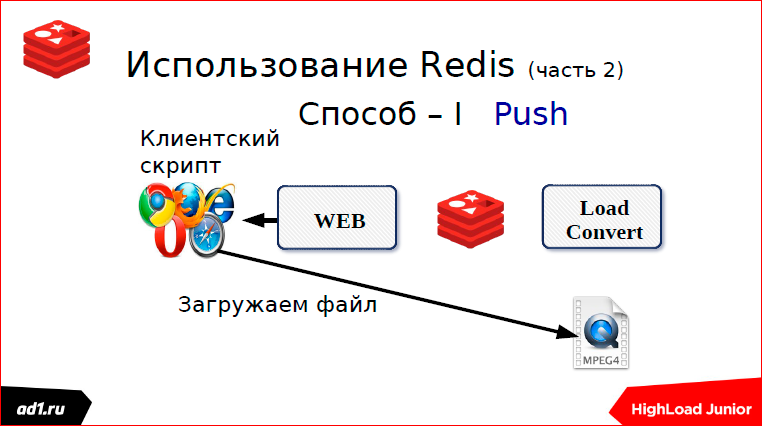

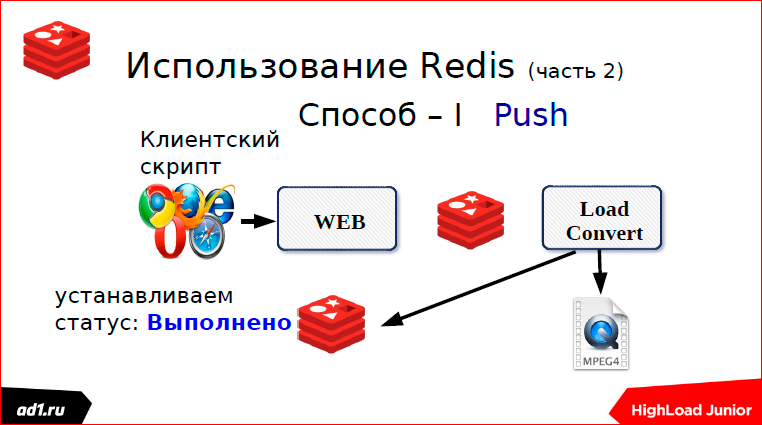

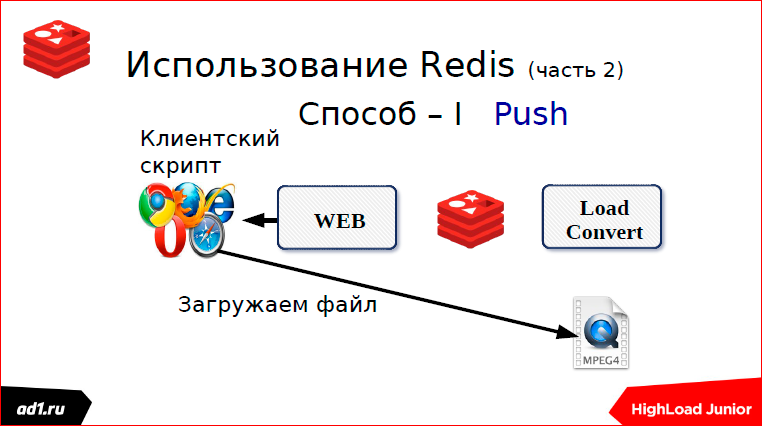

Everything is good with us. Upload the file. But the queue works for us only in one direction, and we have to inform our web script - the file has already been downloaded.

How it's done? This is done in two ways.

First, we check the status through some specific timeout. What could be the status? This is the keyvalue repository - it is better to take the same Redis. Key can serve any MD5 hash from our URL. And after we convert, we write the status in the keyvalue. The status can be: "completed", "converted", "not found" or something else. After a second, after some timeut, the script will request the status, see what is done or not done, show everything to the client. All clear. This is the first way we used pooling.

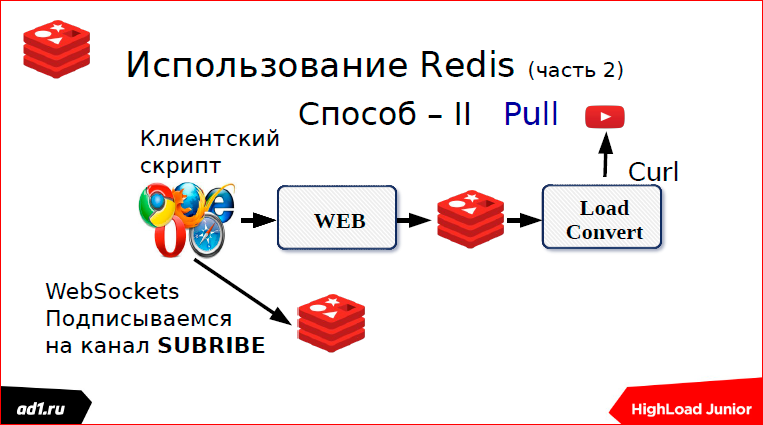

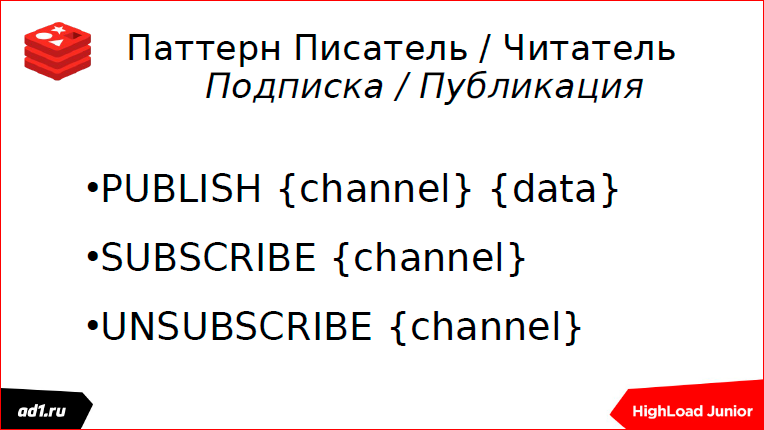

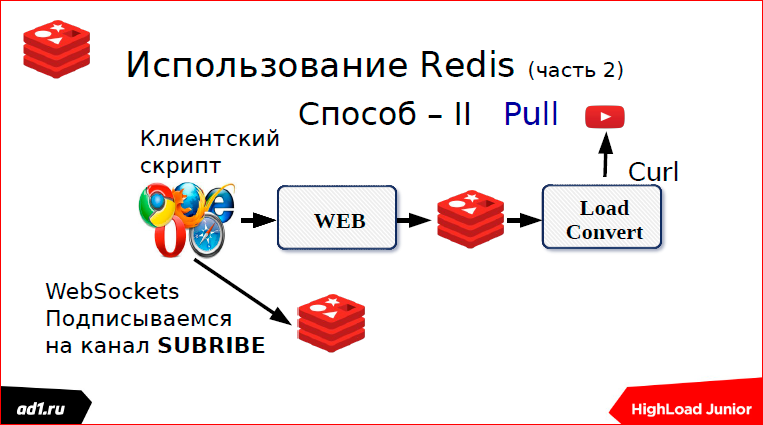

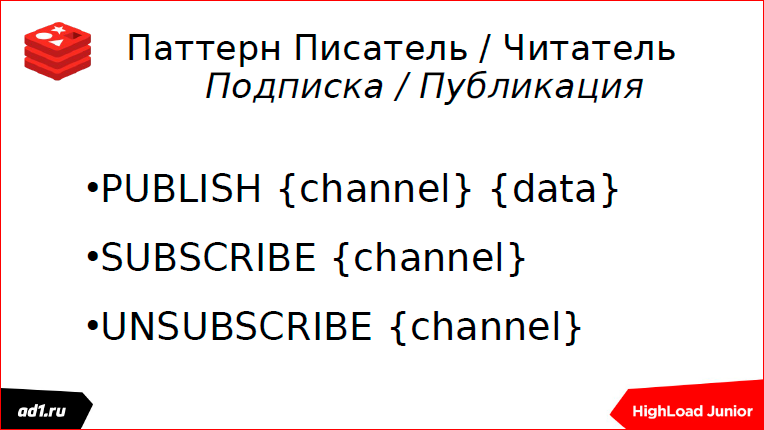

The second is web sockets.

We load the file - this is the second way. These are subscriptions. Here, just, used web sockets.

How it's done? As soon as we started downloading, we immediately subscribe to the channel in Redis. If there it was possible, say, to use memcahed or something else, if we did not use Redis, then it is tied to Redis. Subscribe to a channel, the name of the channel. Roughly speaking, the same MD5 hash from the URL.

As soon as we uploaded the file, we take and push into the channel, that we have the status "fulfilled" or the status "not found". And immediately instantly with us, Push gives status to a web script. After that, download the file if it is found.

Not quite directly, some such scheme.

How it's done? There is a certain source of data - the temperature of the volcano, the number of stars visible in telescopes, directed by NASA, the number of deals for specific stocks ... We accept this data, and our background script, which received this data, pulls them into a certain channel. Our web script is via a web socket, JS nodes are usually used, subscribes to a certain channel, as soon as data is received there, it sends this data to a client script via a web socket, and they are displayed there.

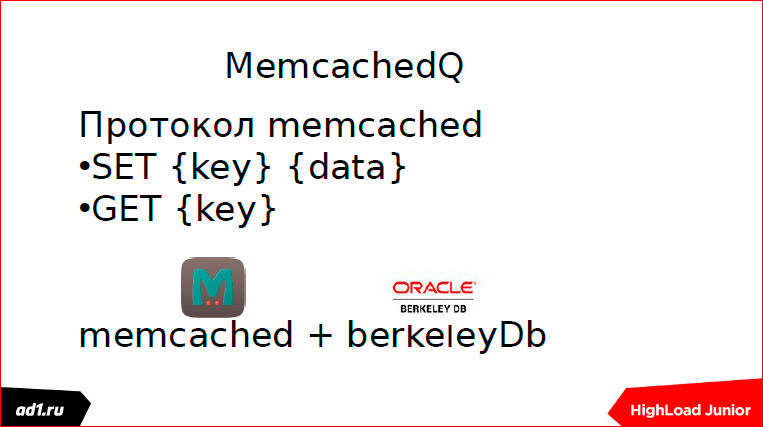

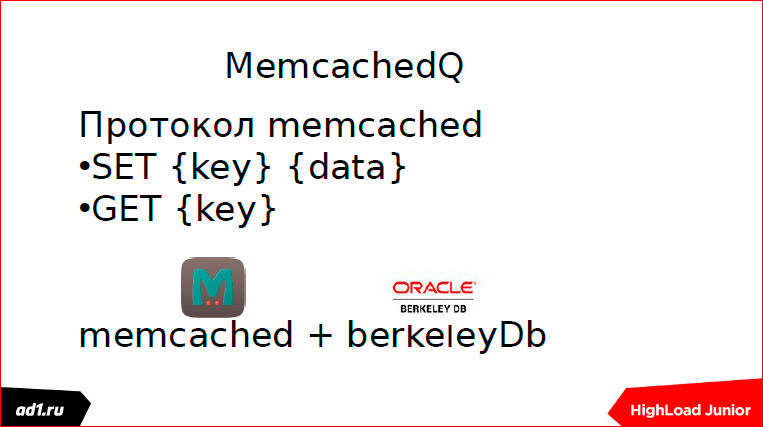

There is such a solution - MamecachedQ. This is a rather old solution, I would say, one of the first. It was spawned by using Mamecached and BerkeleyDb, this is an embedded, one of the earliest, keyvalue repository.

What is interesting about this decision? By using the Mamecached protocol.

What is the big minus - we are not able to understand the queue length here. What I said is monitoring is needed, but there is no monitoring here.

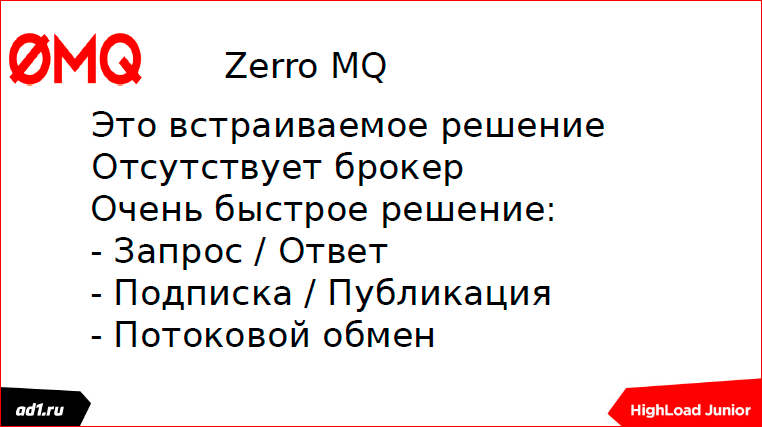

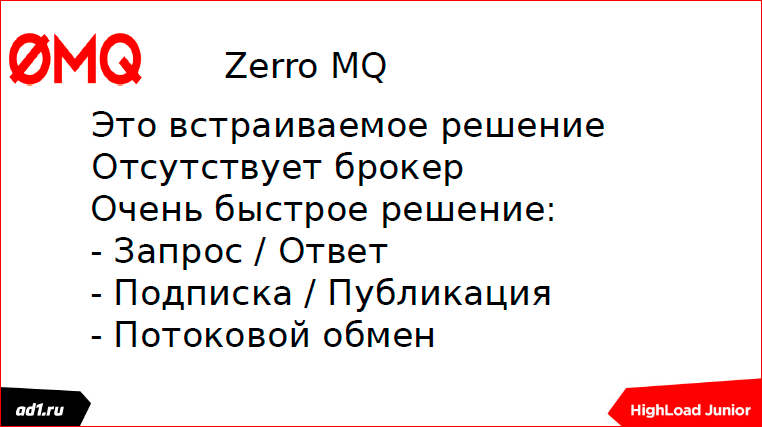

Speaking of queues, one can not say about the Zerro MQ.

Zerro MQ is a good and fast solution, but it is not a queuing broker, it must be understood. This is just an API, i.e. we connect one point to another point. Or one point with many points. But there are no queues, if one of the points disappears, then some data will be lost. Of course, I can write the same broker on the same Zerro MQ and realize it ...

Apache Kafka. Somehow I tried to use this solution. This is a decision from the hadoop stack. It is, in principle, a good, high-performance solution, but it is needed where there is a large flow of data and it needs to be processed. And so, I would use lighter solutions.

It needs to be configured for a very long time, synchronized via Zookepek, etc.

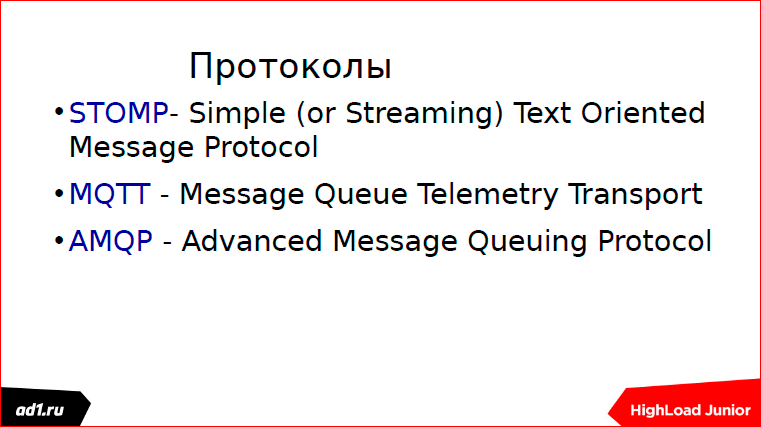

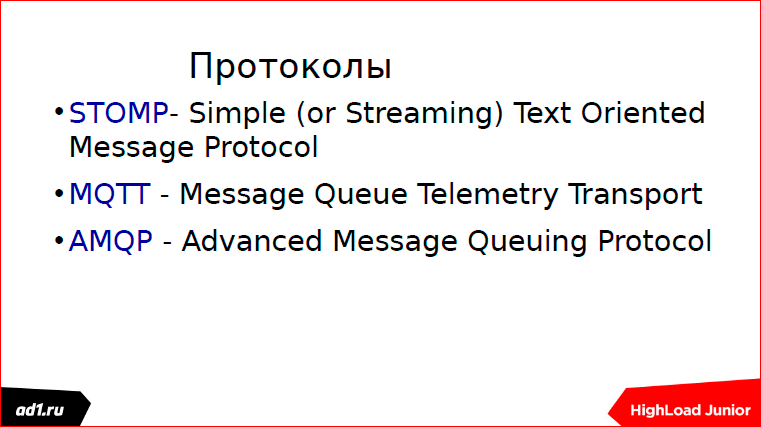

Protocols. What are protocols?

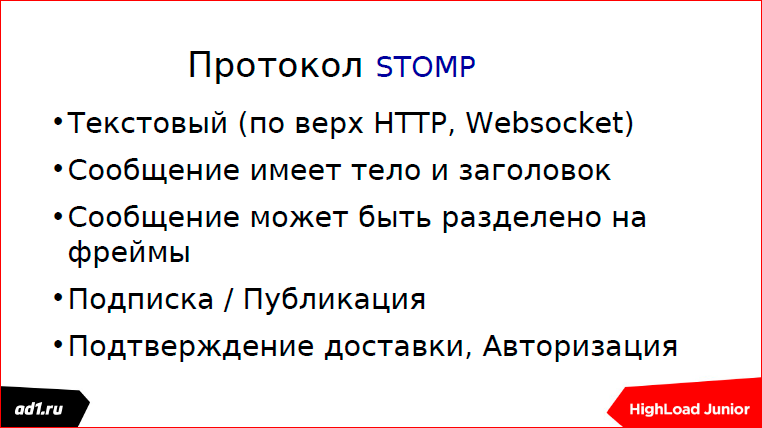

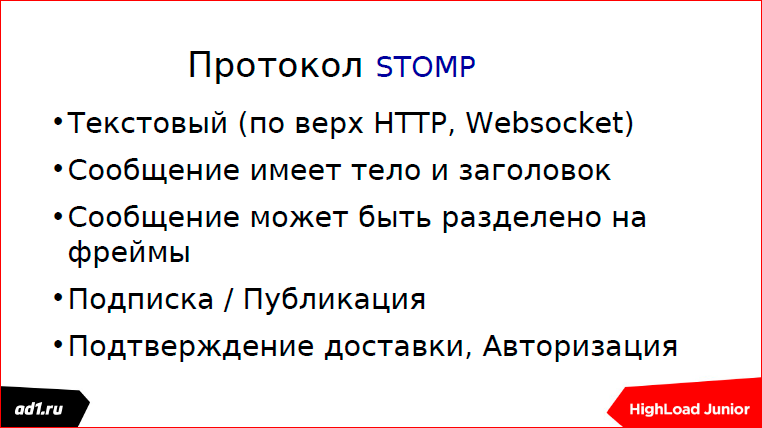

I showed you a bunch of all sorts of decisions. The IT community thought and said: “What are we all reinventing bicycles, let’s lead us to the whole thing.” And invented protocols. One of the earliest protocols is STOMP.

His description covers everything that can be done with queues.

The second protocol, MQTT, is the Message Queue Telemetry Transport protocol.

It is binary, as opposed to STOMP, covers, in principle, all the same functionality as STOMP, but more quickly due to the fact that binary.

Here are the most prominent representatives, the queue broker, who work with protocols:

ActiveMQ uses all three protocols, even four (there is one more). RabbitMQ uses three protocols; Qpid uses Q and P.

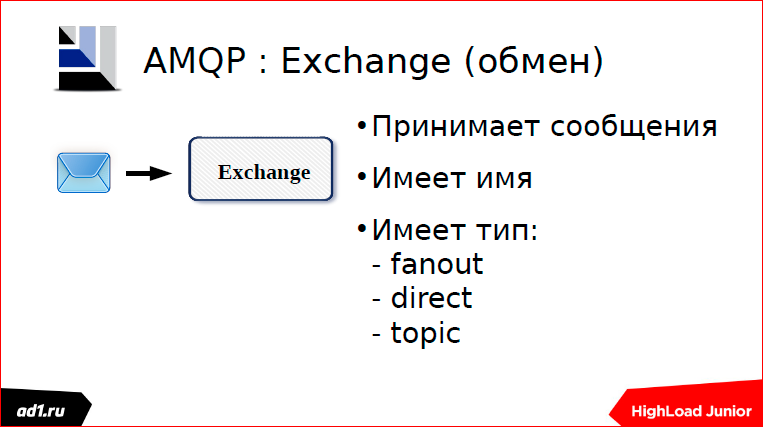

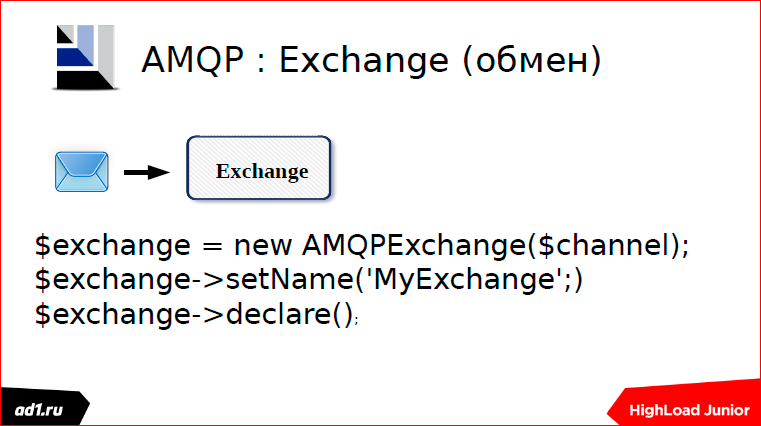

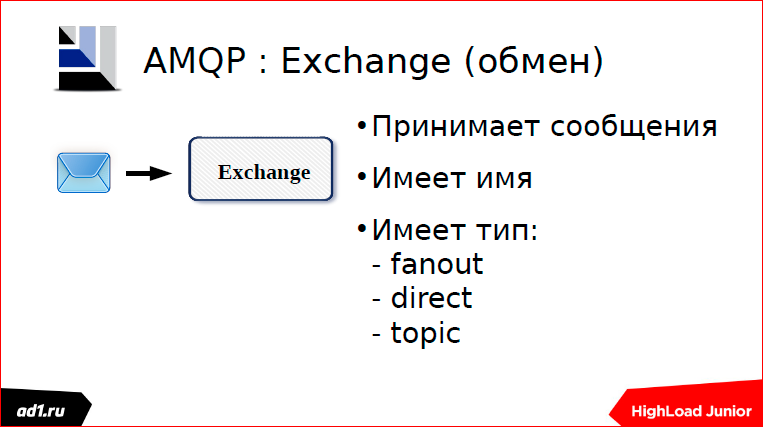

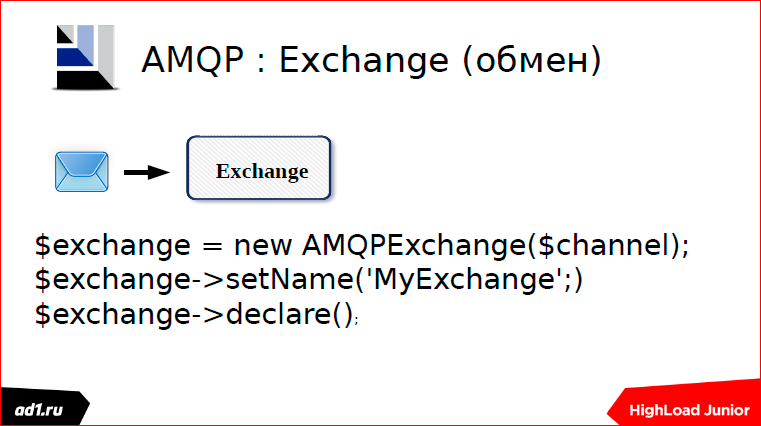

Now briefly about AMQP - Advanced Message Queuing Protocol.

If you talk about it for a long time, then you can talk about its features for an hour and a half, no less. I am brief. We will present a broker as an ideal postal service. Exchange - this will be the mailbox of the sender where the message arrives.

This Exchange has type, properties.

Here it is written in PHP how to declare it. By the way, I also developed this driver.

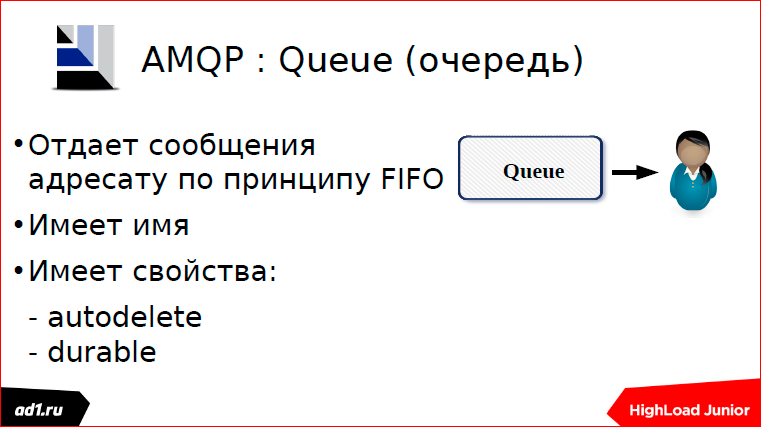

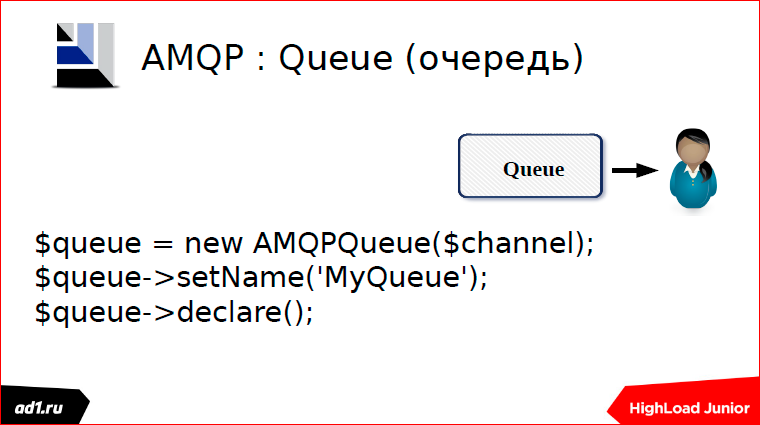

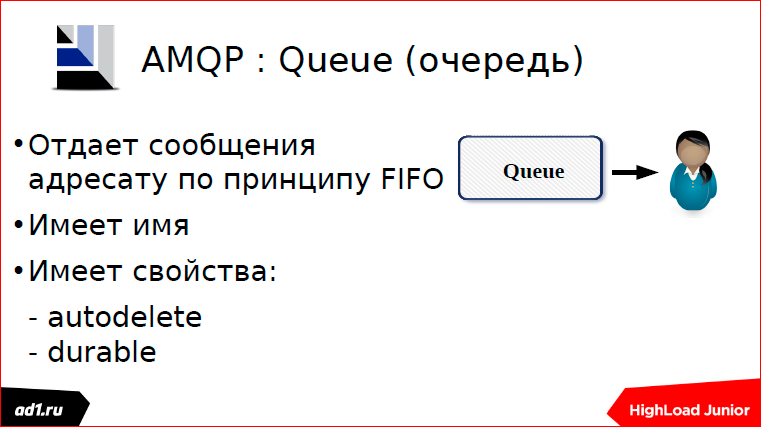

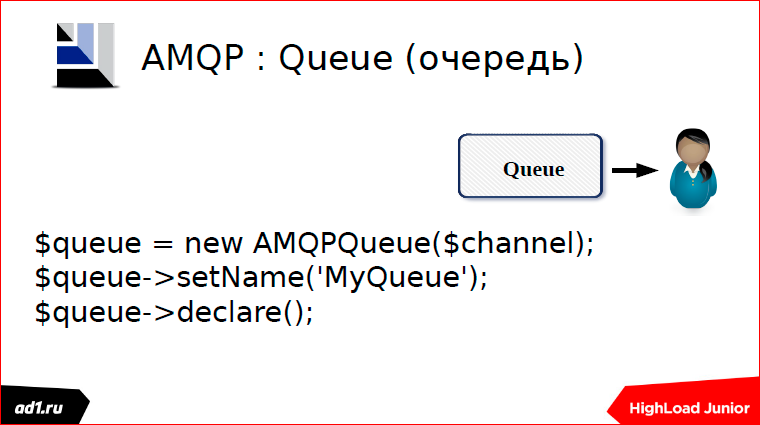

Once there is a sender's box, we must have a recipient's box. The recipient's box has such a feature that we can take only one letter for one appeal. The recipient's box also has a name, a property. Something like this should be declared:

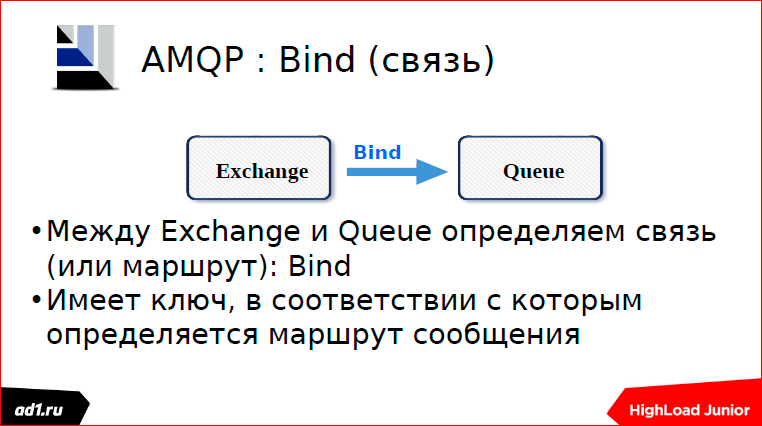

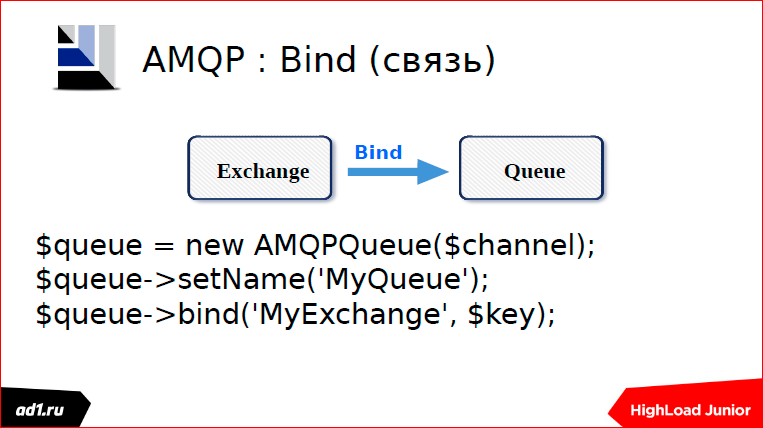

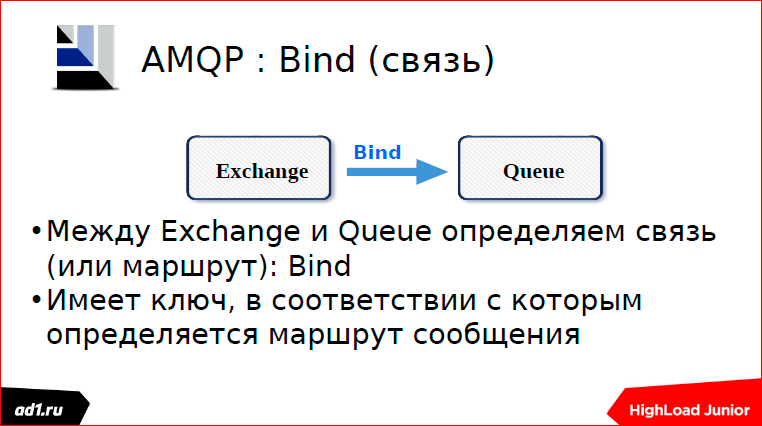

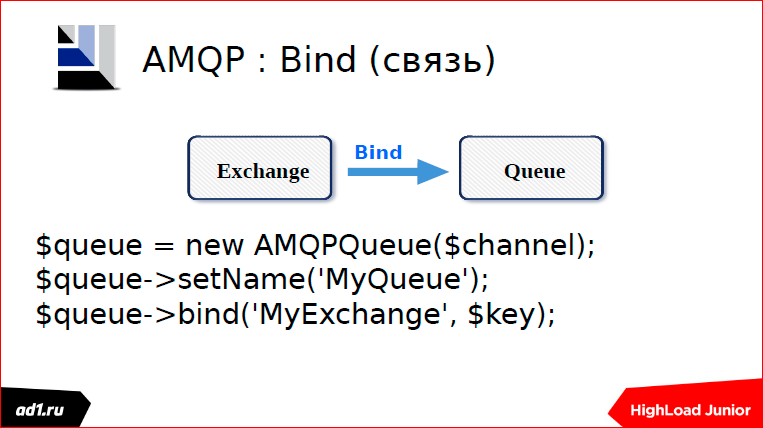

Between the sender's box and the recipient's box, you need to build a route along which the postmen will run and carry our letters.

This route is determined by the routing key.

When we declare a connection, we will definitely specify the routing key. This is one of the ways to advertise.

Approximately this is how messages are sent:

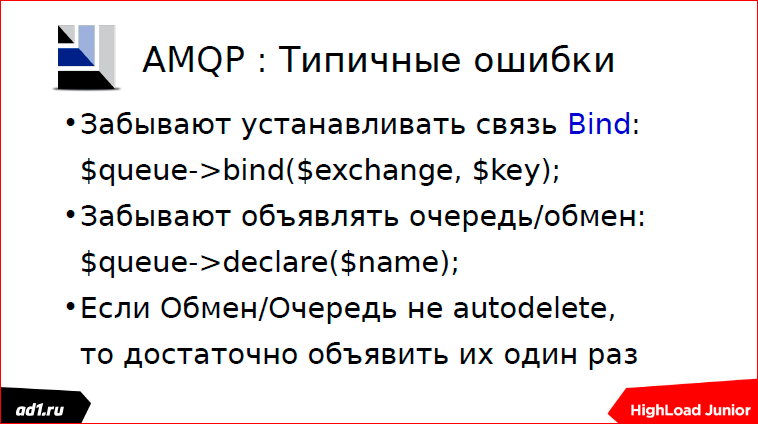

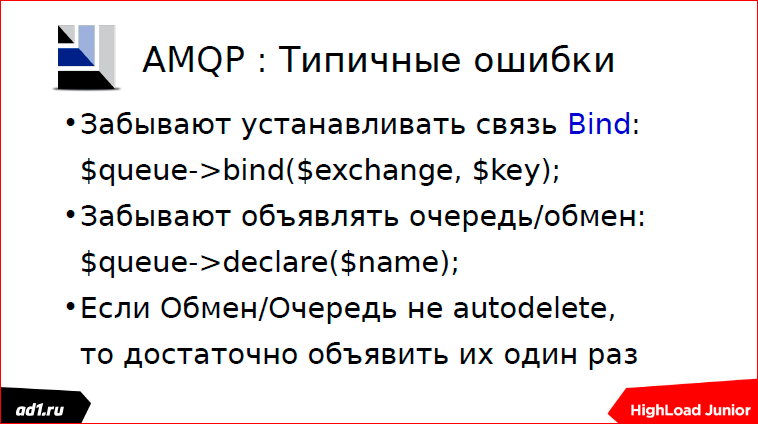

What typical mistakes do we have?

Typical mistakes are that people often forget to define a connection. Now the third Rabbit is more or less decent, it has a web interface, you can see everything through the web interface: what is announced there, which queues, what type they have, what exchange there is, what type they have.

The second typical mistake. When we declare a queue or exchange, they default to our autodelete - the session has ended, the queue was killed. Therefore, it must be redeclared each time. In principle, it is undesirable to do this, but it is better to make a permanent queue and still designate durable. Durable is such a sign that if we have a queue durable, then after a RabbitMQ restart, we will have this queue.

What can you say about RabbitMQ? It is not very pleasant to administer, but it can be expanded if we know Erlang. He is very picky from memory. RabbitMQ works through Erlang's embedded solution, but it eats a lot of memory. There are some plugins that work with other repositories, but I honestly did not work with them.

Here in this journal "System Administrator" I wrote an article "Rabbit in the sandbox" - there, in principle, the same thing that I told you here.

And in this article, I described more such interesting patterns, how to use RabbitMQ, what queues can be redirected there, for example, how to make it so that if the queue is not read, then this data is written to another queue, to the spare one, which can then read another script. If possible, read.

I told about such a project at the very beginning. If I did this project today, I would use microservices.

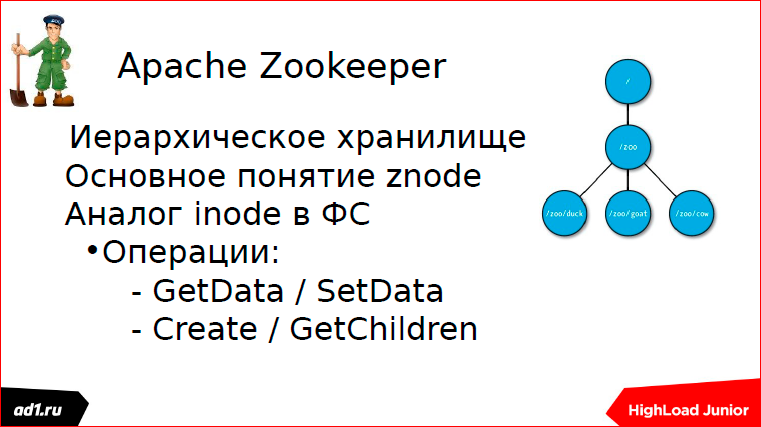

And microservices require synchronization as an interaction. We use a tool like Apache Zookeeper for synchronization.

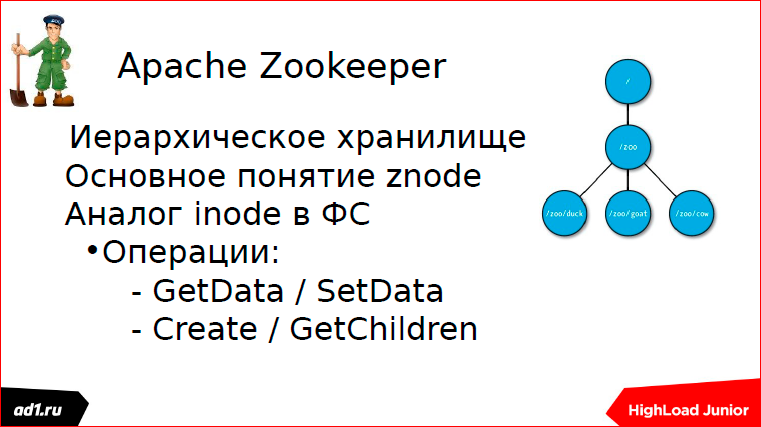

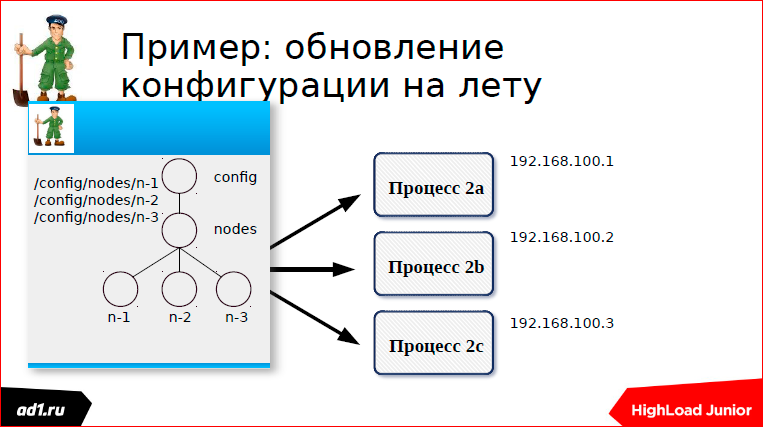

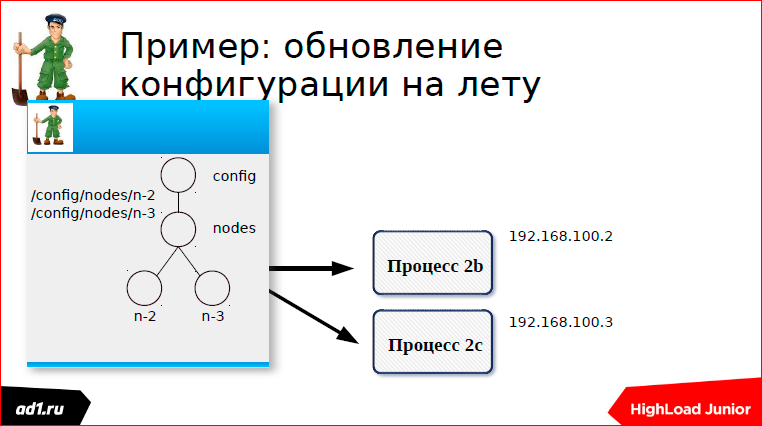

The Apache Zookeeper philosophy is based on znode. Znode, by analogy with the file system element, has a certain path. And we have the operation of creating a node, creating children of a node, receiving children, obtaining data and writing something to the data.

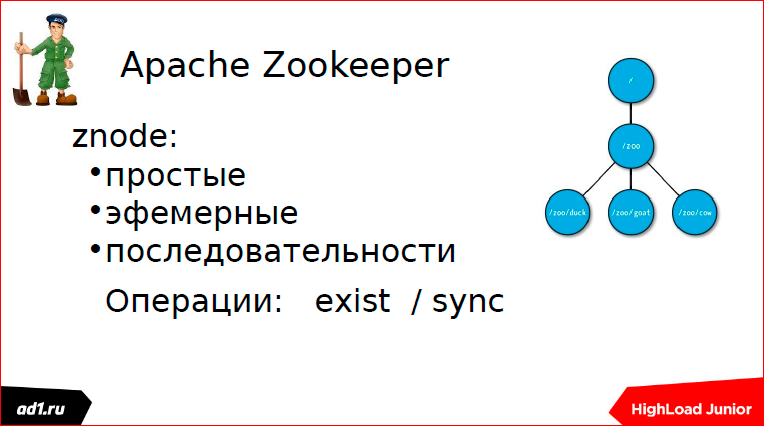

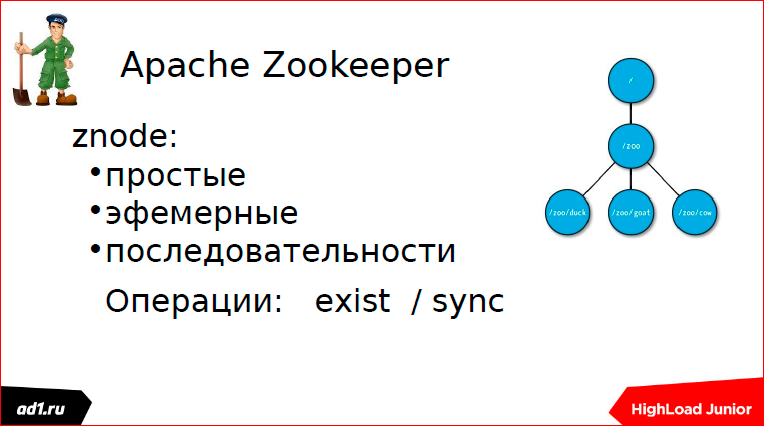

Znode come in two types: simple and ephemeral.

Ephemeral - these are znode, which, if our user session has died, then znode is destroyed, autodelete.

Sequences are auto-increment znode, i.e. these are znode, which have a certain name and auto-increment numeric prefix.

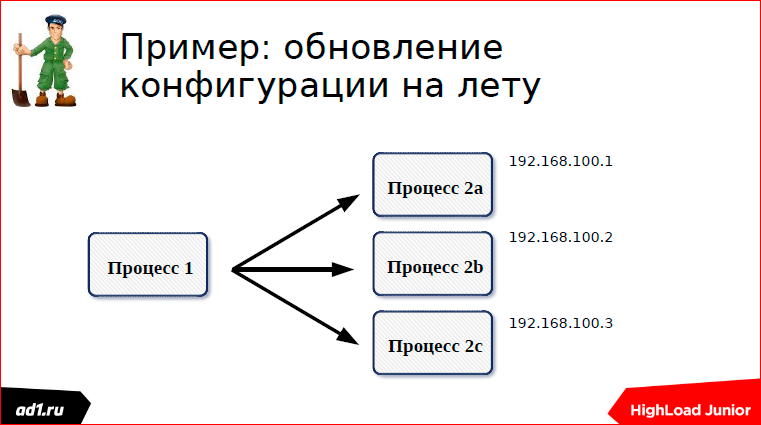

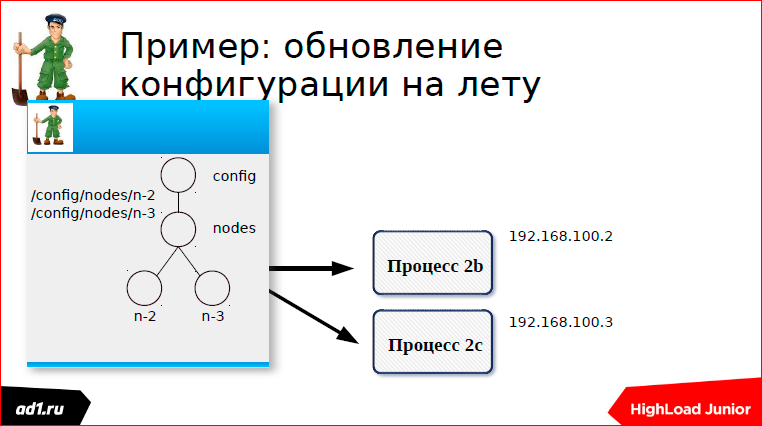

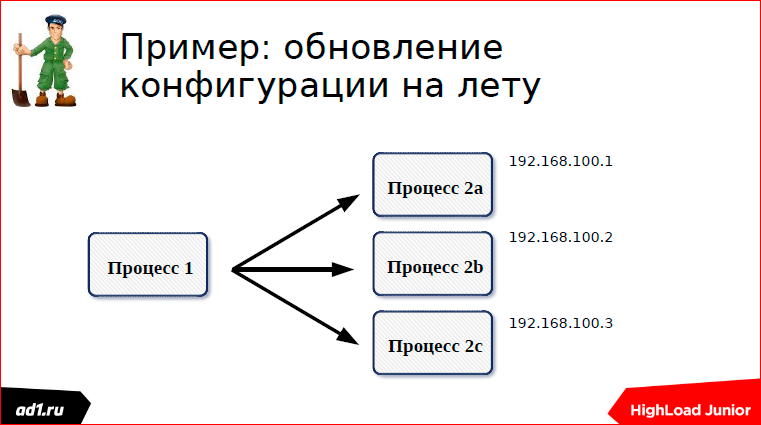

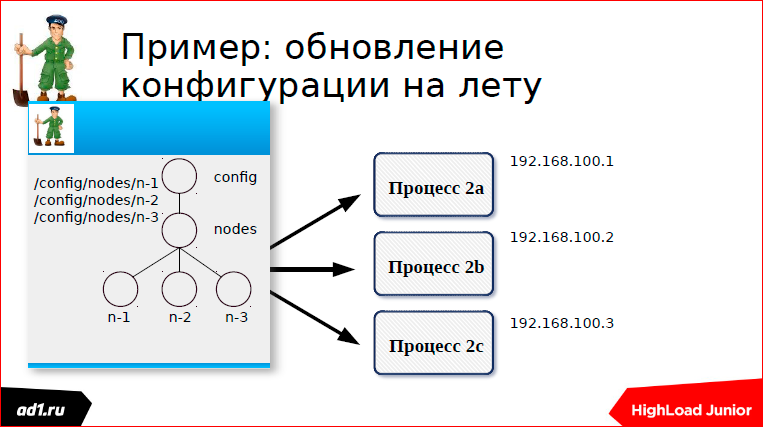

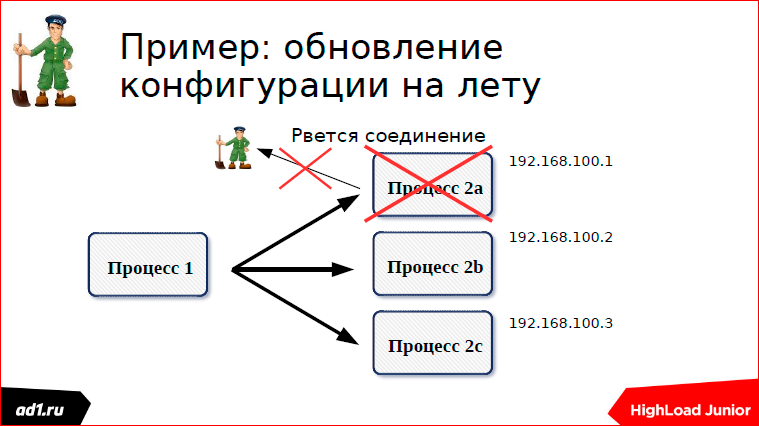

Using the configuration example on the fly, I’ll tell you how it all works. We have two groups of processes - a group of processes a and a group of processes b. Processes 1 connect to processes 2 and somehow interact. Processes 2, when started, write their configuration in Zookeeper.

Each process creates its own znode - the first process, the second, the third.

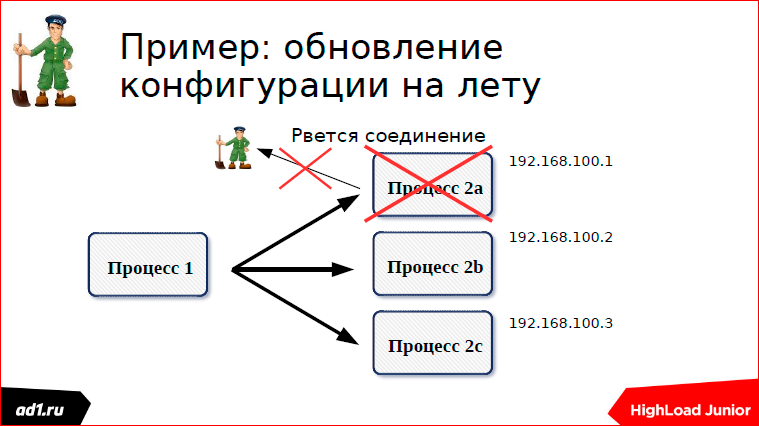

And here we are one of the processes stopped or, for example, launched. I have here on the example of stopping the processes shown:

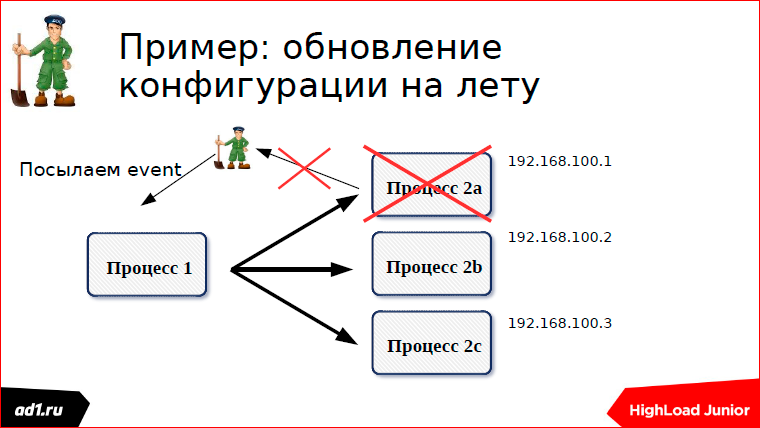

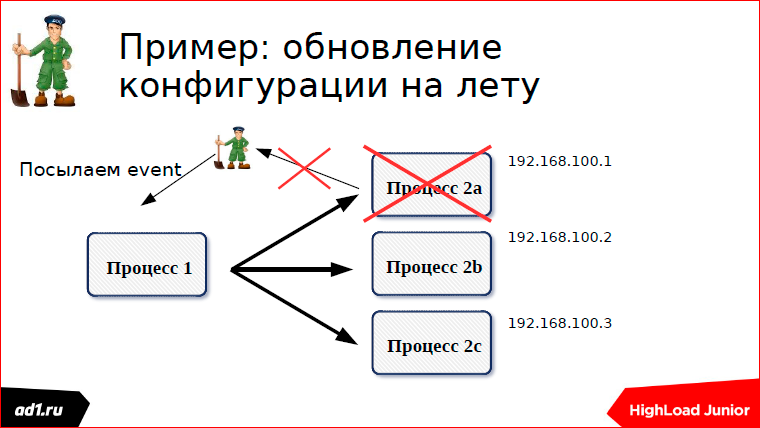

Our process is stopped, the connection is broken, the znode is gone, the event is sent, that we listened to this znode, that there was one znode in it.

Event is sent, we recalculate the configuration. Everything works very nicely.

Something like this is synchronized. There are other examples, as someone synchronized there with backups.

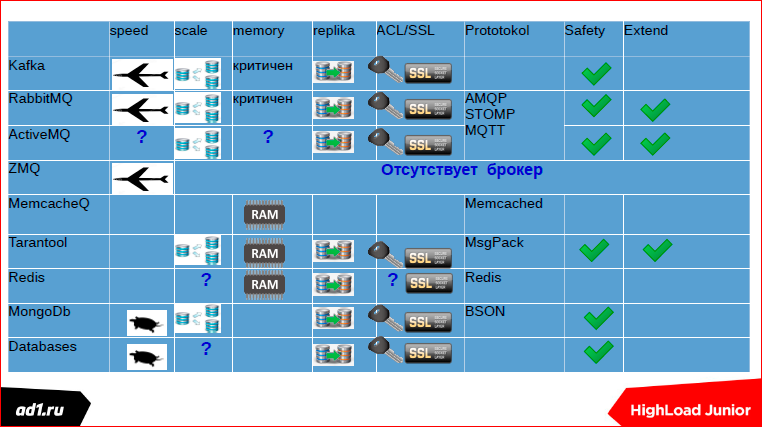

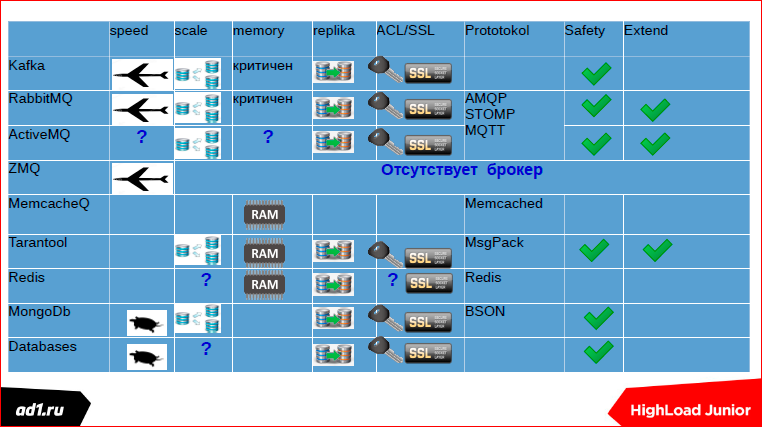

In this summary slide, I would like to demonstrate all the capabilities of the queue servers. Where we have question marks is either a controversial point, or there simply was no data. For example, the database we scale, right? It is not clear, but, in principle, it scales.But is it possible to scale the queues for them or not? Basically, no. Therefore, I have a question here. On ActiveMQ I just have no data. With Redis, I can explain - there is an ACL, but it is not quite correct. You can say it is not. Scaling Redis? Through the client it is scaled, I have not seen such some elements, boxed solutions.

Such are the conclusions:

» Akalend

» akalend@mail.ru

Alexander Calendarev ( akalend )

Dear colleagues! My report will be about the thing, without which not a single HighLoad project can do - about the queue server, and if I have time, I’ll tell you about the blocking (the decryptor’s note had time :).

')

What will the report be about? I will tell you about where and how queues are used, why all this is needed, I will tell you a little bit about protocols.

Since Our conference calls HighLoad Junior, I would like to go from the Junior project. We have a typical Junior project - this is some kind of web page that accesses the database. Maybe this is an electronic store or something else there. And so, the users went and went to us, and at some stage we got a mistake (maybe another error):

We climbed the Internet, began to explore how to scale, we decided to get backends.

More users and more users went, and we had another mistake:

Then we got into the logs, looked at how you can scale the SQL-server. Found and made replication.

But here we got errors in MySQL:

Well, errors can be in simpler configurations, I showed it here figuratively.

And at this moment we begin to think about our architecture.

We view our architecture “under the microscope” and single out two things:

The first is some critical elements of our logic that need to be done; and the second - some slow and unnecessary things that can be put off until later. And we are trying to divide this architecture:

We divided it into two parts.

We try to put one part on one server, and another part on another. I call this pattern a “cunning student”.

I myself once “suffered” with it: I told my parents that I had already done my homework and ran for a walk, and the next day I read these homework before homework and told the teachers in two minutes.

There is a more scientific name for this pattern:

And in the end we come here to this architecture, where there is a web server and a backend server:

Between themselves, they must somehow bind. When these are two servers, it is made easier. When there are several, it is a little more complicated. And we wonder how to connect them? And one of the solutions of communication between these servers is a queue.

What is the queue? The queue is a list.

There is a longer and tedious, but this is just a list where we write the elements, and then we read them and delete them, we execute them. The list goes on, the elements are reduced, the queue is so regulated.

Moving on to the second part - where and how is it used? Once I worked in such a project:

This analogue of Yandex.Market. In this project, there are a lot of different services. And these services somehow had to be synchronized. They synchronized through the database.

How is the queue on the database?

There is a certain counter - in MySQL it is an auto-increment, in Postgress this is realized through Sikkens; there is some data.

Write the data:

We read data:

We remove from the queue, but for complete happiness you need lock'i.

Is it good or bad?

It is slow. My given pattern for this does not exist, but in some cases many people make a queue through the database.

First, through the database you can store history. Then the deleted field is added, it can be both flag and timestamp to write. My colleagues through the line do the communication, which goes through the affiliate network, they record banners - how many clicks, then they have analytics on Vertic find clusters, which groups of users respond to which banners more.

We use MongoDb for this.

In principle, everything is the same, only some collection is used. We write to this collection, we read from this collection. With deletion - read the item, it is automatically deleted.

It is also slow, but still faster than DB. For our needs, we use it in statistics, this is normal.

Further, I worked in such a project, it was a social toy - “one-armed bandit”:

Everyone knows, you press a button, our drums are spinning, coincidences fall out. The toy was implemented in PHP, but I was asked to refactor it because our database could not cope.

I used Tarantool for this. Tarantool in a nutshell is key value storage, it is now closer to document-oriented databases. The operational cache was implemented on my Tarantool, it only slightly helped. We decided to organize all this through a queue. Everything worked fine, but once we have it all fell. The backend server fell. And in Tarantool, queues began to accumulate, user data accumulate, and memory overflowed because this is Memory Only storage.

Memory overflowed, everything fell, user data lost in half a day. Users are a little unhappy, somewhere they played something, lost, won. Who has lost, to that well, who has won - to that is worse. What is the conclusion? It is necessary to do monitoring.

What is there to monitor? The length of the queue. If we see that it exceeds the average length every 5 or 10, 20 times, then we have to send an SMS - we have such a service done on Telegram. Telegram is free, SMS still costs money.

What else does Tarantool give us? Tarantool is a good solution, there is a sharding out of the box, replication out of the box.

Even in Tarantool there is a wonderful package with Queue.

What I implemented was 4-5 years ago, then there was no such package yet. Now there is a very good API for Tarantool, if anyone is using Python, they have an API, generally honed under the queue. I myself have been in PHP since 2002, 15 years already. Developed a module for Tarantool in PHP, so PHP is a little closer to me.

There are two operations: writing to a queue and reading from a queue. I want to draw attention to this tsiferka (0.1 blue on the slide) - this is our timeout. And, in general, there are two approaches to the approach to writing backend scripts that parse the queue: synchronous and asynchronous.

What is a synchronous approach? This is when we read from the queue and, if there is data, then we process it, if there is no data, then we get up in the lock and wait for the data to come. The data came, we went read further.

Asynchronous approach, as is clear, when there is no data, we went further - either we read from another queue, or we do some other operations. If necessary, wait. And again we go to the beginning of the cycle. Everyone understands everything is very simple.

What kind of buns do we give the package Queue? There are queues with priorities, this I have not seen anywhere else among other servers of the queues. There you can still set the life of an element of the queue - sometimes it is very useful. Delivery confirmation - and that's it. I spoke about synchrony and asynchrony.

Redis. This is our zoo, where there are many different data structures. The queue is implemented on the lists. What are good lists? They are good because the access time to the first element of the list or to the last one occurs in constant time. How is the queue implemented? From the beginning of the list we write, from the end of the list we read. You can do the opposite, it does not matter.

I worked in a toy. This toy was written on VKontakte. The classic implementation was, the toy worked fast, the flash drive communicated with the web server.

Everything was fine, but once we were told from above: “Let's use statistics, our partners want to know how many units we bought, which units we buy most, how many we have left and from what level of users, etc.”. And they suggested that we not reinvent the wheel and use external statistics scripts. And everything was wonderful.

Only my script worked 50 ms, and when they turned to the external script, there was some kind of America, it was at least 250 ms, and then more than 2 seconds ping went there. Accordingly, the whole toy is frozen.

We applied the following scheme:

And everything was good, everything worked quickly. But once our admin went on vacation. The admin went on vacation, everything was fine the first week, and a week later we learned that Redis was flowing. Redis flows, there is no admin, we come in, we look at the console in the morning, we look at how much memory is left, how much is left before the swap'a, sighing: "Oh, how good, you have carried by today." On Friday, many users came to us, especially after lunch, there was not enough memory.

The conclusions are the same as with Tarantool. Another project, the same mistakes. Memory needs to be monitored. The queue length needs to be monitored. Everybody says a lot about monitoring, I will not repeat.

In Redis, you can also perform both blocking and non-blocking read operations; Count operation is needed just for monitoring.

Somehow I also worked remotely in the project of downloading videos from popular video hosting sites:

What is the problem with this project? The fact is that if we upload a video, then we convert it, if the video is slightly longer, the web client just falls off. Applied this scheme:

Everything is good with us. Upload the file. But the queue works for us only in one direction, and we have to inform our web script - the file has already been downloaded.

How it's done? This is done in two ways.

First, we check the status through some specific timeout. What could be the status? This is the keyvalue repository - it is better to take the same Redis. Key can serve any MD5 hash from our URL. And after we convert, we write the status in the keyvalue. The status can be: "completed", "converted", "not found" or something else. After a second, after some timeut, the script will request the status, see what is done or not done, show everything to the client. All clear. This is the first way we used pooling.

The second is web sockets.

We load the file - this is the second way. These are subscriptions. Here, just, used web sockets.

How it's done? As soon as we started downloading, we immediately subscribe to the channel in Redis. If there it was possible, say, to use memcahed or something else, if we did not use Redis, then it is tied to Redis. Subscribe to a channel, the name of the channel. Roughly speaking, the same MD5 hash from the URL.

As soon as we uploaded the file, we take and push into the channel, that we have the status "fulfilled" or the status "not found". And immediately instantly with us, Push gives status to a web script. After that, download the file if it is found.

Not quite directly, some such scheme.

How it's done? There is a certain source of data - the temperature of the volcano, the number of stars visible in telescopes, directed by NASA, the number of deals for specific stocks ... We accept this data, and our background script, which received this data, pulls them into a certain channel. Our web script is via a web socket, JS nodes are usually used, subscribes to a certain channel, as soon as data is received there, it sends this data to a client script via a web socket, and they are displayed there.

There is such a solution - MamecachedQ. This is a rather old solution, I would say, one of the first. It was spawned by using Mamecached and BerkeleyDb, this is an embedded, one of the earliest, keyvalue repository.

What is interesting about this decision? By using the Mamecached protocol.

What is the big minus - we are not able to understand the queue length here. What I said is monitoring is needed, but there is no monitoring here.

Speaking of queues, one can not say about the Zerro MQ.

Zerro MQ is a good and fast solution, but it is not a queuing broker, it must be understood. This is just an API, i.e. we connect one point to another point. Or one point with many points. But there are no queues, if one of the points disappears, then some data will be lost. Of course, I can write the same broker on the same Zerro MQ and realize it ...

Apache Kafka. Somehow I tried to use this solution. This is a decision from the hadoop stack. It is, in principle, a good, high-performance solution, but it is needed where there is a large flow of data and it needs to be processed. And so, I would use lighter solutions.

It needs to be configured for a very long time, synchronized via Zookepek, etc.

Protocols. What are protocols?

I showed you a bunch of all sorts of decisions. The IT community thought and said: “What are we all reinventing bicycles, let’s lead us to the whole thing.” And invented protocols. One of the earliest protocols is STOMP.

His description covers everything that can be done with queues.

The second protocol, MQTT, is the Message Queue Telemetry Transport protocol.

It is binary, as opposed to STOMP, covers, in principle, all the same functionality as STOMP, but more quickly due to the fact that binary.

Here are the most prominent representatives, the queue broker, who work with protocols:

ActiveMQ uses all three protocols, even four (there is one more). RabbitMQ uses three protocols; Qpid uses Q and P.

Now briefly about AMQP - Advanced Message Queuing Protocol.

If you talk about it for a long time, then you can talk about its features for an hour and a half, no less. I am brief. We will present a broker as an ideal postal service. Exchange - this will be the mailbox of the sender where the message arrives.

This Exchange has type, properties.

Here it is written in PHP how to declare it. By the way, I also developed this driver.

Once there is a sender's box, we must have a recipient's box. The recipient's box has such a feature that we can take only one letter for one appeal. The recipient's box also has a name, a property. Something like this should be declared:

Between the sender's box and the recipient's box, you need to build a route along which the postmen will run and carry our letters.

This route is determined by the routing key.

When we declare a connection, we will definitely specify the routing key. This is one of the ways to advertise.

There is a second approach. We can declare Exchange and make it Bind to the queue, i.e. it is the opposite - we can make a connection from Exchange to Exchange or from Exchange to a queue, it makes no difference.

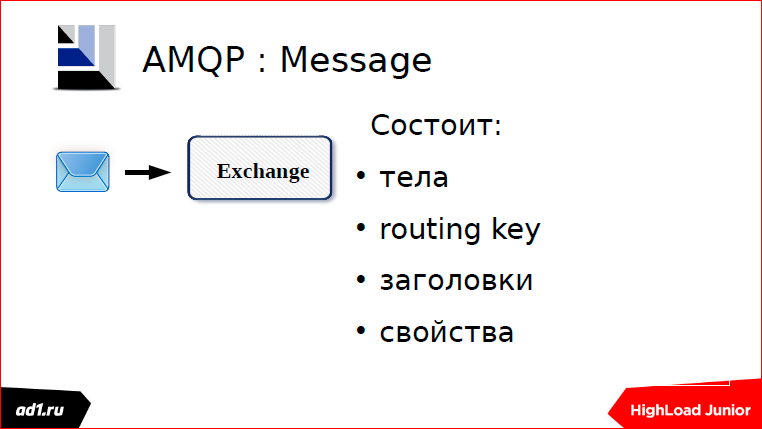

We have a message. The message must necessarily specify routingKey, i.e. This is the key that our postman will follow the route.

Our postmen can be of three types:

- The first type is blind postmen. They cannot read the key; they run only along the route that we have laid for them. These postmen are fleeing, and this

the fastest postmen. - Postmen of the second type can read a little, they check letters, but they don’t know how to ... They checked the letters, that the key of our message is the same and

corresponding path, which run, and run along that path. Those. our recipient is purely on the key match. - And the third kind of postmen is Topic. We can set a mask, a mask is the same as in the file system, and according to this mask our postmen carry letters.

Approximately this is how messages are sent:

What typical mistakes do we have?

Typical mistakes are that people often forget to define a connection. Now the third Rabbit is more or less decent, it has a web interface, you can see everything through the web interface: what is announced there, which queues, what type they have, what exchange there is, what type they have.

The second typical mistake. When we declare a queue or exchange, they default to our autodelete - the session has ended, the queue was killed. Therefore, it must be redeclared each time. In principle, it is undesirable to do this, but it is better to make a permanent queue and still designate durable. Durable is such a sign that if we have a queue durable, then after a RabbitMQ restart, we will have this queue.

What can you say about RabbitMQ? It is not very pleasant to administer, but it can be expanded if we know Erlang. He is very picky from memory. RabbitMQ works through Erlang's embedded solution, but it eats a lot of memory. There are some plugins that work with other repositories, but I honestly did not work with them.

Here in this journal "System Administrator" I wrote an article "Rabbit in the sandbox" - there, in principle, the same thing that I told you here.

And in this article, I described more such interesting patterns, how to use RabbitMQ, what queues can be redirected there, for example, how to make it so that if the queue is not read, then this data is written to another queue, to the spare one, which can then read another script. If possible, read.

Blocking

I told about such a project at the very beginning. If I did this project today, I would use microservices.

And microservices require synchronization as an interaction. We use a tool like Apache Zookeeper for synchronization.

The Apache Zookeeper philosophy is based on znode. Znode, by analogy with the file system element, has a certain path. And we have the operation of creating a node, creating children of a node, receiving children, obtaining data and writing something to the data.

Znode come in two types: simple and ephemeral.

Ephemeral - these are znode, which, if our user session has died, then znode is destroyed, autodelete.

Sequences are auto-increment znode, i.e. these are znode, which have a certain name and auto-increment numeric prefix.

Using the configuration example on the fly, I’ll tell you how it all works. We have two groups of processes - a group of processes a and a group of processes b. Processes 1 connect to processes 2 and somehow interact. Processes 2, when started, write their configuration in Zookeeper.

Each process creates its own znode - the first process, the second, the third.

And here we are one of the processes stopped or, for example, launched. I have here on the example of stopping the processes shown:

Our process is stopped, the connection is broken, the znode is gone, the event is sent, that we listened to this znode, that there was one znode in it.

Event is sent, we recalculate the configuration. Everything works very nicely.

Something like this is synchronized. There are other examples, as someone synchronized there with backups.

In this summary slide, I would like to demonstrate all the capabilities of the queue servers. Where we have question marks is either a controversial point, or there simply was no data. For example, the database we scale, right? It is not clear, but, in principle, it scales.But is it possible to scale the queues for them or not? Basically, no. Therefore, I have a question here. On ActiveMQ I just have no data. With Redis, I can explain - there is an ACL, but it is not quite correct. You can say it is not. Scaling Redis? Through the client it is scaled, I have not seen such some elements, boxed solutions.

Such are the conclusions:

- It is necessary to use each tool for its intended purpose. I talked a lot with different developers, RabbitMQ now uses only non-lazy, but in

most cases the same RabbitMQ can be replaced by Redis. - Speed multiplied by reliability. What did I mean by that? The faster the tool works, the less reliability it has. But on the other hand, the

value of this constant may vary - this is my personal observation. - Well, about the monitoring, there was a lot of talk before me and professionals.

Contacts

» Akalend

» akalend@mail.ru

This report is a transcript of one of the best speeches at the training conference for developers of high-load systems HighLoad ++ Junior .

Also, some of these materials are used by us in an online training course on the development of high-load systems HighLoad.Guide is a chain of specially selected letters, articles, materials, videos. Already, in our textbook more than 30 unique materials. Get connected!

Well, the main news is that we have begun preparations for the spring festival " Russian Internet Technologies ", which includes eight conferences, including HighLoad ++ Junior . Of course, we are greedy merchants, but now we are selling tickets at cost price - you can catch up to the price increase :)

Source: https://habr.com/ru/post/316458/

All Articles