Caches for "dummies"

The cache through the eyes of a "dummy":

Cache is a complex system. Accordingly, at different angles, the result can lie in both the real and imaginary regions. It is very important to understand the difference between what we expect and what it really is.

Let's scroll through the full situation.

')

Tl; dr: adding to the cache architecture it is important to clearly realize that the cache can be a means of destabilizing the system under load. See the end of the article.

Imagine that we have access to a database that returns currency rates. We ask for rates.example.com/?currency1=XXX¤cy2=XXX and in return we get a plain text course value. Each 1000 database queries for us, for example, cost 1 eurocent.

So, now we want to show on our website the dollar against the euro. To do this, we need to get a course, so on our site we create an API wrapper for convenient use:

For example:

And in the templates in the right place we insert something like:

(well, or even

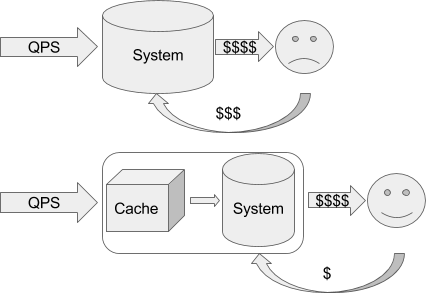

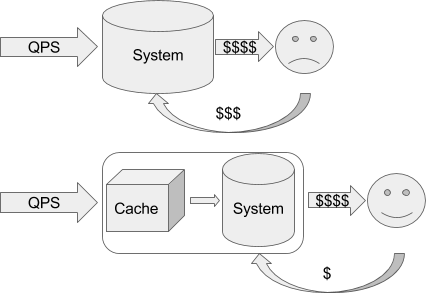

A naive implementation does the simplest thing you can think of: for each request from the user, ask the remote system and use the answer directly. This means that now every 1000 views by users of our page cost us a penny more. It would seem - pennies. But the project is growing, we already have 1000 regular users who visit the site every day and browse 20 pages, and this is already 6 euros per month, which turns the site from free to completely comparable to the payment for the cheapest dedicated virtual servers.

This is where His Majesty Cash comes onto the scene.

Why should we ask a course for each user for each page refresh, if this information is generally not needed for people so often? Let's just limit the refresh rate to, for example, every 5 seconds. Users moving from page to page will still see a new number, and we will pay 1,000 times less.

No sooner said than done! Add a few lines:

This is the most important aspect of the cache: storing the last result .

And voila! The site again becomes almost free for us ... Until the end of the month, when we discover a bill for 4 euros from an external system. Of course, not 6, but we expected much greater savings!

Fortunately, the external system allows you to see charges, where we see bursts of 100 or more requests every 5 seconds during peak attendance.

So we got to know the second important aspect of the cache: query deduplication . The fact is that as soon as the value is outdated, between checking the availability of the result in the cache and storing the new value, all incoming requests actually execute the request to the external system simultaneously.

In the case of memcache, this can be implemented, for example, as follows:

And then, finally, consumption was equal to the expected - 1 request in 5 seconds, the costs were reduced to 2 euros per month.

Why 2? There were 6 without caching for thousands of people, we cached everything, but it decreased only 3 times? Yes, it was worthwhile to calculate early ... 1 time in 5 seconds = 12 per minute = 72 per hour = 576 per working day = 17 thousand per month, and not everyone goes according to schedule, there are strange people looking late at night ... So it turns out a peak instead of one hundred appeals is one, and in a quiet time - still a request for almost every call passes. But still, even in the worst case, the bill should be 31 × 86400 ÷ 5 = 5.36 euros.

So we met with another facet: the cache helps , but does not eliminate the load.

However, in our case, people come to the project and go away and at some point start complaining about the brakes: the pages freeze for a few seconds. It also happens that the site is not responding at all in the morning ... Viewing the site console shows that sometimes during the day additional instances are launched. At the same time, the speed of execution of requests drops to 5-15 seconds per request - because of what it happens.

Exercise for the reader: look carefully at the previous code and find the reason.

Yes Yes Yes. Of course, this is in the

By the way, this rake is not only a cache, it is a general aspect of distributed locks: it is important to release locks and have timeouts in order to avoid deadlocks. If we added "?" in general, without the lifetime, everything would stop at the first error of communication with the external system. Unfortunately, memcache does not provide good ways to create distributed locks, the use of a full-fledged database with row-level locks is better, but it was just a lyrical digression, necessary simply because they attacked this rake.

So, we fixed the problem, only nothing has changed: the brakes rarely started anyway. Remarkably, they coincided in time with the newsletter from the external system about the technical work ...

Well, let's ... Let's take a brief breather and recalculate what we have already collected, what the cache should be able to do:

Did you notice? The cache should provide points 1-2 and for the case of an error! Initially it seems obvious: you never know what happened, one request fell off, the next one will update. What will happen if the next one also returns an error? And the next one? Here we have received 10 requests, first captured the lock, tried to get the result, fell off, went out. The following checks - so, there is no lock, there is no value, we follow the result. Broke off, went out. And so for everyone. Well, nonsense! For a good 10 came, one tried it - everyone fell off. And let the next one try again!

From here: the cache must be able to store a negative result for some time. Our naive initial assumption essentially implies storing a negative result of 0 seconds (but the transfer of this very negative to all who are already waiting for it). Unfortunately, in the case of Memcache, the implementation of zero latency is quite problematic (I ’ll leave it as a homework to a corrosive reader ; advice: use the CAS mechanism; and yes, you can use Memcache and Memcached in AppEngine).

We simply add the preservation of a negative value with 1 second of life:

It would seem, well, now everything is already, and you can calm down? As if not so. While we were growing up, our favorite external service was also growing, and at some point, it sometimes began to slow down and respond as much as a second ... And what is remarkable - our website began to slow down along with it! And again for everyone! But why? We all cache, in case of errors, we remember the error and thereby release all those who are waiting right away, do not we?

... But no. Take a closer look at the code again: the request to the external system will be executed as long as

Well, instead of waiting, we can add an

No sooner said than done:

So, we won again: even if the external service slows down, it slows down no more than one page ... That is, the average response time is reduced, but users are still a little unhappy.

Note: plain PHP by default writes sessions to files, blocking parallel requests. To avoid this behavior, you can pass the read_and_close parameter to session_start or forcefully close the session after session_close after making all the necessary changes, otherwise not one page, but one user will slow down: since the script updating the value will block opening the session by another request from the same user When running on AppEngine, storage of sessions in memcache is turned on by default, that is, without locks, so the problem will not be so noticeable.

So, users are still unhappy (oh, those users!). Those who spend the most time on the site still notice these short hangs. And they are not at all pleased with the realization of the fact that it seldom happens this way, and they simply have no luck. It is necessary to make the requirement even more stringent for this case : no requests should wait for a response .

What can we do in such a question? We can:

In essence, keeping data always hot is a bit more complicated than just a few lines of PHP code, so for our simple case we will have to accept the fact that some query will “reflect” regularly (important: not accidental, but some; that is, not random , but arbitrary ). The applicability of this approach is always important to try on the task!

So, our data provider is growing, but not all of its clients read Habr, and therefore they do not use correct caching (if they use it at all) and at some point they start to issue a huge number of requests, because of what the service becomes bad, and occasionally he begins to answer not just slowly, but very slowly . Up to tens of seconds or more. Users, of course, quickly discovered that you can press F5 or otherwise reload the page, and it appears instantly - only the page started to rest against free limits again, as processes that just wait for an external response, but consume our resources, constantly began to hang.

Among other side effects, the incidence of obsolete courses has become more frequent. [Hmm ... well, imagine that we are not talking about our case, but about something more complicated, where obsolescence can be seen with the naked eye :) in fact, even in the simple case, there will always be a user who will notice such completely unobvious jambs ].

See what happens:

Of course, here we have to set the timeout we need via

So let's summarize. In the everyday sense of the cache:

In reality:

The cache assumes the “ephemerality” of the stored data, in connection with which the caching systems are free to handle the lifetime in general and the very fact of the request for data storage:

Thus, simply applying the cache, we often lay a mine of deferred action, which will necessarily explode - but not now, but in the future, when the solution will cost much more. When calculating system performance, it is important to consider without taking into account the reduction in execution time from caching positive responses, otherwise we improve the system behavior at quiet times (when the maximum hit ratio is maximum), and not during peak load / dependency overload (when cache poisoning usually happens).

Consider the simplest case:

And under heavy load, our cache is poisoned, and requests are not cached for 5 seconds, but for 1 second only, which means that we have 2 requests constantly busy (one executes the first request, the second starts updating the cache after a second while the first works), and the residual capacity for maintenance is reduced to 60 requests per second. That is, the effective capacity from (based on the average) 6000 requests per minute dramatically drops to ~ 3600. Which means that if the poisoning occurred on 5000 requests per minute, until the load drops from 5000 to 3000, the system is unstable. That is, any (even peak!) Surge of traffic can potentially cause long-term system instability.

Especially it looks great when after the newsletter with any new features almost at the same time comes a wave of users. A kind of marketing habraeffekt on a regular basis.

All this does not mean that the cache can not be used or harmful! How to properly use the cache to improve the stability of the system and how to recover from the above hysteresis loop, we will talk in the next article, do not switch.

A cache is a complex system. Accordingly, depending on the angle you look at it from, the result can land in either the real or the imaginary domain. It is very important to understand the difference between what we expect and what actually happens.

Let's walk through the whole story.


TL;DR: when adding a cache to your architecture, it is important to clearly understand that a cache can become a means of destabilizing the system under load. See the end of the article.

Imagine that we have access to a database that returns currency rates. We request rates.example.com/?currency1=XXX&currency2=XXX and in return we get the exchange rate as plain text. Every 1000 queries to this database cost us, say, 1 eurocent.

So, now we want to show the dollar-to-euro rate on our website. To do this we need to fetch the rate, so we create an API wrapper on our site for convenience. For example:

```php
<?php
// Thin wrapper around the remote rates API.
// Returns the rate as a float, or false if the request failed.
function get_current_rate($currency1, $currency2)
{
    $api_host = "http://rates.example.com/";
    $args = http_build_query(array("currency1" => $currency1, "currency2" => $currency2));
    $rate = @file_get_contents($api_host . "?" . $args);
    if ($rate === FALSE) {
        return $rate;
    } else {
        return (float) $rate;
    }
}
```

And in the templates, in the right place, we insert something like:

```
{{ "%.2f"|format(get_current_rate("USD", "EUR")) }} USD/EUR
```

(well, or even `<?=sprintf("%.2f", get_current_rate("USD", "EUR"))?>`, but that is the last century).

A naive implementation does the simplest thing you can think of: for each user request it asks the remote system and uses the answer directly. This means that every 1000 page views now cost us one more cent. It looks like small change. But the project is growing: we already have 1000 regular users who visit the site every day and browse 20 pages each. That is 1000 × 20 × 30 = 600,000 requests, or 6 euros per month, which turns the site from free into something quite comparable to the price of the cheapest dedicated virtual servers.

This is where His Majesty the Cache enters the scene.

Why should we request the rate for every user on every page refresh if people do not really need this information that often? Let's simply limit the refresh rate to, say, once every 5 seconds. Users moving from page to page will still see a new number, and we will pay 1,000 times less.

No sooner said than done! Add a few lines:

```php
<?php
function get_current_rate($currency1, $currency2)
{
    $cache_key = "rate_" . $currency1 . "_" . $currency2;

    // https://cloud.google.com/appengine/docs/php/memcache/
    $memcache = new Memcache;
    $memcache->addServer("localhost", 11211);

    $rate = $memcache->get($cache_key);
    if ($rate) {
        return $rate;
    } else {
        $api_host = "http://rates.example.com/";
        $args = http_build_query(array("currency1" => $currency1, "currency2" => $currency2));
        $rate = @file_get_contents($api_host . "?" . $args);
        if ($rate === FALSE) {
            return $rate;
        } else {
            // Keep the fresh value for 5 seconds.
            $memcache->set($cache_key, (float) $rate, 0, 5);
            return (float) $rate;
        }
    }
}
```

This is the most important aspect of the cache: storing the last result.

And voila! The site becomes almost free for us again... until the end of the month, when we discover a bill for 4 euros from the external system. Not 6, of course, but we expected much greater savings!

Fortunately, the external system lets us inspect the charges, and there we see bursts of 100 or more requests every 5 seconds during peak traffic.

This is how we meet the second important aspect of the cache: query deduplication. The point is that once the value has expired, every request that arrives between the cache check and the storing of the new value actually goes to the external system, all of them at the same time.

In the case of memcache, deduplication can be implemented, for example, as follows:

```php
<?php
function get_current_rate($currency1, $currency2)
{
    $cache_key = "rate_" . $currency1 . "_" . $currency2;
    $memcache = new Memcache();
    $memcache->addServer("localhost", 11211);

    while (true) {
        $rate = $memcache->get($cache_key);
        if ($rate === "?") {
            // Someone else is already fetching: wait 50 ms and check again.
            // sleep() only accepts whole seconds, so usleep() is used.
            usleep(50000);
        } else if ($rate) {
            return $rate;
        } else {
            // No value and no marker: try to become the one who fetches.
            // add() succeeds only for the first caller.
            if ($memcache->add($cache_key, "?", 0, 5)) {
                $api_host = "http://rates.example.com/";
                $args = http_build_query(array("currency1" => $currency1, "currency2" => $currency2));
                $rate = @file_get_contents($api_host . "?" . $args);
                if ($rate === FALSE) {
                    return $rate;
                } else {
                    $memcache->set($cache_key, (float) $rate, 0, 5);
                    return (float) $rate;
                }
            }
        }
    }
}
```

And then, finally, consumption matched the expectation of 1 request every 5 seconds, and the cost dropped to 2 euros per month.

Why 2? It was 6 without caching for a thousand people, we cached everything, and it dropped only threefold? Yes, we should have done the math earlier... Once every 5 seconds = 12 per minute = 720 per hour = 5,760 per 8-hour working day ≈ 173 thousand per month, and not everyone follows the schedule: there are odd people who drop in late at night... So at peak a hundred calls turn into one, while in quiet hours a request still goes out for almost every visit. Still, even in the worst case the bill would be 31 × 86400 ÷ 5 = 535,680 requests, that is, 5.36 euros.
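The arithmetic above is easy to check with a few lines of PHP. This is only a sketch: the helper name `monthly_cost_eur()` is hypothetical, and the price of 1 eurocent per 1000 requests, the 8-hour working day and the 30-day month are the assumptions used in the text.

```php
<?php
// Hypothetical helper: cost in euros for a number of requests,
// assuming the article's price of 1 eurocent per 1000 requests.
function monthly_cost_eur(int $requests): float
{
    return $requests / 1000 / 100;
}

// No cache: 1000 users x 20 pages x 30 days.
$no_cache = 1000 * 20 * 30;
// 5-second cache, traffic only during an 8-hour working day, 30 days.
$working_hours = intdiv(8 * 3600, 5) * 30;
// Worst case: one request every 5 seconds around the clock, 31 days.
$worst_case = intdiv(31 * 86400, 5);

printf("no cache:      %d requests, %.2f EUR\n", $no_cache, monthly_cost_eur($no_cache));
printf("working hours: %d requests, %.2f EUR\n", $working_hours, monthly_cost_eur($working_hours));
printf("worst case:    %d requests, %.2f EUR\n", $worst_case, monthly_cost_eur($worst_case));
```

The real bill lands somewhere between the last two figures, depending on how much traffic arrives outside working hours.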

So we meet another facet: the cache helps, but it does not eliminate the load.

In our case, however, people come to the project and leave, and at some point they start complaining about slowness: pages freeze for a few seconds. It also happens that the site does not respond at all in the morning... The site console shows that additional instances are sometimes launched during the day. At those moments request execution time grows to 5-15 seconds per request, which is what triggers the extra instances.

Exercise for the reader: look carefully at the previous code and find the reason.

Yes, yes, yes. Of course, it is in the `if ($rate === FALSE)` branch. If the external service returned an error, we did not release the lock... That is, the "?" stayed in the cache, and everyone waits for it to expire. Well, it is easy to fix:

```php
if ($rate === FALSE) {
    // Release the lock so that others do not wait for the marker to expire.
    $memcache->delete($cache_key);
    return $rate;
} else {
```

By the way, this pitfall is not specific to caches; it is a general aspect of distributed locks: it is important to release locks and to give them timeouts in order to avoid deadlocks. Had we added the "?" with no lifetime at all, everything would have stopped at the first communication error with the external system. Unfortunately, memcache does not offer good ways to build distributed locks; a full-fledged database with row-level locks is better. But that was just a lyrical digression, needed only because we stepped on this rake.
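The rule "always release the lock, and always give it a lifetime" can be sketched with `try`/`finally`. This is an illustration only: `ArrayCache` is a hypothetical in-memory stand-in that mimics the `add()`/`get()`/`delete()` semantics of the Memcache calls used above, `with_lock()` is not part of any library, and a separate lock key is used here instead of the "?" marker stored under the value key.

```php
<?php
// Hypothetical in-memory stand-in for the Memcache calls used in the article.
class ArrayCache
{
    private array $items = [];

    // Store only if the key is absent or expired; returns false otherwise.
    public function add(string $key, $value, int $ttl): bool
    {
        if ($this->get($key) !== false) {
            return false;
        }
        $this->items[$key] = [$value, microtime(true) + $ttl];
        return true;
    }

    // Returns the stored value, or false if the key is absent or expired.
    public function get(string $key)
    {
        if (!isset($this->items[$key]) || $this->items[$key][1] < microtime(true)) {
            return false;
        }
        return $this->items[$key][0];
    }

    public function delete(string $key): void
    {
        unset($this->items[$key]);
    }
}

// Run $work under a lock that has a lifetime; the lock is released
// even if $work throws. Returns null if someone else holds the lock.
function with_lock(ArrayCache $cache, string $lock_key, int $ttl, callable $work)
{
    if (!$cache->add($lock_key, "?", $ttl)) {
        return null;
    }
    try {
        return $work();
    } finally {
        $cache->delete($lock_key);
    }
}

$cache = new ArrayCache();
try {
    with_lock($cache, "lock_rate_USD_EUR", 5, function () {
        throw new RuntimeException("external service failed");
    });
} catch (RuntimeException $e) {
    // The failure propagates to the caller, but the lock is already gone.
}
var_dump($cache->get("lock_rate_USD_EUR")); // bool(false): the lock was released
```

The lifetime passed to `add()` still matters: `finally` does not run if the process is killed, so the timeout remains the last line of defense.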

So, we fixed the problem, yet nothing changed: the slowdowns still occurred from time to time. Remarkably, they coincided with the external system's newsletters announcing maintenance work...

Well... Let's take a short breather and recount what we have collected so far, that is, what the cache should be able to do:

1. remember the last known result;

2. deduplicate queries when the result is not yet, or no longer, known;

3. ensure correct unlocking in case of an error.

Did you notice? The cache should provide points 1-2 in the error case as well! At first the opposite seems obvious: who knows what happened, one request failed, the next one will refresh the value. But what if the next one also returns an error? And the one after that? Say 10 requests arrive: the first one takes the lock, tries to get the result, fails and leaves. The next one checks: no lock, no value, so it goes for the result. It fails and leaves. And so on for every one of them. That is nonsense! Ideally, 10 came, one tried, and all of them failed together. Let the next batch try again!

Hence: the cache must be able to store a negative result for some time. Our naive initial assumption essentially means storing a negative result for 0 seconds (while still passing that negative result to everyone who is already waiting for it). Unfortunately, with Memcache a zero lifetime is rather hard to implement (I will leave it as homework for the meticulous reader; hint: use the CAS mechanism; and yes, both Memcache and Memcached can be used on AppEngine).

We simply add storing a negative value with a lifetime of 1 second:

```php
<?php
function get_current_rate($currency1, $currency2)
{
    $cache_key = "rate_" . $currency1 . "_" . $currency2;
    $memcache = new Memcache();
    $memcache->addServer("localhost", 11211);

    while (true) {
        $flags = FALSE;
        $rate = $memcache->get($cache_key, $flags);
        if ($rate === "?") {
            // Someone else is fetching: wait 50 ms and check again.
            usleep(50000);
        } else if ($flags !== FALSE) {
            // The flags were filled in, so the key exists and the value
            // is valid, even if it is a cached false.
            return $rate;
        } else {
            if ($memcache->add($cache_key, "?", 0, 5)) {
                $api_host = "http://rates.example.com/";
                $args = http_build_query(array("currency1" => $currency1, "currency2" => $currency2));
                $rate = @file_get_contents($api_host . "?" . $args);
                if ($rate === FALSE) {
                    // Remember the failure for 1 second.
                    $memcache->set($cache_key, $rate, 0, 1);
                    return $rate;
                } else {
                    $memcache->set($cache_key, (float) $rate, 0, 5);
                    return (float) $rate;
                }
            }
        }
    }
}
```

It would seem that now everything is done and we can relax? Not quite. While we were growing, our favorite external service was growing too, and at some point it occasionally began to slow down and take as long as a second to respond... And, remarkably, our site began to slow down along with it! And again for everyone! But why? We cache everything, and on errors we remember the error and thereby immediately release everyone who is waiting, don't we?

... But no. Take a closer look at the code again: the request to the external system runs for as long as file_get_contents() allows. While the request is in flight, everyone else waits, so every time the cache expires all threads wait for the one doing the actual fetch and receive new data only when it arrives.

Well, instead of waiting, we can add an else {} branch to the condition around $memcache->add()... True, we should then probably return the last known value, right? After all, we cache precisely because we agree to receive outdated information when no fresh information is available. So, one more requirement for the cache: let no more than one request slow down.

No sooner said than done:

```php
<?php
function get_current_rate($currency1, $currency2)
{
    $cache_key = "rate_" . $currency1 . "_" . $currency2;
    $memcache = new Memcache();
    $memcache->addServer("localhost", 11211);

    while (true) {
        $flags = FALSE;
        $rate = $memcache->get($cache_key, $flags);
        if ($rate === "?") {
            // A refresh is in progress: serve the last known value if there
            // is one, and wait only when nothing has ever been stored.
            $stale = $memcache->get("_stale_" . $cache_key);
            if ($stale !== FALSE) {
                return $stale;
            }
            usleep(50000);
        } else if ($flags !== FALSE) {
            return $rate;
        } else {
            if ($memcache->add($cache_key, "?", 0, 5)) {
                $api_host = "http://rates.example.com/";
                $args = http_build_query(array("currency1" => $currency1, "currency2" => $currency2));
                $rate = @file_get_contents($api_host . "?" . $args);
                if ($rate === FALSE) {
                    // Remember the failure for 1 second; the stale copy is
                    // left untouched so that others can still use it.
                    $memcache->set($cache_key, $rate, 0, 1);
                    return $rate;
                } else {
                    // Keep a copy under _stale_ with no expiry: it may be
                    // outdated, but it is better than nothing.
                    $memcache->set("_stale_" . $cache_key, (float) $rate);
                    $memcache->set($cache_key, (float) $rate, 0, 5);
                    return (float) $rate;
                }
            } else {
                // Lost the race for the lock: someone else is fetching, so
                // return the last known value without waiting. This is
                // false if nothing has been stored yet.
                return $memcache->get("_stale_" . $cache_key);
            }
        }
    }
}
```

So, we won again: even if the external service slows down, it slows down no more than one page... That is, the average response time went down, but users are still a little unhappy.

Note: plain PHP by default stores sessions in files and locks them, which blocks parallel requests from the same user. To avoid this, pass the read_and_close option to session_start(), or explicitly close the session with session_write_close() once all the necessary changes have been made. Otherwise it is not one page but one user that slows down, because the script refreshing the value blocks another request from the same user from opening the session. On AppEngine, sessions are stored in memcache by default, that is, without locks, so the problem is less noticeable.

So, users are still unhappy (oh, those users!). Those who spend the most time on the site still notice these short hangs. And they take no comfort in knowing that this happens rarely and that they were simply unlucky. For this case the requirement has to be made even stricter: no request should wait for a response.

What can we do in such a situation? We can:

- Try "execute after the response" tricks: if the value has to be refreshed, we register a handler that does it after the rest of the script has run. This depends heavily on the application and the execution environment; the most reliable way is fastcgi_finish_request(), which requires running the server through php-fpm (and is therefore not available on AppEngine).

- Do the refresh in a separate process (that is, call pcntl_fork(), run a script via system(), or something similar). Again, this may work on your server, and sometimes it even works on shared hosting where security is not taken too seriously, but of course it does not work on services with paranoid security, so AppEngine is out.

- Have a constantly running background process that refreshes the cache: at a given interval it checks whether the cached value is about to expire, and if the lifetime is coming to an end and the value was requested during that lifetime, it refreshes it. We will discuss this a little later, when we get tired of our poor exchange-rate site and move on to more fun matters.
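The first option in the list above can be sketched as follows. This is a minimal illustration under assumptions: `finish_response_then()` is a hypothetical helper name, and the sketch simply falls back to running the refresh synchronously when `fastcgi_finish_request()` is not available (for example, on the command line).

```php
<?php
// Hypothetical helper: send the response to the client first (php-fpm only),
// then run the cache refresh in the same process.
function finish_response_then(callable $refresh): void
{
    if (function_exists("fastcgi_finish_request")) {
        // Flushes the response and closes the connection to the client;
        // the script keeps running after this call.
        fastcgi_finish_request();
    }
    // Without php-fpm this still runs, but the user waits for it.
    $refresh();
}

$refreshed = false;
finish_response_then(function () use (&$refreshed) {
    // Here the real code would fetch the rate and store it in the cache.
    $refreshed = true;
});
```

Note that the process stays busy until the refresh finishes, so this hides the delay from the user but does not free up capacity.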

In essence, keeping data always hot is somewhat more complicated than a few lines of PHP, so for our simple case we have to accept that some request will regularly stall (important: not a random one, but an arbitrary one; we cannot say in advance which). Whether this approach is acceptable must always be checked against the task at hand!

So, our data provider keeps growing, but not all of its clients read Habr, so they do not use proper caching (if they use any at all), and at some point they start issuing a huge number of requests. The service suffers, and occasionally it begins to respond not just slowly but very slowly: up to tens of seconds or more. Users, of course, quickly discovered that you can press F5 or otherwise reload the page and it appears instantly. Except that the site started running into the free-tier limits again, because processes that do nothing but wait for an external response, yet consume our resources, kept hanging around.

Among other side effects, stale rates started to show up more often. [Hmm... well, imagine that we are talking not about our case but about something more complex, where staleness is visible to the naked eye :) In fact, even in the simple case there will always be a user who notices such completely non-obvious glitches.]

See what happens:

- Request 1 arrives; there is no data in the cache, so it adds the '?' marker for 5 seconds and goes off to fetch the rate.

- After 1 second, request 2 arrives, sees the '?' marker and returns the data from the stale record.

- After 3 more seconds, request 3 arrives, sees the '?' marker and returns the stale value.

- After 1 more second, the '?' marker expires, even though request 1 is still waiting for a response.

- After another 2 seconds, request 4 arrives; there is no marker, so it adds a new one and goes off to fetch the rate.

- ...

- Request 1 receives a response and saves the result.

- Request X arrives and gets the "current" response from the cache, as stored by request 1 (and as of when is that answer valid: the time of the request or the time of the response? Nobody knows...).

- Request 4 receives a response and saves the result, and again it is unclear whether this answer is newer or older...
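One way to avoid the situation in the timeline above, where the '?' marker expires while request 1 is still waiting, is to bound the outgoing request so that it normally finishes before the marker does. A sketch under assumptions: the helper name `build_rate_context()` and the 2-second budget are illustrative; only `stream_context_create()` and the `http` `timeout` option are standard PHP.

```php
<?php
// Lifetime of the "?" marker, as in the article's code.
const LOCK_TTL_SECONDS = 5;

// Hypothetical helper: build an HTTP stream context whose timeout
// is strictly shorter than the marker lifetime.
function build_rate_context(float $timeout_seconds)
{
    if ($timeout_seconds >= LOCK_TTL_SECONDS) {
        throw new InvalidArgumentException("timeout must be shorter than the lock lifetime");
    }
    return stream_context_create(array(
        "http" => array("timeout" => $timeout_seconds),
    ));
}

$context = build_rate_context(2.0);
// The context is then passed as the third argument of file_get_contents():
// $rate = @file_get_contents($api_host . "?" . $args, false, $context);
```

The `timeout` option is a read timeout, so a server that keeps trickling data may still take longer in total; leaving a margin between the timeout and the marker lifetime helps here.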

Of course, here we have to set the timeout we need via ini_set("default_socket_timeout", ...) or use stream_context_create()... This brings us to another important aspect: the cache must take into account the time it takes to obtain a value. There is no universal rule of behavior, but as a rule the caching time should be longer than the computation time. If the computation time exceeds the cache lifetime, a cache is not applicable. That is no longer a cache but precomputed values, which should be kept in reliable storage.

So let's summarize. In the everyday understanding, a cache:

- replaces most requests with an already known answer;

- limits the number of requests for expensive data;

- makes the request time invisible to the user.

In reality, it:

- replaces some of the requests within the cache lifetime window with stored values (the cache can be lost at any moment, for example because of a memory shortage or extravagant requests);

- tries to limit the number of requests (but without a dedicated rate limiter for outgoing requests, the only realistic guarantee is of the form "at most 1 outgoing request at a time");

- makes the request time visible only to some users (and the "lucky ones" are not evenly distributed).

A cache assumes that the stored data is ephemeral, so caching systems are free in how they treat both the lifetime and the storage request itself:

- the cache can be lost at any moment. Even our '?' execution markers can be lost if 10 thousand other users are browsing the site in parallel, each of them saving something (often the time of the last visit) to a session that lives on the same cache server; once the marker is lost (the "cache is poisoned"), the next request starts the refresh procedure again;

- the faster the remote system executes a request, the fewer requests will be deduplicated when the cache is poisoned.

Thus, by simply bolting on a cache we often plant a time bomb that is certain to go off, not now but later, when fixing it will cost far more. When estimating system performance, it is important to calculate without counting the reduction in execution time gained from caching positive responses. Otherwise we improve the system's behavior in quiet times (when the hit ratio is at its maximum) rather than under peak load or dependency overload (when cache poisoning usually happens).

Consider the simplest case:

- We look at the system in a calm state and see an average execution time of 0.05 s.

- Conclusion: 1 process can serve 20 requests per second, so 5 processes are enough for 100 requests per second.

- But if the time to refresh the value grows to 2 seconds, we get:

  - 1 process is busy with the refresh (for 2 seconds);

  - during these 2 seconds only 4 processes are available = 80 requests per second.

And under heavy load our cache gets poisoned, so values are cached not for 5 seconds but for only 1 second. This means that 2 processes are constantly busy (one is executing the first refresh, and a second one starts refreshing the cache a second later while the first is still working), and the remaining serving capacity drops to 60 requests per second. In other words, the effective capacity falls sharply from 6000 requests per minute (based on the average) to about 3600. So if the poisoning happened at 5000 requests per minute, the system stays unstable until the load drops from 5000 to around 3000. That is, any surge of traffic (even a momentary one!) can potentially cause long-lasting instability.
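The capacity estimate above can be written down as a small calculation. A sketch only: `remaining_capacity()` is a hypothetical helper, and the numbers (5 processes, 20 requests per second per process) are those of the example.

```php
<?php
// Hypothetical helper: requests per second the pool can still serve
// while some processes are stuck refreshing the cache.
function remaining_capacity(int $processes, int $busy_refreshing, int $rps_per_process): int
{
    return max(0, $processes - $busy_refreshing) * $rps_per_process;
}

// 0 busy: calm state; 1 busy: slow refresh; 2 busy: poisoned cache.
foreach (array(0, 1, 2) as $busy) {
    $rps = remaining_capacity(5, $busy, 20);
    printf("%d busy: %d req/s, %d req/min\n", $busy, $rps, $rps * 60);
}
```

With two processes stuck, the pool serves 60 requests per second, or 3600 per minute, which is the figure the load has to fall below before the system recovers.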

This looks especially "great" when a wave of users arrives almost simultaneously right after a newsletter announcing new features. A kind of regular, marketing-induced Habr effect.

None of this means that a cache is harmful or must not be used! How to use a cache properly to improve system stability, and how to recover from the hysteresis loop described above, we will discuss in the next article. Stay tuned.

Source: https://habr.com/ru/post/316344/
