Better at RunTime than never: extending the JIRA API on the fly

What can you do if the application's API is not enough to solve a problem, and there is no way to change the code quickly?

In this situation, the last resort may be the java.lang.instrument package. If you are curious what can be done with code in an already running Java VM, and how, read on.

Habr already has articles about working with bytecode:


However, these techniques are usually applied to logging or other very simple tasks. What if we aim higher and extend the application's functionality through instrumentation?

In this article, I will show how to instrument an application with a Java agent (using OSGi and the Byte Buddy library) in order to add new functionality to it. The article will mostly interest people who work with JIRA, but the approach is fairly universal and can be applied to other platforms.

So, after two or three days of studying the JIRA API, we realize that there is no proper way to implement cross-field validation (where the valid values of one field depend on the value of another) when an issue is created or edited, other than through transition validators. That approach works, but it is cumbersome and inconvenient, so in my spare time I decided to keep digging in order to have a plan B.

At the recent Joker conference, Rafael Winterhalter gave a talk about Byte Buddy, a library that wraps a powerful low-level bytecode manipulation API in a more convenient high-level layer. The library is quite popular today; in particular, Mockito and Hibernate have recently adopted it. Among other things, Rafael talked about using Byte Buddy to modify classes that are already loaded.

"Now there's an idea!", we think, and get to work.

First, recall from Rafael's talk that already loaded classes can be modified only through the java.lang.instrument.Instrumentation interface, which is handed to a Java agent when it starts. An agent can be installed either at VM startup via the command line or at runtime via the Attach API, which is platform-specific and ships with the JDK.

One important detail: an agent cannot be removed. Its classes stay loaded until the VM shuts down.

As for Attach API support, we cannot guarantee that JIRA runs on a JDK, let alone which OS it runs on.

Second, recall that the basic unit of JIRA extension is the add-on, an OSGi bundle on steroids. So all our logic, whatever it is, has to be packaged as add-ons. Hence the requirement that any changes we make to the system must be idempotent and possible to switch off.

Given these constraints, we see two main tasks:

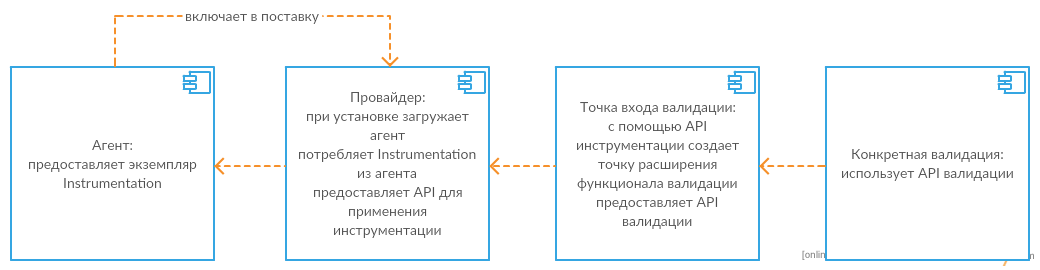

Splitting responsibilities between the components, I ended up with the following scheme:

First, we create the agent:

The code is trivial, so I don't think it needs explaining. As agreed, it contains only the most general logic, so we are unlikely to need to update it often.

Now we create an add-on that will attach this agent to the target VM. Let's start with the agent installation logic. Here is the full installer code:

Let's go through the code piece by piece.

The simplest scenario is that the agent is already loaded. Perhaps it was enabled via command-line parameters at startup, or perhaps this is not the first time the add-on has been installed.

The check is easy: just load the agent class with the system class loader.

If the class is available, nothing else needs to be installed. But suppose this is the first installation and the agent is not loaded yet; then we load it ourselves using the Attach API. As in the previous case, we first check whether we are running on a JDK, i.e. whether the required API is available without any extra steps. If not, we try to supply the API ourselves.

Now let's look at how to install the Attach API. "Turning" a JRE into a JDK starts with determining the host OS. In JIRA, the OS detection code is already implemented:

Now that we know which OS we are running on, let's see how the Attach API can be loaded. First, let's look at what the Attach API actually consists of. As I said, it is platform-dependent.

Note: tools.jar is listed as platform-independent, but that is not the case. Its META-INF/services/ directory contains the com.sun.tools.attach.spi.AttachProvider configuration file, which lists the providers available for the environment:

These providers, in turn, are very much platform-dependent.

For now, to get the necessary files into the build, I simply extracted the native libraries and copies of tools.jar from the corresponding JDK distributions and put them into the repository.

It is important to note that once the Attach API files are loaded, they cannot be deleted or modified. So if we still want our add-on to be removable and updatable, we should not load the libraries directly from the jar. Instead, during installation we copy them from our jar to a quiet, stable location that JIRA gives us access to.

To copy the files, we use the following method:

Besides the usual file operations, this code also computes checksums. At the time of writing, this was simply the first way that occurred to me to verify components that cannot be updated at runtime. In principle, a version check would work just as well if you version the artifacts. If the files are already present but their checksums do not match the artifacts in the archive, we try to replace them.

So, we have the files; now let's figure out how to load them. Let's start with the hardest part: loading the native library. If we dig into the Attach API internals, we find that the native library is loaded by this code:

This tells us that the directory containing our library must be added to java.library.path.

After that, all that remains is to put the native library file into that directory and... apply the first hack of our solution. The value of java.library.path is cached in the ClassLoader class, in the private static String[] sys_paths field. Well, private is no obstacle for us, so let's reset the cache...

With the native part in place, we move on to the Java part of the API. In a JDK, tools.jar is loaded by the system class loader, and we need to do the same.

After a bit of digging, we find that the system class loader is a java.net.URLClassLoader.

In short, this loader stores class locations as a list of URLs. To load our jar, all we need is to add the URL of our tools-[OS].jar to this list. Studying the URLClassLoader API, we are disappointed once again: the addURL method, which does exactly what we need, is protected. Oh well, one more prop for our elegant prototype:

Now, finally, everything is ready to load the virtual machine class.

The VirtualMachine class must be loaded not by the current OSGi class loader but by the system one, which stays in place for the lifetime of the VM. The reason is that during attach this class loads the native library, and a native library can be loaded by only one class loader. OSGi, in contrast, creates a new class loader every time a bundle is created. So we risk getting something like this:

The message is misleading: the real cause is that we are trying to load a library that is already loaded. The only way to find this out is to debug the attach method and see the actual exception.

Once the class is loaded, we can look up the methods we need and finally attach our agent:

The only subtlety here is the code that obtains the PID of the virtual machine:

This approach is not standardized, but it works, and in Java 9 the Process API makes this straightforward.

Now we embed this logic in the add-on. We need to run code when the add-on is installed, which is done with the standard Spring InitializingBean.

First, we call the agent installation logic discussed above, and then open a ServiceTracker, one of the main mechanisms for implementing the whiteboard pattern in OSGi. In short, it lets us run logic when services of a certain type are added to or changed in the container.

Now, every time a service implementing InstrumentationConsumer is registered in the container, we do the following:

The java.lang.instrument.Instrumentation object is obtained like this:

Now we move on to writing the validation mechanism.

We find the point where changes will be most effective: the DefaultIssueService class (in fact, not every create/update call goes through it, but that is a separate topic) and its methods:

validateCreate:

and validateUpdate:

and work out what logic we are missing.

After the original logic runs, we need to invoke our own validation of the input parameters, which can change the result if necessary.

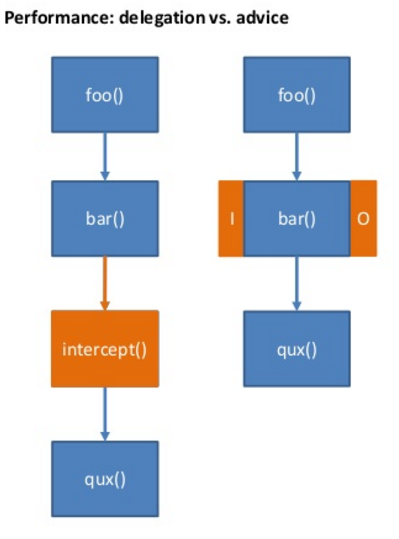

Byte Buddy offers two ways to implement this: interceptors and the Advice mechanism. The difference between the approaches is clearly shown on a slide from Rafael's presentation.

The Interceptor API is well documented, and any public class can serve as an interceptor; more details here. The call to the interceptor is inserted into the bytecode INSTEAD of the original method.

When I tried this approach, I found two significant drawbacks:

While working on the project, I came up with a solution to this problem. However, because it interferes with class loading for the entire application, I do not recommend using it without thorough testing, so I present it separately under a spoiler.

The second way to instrument is to use Advice. This approach is documented much worse; in practice, examples can be found only in GitHub issues and StackOverflow answers.

It differs from the first in that, by default, our advice methods are inlined into the code of the instrumented class. For us, this means:

It sounds perfect: we get a smart API that provides the original arguments, the results of the original code (including exceptions), and even the result of the advice that ran before the original code. But there is always a "but": inlining imposes some restrictions on the code being inlined:

I did not find these restrictions in the Byte Buddy documentation.

Well, let's try to write our logic in Advice style. As we remember, we need to keep the instrumentation to a minimum. This means I would like to abstract away from specific validation checks: when a new check appears, it should be added automatically to the list of checks performed when validateCreate/validateUpdate is called, without changing the code of the DefaultIssueService class.

In OSGi this is easy to do, but DefaultIssueService lives outside the OSGi framework, so OSGi tricks will not work here.

Unexpectedly, the JIRA API comes to the rescue. Each add-on is represented in JIRA by a Plugin object (a wrapper around a Bundle with a number of extra features) with a specific key by which the plugin can be looked up.

We set the key ourselves in the add-on configuration, and the plugin API is loaded by the same class loader as DefaultIssueService. So nothing prevents our advice from looking up our plugin and using it to load any class shipped with that plugin, for example our check aggregator.

We can then get an instance of this class, again through the standard com.atlassian.jira.component.ComponentAccessor#getOSGiComponentInstanceOfType.

No magic involved:

DefaultIssueServiceValidateUpdateAdvice looks the same, apart from the class and method names. Now it's time to write the InstrumentationConsumer that applies our advice to the required method.

Here I should mention a nice bonus: applying advice is idempotent! We don't need to worry about applying the transformation twice when the add-on is reinstalled; the VM takes care of that for us.

Now for the small stuff: we write the aggregator. First, we define the validation API:

Next, at call time, we use standard OSGi tools to obtain all available validations and run them:
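As an illustration of the idea only (run every validator that is available at call time and merge their errors), a plain-Java sketch might look like the block below. `FieldValidator`, `Aggregator` and the field names are hypothetical and are not the add-on's API. In the add-on, the list of validators would come from OSGi at call time, not from a `Supplier`.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

public final class ValidationAggregatorSketch {

    // Hypothetical contract: one implementation per cross-field rule.
    interface FieldValidator {
        // Returns error messages; an empty list means the values are consistent.
        List<String> validate(Map<String, String> fieldValues);
    }

    // Runs every validator available at call time and merges their errors.
    static final class Aggregator {
        private final Supplier<List<FieldValidator>> available;

        Aggregator(Supplier<List<FieldValidator>> available) {
            this.available = available;
        }

        List<String> validate(Map<String, String> fieldValues) {
            List<String> errors = new ArrayList<>();
            for (FieldValidator validator : available.get()) {
                errors.addAll(validator.validate(fieldValues));
            }
            return errors;
        }
    }

    public static void main(String[] args) {
        // Example rule (made-up field names): component "backend" requires type "service".
        FieldValidator backendNeedsService = fields -> {
            List<String> errors = new ArrayList<>();
            if ("backend".equals(fields.get("component")) && !"service".equals(fields.get("type"))) {
                errors.add("Component 'backend' requires type 'service'");
            }
            return errors;
        };
        Aggregator aggregator = new Aggregator(() -> Collections.singletonList(backendNeedsService));

        Map<String, String> fields = new HashMap<>();
        fields.put("component", "backend");
        fields.put("type", "library");
        System.out.println(aggregator.validate(fields)); // [Component 'backend' requires type 'service']
    }
}
```

Because the supplier is queried on every call, a validator that appears later is picked up without touching the aggregator. That is the property the article asks for.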

Everything is ready: we build and install.

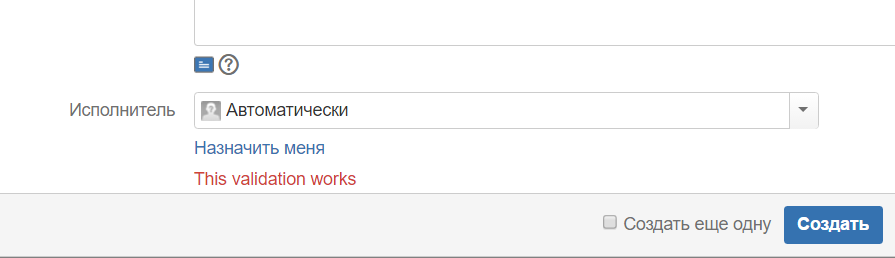

To test the approach, we implement the simplest possible check:

We try to create a new issue and voila!

Now we can uninstall and reinstall any of the add-ons we developed, and JIRA's behavior changes accordingly.

Thus, we have a tool for dynamically extending an application's API, in this case JIRA's. Of course, thorough testing is required before using this approach in production. In my opinion, though, the solution is not a complete hack, and with proper refinement it can be used to solve "hopeless" tasks: fixing long-standing third-party defects, extending APIs, and so on.

The full code of the project is available on GitHub; feel free to use it!

P.S. To keep the article simple, I did not describe how the project is built or the specifics of developing add-ons for JIRA; see here for that.

In this situation, the last resort may be the java.lang.instrument package. If you are curious what can be done with code in an already running Java VM, and how, read on.

Habr already has articles about working with bytecode:


- Java Agent in the service of the JVM

- Theory and Practice of AOP. How we do it in Yandex

- Aspect-oriented programming. The basics

However, these techniques are usually applied to logging or other very simple tasks. What if we aim higher and extend the application's functionality through instrumentation?

In this article, I will show how to instrument an application with a Java agent (using OSGi and the Byte Buddy library) in order to add new functionality to it. The article will mostly interest people who work with JIRA, but the approach is fairly universal and can be applied to other platforms.

Task

So, after two or three days of studying the JIRA API, we realize that there is no proper way to implement cross-field validation (where the valid values of one field depend on the value of another) when an issue is created or edited, other than through transition validators. That approach works, but it is cumbersome and inconvenient, so in my spare time I decided to keep digging in order to have a plan B.

At the recent Joker conference, Rafael Winterhalter gave a talk about Byte Buddy, a library that wraps a powerful low-level bytecode manipulation API in a more convenient high-level layer. The library is quite popular today; in particular, Mockito and Hibernate have recently adopted it. Among other things, Rafael talked about using Byte Buddy to modify classes that are already loaded.

"Now there's an idea!", we think, and get to work.

Design

First, recall from Rafael's talk that already loaded classes can be modified only through the java.lang.instrument.Instrumentation interface, which is handed to a Java agent when it starts. An agent can be installed either at VM startup via the command line or at runtime via the Attach API, which is platform-specific and ships with the JDK.

One important detail: an agent cannot be removed. Its classes stay loaded until the VM shuts down.

As for Attach API support, we cannot guarantee that JIRA runs on a JDK, let alone which OS it runs on.

Second, recall that the basic unit of JIRA extension is the add-on, an OSGi bundle on steroids. So all our logic, whatever it is, has to be packaged as add-ons. Hence the requirement that any changes we make to the system must be idempotent and possible to switch off.

Given these constraints, we see two main tasks:

- Agent installation: must happen when the add-on is installed, must guard against double installation, and must support installing the agent on Linux and Windows, on both a JDK and a JRE.

  Since the agent cannot be removed, updating it requires restarting the application, which does not fit the OSGi concept well. Therefore, the agent's responsibilities must be kept to a minimum so that it needs updating as rarely as possible.

- Instrumentation: must happen when the add-on is installed, must make class transformations idempotent, and must keep the validation logic extensible.

Splitting responsibilities between the components, I ended up with the following scheme:

Implementation

Agent

First, we create the agent:

```java
import java.lang.instrument.Instrumentation;

public class InstrumentationSupplierAgent {

    // Written once by agentmain; volatile so that other threads see the value.
    public static volatile Instrumentation instrumentation;

    // Entry point invoked when the agent is loaded into a running VM via the Attach API.
    public static void agentmain(String args, Instrumentation inst) throws Exception {
        System.out.println("==**agent started**==");
        InstrumentationSupplierAgent.instrumentation = inst;
        System.out.println("==**agent execution complete**==");
    }
}
```

The code is trivial, so I don't think it needs explaining. As agreed, it contains only the most general logic, so we are unlikely to need to update it often.
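`loadAgent` can only find `agentmain` if the agent JAR's manifest names the class in an `Agent-Class` attribute. Redefining or retransforming already loaded classes also requires `Can-Redefine-Classes` / `Can-Retransform-Classes` set to `true`. The build configuration is not shown here. Purely to illustrate the required attributes, the sketch below builds such a manifest with `java.util.jar`. It assumes the agent class sits in the default package, as in the listing above. In a Maven build, the same entries would normally go into the jar plugin's manifest configuration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.util.jar.Attributes;
import java.util.jar.Manifest;

public class AgentManifestSketch {

    // Builds the manifest an agent JAR needs so that VirtualMachine.loadAgent can
    // locate agentmain(String, Instrumentation) and the agent may redefine/retransform
    // classes that are already loaded.
    static Manifest agentManifest(String agentClass) {
        Manifest manifest = new Manifest();
        Attributes main = manifest.getMainAttributes();
        // Manifest-Version is mandatory: without it, Manifest.write() emits no main attributes.
        main.put(Attributes.Name.MANIFEST_VERSION, "1.0");
        main.putValue("Agent-Class", agentClass);
        main.putValue("Can-Redefine-Classes", "true");
        main.putValue("Can-Retransform-Classes", "true");
        return manifest;
    }

    public static void main(String[] args) throws IOException {
        // Assumption: the agent class is in the default package, as in the listing above.
        Manifest manifest = agentManifest("InstrumentationSupplierAgent");
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        manifest.write(out);
        // Read it back the way a JAR reader would.
        Manifest readBack = new Manifest(new ByteArrayInputStream(out.toByteArray()));
        System.out.println(readBack.getMainAttributes().getValue("Agent-Class")); // InstrumentationSupplierAgent
    }
}
```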

Provider

Now we create an add-on that will attach this agent to the target VM. Let's start with the agent installation logic. Here is the full installer code:

AgentInstaller.java

```java
// Third-party types used below (imports omitted): Logger/LoggerFactory (slf4j), IOUtils (commons-io),
// Component/Autowired (Spring), ComponentImport, JiraHome, JiraProperties, PluginException,
// IllegalPluginStateException (Atlassian SDK), InstrumentationProvider (this project).
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.lang.management.ManagementFactory;
import java.lang.reflect.Field;
import java.lang.reflect.Method;
import java.net.URL;
import java.net.URLClassLoader;
import java.nio.file.Files;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Arrays;
import java.util.regex.Pattern;

@Component
public class AgentInstaller {
    private static final Logger log = LoggerFactory.getLogger(AgentInstaller.class);

    private final JiraHome jiraHome;
    private final JiraProperties jiraProperties;

    @Autowired
    public AgentInstaller(@ComponentImport JiraHome jiraHome,
                          @ComponentImport JiraProperties jiraProperties) {
        this.jiraHome = jiraHome;
        this.jiraProperties = jiraProperties;
    }

    // {JIRA_HOME}/data/instrumentation, created on first use
    private static File getInstrumentationDirectory(JiraHome jiraHome) throws IOException {
        final File dataDirectory = jiraHome.getDataDirectory();
        final File instrFolder = new File(dataDirectory, "instrumentation");
        if (!instrFolder.exists()) {
            Files.createDirectory(instrFolder.toPath());
        }
        return instrFolder;
    }

    // Copies /lib/<fileName> out of the add-on jar unless an identical copy already exists
    private static File loadFileFromCurrentJar(File destination, String fileName) throws IOException {
        final String resource = "/lib/" + fileName;
        final File existingFile = new File(destination, fileName);
        // isCheckSumEqual consumes and closes both streams, so the resource is opened
        // once for the comparison and again for the copy
        if (!existingFile.exists()
                || !isCheckSumEqual(new FileInputStream(existingFile),
                                    AgentInstaller.class.getResourceAsStream(resource))) {
            Files.deleteIfExists(existingFile.toPath());
            try (InputStream in = AgentInstaller.class.getResourceAsStream(resource);
                 OutputStream os = new FileOutputStream(existingFile)) {
                IOUtils.copy(in, os);
            }
        }
        return existingFile;
    }

    private static boolean isCheckSumEqual(InputStream existingFileStream, InputStream newFileStream) {
        try (InputStream oldIs = existingFileStream; InputStream newIs = newFileStream) {
            return Arrays.equals(getMDFiveDigest(oldIs), getMDFiveDigest(newIs));
        } catch (NoSuchAlgorithmException | IOException e) {
            log.error("Error to compare checksum for streams {},{}", existingFileStream, newFileStream, e);
            return false;
        }
    }

    private static byte[] getMDFiveDigest(InputStream is) throws IOException, NoSuchAlgorithmException {
        final MessageDigest md = MessageDigest.getInstance("MD5");
        // digest(byte[]) hashes the input and returns the result; a second digest()
        // call would return the hash of empty input, making every file look "equal"
        return md.digest(IOUtils.toByteArray(is));
    }

    public void install() throws PluginException {
        try {
            log.trace("Trying to install tools and agent");
            if (!isProperAgentLoaded()) {
                log.info("Instrumentation agent is not installed yet or has wrong version");
                final String pid = getPid();
                log.debug("Current VM PID={}", pid);
                // Valid on Java 8, where the system class loader is a URLClassLoader
                final URLClassLoader systemClassLoader = (URLClassLoader) ClassLoader.getSystemClassLoader();
                log.debug("System classLoader={}", systemClassLoader);
                final Class<?> virtualMachine = getVirtualMachineClass(
                        systemClassLoader, "com.sun.tools.attach.VirtualMachine", true);
                log.debug("VM class={}", virtualMachine);
                Method attach = virtualMachine.getMethod("attach", String.class);
                Method loadAgent = virtualMachine.getMethod("loadAgent", String.class);
                Method detach = virtualMachine.getMethod("detach");
                Object vm = null;
                try {
                    log.trace("Attaching to VM with PID={}", pid);
                    vm = attach.invoke(null, pid);
                    final File agentFile = getAgentFile();
                    log.debug("Agent file: {}", agentFile);
                    loadAgent.invoke(vm, agentFile.getAbsolutePath());
                } finally {
                    tryToDetach(vm, detach);
                }
            } else {
                log.info("Instrumentation agent is already installed");
            }
        } catch (Exception e) {
            throw new IllegalPluginStateException("Failed to load: agent and tools are not installed properly", e);
        }
    }

    private boolean isProperAgentLoaded() {
        try {
            ClassLoader.getSystemClassLoader().loadClass(InstrumentationProvider.INSTRUMENTATION_CLASS_NAME);
            return true;
        } catch (Exception e) {
            return false;
        }
    }

    private void tryToDetach(Object vm, Method detach) {
        try {
            if (vm != null) {
                log.trace("Detaching from VM: {}", vm);
                detach.invoke(vm);
            } else {
                log.warn("Failed to detach, vm is null");
            }
        } catch (Exception e) {
            log.warn("Failed to detach", e);
        }
    }

    private String getPid() {
        String nameOfRunningVM = ManagementFactory.getRuntimeMXBean().getName();
        return nameOfRunningVM.split("@", 2)[0];
    }

    private Class<?> getVirtualMachineClass(URLClassLoader systemClassLoader, String className, boolean tryLoadTools)
            throws Exception {
        log.trace("Trying to get VM class, loadingTools={}", tryLoadTools);
        try {
            return systemClassLoader.loadClass(className);
        } catch (ClassNotFoundException e) {
            if (tryLoadTools) {
                final OS os = getRunningOs();
                os.tryToLoadTools(systemClassLoader, jiraHome);
                return getVirtualMachineClass(systemClassLoader, className, false);
            } else {
                throw new ReflectiveOperationException("Failed to load VM class", e);
            }
        }
    }

    private OS getRunningOs() {
        final String osName = jiraProperties.getSanitisedProperties().get("os.name");
        log.debug("OS name: {}", osName);
        if (Pattern.compile(".*[Ll]inux.*").matcher(osName).matches()) {
            return OS.LINUX;
        } else if (Pattern.compile(".*[Ww]indows.*").matcher(osName).matches()) {
            return OS.WINDOWS;
        } else {
            throw new IllegalStateException("Unknown OS running");
        }
    }

    private File getAgentFile() throws IOException {
        final File agent = loadFileFromCurrentJar(getInstrumentationDirectory(jiraHome), "instrumentation-agent.jar");
        agent.deleteOnExit();
        return agent;
    }

    private enum OS {
        WINDOWS {
            @Override
            protected String getToolsFilename() {
                return "tools-windows.jar";
            }

            @Override
            protected String getAttachLibFilename() {
                return "attach.dll";
            }
        },
        LINUX {
            @Override
            protected String getToolsFilename() {
                return "tools-linux.jar";
            }

            @Override
            protected String getAttachLibFilename() {
                return "libattach.so";
            }
        };

        public void tryToLoadTools(URLClassLoader systemClassLoader, JiraHome jiraHome) throws Exception {
            log.trace("Trying to load tools");
            final File instrumentationDirectory = getInstrumentationDirectory(jiraHome);
            // Make the native library visible to System.loadLibrary("attach")
            appendLibPath(instrumentationDirectory.getAbsolutePath());
            loadFileFromCurrentJar(instrumentationDirectory, getAttachLibFilename());
            resetCache();
            // Put the platform-specific tools.jar on the system class path
            final File tools = loadFileFromCurrentJar(instrumentationDirectory, getToolsFilename());
            final Method method = URLClassLoader.class.getDeclaredMethod("addURL", URL.class);
            method.setAccessible(true);
            method.invoke(systemClassLoader, tools.toURI().toURL());
        }

        // JDK 8 internals: clearing sys_paths makes ClassLoader re-read java.library.path
        private void resetCache() throws NoSuchFieldException, IllegalAccessException {
            Field fieldSysPath = ClassLoader.class.getDeclaredField("sys_paths");
            fieldSysPath.setAccessible(true);
            fieldSysPath.set(null, null);
        }

        private void appendLibPath(String instrumentationDirectory) {
            if (System.getProperty("java.library.path") != null) {
                System.setProperty("java.library.path",
                        System.getProperty("java.library.path")
                                + System.getProperty("path.separator")
                                + instrumentationDirectory);
            } else {
                System.setProperty("java.library.path", instrumentationDirectory);
            }
        }

        protected abstract String getToolsFilename();

        protected abstract String getAttachLibFilename();
    }
}
```

Let's go through the code piece by piece.

The simplest scenario is that the agent is already loaded. Perhaps it was enabled via command-line parameters at startup, or perhaps this is not the first time the add-on has been installed.

The check is easy: just load the agent class with the system class loader:

```java
private boolean isProperAgentLoaded() {
    try {
        // The agent class is only visible to the system class loader once the agent is loaded
        ClassLoader.getSystemClassLoader().loadClass(InstrumentationProvider.INSTRUMENTATION_CLASS_NAME);
        return true;
    } catch (Exception e) {
        return false;
    }
}
```

If the class is available, nothing else needs to be installed. But suppose this is the first installation and the agent is not loaded yet; then we load it ourselves using the Attach API. As in the previous case, we first check whether we are running on a JDK, i.e. whether the required API is available without any extra steps. If not, we try to supply the API ourselves.

```java
private Class<?> getVirtualMachineClass(URLClassLoader systemClassLoader, String className, boolean tryLoadTools)
        throws Exception {
    log.trace("Trying to get VM class, loadingTools={}", tryLoadTools);
    try {
        return systemClassLoader.loadClass(className);
    } catch (ClassNotFoundException e) {
        if (tryLoadTools) {
            // Not a JDK: supply the Attach API ourselves, then retry exactly once
            final OS os = getRunningOs();
            os.tryToLoadTools(systemClassLoader, jiraHome);
            return getVirtualMachineClass(systemClassLoader, className, false);
        } else {
            throw new ReflectiveOperationException("Failed to load VM class", e);
        }
    }
}
```

Now let's look at how to install the Attach API. "Turning" a JRE into a JDK starts with determining the host OS. In JIRA, the OS detection code is already implemented:

```java
private OS getRunningOs() {
    final String osName = jiraProperties.getSanitisedProperties().get("os.name");
    log.debug("OS name: {}", osName);
    if (Pattern.compile(".*[Ll]inux.*").matcher(osName).matches()) {
        return OS.LINUX;
    } else if (Pattern.compile(".*[Ww]indows.*").matcher(osName).matches()) {
        return OS.WINDOWS;
    } else {
        throw new IllegalStateException("Unknown OS running");
    }
}
```

Now that we know which OS we are running on, let's see how the Attach API can be loaded. First, let's look at what the Attach API actually consists of. As I said, it is platform-dependent.
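The `getRunningOs` method above reads `os.name` through JIRA's properties. Outside JIRA, the same classification could be made from the standard `os.name` system property. The sketch below is an illustrative alternative, not the add-on's code. It matches case-insensitively and keeps the per-platform file names from the listing in enum fields instead of abstract methods; the class and method names are hypothetical.

```java
import java.util.Locale;

public final class OsDetection {

    // Platforms the add-on ships attach artifacts for (file names taken from the listing).
    enum Os {
        LINUX("tools-linux.jar", "libattach.so"),
        WINDOWS("tools-windows.jar", "attach.dll");

        final String toolsFilename;
        final String attachLibFilename;

        Os(String toolsFilename, String attachLibFilename) {
            this.toolsFilename = toolsFilename;
            this.attachLibFilename = attachLibFilename;
        }
    }

    // Classifies an "os.name" value; case-insensitive instead of [Ll]/[Ww] regexes.
    static Os detect(String osName) {
        String name = osName == null ? "" : osName.toLowerCase(Locale.ROOT);
        if (name.contains("linux")) {
            return Os.LINUX;
        }
        if (name.contains("windows")) {
            return Os.WINDOWS;
        }
        throw new IllegalStateException("Unsupported OS: " + osName);
    }

    public static void main(String[] args) {
        System.out.println(detect("Linux"));                                    // LINUX
        System.out.println(detect("Windows Server 2012 R2").attachLibFilename); // attach.dll
    }
}
```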

Note: tools.jar is listed as platform-independent, but that is not the case. Its META-INF/services/ directory contains the com.sun.tools.attach.spi.AttachProvider configuration file, which lists the providers available for the environment:

```
# [solaris] sun.tools.attach.SolarisAttachProvider
# [windows] sun.tools.attach.WindowsAttachProvider
# [linux] sun.tools.attach.LinuxAttachProvider
# [macosx] sun.tools.attach.BsdAttachProvider
# [aix] sun.tools.attach.AixAttachProvider
```

These providers, in turn, are very much platform-dependent.
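To see why the file's content matters, recall how `java.util.ServiceLoader` reads a provider-configuration file. It expects one fully qualified class name per line; blank lines are ignored, and everything after `#` is a comment. An entry is therefore active only if its line is not commented out, so each platform's `tools.jar` needs its own uncommented provider line. The sketch below applies those rules. The sample file content (only the Linux line active) is an assumption for illustration, not a copy of a real `tools.jar`.

```java
import java.util.ArrayList;
import java.util.List;

public final class ProviderConfig {

    // Applies the ServiceLoader provider-configuration rules: '#' starts a comment,
    // surrounding whitespace is ignored, blank lines are skipped.
    static List<String> activeProviders(String fileContent) {
        List<String> providers = new ArrayList<>();
        for (String line : fileContent.split("\\R")) {
            int hash = line.indexOf('#');
            String name = (hash >= 0 ? line.substring(0, hash) : line).trim();
            if (!name.isEmpty()) {
                providers.add(name);
            }
        }
        return providers;
    }

    public static void main(String[] args) {
        // Assumed content of a Linux tools.jar: only the Linux entry is active.
        String linuxFile = String.join("\n",
                "# [solaris] sun.tools.attach.SolarisAttachProvider",
                "# [windows] sun.tools.attach.WindowsAttachProvider",
                "sun.tools.attach.LinuxAttachProvider",
                "# [macosx] sun.tools.attach.BsdAttachProvider");
        System.out.println(activeProviders(linuxFile)); // [sun.tools.attach.LinuxAttachProvider]
    }
}
```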

For now, to get the necessary files into the build, I simply extracted the native libraries and copies of tools.jar from the corresponding JDK distributions and put them into the repository.

It is important to note that once the Attach API files are loaded, they cannot be deleted or modified. So if we still want our add-on to be removable and updatable, we should not load the libraries directly from the jar. Instead, during installation we copy them from our jar to a quiet, stable location that JIRA gives us access to.

```java
public void tryToLoadTools(URLClassLoader systemClassLoader, JiraHome jiraHome) throws Exception {
    log.trace("Trying to load tools");
    // {JIRA_HOME}/data/instrumentation
    final File instrumentationDirectory = getInstrumentationDirectory(jiraHome);
    // native attach library (attach.dll / libattach.so)
    loadFileFromCurrentJar(instrumentationDirectory, getAttachLibFilename());
    // platform-specific tools.jar
    final File tools = loadFileFromCurrentJar(instrumentationDirectory, getToolsFilename());
    ...
}
```

To copy the files, we use the following method:

```java
private static File loadFileFromCurrentJar(File destination, String fileName) throws IOException {
    final String resource = "/lib/" + fileName;
    final File existingFile = new File(destination, fileName);
    // The checksum comparison consumes and closes the resource stream,
    // so the resource is opened again for copying
    if (!existingFile.exists()
            || !isCheckSumEqual(new FileInputStream(existingFile),
                                AgentInstaller.class.getResourceAsStream(resource))) {
        Files.deleteIfExists(existingFile.toPath()); // replace the outdated copy
        try (InputStream in = AgentInstaller.class.getResourceAsStream(resource);
             OutputStream os = new FileOutputStream(existingFile)) {
            IOUtils.copy(in, os);
        }
    }
    return existingFile;
}
```

Besides the usual file operations, this code also computes checksums. At the time of writing, this was simply the first way that occurred to me to verify components that cannot be updated at runtime. In principle, a version check would work just as well if you version the artifacts. If the files are already present but their checksums do not match the artifacts in the archive, we try to replace them.
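The comparison can also be done without reading whole files into memory. The sketch below is not the add-on's code: it streams both inputs through `MessageDigest` and compares the results with `MessageDigest.isEqual`. It keeps MD5 to match the listing; MD5 is adequate for spotting a changed artifact but not for security purposes. Note that `digest()` completes the hash and resets the object, so it must be called exactly once per input, after all bytes have been fed in. The class and method names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

public final class Checksums {

    // Feeds the whole stream into an MD5 digest in 8 KiB chunks and returns the hash.
    static byte[] md5(InputStream in) throws IOException, NoSuchAlgorithmException {
        MessageDigest md = MessageDigest.getInstance("MD5");
        byte[] buffer = new byte[8192];
        int read;
        while ((read = in.read(buffer)) != -1) {
            md.update(buffer, 0, read);
        }
        return md.digest(); // completes the hash and resets md
    }

    // True if both streams have the same MD5; closes both streams.
    static boolean sameContent(InputStream a, InputStream b) throws IOException, NoSuchAlgorithmException {
        try (InputStream first = a; InputStream second = b) {
            return MessageDigest.isEqual(md5(first), md5(second));
        }
    }

    public static void main(String[] args) throws Exception {
        byte[] v1 = "libattach v1".getBytes(StandardCharsets.UTF_8);
        byte[] v2 = "libattach v2".getBytes(StandardCharsets.UTF_8);
        System.out.println(sameContent(new ByteArrayInputStream(v1), new ByteArrayInputStream(v1))); // true
        System.out.println(sameContent(new ByteArrayInputStream(v1), new ByteArrayInputStream(v2))); // false
    }
}
```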

So, we have the files; now let's figure out how to load them. Let's start with the hardest part: loading the native library. If we dig into the Attach API internals, we find that the native library is loaded by this code:

```java
static {
    System.loadLibrary("attach");
}
```

This tells us that the directory containing our library must be added to java.library.path.
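`System.loadLibrary` takes a platform-independent name and maps it to a file name via `System.mapLibraryName`. It then looks for that file in the library paths, which include `java.library.path`. This is why the add-on ships `libattach.so` for Linux and `attach.dll` for Windows. A short illustration (the class and method names are only for the example):

```java
public final class LibraryNames {

    // The file name that System.loadLibrary("attach") searches for on this platform.
    static String attachLibraryFileName() {
        return System.mapLibraryName("attach");
    }

    public static void main(String[] args) {
        System.out.println(attachLibraryFileName()); // libattach.so on Linux, attach.dll on Windows
        System.out.println(System.getProperty("java.library.path")); // directories searched
    }
}
```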

```java
private void appendLibPath(String instrumentationDirectory) {
    if (System.getProperty("java.library.path") != null) {
        System.setProperty("java.library.path",
                System.getProperty("java.library.path")
                        + System.getProperty("path.separator")
                        + instrumentationDirectory);
    } else {
        System.setProperty("java.library.path", instrumentationDirectory);
    }
}
```

After that, all that remains is to put the native library file into that directory and... apply the first hack of our solution. The value of java.library.path is cached in the ClassLoader class, in the private static String[] sys_paths field. Well, private is no obstacle for us, so let's reset the cache...

```java
private void resetCache() throws NoSuchFieldException, IllegalAccessException {
    // JDK 8: with sys_paths set to null, the next loadLibrary call re-reads java.library.path
    Field fieldSysPath = ClassLoader.class.getDeclaredField("sys_paths");
    fieldSysPath.setAccessible(true);
    fieldSysPath.set(null, null);
}
```

With the native part in place, we move on to the Java part of the API. In a JDK, tools.jar is loaded by the system class loader, and we need to do the same.

After a bit of digging, we find that the system class loader is a java.net.URLClassLoader.

In short, this loader stores class locations as a list of URLs. To load our jar, all we need is to add the URL of our tools-[OS].jar to this list. Studying the URLClassLoader API, we are disappointed once again: the addURL method, which does exactly what we need, is protected. Oh well, one more prop for our elegant prototype:

```java
// addURL is protected, so it is invoked reflectively on the system class loader
final Method method = URLClassLoader.class.getDeclaredMethod("addURL", URL.class);
method.setAccessible(true);
method.invoke(systemClassLoader, tools.toURI().toURL());
```

Now, finally, everything is ready to load the virtual machine class.
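The reflective `addURL` call depends on the system class loader being a `URLClassLoader`. That holds on Java 8, but since Java 9 the application class loader is not a `URLClassLoader`, so the cast in `install()` fails with a `ClassCastException`. On newer JDKs, reflective access into `java.net` may also be refused by module encapsulation. A more defensive variant might look like the sketch below; the helper name is hypothetical, and it reports failure instead of throwing.

```java
import java.io.File;
import java.lang.reflect.Method;
import java.net.URL;
import java.net.URLClassLoader;

public final class ClassPathAppender {

    // Tries to append `url` to `loader`'s search path via the protected URLClassLoader.addURL.
    // Returns false if the loader is not a URLClassLoader or reflective access is denied.
    static boolean tryAddUrl(ClassLoader loader, URL url) {
        if (!(loader instanceof URLClassLoader)) {
            return false;
        }
        try {
            Method addUrl = URLClassLoader.class.getDeclaredMethod("addURL", URL.class);
            addUrl.setAccessible(true); // may throw InaccessibleObjectException (a RuntimeException) on Java 9+
            addUrl.invoke(loader, url);
            return true;
        } catch (ReflectiveOperationException | RuntimeException e) {
            return false;
        }
    }

    public static void main(String[] args) throws Exception {
        URL jar = new File("tools-linux.jar").toURI().toURL();
        // true on Java 8; false on Java 9+, where the system loader is not a URLClassLoader
        System.out.println(tryAddUrl(ClassLoader.getSystemClassLoader(), jar));
    }
}
```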

The VirtualMachine class must be loaded not by the current OSGi class loader but by the system one, which stays in place for the lifetime of the VM. The reason is that during attach this class loads the native library, and a native library can be loaded by only one class loader. OSGi, in contrast, creates a new class loader every time a bundle is created. So we risk getting something like this:

```
... 19 more
Caused by: com.sun.tools.attach.AttachNotSupportedException: no providers installed
    at com.sun.tools.attach.VirtualMachine.attach(VirtualMachine.java:203)
```

The message is misleading: the real cause is that we are trying to load a library that is already loaded. The only way to find this out is to debug the attach method and see the actual exception.

Once the class is loaded, we can look up the methods we need and finally attach our agent:

```java
Method attach = virtualMachine.getMethod("attach", String.class);
Method loadAgent = virtualMachine.getMethod("loadAgent", String.class);
Method detach = virtualMachine.getMethod("detach");
Object vm = null;
try {
    final String pid = getPid();
    log.debug("Current VM PID={}", pid);
    log.trace("Attaching to VM with PID={}", pid);
    vm = attach.invoke(null, pid);                      // VirtualMachine.attach(pid)
    final File agentFile = getAgentFile();
    log.debug("Agent file: {}", agentFile);
    loadAgent.invoke(vm, agentFile.getAbsolutePath()); // runs agentmain in this VM
} finally {
    tryToDetach(vm, detach);
}
```

The only subtlety here is the code that obtains the PID of the virtual machine:

private String getPid() {
    String nameOfRunningVM = ManagementFactory.getRuntimeMXBean().getName();
    return nameOfRunningVM.split("@", 2)[0];
}

This approach is not standardized, but it works in practice, and the Process API introduced in Java 9 makes it straightforward.
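For comparison, here is a sketch of both variants side by side. `CurrentPid` is a hypothetical helper name. `ProcessHandle.current().pid()` is the Java 9 Process API call, while the string parsing relies on the "pid@hostname" format that HotSpot happens to use; the RuntimeMXBean contract does not guarantee that format.

```java
import java.lang.management.ManagementFactory;

// Hypothetical helper comparing two ways to get the current PID.
final class CurrentPid {

    // Pre-Java 9 approach from the article: parse "pid@hostname".
    // The format is an implementation detail of the JVM, not a specified contract.
    static String fromRuntimeName() {
        String name = ManagementFactory.getRuntimeMXBean().getName();
        return name.split("@", 2)[0];
    }

    // Java 9+ Process API: a documented way to obtain the current PID.
    static long fromProcessHandle() {
        return ProcessHandle.current().pid();
    }
}
```

The Attach API takes the PID as a String, so the numeric value would be passed as `Long.toString(CurrentPid.fromProcessHandle())`. Also note that since JDK 9 a VM refuses to attach to itself unless it was started with `-Djdk.attach.allowAttachSelf=true`.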

Add-on

Now we embed this logic in the add-on. We need to run code when the add-on is installed, which is done with Spring's standard InitializingBean:

@Override
public void afterPropertiesSet() throws Exception {
    this.agentInstaller.install();
    this.serviceTracker.open();
}

First we call the agent installation logic discussed above, and then we open a ServiceTracker, one of the main mechanisms for implementing the whiteboard pattern in OSGi. In short, it lets us run logic whenever services of a given type are added to or changed in the container.
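The whiteboard idea can be modelled without OSGi. In this hypothetical `Whiteboard` class (not an OSGi API), a tracker callback is invoked both for services that already exist when tracking starts and for every service registered later. This matches how `ServiceTracker.open()` also reports services that were registered before the tracker was opened.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical, single-JVM model of the whiteboard pattern; not an OSGi API.
final class Whiteboard<S> {

    private final List<S> services = new ArrayList<>();
    private final List<Consumer<S>> trackers = new ArrayList<>();

    // A provider publishes a service; every active tracker is told about it.
    synchronized void register(S service) {
        services.add(service);
        for (Consumer<S> tracker : trackers) {
            tracker.accept(service);
        }
    }

    // Like ServiceTracker.open(): already registered services are reported first,
    // later registrations are reported as they happen.
    synchronized void track(Consumer<S> onAdded) {
        trackers.add(onAdded);
        for (S service : services) {
            onAdded.accept(service);
        }
    }
}
```

In the add-on's terms, the services would be InstrumentationConsumer instances and the callback would apply the Instrumentation to each one. This is why a consumer bundle installed after the agent still gets instrumented.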

The tracker is created like this:

private ServiceTracker<InstrumentationConsumer, Void> initTracker(final BundleContext bundleContext, final InstrumentationProvider instrumentationProvider) {
    return new ServiceTracker<>(bundleContext, InstrumentationConsumer.class, new ServiceTrackerCustomizer<InstrumentationConsumer, Void>() {
        @Override
        public Void addingService(ServiceReference<InstrumentationConsumer> serviceReference) {
            try {
                log.trace("addingService called");
                final InstrumentationConsumer consumer = bundleContext.getService(serviceReference);
                log.debug("Consumer: {}", consumer);
                if (consumer != null) {
                    applyInstrumentation(consumer, instrumentationProvider);
                }
            } catch (Throwable t) {
                log.error("Error on 'addingService'", t);
            }
            return null;
        }

        @Override
        public void modifiedService(ServiceReference<InstrumentationConsumer> serviceReference, Void aVoid) {
        }

        @Override
        public void removedService(ServiceReference<InstrumentationConsumer> serviceReference, Void aVoid) {
        }
    });
}

Now, every time a service implementing the InstrumentationConsumer interface is registered in the container, we do the following:

private void applyInstrumentation(InstrumentationConsumer consumer, InstrumentationProvider instrumentationProvider) {
    final Instrumentation instrumentation;
    try {
        instrumentation = instrumentationProvider.getInstrumentation();
        consumer.applyInstrumentation(instrumentation);
    } catch (InstrumentationAgentException e) {
        log.error("Error on getting instrumentation", e);
    }
}

The java.lang.instrument.Instrumentation object is obtained like this:

@Component
public class InstrumentationProviderImpl implements InstrumentationProvider {
    private static final Logger log = LoggerFactory.getLogger(InstrumentationProviderImpl.class);

    @Override
    public Instrumentation getInstrumentation() throws InstrumentationAgentException {
        try {
            // load through the system class loader, which is where java agents are loaded
            final Class<?> agentClass = ClassLoader.getSystemClassLoader().loadClass(INSTRUMENTATION_CLASS_NAME);
            log.debug("Agent class loaded from system classloader: {}", agentClass);
            // read the agent's static Instrumentation field via reflection
            final Field instrumentation = agentClass.getDeclaredField(INSTRUMENTATION_FIELD_NAME);
            log.debug("Instrumentation field: {}", instrumentation);
            final Object instrumentationValue = instrumentation.get(null);
            if (instrumentationValue == null) {
                throw new NullPointerException("instrumentation data is null. Seems agent is not installed");
            }
            return (Instrumentation) instrumentationValue;
        } catch (Throwable e) {
            String msg = "Error getting instrumentation";
            log.error(msg, e);
            throw new InstrumentationAgentException(msg, e);
        }
    }
}

We proceed to writing the validation engine.
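The agent side is not shown in this section. The lookup above only works if the agent class keeps the Instrumentation it receives in a static field. A minimal sketch of such an agent, with hypothetical class and field names standing in for INSTRUMENTATION_CLASS_NAME and INSTRUMENTATION_FIELD_NAME:

```java
import java.lang.instrument.Instrumentation;

// Hypothetical agent class; the project's real names are hidden behind
// INSTRUMENTATION_CLASS_NAME / INSTRUMENTATION_FIELD_NAME.
// In a real agent jar, declare the class public in its own source file, because
// the add-on reads the field reflectively from another package.
final class InstrumentationHolderAgent {

    // Written by the JVM-invoked entry points, read reflectively by the add-on;
    // volatile so the value is visible to other threads once set.
    public static volatile Instrumentation instrumentation;

    private InstrumentationHolderAgent() {
    }

    // Called when the agent is loaded into a running VM (VirtualMachine.loadAgent).
    // The jar manifest must name this class in the Agent-Class attribute.
    public static void agentmain(String agentArgs, Instrumentation inst) {
        instrumentation = inst;
    }

    // Called when the agent is given on the command line with -javaagent.
    // The jar manifest must name this class in the Premain-Class attribute.
    public static void premain(String agentArgs, Instrumentation inst) {
        instrumentation = inst;
    }
}
```

Since the transformer later redefines an already loaded class, the agent jar's manifest would presumably also need `Can-Redefine-Classes: true`. Without it, `Instrumentation.isRedefineClassesSupported()` returns false.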

Validation engine

We look for the place where a change has the broadest effect. That is the DefaultIssueService class (strictly speaking, not every create/update call goes through it, but that is a separate topic) and its methods:

validateCreate:

IssueService.CreateValidationResult validateCreate(@Nullable ApplicationUser var1, IssueInputParameters var2);

and validateUpdate:

IssueService.UpdateValidationResult validateUpdate(@Nullable ApplicationUser var1, Long var2, IssueInputParameters var3);

Then we work out what logic is missing.

After the main logic has run, we need to invoke our own validation of the input parameters, which may change the result if necessary.

Byte Buddy offers two ways to implement this: an interceptor or the Advice mechanism. The difference between the approaches is clearly illustrated on a slide from Rafael's presentation.

The interceptor API is well documented, and any public class can act as an interceptor (more details here). A call to the interceptor is inserted into the bytecode INSTEAD of the original method body.

When I tried this approach, I ran into two significant drawbacks:

- In general, we can get hold of the original method and even the instance it was called on. However, because the signature of an already loaded class cannot be changed, instrumenting such a class means losing the original method (it cannot be kept as a private method of the same class). So if we want to reuse the original logic, we have to rewrite it ourselves.

- Since we are actually calling methods of another class, that class must be visible through the chain of class loaders. If the class is instrumented inside the OSGi container, visibility is not a problem. In our case, however, most JIRA API classes are loaded by the WebappClassLoader, which is outside OSGi, so an attempt to call our interceptor method ends in a well-deserved ClassNotFoundException.
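Whether this problem applies comes down to a visibility test: does a given class loader resolve a class name to the very same Class object? The add-on code further on uses Spring's `ClassUtils.isVisible` for this. A JDK-only sketch of an equivalent check, with `Visibility` as a hypothetical name:

```java
// Hypothetical helper modelled on the idea behind Spring's ClassUtils.isVisible.
final class Visibility {

    // True if `loader` resolves the class's name to the very same Class object.
    // A null loader is treated as "visible" in this sketch.
    static boolean isVisible(Class<?> clazz, ClassLoader loader) {
        if (loader == null) {
            return true;
        }
        try {
            return Class.forName(clazz.getName(), false, loader) == clazz;
        } catch (ClassNotFoundException | LinkageError e) {
            // Not found, or found but unusable from this loader: treat as not visible.
            return false;
        }
    }
}
```

A loader whose parent is the bootstrap loader sees JDK classes but not application classes. From the point of view of a web application's loader, OSGi bundle classes are in the same position.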

While working on the project, I came up with a solution to this problem. However, it interferes with class loading for the entire application, so I do not recommend using it without thorough testing, and I have put it under the spoiler.

Solving the class loader problem

The basic idea is to break into the WebappClassLoader's parent chain and insert a proxy ClassLoader that tries to load classes with the BundleClassLoader before delegating to the real parent of the WebappClassLoader.

The implementation of the approach looks like this:

private void tryToFixClassloader(ClassLoader originalClassLoader, BundleWiringImpl.BundleClassLoader bundleClassLoader) {
    try {
        final ClassLoader originalParent = originalClassLoader.getParent();
        if (originalParent != null) {
            if (!(originalParent instanceof BundleProxyClassLoader)) {
                final BundleProxyClassLoader proxyClassLoader = new BundleProxyClassLoader<>(originalParent, bundleClassLoader);
                FieldUtils.writeDeclaredField(originalClassLoader, "parent", proxyClassLoader, true);
            }
        }
    } catch (IllegalAccessException e) {
        log.warn("Error on try to fix originalClassLoader {}", originalClassLoader, e);
    }
}

It should be applied together with the instrumentation:

...
.transform((builder, typeDescription, classloader) -> {
    // the transformer must return the modified builder; discarding it would lose the interception
    final DynamicType.Builder<?> result = builder
            .method(named("validateCreate").and(ElementMatchers.isPublic()))
            .intercept(MethodDelegation.to(Interceptor.class));
    if (!ClassUtils.isVisible(InstrumentationConsumer.class, classloader)) {
        tryToFixClassloader(classloader, (BundleWiringImpl.BundleClassLoader) Interceptor.class.getClassLoader());
    }
    return result;
})
.installOn(instrumentation);

With this in place, classes from OSGi can be loaded through the WebappClassLoader. The one thing to watch out for is not to load through OSGi any classes whose loading OSGi itself delegates outside the container, since that would obviously lead to infinite recursion and exceptions.

BundleProxyClassLoader code:

class BundleProxyClassLoader<T extends BundleWiringImpl.BundleClassLoader> extends ClassLoader {
    private static final Logger log = LoggerFactory.getLogger(BundleProxyClassLoader.class);

    private final Set<T> proxies;
    private final Method loadClass;
    private final Method shouldDelegate;

    public BundleProxyClassLoader(ClassLoader parent, T proxy) {
        super(parent);
        this.loadClass = getLoadClassMethod();
        this.shouldDelegate = getShouldDelegateMethod();
        this.proxies = new HashSet<>();
        proxies.add(proxy);
    }

    private Method getLoadClassMethod() throws IllegalStateException {
        try {
            Method loadClass = ClassLoader.class.getDeclaredMethod("loadClass", String.class, boolean.class);
            loadClass.setAccessible(true);
            return loadClass;
        } catch (NoSuchMethodException e) {
            throw new IllegalStateException("Failed to get loadClass method", e);
        }
    }

    private Method getShouldDelegateMethod() throws IllegalStateException {
        try {
            Method shouldDelegate = BundleWiringImpl.class.getDeclaredMethod("shouldBootDelegate", String.class);
            shouldDelegate.setAccessible(true);
            return shouldDelegate;
        } catch (NoSuchMethodException e) {
            throw new IllegalStateException("Failed to get shouldDelegate method", e);
        }
    }

    @Override
    public Class<?> loadClass(String name, boolean resolve) throws ClassNotFoundException {
        synchronized (getClassLoadingLock(name)) {
            log.trace("Trying to find already loaded class {}", name);
            Class<?> c = findLoadedClass(name);
            if (c == null) {
                log.trace("This is new class. Trying to load {} with OSGi", name);
                c = tryToLoadWithProxies(name, resolve);
                if (c == null) {
                    log.trace("Failed to load with OSGi. Trying to load {} with parent CL", name);
                    c = super.loadClass(name, resolve);
                }
            }
            if (c == null) {
                throw new ClassNotFoundException(name);
            }
            return c;
        }
    }

    private Class<?> tryToLoadWithProxies(String name, boolean resolve) {
        for (T proxy : proxies) {
            try {
                final String pkgName = Util.getClassPackage(name);
                // avoid cycle
                if (!isShouldDelegatePackageLoad(proxy, pkgName)) {
                    log.trace("The load of class {} should not be delegated to OSGI parent, so let's try to load with bundles", name);
                    return (Class<?>) this.loadClass.invoke(proxy, name, resolve);
                }
            } catch (ReflectiveOperationException e) {
                log.trace("Class {} is not found with {}", name, proxy);
            }
        }
        return null;
    }

    private boolean isShouldDelegatePackageLoad(T proxy, String pkgName) throws IllegalAccessException, InvocationTargetException {
        return (boolean) this.shouldDelegate.invoke(
                FieldUtils.readDeclaredField(proxy, "m_wiring", true),
                pkgName
        );
    }
}

I have kept it in case someone wants to develop this idea further.
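To experiment with the idea outside Felix, the same delegation order can be sketched with the JDK alone. This hypothetical `PreferringClassLoader` replaces the reflective `shouldBootDelegate` check with an explicit list of class-name prefixes. Only matching classes are sent to the preferred loader, which is one simple way to keep classes that the preferred loader would delegate back out of the loop.

```java
import java.util.List;

// Hypothetical JDK-only variant of the BundleProxyClassLoader idea (not from the article).
final class PreferringClassLoader extends ClassLoader {

    private final ClassLoader preferred;
    private final List<String> preferredPrefixes;

    // parent: the original parent; preferred: e.g. a bundle class loader;
    // preferredPrefixes: class-name prefixes that the preferred loader defines itself.
    PreferringClassLoader(ClassLoader parent, ClassLoader preferred, List<String> preferredPrefixes) {
        super(parent);
        this.preferred = preferred;
        this.preferredPrefixes = List.copyOf(preferredPrefixes);
    }

    @Override
    protected Class<?> loadClass(String name, boolean resolve) throws ClassNotFoundException {
        synchronized (getClassLoadingLock(name)) {
            if (isPreferred(name)) {
                try {
                    // Ask the preferred loader first, without initializing the class.
                    return Class.forName(name, false, preferred);
                } catch (ClassNotFoundException e) {
                    // Not found there: fall back to normal parent-first delegation below.
                }
            }
            return super.loadClass(name, resolve);
        }
    }

    // Only whitelisted names are routed to the preferred loader, so lookups of
    // unrelated classes (for example JDK classes) never bounce between the two loaders.
    private boolean isPreferred(String className) {
        for (String prefix : preferredPrefixes) {
            if (className.startsWith(prefix)) {
                return true;
            }
        }
        return false;
    }
}
```

The explicit whitelist trades flexibility for predictability: nothing outside the listed prefixes can change how the host application resolves its classes.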

The second way to instrument is Advice. This mechanism is much less well documented; in practice, examples can only be found in GitHub issues and StackOverflow answers.

But it is not all that bad

Credit where it is due to Rafael: every question and issue I saw comes with detailed explanations and examples, so figuring it out is not hard. I hope these efforts pay off and we see Byte Buddy in even more projects.

It differs from the first approach in that, by default, our advice methods are inlined into the code of the instrumented class. For us this means:

- no need to juggle ClassLoaders

- the original logic is preserved: we simply run our actions before and/or after the original code

It sounds perfect: we get a smart API that gives access to the original arguments, the result of the original code (including exceptions), and even the values produced by an advice that ran before the original code. But there is always a “but”, and inlining imposes some restrictions on the code being inlined:

- all inlined code must be contained in a single method

- the method must not call methods of classes that are not visible to the class it is inlined into, including anonymous classes (goodbye, lambdas!)

- exceptions are not supported: they must be thrown explicitly in the body of the method

I did not find these limitations in the Byte Buddy documentation.
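To make the exit-advice semantics concrete without Byte Buddy, here is a plain-Java model. It is only an approximation: Byte Buddy copies the advice bytecode into the target method rather than calling helper methods, and all names below are hypothetical. The model shows the order of events. The original body runs first; then the exit advice sees the argument, the returned value, and any throwable, and may substitute the return value, which is what `@Advice.Return(readOnly = false)` allows.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical plain-Java model of an exit advice; Byte Buddy would inline the
// advice body into the target method instead of calling methods like these.
final class ExitAdviceModel {

    // Stand-in for the original, untouched method body.
    static List<String> originalValidate(String summary) {
        List<String> errors = new ArrayList<>();
        if (summary == null || summary.isEmpty()) {
            errors.add("summary: required");
        }
        return errors;
    }

    // Stand-in for the @Advice.OnMethodExit body: it sees the argument, the returned
    // value and the throwable, and returns a (possibly replaced) result.
    static List<String> exitAdvice(String summary, List<String> returned, Throwable thrown) {
        try {
            if (thrown == null && summary != null && summary.length() > 10) {
                List<String> replaced = new ArrayList<>(returned);
                replaced.add("summary: too long");
                return replaced;
            }
        } catch (RuntimeException e) {
            // Extra logic must never break the service: log and keep the original result.
            System.err.println("extra validation failed: " + e);
        }
        return returned;
    }

    // How the instrumented method behaves: original body first, then the exit advice.
    static List<String> instrumentedValidate(String summary) {
        List<String> returned = null;
        RuntimeException thrown = null;
        try {
            returned = originalValidate(summary);
        } catch (RuntimeException e) {
            thrown = e;
        }
        returned = exitAdvice(summary, returned, thrown);
        if (thrown != null) {
            throw thrown;
        }
        return returned;
    }
}
```

For example, `instrumentedValidate("")` keeps the original error, while a summary longer than ten characters gets the extra error appended by the advice.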

Well, let's try to write our logic in the Advice style. As we remember, we want to keep the instrumentation to a minimum. That means abstracting away from specific validation checks: when a new check appears, it should be added automatically to the list of checks run on validateCreate / validateUpdate, without changing the instrumentation of the DefaultIssueService class.

In OSGi this is easy to do, but DefaultIssueService lives outside the OSGi framework, so OSGi tricks will not work here.

Unexpectedly, the JIRA API comes to our rescue. Each add-on is represented in JIRA by a Plugin object (a wrapper over a Bundle with a number of extra functions) that has a specific key, by which the plugin can be looked up.

We set the key in the add-on configuration, and the plugin API is loaded by the same class loader as DefaultIssueService. So nothing prevents us from looking up our plugin inside the advice and using it to load any class shipped with the plugin, for example our check aggregator.

After that, we can get an instance of this class through the standard com.atlassian.jira.component.ComponentAccessor#getOSGiComponentInstanceOfType.
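We go through a class loader and reflection because the advice is inlined into DefaultIssueService, whose class loader cannot see the aggregator type directly. The pattern itself can be sketched in plain Java. Here the hypothetical `Aggregator` is created with its no-arg constructor, whereas in JIRA the instance would come from getOSGiComponentInstanceOfType. Any failure leaves the original result untouched.

```java
import java.lang.reflect.Method;

// Hypothetical model of the advice's lookup: load a type by name through a given
// class loader and call it reflectively, never letting a failure escape.
final class ReflectiveHook {

    // Stand-in for the aggregator shipped inside the plugin.
    public static final class Aggregator {
        public String validate(String original) {
            return original + "+checked";
        }
    }

    static String applyIfPresent(ClassLoader pluginLoader, String className, String original) {
        try {
            Class<?> type = pluginLoader.loadClass(className);
            // In JIRA the instance would come from ComponentAccessor#getOSGiComponentInstanceOfType.
            Object instance = type.getDeclaredConstructor().newInstance();
            Method validate = type.getMethod("validate", String.class);
            Object result = validate.invoke(instance, original);
            return result instanceof String ? (String) result : original;
        } catch (ReflectiveOperationException | RuntimeException e) {
            // Missing plugin, class or method: keep the original result.
            return original;
        }
    }
}
```

Note that the class name must be the binary name (for a nested class, `Outer$Inner`), which is what `Class.getName()` returns.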

And no magic:

public class DefaultIssueServiceValidateCreateAdvice {
    @Advice.OnMethodExit(onThrowable = IllegalArgumentException.class)
    public static void intercept(
            @Advice.Return(readOnly = false) CreateValidationResult originalResult, // result of the original method; readOnly = false lets us replace it
            @Advice.Thrown Throwable throwable, // exception thrown by the original method, or null if it returned normally
            @Advice.Argument(0) ApplicationUser user,
            @Advice.Argument(1) IssueInputParameters issueInputParameters
    ) {
        try {
            if (throwable == null) {
                // current plugin key
                final Plugin plugin = ComponentAccessor.getPluginAccessor().getEnabledPlugin("org.jrx.jira.instrumentation.issue-validation");
                // related aggregator class
                final Class<?> issueValidatorClass = plugin != null
                        ? plugin.getClassLoader().loadClass("org.jrx.jira.instrumentation.validation.spi.issueservice.IssueServiceValidateCreateValidatorAggregator")
                        : null;
                // get the aggregator instance through the JIRA API
                final Object issueValidator = issueValidatorClass != null
                        ? ComponentAccessor.getOSGiComponentInstanceOfType(issueValidatorClass)
                        : null;
                if (issueValidator != null) {
                    final Method validate = issueValidator.getClass().getMethod("validate",
                            CreateValidationResult.class, ApplicationUser.class, IssueInputParameters.class);
                    if (validate != null) {
                        final CreateValidationResult validationResult = (CreateValidationResult) validate
                                .invoke(issueValidator, originalResult, user, issueInputParameters);
                        if (validationResult != null) {
                            originalResult = validationResult;
                        }
                    } else {
                        System.err.println("==**Warn: method validate is not found on aggregator " + "**==");
                    }
                }
            }
            // Nothing should break service
        } catch (Throwable e) {
            System.err.println("==**Warn: Exception on additional logic of validateCreate " + e + "**==");
        }
    }
}

DefaultIssueServiceValidateUpdateAdvice is analogous, differing only in class and method names. Now it is time to write the InstrumentationConsumer that applies our advice to the methods we need.

@Component
@ExportAsService
public class DefaultIssueServiceTransformer implements InstrumentationConsumer {
    private static final Logger log = LoggerFactory.getLogger(DefaultIssueServiceTransformer.class);
    private static final AgentBuilder.Listener listener = new LogTransformListener(log);
    private final String DEFAULT_ISSUE_SERVICE_CLASS_NAME = "com.atlassian.jira.bc.issue.DefaultIssueService";

    @Override
    public void applyInstrumentation(Instrumentation instrumentation) {
        new AgentBuilder.Default().disableClassFormatChanges()
                .with(new AgentBuilder.Listener.Filtering(
                        new StringMatcher(DEFAULT_ISSUE_SERVICE_CLASS_NAME, EQUALS_FULLY),
                        listener
                ))
                .with(AgentBuilder.TypeStrategy.Default.REDEFINE)
                .with(AgentBuilder.RedefinitionStrategy.REDEFINITION)
                .with(AgentBuilder.InitializationStrategy.NoOp.INSTANCE)
                .type(named(DEFAULT_ISSUE_SERVICE_CLASS_NAME))
                .transform((builder, typeDescription, classloader) -> builder
                        // transformation is idempotent!!! You can call it many times with same effect
                        // no way to add advice on advice if it applies to original class
                        // https://github.com/raphw/byte-buddy/issues/206
                        .visit(Advice.to(DefaultIssueServiceValidateCreateAdvice.class).on(named("validateCreate").and(ElementMatchers.isPublic())))
                        .visit(Advice.to(DefaultIssueServiceValidateUpdateAdvice.class).on(named("validateUpdate").and(ElementMatchers.isPublic()))))
                .installOn(instrumentation);
    }
}

Here is one nice bonus: applying advice is idempotent! There is no need to guard against applying the transformation twice when the add-on is reinstalled; the VM takes care of that for us.

All that remains is to write the aggregator. First, we define the validation API:

public interface IssueServiceValidateCreateValidator {
    @Nonnull
    CreateValidationResult validate(
            final @Nonnull CreateValidationResult originalResult,
            final ApplicationUser user,
            final IssueInputParameters issueInputParameters
    );
}

Next, at call time, we use standard OSGi tools to obtain all available validators and run them:

@Component
@ExportAsService(IssueServiceValidateCreateValidatorAggregator.class)
public class IssueServiceValidateCreateValidatorAggregator implements IssueServiceValidateCreateValidator {
    private static final Logger log = LoggerFactory.getLogger(IssueServiceValidateCreateValidatorAggregator.class);

    private final BundleContext bundleContext;

    @Autowired
    public IssueServiceValidateCreateValidatorAggregator(BundleContext bundleContext) {
        this.bundleContext = bundleContext;
    }

    @Nonnull
    @Override
    public IssueService.CreateValidationResult validate(@Nonnull final IssueService.CreateValidationResult originalResult,
                                                        final ApplicationUser user,
                                                        final IssueInputParameters issueInputParameters) {
        try {
            log.trace("Executing validate of IssueServiceValidateCreateValidatorAggregator");
            final Collection<ServiceReference<IssueServiceValidateCreateValidator>> serviceReferences =
                    bundleContext.getServiceReferences(IssueServiceValidateCreateValidator.class, null);
            log.debug("Found services: {}", serviceReferences);
            return applyValidations(originalResult, serviceReferences, user, issueInputParameters);
        } catch (InvalidSyntaxException e) {
            log.warn("Exception on getting IssueServiceValidateCreateValidator", e);
            return originalResult;
        }
    }

    private IssueService.CreateValidationResult applyValidations(@Nonnull IssueService.CreateValidationResult originalResult,
                                                                 Collection<ServiceReference<IssueServiceValidateCreateValidator>> serviceReferences,
                                                                 ApplicationUser user,
                                                                 IssueInputParameters issueInputParameters) {
        IssueService.CreateValidationResult result = originalResult;
        for (ServiceReference<IssueServiceValidateCreateValidator> serviceReference : serviceReferences) {
            final IssueServiceValidateCreateValidator service = bundleContext.getService(serviceReference);
            if (service != null) {
                result = service.validate(result, user, issueInputParameters);
            } else {
                log.debug("Failed to get service from {}", serviceReference);
            }
        }
        return result;
    }
}

Everything is ready: we build the add-on and install it.
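The aggregator is a fold: each validator receives the result produced by the previous one. Below is a dependency-free sketch of that loop, together with the kind of cross-field check that motivated the whole exercise (the allowed value of one field depends on another). `ValidationChain`, `Result`, and the field names are hypothetical simplifications, not JIRA types.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;

// Hypothetical, dependency-free model of the aggregator loop; not JIRA API.
final class ValidationChain {

    // Stand-in for CreateValidationResult: an immutable list of error messages.
    static final class Result {
        final List<String> errors;

        Result(List<String> errors) {
            this.errors = Collections.unmodifiableList(new ArrayList<>(errors));
        }

        Result withError(String error) {
            List<String> copy = new ArrayList<>(errors);
            copy.add(error);
            return new Result(copy);
        }
    }

    // Same shape as IssueServiceValidateCreateValidator, with the issue fields as a Map.
    interface Validator {
        Result validate(Result original, Map<String, String> fields);
    }

    // Example cross-field check: whether one field may stay empty depends on another field.
    static final Validator BLOCKER_NEEDS_ASSIGNEE = (original, fields) ->
            "Blocker".equals(fields.get("priority")) && fields.get("assignee") == null
                    ? original.withError("assignee: required for Blocker issues")
                    : original;

    // Like applyValidations: each validator receives the result of the previous one.
    static Result applyAll(Result original, List<Validator> validators, Map<String, String> fields) {
        Result result = original;
        for (Validator validator : validators) {
            result = validator.validate(result, fields);
        }
        return result;
    }
}
```

Because every validator gets the accumulated result rather than the original one, errors from independent checks add up. Registering one more validator service is enough to extend the chain.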

Test validation

To test the approach, we implement the simplest possible validator:

@Component
@ExportAsService
public class TestIssueServiceCreateValidator implements IssueServiceValidateCreateValidator {
    @Nonnull
    @Override
    public IssueService.CreateValidationResult validate(@Nonnull IssueService.CreateValidationResult originalResult,
                                                        ApplicationUser user,
                                                        IssueInputParameters issueInputParameters) {
        originalResult.getErrorCollection().addError(IssueFieldConstants.ASSIGNEE, "This validation works", ErrorCollection.Reason.VALIDATION_FAILED);
        return originalResult;
    }
}

We try to create a new issue and voila!

Now we can uninstall and reinstall any of the add-ons we developed, and JIRA's behavior changes accordingly.

Conclusion

Thus, we have obtained a tool for dynamically extending an application's API, in this case JIRA's. Of course, thorough testing is required before using this approach in production, but in my opinion the solution is not without merit, and with proper refinement it can be used to solve “hopeless tasks”: working around long-standing third-party defects, extending APIs, and so on.

The full code of the project is available on GitHub; feel free to use it!

P.S. To keep the article simple, I did not describe how the project is built or the specifics of developing add-ons for JIRA; see here for that.

Source: https://habr.com/ru/post/316124/
