Auto-Deploying Django from GitLab

In this article I describe how to set up automatic deployment of a web application built on Django + uWSGI + PostgreSQL + Nginx from a repository hosted on GitLab.com. Everything described here also applies to a self-hosted GitLab installation. The reader is assumed to have experience building web applications with Django and administering Linux systems.

Deployment uses Fabric, Docker and docker-compose, and is carried out by GitLab's built-in continuous integration service, GitLab CI.

Automatic Deployment Mechanism

The deployment works as follows:

- When new commits are pushed to the repository, GitLab CI starts automatically.

- GitLab CI builds a Docker image containing the Django application, ready to run.

- GitLab CI then pushes the built image to the GitLab Container Registry. Note that the registry has the same privacy settings as the repository, i.e. for public repositories the registry is open to everyone.

- GitLab CI runs the unit tests.

- If the commits were pushed or merged into the main branch (master), then after a successful build and test run GitLab CI uses Fabric to deploy the built Docker image to the server at the IP address we specify.

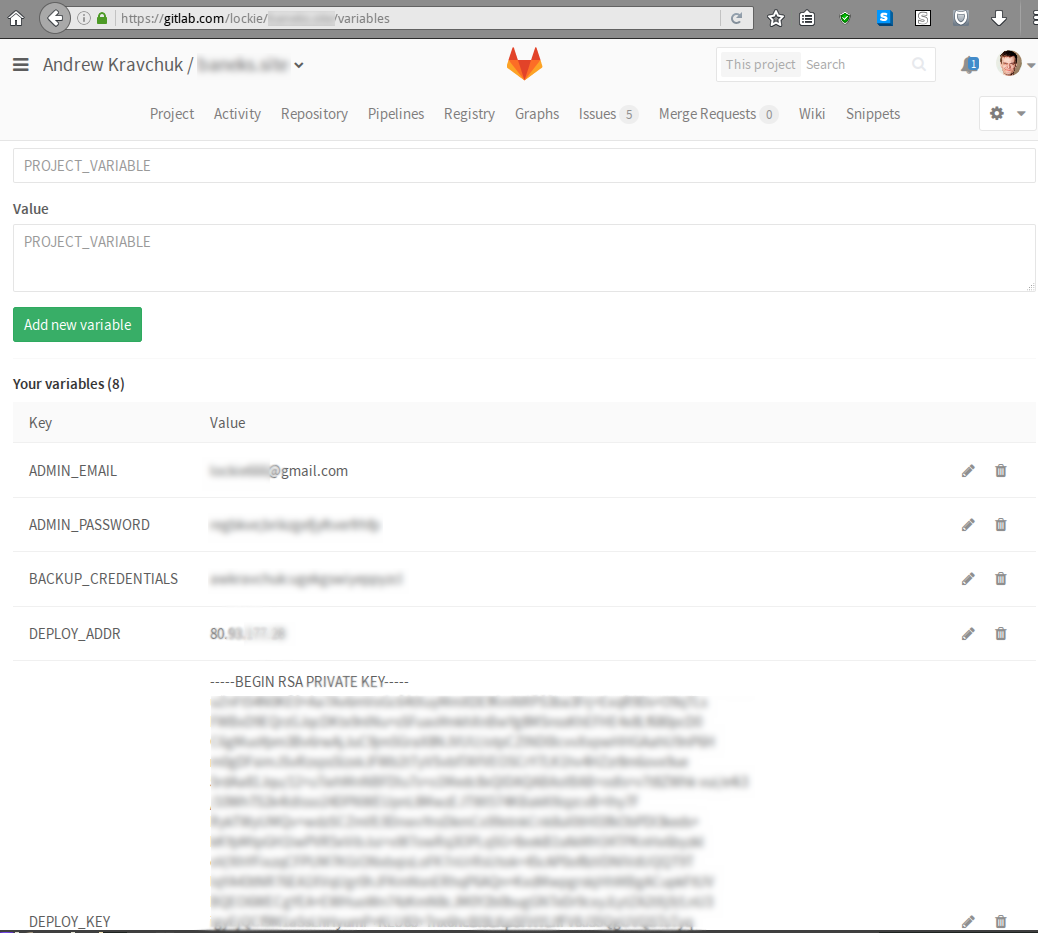
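The flow above can be summarized as a small Python model: stages run in order, a failed stage stops the pipeline, and the deploy stage applies only to master. This is an illustration of the logic only; GitLab CI itself derives this behavior from .gitlab-ci.yml, and the function names plan_stages and run_pipeline are hypothetical.

```python
from typing import Callable, Dict, List

STAGES = ('build', 'test', 'deploy')


def plan_stages(branch: str) -> List[str]:
    """Stages that would run for a push to the given branch."""
    # deploy is restricted to master, like `only: - master` in GitLab CI
    return [s for s in STAGES if s != 'deploy' or branch == 'master']


def run_pipeline(branch: str, jobs: Dict[str, Callable[[], bool]]) -> List[str]:
    """Run the planned stages in order and stop at the first failure.

    Returns the names of the stages that succeeded.
    """
    done: List[str] = []
    for stage in plan_stages(branch):
        if not jobs[stage]():
            break  # a failed build or test blocks everything after it
        done.append(stage)
    return done
```

For example, a failing test stage on master means run_pipeline returns only ['build'], so nothing is deployed.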

Private data required for deployment (private keys, Django's SECRET_KEY, third-party service tokens, etc.) should definitely not be stored in plain text in the repository, so we store it using the GitLab Secret Variables mechanism.

With this approach, confidential data is available in plain text in only two places: in the project settings on GitLab.com and on the server being deployed to. On the server it is stored in environment variables (that is, it is visible to anyone who can log in to the server via SSH).

The following variables are required for the deployment mechanism to work:

- DEPLOY_KEY: the private part of the SSH key used to log in to the server;

- DEPLOY_ADDR: the server's IP address;

- SECRET_KEY: the corresponding Django setting.
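A minimal sketch of a pre-flight check for these variables, assuming they reach the CI job as ordinary environment variables (which is how GitLab exposes secret variables). The helper missing_variables is hypothetical, and unlike the fabfile's check later in the article it also treats an empty value as missing.

```python
import os
from typing import Iterable, List, Mapping, Optional

REQUIRED = ('DEPLOY_KEY', 'DEPLOY_ADDR', 'SECRET_KEY')


def missing_variables(names: Iterable[str],
                      env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the required variable names that are unset or empty."""
    # Default to the real process environment; a plain dict can be passed instead
    source = os.environ if env is None else env
    return [name for name in names if not source.get(name)]
```

A job could call missing_variables(REQUIRED) and stop with an error if the returned list is not empty.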

In addition, in the Django project's settings.py file, we define SECRET_KEY as follows:

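```python
import os
import sys

# Refuse to start if the secret key was not provided via the environment
SECRET_KEY = os.getenv('SECRET_KEY') or sys.exit('SECRET_KEY environment variable is not set.')
```

Step 1: Docker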

First of all, create a Dockerfile to run Django and uWSGI based on the lightweight Alpine Linux image:

```dockerfile
FROM python:3.5-alpine

RUN mkdir -p /usr/src/app
WORKDIR /usr/src/app

# Build tools go into the virtual package .build-deps so they can be removed at the end;
# the PostgreSQL runtime libraries and client are installed as regular packages.
# The uWSGI installation is retried until it succeeds, see https://git.io/v1ve3
RUN apk add --no-cache --virtual .build-deps gcc musl-dev linux-headers pkgconf \
        autoconf automake libtool make postgresql-dev postgresql-client openssl-dev && \
    apk add --no-cache postgresql-libs postgresql-client && \
    (while true; do pip --no-cache-dir install uwsgi==2.0.14 && break; done)

COPY requirements.txt /usr/src/app/
RUN pip install --no-cache-dir -r requirements.txt

COPY . /usr/src/app
# SECRET_KEY=build only satisfies the check in settings.py during the build
RUN SECRET_KEY=build ./manage.py collectstatic --noinput && \
    ./manage.py makemessages && \
    apk del .build-deps
```

It is assumed that the web application's dependencies are listed in the requirements.txt file, as is customary in the Python world.
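The Dockerfile retries the uWSGI installation in an unbounded shell loop, so a persistent failure would keep the build looping forever. A bounded variant of the same retry idea, sketched in Python (the names retry and pip_install are hypothetical and are not part of the setup described here):

```python
import subprocess
import time
from typing import Callable


def retry(action: Callable[[], bool], attempts: int = 5, delay: float = 2.0) -> int:
    """Call action() until it returns True; return the number of attempts used.

    Raises RuntimeError if every attempt fails.
    """
    for attempt in range(1, attempts + 1):
        if action():
            return attempt
        if attempt < attempts:
            time.sleep(delay)  # pause before the next try
    raise RuntimeError('action failed after %d attempts' % attempts)


def pip_install(requirement: str) -> bool:
    """Run pip once for a single requirement; True if pip exited with status 0."""
    cmd = ['pip', '--no-cache-dir', 'install', requirement]
    return subprocess.run(cmd).returncode == 0
```

With this, retry(lambda: pip_install('uwsgi==2.0.14')) gives up after five attempts instead of looping indefinitely.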

Step 2: docker-compose

Next, we need docker-compose to orchestrate the containers of our stack.

In theory, one could do without it, but then the GitLab CI configuration file would become bloated and unreadable (for an example, see here).

So, in the root directory of the repository, create a docker-compose.yml file with the following content:

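```yaml
version: '2'
services:
  web:
    # TODO: replace username and project with your GitLab namespace and project
    image: registry.gitlab.com/username/project:${CI_BUILD_REF_NAME}
    build: ./web
    environment:
      # Passed through from the environment docker-compose runs in
      - SECRET_KEY
    command: uwsgi /usr/src/app/uwsgi.ini
    volumes:
      - static:/srv/static
    restart: unless-stopped
  test:
    # TODO: replace username and project with your GitLab namespace and project
    image: registry.gitlab.com/username/project:${CI_BUILD_REF_NAME}
    command: python manage.py test
    environment:
      # settings.py exits without SECRET_KEY, so the tests need it too
      - SECRET_KEY
    depends_on:
      # The test runner creates its test database on the postgres service
      - postgres
    restart: "no"
  postgres:
    image: postgres:9.6
    environment:
      # User and database created on first start (NAME in the Django settings)
      - POSTGRES_USER=root
      - POSTGRES_DB=database
    volumes:
      # Keep the database files in a named volume so they survive container re-creation
      - data:/var/lib/postgresql/data
    restart: unless-stopped
  nginx:
    image: nginx:mainline
    ports:
      # Publish HTTP and HTTPS on the host
      - "80:80"
      - "443:443"
    volumes:
      # Nginx configuration from the repository, mounted read-only
      - ./nginx:/etc/nginx:ro
      # Static files collected in the web image
      - static:/srv/static:ro
    depends_on:
      - web
    restart: unless-stopped

# Named volumes used above must be declared at the top level
volumes:
  static:
  data:
```

This file corresponds to the following project structure: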

```
repository
├── nginx
│   ├── mime.types
│   ├── nginx.conf
│   ├── ssl_params
│   └── uwsgi_params
├── web
│   ├── project
│   │   ├── __init__.py
│   │   ├── settings.py
│   │   ├── urls.py
│   │   └── wsgi.py
│   ├── app
│   │   ├── migrations
│   │   │   └── ...
│   │   ├── __init__.py
│   │   ├── admin.py
│   │   ├── apps.py
│   │   ├── models.py
│   │   ├── tests.py
│   │   └── views.py
│   ├── Dockerfile
│   ├── manage.py
│   ├── requirements.txt
│   └── uwsgi.ini
├── docker-compose.yml
└── fabfile.py
```

Now the entire stack starts with a single docker-compose up. Inside the stack, containers reach each other by DNS names matching the service names in docker-compose.yml, and all their open ports are accessible to one another because they share the same internal Docker network. So the relevant part of the Nginx config looks like this:

```nginx
upstream django {
    server web:8000;
}
```

...and the Django database settings are as follows:

```python
DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.postgresql',
        'NAME': 'database',
        'HOST': 'postgres',
    }
}
```

Thanks to restart: unless-stopped, all containers in the stack are restarted automatically with their original parameters when the server reboots, so no additional actions are needed after a restart.
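Because services reach each other by name, a container can also check that a dependency such as postgres is actually accepting connections: depends_on only orders container startup and does not wait for the service inside to become ready. A minimal sketch of such a wait loop in Python (wait_for_port is a hypothetical helper, not part of the setup above):

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0,
                  interval: float = 1.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Name not resolvable yet, connection refused or timed out: retry until the deadline
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Inside the stack, wait_for_port('postgres', 5432) would block until PostgreSQL's default port on the postgres service accepts TCP connections or the timeout expires.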

Step 3: GitLab CI

Create a .gitlab-ci.yml file in the root of the repository with instructions for GitLab CI:

```yaml
# Jobs run in the docker image and use a Docker-in-Docker service.
image: docker:latest
services:
  - docker:dind

# Pipeline stages, executed in this order:
# - build the Docker image,
# - run the Django tests,
# - deploy to the server.
stages:
  - build
  - test
  - deploy

# Commands run before every job that does not define its own before_script,
# so each job can use docker-compose and the registry.
before_script:
  # Install pip
  - apk add --no-cache py-pip
  # Install docker-compose
  - pip install docker-compose==1.9.0
  # Log in to the GitLab Docker registry
  - docker login -u gitlab-ci-token -p $CI_BUILD_TOKEN $CI_REGISTRY

# Build the Docker image
build:
  stage: build
  script:
    # Build the images
    - docker-compose build
    # Push them to the registry
    - docker-compose push

# Run the tests
test:
  stage: test
  script:
    # Pull the image built in the previous stage from the registry
    - docker-compose pull test
    # Run the tests in a one-off container
    - docker-compose run test

# Deploy
deploy:
  stage: deploy
  # Deploy only from master
  only:
    - master
  # Overrides the global before_script: this job needs Fabric, not docker-compose
  before_script:
    # Fabric needs an SSH client, bash and rsync
    - apk add --no-cache openssh-client py-pip py-crypto bash rsync
    # Install Fabric
    - pip install fabric==1.12.0
    # Start ssh-agent and load the deploy key (bash provides the <(...) substitution)
    - eval $(ssh-agent -s)
    - bash -c 'ssh-add <(echo "$DEPLOY_KEY")'
    # Disable host key checking so ssh does not prompt about the unknown server key
    - mkdir -p ~/.ssh
    - echo -e "Host *\n\tStrictHostKeyChecking no\n\n" > ~/.ssh/config
  script:
    - fab -H $DEPLOY_ADDR deploy
```

Note that the docker:latest image our GitLab CI jobs run in is also based on Alpine Linux, which brings some difficulties: there is no bash out of the box, the package manager (apk) and the C standard library (musl libc) are unusual, and so on. These difficulties are offset by how lightweight Alpine-based images are; for example, the official python:3.5.2-alpine image weighs only 27.6 MB.
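One pitfall with tagging images by ${CI_BUILD_REF_NAME}: a branch name such as feature/login is not a valid Docker tag, since tags may contain only letters, digits, underscores, periods and hyphens, may not start with a period or hyphen, and are at most 128 characters long. A sketch of deriving a safe tag from the branch name (image_ref is a hypothetical helper; later GitLab versions also predefine a CI_COMMIT_REF_SLUG variable for a similar purpose):

```python
import re

# Placeholder repository path, as in docker-compose.yml
DEFAULT_REPO = 'registry.gitlab.com/username/project'


def image_ref(ref_name: str, repo: str = DEFAULT_REPO) -> str:
    """Build repo:tag from a branch name, replacing characters Docker tags do not allow."""
    # Allowed tag characters are [A-Za-z0-9_.-]; everything else becomes '-'
    tag = re.sub(r'[^A-Za-z0-9_.-]', '-', ref_name)
    # A tag must start with a letter, digit or underscore
    if not tag or tag[0] in '.-':
        tag = '_' + tag
    # and be at most 128 characters long
    return '%s:%s' % (repo, tag[:128])
```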

Step 4: Fabric

To roll out the application to the server, create a fabfile.py file in the root directory of the repository containing at least the following:

```python
#!/usr/bin/env python2
# Fabric 1.x deployment script (Fabric 1 runs on Python 2)
import os

from fabric.api import hide, env, settings, abort, run, cd, shell_env
from fabric.colors import magenta, red
from fabric.contrib.files import append
from fabric.contrib.project import rsync_project

env.user = 'root'
env.abort_on_prompts = True

# TODO: adjust these settings to your project
PATH = '/srv/mywebapp'
ENV_FILE = '/etc/profile.d/variables.sh'
VARIABLES = ('SECRET_KEY', )


def deploy():
    def rsync():
        # Copy the repository to the server, skipping local and sensitive files
        exclusions = ('.git*', '.env', '*.sock*', '*.lock', '*.pyc', '*cache*',
                      '*.log', 'log/', 'id_rsa*', 'maintenance')
        rsync_project(PATH, './', exclude=exclusions, delete=True)

    def docker_compose(command):
        with cd(PATH):
            with shell_env(CI_BUILD_REF_NAME=os.getenv('CI_BUILD_REF_NAME', 'master')):
                # Pipe through tee so docker-compose does not write to a TTY;
                # pipefail keeps its exit status. See https://git.io/vXH8a
                run('set -o pipefail; docker-compose %s | tee' % command)

    # Make sure all required variables are set
    variables_set = True
    for var in VARIABLES + ('CI_BUILD_TOKEN', ):
        if os.getenv(var) is None:
            variables_set = False
            print(red('ERROR: environment variable ' + var + ' is not set.'))
    if not variables_set:
        abort('Missing required parameters')

    # Store the variables on the server. Each Fabric run() starts a new login
    # shell, which sources /etc/profile.d, so they apply from the next call.
    # See http://stackoverflow.com/q/38024726/1336774
    with hide('commands'):
        run('rm -f "%s"' % ENV_FILE)
        append(ENV_FILE, ['export %s="%s"' % (var, val) for var, val in zip(
            VARIABLES, map(os.getenv, VARIABLES))])

    # Log in to the GitLab registry
    run('docker login -u %s -p %s %s' % (os.getenv('REGISTRY_USER', 'gitlab-ci-token'),
                                          os.getenv('CI_BUILD_TOKEN'),
                                          os.getenv('CI_REGISTRY', 'registry.gitlab.com')))

    # First deployment: the stack is not running yet, so bring it up
    with settings(warn_only=True):
        with hide('warnings'):
            need_bootstrap = run('docker ps | grep -q web').return_code != 0
    if need_bootstrap:
        print(magenta('No previous installation found, bootstrapping'))
        rsync()
        docker_compose('up -d')

    # Switch on the maintenance page (see https://habr.ru/post/139968). Signal nginx
    # via docker-compose: the container is named <project>_nginx_1, not nginx_1
    run('touch %s/nginx/maintenance' % PATH)
    docker_compose('kill -s HUP nginx')

    rsync()
    docker_compose('pull')
    docker_compose('up -d')

    # Switch off the maintenance page
    run('rm -f %s/nginx/maintenance' % PATH)
    docker_compose('kill -s HUP nginx')
```

Strictly speaking, rsyncing the entire repository is optional: docker-compose.yml and the contents of the nginx directory would be enough to run the stack.
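A caveat about how the fabfile writes ENV_FILE: each line is built as export NAME="value", so a value containing $, a backquote or a double quote would be expanded or break the script when /etc/profile.d is sourced, and Django's generated secret keys can contain $. A sketch of safer quoting with shlex.quote (Python 3; under Fabric 1, which runs on Python 2, pipes.quote plays the same role). The helper export_lines is hypothetical:

```python
import shlex
from typing import Iterable, List, Mapping


def export_lines(names: Iterable[str], env: Mapping[str, str]) -> List[str]:
    """Render one `export NAME=value` line per name, quoting values for a POSIX shell."""
    # shlex.quote wraps unsafe values in single quotes, so $ and " are taken literally
    return ['export %s=%s' % (name, shlex.quote(env[name])) for name in names]
```

In the fabfile this would replace the list comprehension passed to append(), e.g. append(ENV_FILE, export_lines(VARIABLES, os.environ)).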

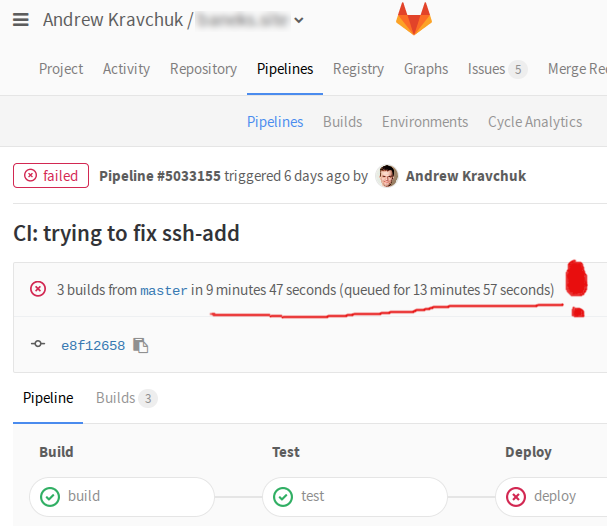

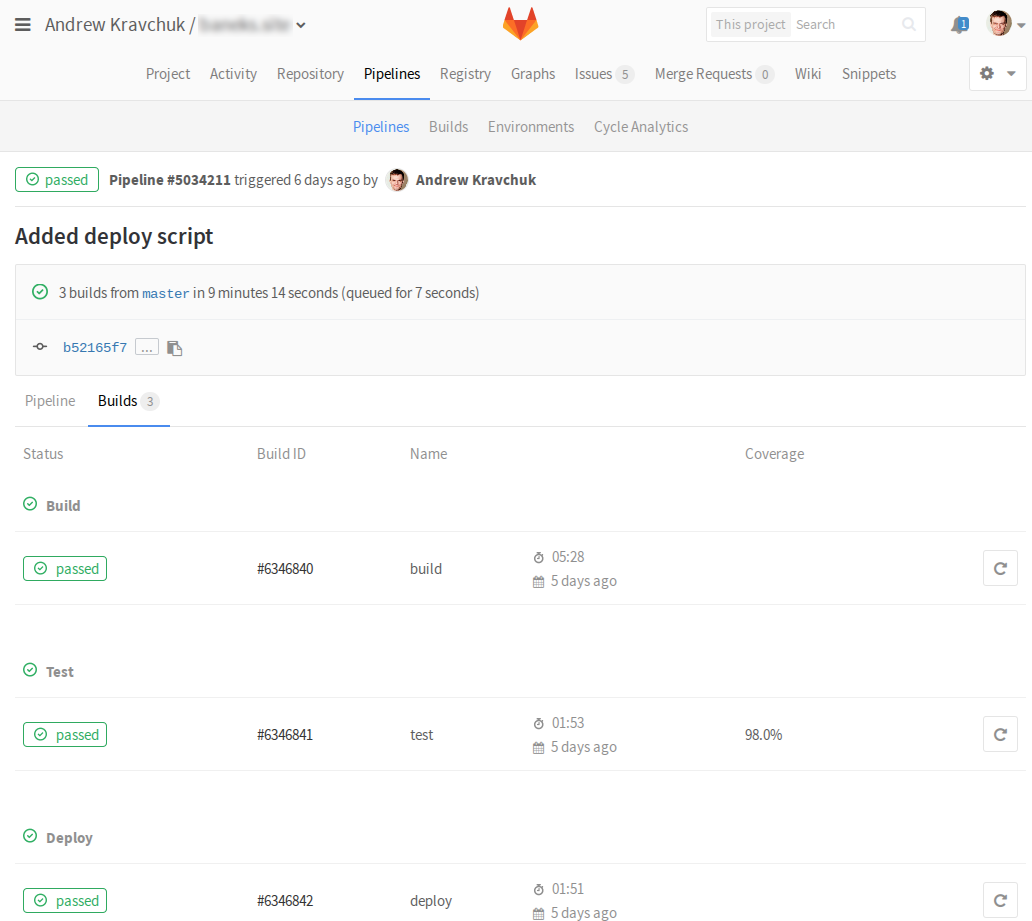

The application source is kept on the server in case you need to make urgent changes directly on it. On free GitLab.com accounts, CI runs on relatively weak virtualized hardware, so the build, tests and rollout usually take 5-10 minutes to complete.

(And sometimes the jobs also sit in the queue for ages.)

However, there are cases when every second counts, and for those we leave a loophole in the form of the complete application source. To apply changes made directly on the server, go to /srv/mywebapp and run:

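```sh
# docker-compose.yml tags images with ${CI_BUILD_REF_NAME}; outside CI it must be set by hand
export CI_BUILD_REF_NAME=master
docker-compose build
docker-compose up -d
```

Conclusion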

Thus, we have set up continuous integration and deployment of a web application using GitLab.

Now every change goes through a battery of automated tests (which, of course, still have to be written), and changes to the main branch are automatically deployed to the production server with near-zero downtime.

The following topics were left out of the article:

- setting up Nginx log rotation;

- setting up PostgreSQL backups;

- setting up docker-gc to avoid the awkward "No space left on device" situation.

We leave them as an exercise for the inquisitive reader.


Source: https://habr.com/ru/post/316054/
