“I know it when I see it”: studying the accuracy of Google Cloud Vision using Tumblr and NSFW content

For small teams and technology startups, cloud services are often the only way to set up business processes and bring a product to market within a reasonable time. Large players such as Google, Microsoft, Amazon, and Yandex offer a wide range of products to everyone. And while the reliability of corporate email is not in question, machine learning services should sometimes be treated with caution.

This is especially true when you are trying to teach a machine to distinguish objects whose difference even a knowledgeable person cannot always describe clearly. How accurate are the algorithms behind the out-of-the-box solutions from large companies?

In 1964, US Supreme Court Justice Potter Stewart wrote:

I shall not today attempt further to define the kinds of material I understand to be embraced within that shorthand description ["hard-core pornography"], and perhaps I could never succeed in intelligibly doing so. But I know it when I see it.

The state machinery in our country periodically launches into an active fight against pornography in particular and erotica in general. We will not discuss the goals, substance, or prospects of that fight. We will only note that the question "NSFW or not NSFW?" is sometimes quite hard to answer.

The modest team at smmframe.com has long been interested in how reliable the APIs from the Corporation of Good are. To try out several technologies at once, we decided to check the quality of Google Cloud Vision on the recognition of erotic and pseudo-erotic images.

Technical implementation, or "Download photos of naked girls"

The first question is where to get a dataset. We need one that satisfies three requirements, plus one preference:

- all photos match one specific tag (for a quick start)

- neutral photos are present under this tag

- non-neutral photos are present under this tag

- preferably no outright porn or nudity; that would not be sporting

Our choice fell on Tumblr: it has plenty of suitable photos under the tag girl and a convenient API. Of course, it would be better to search for sexy girl, but Tumblr severely limits the output for NSFW tags.

Collecting data to assess the quality of the service is simple in itself. We register with Google Cloud Vision and check the pricing in the Explicit Content Detection line. Then we figure out the Tumblr API.
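As a rough illustration of the Tumblr side, here is a minimal sketch that builds a request URL for Tumblr's `/v2/tagged` endpoint and pulls photo URLs out of a decoded JSON answer. The helper names `tagged_url` and `photo_urls` are hypothetical, the response layout (`response` list, `photos`, `original_size`) is assumed from the public API description, and the actual HTTP call and API key handling are left out.

```python
from urllib.parse import urlencode

TUMBLR_TAGGED_URL = "https://api.tumblr.com/v2/tagged"


def tagged_url(tag, api_key, before=None, limit=20):
    """Build the URL for one page of posts under `tag` (hypothetical helper)."""
    params = {"tag": tag, "api_key": api_key, "limit": limit}
    if before is not None:
        # `before` is a timestamp used to page backwards through older posts.
        params["before"] = before
    return TUMBLR_TAGGED_URL + "?" + urlencode(params)


def photo_urls(payload):
    """Collect original-size photo URLs from a decoded JSON answer.

    Assumes the layout {"response": [{"photos": [{"original_size": {"url": ...}}]}]};
    posts without photos are skipped.
    """
    urls = []
    for post in payload.get("response", []):
        for photo in post.get("photos", []):
            url = photo.get("original_size", {}).get("url")
            if url:
                urls.append(url)
    return urls
```

The script would call `tagged_url("girl", key)` on each cron run, fetch the page, and feed the decoded JSON to `photo_urls`.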

We read the documentation and write a script that runs from cron, downloads photos, and passes them to Google. The service answers with an estimate of how likely the picture is to contain erotica, on a scale from 1 to 5. We parse the answer and save it to a file for later.
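The Google side of such a script can be sketched roughly as follows. This is not the authors' script: the helper names are hypothetical, the mapping of the five likelihood labels onto the numbers 1 to 5 is our assumption about what the scale means, and authentication and the HTTP POST itself are omitted. The request and response shapes follow the Vision REST method `images:annotate` with the `SAFE_SEARCH_DETECTION` feature.

```python
import base64
import json

ANNOTATE_URL = "https://vision.googleapis.com/v1/images:annotate"

# Assumed mapping of the API's likelihood labels onto a 1-5 scale.
LIKELIHOOD_SCORE = {
    "VERY_UNLIKELY": 1,
    "UNLIKELY": 2,
    "POSSIBLE": 3,
    "LIKELY": 4,
    "VERY_LIKELY": 5,
}


def build_request(image_bytes):
    """Build the JSON body for one image with explicit-content detection."""
    return {"requests": [{
        "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
        "features": [{"type": "SAFE_SEARCH_DETECTION"}],
    }]}


def adult_score(response):
    """Return 1-5 for the `adult` likelihood, or None on an error or unknown label."""
    first = response["responses"][0]
    if "error" in first:
        return None
    likelihood = first.get("safeSearchAnnotation", {}).get("adult")
    return LIKELIHOOD_SCORE.get(likelihood)


def append_result(path, photo_url, score):
    """Append one result as a JSON line so later runs can add to the same file."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps({"url": photo_url, "score": score}) + "\n")
```

Returning `None` for failed requests keeps them distinguishable from real ratings when the file is analyzed later.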

The longest and most tedious stage remains: manually adding a rating from 1 to 5 to every photo in the dataset. To make viewing the images convenient, we made a site: labs.smmframe.com. The brave man who volunteered to view all the photos noted two things: a lot of repeated photos and a lot of Emma Watson.
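The article does not say how repeated photos were removed. One simple possibility, shown here purely as an assumption, is to drop byte-identical files by hashing their contents; the function name `dedupe` is hypothetical.

```python
import hashlib


def dedupe(images):
    """Keep the first name for each distinct content.

    `images` is an iterable of (name, raw_bytes) pairs. Only byte-identical
    files count as duplicates here.
    """
    seen = set()
    unique = []
    for name, data in images:
        digest = hashlib.sha256(data).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(name)
    return unique
```

A reposted photo that was resized or re-encoded has different bytes and would survive this filter; catching those would need some form of perceptual comparison.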

Oddities, or feeling like Milonov

There are plenty of oddities in the Vision API results. We should note right away that the service works very well on obvious, clear-cut cases.

Safe:

Unsafe: (link to the picture itself here.) In the meantime, just a cat.

Almost unsafe:

Note that the girls tag we chose quite often includes images of manicure sets, flowers, or the typical contents of a woman's handbag. All of this is classified as Very Unlikely, which is correct. Naked girls are detected as Likely, which is also correct.

Are there flatly incorrect ratings of absolutely safe content? Of course! But these cases are hard to describe clearly.

The difficulties of working with girls in underwear, or the very thin line of what is permitted

In retrospect, the reason this article exists is this image, which happened to appear on a monitor in a club. Let's say right away: the two lovely ladies do not give Google any bad associations, as the Unlikely rating shows.

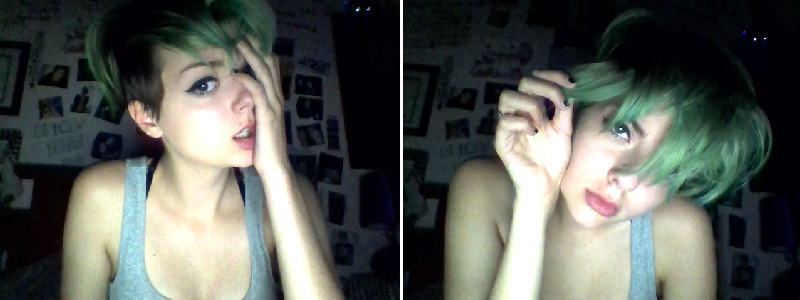

That is strange, to say the least, because three other pictures with much more decent content were rated as close to NSFW:

From left to right: Unlikely, Likely, Likely

Another very strange result is Unlikely being assigned to photos of clothed girls:

What is Explicit Content?

Here, for example, is a cute picture of a severed head with horns and a fairly realistically drawn anatomy of the neck.

The question immediately arises: why did the filter let this through under the girls tag? Consulting a dictionary, you learn that the concept of Explicit Content is not limited to obscene material of a sexual nature. It also includes pronounced aggression, foul language, promotion of bad habits, blood, dismemberment, and much else that particularly impressionable people should not see.

So in this case Google Cloud Vision was more wrong than right, because the picture should not be shown to children.

GIFs

Here is a more interesting example: a GIF with iridescent colors, a cat, and a bare chest. We do not know exactly how GIF files are analyzed, but to a person this picture is obviously obscene. The far-from-standard skin color and the atypical angle probably let the picture fool the filters: the rating is Very Unlikely.

Interestingly, some apparently decent and well-behaved things can look piquant in motion. Compare a single frame from a GIF (pic below) with the GIF itself.

Difficulties of Augmented Reality

While we are on the subject of pictures, consider this example. The two images were taken in the same well-known application, yet only the first is rated as safe, while the second is rated Likely.

The poor guy is recognized as NSFW.

The girl is just having fun.

Why is hard to guess, but the Cloud Vision API has an unhealthy habit of changing its classification after relatively small changes in people's facial expression or posture.

Here are some more examples:

Left - Unlikely, right - Likely.

Left - Unlikely, right - Likely. One theory is that the poor focus of the left image is to blame.

Left - Unlikely, right - Likely.

Left - Very Unlikely, right - Unlikely.

5 points, or why do we need gradation?

You might think we are quibbling. Indeed, it is not always possible to tell a girl in a swimsuit from one in a sports bra. Besides, there is no arguing about taste. So, even though Google behaves strangely with seemingly near-identical photos, let's show where it does well. Here and here. Everything is rated absolutely correctly; one can only marvel at the work done and at the ability to notice details that occupy such a small area of the image.

After the first few hundred photos in the dataset, we decided to stop assigning scores from 1 to 5 and switched to an NSFW / non-NSFW scale. The table below compares our assessment with Google's. Of the roughly 13k photos, 11,246 remained after removing duplicates.

| Google's rating | Number of photos | Manually marked as NSFW | Share marked as NSFW |
|---|---|---|---|
| VERY_LIKELY | 116 | 62 | 0.53448 |
| LIKELY | 176 | 47 | 0.26705 |
| POSSIBLE | 503 | 44 | 0.08748 |
| UNLIKELY | 3709 | 112 | 0.03020 |
| VERY_UNLIKELY | 6690 | 50 | 0.00747 |
| ERROR | 52 | 2 | 0.03846 |
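To make the table easier to read as a YES / NO classifier, the sketch below recomputes the share column and derives precision and recall for a chosen cut-off. The counts are copied from the table; the ERROR row is left out because it carries no rating, and treating our manual labels as ground truth is an assumption of this sketch. The function names are hypothetical.

```python
# (Google's rating, number of photos, number manually marked as NSFW),
# ordered from the strongest rating to the weakest.
ROWS = [
    ("VERY_LIKELY", 116, 62),
    ("LIKELY", 176, 47),
    ("POSSIBLE", 503, 44),
    ("UNLIKELY", 3709, 112),
    ("VERY_UNLIKELY", 6690, 50),
]


def share(rating):
    """Fraction of photos with this rating that were manually marked NSFW."""
    for name, total, nsfw in ROWS:
        if name == rating:
            return nsfw / total
    raise KeyError(rating)


def precision_recall(threshold):
    """Treat `threshold` and every stronger rating as a YES answer.

    Precision: share of flagged photos that were manually marked NSFW.
    Recall: share of all manually marked NSFW photos that got flagged.
    """
    names = [name for name, _, _ in ROWS]
    cut = names.index(threshold) + 1
    flagged = sum(total for _, total, _ in ROWS[:cut])
    hits = sum(nsfw for _, _, nsfw in ROWS[:cut])
    all_nsfw = sum(nsfw for _, _, nsfw in ROWS)
    return hits / flagged, hits / all_nsfw


if __name__ == "__main__":
    for name, _, _ in ROWS[:-1]:
        p, r = precision_recall(name)
        print(f"{name:>13} and above: precision {p:.3f}, recall {r:.3f}")
```

With the cut-off at POSSIBLE, for instance, 153 of the 795 flagged photos were manually marked NSFW, out of 315 marked photos that received a rating, so lowering the threshold trades precision for recall.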

Summary

The results of any classifier always include both false positives and false negatives. Our original task was to find possibly indecent images in a decent environment when analyzing the image feed from Instagram for a specific hashtag. This was meant to protect our clients from showing openly indecent scenes on a screen in their venue. In general, the API copes with the task if it is used at the YES / NO level. The finer gradation works well for finding NSFW content, and it is often better to filter out a few false positives than to let something through: better safe than sorry.

As for the question raised at the beginning of the article: the Corporation of Good has done an excellent job. The small class of tasks where false positives and false negatives need special attention is relatively easy to handle by adding extra checks, for example, estimating the area of exposed skin in an image.
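An exposed-skin estimate of the kind mentioned above could look roughly like this. The sketch uses a classic fixed RGB rule of thumb for skin tones under daylight; it is our assumption, not the check the team actually uses, and the function names are hypothetical. Pixels are expected as `(R, G, B)` tuples with values from 0 to 255, however the image was decoded.

```python
def is_skin(r, g, b):
    """Fixed-threshold RGB heuristic for skin under roughly uniform daylight."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def skin_fraction(pixels):
    """Share of pixels that the heuristic classifies as skin (0.0 for no pixels)."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    return sum(1 for r, g, b in pixels if is_skin(r, g, b)) / len(pixels)
```

A fixed color rule like this is crude: unusual lighting or tinted images shift skin tones out of range, so it is better suited as a second opinion next to the API rating than as a replacement for it.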

About us:

Smmframe.com is a service that lets you run a live feed of photos for a given hashtag. With our service, entertainment venues can advertise themselves on social networks. We have developed several advanced algorithms that find the most interesting photos, the ones sure to hook future visitors.


Source: https://habr.com/ru/post/315532/
