A bit about Windows VM disk performance in Proxmox VE. Results of ZFS and MDADM + LVM benchmarks

If anyone is interested, we recently tested read/write performance inside a Windows guest on a node running Proxmox 4.3.

The host system was installed on RAID 10 implemented in two different ways: ZFS and mdadm + LVM.

Tests were conducted on a Windows guest, as the performance of this particular OS was primarily of interest.

I have to admit that this is the second version of the article; the first one contained a fatal error:

ZFS was tested on local storage rather than on a zvol, because until the very end I believed that Proxmox did not support zvols.

Many thanks to winduzoid for noticing this misunderstanding.

I was prompted to write this article by the comments on a recent article by vasyakrg, "Installing PROXMOX 4.3 on Soft-RAID 10 GPT". I am publishing our recent results not to start a holy war, but simply to share them.

Input data:

Node:

CPU: Intel® Core (TM) i7-3820 CPU @ 3.60GHz

RAM: 20GB (1334 MHz)

HDD: 4 x 500 GiB (ST500NM0011, ST500NM0011, ST3500418AS, WDC WD5000AAKX-22ERMA0)

SSD: 250GiB (PLEXTOR PX-256M5Pro)

OS: Proxmox Virtual Environment 4.3-10

Virtual machine:

CPU: 8 (1 sockets, 8 cores)

RAM: 6.00 GiB

HDD: 60 GiB (virtio)

OS: Windows Server 2008 R2 Server Standard (full installation) SP1 [6.1 Build 7601] (x64)

All results are obtained using the CrystalDiskMark 5.2.0 x64 utility.

Each test ran 5 iterations with a 32 GiB (32768 MiB) test size.

No tweaks or changes other than those described in the article were made to the hypervisor or virtual machine configuration. In other words, we used a freshly installed Proxmox and a Windows virtual machine restored from backup.
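When reading the reports, it helps to know that CrystalDiskMark gives the 4 KiB random results both in MB/s and in IOPS, and the two are tied together by the block size. Below is a minimal Python sketch of that arithmetic, using the unit definitions printed in the reports themselves (MB/s = 1,000,000 bytes/s, KiB = 1024 bytes). The function names are made up for illustration.

```python
# Unit definitions taken from the CrystalDiskMark report header.
BYTES_PER_MB = 1_000_000   # 1 MB/s = 1,000,000 bytes/s
BLOCK_SIZE = 4 * 1024      # 4 KiB block used in the random tests


def mbps_to_iops(mb_per_s: float, block_size: int = BLOCK_SIZE) -> float:
    """Convert throughput in MB/s to I/O operations per second."""
    return mb_per_s * BYTES_PER_MB / block_size


def iops_to_mbps(iops: float, block_size: int = BLOCK_SIZE) -> float:
    """Convert I/O operations per second back to throughput in MB/s."""
    return iops * block_size / BYTES_PER_MB


if __name__ == "__main__":
    # 3.489 MB/s is the first "Random Read 4KiB (Q= 32,T= 1)" figure in the reports.
    print(round(mbps_to_iops(3.489), 1))  # 851.8, matching the reported IOPS
```

This is why the random-speed and IOPS charts carry the same information at different scales.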

Results:

Here are the raw CrystalDiskMark reports:

    -----------------------------------------------------------------------
    CrystalDiskMark 5.2.0 x64 (C) 2007-2016 hiyohiyo
    Crystal Dew World : http://crystalmark.info/
    -----------------------------------------------------------------------
    * MB/s = 1,000,000 bytes/s SATA/600 = 600,000,000 bytes/s
    * KB = 1000 bytes, KiB = 1024 bytes
    Sequential Read (Q= 32,T= 1) : 274.338 MB/s
    Sequential Write (Q= 32,T= 1) : 171.358 MB/s
    Random Read 4KiB (Q= 32,T= 1) : 3.489 MB/s 851.8 IOPS
    Random Write 4KiB (Q= 32,T= 1) : 0.927 MB/s 226.3 IOPS
    Sequential Read (T= 1) : 233.437 MB/s
    Sequential Write (T= 1) : 183.158 MB/s
    Random Read 4KiB (Q= 1,T= 1) : 0.522 MB/s 127.4 IOPS
    Random Write 4KiB (Q= 1,T= 1) : 2.499 MB/s 610.1 IOPS
    Test : 32768 MiB E: 0.1% (0.1/60.0 GiB) (x5) Interval=5 sec
    Date : 2016/11/08 15:21:41
    OS : Windows Server 2008 R2 Server Standard (full installation) SP1 6.1 Build 7601 (x64)

    -----------------------------------------------------------------------
    CrystalDiskMark 5.2.0 x64 (C) 2007-2016 hiyohiyo
    Crystal Dew World : http://crystalmark.info/
    -----------------------------------------------------------------------
    * MB/s = 1,000,000 bytes/s SATA/600 = 600,000,000 bytes/s
    * KB = 1000 bytes, KiB = 1024 bytes
    Sequential Read (Q= 32,T= 1) : 1084.752 MB/s
    Sequential Write (Q= 32,T= 1) : 503.291 MB/s
    Random Read 4KiB (Q= 32,T= 1) : 31.148 MB/s 7604.5 IOPS
    Random Write 4KiB (Q= 32,T= 1) : 203.832 MB/s 49763.7 IOPS
    Sequential Read (T= 1) : 1890.617 MB/s
    Sequential Write (T= 1) : 268.878 MB/s
    Random Read 4KiB (Q= 1,T= 1) : 33.369 MB/s 8146.7 IOPS
    Random Write 4KiB (Q= 1,T= 1) : 54.938 MB/s 13412.6 IOPS
    Test : 32768 MiB E: 0.1% (0.1/60.0 GiB) (x5) Interval=5 sec
    Date : 2016/11/08 14:55:15
    OS : Windows Server 2008 R2 Server Standard (full installation) SP1 6.1 Build 7601 (x64)

    -----------------------------------------------------------------------
    CrystalDiskMark 5.2.0 x64 (C) 2007-2016 hiyohiyo
    Crystal Dew World : http://crystalmark.info/
    -----------------------------------------------------------------------
    * MB/s = 1,000,000 bytes/s SATA/600 = 600,000,000 bytes/s
    * KB = 1000 bytes, KiB = 1024 bytes
    Sequential Read (Q= 32,T= 1) : 1428.912 MB/s
    Sequential Write (Q= 32,T= 1) : 281.715 MB/s
    Random Read 4KiB (Q= 32,T= 1) : 76.261 MB/s 18618.4 IOPS
    Random Write 4KiB (Q= 32,T= 1) : 64.809 MB/s 15822.5 IOPS
    Sequential Read (T= 1) : 1337.939 MB/s
    Sequential Write (T= 1) : 247.119 MB/s
    Random Read 4KiB (Q= 1,T= 1) : 27.926 MB/s 6817.9 IOPS
    Random Write 4KiB (Q= 1,T= 1) : 21.005 MB/s 5128.2 IOPS
    Test : 32768 MiB E: 0.1% (0.1/60.0 GiB) (x5) Interval=5 sec
    Date : 2016/11/16 14:42:05
    OS : Windows Server 2008 R2 Server Standard (full installation) SP1 6.1 Build 7601 (x64)

    -----------------------------------------------------------------------
    CrystalDiskMark 5.2.0 x64 (C) 2007-2016 hiyohiyo
    Crystal Dew World : http://crystalmark.info/
    -----------------------------------------------------------------------
    * MB/s = 1,000,000 bytes/s SATA/600 = 600,000,000 bytes/s
    * KB = 1000 bytes, KiB = 1024 bytes
    Sequential Read (Q= 32,T= 1) : 379.678 MB/s
    Sequential Write (Q= 32,T= 1) : 373.262 MB/s
    Random Read 4KiB (Q= 32,T= 1) : 12.409 MB/s 3029.5 IOPS
    Random Write 4KiB (Q= 32,T= 1) : 150.885 MB/s 36837.2 IOPS
    Sequential Read (T= 1) : 931.972 MB/s
    Sequential Write (T= 1) : 187.517 MB/s
    Random Read 4KiB (Q= 1,T= 1) : 14.106 MB/s 3443.8 IOPS
    Random Write 4KiB (Q= 1,T= 1) : 54.419 MB/s 13285.9 IOPS
    Test : 32768 MiB E: 0.1% (0.1/60.0 GiB) (x5) Interval=5 sec
    Date : 2016/11/16 14:21:47
    OS : Windows Server 2008 R2 Server Standard (full installation) SP1 6.1 Build 7601 (x64)

Later we added a caching SSD to our ZFS pool.

    -----------------------------------------------------------------------
    CrystalDiskMark 5.2.0 x64 (C) 2007-2016 hiyohiyo
    Crystal Dew World : http://crystalmark.info/
    -----------------------------------------------------------------------
    * MB/s = 1,000,000 bytes/s SATA/600 = 600,000,000 bytes/s
    * KB = 1000 bytes, KiB = 1024 bytes
    Sequential Read (Q= 32,T= 1) : 1518.768 MB/s
    Sequential Write (Q= 32,T= 1) : 312.825 MB/s
    Random Read 4KiB (Q= 32,T= 1) : 157.763 MB/s 38516.4 IOPS
    Random Write 4KiB (Q= 32,T= 1) : 96.962 MB/s 23672.4 IOPS
    Sequential Read (T= 1) : 1474.409 MB/s
    Sequential Write (T= 1) : 236.638 MB/s
    Random Read 4KiB (Q= 1,T= 1) : 28.693 MB/s 7005.1 IOPS
    Random Write 4KiB (Q= 1,T= 1) : 24.380 MB/s 5952.1 IOPS
    Test : 32768 MiB E: 0.1% (0.1/60.0 GiB) (x5) Interval=5 sec
    Date : 2016/11/16 17:07:45
    OS : Windows Server 2008 R2 Server Standard (full installation) SP1 6.1 Build 7601 (x64)

    -----------------------------------------------------------------------
    CrystalDiskMark 5.2.0 x64 (C) 2007-2016 hiyohiyo
    Crystal Dew World : http://crystalmark.info/
    -----------------------------------------------------------------------
    * MB/s = 1,000,000 bytes/s SATA/600 = 600,000,000 bytes/s
    * KB = 1000 bytes, KiB = 1024 bytes
    Sequential Read (Q= 32,T= 1) : 353.932 MB/s
    Sequential Write (Q= 32,T= 1) : 401.659 MB/s
    Random Read 4KiB (Q= 32,T= 1) : 30.015 MB/s 7327.9 IOPS
    Random Write 4KiB (Q= 32,T= 1) : 110.644 MB/s 27012.7 IOPS
    Sequential Read (T= 1) : 923.238 MB/s
    Sequential Write (T= 1) : 167.356 MB/s
    Random Read 4KiB (Q= 1,T= 1) : 31.210 MB/s 7619.6 IOPS
    Random Write 4KiB (Q= 1,T= 1) : 56.429 MB/s 13776.6 IOPS
    Test : 32768 MiB E: 0.1% (0.1/60.0 GiB) (x5) Interval=5 sec
    Date : 2016/11/16 17:24:12
    OS : Windows Server 2008 R2 Server Standard (full installation) SP1 6.1 Build 7601 (x64)

Out of curiosity, we also ran the tests on a single SSD:

    -----------------------------------------------------------------------
    CrystalDiskMark 5.2.0 x64 (C) 2007-2016 hiyohiyo
    Crystal Dew World : http://crystalmark.info/
    -----------------------------------------------------------------------
    * MB/s = 1,000,000 bytes/s SATA/600 = 600,000,000 bytes/s
    * KB = 1000 bytes, KiB = 1024 bytes
    Sequential Read (Q= 32,T= 1) : 526.147 MB/s
    Sequential Write (Q= 32,T= 1) : 361.292 MB/s
    Random Read 4KiB (Q= 32,T= 1) : 189.502 MB/s 46265.1 IOPS
    Random Write 4KiB (Q= 32,T= 1) : 78.780 MB/s 19233.4 IOPS
    Sequential Read (T= 1) : 456.598 MB/s
    Sequential Write (T= 1) : 368.912 MB/s
    Random Read 4KiB (Q= 1,T= 1) : 18.632 MB/s 4548.8 IOPS
    Random Write 4KiB (Q= 1,T= 1) : 32.528 MB/s 7941.4 IOPS
    Test : 32768 MiB F: 0.1% (0.1/60.0 GiB) (x5) Interval=5 sec
    Date : 2016/11/09 12:56:31
    OS : Windows Server 2008 R2 Server Standard (full installation) SP1 6.1 Build 7601 (x64)

    -----------------------------------------------------------------------
    CrystalDiskMark 5.2.0 x64 (C) 2007-2016 hiyohiyo
    Crystal Dew World : http://crystalmark.info/
    -----------------------------------------------------------------------
    * MB/s = 1,000,000 bytes/s SATA/600 = 600,000,000 bytes/s
    * KB = 1000 bytes, KiB = 1024 bytes
    Sequential Read (Q= 32,T= 1) : 1587.672 MB/s
    Sequential Write (Q= 32,T= 1) : 524.242 MB/s
    Random Read 4KiB (Q= 32,T= 1) : 248.953 MB/s 60779.5 IOPS
    Random Write 4KiB (Q= 32,T= 1) : 320.532 MB/s 78254.9 IOPS
    Sequential Read (T= 1) : 2481.313 MB/s
    Sequential Write (T= 1) : 825.351 MB/s
    Random Read 4KiB (Q= 1,T= 1) : 58.060 MB/s 14174.8 IOPS
    Random Write 4KiB (Q= 1,T= 1) : 59.725 MB/s 14581.3 IOPS
    Test : 32768 MiB F: 0.1% (0.1/60.0 GiB) (x5) Interval=5 sec
    Date : 2016/11/09 13:28:20
    OS : Windows Server 2008 R2 Server Standard (full installation) SP1 6.1 Build 7601 (x64)

Charts:

For clarity, I decided to draw a few graphs.

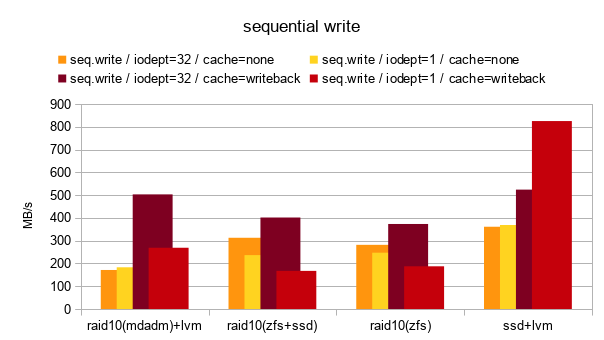

Sequential read and write speed:

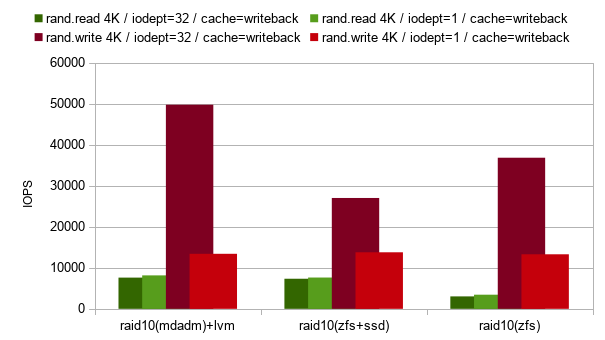

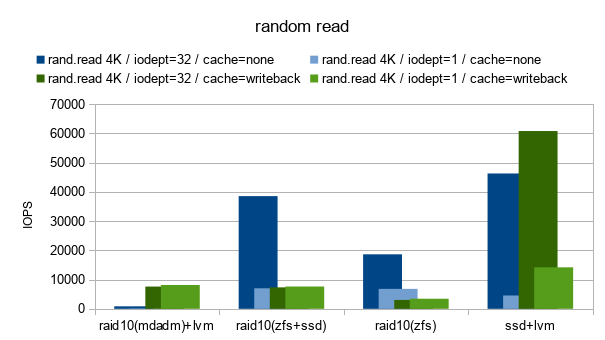

Random read and write speed:

The number of IOPS for random reading and writing:
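If you want to build similar charts from your own runs, the numbers can be pulled out of a CrystalDiskMark text report with a short script. The following is only a sketch in Python: the regular expression is written against the report layout shown in this article (other CrystalDiskMark versions may format lines differently), and `parse_report` is a hypothetical helper name. It expects the text of a single report.

```python
import re
from typing import Dict, Optional, Tuple

# Matches lines such as:
#   Sequential Read (Q= 32,T= 1) : 274.338 MB/s
#   Random Read 4KiB (Q= 32,T= 1) : 3.489 MB/s 851.8 IOPS
RESULT_RE = re.compile(
    r"(Sequential|Random) (Read|Write)(?: 4KiB)? "
    r"\(((?:Q=\s*\d+,)?T=\s*\d+)\)\s*:\s*"
    r"([\d.]+) MB/s(?:\s+\[?\s*([\d.]+) IOPS\]?)?"
)


def parse_report(text: str) -> Dict[str, Tuple[float, Optional[float]]]:
    """Return {test name: (MB/s, IOPS or None)} for one report."""
    results: Dict[str, Tuple[float, Optional[float]]] = {}
    for match in RESULT_RE.finditer(text):
        access, operation, queue, mbps, iops = match.groups()
        name = f"{access} {operation} ({queue.replace(' ', '')})"
        results[name] = (float(mbps), float(iops) if iops else None)
    return results


# A fragment of the first report in this article, used as sample input.
SAMPLE = (
    "Sequential Read (Q= 32,T= 1) : 274.338 MB/s "
    "Sequential Write (Q= 32,T= 1) : 171.358 MB/s "
    "Random Read 4KiB (Q= 32,T= 1) : 3.489 MB/s 851.8 IOPS "
    "Sequential Read (T= 1) : 233.437 MB/s"
)

if __name__ == "__main__":
    for name, (mbps, iops) in parse_report(SAMPLE).items():
        print(name, mbps, iops)
```

The resulting dictionary can then be fed to any plotting tool of your choice.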

Findings:

Although the results were rather unusual, and sometimes even strange, you can see that RAID 10 built on ZFS with the cache=none disk option performed better than RAID 10 built on mdadm + LVM, for both reading and writing.

However, with the cache=writeback option, RAID 10 built on mdadm + LVM pulls noticeably ahead.

I have not yet been able to work out how much cache=writeback increases the risk of data loss. However, a post on the official Proxmox forum says that this concern is outdated with newer kernel and QEMU versions, and that writeback is in any case the recommended setting for disks hosted on ZFS:

newer kernels and newer version are in place now. for zfs, cache = writeback is the recommended setting.

In addition, IlyaEvseev wrote in his article that the writeback cache gives good results for Windows virtual machines:

According to a subjective assessment, writeback is optimal for Windows VMs, none is for Linux VMs and FreeBSD VMs.
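For reference, the cache mode is a per-disk option in the VM configuration and can be changed with Proxmox's `qm set` command. The Python sketch below only builds and prints that command; it does not run it. The VM ID `100` and the volume name `local-zfs:vm-100-disk-1` are placeholders, not values from our setup, and the helper name is invented. Check the existing disk line in your VM config before applying anything like this.

```python
import shlex
from typing import List

# Cache modes accepted for a Proxmox VM disk.
VALID_CACHE_MODES = {"none", "writethrough", "writeback", "directsync", "unsafe"}


def build_qm_set_cache(vmid: int, disk: str, volume: str, cache: str) -> List[str]:
    """Build a `qm set` command that sets the cache mode of one VM disk."""
    if cache not in VALID_CACHE_MODES:
        raise ValueError(f"unknown cache mode: {cache!r}")
    return ["qm", "set", str(vmid), f"--{disk}", f"{volume},cache={cache}"]


if __name__ == "__main__":
    # Placeholder VM ID and volume name; substitute your own.
    command = build_qm_set_cache(100, "virtio0", "local-zfs:vm-100-disk-1", "writeback")
    print(shlex.join(command))
```

The same setting is available in the web interface in the disk's edit dialog.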

That is probably all. If you have any suggestions for further improving disk performance in a guest machine, I will be happy to hear them.


Source: https://habr.com/ru/post/315334/
