In simple words: how machine learning works

Lately, every technology company has been talking about machine learning. They say it solves a great many problems that only people could solve before. But nobody explains how exactly it works. And some, frankly, go as far as calling machine learning artificial intelligence.

As usual, there is no magic here, all technologies alone. And once technology, it is easy to explain all this in human language, which we will now do. The task we will solve the real. And the algorithm will describe the real, falling under the definition of machine learning. The complexity of this algorithm is toy - but the conclusions it allows to make the real ones.

The text that real people write looks like this:

Slaberda looks like this:

')

Our task is to develop an algorithm for machine learning, which would distinguish one from the other. And since we are talking about this in relation to anti-virus topics, we will call the meaningful text “pure”, and the rubbish - “malicious”. This is not just some kind of thought experiment, a similar task is actually solved when analyzing real files in a real antivirus.

For a person, the task seems trivial, because you can immediately see where it is clean and where it is malicious, but formalizing the difference or, moreover, explaining it to a computer is already more difficult. We use machine learning: first, we will give examples of the algorithm, he will “learn” them, and then he will correctly answer where that is.

Our algorithm will consider how often in a normal text one particular letter follows another specific letter. And so for each pair of letters. For example, for the first pure phrase - “I can create, I can do it!” - the distribution will turn out like this:

What happened: the letter o is followed by the letter o - twice, - and the letter a is followed by the letter t - once. For simplicity, we do not consider punctuation and spaces.

At this stage, we understand that one phrase is not enough for teaching our model: there are not enough combinations, and the difference between the frequency of occurrence of different combinations is not so great. Therefore, it is necessary to take some substantially larger amount of data. For example, let's calculate the combinations of letters found in the first volume of "War and Peace":

Of course, this is not the entire table of combinations, but only a small part of it. It turns out that the probability of meeting “that” is twice as high as “en”. And so that the letter T should be well - this occurs only once, in the word "obsolete."

Great, we now have a “model” of the Russian language, how can we use it? To determine how likely the string we are examining is clean or harmful, let's calculate its “plausibility”. We will take each pair of letters from this line, determine its frequency (in fact, the realism of the letter combination) using the “model” and multiply these numbers:

Also in the final value of the credibility should take into account the number of characters in the line under study - because the longer it was, the more numbers we multiplied. Therefore, from the work we extract the root of the desired degree (the length of the string minus one).

Now we can draw conclusions: the larger the resulting number, the more likely the line under study falls into our model. Therefore, the more likely it is that the person wrote it, that is, it is clean .

If, however, the line under investigation contains a suspiciously large number of extremely rare combinations of letters (for example, , tzh, , and so on), then, most likely, it is artificial - malicious .

For the lines above, the plausibility is as follows:

As you can see, clean lines are plausible for 1000-2000 points, and malicious ones do not reach even 150 points. That is, everything works as intended.

In order not to guess what “a lot” is and what is “not enough”, it is better to entrust the definition of the threshold value to the machine itself (let it be trained). To do this, we feed it a certain number of blank lines and calculate their plausibility, and then we feed a few malicious lines - and we also calculate. And we calculate some value in the middle, which will be best separated from one another. In our case, we get something in the region of 500.

Let's make sense of what happened with us.

1. We have identified the signs of blank lines, namely pairs of characters.

In real life, when developing a real antivirus, they also extract signs from files or other objects. And this, by the way, is the most important step: the quality of distinguished features directly depends on the level of expertise and experience of researchers. To understand what is really important is still a human task. For example, who said that you need to use pairs of characters, not triples? Such hypotheses are precisely tested in the antivirus laboratory. I note that we also use machine learning to select the best and complementary features.

2. Based on the selected features, we built a mathematical model and taught it with examples.

Of course, in real life, we use models a little more complicated. Now the best results are shown by the ensemble of decisive trees, built using the Gradient boosting method, but striving for perfection does not allow us to calm down.

3. Based on the mathematical model, we calculated the rating of "plausibility".

In real life, we usually consider the opposite rating - rating of harmfulness. The difference would seem insignificant, but guess how implausible for our mathematical model does the string appear in another language - or with a different alphabet?

Anti-Virus does not have the right to allow false positives on a whole class of files only for the reason that “we did not pass it”.

Twenty years ago, when there were few malware, every “rubbish” could be easily spotted with the help of signatures - characteristic passages. For the examples above, the “signatures” might be:

ORPoryav ayoryorpyor Ororadyutsts zushkkgeu byvyyvdtsulvdloaduztsch

Ytskhya dvolopolopoltsy yadoltslopioly bam Dlotdlamd a

The anti-virus scans the file if it met “ zushgkgeu ”, says: “Well, of course, this is rubbish number 17”. And if he finds " Dlotdlamd " - then "rubbish number 139".

15 years ago, when there was a lot of malware, the “generic” -detection became prevail. The virus analyst writes rules that are characteristic of meaningful strings:

Essentially, this is about the same thing, only instead of the mathematical model in this case, the set of rules that the analyst must manually write. It works well, but takes time.

And 10 years ago, when there were just a lot of malware, machine learning algorithms began to be timidly implemented. At first, they were comparable in complexity to the simplest example described by us, but we actively hired specialists and increased our level of expertise.

No normal antivirus works without machine learning now. If we evaluate the contribution to the protection of users, then methods based on machine learning on static grounds can compete except methods based on behavioral analysis. But only in the analysis of behavior machine learning is also used. In general, without him already anywhere.

The advantages are clear, but is it really a silver bullet, you ask. Not really. This method works well if the algorithm described above works in the cloud or in infrastructure, constantly learning from huge numbers of both clean and malicious objects.

It is also very good if the team of experts oversees the learning outcomes, intervening in cases where an experienced person is not enough.

In this case, there are really a few shortcomings, and by and large only one - this expensive infrastructure and a no less expensive team of specialists are needed.

Another thing is when someone tries to save radically and use only a mathematical model and only on the product side, right at the client. Then difficulties can begin.

1. False alarms.

Machine learning based detection is always a search for a balance between the level of detection and the level of false positives. And if we want to detect more, then there will be false positives. In the case of machine learning, they will occur in unpredictable and often difficult to explain places. For example, this clean line - “Mtsyri and Mkrtchyan” - is recognized as implausible: 145 points in the model from our example. Therefore, it is very important that the anti-virus laboratory has an extensive collection of clean files for training and model testing.

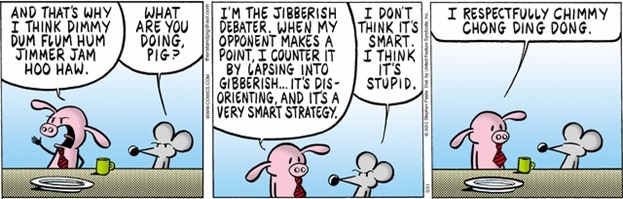

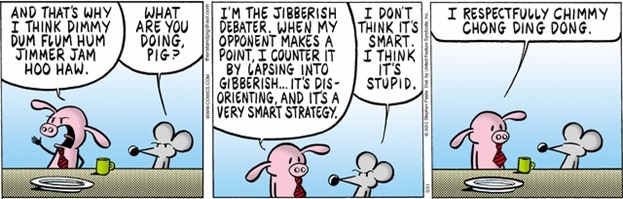

2. Bypassing the model.

An attacker can disassemble such a product and see how the model works. He is a man and for now, if not smarter, then at least more creative than a car - therefore he will tune it. For example, the following line is considered to be clean (1200 points), although its first half is clearly malicious: “well-drawn trailers) Add a lot of meaningful text to the end in order to trick the car”. No matter how clever the algorithm is used, it can always be bypassed by a person (smart enough). Therefore, the anti-virus laboratory must have an advanced infrastructure for a quick response to new threats.

3. Update the model.

Using the algorithm described above as an example, we mentioned that a model trained in Russian texts would be unsuitable for analyzing texts with a different alphabet. And malicious files, taking into account the creativity of intruders (see the previous paragraph), are like a gradually evolving alphabet. The threat landscape is changing pretty quickly. Over the years of research, we have developed an optimal approach to gradually updating the model right in antivirus databases. This allows you to retrain and even completely retrain the model "on the job."

So.

Despite the enormous importance of machine learning in the field of cybersecurity, we at Kaspersky Lab understand that a multi-level approach provides the best cyber defense.

Everything in antivirus should be fine - and behavioral analysis, and cloud protection, and machine learning algorithms, and much more. But about this “many other things” - next time.

As usual, there is no magic here, only technology. And since it is technology, it is easy to explain in plain language, which is what we will now do. The problem we will solve is a real one. The algorithm we describe is real too: it fits the definition of machine learning. Its complexity is toy-level, but the conclusions it lets us draw are real.

Task: to distinguish meaningful text from rubbish

The text that real people write looks like this:

- I can create, I can do it!

- I have two drawbacks: bad memory and something else.

- No one knows as much as I do not know.

Rubbish looks like this:

- ORPoryav AOROyor ORORAydtsutszushkkgeubyvyivdtsulvdloaduztsch

- Ytskhya dvolopolopoltsy yadoltslopioly bamdlotdlamda

Our task is to develop a machine learning algorithm that can tell one from the other. And since we are discussing this in an antivirus context, we will call meaningful text “clean” and rubbish “malicious”. This is not just a thought experiment: a similar problem is actually solved when real files are analyzed in a real antivirus.

For a person the task seems trivial: you can see at once which is clean and which is malicious. But formalizing the difference, let alone explaining it to a computer, is harder. So we use machine learning: first we give the algorithm examples, it “learns” from them, and then it correctly answers which is which.

Algorithm

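Our algorithm will count how often, in normal text, one particular letter follows another. And so on for every pair of letters. For example, for the first clean phrase, “I can create, I can do it!”, the distribution looks like this (the pairs are transliterated from the Russian original of the phrase, “Mogu tvorit', mogu i natvorit'!”, so they do not match the English translation letter for letter):

| Pair | Count | Pair | Count | Pair | Count |
|------|-------|------|-------|------|-------|
| at | 1 | mo | 2 | ri | 2 |
| vo | 2 | na | 1 | tv | 2 |
| gu | 2 | og | 2 | t' | 2 |
| it | 2 | or | 2 | | |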

What this means: the letter t is followed by the letter v twice, and the letter a is followed by the letter t once. For simplicity, we ignore punctuation and spaces.
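
The counting step can be sketched in a few lines of Python. This is a minimal illustration, not the author's code: the function name `count_pairs` and the English sample sentence are made up for the example, and it assumes that pairs are counted inside words only, so no pair spans a space or a punctuation mark.

```python
from collections import Counter

def count_pairs(text):
    """Count adjacent letter pairs inside each word of `text`."""
    counts = Counter()
    for word in text.lower().split():
        # Keep letters only, so punctuation never becomes part of a pair.
        letters = [ch for ch in word if ch.isalpha()]
        # zip() lines each letter up with the letter that follows it.
        counts.update(a + b for a, b in zip(letters, letters[1:]))
    return counts

# Hypothetical sample sentence, used only to show the shape of the result.
pairs = count_pairs("The cat sat on the mat, the hat sat too!")
print(pairs.most_common(3))
```

Run over a whole book instead of one sentence, the same function produces the kind of frequency table the model needs.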

At this point it is clear that one phrase is not enough to train our model: there are too few combinations, and the frequencies of different combinations do not differ much. So we need a substantially larger amount of data. For example, let's count the letter combinations found in the first volume of "War and Peace":

| Pair | Count | Pair | Count | Pair | Count |
|------|-------|------|-------|------|-------|
| to | 8411 | ne | 5199 | ou | 31 |
| st | 6591 | on | 5174 | mb | 2 |
| at | 6236 | en | 4211 | tzh | 1 |

Of course, this is only a small part of the full table of combinations. It turns out that the pair “to” is twice as likely to occur as “en” (8411 occurrences against 4211). And the letter t followed by zh occurs only once, in the Russian word for “obsolete”.

Great, we now have a “model” of the Russian language. How do we use it? To determine how likely it is that a string under examination is clean or malicious, let's calculate its “plausibility”. We take each pair of letters in the string, look up its frequency (in effect, how realistic that letter combination is) in the “model”, and multiply these numbers:

F(mo) * F(og) * F(gu) * F(tv) * ... = 2131 * 2943 * 474 * 1344 * ... = plausibility

The final plausibility value must also take into account the number of characters in the string: the longer the string, the more numbers we multiplied. So we take a root of the product, of degree equal to the length of the string minus one.
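
Here is a rough Python sketch of that calculation. The names `word_pairs` and `plausibility` are invented for the example, and two things are assumptions rather than part of the description above: a pair missing from the model is given a frequency of 1 so that the product does not collapse to zero, and the root is taken over the number of pairs actually multiplied (for a single word that is exactly its length minus one). The four frequencies are the ones quoted in the formula.

```python
import math

def word_pairs(text):
    """Yield adjacent letter pairs inside each word, ignoring punctuation."""
    for word in text.lower().split():
        letters = [ch for ch in word if ch.isalpha()]
        for a, b in zip(letters, letters[1:]):
            yield a + b

def plausibility(text, model, unseen=1):
    """Geometric mean of the model frequencies of all pairs in `text`."""
    pairs = list(word_pairs(text))
    if not pairs:
        return 0.0
    # Summing logarithms avoids overflow when many large counts are multiplied.
    log_sum = sum(math.log(max(model.get(p, 0), unseen)) for p in pairs)
    # Dividing the log by the number of pairs is the same as taking the n-th root.
    return math.exp(log_sum / len(pairs))

# Frequencies taken from the formula in the text; everything else is unseen.
model = {"mo": 2131, "og": 2943, "gu": 474, "tv": 1344}
print(round(plausibility("mogu", model)))
```

Working in logarithms is a design choice for the sketch: multiplying dozens of four-digit counts directly would quickly produce unwieldy numbers, while the sum of logs stays small.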

Using the model

Now we can draw conclusions: the larger the resulting number, the better the string fits our model, and so the more likely it is that a person wrote it, that is, that it is clean.

If, however, the string contains a suspiciously large number of extremely rare letter combinations (for example, tzh and so on), then it is most likely artificial, that is, malicious.

For the strings above, the plausibility values are:

- I can create, I can do it! - 1805 points

- I have two drawbacks: bad memory and something else. - 1535 points

- No one knows as much as I do not know. - 2274 points

- ORPoryav AOROyor ORORAydtsutszushkkgeubyvyivdtsulvdloaduztsch - 44 points

- Ytskhya dvolopolopoltsy yadoltslopioly bamdlotdlamda - 149 points

As you can see, clean strings score on the order of 1000 to 2000 points, while malicious ones do not even reach 150. So everything works as intended.

So as not to guess what counts as “a lot” and what counts as “too little”, it is better to let the machine determine the threshold itself (let it learn). To do this, we feed it a number of clean strings and calculate their plausibility, then feed it some malicious strings and calculate theirs too. Then we compute a value in between that best separates one group from the other. In our case we get something in the region of 500.
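
One simple way to "learn" such a threshold is sketched below. It is an assumption about how the middle value might be chosen, not the procedure actually used: the hypothetical `learn_threshold` tries the midpoints between neighbouring scores and keeps the one that misclassifies the fewest training strings. The scores are the five values listed above.

```python
def learn_threshold(clean_scores, malicious_scores):
    """Pick the cut-off that misclassifies the fewest training strings."""
    scores = sorted(clean_scores + malicious_scores)
    # Candidate thresholds are the midpoints between neighbouring scores.
    candidates = [(a + b) / 2 for a, b in zip(scores, scores[1:])]

    def errors(threshold):
        # Clean strings below the threshold are false positives,
        # malicious strings at or above it are missed detections.
        false_positives = sum(s < threshold for s in clean_scores)
        missed = sum(s >= threshold for s in malicious_scores)
        return false_positives + missed

    return min(candidates, key=errors)

clean_scores = [1805, 1535, 2274]   # plausibility of the clean strings
malicious_scores = [44, 149]        # plausibility of the malicious strings
threshold = learn_threshold(clean_scores, malicious_scores)
print(threshold)
```

With only five training strings the result lands wherever the gap between the two groups happens to be, so it will not match the value of about 500 mentioned above, which comes from a larger training set.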

In real life

Let's make sense of what we have done.

1. We identified the features of clean strings, namely pairs of characters.

In real life, when developing a real antivirus, features are also extracted from files or other objects. And this, by the way, is the most important step: the quality of the extracted features depends directly on the expertise and experience of the researchers. Understanding what really matters is still a human task. For example, who said that we should use pairs of characters rather than triples? Exactly such hypotheses are tested in the antivirus laboratory. Note that we also use machine learning to select the best and mutually complementary features.

2. Based on the selected features, we built a mathematical model and trained it on examples.

Of course, in real life we use somewhat more complex models. Right now the best results come from an ensemble of decision trees built with the gradient boosting method, but the pursuit of perfection does not let us rest.

3. Based on the mathematical model, we calculated a “plausibility” rating.

In real life we usually compute the opposite rating, a maliciousness rating. The difference may seem insignificant, but guess how implausible a string in another language, or in a different alphabet, will look to our mathematical model?

An antivirus has no right to produce false positives on a whole class of files just because “we were never taught that”.

Alternative to machine learning

Twenty years ago, when there was little malware, every piece of “rubbish” could easily be detected with signatures, that is, characteristic fragments. For the examples above, the “signatures” might be:

ORPoryav AOROyor ORORAydtsuts zushkkgeu byvyivdtsulvdloaduztsch

Ytskhya dvolopolopoltsy yadoltslopioly bam dlotdlamd a

The antivirus scans a file, and if it comes across “zushkkgeu”, it says: “Well, of course, this is rubbish number 17”. And if it finds “dlotdlamd”, then it is “rubbish number 139”.
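
In code, signature matching is little more than a substring search. The sketch below is illustrative only: the `SIGNATURES` table and the `scan` function are made-up names, the fragments are written in lowercase as they appear inside the sample strings, and the comparison is case-insensitive by assumption.

```python
# Hypothetical signature table: characteristic fragment -> verdict.
SIGNATURES = {
    "zushkkgeu": "rubbish number 17",
    "dlotdlamd": "rubbish number 139",
}

def scan(text):
    """Return the verdict of the first matching signature, or None if clean."""
    lowered = text.lower()
    for fragment, verdict in SIGNATURES.items():
        if fragment in lowered:
            return verdict
    return None

print(scan("ORPoryav AOROyor ORORAydtsutszushkkgeubyvyivdtsulvdloaduztsch"))
print(scan("Ytskhya dvolopolopoltsy yadoltslopioly bamdlotdlamda"))
print(scan("I can create, I can do it!"))
```

The weakness is easy to see: a new piece of rubbish that contains neither fragment passes unnoticed until someone adds a signature for it.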

Fifteen years ago, when there was a lot of malware, “generic” detection came to prevail. A virus analyst writes rules that are characteristic of meaningful strings:

- Word length is from one to twenty characters.

- Capital letters are very rarely found in the middle of a word, numbers too.

- Vowels are usually more or less evenly interspersed with consonants.

- And so on. If many criteria are violated, we detect this line as malicious.
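
The three rules listed above could be written by hand roughly as follows. Everything specific here is an assumption made for the sketch: the names `violations` and `looks_malicious`, treating "y" as a vowel for the transliterated samples, reading "evenly interspersed" as "no run of five or more consonants", and flagging a string once it breaks more than one rule in total.

```python
VOWELS = set("aeiouy")  # assumption: "y" counts as a vowel in the samples

def violations(word):
    """Count how many of the three hand-written rules a single word breaks."""
    broken = 0
    # Rule 1: word length is from one to twenty characters.
    if not 1 <= len(word) <= 20:
        broken += 1
    # Rule 2: capital letters and digits are rare after the first character.
    if any(ch.isupper() or ch.isdigit() for ch in word[1:]):
        broken += 1
    # Rule 3: vowels and consonants alternate; here, no run of 5+ consonants.
    run = longest = 0
    for ch in word.lower():
        if ch.isalpha() and ch not in VOWELS:
            run += 1
            longest = max(longest, run)
        else:
            run = 0
    if longest >= 5:
        broken += 1
    return broken

def looks_malicious(text, max_violations=1):
    """Flag the string when its words break more rules than allowed."""
    return sum(violations(word) for word in text.split()) > max_violations

print(looks_malicious("I have two drawbacks: bad memory and something else."))
print(looks_malicious("ORPoryav AOROyor ORORAydtsutszushkkgeubyvyivdtsulvdloaduztsch"))
```

Note that the second sample of rubbish, "Ytskhya dvolopolopoltsy yadoltslopioly bamdlotdlamda", breaks none of these three rules, which illustrates why the analyst has to keep adding rules by hand.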

In essence this is much the same thing, except that instead of a mathematical model there is a set of rules that the analyst has to write by hand. It works well, but it takes time.

And ten years ago, when the amount of malware became simply overwhelming, machine learning algorithms began to be introduced, timidly at first. Initially they were comparable in complexity to the simple example we described, but we actively hired specialists and built up our expertise.

No decent antivirus works without machine learning now. In terms of contribution to user protection, methods based on machine learning over static features can be rivaled only by methods based on behavioral analysis. And behavioral analysis uses machine learning too. In short, there is no getting by without it.

Disadvantages

The advantages are clear, but is it really a silver bullet, you may ask. Not quite. The method works well when the algorithm described above runs in the cloud or in the vendor's infrastructure, constantly learning from huge numbers of both clean and malicious objects.

It is also very good if a team of experts oversees the learning results and intervenes in cases where an experienced person is indispensable.

In that case there really are few drawbacks, and by and large only one: you need this expensive infrastructure and a no less expensive team of specialists.

It is another matter when someone tries to cut costs radically and use only a mathematical model, and only on the product side, right on the client. Then difficulties can begin.

1. False alarms.

Machine-learning-based detection is always a search for balance between the detection rate and the false positive rate. If we want to detect more, there will be false positives. With machine learning they occur in unpredictable and often hard-to-explain places. For example, this clean string, “Mtsyri and Mkrtchyan”, is recognized as implausible: 145 points in the model from our example. That is why it is very important for an antivirus laboratory to have an extensive collection of clean files for training and testing the model.
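
The trade-off can be made concrete with the scores quoted in this article. The helper `rates` below is a hypothetical name, and the sketch simply treats every string scoring below the threshold as detected.

```python
def rates(threshold, clean_scores, malicious_scores):
    """Return (detection rate, false positive rate) for a given threshold."""
    detected = sum(s < threshold for s in malicious_scores)
    false_positives = sum(s < threshold for s in clean_scores)
    return detected / len(malicious_scores), false_positives / len(clean_scores)

# Scores from the article; 145 is the clean string "Mtsyri and Mkrtchyan".
clean_scores = [1805, 1535, 2274, 145]
malicious_scores = [44, 149]

for threshold in (100, 500):
    detection, false_alarm = rates(threshold, clean_scores, malicious_scores)
    print(threshold, detection, false_alarm)
```

A threshold low enough to spare the clean 145-point string misses the malicious 149-point one, and a threshold high enough to catch both malicious strings also flags the clean one.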

2. Bypassing the model.

An attacker can take such a product apart and see how the model works. The attacker is a human and, for now, if not smarter then at least more creative than a machine, so he will adapt. For example, the following string is considered clean (1200 points), although its first half is clearly malicious: “well-drawn trailers) Add a lot of meaningful text to the end in order to trick the machine”. No matter how clever the algorithm is, a (sufficiently clever) person can always bypass it. That is why an antivirus laboratory must have an advanced infrastructure for responding quickly to new threats.
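
Why padding works is easy to see numerically, since the score is a geometric mean of pair frequencies. In the sketch below the "rare" frequencies are invented for illustration, while the "common" ones are the six largest counts from the "War and Peace" table; `geometric_mean` is a helper written for this example.

```python
import math

def geometric_mean(values):
    """n-th root of the product of `values`, computed through logarithms."""
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Hypothetical frequencies of the pairs in a short piece of rubbish.
rare = [1, 2, 1, 31, 2, 1]
# Frequent pairs from the table, standing in for appended meaningful text.
common = [8411, 6591, 6236, 5199, 5174, 4211]

before = geometric_mean(rare)
after = geometric_mean(rare + common * 5)
print(round(before), round(after))
```

A handful of rare pairs is simply outvoted once enough frequent pairs are appended, so the padded string ends up well above a threshold of around 500.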

One example of a workaround for the method described above: every word looks believable, but the text as a whole is nonsense.

3. Updating the model.

Using the algorithm above as an example, we mentioned that a model trained on Russian texts would be unsuitable for analyzing texts in a different alphabet. And malicious files, given the creativity of attackers (see the previous point), are like a gradually evolving alphabet. The threat landscape changes quite quickly. Over years of research we have developed an optimal approach to updating the model gradually, right in the antivirus databases. This makes it possible to train the model further, and even to retrain it completely, “on the job”.
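
For the toy pair-counting model, a gradual update can be as simple as adding new counts to the old ones. The sketch below shows only that idea and says nothing about how real antivirus databases deliver updates; `count_pairs` and the sample texts are made up for the example.

```python
from collections import Counter

def count_pairs(text):
    """Count adjacent letter pairs inside each word of `text`."""
    counts = Counter()
    for word in text.lower().split():
        letters = [ch for ch in word if ch.isalpha()]
        counts.update(a + b for a, b in zip(letters, letters[1:]))
    return counts

# Model trained earlier on some text (hypothetical sample).
model = count_pairs("the cat")
# Later, new clean samples arrive: add their counts instead of recounting all.
model.update(count_pairs("the hat"))
print(model["th"], model["ha"])
```

Because `Counter.update` adds counts rather than replacing them, the old knowledge is kept and only the increment has to be shipped.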

Conclusion

To sum up:

- We looked at a real problem.

- We developed a real machine learning algorithm to solve it.

- We drew parallels with the antivirus industry.

- We examined, with examples, the advantages and disadvantages of this approach.

Despite the enormous importance of machine learning in the field of cybersecurity, we at Kaspersky Lab understand that a multi-level approach provides the best cyber defense.

Everything in an antivirus should be good: behavioral analysis, cloud protection, machine learning algorithms, and much more. But more about that “much more” next time.

Source: https://habr.com/ru/post/315326/
