Implementing text classification with a convolutional network in Keras

Oddly enough, this post is about a text classifier built on a convolutional network (vectorizing individual words is a separate question). The code, test data, and usage examples are on bitbucket (I ran into GitHub's size limits and its suggestion to use Git Large File Storage (LFS), which I have not mastered yet).

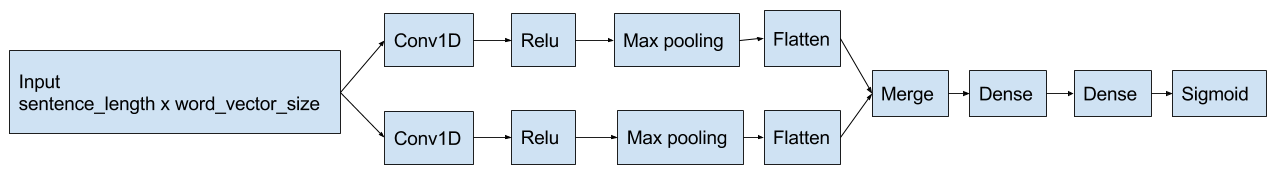

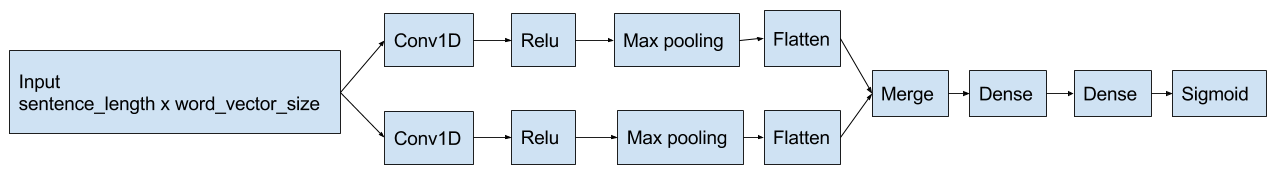

Datasets

Converted datasets were used: reuters (22000 records), one from Watson (530 records), and one more from Watson (50 records). By the way, I would welcome a pointer, in the comments or by private message (but preferably in the comments), to a dataset in Russian.

Network architecture

The network is based on one implementation described here. The code of that implementation is on github.

In my case, the network takes word vectors as input (the gensim implementation of word2vec is used). The network structure is shown below:

In short:

- The text is represented as a matrix of size word_count x word_vector_size. The vectors of individual words come from word2vec, which you can read about, for example, in this post. Since I don't know in advance what text the user will submit, I take a length of 2 * N, where N is the number of vectors in the longest text of the training set. Yes, that number is a shot in the dark.

- The matrix is processed by the convolutional sections of the network (their output is the transformed features of the words).

- The extracted features are processed by the fully connected section of the network.

Stop words are filtered out beforehand (this had no effect on the reuters dataset, but it did matter on the smaller sets). More on this below.
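The fixed-size input matrix described in the first item can be sketched in plain Python. This is only an illustration: the helper name `text_to_matrix` and the choice of zero padding are assumptions, not taken from the pynlc code.

```python
def text_to_matrix(word_vectors, max_words, vector_size):
    """Pad (with zero rows) or truncate a list of word vectors
    to a max_words x vector_size matrix."""
    rows = [list(vector) for vector in word_vectors[:max_words]]
    padding = [[0.0] * vector_size for _ in range(max_words - len(rows))]
    return rows + padding


# Toy training set: each text is a list of 3-dimensional word vectors.
train = [
    [[0.1, 0.2, 0.3]],
    [[0.4, 0.5, 0.6], [0.7, 0.8, 0.9]],
]
longest = max(len(text) for text in train)  # N: vectors in the longest text
max_words = 2 * longest                     # the 2 * N rule from the list above
matrix = text_to_matrix(train[1], max_words, vector_size=3)
print(len(matrix), len(matrix[0]))
```

Texts longer than 2 * N are simply cut off in this sketch; how the real classifier handles them is not stated in the article.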

Installing the necessary software (keras / theano, cuda) on Windows

Installation on Linux was significantly easier. It requires:

- python3.5

- python header files (python-dev in debian)

- gcc

- cuda

- Python libraries are the same as in the list below.

In my case with win10 x64, the approximate sequence was as follows:

- Anaconda with python3.5.

- Cuda 8.0. You can also run on the CPU (then gcc is enough and the next 4 steps are not needed), but on relatively large datasets the drop in speed should be significant (not tested).

- The path to nvcc is added to PATH (otherwise theano will not detect it).

- Visual Studio 2015 with C++, including the Windows 10 kit (corecrt.h is required).

- The path to cl.exe is added to PATH.

- The path to corecrt.h is added to INCLUDE (in my case, C:\Program Files (x86)\Windows Kits\10\Include\10.0.10240.0\ucrt).

- gcc and libpython, which are needed when compiling the network:

```
conda install mingw libpython
```

- The Python libraries (it might also work with the keras backend switched from theano to tensorflow, but I haven't checked):

```
pip install keras theano python-levenshtein gensim nltk
```

- In .theanorc, the following flag is set for gcc:

```
[gcc]
cxxflags = -D_hypot=hypot
```

- Run python and execute:

```python
import nltk
nltk.download()
```
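Since several of the steps above only put compilers on PATH, a quick check before the first run can save time. The sketch below uses only the standard library; the helper name `missing_tools` is made up for illustration, and the list of tools is an assumption based on the steps above.

```python
import shutil


def missing_tools(tools):
    """Return the names from `tools` that cannot be found on PATH."""
    return [name for name in tools if shutil.which(name) is None]


# nvcc and cl are the compilers named in the steps above; gcc comes from mingw.
missing = missing_tools(["nvcc", "cl", "gcc"])
if missing:
    print("Not found on PATH:", ", ".join(missing))
else:
    print("All compilers found on PATH")
```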

Word processing

At this stage, stop words that are not part of a combination from the “white list” (more on it below) are removed, and the remaining words are vectorized. The algorithm takes the following input:

- The language: nltk needs it for tokenization and for returning the list of stop words.

- A "white list" of word combinations in which stop words are used. For example, “on” is a stop word, but [“turn”, “on”] is another matter.

- word2vec vectors

And the algorithm itself (I see at least 2 possible improvements, but have not gotten to them yet):

- I split the input text into tokens with nltk.tokenize (roughly, “Hello, world!” is converted to [“hello”, ",", "world", "!"]).

- I discard tokens that are not in the word2vec vocabulary. More precisely, I discard those that are missing and for which no similar token could be found by distance. For now only Levenshtein distance is used; there is an idea to filter the tokens with the smallest Levenshtein distance by the distance from their vectors to the vectors present in the training set.

- I select tokens:

  - those that are not in the list of stop words (this reduced the error on the weather dataset but, without the next step, badly hurt the result on the “car_intents” one);

  - if the token is in the list of stop words, I check whether the text contains a sequence from the white list that includes it (roughly, if “on” is found, I check for sequences from the list [[“turn”, “on”]]). If there is one, the token is kept. There is room for improvement here: right now I check (in our example) for the presence of “turn”, but it may not relate to this particular “on”.

- I replace the selected tokens with their vectors.
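The token selection step can be sketched as follows. This is not the pynlc code. The regex tokenizer is a rough stand-in for nltk, the names `tokenize`, `contains_sequence` and `filter_tokens` are made up, and the contiguity check is a stricter variant than the presence check described above (it is one way the mentioned improvement could look).

```python
import re


def tokenize(text):
    """Rough stand-in for nltk tokenization: lowercased words and punctuation."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def contains_sequence(tokens, sequence, position):
    """True if `sequence` occurs contiguously in `tokens` and covers `position`."""
    size = len(sequence)
    for start in range(max(0, position - size + 1), position + 1):
        if tokens[start:start + size] == sequence:
            return True
    return False


def filter_tokens(text, stop_words, white_list, vocabulary):
    # Drop tokens unknown to the (toy) word2vec vocabulary.
    tokens = [token for token in tokenize(text) if token in vocabulary]
    kept = []
    for index, token in enumerate(tokens):
        if token not in stop_words:
            kept.append(token)
        elif any(contains_sequence(tokens, sequence, index)
                 for sequence in white_list if token in sequence):
            # A stop word survives only as part of a white-listed combination.
            kept.append(token)
    return kept


stop_words = {"on", "the", "is"}
white_list = [["turn", "on"], ["turn", "off"]]
vocabulary = {"turn", "on", "the", "radio", "is", "table", "book"}

print(filter_tokens("Turn on the radio!", stop_words, white_list, vocabulary))
print(filter_tokens("The book is on the table", stop_words, white_list, vocabulary))
```

In the first sentence “on” is kept because it directly follows “turn”; in the second it is dropped like any other stop word.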

Using the code

Here is the code I used to evaluate the impact of the changes:

```python
import itertools
import json

import numpy
# Note: in gensim >= 1.0, load_word2vec_format lives on gensim.models.KeyedVectors.
from gensim.models import Word2Vec
from pynlc.test_data import reuters_classes, word2vec, car_classes, weather_classes
from pynlc.text_classifier import TextClassifier
from pynlc.text_processor import TextProcessor
from sklearn.metrics import mean_squared_error


def classification_demo(data_path, train_before, test_before, train_epochs,
                        test_labels_path, instantiated_test_labels_path, trained_path):
    # Load the dataset and split it into training and test parts.
    with open(data_path, 'r', encoding='utf-8') as data_source:
        data = json.load(data_source)
    texts = [item["text"] for item in data]
    class_names = [item["classes"] for item in data]
    train_texts = texts[:train_before]
    train_classes = class_names[:train_before]
    test_texts = texts[train_before:test_before]
    test_classes = class_names[train_before:test_before]

    # Train a new classifier and store its predictions for the test part.
    text_processor = TextProcessor("english", [["turn", "on"], ["turn", "off"]],
                                   Word2Vec.load_word2vec_format(word2vec))
    classifier = TextClassifier(text_processor)
    classifier.train(train_texts, train_classes, train_epochs, True)
    prediction = classifier.predict(test_texts)
    with open(test_labels_path, "w", encoding="utf-8") as test_labels_output:
        test_labels_output_lst = []
        for i in range(0, len(prediction)):
            test_labels_output_lst.append({
                "real": test_classes[i],
                "classified": prediction[i]
            })
        json.dump(test_labels_output_lst, test_labels_output)

    # Re-create the classifier from its config and repeat the prediction.
    instantiated_classifier = TextClassifier(text_processor, **classifier.config)
    instantiated_prediction = instantiated_classifier.predict(test_texts)
    with open(instantiated_test_labels_path, "w", encoding="utf-8") as instantiated_test_labels_output:
        instantiated_test_labels_output_lst = []
        for i in range(0, len(instantiated_prediction)):
            instantiated_test_labels_output_lst.append({
                "real": test_classes[i],
                "classified": instantiated_prediction[i]
            })
        json.dump(instantiated_test_labels_output_lst, instantiated_test_labels_output)

    # Save the config of the trained classifier.
    with open(trained_path, "w", encoding="utf-8") as trained_output:
        json.dump(classifier.config, trained_output, ensure_ascii=True)


def classification_error(files):
    for name in files:
        with open(name, "r", encoding="utf-8") as src:
            data = json.load(src)
        classes = []  # real classes of every text
        real = []     # predicted probabilities, ordered by class name
        for row in data:
            classes.append(row["real"])
            classified = row["classified"]
            row_classes = list(classified.keys())
            row_classes.sort()
            real.append([classified[class_name] for class_name in row_classes])
        # Encode the real classes as 0/1 vectors in the same class order.
        labels = []
        class_names = list(set(itertools.chain(*classes)))
        class_names.sort()
        for item_classes in classes:
            labels.append([int(class_name in item_classes) for class_name in class_names])
        real_np = numpy.array(real)
        mse = mean_squared_error(numpy.array(labels), real_np)
        print(name, mse)


if __name__ == '__main__':
    print("Reuters:\n")
    classification_demo(reuters_classes, 10000, 15000, 10,
                        "reuters_test_labels.json", "reuters_car_test_labels.json",
                        "reuters_trained.json")
    classification_error(["reuters_test_labels.json", "reuters_car_test_labels.json"])
    print("Car intents:\n")
    # File names match the ones read by classification_error below.
    classification_demo(car_classes, 400, 500, 20,
                        "cars_test_labels.json", "instantiated_cars_test_labels.json",
                        "car_trained.json")
    classification_error(["cars_test_labels.json", "instantiated_cars_test_labels.json"])
    print("Weather:\n")
    classification_demo(weather_classes, 40, 50, 30,
                        "weather_test_labels.json", "instantiated_weather_test_labels.json",
                        "weather_trained.json")
    classification_error(["weather_test_labels.json", "instantiated_weather_test_labels.json"])
```

Here is what happens in it:

- Data preparation:

```python
with open(data_path, 'r', encoding='utf-8') as data_source:
    data = json.load(data_source)
texts = [item["text"] for item in data]
class_names = [item["classes"] for item in data]
train_texts = texts[:train_before]
train_classes = class_names[:train_before]
test_texts = texts[train_before:test_before]
test_classes = class_names[train_before:test_before]
```

- Creating a new classifier:

```python
text_processor = TextProcessor("english", [["turn", "on"], ["turn", "off"]],
                               Word2Vec.load_word2vec_format(word2vec))
classifier = TextClassifier(text_processor)
```

- Training it:

```python
classifier.train(train_texts, train_classes, train_epochs, True)
```

- Predicting classes for the test sample and storing pairs of “real classes” and “predicted class probabilities”:

```python
prediction = classifier.predict(test_texts)
with open(test_labels_path, "w", encoding="utf-8") as test_labels_output:
    test_labels_output_lst = []
    for i in range(0, len(prediction)):
        test_labels_output_lst.append({
            "real": test_classes[i],
            "classified": prediction[i]
        })
    json.dump(test_labels_output_lst, test_labels_output)
```

- Creating a new instance of the classifier from a configuration (a dict that can be serialized to and deserialized from, for example, json):

```python
instantiated_classifier = TextClassifier(text_processor, **classifier.config)
```

The output looks something like this:

```
C:\Users\user\pynlc-env\lib\site-packages\gensim\utils.py:840: UserWarning: detected Windows; aliasing chunkize to chunkize_serial
  warnings.warn("detected Windows; aliasing chunkize to chunkize_serial")
C:\Users\user\pynlc-env\lib\site-packages\gensim\utils.py:1015: UserWarning: Pattern library is not installed, lemmatization won't be available.
  warnings.warn("Pattern library is not installed, lemmatization won't be available.")
Using Theano backend.
Using gpu device 0: GeForce GT 730 (CNMeM is disabled, cuDNN not available)
Reuters:

Train on 3000 samples, validate on 7000 samples
Epoch 1/10
  20/3000 [..............................] - ETA: 307s - loss: 0.6968 - acc: 0.5376
....
3000/3000 [==============================] - 640s - loss: 0.0018 - acc: 0.9996 - val_loss: 0.0019 - val_acc: 0.9996
Epoch 8/10
  20/3000 [..............................] - ETA: 323s - loss: 0.0012 - acc: 0.9994
...
3000/3000 [==============================] - 635s - loss: 0.0012 - acc: 0.9997 - val_loss: 9.2200e-04 - val_acc: 0.9998
Epoch 9/10
  20/3000 [..............................] - ETA: 315s - loss: 3.4387e-05 - acc: 1.0000
...
3000/3000 [==============================] - 879s - loss: 0.0012 - acc: 0.9997 - val_loss: 0.0016 - val_acc: 0.9995
Epoch 10/10
  20/3000 [..............................] - ETA: 327s - loss: 8.0144e-04 - acc: 0.9997
...
3000/3000 [==============================] - 655s - loss: 0.0012 - acc: 0.9997 - val_loss: 7.4761e-04 - val_acc: 0.9998
reuters_test_labels.json 0.000151774189194
reuters_car_test_labels.json 0.000151774189194
Car intents:

Train on 280 samples, validate on 120 samples
Epoch 1/20
 20/280 [=>............................] - ETA: 0s - loss: 0.6729 - acc: 0.5250
...
280/280 [==============================] - 0s - loss: 0.2914 - acc: 0.8980 - val_loss: 0.2282 - val_acc: 0.9375
...
Epoch 19/20
 20/280 [=>............................] - ETA: 0s - loss: 0.0552 - acc: 0.9857
...
280/280 [==============================] - 0s - loss: 0.0464 - acc: 0.9842 - val_loss: 0.1647 - val_acc: 0.9494
Epoch 20/20
 20/280 [=>............................] - ETA: 0s - loss: 0.0636 - acc: 0.9714
...
280/280 [==============================] - 0s - loss: 0.0447 - acc: 0.9849 - val_loss: 0.1583 - val_acc: 0.9530
cars_test_labels.json 0.0520754688092
instantiated_cars_test_labels.json 0.0520754688092
Weather:

Train on 28 samples, validate on 12 samples
Epoch 1/30
20/28 [====================>.........] - ETA: 0s - loss: 0.6457 - acc: 0.6000
...
Epoch 29/30
20/28 [====================>.........] - ETA: 0s - loss: 0.0021 - acc: 1.0000
...
28/28 [==============================] - 0s - loss: 0.0019 - acc: 1.0000 - val_loss: 0.1487 - val_acc: 0.9167
Epoch 30/30
...
28/28 [==============================] - 0s - loss: 0.0018 - acc: 1.0000 - val_loss: 0.1517 - val_acc: 0.9167
weather_test_labels.json 0.0136964029149
instantiated_weather_test_labels.json 0.0136964029149
```

The experiments with stop words showed the following:

- The error on the reuters set stayed comparable regardless of whether stop words were removed or kept.

- The error on weather dropped from 8% when stop words were removed. Making the algorithm more complex had no effect (since there are no word combinations in which a stop word has to be kept).

- The error on car_intents rose to about 15% when stop words were removed (for example, the hypothetical “turn on” was cut down to “turn”). After "white list" processing was added, it returned to the previous level.
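The numbers printed after each file name in the output are mean squared errors between the 0/1 encoding of the real classes and the predicted probabilities. The sketch below recomputes that metric in plain Python on made-up data; it is meant to mirror what sklearn's `mean_squared_error` returns with default settings, and the helper names and values are illustrative only.

```python
def multi_hot(item_classes, class_names):
    """Encode the real classes of one text as a 0/1 vector."""
    return [int(name in item_classes) for name in class_names]


def mean_squared_error(labels, predictions):
    """Average of squared differences over all texts and all classes."""
    total = 0.0
    count = 0
    for label_row, prediction_row in zip(labels, predictions):
        for label, probability in zip(label_row, prediction_row):
            total += (label - probability) ** 2
            count += 1
    return total / count


class_names = ["conditions", "temperature"]
real = [["conditions"], ["temperature"]]  # made-up real classes
predicted = [                             # made-up predicted probabilities
    {"conditions": 0.9, "temperature": 0.1},
    {"conditions": 0.2, "temperature": 0.8},
]

labels = [multi_hot(item, class_names) for item in real]
probabilities = [[row[name] for name in class_names] for row in predicted]
mse = mean_squared_error(labels, probabilities)
print(round(mse, 6))
```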

Example of running a pre-trained classifier

The TextClassifier.config property is a dictionary that can be serialized, for example, to json; after it is restored from json, its elements are passed to the TextClassifier constructor. For example:

```python
import json

from gensim.models import Word2Vec
from pynlc.test_data import word2vec
from pynlc import TextProcessor, TextClassifier

if __name__ == '__main__':
    text_processor = TextProcessor("english", [["turn", "on"], ["turn", "off"]],
                                   Word2Vec.load_word2vec_format(word2vec))
    # Restore the classifier from the config saved after training.
    with open("weather_trained.json", "r", encoding="utf-8") as classifier_data_source:
        classifier_data = json.load(classifier_data_source)
    classifier = TextClassifier(text_processor, **classifier_data)
    texts = [
        "Will it be windy or rainy at evening?",
        "How cold it'll be today?"
    ]
    predictions = classifier.predict(texts)
    for i in range(0, len(texts)):
        print(texts[i])
        print(predictions[i])
```

And its output:

```
C:\Users\user\pynlc-env\lib\site-packages\gensim\utils.py:840: UserWarning: detected Windows; aliasing chunkize to chunkize_serial
  warnings.warn("detected Windows; aliasing chunkize to chunkize_serial")
C:\Users\user\pynlc-env\lib\site-packages\gensim\utils.py:1015: UserWarning: Pattern library is not installed, lemmatization won't be available.
  warnings.warn("Pattern library is not installed, lemmatization won't be available.")
Using Theano backend.
Will it be windy or rainy at evening?
{'temperature': 0.039208538830280304, 'conditions': 0.9617446660995483}
How cold it'll be today?
{'temperature': 0.9986168146133423, 'conditions': 0.0016815820708870888}
```

And yes, the config of the network trained on the reuters dataset is here. A gigabyte network for a 19 MB dataset, yes :-)
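The config round trip can be illustrated without pynlc. `TinyClassifier` below is a hypothetical stand-in, not the real TextClassifier: its fields are invented, and it omits the text processor that the real constructor takes first. It only shows the pattern of exposing state as a dict and accepting the same dict back as keyword arguments.

```python
import json


class TinyClassifier:
    """Hypothetical stand-in: all state is described by the `config` dict."""

    def __init__(self, class_names=None, weights=None):
        self.class_names = class_names or []
        self.weights = weights or []

    @property
    def config(self):
        # Only json-friendly types, so the dict can be dumped as is.
        return {"class_names": self.class_names, "weights": self.weights}


trained = TinyClassifier(["conditions", "temperature"], [[0.1, -0.2], [0.3, 0.4]])
serialized = json.dumps(trained.config)              # e.g. written to a file
restored = TinyClassifier(**json.loads(serialized))  # keys become constructor arguments
print(restored.config == trained.config)
```

The keys of the config have to match the constructor's parameter names, which is what makes the `**` unpacking work.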

Source: https://habr.com/ru/post/315118/