How to launch ClickHouse on your own and win the jackpot

We decided to describe a simple and proven way for those who want to implement ClickHouse analytical database on their own or just try ClickHouse on their own data. It was this path we ourselves went through in the news aggregator Mass Media2 and achieved impressive results.

In the preface of the article - a short story about our attempts to introduce Druid and InfluxDB. Why, after the successful launch of ClickHouse, we were able to stop using InfiniDB and Cassandra.

The main part of the article is devoted to ClickHouse helpers , which we developed and released in open-source . By the way, welcome to pull requests with suggestions and comments.

We assume that the reader is familiar with the official ClickHouse documentation .

Who we are and what data we work with

In the beginning we will tell about who we are and about the data, by the example of which we will further analyze the work with ClickHouse. Media2 is an information service that has been providing relevant news around the clock since 2008 and forms a complete information picture of the day. Today, Media2 includes a news aggregator and exchange network with more than 2500 partners, including the leading federal online media, industry sites and regional publications. The monthly audience of mass media2 is about 15 million people.

We will analyze the work with ClickHouse on the example of one of the simple parts of the data collected from our news aggregator , which is represented by three regional sites: smi2.ru , smi2.ua and smi2.kz. At each site we collect and process data about views and clicks on the news. This data is used both in real time - for the issuance of content, and for post-analysis of the effectiveness of materials.

In your case, the analyzed data can be, for example, server logs, statistics on events on websites, in booking systems, e-mailing, tracking sensors, etc. In all these cases, and if you have a lot of data, ClickHouse is worth it. to try.

How we came to ClickHouse

We identified the following critical requirements for an analytical database:

- real-time query processing speed

- presence of built-in analytic functions

- availability of functions for approximate calculations

- linear scalability, since to achieve linear scalability without degradation with an increase in the number of servers is a rather difficult technical task

- availability of sharding and data replication "out of the box"

- no single point of failure (data can be written to each node in the cluster)

- optimal cost of ownership (value for money)

As a background, I would like to talk about what technological stack we used earlier, what we had to give up and how we came to ClickHouse.

Bad experience with Druid and InfluxDB

This year we deployed an assembly based on the Druid - Imply Analytics Platform , as well as Tranquility , and were ready to launch in production ... But after the release of ClickHouse, they immediately abandoned Druid, although they spent two months studying and implementing it.

Of the benefits noted for themselves the following:

- Support RT stream from HTTP, Spark, Kafka, etc.

- Graphic Tools Pivot, Caravel

However, the following disadvantages outweighed the balance:

- Infrastructure complexity: separate nodes are required for receiving, processing and storing data; for fault tolerance, a double number of servers is required

- Tranquility, designed for realtime data processing, contains errors that lead to the fall of the entire Tranquility; Tranquility versions are not compatible with each other; for ourselves, we appreciated Tranquility for a good and interesting product, but so far Beta

We also had a trial approach to the InfluxDB system (see the article ), which we planned to use for building and analyzing metrics. We evaluated the project as deep Alfa due to frequent data loss and system crashes, so we also stopped working in this direction. Perhaps now the state of the product has changed for the better.

Cassandra and InfiniDB stayed with us for two years

Cassandra was used in our production from 2014 to 2016:

- Worked on 5 servers

- Maintained a load of up to 10K events per second for insertion and up to about 1K events per second for reading

- Approximately 1 time in 2 months there were desynchronization of data schemes (perhaps it was the problem of the version we used)

In the same period, we used InfiniDB . From the positive moments I would like to note the following:

- Window support

- Easy integration with existing MySQL via Federated engine

- The built-in MyISAM and InnoDB engines, which allowed unloading from the InfiniDB engine to the InnoDB engine inside a single server

- The ability to delete partitions of data for each day, according to specific columns

However, it was not without negative points:

- Lack of normal cluster and data replication. I had to make a hot copy of the data, i.e. a server clone

- The first versions had to be reloaded regularly due to memory leaks and service hangs.

- Hanging processes to write or read requests. Had to kill long processes through event handlers nagios

- The difficulty of loading data. There is only a separate console tool cpimport. I had to implement a wrapper that parses the utility output in stdout for errors and statistics for the result of the execution of the insert.

- Conditional single-threading: either we write, or we read. Large system resources consumed

And here "Yandex" laid out in open access ClickHouse

Due to shortcomings and problems with the DBMS we use for analytics, we regularly looked around in search of alternatives. In particular, we paid attention to the internal development of “Yandex” , which bribed with its incredible speed and generally met our expectations from an analytical database (see above).

There are currently no free or low-cost analytical databases for processing real-time, large data at a level like ClickHouse. In any case, we do not know about these. From paid databases, we tested HP Vertica and Greenplum. Analytics can also be read using Hadoop MapReduce, but not in near real-time mode. By the way, in the “Yandex” there is YT (“Yt”, as they call it themselves) - MapReduce is a platform for working with big data, but it also does not work in real time, although it is actively used. That is, for analytics in real time, in our opinion, ClickHouse is best suited. Therefore, when Yandex published ClickHouse in open access in the summer, we definitely decided to try it.

How ClickHouse helped us

We can confidently say that the ClickHouse launch process was faster and easier than with other DBMS. We hope that our article will allow you to do this much faster :)

If you skip the story about how we launched ClickHouse and eventually successfully launched, it is worth noting the following results of the launch of ClickHouse.

Benefits in development . In a relatively short time, we managed to close 80% of the tasks associated with data analysis, and these tasks have accumulated a lot. New analytics tasks have become much easier and faster.

Benefits in the gland . Compared with the same Druid, ClickHouse's hardware requirements were significantly lower, so we were able to save on hardware. Plus, we abandoned 5 nodes under Cassandra, 4 nodes under InfiniDB and 2 nodes under MySQL (historically remaining analytics). In total, we abandoned 11 servers, for which we had to constantly monitor and not to miss alerts about problems from nagios.

The benefits of data storage . ClickHouse stores data using various compression mechanisms. Due to the support of sharding and replication, ClickHouse is able to store and process data distributedly. Replication not only improves data storage reliability, but also optimizes read operations within the cluster.

Speed benefits . ClickHouse is really fast, we have seen this on our tasks, the speed has increased several times!

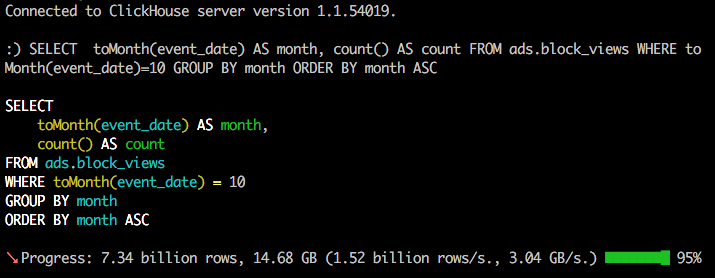

Here, many will think that it would be nice to give for an example benchmarks ... We suggest turning to Yandex benchmarks and watching our videos with requests for real data sets. The statistics collected and analyzed by us using ClickHouse data at the moment is as follows:

- up to 8,000–12,000 events per second are recorded

- approximately 21.5 billion events per month

- about 10 billion lines in the database for the month

The data is stored on 6 SX131 servers from Hetzner with 3 shards with 2 replicas.

Features ClickHouse

Like any data product, ClickHouse has its own characteristics . Here are some of them:

- No UPDATE and Derivatives: INSERT UPDATE and DELETE

- No transaction

- Deleting data by month through deleting partitions

In addition, ClickHouse does not know how to build out-of-the-box graphics, this requires additional tools.

For us, transactionality and the lack of UPDATE / DELETE are not important. We have long been accustomed to bypass these problems. However, we would love to be able to store data for only a few days. The plans of "Yandex" - add the ability to delete partitions by day.

Our projects for ClickHouse

In the process of developing and implementing ClickHouse, we are faced with some inconveniences and the lack of the “buns” we need. Therefore, without becoming wait for favors from " Yandex ”Nature, we decided to facilitate the work themselves. Another motivator was that we wanted to contribute to the development of a promising open-source project. Plus - this was our first experience of participating in open-source development.

So two of our open-source projects were born, which allowed ourselves to significantly speed up and simplify the process of implementing ClickHouse and working with it:

- Graphic client for working with DB

- Wrapper for PHP for convenient work with the database that implements the features of ClickHouse

The following describes the main features of each project.

Our graphical client for ClickHouse: features and features

- View a list of databases and tables

- View table contents

- Highlight ClickHouse functions, table and field names

- Auto-completion for table, column, and built-in names

- Execution of selected / current / multiple requests in the editor

- Automatic query type detection: CREATE TABLE / INSERT / SELECT

- Easy insertion of dictionary values

- Themes for the query editor, the themes for the entire editor (light and dark)

- Hotkeys

The client is written entirely in JavaScript, without using the server side.

You can safely use our latest published build .

Our PHP driver for ClickHouse: features and features

- No dependencies, only curl and json modules are required

- Work with ClickHouse cluster, automatic detection of necessary nodes for different configurations

- Perform a query on each node in the cluster (see our separate project on ClickHouse migrations )

- Asynchronous requests to read data and insert data

- Compression support on the fly when writing data to ClickHouse from a local file without creating temporary files

- Supports read requests using a local CSV file to make a query of the form

select * from X where id in (local_csv_file) - Work with table partitions

- Insert array into column

- Write the result of the query directly to a file with compression support without creating temporary files

- Getting the size of the table, database and list of processes on each node

- Getting statistics on SELECT query

Driver tested for PHP 5.6 and 7, HHVM 3.9.

We want to immediately warn readers that the driver does not use ready-made solutions like Guzzle (and PSR-7 in general), but is implemented through the include.php file. We hope that this fact will not scare you from further reading.

Examples of working with ClickHouse

Let’s look at an example of how ClickHouse works from PHP and using our graphical client .

We believe that you have successfully installed ClickHouse from the deb-package of the latest version and read the Quick start guide .

Let site_id = 1 for smi2.ru, site_id = 2 for smi2.ua, and site_id = 3 for smi2.kz.

On each site events related to articles (news). We will register data about the impressions of articles (views) and clicks on each article (clicks).

For each event we will fix several attributes:

- User IP Address

- city of user

- referer

- UTM tag from referer

- unique user ID

Connecting to the ClickHouse server, creating a database and a table

To record event data, create a database of articles on the ClickHouse server and inside it the events table with the following structure:

event_date Date event_time DateTime event_type Enum8('VIEWS' = 1, 'CLICKS' = 2) site_id Int32 article_id Int32 ip String city String user_uuid String referer String utm String First consider the creation of a database and a table using our graphical client . We connect via a graphical client to the ClickHouse server and execute a query to create a new database and a new table:

CREATE DATABASE articles ; CREATE TABLE articles.events ( event_date Date, event_time DateTime, event_type Enum8('VIEWS' = 1, 'CLICKS' = 2), site_id Int32, article_id Int32, ip String, city String, user_uuid String, referer String, utm String ) engine=MergeTree(event_date, (site_id, event_type, article_id), 8192)

Let us explain some parameters of this request:

MergeTreeis a table engine. There are alsoLog,CollapsingMergeTree,SummingMergeTree,ReplacingMergeTreeand others.- The first parameter,

event_dateindicates the name of a Date column containing a date. (site_id, event_type, article_id)- a tuple defining the primary key of the table (index).

Most of the read requests plan to indicate which site we need data for, so site_id used first in the index.

Now we will try to create a connection to the ClickHouse server, a database and a table through our PHP driver . To do this, first install the driver.

Installing a stable driver assembly can be done through composer :composer require smi2/phpclickhouse

or clone the driver from the main (master) branch of the Git repository:git clone https://github.com/smi2/phpClickHouse.git

More detailed information on driver installation is available in the driver documentation , which also contains a description of driver functions and ChangeLog.

After the driver has been successfully installed, we perform a request to connect to the server, create a database and a table:

<?php // $config=['host'=>'192.168.1.20','port'=>'8123','username'=>'default','password'=>'']; // $client=new \ClickHouseDB\Client($config); // $client->ping(); // $client->write('CREATE DATABASE IF NOT EXISTS articles'); $client->write("CREATE TABLE IF NOT EXISTS articles.events ( event_date Date, event_time DateTime, event_type Enum8('VIEWS' = 1, 'CLICKS' = 2), site_id Int32, article_id Int32, ip String, city String, user_uuid String, referer String, utm String ) engine=MergeTree(event_date, (site_id, event_type, article_id), 8192) "); // default $client->database('articles'); // print_r($client->showTables()); Please note that requests in the driver are divided into the following:

- a record

- data insertion

- reading

Inserts and reads can be performed in parallel.

Requests to write and insert data do not contain a response, only checks are made that the server’s response was positive. Requests for reading the answer contain (the exception is the direct recording of the response to the file).

Inserting data, including from a TSV file

Insert the data that we will use for testing:

$client->insert('events', [ [date('Ym-d'), time(), 'CLICKS', 1, 1234, '192.168.1.1', 'Moscow', 'xcvfdsazxc', '', ''], [date('Ym-d'), time(), 'CLICKS', 1, 1235, '192.168.1.1', 'Moscow', 'xcvfdsazxc', 'http://yandex.ru', ''], [date('Ym-d'), time(), 'CLICKS', 1, 1236, '192.168.1.1', 'Moscow', 'xcvfdsazxc', '', ''], [date('Ym-d'), time(), 'CLICKS', 1, 1237, '192.168.1.1', 'Moscow', 'xcvfdsazxc', '', ''], ], [ 'event_date', 'event_time', 'event_type', 'site_id', 'article_id', 'ip', 'city', 'user_uuid', 'referer', 'utm' ] ); This insert method is suitable only for small tables or tables of reference books, since in this case the array will be converted to a row.

Get the result of inserting data:

print_r( $client->select('SELECT * FROM events')->rows() ); More information about reading data is written below. To insert more lines, we will use direct loading of the TSV file that will be generated during the event. To do this, we will record the TSV file on the server where the events occur, and to simplify sending it to ClickHouse.

Suppose that we have a certain UserEvent class that allows us to obtain all the necessary data for insertion, the data is checked for validity inside the class:

$row = [ 'event_date' => $userEvent->getDate(), 'event_time' => $userEvent->getTime(), 'event_type' => $userEvent->getType(), 'site_id' => $userEvent->getSiteId(), 'article_id' => $userEvent->getArticleId(), 'ip' => $userEvent->getIp(), 'city' => $userEvent->getCity(), 'user_uuid' => $userEvent->getUserUuid(), 'referer' => $userEvent->getReferer(), 'utm' => $userEvent->getUtm(), ]; We will write to a file that is rotated every minute in the following way (we assume all the flaws - write errors, locks, etc. - the line is always written):

// $filename='/tmp/articles.events_version1_'.date("YmdHi").'.TSV'; // TabSeparated $text=\ClickHouseDB\FormatLine::TSV($row)."\n"; // CSV // $text=\ClickHouseDB\FormatLine::CSV($row)."\n"; file_put_contents($filename,$text,FILE_APPEND); On GitHub for tests, the UserEvent class emulator is made and an example of using this class with writing to the base.

Suppose that we have accumulated 5-10 such files, and we are ready to send them to the database:

$file_data_names= [ '/tmp/articles.events_version1_201612121201.TSV', '/tmp/articles.events_version1_201612121301.TSV', '/tmp/articles.events_version1_201612121401.TSV' ] // $client->enableHttpCompression(true); // TSV- ClickHouse $result_insert = $client->insertBatchTSVFiles('events', [$file_data_names], [ 'event_date', 'event_time', 'event_type', 'site_id', 'article_id', 'ip', 'city', 'user_uuid', 'referer', 'utm' ]); // , foreach ($file_data_names as $fileName) { echo $fileName . " : " . $result_insert[$fileName]->totalTimeRequest() . "\n"; } It is worth noting that working with CSV files is also supported. For them, you need to use the insertBatchFiles() function, similar to the insertBatchTSVFiles() function. However, when using TSV files, there is an additional possibility to insert the date and time in the DateTime field in the unix timestamp format. See the ClickHouse documentation for more information about the support for the TabSeparated format.

ClickHouse uses the CSV format corresponding to RFC 4180 . At the same time, standard PHP tools, namely the fputcsv() function, does not fully comply with the format requirements (see error report ).

To fully support the TSV and CSV files, we implemented array-to-string converters: FormatLine::CSV() and FormatLine::TSV() , which use the ClickHouse ability to store data in columns in the form of arrays.

With large amounts of data inserted from data files, we enable compression mode. In this case, streaming compression is used without creating temporary files, which saves server server network resources, slightly increasing the CPU load. The data transfer rate increases, and the total time spent on processing a single file is reduced several times.

In our example, for each row we pass the event_date field, although the same date is passed in the event_time field. You can save resources and don’t transfer fields each time that you can calculate on the ClickHouse server from another field. For more information about defaults, see the ClickHouse documentation .

The utm field will be filled from the referer field, if it contains utm_campaign, through the extractURLParameter(referer,'utm_campaign') function extractURLParameter(referer,'utm_campaign') .

Recreate the table:

CREATE TABLE articles.events ( event_date Date DEFAULT toDate(event_time), event_time DateTime, event_type Enum8('VIEWS' = 1, 'CLICKS' = 2), site_id Int32, article_id Int32, ip String, city String, user_uuid String, referer String, utm String DEFAULT extractURLParameter(referer, 'utm_campaign') ) engine=MergeTree(event_date, (site_id, event_type,article_id), 8192) Change the record:

$client->insert('events', [ [time(), 'CLICKS', 1, 1234, '192.168.1.11', 'Moscow', 'user_11', ''], [time(), 'VIEWS' , 1, 1237, '192.168.1.1', 'Tobruk', 'user_32', 'http://smi2.ru?utm_campaign=CM1'], [time(), 'VIEWS' , 1, 1237, '192.168.1.1', 'Gisborne', 'user_12', 'http://smi2.ru?utm_campaign=CM1'], [time(), 'VIEWS' , 1, 1237, '192.168.1.1', 'Moscow', 'user_43', 'http://smi2.ru?utm_campaign=CM3'], ], ['event_time', 'event_type', 'site_id', 'article_id', 'ip', 'city', 'user_uuid', 'referer'] ); Reading data

Less words - more code! .. Let's give a simple example of how two requests are executed in parallel through a driver:

$state1 = $db->selectAsync('SELECT 1 AS ping'); $state2 = $db->selectAsync('SELECT 2 AS ping'); // ClickHouse $db->executeAsync(); // print_r($state1->rows()) print_r($state2->rows()) Option without asynchrony:

$statement = $db->select(''SELECT 33 AS ping'); The result of requests is a Statement object that can do the following:

// $statement->count(); // LIMIT- (rows_before_limit_at_least) $statement->countAll(); // $statement->fetchOne(); // "" , SELECT WITH TOTALS print_r($statement->totals()); // print_r($statement->rows()); // , , curl print_r($statement->totalTimeRequest()); // curl_info print_r($statement->responseInfo()); // , ClickHouse print_r($result->statistics()); Let's try to read our data. Suppose we need to calculate how many unique users viewed articles by day:

SELECT event_date, uniqCombined(user_uuid) AS count_users FROM events WHERE site_id=1 GROUP BY event_date ORDER BY event_date LIMIT 4 How many users who viewed articles clicked:

SELECT user_uuid, count() AS clicks FROM articles.events WHERE event_type IN ( 'CLICKS' ) AND site_id = 1 AND user_uuid IN ( SELECT user_uuid FROM articles.events WHERE event_type IN ( 'VIEWS' ) AND site_id = 1 GROUP BY user_uuid ) GROUP BY user_uuid LIMIT 5 What UTM tags gave the most views and clicks:

SELECT utm, countIf(event_type IN('VIEWS')) AS views, countIf(event_type IN('CLICKS')) AS clicks FROM events WHERE event_date = today() AND site_id = 1 GROUP BY utm ORDER BY views DESC LIMIT 15 Using external data to process a request

Suppose that we need to calculate how many unique users have viewed article X per day , in which several article identifiers are listed in X. This can be done like this:

WHERE article_id IN (1,2,3,4,5,6,7,8,9) In this example, everything will work fine. But what if the identifiers are thousands or tens of thousands? In this case, ClickHouse functionality is useful, which allows you to use external data to process the request .

Consider this opportunity ClickHouse example. Create a CSV file '/tmp/articles_list.csv' , in which we list all the necessary article_id for the article_id request, and ask ClickHouse to create a temporary namex table containing one column:

$whereIn = new \ClickHouseDB\WhereInFile(); $whereIn->attachFile('/tmp/articles_list.csv', 'namex', ['article_id' => 'Int32'], \ClickHouseDB\WhereInFile::FORMAT_CSV); Then the contents of the CSV file can be used on the server:

$sql = " SELECT article_id, countIf(event_type='CLICKS') AS count_clicks, countIf(event_type='VIEWS') AS count_views FROM articles.events WHERE article_id IN (SELECT article_id FROM namex) GROUP BY article_id ORDER BY count_views DESC "; $result = $db->select($sql, [], $whereIn); See this example on github .

Also, the attachFile() function supports TabSeparated and TabSeparatedWithNames formats.

What's next

On this, we, perhaps, will complete the first part of our story about ClickHouse.

You can find out a lot of useful information about ClickHouse in the Google group .

If you have comments or you have found errors, typos - welcome to the world of open-source, we will wait for your pull request for this article . If you like data analysis and you are interested in working with data and ClickHouse - welcome to our team;)

We plan to do a series of materials on our experience with ClickHouse .

The plans - the following topics.

Part 2:

- Connect to a ClickHouse cluster from PHP

- Sending requests to the cluster, the implementation of migrations to PHP

Part 3:

- Using MySQL dictionaries in ClickHouse

- Table engines: CollapsingMergeTree, SummingMergeTree, MaterializedView

Part 4:

- Examples of requests in ClickHouse on open data media2

- Sampling data in ClickHouse

')

Source: https://habr.com/ru/post/314558/

All Articles