Recommendations based on product images

In this article I want to walk through a practical example of building a very simple recommender system based on the similarity of product images. It is intended for readers who would like to try applying deep learning, specifically convolutional neural networks, in a simple, interesting and practically useful project but don't know where to start.

Background

What pushed me to write this prototype was shopping for T-shirts in an online store. After scrolling through 1,000 of the 11,000 products, I got a bit tired. I really wanted to be able to search for products similar to the ones I had already picked, and the store's built-in recommendation system was of no help. So I decided to hack together my own version and see how it works on real data.

Parsing images

To start, I wrote a simple scraper for the images in this category. The 250x250 previews were saved into a single folder with file names of the form ItemID.jpg, which came to about 11,000 pictures.
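The scraper itself is not part of the article. As a rough idea of the saving step only, here is a standard-library sketch that assumes you already have a dict mapping item IDs to preview URLs. The names `previews`, `fetch_bytes` and `save_previews` are hypothetical, and extracting the URLs from the store's pages is not shown:

```python
import os
import urllib.request


def fetch_bytes(url):
    """Download a URL and return the response body as bytes."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.read()


def save_previews(previews, out_dir, fetch=fetch_bytes):
    """Save each preview image as <out_dir>/<ItemID>.jpg.

    previews: hypothetical dict {item_id: preview_url}.
    fetch: function url -> bytes; swappable so the saving logic can be tested offline.
    Returns the list of paths written during this call.
    """
    os.makedirs(out_dir, exist_ok=True)
    written = []
    for item_id, url in previews.items():
        path = os.path.join(out_dir, "{}.jpg".format(item_id))
        if os.path.exists(path):
            continue  # already downloaded, so re-runs skip it
        try:
            data = fetch(url)
        except OSError as exc:  # network errors (URLError subclasses OSError)
            print("failed:", item_id, exc)
            continue
        with open(path, "wb") as fh:
            fh.write(data)
        written.append(path)
    return written
```

Naming files by item ID is what later lets the notebook map each image back to its product.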


Feature extraction

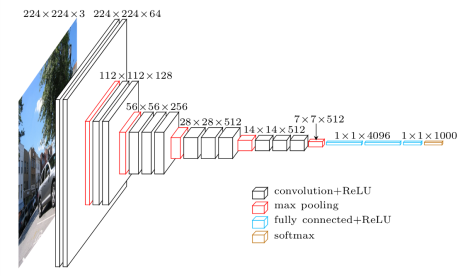

To measure how similar images are, we first need vector representations of them. For this we take an image-classification convolutional neural network (VGG-16) that has already been trained on a large dataset (ImageNet) and cut off its last layer, the one that outputs the probability of each of the 1000 ImageNet classes. What remains outputs a 4096-dimensional vector for each image.
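What "cutting off the last layer" means can be shown without Keras. The toy sketch below is not VGG-16: it uses random weights and made-up sizes in NumPy, but has the same structure of a hidden ReLU layer followed by a softmax classification layer. The embedding is simply the hidden layer's output, computed without the final layer:

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy stand-in for a pretrained classifier: input -> hidden (ReLU) -> class probabilities (softmax).
# Sizes are illustrative only; in VGG-16 the hidden layer has 4096 units and there are 1000 classes.
IN_DIM, HIDDEN_DIM, N_CLASSES = 32, 16, 10
W1 = rng.normal(size=(IN_DIM, HIDDEN_DIM))
W2 = rng.normal(size=(HIDDEN_DIM, N_CLASSES))


def hidden_features(x):
    """Network with the classification layer removed: this is the embedding."""
    return np.maximum(x @ W1, 0.0)  # ReLU


def class_probabilities(x):
    """Full network: embedding followed by the softmax classification layer."""
    logits = hidden_features(x) @ W2
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)


batch = rng.normal(size=(5, IN_DIM))  # 5 fake, already flattened "images"
emb = hidden_features(batch)
probs = class_probabilities(batch)
print(emb.shape, probs.shape)  # (5, 16) (5, 10)
```

In the real model the analogous embedding is the output of the second 4096-unit fully connected layer.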

The prototype is most convenient to build in an IPython notebook; I strongly recommend it if you haven't tried one yet.

I used this code as the starting point: gist.github.com/baraldilorenzo/07d7802847aaad0a35d3

Load the libraries:

```python
%matplotlib inline
# Keras 1.x API with the Theano backend (channels-first images)
from keras.models import Sequential
from keras.layers.core import Flatten, Dense, Dropout
from keras.layers.convolutional import Convolution2D, MaxPooling2D, ZeroPadding2D
from keras.optimizers import SGD
import cv2
import numpy as np
import os
import h5py
from matplotlib import pyplot as plt
import theano
theano.config.openmp = True
```

Load VGG-16 pretrained on ImageNet and remove its last layer:

```python
def VGG_16(weights_path=None):
    model = Sequential()
    model.add(ZeroPadding2D((1,1),input_shape=(3,224,224)))
    model.add(Convolution2D(64, 3, 3, activation='relu'))
    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(64, 3, 3, activation='relu'))
    model.add(MaxPooling2D((2,2), strides=(2,2)))

    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(128, 3, 3, activation='relu'))
    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(128, 3, 3, activation='relu'))
    model.add(MaxPooling2D((2,2), strides=(2,2)))

    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(256, 3, 3, activation='relu'))
    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(256, 3, 3, activation='relu'))
    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(256, 3, 3, activation='relu'))
    model.add(MaxPooling2D((2,2), strides=(2,2)))

    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(512, 3, 3, activation='relu'))
    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(512, 3, 3, activation='relu'))
    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(512, 3, 3, activation='relu'))
    model.add(MaxPooling2D((2,2), strides=(2,2)))

    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(512, 3, 3, activation='relu'))
    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(512, 3, 3, activation='relu'))
    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(512, 3, 3, activation='relu'))
    model.add(MaxPooling2D((2,2), strides=(2,2)))

    model.add(Flatten())
    model.add(Dense(4096, activation='relu'))
    model.add(Dropout(0.5))
    model.add(Dense(4096, activation='relu'))
    # The classification head is commented out, so the model outputs
    # the 4096-dimensional activation of the second fully connected layer:
    # model.add(Dropout(0.5))
    # model.add(Dense(1000, activation='softmax'))

    assert os.path.exists(weights_path), 'Model weights not found (see "weights_path" variable in script).'
    f = h5py.File(weights_path, 'r')  # open read-only
    for k in range(f.attrs['nb_layers']):
        if k >= len(model.layers):
            # we don't look at the last (fully-connected) layers in the savefile
            break
        g = f['layer_{}'.format(k)]
        weights = [g['param_{}'.format(p)] for p in range(g.attrs['nb_params'])]
        model.layers[k].set_weights(weights)
    f.close()
    print('Model loaded.')
    return model

model = VGG_16('../../keras/vgg16_weights.h5')
# The optimizer and loss don't matter here: the network is only used for inference
sgd = SGD(lr=0.1, decay=1e-6, momentum=0.9, nesterov=True)
model.compile(optimizer=sgd, loss='categorical_crossentropy')
```

Load the images and convert them into the format the network expects:

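```python
path = "../../keras/tshirts/out/"
ims = []
files = []
for f in os.listdir(path):
    # keep only .jpg files larger than 10,000 bytes
    if f.endswith(".jpg") and os.stat(path + f).st_size > 10000:
        try:
            im = cv2.resize(cv2.imread(path + f), (224, 224)).astype(np.float32)
            # plt.imshow(im)
            # plt.show()
            # subtract the ImageNet mean pixel; OpenCV loads images in BGR order
            im[:,:,0] -= 103.939
            im[:,:,1] -= 116.779
            im[:,:,2] -= 123.68
            im = im.transpose((2,0,1))       # HWC -> CHW (channels-first)
            im = np.expand_dims(im, axis=0)  # add the batch dimension
            ims.append(im)
            # record the name only after the image loaded successfully,
            # so that files[i] always corresponds to images[i]
            files.append(f)
        except Exception:
            print(f)  # unreadable image
images = np.vstack(ims)
```

Create dictionaries that map the store's external item IDs to our internal indices and back: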

```python
r1 = []
r2 = []
for i, x in enumerate(files):
    r1.append((int(x[:-4]), i))  # x[:-4] strips ".jpg", leaving the ItemID
    r2.append((i, int(x[:-4])))
extid_to_intid_dict = dict(r1)
intid_to_extid_dict = dict(r2)
```

Extract the feature vectors from the images:

```python
out = model.predict(images)
print(out)
print(out.shape)
```

The result is a 4096-dimensional vector for each of the 11,556 images:

```
[[ 0.00000000e+00  5.96046448e-08  4.35693979e+00 ...,  2.01165676e-07 -2.30967999e-07  5.48017263e+00]
 [-2.98023224e-08 -1.78813934e-07  5.60834265e+00 ...,  2.01165676e-07  7.45058060e-09  9.42541122e+00]
 [ 8.94069672e-08  0.00000000e+00  8.79157162e+00 ...,  2.01165676e-07 -2.30967999e-07  8.50830841e+00]
 ...,
 [ 5.17337513e+00 -5.96046448e-08  6.89156103e+00 ...,  2.01165676e-07  7.45058060e-09  1.49011612e-08]
 [ 3.18071890e+00 -1.78813934e-07 -5.96046448e-08 ...,  2.01165676e-07 -2.30967999e-07  1.49011612e-08]
 [ 8.19161701e+00  5.96046448e-08  9.62305927e+00 ..., -3.72529030e-08 -2.30967999e-07  7.47453260e+00]]
(11556, 4096)
```

Search for similar images
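Closeness here is measured with cosine distance, which compares only the direction of two feature vectors $\mathbf{u}$ and $\mathbf{v}$, not their length:

$$
d_{\cos}(\mathbf{u}, \mathbf{v}) = 1 - \frac{\mathbf{u} \cdot \mathbf{v}}{\lVert \mathbf{u} \rVert_2 \, \lVert \mathbf{v} \rVert_2}
$$

It is 0 for vectors pointing in the same direction and at most 2; this is the definition scikit-learn uses for `metric='cosine'`.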

Find the images closest to a given one by cosine distance:

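```python
from sklearn.metrics.pairwise import pairwise_distances

extid = 875317
i = extid_to_intid_dict[extid]
print(i)
# OpenCV loads BGR; convert to RGB so matplotlib shows the right colors
plt.imshow(cv2.cvtColor(cv2.imread(path + files[i]), cv2.COLOR_BGR2RGB))
plt.show()

# out[i:i+1] keeps the query 2-D, as pairwise_distances expects
dist = pairwise_distances(out[i:i+1], out, metric='cosine', n_jobs=1)
top = np.argsort(dist[0])[0:7]  # the query itself comes first (distance 0)
for t in top:
    print(t, dist[0][t])
    plt.imshow(cv2.cvtColor(cv2.imread(path + files[t]), cv2.COLOR_BGR2RGB))
    plt.show()
```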
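If you would rather avoid the scikit-learn dependency, the same search can be sketched in NumPy alone. `top_k_cosine` is a hypothetical helper, not part of the original code: it normalizes every vector to unit length once, after which a single matrix-vector product gives the cosine similarity to all images.

```python
import numpy as np


def top_k_cosine(vectors, i, k=7):
    """Return (indices, distances) of the k rows closest to row i by cosine distance.

    The query row itself comes first with distance ~0.
    """
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    unit = vectors / np.maximum(norms, 1e-12)  # guard against all-zero rows
    dist = 1.0 - unit @ unit[i]                # cosine distance to every row
    top = np.argsort(dist)[:k]
    return top, dist[top]


feats = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]])
idx, d = top_k_cosine(feats, 0, k=3)
print(idx)  # [0 1 2]
```

With the feature matrix from above, `top_k_cosine(out, i)` should give the same ranking as the `pairwise_distances` version, up to floating-point differences.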

Web interface for testing

Testing all this in IPython isn't very convenient, so I decided to build a web interface with Django.

Save the necessary data for use in the web interface:

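```python
import joblib

# Save the ID maps and the feature matrix for the web app
joblib.dump((extid_to_intid_dict, intid_to_extid_dict, out),
            "../../keras/tshirts/models/wo_1_layer.pkl")

# In the web app, load it once at startup:
# extid_to_intid_dict, intid_to_extid_dict, out = joblib.load("../../keras/tshirts/models/wo_1_layer.pkl")
```

At startup, the web app loads this data into memory and, for each request, finds the 5 nearest neighbors.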

Here is the result. When the page is opened without a parameter, it picks a random image. It shows the 5 nearest images for three distance metrics: “cosine”, “euclidean” and “manhattan”.
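The Django views themselves are not shown in the article. A framework-independent core of such a service could look roughly like the sketch below, where `SimilarItems` and its methods are hypothetical names. It keeps the ID maps and feature matrix in memory, picks a random item when no ID is given, and returns the 5 nearest items for each of the three metrics, excluding the query itself:

```python
import numpy as np


class SimilarItems:
    """Keeps feature vectors in memory and answers nearest-neighbor queries."""

    def __init__(self, extid_to_intid, intid_to_extid, vectors, seed=None):
        self.extid_to_intid = extid_to_intid
        self.intid_to_extid = intid_to_extid
        self.vectors = np.asarray(vectors, dtype=np.float32)
        norms = np.linalg.norm(self.vectors, axis=1, keepdims=True)
        self.unit = self.vectors / np.maximum(norms, 1e-12)  # precomputed for cosine
        self.rng = np.random.default_rng(seed)

    def _distances(self, i, metric):
        q = self.vectors[i]
        if metric == "cosine":
            return 1.0 - self.unit @ self.unit[i]
        if metric == "euclidean":
            return np.linalg.norm(self.vectors - q, axis=1)
        if metric == "manhattan":
            return np.abs(self.vectors - q).sum(axis=1)
        raise ValueError("unknown metric: {}".format(metric))

    def recommend(self, extid=None, k=5, metrics=("cosine", "euclidean", "manhattan")):
        """Return (query extid, {metric: [k nearest extids]}), excluding the query."""
        if extid is None:  # no parameter in the request: pick a random item
            i = int(self.rng.integers(len(self.vectors)))
            extid = self.intid_to_extid[i]
        else:
            i = self.extid_to_intid[extid]
        result = {}
        for metric in metrics:
            order = np.argsort(self._distances(i, metric))
            order = [j for j in order if j != i][:k]  # drop the query image
            result[metric] = [self.intid_to_extid[int(j)] for j in order]
        return extid, result
```

In a Django project, one instance could be created when the app starts (from the tuple saved with `joblib.dump`) and reused by the view on every request, so the feature matrix is not reloaded each time.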

In my opinion, it turned out quite well.

Interestingly, a neural network trained to classify ImageNet images can “understand” what is printed on a T-shirt and pick out images with similar content.

For example:

Cats

Bears

People

Fruits

Similar style

Similar texture

Source: https://habr.com/ru/post/314490/
