How the Python parser works, and how to reduce memory consumption by three times

Anyone who has studied the programming language device has an idea of how they work: the parser, in accordance with the formal grammar of the PL, turns the input text into a kind of tree view that the subsequent steps work with (semantic analysis, various transformations and code generation).

In Python, everything is a bit more complicated: there are two parsers. The first parser is guided by the grammar specified in the

It is easiest to illustrate the work of the first parser with an example. Suppose we have a program

(The following description of the operation of the parser would be convenient to follow this listing - for example, by opening it in the adjacent tab.)

')

The parser starts parsing with the

From the initial state of this spacecraft there is only one arc

To work with KSD in Python there is a standard

In its source code (

It is easy to guess that such a uniform code could be automatically generated by a formal grammar. It is a little harder to guess that such a code is already generated automatically - this is how the automata used by the first parser work! Above, I then explained his device in detail in order to explain how, in March of this year, I proposed replacing all these sheets of handwritten code that need to be edited each time the grammar changes, with a run of all the same automatically generated SCs that are used the parser itself. This is to talk about whether programmers need to know the algorithms.

In June, my patch was accepted, so in Python 3.6+ the above sheets in

Working with such a bulky and redundant QDC, as was shown above, is rather inconvenient; and therefore the second parser (

Instead of working with KSD using the

As soon as the SDA is created - the CFC is no longer necessary, and all the memory occupied by it is released; therefore, for a “long-running” Python program, there is not much value for how much memory the KSD takes. On the other hand, for large, but “quick-firing” scripts (for example, searching for a value in a huge

The need to go through long “bamboo branches” makes the

Repeated many times throughout the second parser

But does something interfere right away during the creation of the KSD to remove the “one-child" nodes, substituting their children instead? After all, it will save a lot of memory, and simplify the second parser, allowing you to get rid of repeating the

In March, along with the aforementioned patch for

The compressed QDC for our sample code contains a total of 21 nodes:

With my patch, a standard set of benchmarks shows a reduction in memory consumption by the

But alas, for half a year since I offered my patch, none of the main maintainers have ever dared to revisit it - it’s so big and scary. In September, Guido van Rossum himself answered me : “Once in all this time, no one showed interest in the patch,” which means that no one else cares about the memory used by the parser. So it makes no sense to waste the time on the reviewers. ”

I hope this article explains why my big and scary patch is really necessary and useful; and hopefully, after this explanation, the Python community will reach into his hands.

In Python, everything is a bit more complicated: there are two parsers. The first parser is guided by the grammar specified in the

Grammar/Grammar file as regular expressions (with a slightly different syntax). According to this grammar, during the compilation of python using Parser/pgen , a whole set of finite state machines is generated that recognize the given regular expressions — one spacecraft for each nonterminal. The format of the resulting set of spacecraft is described in Include/grammar.h , and the spacecraft itself is defined in Python/graminit.c , in the form of a global structure _PyParser_Grammar . Terminal symbols are defined in Include/token.h , and they correspond to the numbers 0..56; non-terminal numbers start at 256.It is easiest to illustrate the work of the first parser with an example. Suppose we have a program

if 42: print("Hello world") .Here is the part of the grammar that corresponds to our program.

single_input: NEWLINE | simple_stmt | compound_stmt NEWLINE

compound_stmt: if_stmt | while_stmt | for_stmt | try_stmt | with_stmt | funcdef | classdef | decorated | async_stmt

if_stmt: 'if' test ':' suite ('elif' test ':' suite) * ['else' ':' suite]

suite: simple_stmt | NEWLINE INDENT stmt + DEDENT

simple_stmt: small_stmt (';' small_stmt) * [';'] NEWLINE

small_stmt: expr_stmt | del_stmt | pass_stmt | flow_stmt | import_stmt | global_stmt | nonlocal_stmt | assert_stmt

expr_stmt: testlist_star_expr (annassign | augassign (yield_expr | testlist) | ('=' (yield_expr | testlist_star_expr)) *)

testlist_star_expr: (test | star_expr) (',' (test | star_expr)) * [',']

test: or_test ['if' or_test 'else' test] | lambdef

or_test: and_test ('or' and_test) *

and_test: not_test ('and' not_test) *

not_test: 'not' not_test | comparison

comparison: expr (comp_op expr) *

expr: xor_expr ('|' xor_expr) *

xor_expr: and_expr ('^' and_expr) *

and_expr: shift_expr ('&' shift_expr) *

shift_expr: arith_expr (('<<' | '>>') arith_expr) *

arith_expr: term (('+' | '-') term) *

term: factor (('*' | '@' | '/' | '%' | '//') factor) *

factor: ('+' | '-' | '~') factor | power

power: atom_expr ['**' factor]

atom_expr: [AWAIT] atom trailer *

atom: '(' [yield_expr | testlist_comp] ')' | '[' [testlist_comp] ']' | '{' [dictorsetmaker] '}' |

NAME | NUMBER | STRING + | '...' | 'None' | 'True' | 'False'

trailer: '(' [arglist] ')' | '[' subscriptlist ']' | '.' NAME

arglist: argument (',' argument) * [',']

argument: test [comp_for] | test '=' test | '**' test | '*' test

And this is how the parts of the _PyParser_Grammar structure in Python are defined as such:

static arc arcs_0_0[3] = { {2, 1}, {3, 1}, {4, 2}, }; static arc arcs_0_1[1] = { {0, 1}, }; static arc arcs_0_2[1] = { {2, 1}, }; static state states_0[3] = { {3, arcs_0_0}, {1, arcs_0_1}, {1, arcs_0_2}, }; //... static arc arcs_39_0[9] = { {91, 1}, {92, 1}, {93, 1}, {94, 1}, {95, 1}, {19, 1}, {18, 1}, {17, 1}, {96, 1}, }; static arc arcs_39_1[1] = { {0, 1}, }; static state states_39[2] = { {9, arcs_39_0}, {1, arcs_39_1}, }; //... static arc arcs_41_0[1] = { {97, 1}, }; static arc arcs_41_1[1] = { {26, 2}, }; static arc arcs_41_2[1] = { {27, 3}, }; static arc arcs_41_3[1] = { {28, 4}, }; static arc arcs_41_4[3] = { {98, 1}, {99, 5}, {0, 4}, }; static arc arcs_41_5[1] = { {27, 6}, }; static arc arcs_41_6[1] = { {28, 7}, }; static arc arcs_41_7[1] = { {0, 7}, }; static state states_41[8] = { {1, arcs_41_0}, {1, arcs_41_1}, {1, arcs_41_2}, {1, arcs_41_3}, {3, arcs_41_4}, {1, arcs_41_5}, {1, arcs_41_6}, {1, arcs_41_7}, }; //... static dfa dfas[85] = { {256, "single_input", 0, 3, states_0, "\004\050\340\000\002\000\000\000\012\076\011\007\262\004\020\002\000\300\220\050\037\102"}, //... {270, "simple_stmt", 0, 4, states_14, "\000\040\200\000\002\000\000\000\012\076\011\007\000\000\020\002\000\300\220\050\037\100"}, //... {295, "compound_stmt", 0, 2, states_39, "\000\010\140\000\000\000\000\000\000\000\000\000\262\004\000\000\000\000\000\000\000\002"}, {296, "async_stmt", 0, 3, states_40, "\000\000\040\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000"}, {297, "if_stmt", 0, 8, states_41, "\000\000\000\000\000\000\000\000\000\000\000\000\002\000\000\000\000\000\000\000\000\000"}, // ^^^ //... }; static label labels[176] = { {0, "EMPTY"}, {256, 0}, {4, 0}, // №2 {270, 0}, // №3 {295, 0}, // №4 //... {305, 0}, // №26 {11, 0}, // №27 //... {297, 0}, // №91 {298, 0}, {299, 0}, {300, 0}, {301, 0}, {296, 0}, {1, "if"}, // №97 //... }; grammar _PyParser_Grammar = { 86, dfas, {176, labels}, 256 }; (The following description of the operation of the parser would be convenient to follow this listing - for example, by opening it in the adjacent tab.)

')

The parser starts parsing with the

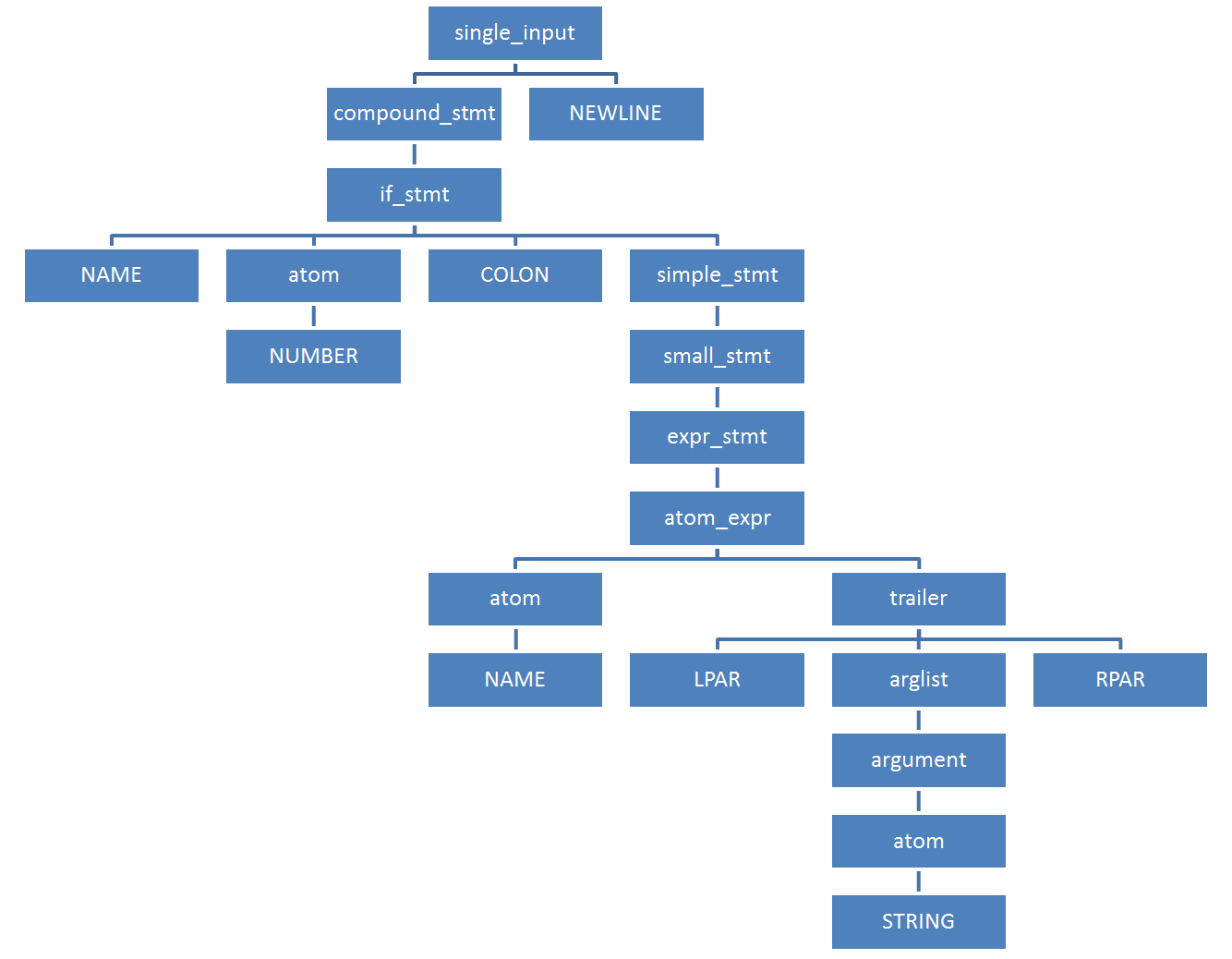

single_input ; this spacecraft is defined by the states_0 array of three states. Three arcs ( arcs_0_0 ) correspond to the initial state, corresponding to the input symbols NEWLINE (label No. 2, symbol No. 4), simple_stmt (label No. 3, symbol No. 270) and compound_stmt (label No. 4, symbol No. 295). The input terminal "if" (symbol No. 1, label No. 97) is included in the d_first set for compound_stmt , but not for simple_stmt — it corresponds to the \ 002 bit in the 13th byte of the set. (If during parsing it turns out that the next terminal enters the d_first sets for several outgoing arcs at once, then the parser displays a message stating that the grammar is ambiguous and refuses to continue parsing.) So, the parser goes over the {4, 2} arc to the state No. 2, and simultaneously switches to the spacecraft compound_stmt specified by the states_39 array. In this KA, nine arcs go out of the initial state at once ( arcs_39_0 ); among them we choose the arc {91, 1} corresponding to the input symbol if_stmt ( if_stmt 297). The parser goes to the state # 1 and switches to the if_stmt spacecraft specified by the states_41 array.

From the initial state of this spacecraft there is only one arc

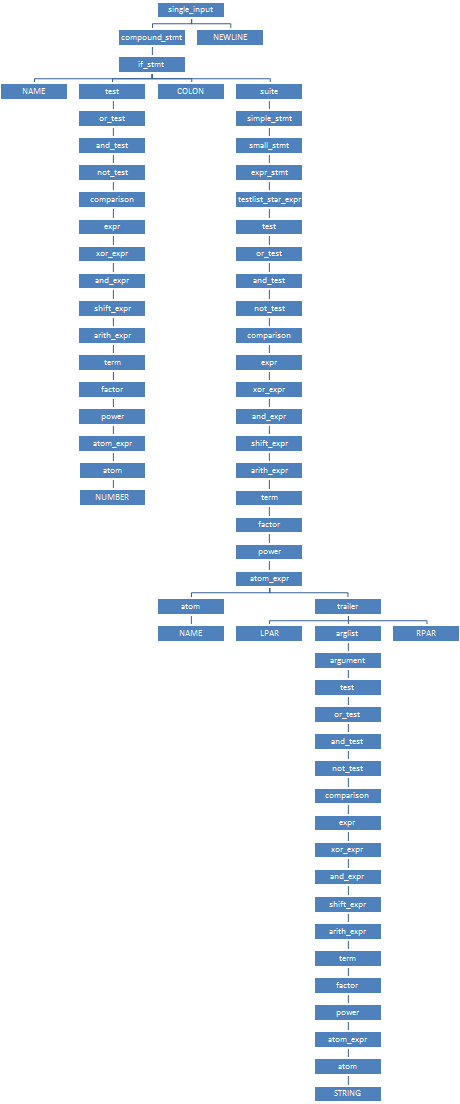

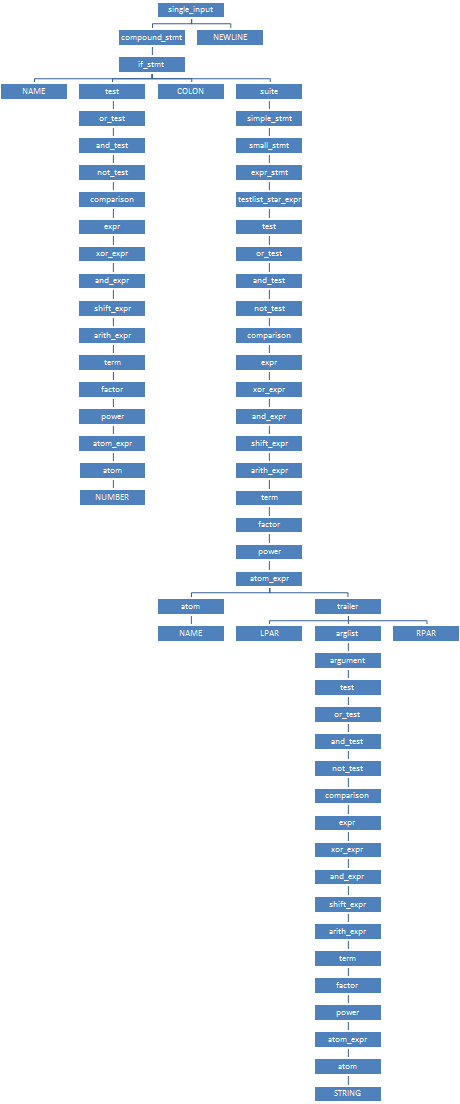

{97, 1} "if" . According to it, the parser goes to the state # 1, from where the single arc {26, 2} , which corresponds to the non-terminal test (# 305), again comes out. Along this arc, the parser goes to state # 2 and switches to the spacecraft test , and so on. After a long-long transformation of the number 42 into a nonterminal test , the parser will return to the state # 2, from which the only arc {27, 3} , corresponding to the COLON terminal (symbol # 11), will continue and will continue to parse the nonterminal if_stmt . As a result of its parsing, the parser will create a node of a specific syntactic tree ( node structure), which will have node type 297, and four children - types 1 ( NAME ), 305 ( test ), 11 ( COLON ) and 304 ( suite ). In state # 4, the creation of a node for if_stmt completed, the parser returns to state # 1 of the KA compound_stmt , and the newly created node for if_stmt becomes the only child of the node corresponding to compound_stmt . The entire QDC of our program will look like the one shown on the right. Notice how the short program of the seven terminals NAME NUMBER COLON NAME LPAR STRING RPAR turned into a "bamboo" - a huge, almost unbranching parse tree from as many as 64 nodes! If you count in bytes, then the source program takes 27 bytes, and its QDC is two orders of magnitude more: one and a half kilobytes on a 32-bit system, and three - on a 64-bit one! (64 nodes of 24 or 48 bytes each). It is because of the rampant KSD that trying to skip source files of just a few tens of megabytes through python results in a fatal MemoryError .To work with KSD in Python there is a standard

parser module: $ python Python 3.7.0a0 (default:98c078fca8e0, Oct 31 2016, 08:33:23) [GCC 4.7.3] on linux Type "help", "copyright", "credits" or "license" for more information. >>> import parser >>> parser.suite('if 42: print("Hello world")').tolist() [257, [269, [295, [297, [1, 'if'], [305, [309, [310, [311, [312, [315, [316, [317, [318, [319, [320, [321, [322, [323, [324, [2, '42']]]]]]]]]]]]]]]], [11, ':'], [304, [270, [271, [272, [274, [305, [309, [310, [311, [312, [315, [316, [317, [318, [319, [320, [321, [322, [323, [324, [1, 'print']], [326, [7, '('], [334, [335, [305, [309, [310, [311, [312, [315, [316, [317, [318, [319, [320, [321, [322, [323, [324, [3, '"Hello world"']]]]]]]]]]]]]]]]]], [8, ')']]]]]]]]]]]]]]]]]]], [4, '']]]]]], [4, ''], [0, '']] >>> In its source code (

Modules/parsermodule.c ), there were> 2000 handwritten lines to check the QDC for compliance with the Python grammar, which looked like this: //... /* simple_stmt | compound_stmt * */ static int validate_stmt(node *tree) { int res = (validate_ntype(tree, stmt) && validate_numnodes(tree, 1, "stmt")); if (res) { tree = CHILD(tree, 0); if (TYPE(tree) == simple_stmt) res = validate_simple_stmt(tree); else res = validate_compound_stmt(tree); } return (res); } static int validate_small_stmt(node *tree) { int nch = NCH(tree); int res = validate_numnodes(tree, 1, "small_stmt"); if (res) { int ntype = TYPE(CHILD(tree, 0)); if ( (ntype == expr_stmt) || (ntype == del_stmt) || (ntype == pass_stmt) || (ntype == flow_stmt) || (ntype == import_stmt) || (ntype == global_stmt) || (ntype == nonlocal_stmt) || (ntype == assert_stmt)) res = validate_node(CHILD(tree, 0)); else { res = 0; err_string("illegal small_stmt child type"); } } else if (nch == 1) { res = 0; PyErr_Format(parser_error, "Unrecognized child node of small_stmt: %d.", TYPE(CHILD(tree, 0))); } return (res); } /* compound_stmt: * if_stmt | while_stmt | for_stmt | try_stmt | with_stmt | funcdef | classdef | decorated */ static int validate_compound_stmt(node *tree) { int res = (validate_ntype(tree, compound_stmt) && validate_numnodes(tree, 1, "compound_stmt")); int ntype; if (!res) return (0); tree = CHILD(tree, 0); ntype = TYPE(tree); if ( (ntype == if_stmt) || (ntype == while_stmt) || (ntype == for_stmt) || (ntype == try_stmt) || (ntype == with_stmt) || (ntype == funcdef) || (ntype == async_stmt) || (ntype == classdef) || (ntype == decorated)) res = validate_node(tree); else { res = 0; PyErr_Format(parser_error, "Illegal compound statement type: %d.", TYPE(tree)); } return (res); } //... It is easy to guess that such a uniform code could be automatically generated by a formal grammar. It is a little harder to guess that such a code is already generated automatically - this is how the automata used by the first parser work! Above, I then explained his device in detail in order to explain how, in March of this year, I proposed replacing all these sheets of handwritten code that need to be edited each time the grammar changes, with a run of all the same automatically generated SCs that are used the parser itself. This is to talk about whether programmers need to know the algorithms.

In June, my patch was accepted, so in Python 3.6+ the above sheets in

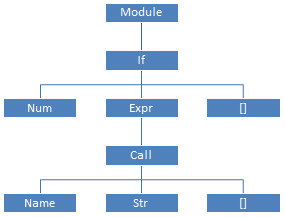

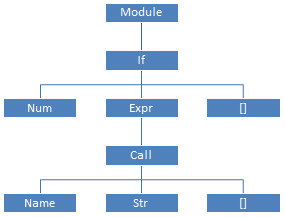

Modules/parsermodule.c are no longer there, but then there are a few dozen lines of my code.Working with such a bulky and redundant QDC, as was shown above, is rather inconvenient; and therefore the second parser (

Python/ast.c ), written entirely by hand, bypasses the CFC and creates an abstract syntax tree from it . The grammar of the SDA is described in the Parser/Python.asdl ; for our program, the SDA will be as shown on the right.

Instead of working with KSD using the

parser module, the documentation advises using the ast module and working with an abstract tree: $ python Python 3.7.0a0 (default:98c078fca8e0, Oct 31 2016, 08:33:23) [GCC 4.7.3] on linux Type "help", "copyright", "credits" or "license" for more information. >>> import ast >>> ast.dump(ast.parse('if 42: print("Hello world")')) "Module(body=[If(test=Num(n=42), body=[Expr(value=Call(func=Name(id='print', ctx=Load()), args=[Str(s='Hello world')], keywords=[]))], orelse=[])])" >>> As soon as the SDA is created - the CFC is no longer necessary, and all the memory occupied by it is released; therefore, for a “long-running” Python program, there is not much value for how much memory the KSD takes. On the other hand, for large, but “quick-firing” scripts (for example, searching for a value in a huge

dict literal), the size of the CFC will determine the memory consumption of the script. All this in addition to the fact that the size of the KSD determines whether there is enough memory to load and run the program.The need to go through long “bamboo branches” makes the

Python/ast.c just disgusting: static expr_ty ast_for_expr(struct compiling *c, const node *n) { //... loop: switch (TYPE(n)) { case test: case test_nocond: if (TYPE(CHILD(n, 0)) == lambdef || TYPE(CHILD(n, 0)) == lambdef_nocond) return ast_for_lambdef(c, CHILD(n, 0)); else if (NCH(n) > 1) return ast_for_ifexpr(c, n); /* Fallthrough */ case or_test: case and_test: if (NCH(n) == 1) { n = CHILD(n, 0); goto loop; } // case not_test: if (NCH(n) == 1) { n = CHILD(n, 0); goto loop; } // not_test case comparison: if (NCH(n) == 1) { n = CHILD(n, 0); goto loop; } // comparison case star_expr: return ast_for_starred(c, n); /* The next five cases all handle BinOps. The main body of code is the same in each case, but the switch turned inside out to reuse the code for each type of operator. */ case expr: case xor_expr: case and_expr: case shift_expr: case arith_expr: case term: if (NCH(n) == 1) { n = CHILD(n, 0); goto loop; } return ast_for_binop(c, n); // case yield_expr: case factor: if (NCH(n) == 1) { n = CHILD(n, 0); goto loop; } return ast_for_factor(c, n); case power: return ast_for_power(c, n); default: PyErr_Format(PyExc_SystemError, "unhandled expr: %d", TYPE(n)); return NULL; } /* should never get here unless if error is set */ return NULL; } Repeated many times throughout the second parser

if (NCH(n) == 1) n = CHILD(n, 0); - sometimes, as in this function, inside the cycle - means “if the current node has only one child, then instead of the current node it is necessary to consider its child”.But does something interfere right away during the creation of the KSD to remove the “one-child" nodes, substituting their children instead? After all, it will save a lot of memory, and simplify the second parser, allowing you to get rid of repeating the

goto loop; all over the code, from the look of which Dijkstra would have been spinning in a coffin!In March, along with the aforementioned patch for

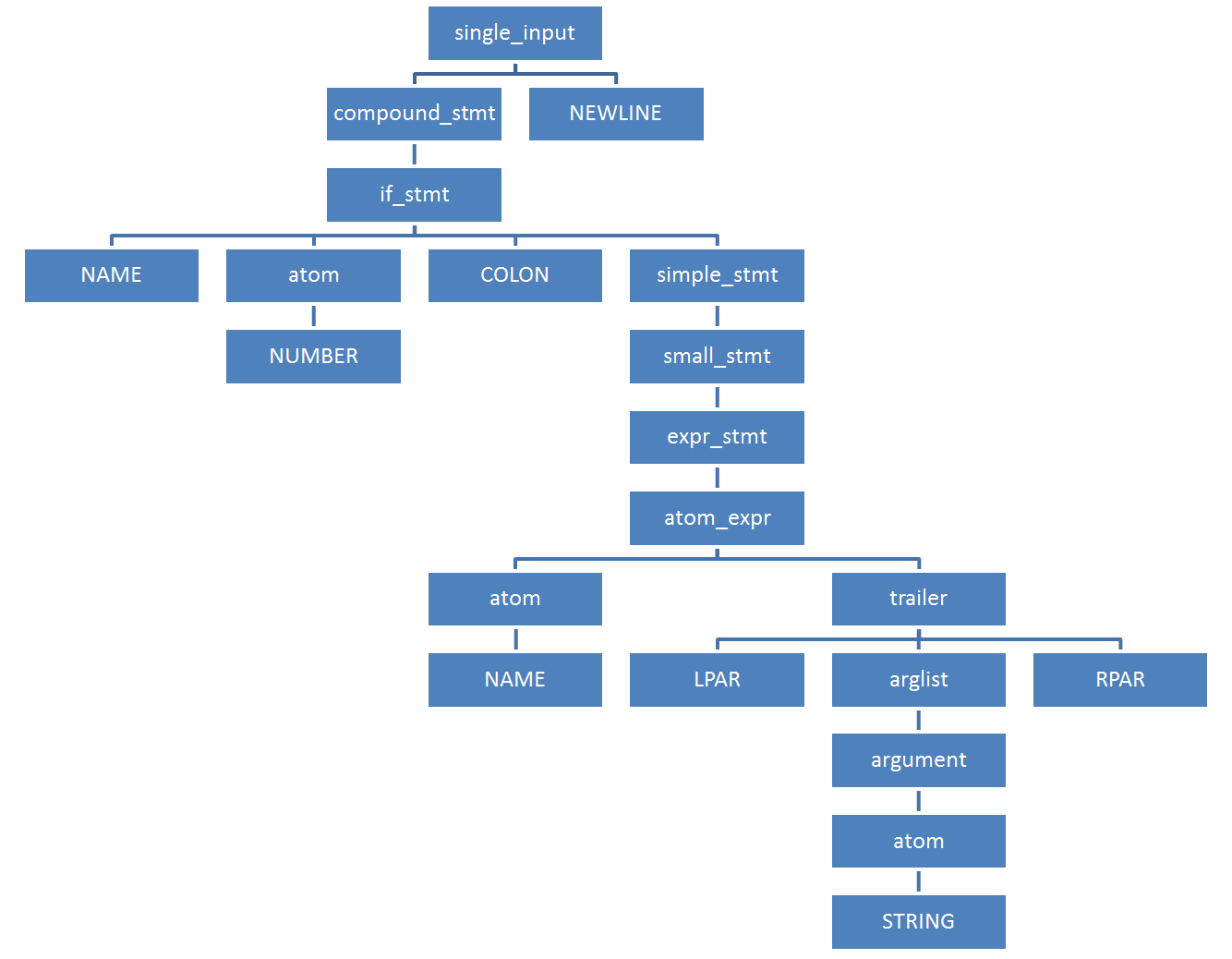

Modules/parsermodule.c , I proposed another patch , which:- An automatic “compression” of non-branching subtrees is added to the first parser — at the moment of convolution (creating the KSD node and returning from the current spacecraft to the previous one), the “one-child” node of a suitable type is replaced with its child:

diff -r ffc915a55a72 Parser/parser.c --- a/Parser/parser.c Mon Sep 05 00:01:47 2016 -0400 +++ b/Parser/parser.c Mon Sep 05 08:30:19 2016 +0100 @@ -52,16 +52,16 @@ #ifdef Py_DEBUG static void s_pop(stack *s) { if (s_empty(s)) Py_FatalError("s_pop: parser stack underflow -- FATAL"); - s->s_top++; + PyNode_Compress(s->s_top++->s_parent); } #else /* !Py_DEBUG */ -#define s_pop(s) (s)->s_top++ +#define s_pop(s) PyNode_Compress((s)->s_top++->s_parent) #endif diff -r ffc915a55a72 Python/ast.c --- a/Python/ast.c Mon Sep 05 00:01:47 2016 -0400 +++ b/Python/ast.c Mon Sep 05 08:30:19 2016 +0100 @@ -5070,3 +5056,24 @@ FstringParser_Dealloc(&state); return NULL; } + +void PyNode_Compress(node* n) { + if (NCH(n) == 1) { + node* ch; + switch (TYPE(n)) { + case suite: case comp_op: case subscript: case atom_expr: + case power: case factor: case expr: case xor_expr: + case and_expr: case shift_expr: case arith_expr: case term: + case comparison: case testlist_star_expr: case testlist: + case test: case test_nocond: case or_test: case and_test: + case not_test: case stmt: case dotted_as_name: + if (STR(n) != NULL) + PyObject_FREE(STR(n)); + ch = CHILD(n, 0); + *n = *ch; + // All grandchildren are now adopted; don't need to free them, + // so no need for PyNode_Free + PyObject_FREE(ch); + } + } +} - Corrects the second parser accordingly, excluding the “bamboo branches” bypass from it: for example, the above

ast_for_exprfunctionast_for_exprreplaced with:static expr_ty ast_for_expr(struct compiling *c, const node *n) { //... switch (TYPE(n)) { case lambdef: case lambdef_nocond: return ast_for_lambdef(c, n); case test: case test_nocond: assert(NCH(n) > 1); return ast_for_ifexpr(c, n); case or_test: case and_test: assert(NCH(n) > 1); // case not_test: assert(NCH(n) > 1); // not_test case comparison: assert(NCH(n) > 1); // comparison case star_expr: return ast_for_starred(c, n); /* The next five cases all handle BinOps. The main body of code is the same in each case, but the switch turned inside out to reuse the code for each type of operator. */ case expr: case xor_expr: case and_expr: case shift_expr: case arith_expr: case term: assert(NCH(n) > 1); return ast_for_binop(c, n); // case yield_expr: case factor: assert(NCH(n) > 1); return ast_for_factor(c, n); case power: return ast_for_power(c, n); case atom: return ast_for_atom(c, n); case atom_expr: return ast_for_atom_expr(c, n); default: PyErr_Format(PyExc_SystemError, "unhandled expr: %d", TYPE(n)); return NULL; } /* should never get here unless if error is set */ return NULL; }

On the other hand, many nodes as a result of the “compression of branches” children can now have new types, and therefore in many places in the code one has to add new conditions. - Since the “compressed QDC” no longer corresponds to the Python grammar, to verify its correctness in

Modules/parsermodule.cinstead of “raw”_PyParser_Grammarnow need to use automata corresponding to the “transitive closure” of the grammar: for example, for a pair of products

—The following "transitive" products are added:or_test :: = and_test test :: = or_test 'if' or_test 'else' test

test :: = or_test 'if' and_test 'else' test test :: = and_test 'if' or_test 'else' test test :: = and_test 'if' and_test 'else' test

During the initialization of theparsermodule, theInit_ValidationGrammarfunction bypasses the auto-generated spacecraft in_PyParser_Grammar, creates “transitively closed” spacecraft on the basis of them, and stores them in theValidationGrammarstructure.ValidationGrammarused to check the correctness of the CFC.

The compressed QDC for our sample code contains a total of 21 nodes:

$ python Python 3.7.0a0 (default:98c078fca8e0+, Oct 31 2016, 09:00:27) [GCC 4.7.3] on linux Type "help", "copyright", "credits" or "license" for more information. >>> import parser >>> parser.suite('if 42: print("Hello world")').tolist() [257, [295, [297, [1, 'if'], [324, [2, '42']], [11, ':'], [270, [271, [272, [323, [324, [1, 'print']], [326, [7, '('], [334, [335, [324, [3, '"Hello world"']]]], [8, ')']]]]], [4, '']]]], [4, ''], [0, '']] >>> With my patch, a standard set of benchmarks shows a reduction in memory consumption by the

python process up to 30% , with unchanged operating time. In degenerate examples, the reduction in memory consumption reaches threefold !

But alas, for half a year since I offered my patch, none of the main maintainers have ever dared to revisit it - it’s so big and scary. In September, Guido van Rossum himself answered me : “Once in all this time, no one showed interest in the patch,” which means that no one else cares about the memory used by the parser. So it makes no sense to waste the time on the reviewers. ”

I hope this article explains why my big and scary patch is really necessary and useful; and hopefully, after this explanation, the Python community will reach into his hands.

Source: https://habr.com/ru/post/314062/

All Articles