Speeding up the HeatonResearchNeural library (neural networks) 30 times

Hello! I want to share a short story about reworking HeatonResearchNeural, a library of various neural networks. I should say up front that I work as an analyst and stopped being a real programmer 10 years ago.

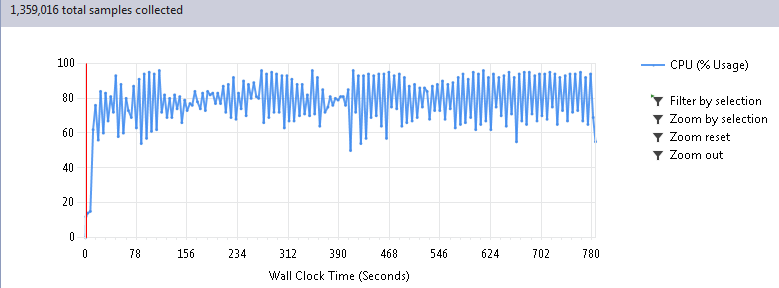

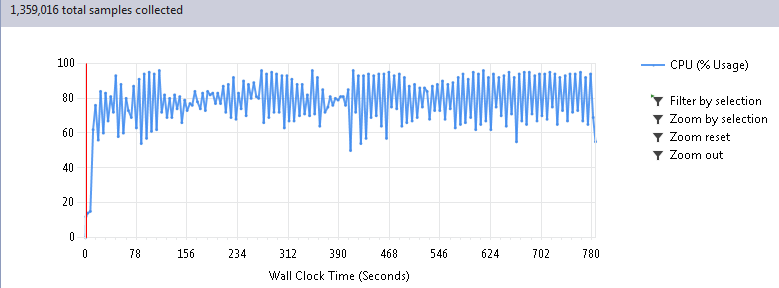

However, I have my own project in C#, which I develop in my free time. To avoid reinventing the wheel, I once downloaded HeatonResearchNeural, attached it with duct tape, and calmly ran tests, refined the logic of my own code, and so on. For maximum speed I built parallel computation into the architecture of the solution, and when I saw the CPU load at 80-90%, a pleasant warmth spread through my body: everyone is hard at work, everyone is busy!

On the other hand, my data volumes are large, so I had to wait a long time for each run to finish. I was even thinking about buying a second server when it suddenly occurred to me to look under the hood of the library with a profiler. The idea did not come out of nowhere, but under the impression of this article by the respected Habr community.

At first I was sure of my own code. And a neural network library is obviously the kind of thing that has to fly, otherwise it could not handle serious tasks. However, just as you find most of the symptoms of the most dreadful diseases in yourself after reading a medical reference book, I decided to check and went through the code with the profiler.

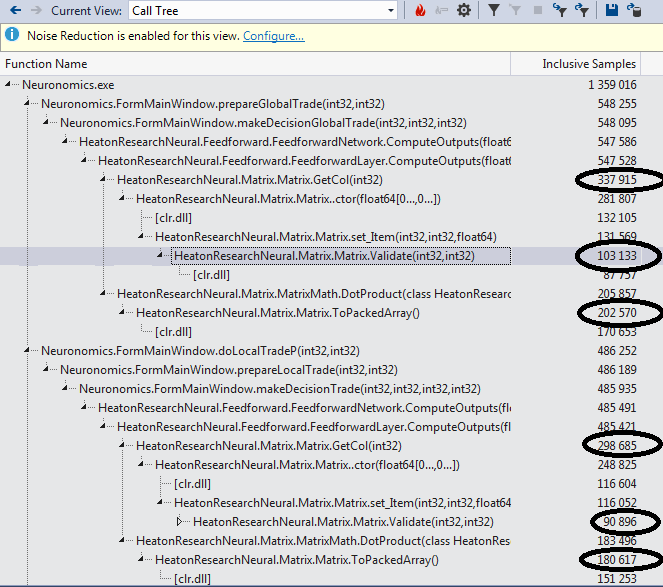

When the profiler pointed at library functions, a cold anxiety stirred in my heart. Surely the developers of such a good library thought about speed, right? Or is the world ruled not by a secret lodge but by plain sloppiness? To answer this question, let's take a closer look at the result of running 100 cycles of my program, which consists almost entirely of neural network work:

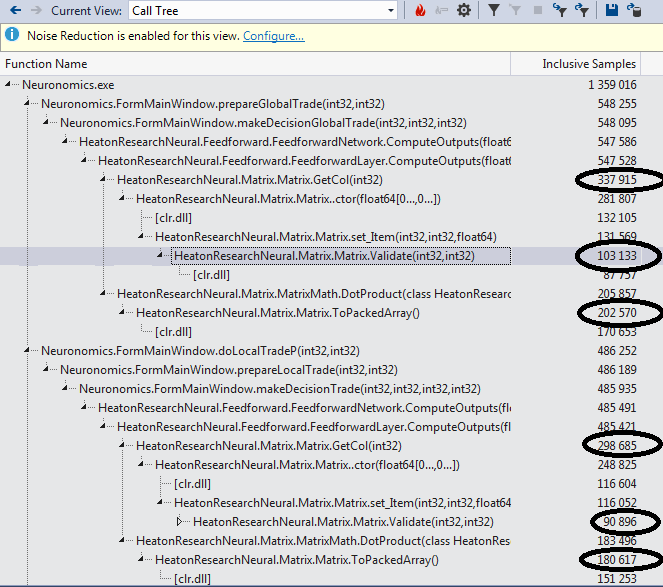

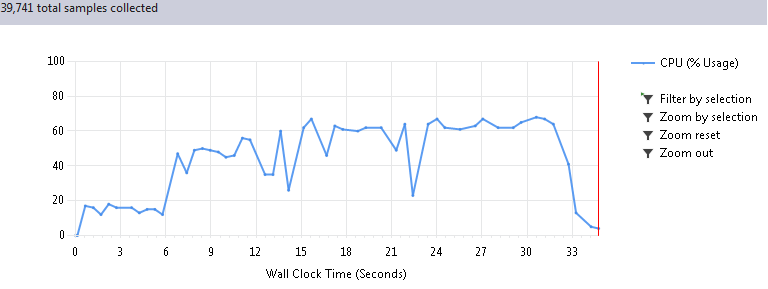

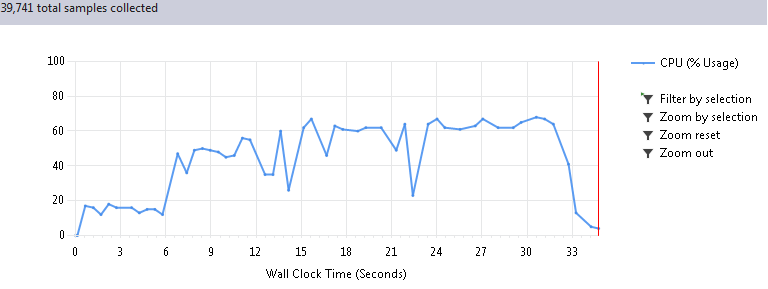

We drop into the Call Tree report and find the most expensive functions:

We open them and see a truly heartbreaking sight. If you are not entirely sure of your nerves, you may want to look away and not watch how the GetCol function goes about extracting a vector from the matrix:

```csharp
public Matrix GetCol(int col)
{
    if (col > this.Cols)
    {
        throw new MatrixError("Can't get column #" + col
            + " because it does not exist.");
    }
    // Allocates a fresh Rows x 1 array and copies the column into it on every call.
    double[,] newMatrix = new double[this.Rows, 1];
    for (int row = 0; row < this.Rows; row++)
    {
        newMatrix[row, 0] = this.matrix[row, col];
    }
    return new Matrix(newMatrix);
}
```

And all of that only to hand the result over to DotProduct:

```csharp
for (i = 0; i < this.next.NeuronCount; i++)
{
    Matrix.Matrix col = this.matrix.GetCol(i);
    double sum = MatrixMath.DotProduct(col, inputMatrix);
    this.next.SetFire(i, this.activationFunction.ActivationFunction(sum));
}
```

We starve this parasite with two precise slashes and pass the whole matrix, together with the index of the column we need, to DotProduct:

```csharp
//Matrix.Matrix col = this.matrix.GetCol(i);
double sum = MatrixMath.DotProduct(this.matrix, i, inputMatrix);
```

And inside, instead of this elegant lacework:

```csharp
public static double DotProduct(Matrix a, Matrix b)
{
    if (!a.IsVector() || !b.IsVector())
    {
        throw new MatrixError(
            "To take the dot product, both matrixes must be vectors.");
    }

    // Both operands are copied into flat arrays before the loop.
    Double[] aArray = a.ToPackedArray();
    Double[] bArray = b.ToPackedArray();

    if (aArray.Length != bArray.Length)
    {
        throw new MatrixError(
            "To take the dot product, both matrixes must be of the same length.");
    }

    double result = 0;
    int length = aArray.Length;

    for (int i = 0; i < length; i++)
    {
        result += aArray[i] * bArray[i];
    }

    return result;
}
```

we hack out crude code, as plain as an axe:

```csharp
public static double DotProduct(Matrix a, int i, Matrix b)
{
    double result = 0;

    if (!b.IsVector())
    {
        throw new MatrixError(
            "To take the dot product, both matrixes must be vectors.");
    }
    // b must be a row vector whose length matches the height of column i of a.
    if (a.Rows != b.Cols || b.Rows != 1)
    {
        throw new MatrixError(
            "To take the dot product, both matrixes must be of the same length.");
    }

    int rows = a.Rows; // read the row count once, outside the loop
    for (int r = 0; r < rows; r++)
    {
        // Read column i of a in place instead of copying it out first.
        result += a[r, i] * b[0, r];
    }

    return result;
}
```

It also makes sense to comment out the validators that check for going out of the array bounds: in my case the sizes are fixed at compile time, so it is hard to get them wrong, and the checks eat a remarkable amount of time.

```csharp
public double this[int row, int col]
{
    get
    {
        //Validate(row, col);
        return this.matrix[row, col];
    }
    set
    {
        //Validate(row, col);
        if (double.IsInfinity(value) || double.IsNaN(value))
        {
            throw new MatrixError("Trying to assign invalud number to matrix: "
                + value);
        }
        this.matrix[row, col] = value;
    }
}
```

So, the exciting moment: we run the same 100 program cycles again. When I saw the effect of these simple changes, I nearly fainted with joy. It is a strange feeling, as if someone had just handed you 30 servers for free (which, it turns out, had been standing in your closet all along; you just never thought to look there).

The first 6 seconds on the graph are data preparation, so the actual running time dropped from 780 to 26 seconds, with exactly the same result, naturally. That is a 30-fold speedup!
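If commenting the validators out entirely feels too drastic, one possible middle ground is to keep them in debug builds only. The sketch below is not the library's code: `CheckedMatrix` is a hypothetical stand-in for its `Matrix` class, and the NaN/infinity check from the original setter is left out for brevity. It relies on the standard `System.Diagnostics.ConditionalAttribute`, which makes the compiler drop calls to the marked method unless the `DEBUG` symbol is defined.

```csharp
using System;
using System.Diagnostics;

// Hypothetical stand-in for the library's Matrix class; only the indexer is sketched.
public sealed class CheckedMatrix
{
    private readonly double[,] matrix;

    public CheckedMatrix(int rows, int cols)
    {
        matrix = new double[rows, cols];
    }

    public int Rows => matrix.GetLength(0);
    public int Cols => matrix.GetLength(1);

    // Calls to this method are compiled away unless DEBUG is defined,
    // so a Release build pays nothing for the check.
    [Conditional("DEBUG")]
    private void Validate(int row, int col)
    {
        if (row < 0 || row >= Rows || col < 0 || col >= Cols)
        {
            throw new IndexOutOfRangeException(
                "Cell [" + row + "," + col + "] is outside the matrix.");
        }
    }

    public double this[int row, int col]
    {
        get
        {
            Validate(row, col);
            return matrix[row, col];
        }
        set
        {
            Validate(row, col);
            matrix[row, col] = value;
        }
    }
}
```

Even with the explicit check gone, the runtime still bounds-checks every access to a `double[,]`, so a bad index in a Release build throws `IndexOutOfRangeException` rather than silently corrupting memory; what is lost is only the friendlier error message.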

So practice once again shows that Murphy's laws are rock solid: if something can go wrong, there is no doubt that it will. It is also worth noting that some types of networks may not work with code modified this way, so test it and take that into account if necessary. Thank you all for your attention. I hope this helps someone in the fight against the uncontrollably growing entropy of the universe and of code.
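One way to do that testing is to check that the in-place column dot product returns the same values as the copy-based one, and to time both. The following is a minimal sketch on plain `double[,]` arrays rather than the library's `Matrix` type; `ColumnDotSketch`, `DotWithCopy`, `DotInPlace`, `SameResults` and `TimeMs` are all hypothetical names introduced here for illustration. Both variants add the products in the same order, so on current .NET runtimes they would be expected to agree exactly.

```csharp
using System;
using System.Diagnostics;

public static class ColumnDotSketch
{
    // Copy-based variant: extracts the column into a new array first,
    // in the spirit of GetCol followed by DotProduct.
    public static double DotWithCopy(double[,] weights, int col, double[] input)
    {
        int rows = weights.GetLength(0);
        double[] column = new double[rows];
        for (int r = 0; r < rows; r++)
        {
            column[r] = weights[r, col];
        }
        double sum = 0;
        for (int r = 0; r < rows; r++)
        {
            sum += column[r] * input[r];
        }
        return sum;
    }

    // In-place variant: reads the column directly, with no allocation.
    public static double DotInPlace(double[,] weights, int col, double[] input)
    {
        int rows = weights.GetLength(0);
        double sum = 0;
        for (int r = 0; r < rows; r++)
        {
            sum += weights[r, col] * input[r];
        }
        return sum;
    }

    // Returns true when both variants agree exactly on every column.
    public static bool SameResults(double[,] weights, double[] input)
    {
        for (int c = 0; c < weights.GetLength(1); c++)
        {
            if (DotWithCopy(weights, c, input) != DotInPlace(weights, c, input))
            {
                return false;
            }
        }
        return true;
    }

    // Times `repeats` passes over all columns using the given variant.
    public static long TimeMs(Func<double[,], int, double[], double> dot,
                              double[,] weights, double[] input, int repeats)
    {
        double sink = 0; // accumulate results so the work is not optimized away
        var sw = Stopwatch.StartNew();
        for (int n = 0; n < repeats; n++)
        {
            for (int c = 0; c < weights.GetLength(1); c++)
            {
                sink += dot(weights, c, input);
            }
        }
        sw.Stop();
        GC.KeepAlive(sink);
        return sw.ElapsedMilliseconds;
    }
}
```

Calling `ColumnDotSketch.SameResults(weights, input)` on representative data, and then comparing `TimeMs(ColumnDotSketch.DotWithCopy, ...)` with `TimeMs(ColumnDotSketch.DotInPlace, ...)`, gives a rough before/after picture. The sketch isolates only the column copy, so its ratio should not be expected to match the 30-fold figure measured on the real library.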

Source: https://habr.com/ru/post/313740/