Scalable nginx configuration

Igor Sysoev (isysoev)

My name is Igor Sysoev. I am the author of nginx and a co-founder of the company of the same name.

We continue to develop the open source version. Since the company was founded, the pace of development has increased significantly, because many more people now work on the product. Alongside the open source version, we provide paid support.


I'll talk about scalable nginx configuration, but not about how to serve hundreds of thousands of simultaneous connections with nginx, because nginx needs almost no configuration for that. You set an adequate number of worker processes (or use the "auto" mode) and set worker_connections to 100,000; after that, tuning the kernel is a much bigger task than configuring nginx. So I will talk about a different kind of scalability: the scalability of the nginx configuration itself, i.e. how to let the configuration grow from hundreds of lines to several thousand while spending minimal (preferably constant) time maintaining it.
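For reference, the two settings mentioned above might look like the following fragment. It is only a sketch: the value 100000 comes from the talk, and operating system limits (such as the number of open files) are left out because they belong to the kernel tuning the talk sets aside.

```nginx
# One worker process per CPU core, detected automatically.
worker_processes auto;

events {
    # Upper bound on simultaneous connections per worker process.
    worker_connections 100000;
}
```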

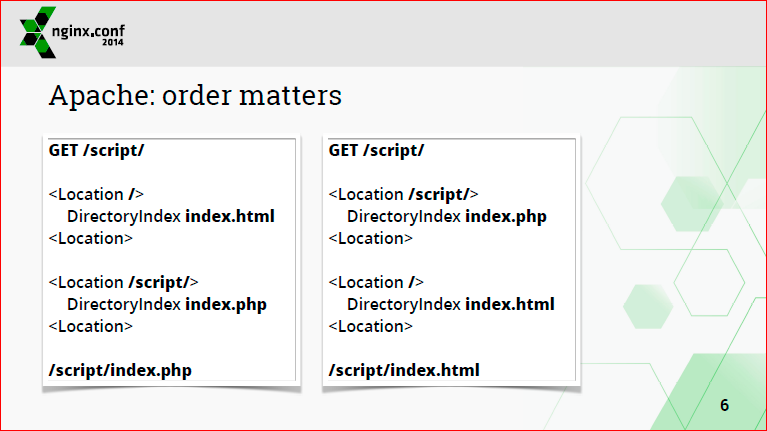

Why did this topic come up at all? About 15 years ago I started working at Rambler and administered servers, in particular apache. And apache has an unpleasant feature, which is well illustrated by the following two configurations:

There are two locations here, and they appear in a different order. The same request, depending on which configuration is used, will be handled by different files: either a php file or an html file. That is, in an apache configuration the order matters. And you cannot switch this off: when processing a request, apache goes through all the locations, tries to find those that match the request in some way, and collects the configuration from all of them. It merges them and, in the end, uses the result.

This is convenient if you have a small configuration, because you can make it even smaller. But as it grows, you run into the following problems. For example, if you add a new location at the end, everything works, but later you need to change the configuration in the middle or throw out an obsolete location from the middle. Then you have to review the entire configuration after that point to make sure everything still works as before. The configuration turns into a house of cards: by pulling out one card, we can bring down the whole structure.

To make the configuration even more hellish, apache has several more kinds of sections that work in exactly the same way. They are processed in a different order, but a single resulting configuration is assembled from all of them. All this happens at runtime, i.e. if you have many modules, each module merges its configuration on every request (this partially explains why nginx is faster than apache in some tests: nginx does not merge configuration at runtime). Some of these sections and most of the directives can be placed in .htaccess files scattered throughout the site, and to make life even more "interesting" for you and your colleagues, these files can be renamed, and then go and find that configuration ...

And the "cherry on the cake" is RewriteRules, which let you make the configuration look like sendmail's. Few people appreciate the joke, because, fortunately, most no longer know what that is.

RewriteRules are a nightmare. A lot of administrators come not so much with an apache background as with a background of administering apache on shared hosting, i.e. where the only administration tool was .htaccess. And there they write very intricate RewriteRules, which are very hard to understand because of both the syntax and the logic.

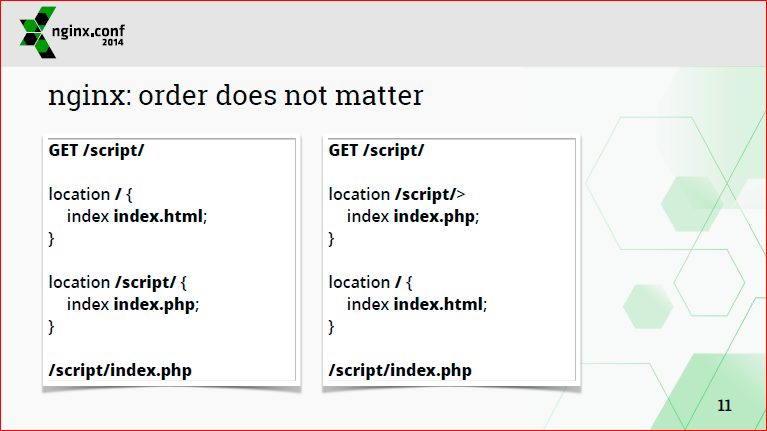

This was one of the drawbacks of apache that really annoyed me: it did not allow creating sufficiently large configurations. While developing nginx I wanted to change this; I fixed a lot of annoying apache features and added my own. Here is the previous example in nginx:

Unlike apache, the request will be processed the same way regardless of the order of the locations, because nginx looks for the longest possible match among the prefix locations (those not defined by a regular expression) and selects that location. The configuration of the selected location is used, and all other locations are ignored. This approach lets you write configurations with hundreds of locations without thinking about how each one affects everything else, i.e. we get a kind of container. You isolate processing in one small place.

Consider how nginx selects the configuration it will use to process a request. First of all, a suitable server block is searched for. The selection is made first by address and port, and then among all the server names associated with that address and port.

If you want to, say, place a server on several addresses, give it many server names, and have all these server names work on all the addresses, then you have to list all of those addresses for all of those names. After the server is selected, a suitable location is searched for inside the server. First, all prefix locations are checked and the longest match is found; then nginx checks whether there are locations defined by regular expressions. Since we cannot determine a "longest match" for regular expressions, the first location whose regular expression matches is chosen, and the configuration of that location is used. If no regular expression matches, the configuration of the longest matching prefix location found earlier is used.
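The slide with this example is not reproduced in the text, so here is only a minimal sketch of the idea. The server name, paths and location prefixes are placeholders, not the ones from the talk.

```nginx
server {
    listen 80;
    server_name example.com;   # placeholder name

    # A request for /images/logo.png matches both prefixes below.
    # nginx picks the longest matching prefix, /images/, and the
    # result is the same if the two blocks are swapped.
    location / {
        root /var/www/site;
    }

    location /images/ {
        root /var/www/static;
    }
}
```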
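The selection steps described above can be sketched as follows. The addresses, names, paths and the FastCGI backend are illustrative assumptions; FastCGI parameters are omitted for brevity.

```nginx
# Step 1: the server is chosen by address:port, then by server_name.
# Both servers repeat both addresses so that every name works on
# every address.
server {
    listen 192.0.2.1:80;
    listen 192.0.2.2:80;
    server_name example.com www.example.com;

    # Step 2: prefix locations are compared and the longest match
    # is remembered.
    location / {
        root /var/www/example.com;
    }

    location /docs/ {
        root /var/www/example.com;
    }

    # Step 3: regular expressions are tried in order of appearance;
    # the first one that matches wins over the remembered prefix.
    # /docs/guide.html -> location /docs/
    # /docs/index.php  -> this location
    location ~ \.php$ {
        fastcgi_pass 127.0.0.1:9000;   # hypothetical backend
    }
}

server {
    listen 192.0.2.1:80;
    listen 192.0.2.2:80;
    server_name example.org;

    location / {
        root /var/www/example.org;
    }
}
```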

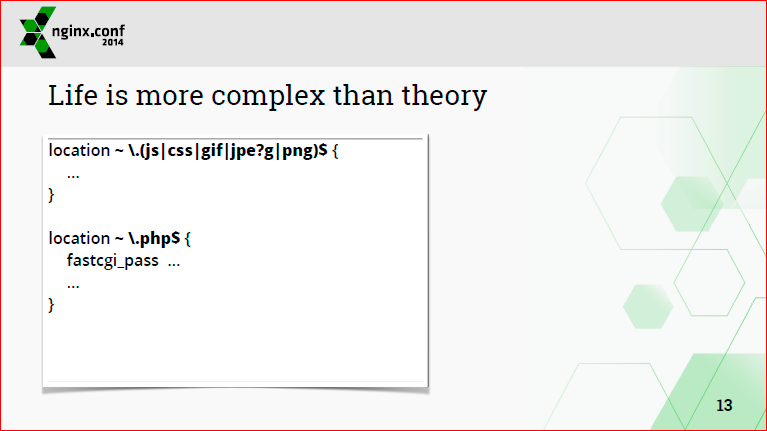

Regular expressions add a dependency on order and thus create poorly maintainable configurations. But life is more complicated than theory: very often the site structure is a dump of static files, scripts, etc. all mixed together, and in that case regular expressions are the only way to handle all the requests.

This slide illustrates how not to do it. If you already have sites like this, they need to be redone.

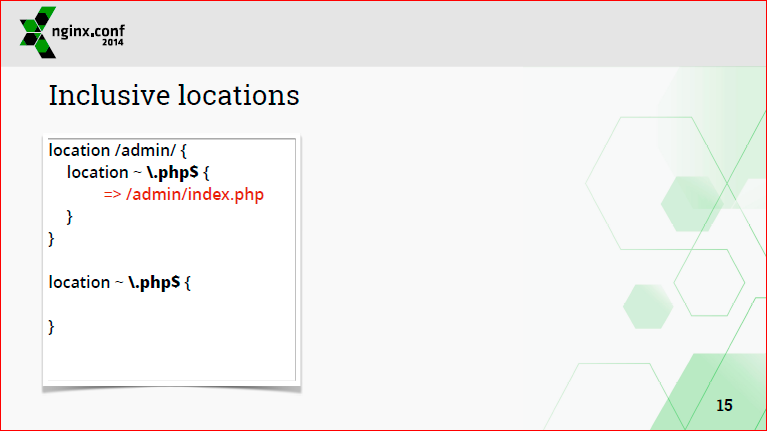

What I described above about selecting the configuration was the original design. Later it became possible to describe locations inside a location, i.e. nested locations, and the search order changed a bit. That is, first the longest matching prefix location is found, then the longest matching prefix location inside it. This recursive search continues until we reach a location with no matching locations nested inside it.

After that, we start checking regular expression locations in reverse order, i.e. starting from the most nested location we reached, we see whether it contains a matching regular expression. If not, we move out one level, and so on. Again, the first matching regular expression location wins. This approach allows processing like this:

We have two locations here with regular expressions, but for the request /admin/index.php the nested first location will be selected, not the second.
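A sketch of such a configuration, meant to sit inside a server block. The backend addresses are hypothetical and are different only to make it visible which location handled the request.

```nginx
location /admin/ {
    # For /admin/index.php the search first descends into the longest
    # matching prefix location, so this nested regex is tested first.
    location ~ \.php$ {
        fastcgi_pass 127.0.0.1:9001;   # hypothetical admin backend
    }
}

# Tested only if no regex inside the matched prefix location fits,
# e.g. for /index.php.
location ~ \.php$ {
    fastcgi_pass 127.0.0.1:9000;       # hypothetical main backend
}
```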

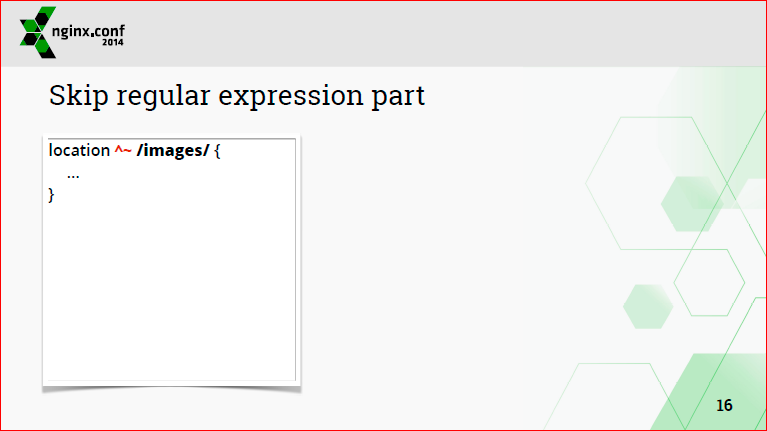

In addition, the second part of the search, the check of regular expressions, can be disabled by marking the location with the ^~ modifier:

Such a ban means that if this location showed the maximum match, then regular expressions will not be searched for after it.
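A short sketch of the modifier in use, inside a server block. The /static/ prefix, the path and the backend address are assumptions made for illustration.

```nginx
# If /static/ is the longest matching prefix, regular expressions
# are not checked at all, so /static/test.php is served as a plain
# file from /var/www/static/test.php.
location ^~ /static/ {
    root /var/www;
}

# Still handles .php requests everywhere else.
location ~ \.php$ {
    fastcgi_pass 127.0.0.1:9000;   # hypothetical backend
}
```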

Very often, people try to make the configuration smaller, i.e. they take out some common part of the configuration and simply redirect requests there. Here, for example, is a very bad way to throw everything into php processing:

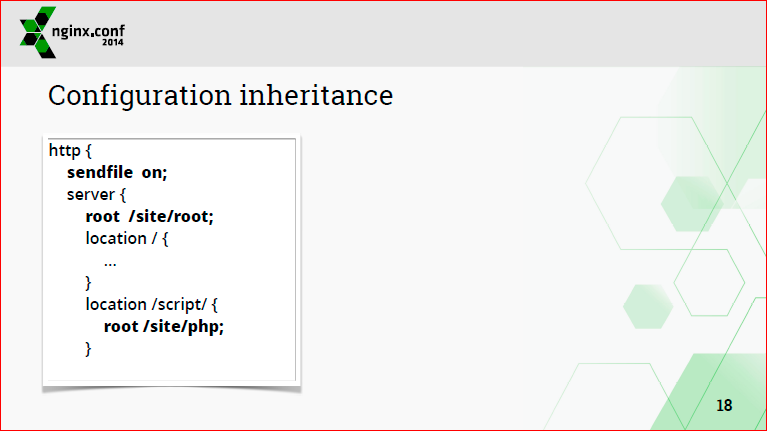

In nginx there are other methods for extracting common parts of the configuration. First of all, this is the inheritance of the configuration from the previous level. For example, here we can write at the http level to enable sendfile for all servers and all locations:

This configuration is inherited in all nested servers and locations. If we need to cancel sendfile somewhere, because, let's say, the file system does not support it, or for some other reason, then we can turn it off in a specific location or in a specific server.

Or, for example, we can set a common root at the server level and override it only where needed.
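Both kinds of inheritance can be sketched in one fragment. The paths and location names are placeholders; a complete configuration file would also need an events block.

```nginx
http {
    sendfile on;                       # inherited by every server and location

    server {
        listen 80;
        server_name example.com;
        root /var/www/example.com;     # common root for this server

        location / {
            # inherits sendfile on and the server-level root
        }

        location /nfs/ {
            sendfile off;              # switched off only here
        }

        location /downloads/ {
            root /data;                # /downloads/a.zip -> /data/downloads/a.zip
        }
    }
}
```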

This approach differs from apache in that we know specific places where we need to look for common parts that may affect our location.

The one thing that cannot be shared this way is locations: for example, you cannot describe a location at the http level. This was done deliberately. In apache you can do this, but it causes a lot of problems in practice.

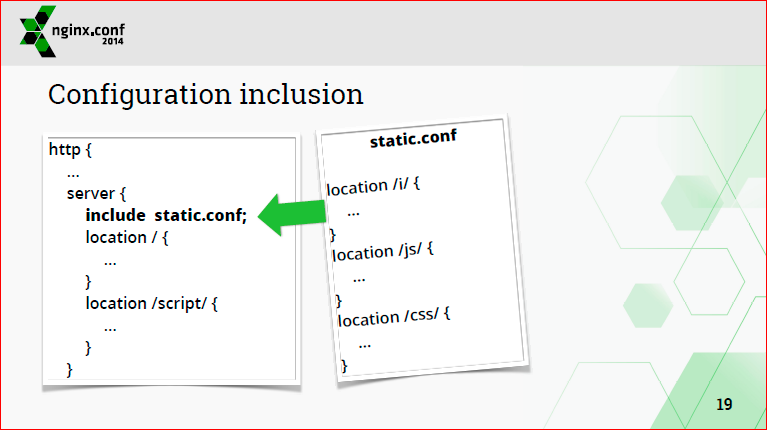

Personally, I prefer to describe locations explicitly, directly in the configuration. If you do not want to do this, you can include them from an external file.

Now I would like to talk about why people want to write less, i.e. why they factor out shared parts of the configuration. They believe they will spend less effort. In fact, what people want is not so much to write less as to spend less time. But they do not think about the future; they assume that if they write less now, it will be the same in the future ...
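Including locations from an external file might look like the sketch below. The file name is hypothetical; the included file would contain ordinary location blocks.

```nginx
server {
    listen 80;
    server_name example.com;

    # The contents of the file are inserted here as if they were
    # written inline; a relative path is resolved against the
    # directory of the main configuration file.
    include conf.d/common-locations.conf;   # hypothetical file
}
```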

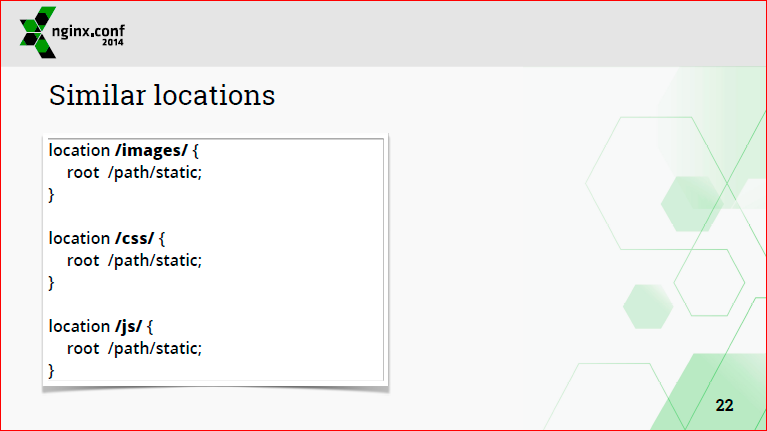

The correct approach is to use copy-paste. That is, each location should contain all the directives needed to process it.

The usual argument of DRY (Don't Repeat Yourself) lovers is that if you need to fix something, you fix it in one place and everything will be fine.

In fact, modern editors have find-and-replace. If you need, for example, to fix the name or port of a backend, or to change the root, a header passed to the backend, etc., you can easily do it with find-and-replace.

A couple of seconds is enough to understand whether a parameter needs to be changed in a given place. For example, if you have 100 locations and spend 2 seconds on each, that is 200 seconds in total, a little over 3 minutes. That is not a lot. But when, in the future, you have to detach some location from the shared part, it will be much harder. You will need to understand what to change, how it will affect other locations, etc. Therefore, for the nginx configuration, you should use copy-paste.

Generally speaking, administrators do not like to spend a lot of time on their configurations. I am like that myself. An administrator may have 2-3 favorite products that he is happy to tinker with, while there are a dozen other products he does not want to spend time on. For example, I run mail on my personal site with Exim and Dovecot. I do not like administering them. I just want them to work, and if something needs to be added, I want it to take no more than a couple of minutes. I am simply too lazy to study the configuration, and I think most nginx administrators are the same: they want to administer nginx as little as possible, and what matters to them is that it works. If you are such an administrator, use copy-paste.

Examples of how short non-scalable configurations can be turned into what is needed:

Here a person thinks that he has written just one small regular expression and everything is fine. In fact, precisely because it is a regular expression, this is bad: it can affect everything else. Therefore, I personally do this:

If you have this root common to all locations, or at least used in most of them, then this can be done even this way:

This is, in general, a legal configuration, i.e. completely empty location configuration.
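The slides for this example are missing from the text, so the following is only a guess at its shape, with made-up paths and prefixes: a regular expression location (shown in comments) replaced by explicit prefix locations, one of which is completely empty because root is inherited.

```nginx
# Short, but order-sensitive: the regex is tested for every request
# that is not stopped earlier, so it can capture URLs that were
# meant for other locations.
#
#   location ~* \.(gif|jpg|png)$ {
#       root /var/www/static;
#   }

server {
    listen 80;
    server_name example.com;
    root /var/www/static;            # shared by most locations

    location /images/ {
        # intentionally empty: root is inherited from the server level
    }

    location /css/ {
        root /var/www/assets;        # overridden only where it differs
    }
}
```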

A second example of trying to avoid copy-paste:

Administrators who used to work with apache think that /admin/index.php should request authorization. In nginx this does not work, because index.php is processed in one location, and location /admin is a completely different one. But you can make a nested configuration, and then index.php will naturally request authorization.
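A sketch of the nested variant, inside a server block. The realm name, password file path and backend address are assumptions, and FastCGI parameters are omitted.

```nginx
location /admin/ {
    auth_basic           "Admin area";
    auth_basic_user_file /etc/nginx/htpasswd;   # hypothetical path

    # Nested: inherits auth_basic from /admin/ and is chosen before
    # the server-level regex below, so /admin/index.php asks for a
    # password.
    location ~ \.php$ {
        fastcgi_pass 127.0.0.1:9000;            # hypothetical backend
    }
}

# Handles .php requests outside /admin/, without authorization.
location ~ \.php$ {
    fastcgi_pass 127.0.0.1:9000;
}
```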

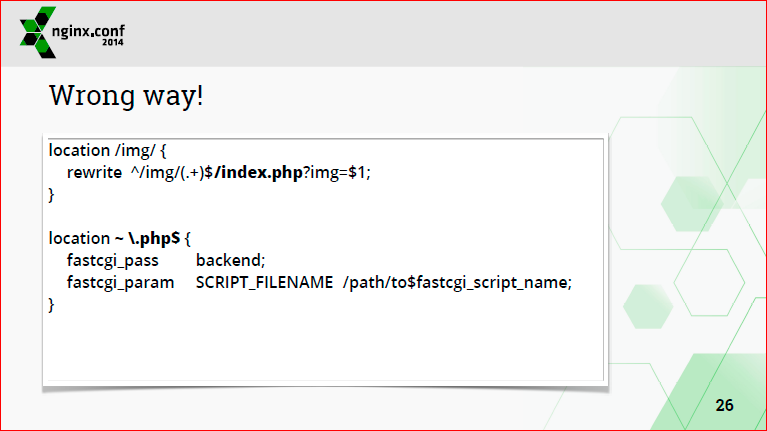

Often you need regular expressions in order to cut out some parts of the URL and use them in processing. This is a bad way:

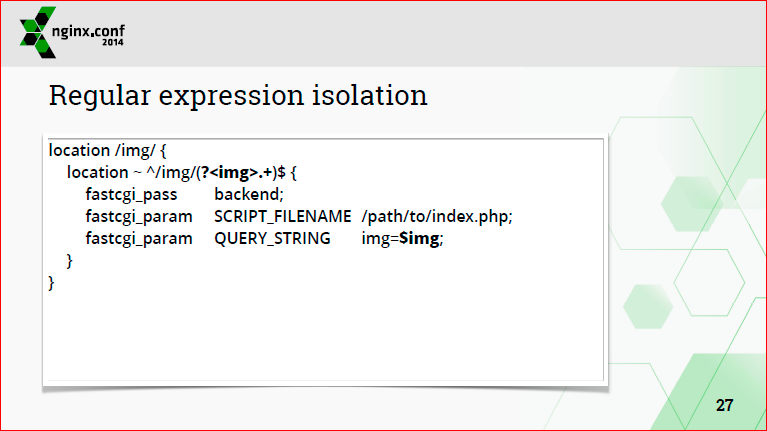

The right way is to use nested locations. This isolates the regular expressions from the configuration of the rest of the site, i.e. processing will never leave the location /img/ shown on the screen:

Another place where it is safe to use regular expressions in nginx is map, i.e. building variables from other variables using regular expressions, etc.:

I have said nothing about using rewrites, because they should not be used at all. If you cannot avoid them, use them on the backend side.
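A sketch of the nested form, inside a server block. The URL layout (small and large variants) and the file paths are invented for illustration.

```nginx
location /img/ {
    root /var/www;      # /img/logo.png -> /var/www/img/logo.png

    # Only requests that already matched /img/ can reach this regex,
    # so it cannot interfere with the rest of the site.
    location ~ ^/img/(small|large)/(.+)$ {
        # The captures are reused to build the file path.
        alias /var/www/resized/$1/$2;
    }
}
```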
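A minimal sketch of a map with regular expressions, placed at the http level. The variable name $is_mobile, the header name and the backend address are assumptions.

```nginx
# The regular expressions live in one declaration and do not take
# part in location selection.
map $http_user_agent $is_mobile {
    default     0;
    ~*android   1;    # ~* means a case-insensitive regular expression
    ~*iphone    1;
}

server {
    listen 80;
    server_name example.com;

    location / {
        # The variable is computed only when it is actually used.
        proxy_set_header X-Mobile $is_mobile;
        proxy_pass http://127.0.0.1:8080;   # hypothetical backend
    }
}
```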

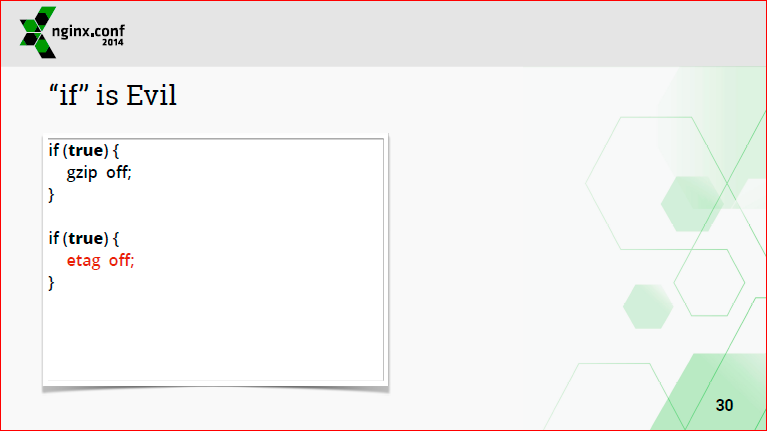

if is also not a recommended construct in nginx, because only about 10 people in the world know how if works internally, and you are unlikely to be one of them.

Here is a configuration with two if (true) blocks:

One would expect both gzip and etag to be turned off. In fact, only the last if takes effect.
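The slide itself is not in the text. The same effect can be sketched with add_header, which is known to be permitted inside if in a location; note that this swaps in different directives from the gzip and etag of the slide. The location name and header names are made up.

```nginx
location /only-one-if {
    set $true 1;

    if ($true) {
        add_header X-First 1;     # lost: this block's configuration is replaced
    }

    if ($true) {
        add_header X-Second 2;    # only this header reaches the client
    }

    return 204;
}
```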

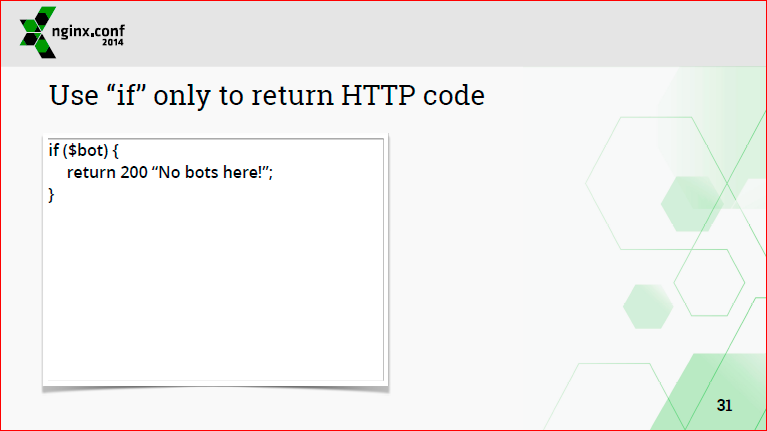

There is one safe use of if: returning a response to the client. You can use rewrite in this place, but I do not like it; I use return (it lets you set the response code, etc.):

To summarize:

- use only prefix locations where possible;

- avoid regular expressions, but if they are still needed in the configuration, isolate them;

- use maps;

- do not listen to people who say that DRY is a universal paradigm. It is good when you love the product, or when you are programming the product. If you just need to make your life as an administrator easier, then copy-paste is for you. Your friend is an editor with a good find-and-replace;

- do not use rewrites;

- use if only to return a response to the client.
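A minimal sketch of this pattern, inside a server block. The location, the tested condition and the backend address are assumptions.

```nginx
location /api/ {
    # The only directive inside if is return, which ends request
    # processing immediately with the given status code.
    if ($request_method = POST) {
        return 405;
    }

    proxy_pass http://127.0.0.1:8080;   # hypothetical backend
}
```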

Question from the audience: If I redirect from http to https, where is it better to do it: in nginx or on the backend?

Answer: Do it in nginx. Ideally, you make two servers. One server is plain text, and all it does is redirect. It will contain literally a few directives: listen on the port, server_name if needed, and return with 301 or 302 to https, repeating the request URI. You do not even need rewrite there, use return.

If you want something more complicated, you can insert an if somewhere. Suppose some of your locations are served in plain text: describe them with regular expressions in a map, for example, and redirect everything else to https. Or, the other way round, insert a single if inside each location that should redirect to https.
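The two-server layout from the answer might be sketched like this. The host names and certificate paths are placeholders.

```nginx
# Plain-text server: it only redirects.
server {
    listen 80;
    server_name example.com www.example.com;

    # $request_uri is the original URI including the query string.
    return 301 https://$host$request_uri;
}

# The real site is served over TLS.
server {
    listen 443 ssl;
    server_name example.com www.example.com;

    ssl_certificate     /etc/nginx/ssl/example.com.crt;   # hypothetical path
    ssl_certificate_key /etc/nginx/ssl/example.com.key;   # hypothetical path

    location / {
        root /var/www/example.com;
    }
}
```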
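The map variant could look roughly like the following. The variable name $to_https and the /status/ prefix are invented for illustration; the map belongs at the http level.

```nginx
# 1 means "redirect to https", 0 means "serve over plain http".
map $uri $to_https {
    default      1;
    ~^/status/   0;    # hypothetical plain-text area
}

server {
    listen 80;
    server_name example.com;

    # if is used only to return a response; a value of "0" or an
    # empty string counts as false.
    if ($to_https) {
        return 301 https://$host$request_uri;
    }

    location /status/ {
        root /var/www/plain;
    }
}
```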

Question from the audience: Thank you for nginx. I have a somewhat joking question. Do you plan to add a startup flag or a compile-time option that would forbid the include directive, if, and regular expressions in locations?

Answer: No, that is unlikely. We usually add directives, improve them, and then deprecate some of them. They print warnings to the log for a while before disappearing completely, but they keep working in some form. We are unlikely to do what you describe; we would rather write a good user guide, perhaps based on the material of this talk.

Question from the audience: The usual urge to use if arises because if can be used in server and in location, while map cannot. Why is that?

Answer: All variables in nginx are computed on demand, i.e. if a map is described at the http level, this does not mean the variable will be computed for every request. A map is needed to map one thing to another, and then that to something else, and you can use the resulting variable in an if, inside some expression, when proxying somewhere, etc. A map is just a kind of declaration ... Maybe it would make sense to allow maps inside server to make them local to the server, in case you use the same variable name in several servers. It was simply harder to program, so maps were placed at the global http level. In nginx there are no variables local to a server.

From the point of view of performance there are no problems; it is just an inconvenience. You would have to make, say, three servers and three maps, and each variable would carry a prefix naming its server ... In principle, you can describe them right before each server, i.e. one map before the first server, the next before the second ... Then you will not have to jump up and down in the configuration; the maps will be close to their servers.

Question from the audience: I am new to how return works. Please tell us where it is worth using return instead of rewrite. Are there specific use cases?

Answer: In general, a rewrite is replaced with the following construct: a location with a regular expression, in which you can make captures, plus a return directive. That is, the left side of the rewrite goes into the location, and the right side becomes what follows the response code in return. Return can send different response codes, whereas rewrite can only return 301 or 302 to the client. Return can send 404 with some body, or 200, or 500, or a redirect. In the body you can use variables and write some text. For 301 and 302 it is not a body but the URL to redirect to. In general, return has richer functionality.

Question from the audience: I have a practical question. Nginx can be used as a mail proxy. Is it possible to give an email client SMTP access, send an email through this client, and have nginx intercept the data and pass it to a script, bypassing the mail server? Right now we implement this with postfix: it intercepts the message and then hands it to the script where the processing takes place.

Answer: I doubt this can be done with nginx. Let me briefly describe what the SMTP proxy in nginx does. An SMTP client connects to it and presents some authentication; nginx calls an external script, which checks the username and password and then says either to let the client through to a certain server (and tells which one) or not to let it through. That is all it can do. If the decision is to let the client through, nginx connects to that server over SMTP and relays the session to it. Whether it will end up in your script, I cannot say. Hardly.

The SMTP proxy with authorization appeared because Rambler has a special server through which mail clients send mail. It turned out that about 90% of the connections came not from Rambler clients but from spam and viruses. In order not to load postfix and not to start unnecessary processes, nginx was put in front of it to check whether the client presents valid authentication data. That is what it was made for: simply to turn away "junk" clients.

Question from the audience: You mentioned containers today. This is, of course, a promising approach, but it implies a changing topology and dynamic configuration. For now it still means that people build external "crutches" that periodically react to topology changes, generate the current nginx config from a template, push it to nginx and kick nginx to reload the config. I wonder whether the company has plans to move towards containerization, i.e. to provide more convenient and natural tools for this trend?

Answer: It depends on what you mean by containers in this case. When I talked about containers, it was a comparison: I said that these locations are isolated from each other.

Question: We are back to docker, to the ability to run backends in containers that are started dynamically on different hosts, where we need, roughly speaking, to add a new host to the load balancing ...

Answer: In NGINX Plus, part of the advanced load balancing is exactly this: you can add servers to an upstream dynamically. You do not need to reload the nginx config; everything is done on the fly, and there is an API for it.

Active health checks are also included there. When regular open source nginx connects to a backend and the backend does not respond, nginx stops accessing it for some time, i.e. there is a kind of health check here too, but clients suffer. If 50 clients go to one backend at the same time and it is down or times out after 5-10 seconds, the clients will see that, and only afterwards will they be passed to another upstream server. In NGINX Plus we have proactive backend testing, i.e. the backends themselves are tested, and clients simply do not go to failed backends.

Question: And since there is an active health check, maybe there is already a nice JSON-like status page that can be parsed?

Answer: Yes, we have monitoring. It is available as JSON, among other formats, and also as a nice html page.
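The layout suggested in this answer, with each map placed right before its server and named with a server-specific prefix, could be sketched like this. All names and addresses are hypothetical.

```nginx
# Map used only by example.com; the variable name carries the prefix.
map $uri $example_com_backend {
    default   127.0.0.1:8080;
    ~^/api/   127.0.0.1:8081;
}

server {
    listen 80;
    server_name example.com;

    location / {
        proxy_pass http://$example_com_backend;
    }
}

# Map used only by example.org, declared next to its server.
map $uri $example_org_backend {
    default   127.0.0.1:9080;
}

server {
    listen 80;
    server_name example.org;

    location / {
        proxy_pass http://$example_org_backend;
    }
}
```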
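As a rough sketch of this replacement, inside a server block: the URL scheme and the response text are invented, and query-string handling is made explicit because the two forms are not guaranteed to treat it identically.

```nginx
# The rewrite form being replaced:
#
#   rewrite ^/old/(.*)$ /new/$1 permanent;

# Left side of the rewrite becomes the location, right side goes
# after the response code in return.
location ~ ^/old/(.*)$ {
    return 301 /new/$1$is_args$args;
}

# return can also send other codes, optionally with a body.
location = /gone {
    return 410 "This page has been removed.\n";
}
```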

Contacts

» Isysoev

» Nginx company blog

This report is a transcript of one of the best talks at HighLoad++, the conference for developers of high-load systems. We are now actively preparing for the 2016 conference: this year HighLoad++ will be held in Skolkovo on November 7 and 8.

The DevOps section this year was prepared by a separate Program Committee, overseen by Express42. It includes a dozen reports, among them a report by Maxim Dunin on news from the world of nginx.

We also use some of these materials in HighLoad.Guide, an online training course on developing high-load systems: a chain of specially selected letters, articles, materials, and videos. Our textbook already contains more than 30 unique materials. Get connected!

Source: https://habr.com/ru/post/313666/
