On the performance of named pipes in multi-process applications

In an article about the new features of Visual Studio, one of the main innovations (from my point of view) was splitting the previously monolithic development environment process (devenv.exe) into components that run in separate processes. This has already been done for the version control system (moving from libgit to git.exe) and for some plug-ins, and other parts of VS will be moved to subprocesses in the future. In the comments, a reader asked: "But won't this slow things down, since exchanging data between processes requires IPC (Inter-Process Communication)?" No, it won't. Here's why.

Speed

Windows offers several technologies for communication between processes: sockets, named pipes, shared memory, and messaging. Since I don't want to write a full-fledged benchmark of all of them, let's look for something similar on Habr. There is an article from 6 years ago in which adontz compared the performance of sockets and named pipes. The results: sockets reached 160 megabytes per second, named pipes 755 megabytes per second. These numbers need a correction for 6-year-old hardware and for the .NET platform used in the tests. That is, it is safe to say that on modern hardware, with code written in, say, C, we get several gigabytes per second. Meanwhile, as Wikipedia notes, the bandwidth of DDR3 memory ranges from 6400 to 19200 MB/s depending on the clock frequency, and those are ideal figures in a vacuum; in practice it is always lower.
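If you would rather check the order of magnitude on your own machine than rely on a 6-year-old benchmark, here is a minimal Python sketch. It is not adontz's benchmark and does not use a named-pipe-specific API. It streams zero bytes from a child process to the parent over a pipe and reports MiB/s. Assumptions: the helper name `measure_pipe_throughput` and its parameters are hypothetical. On Windows, CPython's `subprocess` uses anonymous pipes, which Microsoft documents as being implemented as uniquely named pipes. On Linux or macOS this is an ordinary POSIX pipe. Interpreter overhead is included in the result, so treat the number as a lower bound compared with C code.

```python
import subprocess
import sys
import time


def measure_pipe_throughput(total_mb: int = 64, chunk_kb: int = 64) -> float:
    """Stream about total_mb MiB from a child process over a pipe; return MiB/s.

    Hypothetical helper for illustration. On Windows, subprocess pipes are
    anonymous pipes (implemented by the OS as uniquely named pipes); on POSIX
    systems they are ordinary kernel pipes.
    """
    chunk = 1024 * chunk_kb
    n_chunks = (1024 * total_mb) // chunk_kb
    total = n_chunks * chunk
    # The child writes `total` bytes of zeros to stdout in chunk-sized writes.
    child_code = (
        "import sys\n"
        f"buf = bytes({chunk})\n"
        f"for _ in range({n_chunks}):\n"
        "    sys.stdout.buffer.write(buf)\n"
        "sys.stdout.buffer.flush()\n"
    )
    with subprocess.Popen([sys.executable, "-c", child_code],
                          stdout=subprocess.PIPE) as proc:
        # Wait for the first chunk so interpreter start-up is not timed.
        first = proc.stdout.read(chunk)
        start = time.perf_counter()
        received = 0
        while data := proc.stdout.read(chunk):
            received += len(data)
        elapsed = max(time.perf_counter() - start, 1e-9)
    if len(first) + received != total:
        raise RuntimeError("pipe delivered an unexpected number of bytes")
    return received / (1024 * 1024) / elapsed


if __name__ == "__main__":
    print(f"{measure_pipe_throughput():.0f} MiB/s")
```

Timing starts only after the first chunk arrives. This keeps the child's interpreter start-up out of the measurement.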

Conclusion 1: named pipes are only several times slower than the maximum possible RAM bandwidth. Not a thousand times, not an order of magnitude, but only several times. Modern operating systems do their job well.

Data volumes

Let's take the same 755 MB/s from the section above as the speed of named pipes. Is that a lot or a little? Suppose, for example, you wrote an application that received uncompressed FullHD video at 60 frames per second over a named pipe and did something with it (encoded or streamed it). It would need 355 MB/s. That is, even for such a very demanding workload, the named pipe would be fast enough with a margin of more than two times. What does Visual Studio exchange with its components? For example, commands for git.exe and the data in its responses. That is a few kilobytes, and in very rare cases megabytes. The data exchanged with plug-ins is hard to estimate precisely, because plug-ins vary widely. But in any case, no plug-in I have seen needs hundreds of megabytes per second.
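The FullHD figure is simple arithmetic. It is width × height × bytes per pixel × frames per second. The sketch below assumes 24-bit RGB (3 bytes per pixel, no chroma subsampling) and reads "MB" as 2^20 bytes. That reading reproduces the roughly 355 MB/s above. The helper name `raw_video_mib_per_s` is illustrative only.

```python
def raw_video_mib_per_s(width: int, height: int, bytes_per_pixel: int, fps: int) -> float:
    """Bandwidth of uncompressed video in MiB/s (1 MiB = 1024 * 1024 bytes)."""
    return width * height * bytes_per_pixel * fps / (1024 * 1024)


PIPE_MB_PER_S = 755  # adontz's .NET named-pipe figure quoted above

# Assumption: 24-bit RGB, i.e. 3 bytes per pixel, no chroma subsampling.
video = raw_video_mib_per_s(1920, 1080, 3, 60)
margin = PIPE_MB_PER_S / video

print(f"FullHD@60 raw: {video:.1f} MiB/s, pipe margin: {margin:.2f}x")
# prints "FullHD@60 raw: 356.0 MiB/s, pipe margin: 2.12x"
```

Formats with chroma subsampling, such as YUV 4:2:0 at 1.5 bytes per pixel, would halve the required bandwidth and double the margin.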

Conclusion 2: given the kind of data Visual Studio processes (text, code, resources, images), named pipes are fast enough with a several-fold margin.

Latency

Well, OK, you say, throughput is one thing, but there is also latency. Each operation requires some synchronization overhead. Yes, it does, and I recently published an article about exactly this. People overestimate the overhead of locking and synchronization. The trouble is not the locks themselves (they take nanoseconds). The trouble is that people write bad synchronous code and allow deadlocks, livelocks, race conditions and shared-memory corruption. Fortunately, with named pipes the API itself nudges you toward an asynchronous approach, and it is not that difficult to write correct code.
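To put a rough number on the per-operation overhead on your own machine, here is a minimal Python sketch. It sends one byte to a child process that echoes it back, and averages the round-trip time. Assumptions: the names `ECHO_CHILD` and `measure_round_trip_us` are hypothetical. The pipes are `subprocess` pipes, which are anonymous pipes built on uniquely named pipes on Windows and POSIX pipes elsewhere. Each round trip also includes Python interpreter overhead and two process switches. The result is therefore an upper bound on the cost of the pipe itself.

```python
import subprocess
import sys
import time

# Child process that echoes every byte it receives straight back.
ECHO_CHILD = (
    "import sys\n"
    "r, w = sys.stdin.buffer, sys.stdout.buffer\n"
    "while (b := r.read(1)):\n"
    "    w.write(b)\n"
    "    w.flush()\n"
)


def measure_round_trip_us(iterations: int = 10_000) -> float:
    """Average time in microseconds for a 1-byte request/response over pipes."""
    with subprocess.Popen([sys.executable, "-c", ECHO_CHILD],
                          stdin=subprocess.PIPE, stdout=subprocess.PIPE) as proc:
        # Warm-up round trip so interpreter start-up is not measured.
        proc.stdin.write(b"x")
        proc.stdin.flush()
        proc.stdout.read(1)
        start = time.perf_counter()
        for _ in range(iterations):
            proc.stdin.write(b"x")
            proc.stdin.flush()
            if proc.stdout.read(1) != b"x":
                raise RuntimeError("echo child returned unexpected data")
        elapsed = time.perf_counter() - start
        proc.stdin.close()  # EOF makes the echo loop in the child exit
    return elapsed / iterations * 1e6


if __name__ == "__main__":
    print(f"{measure_round_trip_us():.1f} us per round trip")
```

This synchronous ping-pong is the worst case, because every message waits for its reply. Asynchronous code can keep many requests in flight and hide most of this latency.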

Conclusion 3: as long as there are no bugs in asynchronous/multithreaded code, it runs quite fast, even with locks.

Practical example

Well, OK, you say, enough theory, show me practical proof! We have it: one of the most popular desktop applications in the world, the Google Chrome browser. Chrome was designed from the start as several interacting processes, and it immediately showed the advantages of this approach. One hung tab no longer froze the others, and a plug-in crash no longer brought down the whole browser. Heavy rendering in one window no longer guaranteed slowdowns in another, and so on. Simplifying somewhat, Chrome runs one main process plus separate processes for rendering tabs, for interacting with the GPU, and for plug-ins. In reality the rules are a bit more complicated, because Chrome can adjust the number of child processes depending on various circumstances, but that is not important here.

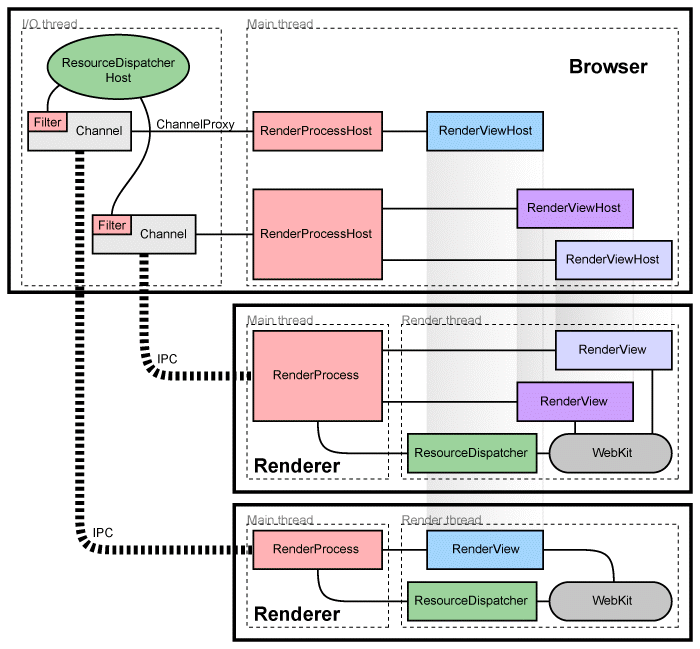

You can read about Chrome's architecture in its documentation, but here is a simplified picture:

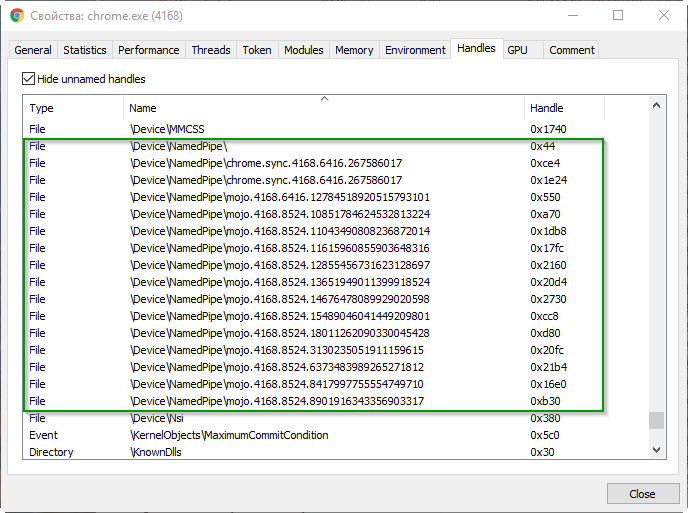

What is hidden under the zebra-striped line labeled IPC in this picture? Named pipes, exactly. You can see them, for example, with Process Hacker (the Handles tab):

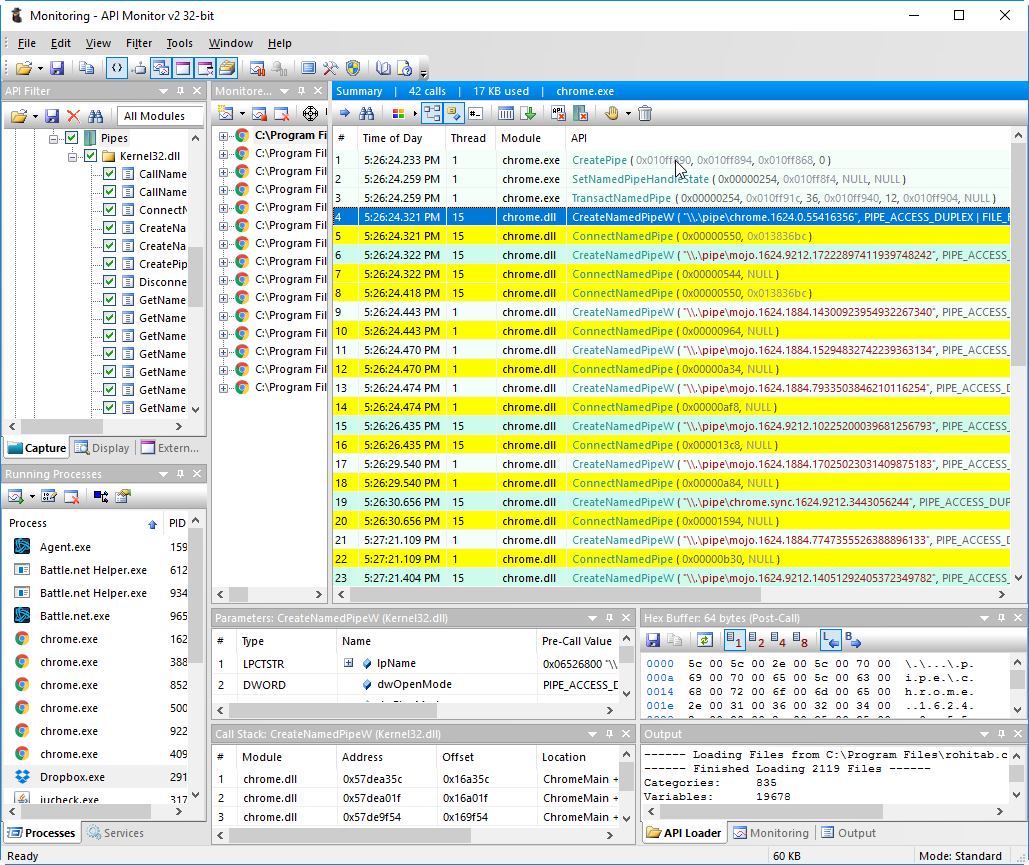

Or with API Monitor:

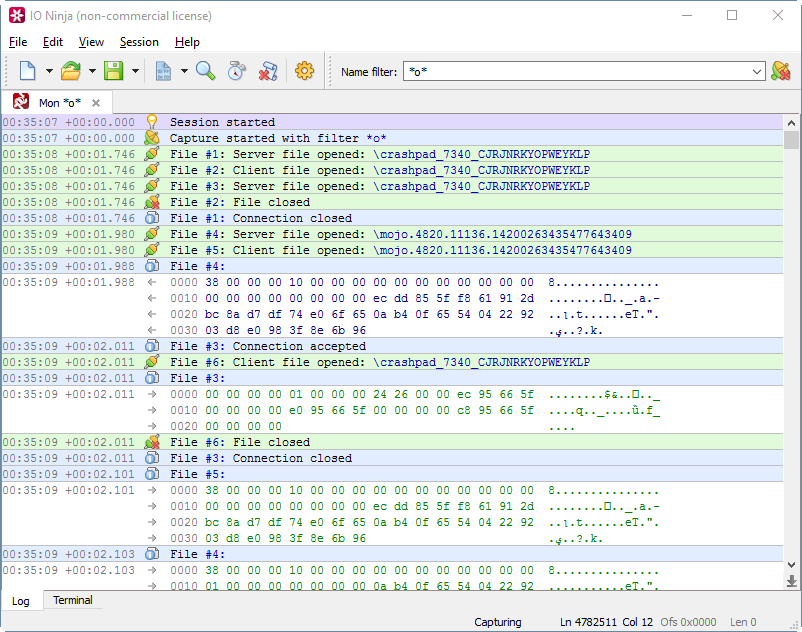

How much do named pipes slow Chrome down? You already know the answer: not at all. The multi-process architecture actually speeds up the browser. It distributes the load across cores better, gives better control over the performance of each process, and uses memory more efficiently. As an example, let's estimate how much data Chrome pushes through its named pipes while playing one minute of YouTube video. The handy IO Ninja utility can do this. Unfortunately, Wireshark, API Monitor and the Sysinternals utilities cannot properly show the data stream on a pipe, which is a shame.

The measurement showed that during 1 minute of YouTube playback, Chrome transmitted 76 MB of data through named pipes in 79,715 individual read/write operations. As you can see, named pipes do not hold back even a serious program like Chrome on a demanding site like YouTube. So Visual Studio has every chance to benefit from splitting the monolithic IDE into subprocesses.
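To see how light this load is, divide the measured figures by the one-minute duration and compare the result with the 755 MB/s pipe figure from the "Speed" section. That figure is deliberately conservative, because it comes from 6-year-old hardware. The helper `pipe_load` is hypothetical, and the KB-per-operation line assumes 1 MB = 1024 KB.

```python
def pipe_load(megabytes: float, operations: int, seconds: float,
              pipe_mb_per_s: float = 755.0) -> dict:
    """Summarise a measured pipe workload relative to a pipe's throughput."""
    mb_per_s = megabytes / seconds
    return {
        "mb_per_s": mb_per_s,
        "ops_per_s": operations / seconds,
        # Assumes 1 MB = 1024 KB.
        "avg_kb_per_op": megabytes * 1024 / operations,
        # Fraction of the (conservative) 755 MB/s named-pipe throughput used.
        "utilization": mb_per_s / pipe_mb_per_s,
    }


# Figures from the IO Ninja measurement: 76 MB, 79,715 operations, 60 s.
load = pipe_load(76, 79_715, 60)
for key, value in load.items():
    print(f"{key}: {value:.4g}")
```

This works out to about 1.3 MB/s and roughly 1,330 operations per second, averaging about 1 KB per operation. That is around 0.17% of the old 755 MB/s figure.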

Source: https://habr.com/ru/post/313638/
