GPU in the clouds

Need to build more GPUs

Deep learning is one of the most rapidly developing areas of machine learning. Progress in deep learning research has led to a growing number of ML/DL frameworks (including ones from Google, Microsoft, and Facebook) that implement these algorithms. With the ever-increasing computational complexity of DL algorithms, and consequently of DL frameworks, the computing power of desktop and even server CPUs has long been insufficient.

A solution was found, and it is (seemingly) simple: use GPU/FPGA computing for this kind of compute-intensive task. But here is the problem: you can, of course, use the graphics card of your favorite laptop for these purposes, but which


There are at least two approaches to getting high-performance GPUs: buy them (on-premises) or rent them (on-demand). How to save up and buy them is not the topic of this article. Here we will look at the offers available for renting VM instances with high-performance GPUs from the cloud providers Amazon Web Services and Microsoft Azure.

1. GPU in Azure

In early August 2016, a private preview of virtual machine instances equipped with NVIDIA Tesla cards was announced [1]. The feature is provided within the Azure VM service, an IaaS service that provides on-demand virtual machines (similar to Amazon EC2).

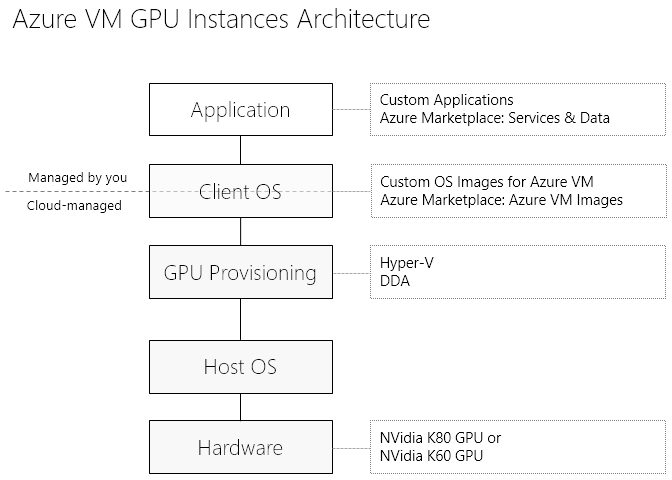

In terms of how applications access the GPU, the service architecture looks like this:

GPU computing is available on N-series virtual machines, which fall into two categories:

- NC series (compute-focused): GPUs aimed at general-purpose computation;

- NV series (visualization-focused): GPUs aimed at graphics workloads.

1.1. NC Series VMs

These GPUs are designed for compute-intensive workloads using CUDA/OpenCL. The VMs use NVIDIA Tesla K80 cards: 4992 CUDA cores, > 2.91/8.93 TFLOPS (double/single precision). The cards are accessed via DDA (Discrete Device Assignment), which brings GPU performance inside a VM close to the card's bare-metal performance.

It is easy to guess that NC-series VMs are designed for ML/DL tasks.

The following VM configurations equipped with a Tesla K80 are available in Azure.

| | NC6 | NC12 | NC24 |
|---|---|---|---|
| Cores | 6 (E5-2690v3) | 12 (E5-2690v3) | 24 (E5-2690v3) |
| GPU | 1 x K80 GPU (1/2 physical card) | 2 x K80 GPU (1 physical card) | 4 x K80 GPU (2 physical cards) |
| Memory | 56 GB | 112 GB | 224 GB |
| Disk | 380 GB SSD | 680 GB SSD | 1.44 TB SSD |

1.2. NV Series VMs

NV-series virtual machines are intended for visualization. They are equipped with Tesla M60 GPUs (4096 CUDA cores, up to 36 concurrent 1080p H.264 streams). These cards are suited to video encoding/decoding, rendering, and 3D modeling.

The following VM configurations have been announced:

| | NV6 | NV12 | NV24 |
|---|---|---|---|
| Cores | 6 (E5-2690v3) | 12 (E5-2690v3) | 24 (E5-2690v3) |
| GPU | 1 x M60 GPU (1/2 physical card) | 2 x M60 GPU (1 physical card) | 4 x M60 GPU (2 physical cards) |
| Memory | 56 GB | 112 GB | 224 GB |
| Disk | 380 GB SSD | 680 GB SSD | 1.44 TB SSD |

1.3. Prices

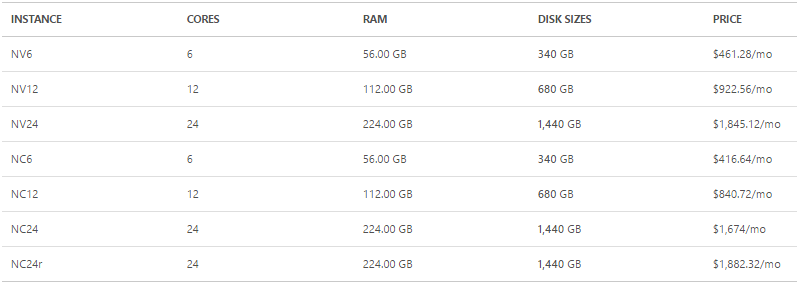

Prices for N-Series Azure VM are as follows (October 2016) [5]:

But don't let these four-digit numbers dampen your curiosity: as always in the cloud, we pay for the resources we actually use. For IaaS services such as Azure VM, this means hourly billing. In addition, there are many ways to get Microsoft Azure computing resources completely free of charge.

This applies to new Azure accounts, to students, to startups, to researchers (if you are looking for a cure for cancer, for instance), and to you if you or the company you work for holds an MSDN subscription.

2. Amazon EC2 GPU Instances (plus a risky comparison)

The cloud provider Amazon Web Services (AWS) started providing VM instances with GPUs back in 2010.

As recently as early September 2016, AWS GPU instances were represented only by the G2 family.

Configurations of virtual machines of the G2 family:

| Model | GPUs | vCPUs | Mem (GiB) | SSD storage (GB) | Price, $ per hour / month |
|---|---|---|---|---|---|
| g2.2xlarge | 1 | 8 | 15 | 1 x 60 | 0.65 / 468 |
| g2.8xlarge | 4 | 32 | 60 | 2 x 120 | 2.60 / 1872 |
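The monthly column in the table above appears to assume 720 billable hours (30 days × 24 h): 0.65 × 720 = 468 and 2.6 × 720 = 1872. Since billing is per hour of use, a GPU VM that runs only while jobs are executing costs proportionally less than one left running all month. A minimal Python sketch of that arithmetic, assuming a 30-day month and ignoring storage, traffic, and taxes; the 200-hour usage figure is a hypothetical example:

```python
# Assumption: a 30-day month (720 h), which reproduces the G2 monthly prices.
HOURS_PER_MONTH = 30 * 24


def monthly_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost in USD for `hours` of usage at `hourly_rate` USD per hour."""
    return round(hourly_rate * hours, 2)


# Hourly on-demand rates from the G2 table above.
g2_rates = {"g2.2xlarge": 0.65, "g2.8xlarge": 2.6}

for name, rate in g2_rates.items():
    always_on = monthly_cost(rate)
    part_time = monthly_cost(rate, hours=200)  # hypothetical: 200 h of jobs per month
    print(f"{name}: always on ${always_on:.2f}/mo, 200 h only ${part_time:.2f}/mo")
```

The same function applies to any hourly rate; only the number of hours actually used changes the bill.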

G2 instances are equipped with NVIDIA GRID K520 GPUs, each with 1536 CUDA cores and able to encode four 1080p H.264 video streams. CUDA/OpenCL support has been announced. The instances also use HVM (hardware virtual machine) virtualization, which, similar to DDA in Azure VM, minimizes virtualization overhead and lets the guest VM get GPU performance close to bare metal.

While I was writing this article, a month ago (at the end of September 2016), AWS announced P2 instances with more modern graphics cards.

P2 instances can include up to 8 NVIDIA Tesla K80 cards. CUDA 7.5 and OpenCL 1.2 support has been announced. The p2.8xlarge and p2.16xlarge instances support high-speed GPU-to-GPU communication, and network bandwidth reaches up to 20 Gbps thanks to ENA (Elastic Network Adapter, a high-speed network interface for Amazon EC2).

| Instance | GPUs | vCPUs | Memory, GiB | CUDA cores | GPU memory, GB | Network, Gbps |
|---|---|---|---|---|---|---|
| p2.xlarge | 1 | 4 | 61 | 2496 | 12 | High |
| p2.8xlarge | 8 | 32 | 488 | 19968 | 96 | 10 |
| p2.16xlarge | 16 | 64 | 732 | 39936 | 192 | 20 |
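The CUDA-core and GPU-memory columns scale linearly with the GPU count: each P2 "GPU" is one of the two GPUs on a Tesla K80 card, i.e. half of its 4992 CUDA cores (2496) with 12 GB of memory, as the p2.xlarge row shows. A small Python check of that arithmetic, with the per-GPU figures taken from the p2.xlarge row:

```python
# Per-GPU figures from the p2.xlarge row: one GPU = half of a K80 card.
CUDA_CORES_PER_GPU = 4992 // 2  # 2496
GPU_MEMORY_GB = 12

p2_gpu_counts = {"p2.xlarge": 1, "p2.8xlarge": 8, "p2.16xlarge": 16}


def p2_totals(gpus: int) -> tuple[int, int]:
    """Return (total CUDA cores, total GPU memory in GB) for `gpus` K80 GPUs."""
    return gpus * CUDA_CORES_PER_GPU, gpus * GPU_MEMORY_GB


for name, gpus in p2_gpu_counts.items():
    cores, mem = p2_totals(gpus)
    print(f"{name}: {gpus} GPU(s), {cores} CUDA cores, {mem} GB GPU memory")
```

The printed totals match the table rows, which also shows that the 16 GPUs of p2.16xlarge correspond to 8 physical K80 cards.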

For comparison, let's take the most powerful (NC24) and the cheapest (NC6) Azure VM instances, along with the Amazon EC2 instances closest to them in performance.

| Instance | GPU model | GPUs | vCPUs | RAM, GiB | Network, Gbps | CUDA / OpenCL | Status | Price, $/mo | Price, $ per GPU/mo |
|---|---|---|---|---|---|---|---|---|---|
| Amazon p2.xlarge | K80 | 1 | 4 | 61 | High | 7.5 / 1.2 | GA | 648 | 648 |
| Azure NC6 | K80 | 1 | 6 | 56 | 10 (?) | + / + | Private preview | 461 | 461 |
| Amazon p2.8xlarge | K80 | 8 | 32 | 488 | 10 | 7.5 / 1.2 | GA | 5184 | 648 |
| Azure NC24 | K80 | 4 | 24 | 224 | 10 (?) | + / + | Private preview | 1882 | ~471 |
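The per-GPU column is the monthly price divided by the number of GPUs in the instance. A minimal Python sketch of that calculation for three rows of the comparison table, which also picks the cheapest option per GPU among them; the dictionary layout and function name are illustrative choices, not part of any provider API:

```python
# (monthly price in USD, number of GPUs), copied from the comparison table.
instances = {
    "Amazon p2.xlarge": (648, 1),
    "Azure NC6": (461, 1),
    "Amazon p2.8xlarge": (5184, 8),
}


def price_per_gpu(monthly_usd: float, gpus: int) -> float:
    """Monthly price divided by the number of GPUs in the instance."""
    return monthly_usd / gpus


per_gpu = {name: price_per_gpu(m, g) for name, (m, g) in instances.items()}
cheapest = min(per_gpu, key=per_gpu.get)

for name, value in per_gpu.items():
    print(f"{name}: ${value:.0f} per GPU per month")
print(f"Cheapest per GPU: {cheapest}")
```

This metric ignores differences in vCPUs, RAM, and networking, so it is only a rough way to compare offers.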

Conclusion

For a long time AWS “tormented” the data science community with rather weak and at the same time expensive GPU instances of the G2 family. But competition in the cloud market has done its job: a month ago the P2 family of GPU instances appeared, and it looks very decent.

Microsoft Azure, too, long tormented the community with its lack of GPU instances (one of the most anticipated features of the Azure platform). At the moment, Azure's GPU instances look extremely good, although technical details are still scarce. The preview status of this feature is

In general, over just a year or two Microsoft has seriously built up its range of AI technologies, frameworks, and tools, including (perhaps first and foremost) ones for developers and data scientists. You can judge for yourself how serious and convenient they are by watching the recordings of the Microsoft ML & DS Summit held in late September [6].

In addition, in exactly one week, on November 1, the Microsoft DevCon School conference will take place, and one of its tracks is devoted entirely to machine learning. The talks will not be limited to Microsoft's proprietary technologies; they will also cover the familiar and "free" Python, R, and Apache Spark.

List of sources

- [1] NVIDIA GPUs in Azure: sign-up for the preview program.

- [2] Leveraging NVIDIA GPUs in Azure. Webcast on Channel 9.

- [3] Linux GPU Instances: documentation.

- [4] Announcement of P2 instances in AWS, September 29, 2016.

- [5] Prices for Azure Virtual Machines (including Azure VM GPU).

- [6] Microsoft Machine Learning & Data Science Summit conference.

Source: https://habr.com/ru/post/313478/
