Programming & Music: Delay, Distortion and Parameter Modulation. Part 4

Hello! This is the fourth part of a series of articles about creating a VST synthesizer in C#. In the previous parts, we generated a signal and applied an amplitude envelope and a frequency filter to it.

This time we will look at two effects: Distortion, the signal distortion familiar to every electric guitarist, and Delay, that is, echo.

Many interesting sounds can be obtained by changing (modulating) the parameters of the synthesizer's components (oscillator, filter, effects) over time. We will look at one way to do this.

The source code of my synthesizer is available on GitHub.

Screenshot of the GClip VST plugin

Cycle of articles

- Understanding and writing a VSTi synthesizer in C# WPF

- ADSR signal envelope

- Butterworth frequency filter

- Delay, Distortion and Parameter Modulation

Table of contents

- Clipping, distortion and overdrive

- Distortion effect code

- Delay and Reverb

- Delay effect code

- Parameter Modulation

- Writing the LFO class

- The IParameterModifier interface and using the current parameter value

- Conclusion

- Bibliography

Clipping, distortion and overdrive

Early guitar amplifiers and pickups were simple and of low quality, so they added distortion to the signal they processed. With analog amplifiers, how much the signal was distorted depended on the output volume. As the signal amplitude grows, the total harmonic distortion increases and new harmonics appear. If you turn your home speakers up to maximum, I'm sure you will hear the distortion too.

There is a story that in 1951 the guitarist of the Kings of Rhythm used an amplifier that had been damaged on the way, and the producer liked the sound. That is how one of the earliest recordings of a distorted guitar was made.

The name "distortion" describes exactly what the effect does. If the signal's amplitude is strictly limited, nonlinear distortion occurs and new harmonics appear. The stronger the limiting (the lower the Threshold), the more distorted the signal.

Almost every guitar in a genre with "rock" in its name is played through distortion or overdrive.

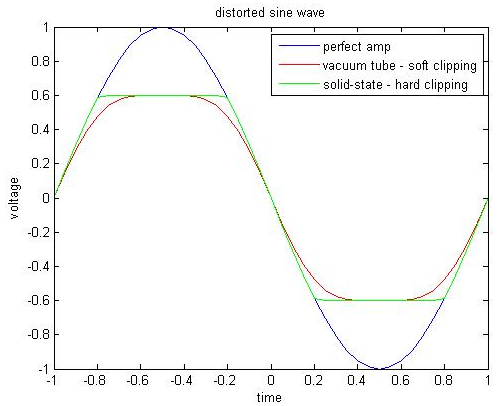

Overdrive limits the amplitude more smoothly than distortion. For this reason, overdrive is also called soft clipping, and distortion is called hard clipping. On guitar, overdrive is used in "softer" genres such as indie rock, pop rock and the like.

An approximate comparison of the effects of Distortion (Hard Clipping) and Overdrive (Soft Clipping)
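To make the difference concrete, here is a small sketch of both curves. Hard clipping follows the Threshold rule described in the next section. For soft clipping I use a tanh curve, which is one common choice of smooth saturation. That curve is my assumption here: it is not taken from a particular pedal or from the plugin's code. The class and method names are hypothetical.

```csharp
using System;

// Hypothetical helper for illustration, not part of the synthesizer.
public static class ClipCurves
{
    // Hard clipping: cut the sample to [-threshold, threshold],
    // then divide by threshold so the clipped peaks land at +/-1.
    public static double HardClip(double sample, double threshold)
    {
        double clipped = Math.Max(-threshold, Math.Min(sample, threshold));
        return clipped / threshold;
    }

    // Soft clipping (assumed tanh curve): bends smoothly toward +/-1
    // instead of cutting off abruptly. drive > 0 sets how hard the signal
    // is pushed into the curve; dividing by tanh(drive) maps 1 back to 1.
    public static double SoftClip(double sample, double drive)
    {
        return Math.Tanh(drive * sample) / Math.Tanh(drive);
    }
}
```

With threshold 0.5, an input of 0.8 becomes 1.0 (cut off), while 0.3 becomes 0.6. With the tanh curve, 0.5 at drive 2 comes out at about 0.79, rounded rather than flattened.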

Clipping is the name for the unwanted artifacts (clicks) that occur when the digital amplitude exceeds 0 dB. There are also effects that apply "pure" signal distortion, without emulating any analog pedals or preamps. For example, the GClip plugin (its screenshot is at the beginning of the article) simply cuts off the amplitude of the incoming signal mathematically.

Distortion effect code

From the above, hard distortion is determined by a single parameter: the maximum absolute amplitude, Threshold. In our case, the absolute values of the samples do not exceed 1, so Threshold lies in the interval (0, 1].

The more we limit the signal (the closer Threshold is to zero), the quieter it becomes. To keep the volume unchanged, we can restore it by dividing the sample value by Threshold.

This gives a simple hard distortion algorithm, applied to each sample separately:

- If the sample value is greater than Threshold, set it to Threshold.

- If the sample value is less than -Threshold, set it to -Threshold.

- Divide the sample value by Threshold.

Let's return to my synthesizer (the class architecture is reviewed in the first article). The Distortion class inherits from SyntageAudioProcessorComponentWithParameters<AudioProcessor> and implements the IProcessor interface.

We add a Power parameter that switches the effect on and off. The Treshold parameter must not be 0, otherwise we would divide by 0, so its minimum is set to 0.1. To limit the signal, take the minimum of the sample value and Treshold if the sample is positive. If the sample is negative, take the maximum of the sample value and -Treshold.

```csharp
using System;
using System.Collections.Generic;

public enum EPowerStatus
{
    Off,
    On
}

public class Distortion : SyntageAudioProcessorComponentWithParameters<AudioProcessor>, IProcessor
{
    public EnumParameter<EPowerStatus> Power { get; private set; }
    public RealParameter Treshold { get; private set; }

    public Distortion(AudioProcessor audioProcessor) : base(audioProcessor)
    {
    }

    public override IEnumerable<Parameter> CreateParameters(string parameterPrefix)
    {
        Power = new EnumParameter<EPowerStatus>(parameterPrefix + "Pwr", "Power", "", false);
        // The lower bound of 0.1 keeps DistortSample from dividing by zero.
        Treshold = new RealParameter(parameterPrefix + "Trshd", "Treshold", "Trshd", 0.1, 1, 0.01);

        return new List<Parameter> {Power, Treshold};
    }

    public void Process(IAudioStream stream)
    {
        if (Power.Value == EPowerStatus.Off)
            return;

        var count = Processor.CurrentStreamLenght;
        for (int i = 0; i < count; ++i)
        {
            var treshold = Treshold.Value;
            stream.Channels[0].Samples[i] = DistortSample(stream.Channels[0].Samples[i], treshold);
            stream.Channels[1].Samples[i] = DistortSample(stream.Channels[1].Samples[i], treshold);
        }
    }

    private static double DistortSample(double sample, double treshold)
    {
        // Clip to [-treshold, treshold], then divide by treshold to restore the level.
        return ((sample > 0) ? Math.Min(sample, treshold) : Math.Max(sample, -treshold)) / treshold;
    }
}
```

Delay and Reverb

Delay, or echo, is the effect of repeating a signal after a time delay. Usually, delay refers to a clear repetition (one or several repeats) of a signal. Walk into an archway or an underpass and you will hear a short loud sound reflected several times, losing volume each time. In a concert hall, the architecture and the sound-reflecting surfaces are far more complex than in an archway. There you no longer hear clear repetitions, only a fading sound.

Reverberation is the gradual decay of a sound's intensity over multiple reflections. Reverberation time is defined as the time it takes for the sound level to drop by 60 dB. Depending on the design of the room or hall, the reverberation time and the overall sound picture can vary greatly.

It is always better to hear sound than to read about it, and you can also watch it.

Reverb implemented by convolution (Convolution Reverb) also deserves a mention. You need a special file that "describes" the room, called its impulse response. With it, you can accurately reproduce how any sound would reverberate in that room.

To obtain an impulse response (often just called an "impulse"; many are available online), you place a microphone in the desired room and start recording. Then you produce an "impulse", or rather something as close to one as possible, such as an extremely sharp hit, and record its echo.

This gives us a way to recreate the acoustics of the room completely, at least in the sense that the result stays the same as long as the impulse response stays the same. Not every property of the acoustic process is captured by the impulse response, but the ones most important to human perception are.

Impulse responses of guitar cabinets are made the same way, for use when reamping guitars on a computer.
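At its core, convolution reverb computes the discrete convolution y[n] = Σ_k h[k]·x[n − k], where h is the impulse response and x is the dry signal. Below is a minimal direct-form sketch with hypothetical names. Real convolution reverbs typically use FFT-based (partitioned) convolution, because impulse responses are often several seconds long and the direct form is too slow for them. Treat this as an illustration of the idea only.

```csharp
using System;

// Hypothetical helper for illustration, not part of the synthesizer.
public static class Convolution
{
    // Direct-form convolution: every input sample adds a scaled copy of the
    // impulse response to the output, starting at that sample's position.
    // The output is impulse.Length - 1 samples longer than the input (the tail).
    public static double[] Convolve(double[] input, double[] impulse)
    {
        if (input.Length == 0 || impulse.Length == 0)
            return new double[0];

        var output = new double[input.Length + impulse.Length - 1];
        for (int n = 0; n < input.Length; ++n)
            for (int k = 0; k < impulse.Length; ++k)
                output[n + k] += input[n] * impulse[k];
        return output;
    }
}
```

Feeding in a single click (1, 0, 0, ...) returns the impulse response itself, followed by zeros. This is why recording a sharp "impulse" in a room captures how that room responds to any sound.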

Delay effect code

Echo is a repetition of the signal after some time delay. The new output value is the current input value plus the value of the signal t seconds earlier, where t is the delay time.

A simple formula for the sample value:

$$y[n] = x[n] + y[n - T]$$

where x is the input sequence of samples, y is the output sequence, and T is the delay in samples.

We need to keep the last T computed samples. At each step we read the delayed sample value and store the newly computed one. A circular (ring) buffer is well suited for this.
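Here is the bare recurrence y[n] = x[n] + y[n − T] on a plain array, before Dry/Wet and Feedback are added. It is a hypothetical helper, not the plugin's Delay class. It reads y[n − T] straight from the output array instead of from a ring buffer, which is fine for an offline check. Feeding it a single click shows the problem with the bare formula: the echo repeats every T samples at full level and never dies out.

```csharp
// Hypothetical helper for illustration, not part of the synthesizer.
public static class SimpleEcho
{
    // y[n] = x[n] + y[n - T]; samples before the start count as zero.
    // delaySamples (T) is expected to be >= 1.
    public static double[] Process(double[] x, int delaySamples)
    {
        var y = new double[x.Length];
        for (int n = 0; n < x.Length; ++n)
        {
            double delayed = (n >= delaySamples) ? y[n - delaySamples] : 0.0;
            y[n] = x[n] + delayed;
        }
        return y;
    }
}
```

With a click at sample 0 and T = 3, the output is 1, 0, 0, 1, 0, 0, 1, and so on.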

Ibanez AD9 Analog Delay guitar pedal

To adjust the volume of the delay (I would rather say its "amount"), you can add coefficients to the formula. Plugins usually use the terms Dry/Wet: the proportions of the unprocessed ("dry") and processed ("wet") signals in the mix. The two coefficients sum to 1, since they are shares. On the pedal in the photo, the Wet parameter is called Delay Level.

This formula has no echo attenuation, so the echo would repeat forever at the same level. The parameter that adds attenuation is usually called Feedback (on the pedal in the photo it is called Repeat). It scales down each repeat, so the echo fades over time.
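Putting Dry/Wet and Feedback together gives the equations that the ProcessSample method in the code below implements. They are written out here for reference. The buffer holds an intermediate signal s; d is DryLevel, g is Feedback and T is the delay in samples:

$$s[n] = x[n] + g \cdot s[n - T]$$

$$y[n] = d \cdot x[n] + (1 - d) \cdot s[n - T]$$

With d = 0 and g = 1 this reduces to y[n] = s[n − T], an echo train that never decays. With g < 1, each repeat is g times quieter than the previous one.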

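So our simple delay has 4 parameters:

- Power: whether the effect is on

- DryLevel: the share of the dry signal in the mix (the wet share is 1 - DryLevel)

- Time: delay time in seconds

- Feedback: how much of the echo is fed back, i.e., how quickly the repeats fade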
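Each repeat is Feedback times quieter than the previous one, so after k repeats the level has dropped by 20·log10(g^k) dB. If we borrow the 60 dB criterion from reverberation time, we can choose the Feedback that makes the echo die out over a desired time. This helper is my own illustration under that assumption, with hypothetical names. The synthesizer itself just exposes Feedback as a knob.

```csharp
using System;

// Hypothetical helper for illustration, not part of the synthesizer.
public static class EchoDecay
{
    // Feedback g such that the echo falls by 60 dB after decaySeconds,
    // with one repeat every delaySeconds:
    // 20 * log10(g) * (decaySeconds / delaySeconds) = -60.
    public static double FeedbackFor60DbDecay(double delaySeconds, double decaySeconds)
    {
        if (delaySeconds <= 0 || decaySeconds <= 0)
            throw new ArgumentException("Both times must be positive.");
        return Math.Pow(10.0, -3.0 * delaySeconds / decaySeconds);
    }
}
```

For example, a 0.5 s delay that should fade out over 3 s needs Feedback of about 0.316.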

To find T (the delay in samples, which is also the size of the sample buffer), multiply the sampling rate by the Time parameter. To avoid reallocating the buffer every time Time changes, we allocate an array of the maximum length, Time.Max * SampleRate, up front.
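For a sense of scale, assume a 44,100 Hz sample rate (the actual rate comes from the host) and the Time range of 0 to 5 s used in the code below:

$$T_{\max} = 5\ \text{s} \times 44100\ \text{Hz} = 220500\ \text{samples}$$

$$220500 \times 8\ \text{bytes (double)} = 1\,764\,000\ \text{bytes} \approx 1.76\ \text{MB per channel}$$

So the two channel buffers together take about 3.5 MB. That is a small price for never reallocating while Time is being changed.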

Let's write an auxiliary class for a circular buffer:

```csharp
using System;

class Buffer
{
    private int _index;
    private readonly double[] _data;

    public Buffer(int length)
    {
        _data = new double[length];
        _index = 0;
    }

    // Value at the current read/write position.
    public double Current
    {
        get { return _data[_index]; }
        set { _data[_index] = value; }
    }

    // Moves to the next position, wrapping around at currentLength (<= _data.Length).
    public void Increment(int currentLength)
    {
        _index = (_index + 1) % currentLength;
    }

    public void Clear()
    {
        Array.Clear(_data, 0, _data.Length);
    }
}
```

The function that calculates one sample:

```csharp
private double ProcessSample(double sample, Buffer buffer)
{
    var dry = DryLevel.Value;
    var wet = 1 - dry;
    // buffer.Current holds the value written T samples ago.
    var output = dry * sample + wet * buffer.Current;
    // Store the input plus the attenuated echo for the next pass.
    buffer.Current = sample + Feedback.Value * buffer.Current;
    // Delay in samples; at least 1, because Time can be 0 and Increment takes a modulus.
    int length = Math.Max(1, (int)(Time.Value * Processor.SampleRate));
    buffer.Increment(length);
    return output;
}
```

The complete Delay class:

```csharp
using System;
using System.Collections.Generic;

public class Delay : SyntageAudioProcessorComponentWithParameters<AudioProcessor>, IProcessor
{
    private class Buffer
    {
        private int _index;
        private readonly double[] _data;

        public Buffer(int length)
        {
            _data = new double[length];
            _index = 0;
        }

        public double Current
        {
            get { return _data[_index]; }
            set { _data[_index] = value; }
        }

        public void Increment(int currentLength)
        {
            _index = (_index + 1) % currentLength;
        }

        public void Clear()
        {
            Array.Clear(_data, 0, _data.Length);
        }
    }

    private Buffer _lbuffer;
    private Buffer _rbuffer;

    public EnumParameter<EPowerStatus> Power { get; private set; }
    public RealParameter DryLevel { get; private set; }
    public RealParameter Time { get; private set; }
    public RealParameter Feedback { get; private set; }

    public Delay(AudioProcessor audioProcessor) : base(audioProcessor)
    {
        audioProcessor.OnSampleRateChanged += OnSampleRateChanged;
        audioProcessor.PluginController.ParametersManager.OnProgramChange += ParametersManagerOnProgramChange;
    }

    public override IEnumerable<Parameter> CreateParameters(string parameterPrefix)
    {
        Power = new EnumParameter<EPowerStatus>(parameterPrefix + "Pwr", "Power", "", false);
        DryLevel = new RealParameter(parameterPrefix + "Dry", "Dry Level", "Dry", 0, 1, 0.01);
        Time = new RealParameter(parameterPrefix + "Sec", "Delay Time", "Time", 0, 5, 0.01);
        Feedback = new RealParameter(parameterPrefix + "Fbck", "Feedback", "Feedback", 0, 1, 0.01);

        return new List<Parameter> {Power, DryLevel, Time, Feedback};
    }

    public void ClearBuffer()
    {
        _rbuffer?.Clear();
        _lbuffer?.Clear();
    }

    public void Process(IAudioStream stream)
    {
        // The buffers are created in OnSampleRateChanged; skip processing until then.
        if (Power.Value == EPowerStatus.Off || _lbuffer == null || _rbuffer == null)
            return;

        var leftChannel = stream.Channels[0];
        var rightChannel = stream.Channels[1];
        var count = Processor.CurrentStreamLenght;
        for (int i = 0; i < count; ++i)
        {
            leftChannel.Samples[i] = ProcessSample(leftChannel.Samples[i], i, _lbuffer);
            rightChannel.Samples[i] = ProcessSample(rightChannel.Samples[i], i, _rbuffer);
        }
    }

    private void OnSampleRateChanged(object sender, SyntageAudioProcessor.SampleRateEventArgs e)
    {
        // Allocate once, for the longest possible delay time.
        var size = (int)(e.SampleRate * Time.Max);
        _lbuffer = new Buffer(size);
        _rbuffer = new Buffer(size);
    }

    private void ParametersManagerOnProgramChange(object sender, ParametersManager.ProgramChangeEventArgs e)
    {
        ClearBuffer();
    }

    private double ProcessSample(double sample, int sampleNumber, Buffer buffer)
    {
        var dry = DryLevel.Value;
        var wet = 1 - dry;
        // Mix the dry input with the sample delayed by T samples.
        var output = dry * sample + wet * buffer.Current;
        // Write the input plus the attenuated echo back into the buffer.
        buffer.Current = sample + Feedback.ProcessedValue(sampleNumber) * buffer.Current;
        // T = Time * SampleRate; at least 1 so Increment never takes a modulus by zero.
        int length = Math.Max(1, (int)(Time.ProcessedValue(sampleNumber) * Processor.SampleRate));
        buffer.Increment(length);
        return output;
    }
}
```

Parameter Modulation

At this point, we have reviewed and implemented the following sound generation chain (see the previous articles):

- Generating a simple wave in an oscillator

- Processing the signal with an ADSR envelope

- Processing the signal with a frequency filter

- Further processing with effects: Distortion, Delay

After the effects, the signal usually goes through master processing (often just adjusting the overall volume) and is sent to the plugin output.

With this chain you can already get a wide variety of sounds.

Many sounds are made by changing some parameter over time. For example, in a "laser gun" shot you can clearly hear the fundamental frequency sweep from high to low.

As a reminder, the host knows about all our parameters (the Parameter class); they exist not only within our own architecture. So in the host you can automate the parameters, changing them over time.

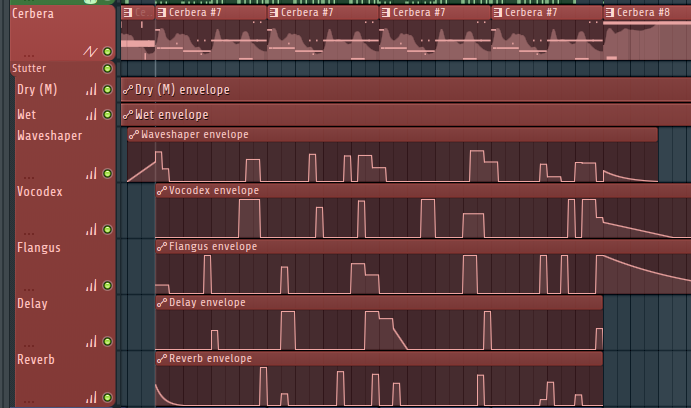

Parameter automation in FL Studio 12. The top track, "Cerbera", holds the notes for the Sytrus synthesizer. Below it are the automation curves for the parameters of the VST effects added to this synthesizer track (Vocodex, Flangus, Delay, Reverb).

Such automation is very convenient and widely used in music production. But it only works while the track is playing, and it is laborious to set up. What if we want a parameter to change with every key press, or to change continuously according to some law? Then it makes more sense to build the automation into the plugin itself, which also gives more room for sound design.

Synthesizers usually have a dedicated section (block, module) responsible for modulating parameters, called a modulation unit or modulation matrix. Parameter modulation is similar to the amplitude modulation by the ADSR envelope from the second article. The difference is that instead of an envelope you can use any law of change at all, and modulate any parameter in the plugin that supports modulation.

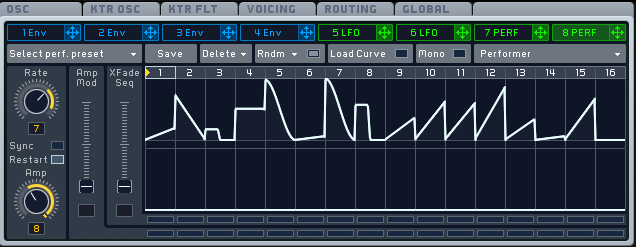

The "law" is usually an envelope or an LFO (Low Frequency Oscillator). An LFO is essentially the same oscillator, but its samples are used as modulation factors rather than as a sound wave. Many synthesizers also let you draw a parameter change curve by hand or assemble it from prepared patterns.

Modulation unit in the Sylenth1 synthesizer. There are two ADSR envelopes, two LFOs, and modulation from other sources (such as the Velocity of a key press). For each source, you can specify two modulated parameters and a "modulation amount" as an intermediate factor (the knob to the left of the parameter name).

Modulation matrix in the Serum synthesizer. Each row describes a "source / modulated parameter" pair with additional settings (modulation type, "amount" multiplier, curve and so on).

Envelopes and LFOs in the Massive synthesizer. You can draw a change curve by hand from individual patterns or pieces.

Writing the LFO class

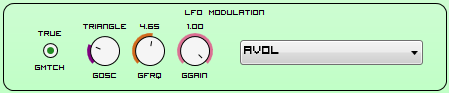

The modulation block in my synthesizer

Let's write the LFO class, whose job is to modulate parameters. Its oscillator generates a wave with amplitude in the interval [-1, 1], which we use as a factor for the parameter. In principle, the LFO oscillator is no different from the ordinary oscillator we wrote to generate a simple wave. It is called "low frequency" because it can generate very low frequencies (below 1 Hz). People do not hear tones below about 20 Hz, so the musical keyboard (and therefore the main oscillator) has no such low frequencies.

The oscillator has the following parameters: frequency and wave type (Sine, Triangle, Square, Noise).

To generate these signals conveniently, we previously wrote the WaveGenerator.GenerateNextSample function.
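WaveGenerator.GenerateNextSample itself is not shown in this part. To give a rough idea of what such a function computes, here is a hypothetical stand-in. It returns the value of a unit-amplitude wave at time t (in seconds) for a given frequency. The enum, the names and the waveform formulas are my assumptions, not the author's code. Noise is left out because it is not a function of the phase.

```csharp
using System;

// Hypothetical stand-in for WaveGenerator.GenerateNextSample; not the author's code.
public enum LfoShape { Sine, Triangle, Square }

public static class LfoWave
{
    // Value in [-1, 1] of a wave with the given frequency (Hz) at time t (s).
    public static double Sample(LfoShape shape, double frequency, double t)
    {
        // Phase in [0, 1): the fraction of the current period that has elapsed.
        double cycles = frequency * t;
        double phase = cycles - Math.Floor(cycles);
        switch (shape)
        {
            case LfoShape.Sine:
                return Math.Sin(2.0 * Math.PI * phase);
            case LfoShape.Triangle:
                // Rises from -1 to 1 over the first half period, falls back over the second.
                return (phase < 0.5) ? 4.0 * phase - 1.0 : 3.0 - 4.0 * phase;
            case LfoShape.Square:
                return (phase < 0.5) ? 1.0 : -1.0;
            default:
                throw new ArgumentOutOfRangeException(nameof(shape));
        }
    }
}
```

The LFO frequency range in the code below is 0.01 to 1000 Hz. At the slowest setting, one cycle takes 100 seconds.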

Now consider how we modify the parameter value. Every parameter (the Parameter class) has a RealValue property that maps its value onto the interval [0, 1], which is exactly what we need. The oscillator produces values in [-1, 1]. In effect, it turns the parameter knob as far to the right as allowed, then as far to the left.

There is a catch. Suppose the parameter value is 0.25. To move it equally up and down, we can only vary it between 0 and 0.5. An oscillator value of -1 maps to 0, a value of 1 maps to 0.5, and a value of 0 leaves the parameter at 0.25. So we take the shorter of the two segments into which the value r divides [0, 1]: f = min(r, 1 - r).

Now the parameter will change in the range [r - f, r + f].

We add one more parameter, Gain, with values in [0, 1], to control the "width" of this range of values.

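This gives the following formula for the modified parameter value, where r is RealValue, G is Gain and a(t) ∈ [-1, 1] is the LFO output at time t:

$$r' = r + G \cdot a(t) \cdot f, \qquad f = \min(r,\, 1 - r)$$

The code also clamps r' to [0, 1] as a safeguard.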
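The formula r' = r + Gain · a · min(r, 1 − r) is easy to check on the example of r = 0.25. The sketch below is a standalone version of the computation that ModifyRealValue performs in the code later on. The LFO amplitude a is passed in explicitly instead of being read from the oscillator, and the class name is hypothetical.

```csharp
using System;

// Hypothetical helper for illustration, not part of the synthesizer.
public static class ModulationMath
{
    // r' = r + gain * amplitude * min(r, 1 - r), clamped to [0, 1].
    // r: parameter RealValue in [0, 1]; gain in [0, 1]; amplitude: LFO output in [-1, 1].
    public static double Modulate(double r, double gain, double amplitude)
    {
        double range = Math.Min(r, 1.0 - r);
        double value = r + gain * amplitude * range;
        return Math.Max(0.0, Math.Min(1.0, value));
    }
}
```

With Gain = 1, the parameter sweeps the full symmetric range [r − f, r + f], so 0.25 moves between 0 and 0.5. With Gain = 0.5 it sweeps half of that range.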

Now we need to decide how the oscillator will run. The LFO class neither generates nor modifies an array of samples; to run the oscillator, it only needs to keep track of elapsed time. So the class implements the IProcessor interface, and in Process(IAudioStream stream) we count the samples that have passed. Dividing that count by SampleRate gives the elapsed time.

Synthesizers often have an option to synchronize the LFO with key presses. For us, this means that on a key press (the MidiListenerOnNoteOn handler) we reset the oscillator phase, i.e., reset the time to 0. The MatchKey parameter controls this.
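The timekeeping described above can be isolated into a small hypothetical class (not part of the synthesizer). One call per processed block advances the clock, and the time of a sample adds its offset inside the block. A key press with MatchKey enabled resets the clock. The sample rate is stored as a double, so the divisions are floating-point. If the block length and the sample rate were both integers, the division would truncate and the clock would never advance.

```csharp
// Hypothetical helper for illustration, not part of the synthesizer.
public class LfoClock
{
    private readonly double _sampleRate;
    private double _time; // seconds elapsed at the start of the current block

    public LfoClock(double sampleRate)
    {
        _sampleRate = sampleRate;
    }

    // Time (s) of a sample at position sampleNumber inside the current block.
    public double TimeAt(int sampleNumber)
    {
        return _time + sampleNumber / _sampleRate;
    }

    // Called once per block with the block length in samples.
    public void Advance(int blockLength)
    {
        _time += blockLength / _sampleRate;
    }

    // Restarts the phase, e.g. on note-on when MatchKey is enabled.
    public void Reset()
    {
        _time = 0.0;
    }
}
```

At 44,100 Hz, two blocks of 441 samples advance the clock by 0.02 s.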

The function that computes the modified value, ModifyRealValue, takes the current parameter value currentValue and the current sample number sampleNumber. How to use the modified value correctly is described further on. For now, note that ModifyRealValue is called for every sample of the incoming block (the one passed to Process).

We get the following methods:

```csharp
public void Process(IAudioStream stream)
{
    // Advance the LFO clock by the length of the block, in seconds.
    // The cast guards against integer division if both operands are integers.
    _time += (double)Processor.CurrentStreamLenght / Processor.SampleRate;
}

public double ModifyRealValue(double currentValue, int sampleNumber)
{
    var gain = Gain.Value;
    if (DSPFunctions.IsZero(gain))
        return currentValue;

    // r' = r + Gain * a(t) * min(r, 1 - r), clamped to [0, 1]
    var amplitude = GetCurrentAmplitude(sampleNumber);
    gain *= amplitude * Math.Min(currentValue, 1 - currentValue);
    return DSPFunctions.Clamp01(currentValue + gain);
}

private double GetCurrentAmplitude(int sampleNumber)
{
    // Time of this sample = block start time + offset inside the block.
    var timePass = (double)sampleNumber / Processor.SampleRate;
    var currentTime = _time + timePass;
    var sample = WaveGenerator.GenerateNextSample(OscillatorType.Value, Frequency.Value, currentTime);
    return sample;
}

private void MidiListenerOnNoteOn(object sender, MidiListener.NoteEventArgs e)
{
    // MatchKey: restart the LFO phase on each key press.
    if (MatchKey.Value)
        _time = 0;
}
```

The most important parameter of the LFO class is the reference to (the name of) the modulated parameter. For this we write the ParameterName class, which displays the list of parameters available for modulation. It inherits from IntegerParameter, and its value is the index of the target in the ParametersManager's sequence of parameters (-1 means no target). The catch is that you must specify the maximum value of this parameter, i.e., the total number of parameters. That number keeps changing during development; here it is hard-coded as 34.

```csharp
class ParameterName : IntegerParameter
{
    private readonly ParametersManager _parametersManager;

    // Value range -1..34: -1 means "no target", 34 is the hard-coded upper bound.
    public ParameterName(string parameterPrefix, ParametersManager parametersManager) :
        base(parameterPrefix + "Num", "LFO Parameter Number", "Num", -1, 34, 1, false)
    {
        _parametersManager = parametersManager;
    }

    public override int FromStringToValue(string s)
    {
        var parameter = _parametersManager.FindParameter(s);
        return (parameter == null) ? -1 : _parametersManager.GetParameterIndex(parameter);
    }

    public override string FromValueToString(int value)
    {
        return (value >= 0) ? _parametersManager.GetParameter(value).Name : "--";
    }
}
```

The IParameterModifier interface and using the current parameter value

Now the parameter class must know whether it can be modulated. My synthesizer covers a simple case: there is one LFO object, and at most one parameter can be modulated.

```csharp
public interface IParameterModifier
{
    // Returns the modulated value (in [0, 1]) of a parameter whose RealValue is currentValue,
    // for the sample with index sampleNumber in the current block.
    double ModifyRealValue(double currentValue, int sampleNumber);
}
```

Since a parameter can be associated with a single IParameterModifier, we store a reference to it in the ParameterModifier property. To get the current value, use the ProcessedValue method instead of the Value property, passing the current sample number.

```csharp
using System;

public abstract class Parameter
{
    // ...

    private IParameterModifier _parameterModifier;

    public bool CanBeAutomated { get; }

    public IParameterModifier ParameterModifier
    {
        get { return _parameterModifier; }
        set
        {
            if (_parameterModifier == value)
                return;
            // Refuse to attach a modifier to a parameter that cannot be automated;
            // detaching (assigning null) is always allowed.
            if (value != null && !CanBeAutomated)
                throw new ArgumentException("Parameter cannot be automated.");
            _parameterModifier = value;
        }
    }

    // RealValue for the given sample, with the modifier (if any) applied.
    public double ProcessedRealValue(int sampleNumber)
    {
        if (_parameterModifier == null)
            return RealValue;

        var modifiedRealValue = _parameterModifier.ModifyRealValue(RealValue, sampleNumber);
        return modifiedRealValue;
    }

    // ...
}

public abstract class Parameter<T> : Parameter where T : struct
{
    // ...

    public T ProcessedValue(int sampleNumber)
    {
        return FromReal(ProcessedRealValue(sampleNumber));
    }

    // ...
}
```

Using ProcessedValue instead of Value makes programming a bit more cumbersome, because sampleNumber has to be passed around. When I wrote the LFO class, I had to replace Value with ProcessedValue in all the classes. Since samples were mostly processed in a loop anyway, passing sampleNumber was not a big problem.
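To see the mechanism in isolation, here is a stripped-down, hypothetical version of it. It has a parameter with a RealValue, an optional modifier, and the same ProcessedRealValue fallback logic, plus a modifier that ramps the value up by 0.001 per sample. None of these types are the synthesizer's real classes. IToyModifier only mirrors IParameterModifier above.

```csharp
using System;

// Hypothetical types for illustration; they mirror, but are not, the synthesizer's classes.
public interface IToyModifier
{
    double ModifyRealValue(double currentValue, int sampleNumber);
}

public class ToyParameter
{
    private IToyModifier _modifier;

    public ToyParameter(double realValue, bool canBeAutomated)
    {
        RealValue = realValue;
        CanBeAutomated = canBeAutomated;
    }

    public double RealValue { get; set; }
    public bool CanBeAutomated { get; }

    public IToyModifier Modifier
    {
        get { return _modifier; }
        set
        {
            // Only attaching is restricted; detaching (null) is always allowed.
            if (value != null && !CanBeAutomated)
                throw new InvalidOperationException("Parameter cannot be automated.");
            _modifier = value;
        }
    }

    // Without a modifier this is just RealValue; otherwise the modifier decides per sample.
    public double ProcessedRealValue(int sampleNumber)
    {
        return (_modifier == null) ? RealValue : _modifier.ModifyRealValue(RealValue, sampleNumber);
    }
}

// Hypothetical modifier: raises the value by 0.001 per sample, capped at 1.
public class RampModifier : IToyModifier
{
    public double ModifyRealValue(double currentValue, int sampleNumber)
    {
        return Math.Min(1.0, currentValue + 0.001 * sampleNumber);
    }
}
```

The stored RealValue never changes. Only the value read for each sample does, which is why every consumer has to pass its sample number.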

In the LFO class, we add a change handler for the ParameterName parameter. It detaches the LFO from the previous target and sets the ParameterModifier of the new target to this.

```csharp
using System;
using System.Collections.Generic;

public class LFO : SyntageAudioProcessorComponentWithParameters<AudioProcessor>, IProcessor, IParameterModifier
{
    private double _time;
    private Parameter _target;

    private class ParameterName : IntegerParameter
    {
        private readonly ParametersManager _parametersManager;

        public ParameterName(string parameterPrefix, ParametersManager parametersManager) :
            base(parameterPrefix + "Num", "LFO Parameter Number", "Num", -1, 34, 1, false)
        {
            _parametersManager = parametersManager;
        }

        public override int FromStringToValue(string s)
        {
            var parameter = _parametersManager.FindParameter(s);
            return (parameter == null) ? -1 : _parametersManager.GetParameterIndex(parameter);
        }

        public override string FromValueToString(int value)
        {
            return (value >= 0) ? _parametersManager.GetParameter(value).Name : "--";
        }
    }

    public EnumParameter<WaveGenerator.EOscillatorType> OscillatorType { get; private set; }
    public FrequencyParameter Frequency { get; private set; }
    public BooleanParameter MatchKey { get; private set; }
    public RealParameter Gain { get; private set; }
    public IntegerParameter TargetParameter { get; private set; }

    public LFO(AudioProcessor audioProcessor) : base(audioProcessor)
    {
        audioProcessor.PluginController.MidiListener.OnNoteOn += MidiListenerOnNoteOn;
    }

    public override IEnumerable<Parameter> CreateParameters(string parameterPrefix)
    {
        OscillatorType = new EnumParameter<WaveGenerator.EOscillatorType>(parameterPrefix + "Osc", "LFO Type", "Osc", false);
        Frequency = new FrequencyParameter(parameterPrefix + "Frq", "LFO Frequency", "Frq", 0.01, 1000, false);
        MatchKey = new BooleanParameter(parameterPrefix + "Mtch", "LFO Phase Key Link", "Match", false);
        Gain = new RealParameter(parameterPrefix + "Gain", "LFO Gain", "Gain", 0, 1, 0.01, false);
        TargetParameter = new ParameterName(parameterPrefix, Processor.PluginController.ParametersManager);
        TargetParameter.OnValueChange += TargetParameterNumberOnValueChange;

        return new List<Parameter> {OscillatorType, Frequency, MatchKey, Gain, TargetParameter};
    }

    public void Process(IAudioStream stream)
    {
        // The LFO does not touch the samples; it only advances its clock by one block.
        _time += (double)Processor.CurrentStreamLenght / Processor.SampleRate;
    }

    public double ModifyRealValue(double currentValue, int sampleNumber)
    {
        var gain = Gain.Value;
        if (DSPFunctions.IsZero(gain))
            return currentValue;

        // r' = r + Gain * a(t) * min(r, 1 - r), clamped to [0, 1]
        var amplitude = GetCurrentAmplitude(sampleNumber);
        gain *= amplitude * Math.Min(currentValue, 1 - currentValue);
        return DSPFunctions.Clamp01(currentValue + gain);
    }

    private double GetCurrentAmplitude(int sampleNumber)
    {
        var timePass = (double)sampleNumber / Processor.SampleRate;
        var currentTime = _time + timePass;
        var sample = WaveGenerator.GenerateNextSample(OscillatorType.Value, Frequency.Value, currentTime);
        return sample;
    }

    private void MidiListenerOnNoteOn(object sender, MidiListener.NoteEventArgs e)
    {
        // MatchKey: restart the LFO phase on each key press.
        if (MatchKey.Value)
            _time = 0;
    }

    private void TargetParameterNumberOnValueChange(Parameter.EChangeType obj)
    {
        var number = TargetParameter.Value;
        var parameter = (number >= 0) ? Processor.PluginController.ParametersManager.GetParameter(number) : null;

        // Detach from the old target, then attach to the new one.
        if (_target != null)
            _target.ParameterModifier = null;
        _target = parameter;
        if (_target != null)
            _target.ParameterModifier = this;
    }
}
```

Conclusion

I consider this series complete. I have covered what are, in my opinion, the most important components of a synthesizer: wave generation, envelope processing, filtering, effects and parameter modulation. In places the code is far from ideal and unoptimized, because I wanted to keep it as clear as possible. Curious readers are welcome to take my code and experiment with it as much as they like, and I will be glad if they do! There is a good site, musicdsp.org, with a large archive of source code for various synthesis and audio processing building blocks, mostly in C++. Go for it!

Thanks to everyone who took an interest! I'm sure my articles will be found through Google and will help beginners enter the world of music programming and signal processing. Thank you for your comments, especially Refridgerator.

All the best, and good luck with programming!

Bibliography

Do not forget to look at the lists of articles and books in previous articles.


Source: https://habr.com/ru/post/313338/
